Abstract

The intensive pursuit of quantum advantage in terms of computational complexity has led to a modernized crucial question: when and how will quantum computers outperform classical computers? The next milestone is undoubtedly the realization of quantum acceleration in practical problems. Here we provide clear evidence and arguments that the primary target is likely to be condensed matter physics. Our primary contributions are summarized as follows: 1) Proposal of systematic error/runtime analysis on state-of-the-art classical algorithm based on tensor networks; 2) Dedicated and high-resolution analysis of quantum resources performed at the level of executable logical instructions; 3) Clarification of the quantum-classical crosspoint for ground-state simulation to be within a runtime of hours using only a few hundred thousand physical qubits for 2d Heisenberg and 2d Fermi-Hubbard models, assuming that logical qubits are encoded via the surface code with the physical error rate of \(p = 10^{-3}\). We argue that, to our knowledge, condensed matter problems offer the earliest platform for demonstration of practical quantum advantage, one that is an order of magnitude more feasible than previously known candidates in terms of both qubit counts and total runtime.


Introduction

When and how will quantum computers outperform classical computers? This pressing question drove the community to perform random sampling in quantum devices that are fully susceptible to noise1,2,3. We anticipate that the precedent milestone after this quantum transcendence is to realize quantum acceleration for practical problems. In this context, a remaining outstanding question is to identify which problem we shall aim next. This encompasses research across a range of fields, including natural science, computer science, and, notably, quantum technology.

Research on quantum acceleration is predominantly focused on two areas: cryptanalysis and quantum chemistry. In the realm of cryptanalysis, there has been substantial progress since Shor introduced a polynomial time quantum algorithm for integer factorization and finding discrete logarithms4,5,6,7. Gidney et al. have estimated that a fully fault-tolerant quantum computer with 20 million (\(2 \times 10^{7}\)) qubits could decipher a 2048-bit RSA cipher in eight hours, and a 3096-bit cipher in approximately a day7. This represents an almost hundred-fold enhancement in the spacetime volume of the algorithm compared to similar efforts, which generally require several days5,6. Given that the security of nearly all asymmetric cryptosystems is predicated on the classical intractability of integer factoring or discrete logarithm findings8,9, the successful implementation of Shor’s algorithm is imperative to safeguard the integrity of modern and forthcoming communication networks.

The potential impact of accelerating quantum chemistry calculations, including first-principles calculations, is immensely significant as well. Given its broad applications in materials science and life sciences, it is noted that computational chemistry, though not exclusively quantum chemistry, accounts for 40% of HPC resources in the world10. Among numerous benchmarks, a notable target with significant impact is quantum advantage in simulation of energies of a molecule called FeMoco, found in the reaction center of a nitrogen-fixing enzyme11. According to the resource estimation that employs the state-of-the-art quantum algorithm, calculation of the ground-state energy of FeMoco requires about four days on a fault-tolerant quantum computer equipped with four million (\(4 \times 10^{6}\)) physical qubits12. Additionally, Goings et al. conducted a comparison between quantum computers and the contemporary leading heuristic classical algorithm for cytochrome P450 enzymes, suggesting that the quantum advantage is realized only in computations extending beyond four days13.

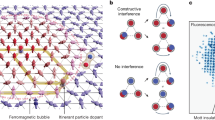

A practical quantum advantage in both domains has been proposed to be achievable within a timescale of days with millions of physical qubits. Such a spacetime volume of algorithm may not represent the most promising initial application of fault-tolerant quantum computers. This paper endeavors to highlight condensed matter physics as a promising candidate (See Fig. 1). We emphasize that while models in condensed matter physics encapsulate various fundamental quantum many-body phenomena, their structure is simpler than that of quantum chemistry Hamiltonians. Lattice quantum spin models and lattice fermionic models serve as nurturing grounds for strong quantum correlations, facilitating phenomena such as quantum magnetism, quantum condensation, topological order, quantum criticality, and beyond. Given the diversity and richness of these models, coupled with the difficulties of simulating large-scale systems using classical algorithms, even with the most advanced techniques, it would be highly beneficial to reveal the location of the crosspoint between quantum and classical computing based on runtime analysis.

Our work contributes to the community’s knowledge in three primary ways: 1) Introducing a systematic analysis method to estimate the runtime for simulating quantum states within a target energy accuracy using extrapolation techniques, 2) Conducting an end-to-end runtime analysis of quantum resources at the level of executable logical instructions, 3) Clearly identifying the quantum-classical crosspoint for ground-state simulation to be within the range of hours using physical qubits on the order of \(10^{5}\), both of which are less demanding by at least an order of magnitude compared to other candidates. To the best of our knowledge, this suggests the most imminent practical and feasible platform for the crossover.

We remark that there are some works that assess the quantum resource to perform quantum simulation on quantum spin systems14,15, while the estimation is done solely regarding the dynamics; they do not involve time to extract information on any physical observables. Also, there are existing works on phase estimation for Fermi-Hubbard models16,17 that do not provide estimation on the classical runtime. In this regard, there has been no clear investigation on the quantum-classical crossover prior to the current study that assesses end-to-end runtime.

Results

Our argument on the quantum-classical crossover is based on the runtime analysis needed to compute the ground state energy within a desired total energy accuracy, denoted as ϵ. The primary objective in this section is to provide a framework that elucidates the quantum-classical crosspoint for systems whose spectral gap is constant or polynomially shrinking. In this work, we choose two models that are widely known for their richness despite their simplicity: the 2d J1-J2 Heisenberg model and the 2d Fermi-Hubbard model on a square lattice (see the Method section for their definitions). Meanwhile, it is totally unclear whether a feasible crosspoint exists at all when the gap closes exponentially.

It is important to keep in mind that condensed matter physics often entails extracting physical properties beyond merely energy, such as magnetization, correlation function, or dynamical responses. Therefore, in order to assure that expectation value estimations can be done consistently (i.e. satisfy N-representability), we demand that we have the option to measure the physical observable after computation of the ground state energy is done. In other words, in the classical algorithm, for instance, we perform the variational optimization up to the desired target accuracy ϵ; we exclude the case where one calculates less precise quantum states with energy errors ϵi ≥ ϵ and subsequently performs extrapolation. A similar requirement is imposed on the quantum algorithm as well.

Runtime of classical algorithm

Among the numerous powerful classical methods available, we have opted to utilize the DMRG algorithm, which has been established as one of the most powerful and reliable numerical tools to study strongly-correlated quantum lattice models especially in one dimension (1d)18,19. In brief, the DMRG algorithm performs variational optimization on a tensor-network-based ansatz named Matrix Product State (MPS)20,21. Although MPS is designed to efficiently capture 1d area-law entangled quantum states22, the efficacy of the DMRG algorithm allows one to explore quantum many-body physics beyond 1d, including quasi-1d and 2d systems, and even all-to-all connected models, as considered in quantum chemistry23,24.

A remarkable characteristic of the DMRG algorithm is its ability to perform systematic error analysis. This is intrinsically connected to the construction of the ansatz, or the MPS, which compresses the quantum state by performing site-by-site truncation of the full Hilbert space. The compression process explicitly yields a metric called “truncation error,” from which we can extrapolate the truncation-free energy, E0, to estimate the ground truth. By tracking the deviation from the zero-truncation result E − E0, we find that the computation time and error typically obey a scaling law (See Fig. 2 for an example of such a scaling behavior in 2d J1-J2 Heisenberg model). The resource estimate is completed by combining the actual simulation results and the estimation from the scaling law. [See Supplementary Note 2 for detailed analysis.]
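The extrapolation and scaling-law steps described above can be sketched as follows. This is only an illustration: the truncation errors, energies, and runtimes are synthetic placeholders rather than results of this work, and both the linear dependence of the energy on the truncation error and the power-law form of the runtime are assumptions made for the example. The function names are hypothetical.

```python
import numpy as np

# Synthetic (hypothetical) DMRG outputs at increasing bond dimension:
# truncation error, variational energy, and wall-clock time per run.
trunc_err = np.array([4e-5, 2e-5, 1e-5, 5e-6])
energy = -49.0 + 300.0 * trunc_err                 # assumed linear in truncation error
runtime = np.array([1.0e2, 4.0e2, 1.6e3, 6.4e3])   # seconds, made-up values

def extrapolate_energy(trunc_err, energy):
    """Linear fit E(delta) = E0 + a*delta; return the zero-truncation energy E0."""
    slope, intercept = np.polyfit(trunc_err, energy, 1)
    return intercept

def fit_power_law(error, runtime):
    """Fit runtime = c * error**(-gamma) on a log-log scale; return (c, gamma)."""
    slope, log_c = np.polyfit(np.log(error), np.log(runtime), 1)
    return np.exp(log_c), -slope

e0 = extrapolate_energy(trunc_err, energy)
err = energy - e0                                  # deviation E - E0 of each run
c, gamma = fit_power_law(err, runtime)

def predicted_runtime(target_eps):
    """Runtime predicted by the fitted scaling law for a target accuracy."""
    return c * target_eps ** (-gamma)
```

With real data, `predicted_runtime(0.01)` would give the kind of extrapolated estimate used when the simulation itself does not reach the target accuracy.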

Although the simulation itself does not reach ϵ = 0.01, learning curves for different bond dimensions ranging from D = 600 to D = 3000 collapse into a single curve, which implies the adequacy to estimate runtime according to the obtained scaling law. All DMRG simulations are executed using ITensor library61.

We remark that it is judicious to select the DMRG algorithm for 2d models, even though the formal complexity of the number of parameters in MPS is expected to increase exponentially with system size N, owing to its intrinsic 1d-oriented structure. Indeed, one may consider other tensor network states that are designed for 2d systems, such as the Projected Entangled Pair States (PEPS)25,26. When one uses the PEPS, the bond dimension is anticipated to scale as \(D=O(\log (N))\) for gapped or gapless non-critical systems and D = O(poly(N)) for critical systems27,28,29 to represent the ground state with fixed total energy accuracy of ϵ = O(1) (it is important to note that the former would be D = O(1) if considering a fixed energy density). Therefore, in the asymptotic limit, the scaling of the number of parameters of the PEPS is exponentially better than that of the MPS. Nonetheless, in actual calculations, the overhead involved in simulating the ground state with PEPS is substantially high, to the extent that there are practically no scenarios where the runtime of the variational PEPS algorithm outperforms that of the DMRG algorithm for our target models.

Overview of quantum resource estimation

Quantum phase estimation (QPE) is a quantum algorithm designed to extract the eigenphase ϕ of a given unitary U by utilizing ancilla qubits to indirectly read out the complex phase of the target system. More concretely, given a trial state \(\left\vert \psi \right\rangle\) whose fidelity with the k-th eigenstate \(\left\vert k\right\rangle\) of the unitary is given as \({f}_{k}=| \langle k| \psi \rangle {| }^{2}\), a single run of QPE projects the state to \(\left\vert k\right\rangle\) with probability \({f}_{k}\), and yields a random variable \(\hat{\phi }\) which corresponds to an m-digit readout of \({\phi }_{k}\).
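To make the m-digit readout concrete, the following minimal sketch evaluates the outcome distribution of textbook QPE. It assumes the input is an exact eigenstate (that is, fidelity 1 with a single eigenstate) with eigenphase `phi` expressed as a fraction in [0, 1); the function name and parameters are hypothetical and chosen only for illustration.

```python
import numpy as np

def qpe_distribution(phi, m):
    """Probability of each m-bit readout y / 2**m for an exact eigenstate
    with eigenphase phi in [0, 1), under textbook QPE."""
    n_out = 2 ** m
    y = np.arange(n_out)   # possible m-bit readouts
    k = np.arange(n_out)   # summation index of the inverse QFT
    # Amplitude of outcome y: (1 / 2**m) * sum_k exp(2*pi*i*k*(phi - y / 2**m))
    phases = np.exp(2j * np.pi * np.outer(phi - y / n_out, k))
    amps = phases.sum(axis=1) / n_out
    return np.abs(amps) ** 2

probs = qpe_distribution(phi=0.3, m=5)
best = int(np.argmax(probs))   # most likely readout; best / 2**5 approximates phi
```

For a trial state that overlaps several eigenstates, the distribution would be the mixture of such curves weighted by the fidelities.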

It was originally proposed by ref. 30 that eigenenergies of a given Hamiltonian can be computed efficiently via QPE by taking advantage of quantum computers to perform Hamiltonian simulation, e.g., \(U=\exp (-iH\tau )\). To elucidate this concept, it is beneficial to express the gate complexity for the QPE algorithm, as schematically shown in Fig. 3, as

\[C \sim {C}_{{\rm{SP}}}+{C}_{{\rm{HS}}}+{C}_{{{\rm{QFT}}}^{\dagger }},\tag{1}\]

where we have defined \({C}_{{\rm{SP}}}\) as the cost for state preparation, \({C}_{{\rm{HS}}}\) for the controlled Hamiltonian simulation, and \({C}_{{{{{\rm{QFT}}}}}^{{\dagger} }}\) for the inverse quantum Fourier transformation, respectively (See Supplementary Note 4 for details). The third term \({C}_{{{{{\rm{QFT}}}}}^{{\dagger} }}\) is expected to be the least problematic with \({C}_{{{{{\rm{QFT}}}}}^{{\dagger} }}=O(\log (N))\), while the second term is typically evaluated as \({C}_{{\rm{HS}}}=O({\rm{poly}}(N))\) when the Hamiltonian is, for instance, sparse, local, or constituted from polynomially many Pauli terms. Conversely, the scaling of the first term \({C}_{{\rm{SP}}}\) is markedly nontrivial. In fact, the ground state preparation of a local Hamiltonian generally necessitates exponential cost, which is also related to the fact that the ground state energy calculation of a local Hamiltonian is categorized within the complexity class of QMA-complete31,32.

State preparation cost in quantum algorithms

Although the aforementioned argument seems rather formidable, it is important to note that the QMA-completeness pertains to the worst-case scenario. Meanwhile, the average-case hardness in translationally invariant lattice Hamiltonians remains an open problem, and furthermore we have no means to predict the complexity under specific problem instances. In this context, it is widely believed that a significant number of ground states that are of substantial interest in condensed matter problems can be readily prepared with a polynomial cost33. In this work, we take a further step to argue that the state preparation cost can be considered negligible as CSP ≪ CHS for our specific target models, namely the gapless spin liquid state in the J1-J2 Heisenberg model or the antiferromagnetic state in the Fermi-Hubbard model. Our argument is based on numerical findings combined with upper bounds on the complexity, while we leave the theoretical derivation for scaling (e.g. Eq. (4)) as an open problem.

For concreteness, we focus on the scheme of the Adiabatic State Preparation (ASP) as a deterministic method to prepare the ground state through a time evolution of period \({t}_{{\rm{ASP}}}\). We introduce a time-dependent interpolating function \(s(t):{\mathbb{R}}\mapsto [0,1]\) with \(s(0)=0\) and \(s({t}_{{\rm{ASP}}})=1\) such that the ground state is prepared via the time-dependent Schrödinger equation given by

\[i\frac{\partial }{\partial t}\left\vert \psi (t)\right\rangle =H(t)\left\vert \psi (t)\right\rangle ,\tag{2}\]

where \(H(t)=H(s(t))=s{H}_{f}+(1-s){H}_{0}\) for the target Hamiltonian \({H}_{f}\) and the initial Hamiltonian \({H}_{0}\). We assume that the ground state of \({H}_{0}\) can be prepared efficiently, and take it as the initial state of the ASP. Early studies suggested that a sufficient (but not necessary) condition for preparing the target ground state scales as \({t}_{{\rm{ASP}}}=O(1/{\epsilon }_{f}{\Delta }^{3})\)34,35,36, where \({\epsilon }_{f}=1-| \langle {\psi }_{{\rm{GS}}}| \psi ({t}_{{\rm{ASP}}})\rangle |\) is the target infidelity and Δ is the spectral gap. This has been refined in recent research as

Two conditions independently achieve the optimality with respect to Δ and ϵf. Evidently, the ASP algorithm can prepare the ground state efficiently if the spectral gap is constant or polynomially small as Δ = O(1/Nα).
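As a toy illustration of how the infidelity depends on the ASP duration, the sketch below integrates the Schrödinger equation for the interpolation H(s) = sHf + (1 − s)H0 with a linear schedule. The single-qubit Hamiltonians H0 = −X and Hf = −Z, the step count, and the function names are hypothetical choices made only for this example; they are not the models or the numerical method used in this work.

```python
import numpy as np

X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
H0, Hf = -X, -Z   # toy initial and target Hamiltonians (hypothetical choice)

def ground_state(H):
    """Eigenvector belonging to the lowest eigenvalue of a Hermitian matrix."""
    _, vecs = np.linalg.eigh(H)
    return vecs[:, 0]

def asp_infidelity(t_asp, n_steps=2000):
    """Evolve the ground state of H0 under H(s) = s*Hf + (1-s)*H0 with the
    linear schedule s = t/t_asp; return 1 - |<psi_GS|psi(t_asp)>|."""
    psi = ground_state(H0).astype(complex)
    dt = t_asp / n_steps
    for k in range(n_steps):
        s = (k + 0.5) / n_steps   # schedule value at the midpoint of the step
        evals, evecs = np.linalg.eigh(s * Hf + (1 - s) * H0)
        # Apply exp(-i H dt) through the eigendecomposition of H
        psi = evecs @ (np.exp(-1j * evals * dt) * (evecs.conj().T @ psi))
    return 1.0 - abs(np.vdot(ground_state(Hf), psi))

fast, slow = asp_infidelity(1.0), asp_infidelity(20.0)
```

In this toy setting the longer evolution (`slow`) ends much closer to the target ground state than the short one (`fast`), which is the qualitative behavior the adiabatic conditions quantify.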

For both of our target models, numerous works suggest that α = 1/2 (refs. 37,38,39), which is one of the most typical scalings in 2d gapless/critical systems such as the spontaneous symmetry broken phase with the Goldstone mode and critical phenomena described by 2d conformal field theory. With the polynomial scaling of Δ granted, we now ask what the scaling of \({C}_{{\rm{SP}}}\) is, and how it compares to the other constituents, namely \({C}_{{\rm{HS}}}\) and \({C}_{{{\rm{QFT}}}^{\dagger }}\).

In order to estimate the actual cost, we have numerically calculated the \({t}_{{\rm{ASP}}}\) required to achieve the target fidelity (See Supplementary Note 3 for details) up to 48 qubits. With the aim of providing a quantitative way to estimate the scaling of \({t}_{{\rm{ASP}}}\) in larger sizes, we reasonably consider the combination of the upper bounds provided in Eq. (3) as

\[{t}_{{\rm{ASP}}}=O\left(\frac{1}{{\Delta }^{\beta }}\log (1/{\epsilon }_{f})\right).\tag{4}\]

Figure 4a, b illustrates the scaling of tASP concerning ϵf and Δ, respectively. Remarkably, we find that Eq. (4) with β = 1.5 gives an accurate prediction for the 2d J1-J2 Heisenberg model. This implies that the ASP time scaling is \({t}_{{{{\rm{ASP}}}}}=O({N}^{\beta /2}\log (1/{\epsilon }_{f}))\), which yields gate complexity of \(O({N}^{1+\beta /2}{{{\rm{polylog}}}}(N/{\epsilon }_{f}))\) under optimal simulation for time-dependent Hamiltonians40,41. Thus, CSP proves to be subdominant in comparison to CHS if β < 2, which is suggested by our simulation. Furthermore, under the assumption of Eq. (4), we can estimate tASP to be at most a few tens for a practical system size of N ~ 100 under infidelity of ϵf ~ 0.1. This is fairly negligible compared to the controlled Hamiltonian simulation, which requires a dynamics duration on the order of tens of thousands in our target models. (One must note that there is a slight difference between the two schemes. Namely, the time-dependent Hamiltonian simulation involves the quantum signal processing using the block-encoding of H(t), while the qubitization for the phase estimation only requires the block-encoding. This implies that the T-count in the former would encounter overhead as seen in the Taylorization technique. However, we confirm that this overhead, determined by the degree of the polynomial in the quantum signal processing, is on the order of tens (ref. 41), so that the required T-count for state preparation is still suppressed by orders of magnitude compared to the qubitization.) This outcome stems from the fact that the controlled Hamiltonian simulation for the purpose of eigenenergy extraction obeys the Heisenberg limit as CHS = O(1/ϵ), a consequence of the time-energy uncertainty relation. This is in contrast to the state preparation, which is not related to any quantum measurement, and thus no such polynomial lower bound exists.
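The exponents quoted above follow from a short substitution, spelled out here as a worked sketch. It assumes the gap scaling \(\Delta \propto N^{-\alpha}\) with \(\alpha = 1/2\) and the empirical form \(t_{\rm ASP} \propto \Delta^{-\beta}\log(1/\epsilon_f)\); the factor of N in the gate complexity is taken directly from the expression in the text.

```latex
\begin{align}
  \Delta &\propto N^{-\alpha}, \qquad \alpha = 1/2, \\
  t_{\rm ASP} &\propto \Delta^{-\beta}\log(1/\epsilon_f)
               \propto N^{\alpha\beta}\log(1/\epsilon_f)
               = N^{\beta/2}\log(1/\epsilon_f), \\
  \beta = 1.5 \;&\Rightarrow\; t_{\rm ASP} \propto N^{0.75}\log(1/\epsilon_f), \qquad
  C_{\rm SP} = O\!\left(N^{1+\beta/2}\,{\rm polylog}(N/\epsilon_f)\right)
             = O\!\left(N^{1.75}\,{\rm polylog}(N/\epsilon_f)\right).
\end{align}
```

The stated condition β < 2 is equivalent to the exponent 1 + β/2 staying below 2.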

(a) Scaling with the target infidelity ϵf for system size of 4 × 4 lattice. The interpolation function is taken so that the derivative up to κ-th order is zero at t = 0, tASP. Here we consider the linear interpolation for κ = 0, and for smoother ones we take \({{{{\mathcal{S}}}}}_{\kappa }\) and \({{{{\mathcal{B}}}}}_{\kappa }\) that are defined from sinusoidal and incomplete Beta functions, respectively (see Supplementary Note 3). While smoothness for higher κ ensures logarithmic scaling for smaller ϵf, for the current target model, we find that it suffices to take s(t) whose derivative vanishes up to κ = 2 at t = 0, tASP. (b) Scaling with the spectral gap Δ. Here we perform the ASP using the MPS state for system size of Lx × Ly, where results for Lx = 2, 4, 6 are shown in cyan, blue, and green data points. We find that the scaling exhibits \({t}_{{\rm{ASP}}}\propto 1/{\Delta }^{\beta }\) with β ~ 1.5.

Dominant quantum resource

As we have seen in the previous sections, the dominant contribution to the quantum resource is CHS, namely the controlled Hamiltonian simulation from which the eigenenergy phase is extracted into the ancilla qubits. Fortunately, with the scope of performing quantum resource estimation for the QPE and digital quantum simulation, numerous works have been devoted to analyzing the error scaling of various Hamiltonian simulation techniques, in particular the Trotter-based methods42,43,44. Nevertheless, we point out that crucial questions remain unclear; (A) which technique is the best practice to achieve the earliest quantum advantage for condensed matter problems, and (B) at which point does the crossover occur?

Here we perform resource estimation under the following common assumptions: (1) logical qubits are encoded using the formalism of surface codes45; (2) quantum gate implementation is based on the Clifford+T formalism. Initially, we address the first question (A) by comparing the total number of T-gates, or T-count, across various Hamiltonian simulation algorithms, as the application of a T-gate involves a time-consuming procedure known as magic-state distillation. Although this is not necessarily the case, this procedure is considered to dominate the runtime in many realistic setups. Therefore, we argue that the T-count provides sufficient information to determine the best Hamiltonian simulation technique. Then, with the aim of addressing the second question (B), we further perform high-resolution analysis on the runtime. In particular, we consider concrete quantum circuit compilation with a specific physical/logical qubit configuration compatible with the surface code implemented on a square lattice.

Let us first compute the T-counts to compare the state-of-the-art Hamiltonian simulation techniques: (randomized) Trotter product formula46,47, qDRIFT44, Taylorization48,49,50, and qubitization40. The former two commonly rely on the Trotter decomposition to approximate the unitary time evolution with sequential application of (controlled) Pauli rotations, while the latter two, dubbed “post-Trotter methods,” are instead based on the technique called block-encoding, which utilizes ancillary qubits to encode desired (non-unitary) operations on target systems (See Supplementary Note 5). While post-Trotter methods are known to be exponentially more efficient in terms of gate complexity regarding the simulation accuracy48, it is nontrivial to ask which is the best practice in the crossover regime, where the prefactor plays a significant role.
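To illustrate why the simulation accuracy enters the cost of Trotter-based methods polynomially, the following sketch measures the error of a second-order product formula on a small toy Hamiltonian. The two-qubit operators `A` and `B`, the evolution time, and the function names are hypothetical and unrelated to the circuits compiled in this work.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
A = np.kron(X, X)                        # toy two-qubit terms (hypothetical)
B = np.kron(Z, I2) + np.kron(I2, Z)      # A and B do not commute

def expm_herm(H, t):
    """exp(-i H t) for a Hermitian matrix H via eigendecomposition."""
    evals, evecs = np.linalg.eigh(H)
    return (evecs * np.exp(-1j * evals * t)) @ evecs.conj().T

def trotter2_error(t, r):
    """Spectral-norm error of r second-order Trotter steps for H = A + B."""
    dt = t / r
    step = expm_herm(A, dt / 2) @ expm_herm(B, dt) @ expm_herm(A, dt / 2)
    approx = np.linalg.matrix_power(step, r)
    return np.linalg.norm(approx - expm_herm(A + B, t), ord=2)

errors = {r: trotter2_error(1.0, r) for r in (20, 40, 80)}
```

Doubling the number of steps reduces the error by roughly a factor of four in this example, so the step count (and hence the gate count) grows as an inverse power of the target error, whereas block-encoding-based methods depend only logarithmically on the simulation accuracy.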

We have compiled quantum circuits based on existing error analysis to reveal the required T-counts (See Supplementary Notes 4, 6, and 7). From the results presented in Table 1, we find that the qubitization algorithm provides the most efficient implementation in order to reach the target energy accuracy ϵ = 0.01. Although the post-Trotter methods, i.e., the Taylorization and qubitization algorithms, require \(O(\log (N))\) additional ancillary qubits to perform the block encoding, we do not regard this overhead as a serious roadblock, since the target system itself and the quantum Fourier transformation require O(N) and \(O(\log (N/\epsilon ))\) qubits, respectively. In fact, as we show in Fig. 5, the qubitization algorithms are efficient in the near-crosspoint regime in physical qubit count as well, due to the suppressed code distance (see Supplementary Note 9 for details).

Here, we estimate the ground state energy up to target accuracy ϵ = 0.01 for 2d J1-J2 Heisenberg model (J2/J1 = 0.5) and 2d Fermi-Hubbard model (U/t = 4), both with lattice size of 10 × 10. The blue, orange, green, and orange points indicate the results that employ qDRIFT, 2nd-order random Trotter, Taylorization, and qubitization, where the circle and star markers denote the spin and fermionic models, respectively. Two flavors of the qubitization, the sequential and newly proposed product-wise construction (see Supplementary Note 5 for details), are discriminated by filled and unfilled markers. Note that Nph here does not account for the magic state factories, which are incorporated in Fig. 7.

We also mention that, for the 2d Fermi-Hubbard model, there exist some specialized Trotter-based methods that improve the performance significantly16,17. For instance, the T-count of the QPE based on the state-of-the-art PLAQ method proposed in ref. 17 can be estimated to be approximately \(4 \times 10^{8}\) for a 10 × 10 system under ϵ = 0.01, which is slightly higher than the T-count required for the qubitization technique. Since the scaling of PLAQ is similar to the 2nd order Trotter method, we expect that the qubitization remains the best for all system sizes N.

The above results motivate us to study the quantum-classical crossover entirely using the qubitization technique as the subroutine for the QPE. As is detailed in Supplementary Note 8, our runtime analysis involves the following steps:

- (I) Hardware configuration. Determine the architecture of quantum computers (e.g., number of magic state factories, qubit connectivity, etc.).

- (II) Circuit synthesis and transpilation. Translate high-level description of quantum circuits to Clifford+T formalism with the provided optimization level.

- (III) Compilation to executable instructions. Decompose logical gates into the sequence of executable instruction sets based on lattice surgery.

It should be noted that the ordinary runtime estimation only involves step (II): one simply multiplies the T-count by the execution time of a T-gate, as \({N}_{T}{t}_{T}\). However, we emphasize that this estimation method misses several vital factors in the time analysis, which may eventually lead to a deviation of one or two orders of magnitude. In sharp contrast, our runtime analysis comprehensively takes all steps into account to yield a reliable estimation under realistic quantum computing platforms.
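For reference, the simple step-(II)-only estimate mentioned above amounts to a single multiplication. The sketch below evaluates it for a T-count of order 4 × 10^8 (the PLAQ figure quoted earlier) at T-gate consumption rates of 1 kHz and 1 MHz; the function name is hypothetical, and the numbers are illustrative rather than the end-to-end estimates of this work.

```python
def naive_runtime_seconds(t_count, t_gate_rate_hz):
    """Step-(II)-only estimate: runtime = N_T * t_T, with t_T = 1 / rate."""
    return t_count / t_gate_rate_hz

# Illustrative inputs only: a T-count of order 4e8 and two assumed
# T-gate consumption rates.
t_count = 4e8
slow = naive_runtime_seconds(t_count, 1e3)   # 1 kHz -> 4e5 seconds
fast = naive_runtime_seconds(t_count, 1e6)   # 1 MHz -> 400 seconds
slow_days = slow / 86400.0                   # about 4.6 days
```

The three orders of magnitude between the two results show how sensitive this kind of estimate is to the assumed T-gate rate, which is one reason a compilation-level analysis is needed.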

Crossover under \(p = 10^{-3}\)

Figure 6 shows the runtime of classical/quantum algorithms simulating the ground state energy in 2d J1-J2 Heisenberg model and 2d Fermi-Hubbard model. In both figures, we observe clear evidence of quantum-classical crosspoint below a hundred-qubit system (at lattice size of 10 × 10 and 6 × 6, respectively) within plausible runtime. Furthermore, a significant difference from ab initio quantum chemistry calculations is highlighted in the feasibility of system size N ~ 1000 logical qubit simulations, especially in simulation of 2d Heisenberg model that utilizes the parallelization technique for the oracles (See Supplementary Note 8 for details).

Here we show the results for (a) 2d J1-J2 Heisenberg model of J2/J1 = 0.5 and (b) 2d Fermi-Hubbard model of U/t = 4. The blue and red circles are the runtime estimates for the quantum phase estimation using the qubitization technique as a subroutine, whose analysis involves quantum circuit compilation of all the steps (I), (II), and (III). All the gates are compiled under the Clifford+T formalism with each logical qubit encoded by the surface code with code distance d around 17 to 25 assuming a physical error rate of \(p = 10^{-3}\) (See Supplementary Note 9). Here, the number of magic state factories nF and number of parallelization threads nth are taken as (nF, nth) = (1, 1) and (16, 16) for “Single” and “Parallel,” respectively. The dotted and dotted chain lines are estimates that only involve the analysis of step (II); the calculation is based solely on the T-count of the algorithm with realistic T-gate consumption rates of 1 kHz and 1 MHz, respectively. The green stars and purple triangles are data obtained from the actual simulation results of classical DMRG and variational PEPS algorithms, respectively, with the shaded region denoting the potential room for improvement by using the most advanced computational resource (See Supplementary Note 2). Note that the system size is related to the lattice size M × M as \(N = 2{M}^{2}\) in the Fermi-Hubbard model.

For concreteness, let us focus on the simulation for systems with lattice size of 10 × 10, where we find the quantum algorithm to outperform the classical one. Using the error scaling, we find that the DMRG simulation is estimated to take about \(10^{5}\) and \(10^{9}\) seconds in 2d Heisenberg and 2d Fermi-Hubbard models, respectively. On the other hand, the estimation based on the dedicated quantum circuit compilation with the most pessimistic equipment (denoted as “Single” in Fig. 6) achieves runtime below \(10^{5}\) seconds in both models. This further improves by an order of magnitude when we assume a more abundant quantum resource. Concretely, using a quantum computer with multiple magic state factories (nF = 16) that performs multi-thread execution of the qubitization algorithm (nth = 16), the quantum advantage can be achieved within a computational time frame of several hours. We find it informative to also display the usual T-count-based estimation; it is indeed reasonable to assume a clock rate of 1–10 kHz for single-thread execution, while its precise value fluctuates depending on the problem instance.

We note that the classical algorithm (DMRG) experiences an exponential increase in the runtime to reach the desired total energy accuracy ϵ = 0.01. This outcome is somewhat expected, since one must force the MPS to represent 2d quantum correlations in 1d via the cylindrical boundary condition38,51. Meanwhile, the prefactor is significantly lower than that of other tensor-network-based methods, enabling its practical use in discussing the quantum-classical crossover. For instance, although the formal scaling is exponentially better in the variational PEPS algorithm, the runtime in the 2d J1-J2 Heisenberg model exceeds \(10^{4}\) seconds already for the 6 × 6 model, while the DMRG algorithm consumes only \(10^{2}\) seconds (See Fig. 6a). Even if we assume that the bond dimension of PEPS can be kept constant for larger N, the crossover between DMRG and variational PEPS occurs only above the size of 12 × 12. As we have discussed previously, we reasonably expect \(D=O(\log (N))\) for simulation of fixed total accuracy, and furthermore expect that the number of variational optimization steps also scales polynomially with N. This implies that the scaling is much worse than O(N); in fact, we have used a constant value of D for L = 4, 6, 8 and observe that the scaling is already worse than cubic in our setup. Given such a scaling, we conclude that DMRG is better suited than the variational PEPS for investigating the quantum-classical crossover, and also that quantum algorithms with quadratic scaling in N run faster in the asymptotic limit.

Portfolio of crossover under various algorithmic/hardware setups

It is informative to modify the hardware/algorithmic requirements to explore the variation of quantum-classical crosspoint. For instance, the code distance of the surface code depends on p and ϵ as (See Supplementary Note 9)

Note that this also affects the number of physical qubits via the number of physical qubits per logical qubit, \(2{d}^{2}\). We visualize the above relationship explicitly in Fig. 7, which considers the near-crosspoint regime of the 2d J1-J2 Heisenberg model and 2d Fermi-Hubbard model. It can be seen from Fig. 7a, b, d, e that the improvement of the error rate directly triggers the reduction of the required code distance, which results in a significant suppression of the number of physical qubits. This is even better captured by Fig. 7c, f. By achieving a physical error rate of \(p = 10^{-4}\) or \(10^{-5}\), for instance, one may realize a 4-fold or 10-fold reduction of the number of physical qubits.
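The link between physical error rate, code distance, and the 2d² qubit overhead can be made concrete with a rough sketch. The logical-error heuristic used below, prefactor × (p/p_th)^((d+1)/2) with threshold p_th = 10^-2 and prefactor 0.1, is a commonly used surface-code rule of thumb and is an assumption here; it is not the relation derived in this work, and the logical error budget `target` is a hypothetical value chosen for illustration.

```python
def min_code_distance(p, target_logical_error, p_th=1e-2, prefactor=0.1):
    """Smallest odd d with prefactor * (p/p_th)**((d+1)/2) <= target.
    The heuristic and its constants are assumptions, not from this work."""
    d = 3
    while prefactor * (p / p_th) ** ((d + 1) / 2) > target_logical_error:
        d += 2
    return d

def physical_qubits_per_logical(d):
    """Surface-code patch size 2*d**2, as used in the text."""
    return 2 * d * d

target = 5e-12  # hypothetical per-operation logical error budget
distances = {p: min_code_distance(p, target) for p in (1e-3, 1e-4, 1e-5)}
qubits = {p: physical_qubits_per_logical(d) for p, d in distances.items()}
```

Under these assumptions the distances come out as 21, 11, and 7, so the per-logical-qubit overhead drops from 882 to 242 and 98 physical qubits, in rough agreement with the 4-fold and 10-fold reductions quoted above.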

The panels denote (a) code distance d and (b) number of physical qubits Nph required to simulate the ground state of 2d J1-J2 Heisenberg model with lattice size of 10 × 10 with J2 = 0.5. Here, the qubit plane is assumed to be organized as (nF, #thread) = (1, 1). The setup used in the main text, ϵ = 0.01 and \(p = 10^{-3}\), is indicated by the orange stars. (c) Focused plot at ϵ = 0.01. Blue and red points show the results for code distance d and Nph, respectively, where the filled and empty markers correspond to floor plans with (nF, #thread) = (1, 1) and (16, 16), respectively. (d–f) Plots for 2d Fermi-Hubbard model of lattice size 6 × 6 with U = 4, corresponding to (a–c) for the Heisenberg model.

The logarithmic dependence for ϵ in Eq. (5) implies that the target accuracy does not significantly affect the qubit counts; it is rather associated with the runtime, since the total runtime scaling is given as

which now shows polynomial dependence on ϵ. Note that this scaling is based on multiplying the gate complexity by a factor of d, since we assumed that the runtime is dominated by the magic state generation, whose duration is proportional to the code distance d, rather than by the classical postprocessing (see Supplementary Notes 8 and 9). As is highlighted in Fig. 8, we observe that in the regime with higher ϵ, the computation is completed within minutes. However, we do not regard such a regime as an optimal field for the quantum advantage. The runtime of classical algorithms typically shows a higher-power dependence on ϵ, denoted as \(O(1/{\epsilon }^{\gamma })\), with γ ~ 2 for the J1-J2 Heisenberg model and γ ~ 4 for the Fermi-Hubbard model (see Supplementary Note 2), both of which imply that classical algorithms are likely to run even faster than quantum algorithms under large ϵ values. We thus argue that the setup of ϵ = 0.01 provides a platform that is both plausible for the quantum algorithm and challenging for the classical algorithm.
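The argument about large ϵ can be made explicit with a short estimate. It assumes a classical runtime \(t_{\rm cl} \propto \epsilon^{-\gamma}\) and a quantum runtime following the Heisenberg-limited scaling \(t_{\rm q} \propto \epsilon^{-1}\), with logarithmic factors neglected; the symbols \(t_{\rm cl}\) and \(t_{\rm q}\) are introduced here only for illustration.

```latex
\begin{align}
  \frac{t_{\rm cl}}{t_{\rm q}} &\propto \frac{\epsilon^{-\gamma}}{\epsilon^{-1}}
    = \epsilon^{-(\gamma-1)}, \\
  \left.\frac{t_{\rm cl}}{t_{\rm q}}\right|_{\epsilon=0.1}
  \Big/ \left.\frac{t_{\rm cl}}{t_{\rm q}}\right|_{\epsilon=0.01}
    &= 10^{-(\gamma-1)} =
    \begin{cases}
      10^{-1} & (\gamma \simeq 2,\ \text{Heisenberg}),\\
      10^{-3} & (\gamma \simeq 4,\ \text{Fermi-Hubbard}).
    \end{cases}
\end{align}
```

Relaxing the target accuracy by one order of magnitude therefore shrinks the classical-to-quantum runtime ratio by one to three orders of magnitude under these assumptions.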

Panels (a) and (c) show results for 2d J1-J2 Heisenberg model of lattice size 10 × 10 with J2 = 0.5, while (b) and (d) show results for 2d Fermi-Hubbard model of lattice size 6 × 6 with U = 4. The floor plan of the qubit plane is assumed as (nF, #thread) = (1, 1) and (16, 16) for (a, b) and (c, d), respectively. The setup ϵ = 0.01 and p = \({10}^{-3}\), employed in Fig. 6, is shown by the black open stars.

Discussion

Our work has presented a detailed analysis of the quantum-classical crossover in condensed matter physics, specifically pinpointing the juncture where the initial applications of fault-tolerant quantum computers demonstrate advantages over classical algorithms. Unlike previous studies, which primarily focused on exact simulation techniques to represent classical methods, we have proposed utilizing error scaling to estimate runtime using one of the most powerful variational simulation methods, the DMRG algorithm. We have also scrutinized the execution times of quantum algorithms, conducting a high-resolution analysis that takes into account the topological restrictions on physical qubit connectivity and the parallelization of Hamiltonian simulation oracles, among other factors. This rigorous analysis has led us to anticipate that the crossover point occurs within a feasible runtime of a few hours when the system size N reaches about a hundred. Our work serves as a reliable guiding principle for establishing milestones across various platforms of quantum technologies.

Various avenues for future exploration can be envisioned. We highlight the primary directions here. Firstly, expanding the scope of runtime analysis to encompass a wider variety of classical methods is imperative. In this study, we concentrated on the DMRG and variational PEPS algorithms due to their simplicity in runtime analysis. However, other quantum many-body computation methods such as quantum Monte Carlo (e.g., path-integral Monte Carlo and variational Monte Carlo), coupled-cluster techniques, or other tensor-network-based methods hold equal importance. In particular, devising a systematic method to conduct estimates on Monte Carlo methods will be a nontrivial task.

Secondly, there is a pressing need to further refine quantum simulation algorithms that are designed to extract physics beyond the eigenenergy, such as the spatial/temporal correlation function, nonequilibrium phenomena, and finite temperature properties, among others. Undertaking error analysis on these objectives could prove to be highly rewarding.

Thirdly, it is important to survey the optimal method of state preparation. While we have exclusively considered the ASP, there are numerous options including the Krylov technique52, recursive application of phase estimation53, and sparse-vector encoding technique54. Since the efficacy of state preparation methods heavily relies on individual instances, it would be crucial to elaborate on the resource estimation in order to discuss quantum-classical crossover in other fields including high-energy physics, nonequilibrium physics, and so on.

Fourthly, it is interesting to seek the possibility of reducing the number of physical qubits by replacing the surface code with other quantum error-correcting codes with a better encoding rate55. For instance, there have been suggestions that the quantum LDPC codes may enable us to reduce the number of physical qubits by a factor of tens to hundreds55. Meanwhile, there are additional overheads in implementation and logical operations, which may increase the runtime and problem sizes for demonstrating quantum advantage.

Lastly, exploring the possibilities of a classical-quantum hybrid approach is an intriguing direction. This could involve twirling of Solovay-Kitaev errors into stochastic errors that can be eliminated by quantum error mitigation techniques originally developed for near-future quantum computers without error correction56,57.

Methods

Target models

Condensed matter physics deals with the intricate interplay between microscopic degrees of freedom such as spins and electrons, which, in many cases, form translationally symmetric structures. Our focus is on lattice systems that not only reveal the complex and profound nature of quantum many-body phenomena, but also remain unsolved despite intensive study (see Supplementary Note 1):

(1) Antiferromagnetic Heisenberg model. Paradigmatic quantum spin models frequently involve frustration between interactions as a source of complex quantum correlation. One highly complex example is the spin-1/2 J1-J2 Heisenberg model on the square lattice, whose ground state property has remained a persistent problem over decades:

$$H={J}_{1}\mathop{\sum}\limits_{\langle p,q\rangle }\mathop{\sum}\limits_{\alpha \in \{X,Y,Z\}}{S}_{p}^{\alpha }{S}_{q}^{\alpha }+{J}_{2}\mathop{\sum}\limits_{\langle \langle p,q\rangle \rangle }\mathop{\sum}\limits_{\alpha \in \{X,Y,Z\}}{S}_{p}^{\alpha }{S}_{q}^{\alpha },$$

where 〈 ⋅ 〉 and 〈〈 ⋅ 〉〉 denote pairs of nearest-neighboring and next-nearest-neighboring sites, respectively, that are coupled via the Heisenberg interaction with amplitudes J1 and J2, and \({S}_{p}^{\alpha }\) is the α-component of the spin-1/2 operator on the p-th site. Due to the competition between the J1 and J2 interactions, the formation of any long-range order is hindered at J2/J1 ~ 0.5, at which a quantum spin liquid phase is expected to be realized39,58,59. In the following we set J2 = 0.5 with J1 set to unity, and focus on cylindrical boundary conditions.

(2) Fermi-Hubbard model. One of the most successful fermionic models that captures the essence of electronic and magnetic behavior in quantum materials is the Fermi-Hubbard model. Despite its concise construction, it exhibits a variety of features such as unconventional superfluidity/superconductivity, quantum magnetism, and an interaction-driven insulating phase (or Mott insulator)60. With this in mind, we consider the following half-filled Hamiltonian:

$$H=-t\mathop{\sum}\limits_{\langle p,q\rangle ,\sigma }({c}_{p,\sigma }^{{\dagger} }{c}_{q,\sigma }+\,{{\mbox{h.c.}}}\,)+U\mathop{\sum}\limits_{p}{c}_{p,\uparrow }^{{\dagger} }{c}_{p,\uparrow }{c}_{p,\downarrow }^{{\dagger} }{c}_{p,\downarrow },$$

where t = 1 is the hopping amplitude and U is the repulsive onsite potential, and \({c}_{p,\sigma }^{({\dagger} )}\) is the annihilation (creation) operator for a fermion that resides on site p with spin σ. Here the summation on the hopping is taken over all pairs of nearest-neighboring sites 〈p, q〉. Note that one may further introduce a nontrivial chemical potential to explore cases that are not half-filled, although we leave this for future work.
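The two Hamiltonians above can be written down explicitly for very small lattices, which is a convenient sanity check before moving to tensor-network or quantum-algorithm estimates. The following is a minimal sketch using dense matrices, not the method used in this work. Several choices are assumptions made for illustration: the cylinder is taken to be periodic along y and open along x, sites are ordered as x·Ly + y, fermions are encoded by a Jordan-Wigner transformation with mode index 2·site + spin, and all function names are hypothetical.

```python
from functools import reduce

import numpy as np

I2 = np.eye(2, dtype=complex)
SX = np.array([[0, 1], [1, 0]], dtype=complex) / 2
SY = np.array([[0, -1j], [1j, 0]], dtype=complex) / 2
SZ = np.array([[1, 0], [0, -1]], dtype=complex) / 2
PAULI_Z = 2 * SZ
LOWER = np.array([[0, 1], [0, 0]], dtype=complex)  # maps occupied |1> to empty |0>


def kron_chain(ops):
    """Tensor product of a list of single-site operators."""
    return reduce(np.kron, ops)


def cylinder_bonds(lx, ly):
    """NN and NNN bonds of an lx-by-ly square lattice, periodic along y only."""
    if ly < 3:
        raise ValueError("ly >= 3 avoids double-counted periodic bonds")

    def site(x, y):
        return x * ly + (y % ly)

    nn, nnn = [], []
    for x in range(lx):
        for y in range(ly):
            nn.append((site(x, y), site(x, y + 1)))            # around the cylinder
            if x + 1 < lx:
                nn.append((site(x, y), site(x + 1, y)))        # along the axis
                nnn.append((site(x, y), site(x + 1, y + 1)))   # diagonal bonds
                nnn.append((site(x, y), site(x + 1, y - 1)))
    return nn, nnn


def heisenberg(n_sites, nn_bonds, nnn_bonds, j1=1.0, j2=0.5):
    """Dense J1-J2 Heisenberg Hamiltonian for spin-1/2 sites."""
    h = np.zeros((2**n_sites, 2**n_sites), dtype=complex)
    for coupling, bonds in ((j1, nn_bonds), (j2, nnn_bonds)):
        for p, q in bonds:
            for s in (SX, SY, SZ):
                ops = [I2] * n_sites
                ops[p], ops[q] = s, s
                h += coupling * kron_chain(ops)
    return h


def annihilator(mode, n_modes):
    """Jordan-Wigner annihilation operator: Z string followed by a lowering operator."""
    return kron_chain([PAULI_Z] * mode + [LOWER] + [I2] * (n_modes - mode - 1))


def fermi_hubbard(n_sites, nn_bonds, t=1.0, u=4.0):
    """Dense Fermi-Hubbard Hamiltonian on the full Fock space (all fillings)."""
    n_modes = 2 * n_sites
    c = [annihilator(m, n_modes) for m in range(n_modes)]
    h = np.zeros((2**n_modes, 2**n_modes), dtype=complex)
    for p, q in nn_bonds:
        for spin in (0, 1):
            hop = c[2 * p + spin].conj().T @ c[2 * q + spin]
            h -= t * (hop + hop.conj().T)
    for p in range(n_sites):
        n_up = c[2 * p].conj().T @ c[2 * p]
        n_dn = c[2 * p + 1].conj().T @ c[2 * p + 1]
        h += u * n_up @ n_dn
    return h


def half_filled_block(h, n_sites):
    """Restrict a number-conserving Hamiltonian to the sector with n_sites fermions."""
    keep = [i for i in range(h.shape[0]) if bin(i).count("1") == n_sites]
    return h[np.ix_(keep, keep)]


if __name__ == "__main__":
    nn, nnn = cylinder_bonds(2, 3)
    e_spin = np.linalg.eigvalsh(heisenberg(6, nn, nnn))[0]
    h_dimer = half_filled_block(fermi_hubbard(2, [(0, 1)], t=1.0, u=4.0), 2)
    e_fermi = np.linalg.eigvalsh(h_dimer)[0]
    print(f"2x3 cylinder, J2=0.5: E0 = {e_spin:.6f}")
    print(f"Hubbard dimer, U=4:   E0 = {e_fermi:.6f}")
```

The matrix dimension grows as 2^N for spins and 4^N for spinful fermions, so this construction is limited to a handful of sites. For the two-site Hubbard chain at half filling the lowest eigenvalue can be compared against the known closed form \((U-\sqrt{{U}^{2}+16{t}^{2}})/2\).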

Data availability

All study data are included in this article and Supplementary Materials.

Code availability

Codes are available upon request.

References

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

Zhong, H.-S. et al. Quantum computational advantage using photons. Science 370, 1460–1463 (2020).

Zhong, H.-S. et al. Phase-programmable gaussian boson sampling using stimulated squeezed light. Phys. Rev. Lett. 127, 180502 (2021).

Shor, P. W. Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM Rev. 41, 303–332 (1999).

Fowler, A. G., Mariantoni, M., Martinis, J. M. & Cleland, A. N. Surface codes: Towards practical large-scale quantum computation. Phys. Rev. A 86, 032324 (2012).

Gheorghiu, V. & Mosca, M. Benchmarking the quantum cryptanalysis of symmetric, public-key and hash-based cryptographic schemes. Preprint at https://arxiv.org/abs/1902.02332 (2019).

Gidney, C. & Ekerå, M. How to factor 2048 bit RSA integers in 8 hours using 20 million noisy qubits. Quantum 5, 433 (2021).

Rivest, R. L., Shamir, A. & Adleman, L. A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 21, 120–126 (1978).

Kerry, C. F. & Gallagher, P. D. Digital signature standard (DSS). FIPS PUB 186-4 (2013).

Sherrill, C. D., Manolopoulos, D. E., Martínez, T. J. & Michaelides, A. Electronic structure software. J. Chem. Phys. 153, 070401 (2020).

Reiher, M., Wiebe, N., Svore, K. M., Wecker, D. & Troyer, M. Elucidating reaction mechanisms on quantum computers. PNAS 114, 7555–7560 (2017).

Lee, J. et al. Even more efficient quantum computations of chemistry through tensor hypercontraction. PRX Quantum 2, 030305 (2021).

Goings, J. J. et al. Reliably assessing the electronic structure of cytochrome p450 on today’s classical computers and tomorrow’s quantum computers. PNAS 119, e2203533119 (2022).

Childs, A. M., Maslov, D., Nam, Y., Ross, N. J. & Su, Y. Toward the first quantum simulation with quantum speedup. PNAS 115, 9456–9461 (2018).

Beverland, M. E. et al. Assessing requirements to scale to practical quantum advantage. Preprint at https://arxiv.org/abs/2211.07629 (2022).

Kivlichan, I. D. et al. Improved Fault-Tolerant Quantum Simulation of Condensed-Phase Correlated Electrons via Trotterization. Quantum 4, 296 (2020).

Campbell, E. T. Early fault-tolerant simulations of the hubbard model. Quantum Sci. Technol. 7, 015007 (2021).

White, S. R. Density matrix formulation for quantum renormalization groups. Phys. Rev. Lett. 69, 2863–2866 (1992).

White, S. R. & Huse, D. A. Numerical renormalization-group study of low-lying eigenstates of the antiferromagnetic s=1 heisenberg chain. Phys. Rev. B 48, 3844–3852 (1993).

Östlund, S. & Rommer, S. Thermodynamic limit of density matrix renormalization. Phys. Rev. Lett. 75, 3537–3540 (1995).

Dukelsky, J., Martín-Delgado, M. A., Nishino, T. & Sierra, G. Equivalence of the variational matrix product method and the density matrix renormalization group applied to spin chains. Europhys. Lett. 43, 457–462 (1998).

Eisert, J., Cramer, M. & Plenio, M. B. Colloquium: Area laws for the entanglement entropy. Rev. Mod. Phys. 82, 277–306 (2010).

Wouters, S. & Van Neck, D. The density matrix renormalization group for ab initio quantum chemistry. Eur. Phys. J. D 68, 272 (2014).

Baiardi, A. & Reiher, M. The density matrix renormalization group in chemistry and molecular physics: Recent developments and new challenges. J. Chem. Phys. 152, 040903 (2020).

Nishino, T. et al. Two-Dimensional Tensor Product Variational Formulation. Prog. Theor. Phys. 105, 409–417 (2001).

Verstraete, F. & Cirac, J. I. Renormalization algorithms for quantum-many body systems in two and higher dimensions. Preprint at https://arxiv.org/abs/cond-mat/0407066 (2004).

Verstraete, F., Wolf, M. M., Perez-Garcia, D. & Cirac, J. I. Criticality, the area law, and the computational power of projected entangled pair states. Phys. Rev. Lett. 96, 220601 (2006).

Haghshenas, R. & Sheng, D. N. u(1)-symmetric infinite projected entangled-pair states study of the spin-1/2 square J1 − J2 heisenberg model. Phys. Rev. B 97, 174408 (2018).

Rader, M. & Läuchli, A. M. Finite correlation length scaling in lorentz-invariant gapless ipeps wave functions. Phys. Rev. X 8, 031030 (2018).

Abrams, D. S. & Lloyd, S. Quantum algorithm providing exponential speed increase for finding eigenvalues and eigenvectors. Phys. Rev. Lett. 83, 5162–5165 (1999).

Kitaev, A. Y., Shen, A. & Vyalyi, M. N. Classical and quantum computation, 47 (American Mathematical Soc., 2002).

Kempe, J., Kitaev, A. & Regev, O. The complexity of the local hamiltonian problem. SIAM J. Comput. 35, 1070–1097 (2006).

Deshpande, A., Gorshkov, A. V. & Fefferman, B. Importance of the spectral gap in estimating ground-state energies. PRX Quantum 3, 040327 (2022).

Kato, T. On the adiabatic theorem of quantum mechanics. J. Phys. Soc. Jpn. 5, 435–439 (1950).

Elgart, A. & Hagedorn, G. A. A note on the switching adiabatic theorem. J. Math. Phys. 53, 102202 (2012).

Ge, Y., Molnár, A. & Cirac, J. I. Rapid adiabatic preparation of injective projected entangled pair states and gibbs states. Phys. Rev. Lett. 116, 080503 (2016).

Imada, M., Fujimori, A. & Tokura, Y. Metal-insulator transitions. Rev. Mod. Phys. 70, 1039–1263 (1998).

Wang, L. & Sandvik, A. W. Critical level crossings and gapless spin liquid in the square-lattice spin-1/2 J1 − J2 heisenberg antiferromagnet. Phys. Rev. Lett. 121, 107202 (2018).

Nomura, Y. & Imada, M. Dirac-type nodal spin liquid revealed by refined quantum many-body solver using neural-network wave function, correlation ratio, and level spectroscopy. Phys. Rev. X 11, 031034 (2021).

Low, G. H. & Chuang, I. L. Hamiltonian simulation by qubitization. Quantum 3, 163 (2019).

Dong, Y., Meng, X., Whaley, K. B. & Lin, L. Efficient phase-factor evaluation in quantum signal processing. Physical Review A 103, 042419 (2021).

Suzuki, M. General correction theorems on decomposition formulae of exponential operators and extrapolation methods for quantum monte carlo simulations. Phys. Lett. A 113, 299–300 (1985).

Childs, A. M., Su, Y., Tran, M. C., Wiebe, N. & Zhu, S. Theory of trotter error with commutator scaling. Phys. Rev. X 11, 011020 (2021).

Campbell, E. Random compiler for fast hamiltonian simulation. Phys. Rev. Lett. 123, 070503 (2019).

Kitaev, A. Y. Quantum computations: algorithms and error correction. Russ. Math. Surv. 52, 1191–1249 (1997).

Suzuki, M. General theory of fractal path integrals with applications to many–body theories and statistical physics. J. Math. Phys. 32, 400–407 (1991).

Childs, A. M., Ostrander, A. & Su, Y. Faster quantum simulation by randomization. Quantum 3, 182 (2019).

Berry, D. W., Childs, A. M., Cleve, R., Kothari, R. & Somma, R. D. Exponential improvement in precision for simulating sparse hamiltonians. In Proceedings of the Forty-Sixth Annual ACM Symposium on Theory of Computing, STOC’14, 283–292 (Association for Computing Machinery, 2014).

Berry, D. W., Childs, A. M., Cleve, R., Kothari, R. & Somma, R. D. Simulating hamiltonian dynamics with a truncated taylor series. Phys. Rev. Lett. 114, 090502 (2015).

Meister, R., Benjamin, S. C. & Campbell, E. T. Tailoring Term Truncations for Electronic Structure Calculations Using a Linear Combination of Unitaries. Quantum 6, 637 (2022).

LeBlanc, J. P. F. et al. Solutions of the two-dimensional hubbard model: Benchmarks and results from a wide range of numerical algorithms. Phys. Rev. X 5, 041041 (2015).

Kirby, W., Motta, M. & Mezzacapo, A. Exact and efficient lanczos method on a quantum computer. Quantum 7, 1018 (2023).

Zhao, J., Wu, Y.-C., Guo, G.-C. & Guo, G.-P. State preparation based on quantum phase estimation. Preprint at https://arxiv.org/abs/1912.05335 (2019).

Zhang, X.-M., Li, T. & Yuan, X. Quantum state preparation with optimal circuit depth: Implementations and applications. Phys. Rev. Lett. 129, 230504 (2022).

Bravyi, S. et al. High-threshold and low-overhead fault-tolerant quantum memory. Nature 627, 778–782 (2024).

Suzuki, Y., Endo, S., Fujii, K. & Tokunaga, Y. Quantum error mitigation as a universal error reduction technique: Applications from the nisq to the fault-tolerant quantum computing eras. PRX Quantum 3, 010345 (2022).

Piveteau, C., Sutter, D., Bravyi, S., Gambetta, J. M. & Temme, K. Error mitigation for universal gates on encoded qubits. Phys. Rev. Lett. 127, 200505 (2021).

Zhang, G.-M., Hu, H. & Yu, L. Valence-bond spin-liquid state in two-dimensional frustrated spin-1/2 heisenberg antiferromagnets. Phys. Rev. Lett. 91, 067201 (2003).

Jiang, H.-C., Yao, H. & Balents, L. Spin liquid ground state of the spin-\(\frac{1}{2}\) square J1-J2 heisenberg model. Phys. Rev. B 86, 024424 (2012).

Esslinger, T. Fermi-hubbard physics with atoms in an optical lattice. Ann. Rev. Condensed Matter. Phys. 1, 129–152 (2010).

Fishman, M., White, S. R. & Stoudenmire, E. M. The ITensor Software Library for Tensor Network Calculations. SciPost Phys. Codebases 4, https://scipost.org/10.21468/SciPostPhysCodeb.4 (2022).

Acknowledgements

The authors are grateful for the fruitful discussions with Sergei Bravyi, Keisuke Fujii, Zongping Gong, Takuya Hatomura, Kenji Harada, Will Kirby, Sam McArdle, Takahiro Sagawa, Kareljan Schoutens, Kunal Sharma, Kazutaka Takahashi, Zhi-Yuan Wei, and Hayata Yamasaki. N.Y. wishes to thank JST PRESTO No. JPMJPR2119 and the support from IBM Quantum. T.O. wishes to thank JST PRESTO Grant Number JPMJPR1912, JSPS KAKENHI Nos. 22K18682, 22H01179, and 23H03818, and support by the Endowed Project for Quantum Software Research and Education, The University of Tokyo (https://qsw.phys.s.u-tokyo.ac.jp/). Y.S. wishes to thank JST PRESTO Grant Number JPMJPR1916 and JST Moonshot R&D Grant Number JPMJMS2061. W.M. wishes to thank JST PRESTO No. JPMJPR191A, JST COI-NEXT program Grant No. JPMJPF2014 and MEXT Quantum Leap Flagship Program (MEXT Q-LEAP) Grant Numbers JPMXS0118067394 and JPMXS0120319794. This work was supported by JST Grant Number JPMJPF2221. This work was supported by JST ERATO Grant Number JPMJER2302 and JST CREST Grant Number JPMJCR23I4, Japan. Part of the computations was performed using the Institute of Solid State Physics at the University of Tokyo.

Author information

Contributions

N.Y. and T.O. conducted resource estimation on classical computation. N.Y., Y.S. and Y.K. performed resource estimation on quantum algorithms. N.Y. and W.M. conceived the idea. All authors contributed to the discussion and manuscript.

Ethics declarations

Competing interests

Y.S. and W.M. own stock/options in QunaSys Inc. Y.S. owns stock/options in Nippon Telegraph and Telephone.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yoshioka, N., Okubo, T., Suzuki, Y. et al. Hunting for quantum-classical crossover in condensed matter problems. npj Quantum Inf 10, 45 (2024). https://doi.org/10.1038/s41534-024-00839-4

DOI: https://doi.org/10.1038/s41534-024-00839-4