Abstract

Limb movement direction can be inferred from local field potentials in motor cortex during movement execution. Yet, it remains unclear to what extent intended hand movements can be predicted from brain activity recorded during movement planning. Here, we set out to probe the directional tuning of oscillatory features during motor planning and execution, using a machine learning framework on multi-site local field potentials (LFPs) in humans. We recorded intracranial EEG data from implanted epilepsy patients as they performed a four-direction delayed center-out motor task. Fronto-parietal low-frequency LFP power predicted hand-movement direction during planning, while execution was largely mediated by higher-frequency power and low-frequency phase in motor areas. By contrast, phase–amplitude coupling showed uniform modulations across directions. Finally, multivariate classification increased overall decoding accuracy (>80%). These findings extend our understanding of the role of neural oscillations in encoding motor plans.


Introduction

The direction of arm movements can be inferred from the firing pattern of individual neurons in the primary motor cortex (M1)1,2. Critically, the firing rate of M1 neurons has been shown to depend on the movement direction, a well-established phenomenon known as directional tuning. A directionally tuned neuron exhibits maximum firing rates during arm movement in its “preferred direction” and gradually lower rates for other directions. Most studies have used single unit activity (SUA) to decode movement parameters in non-humans3,4,5,6 or humans7.

A growing body of research suggests that multi-unit activity (MUA) and Local Field Potential (LFP) signals can also be used to predict movement directions from the monkey motor cortex8,9,10,11,12. Further evidence in macaques has revealed clear task-related LFP modulations in the gamma band in the posterior parietal cortex during both the planning and execution of arm and eye movements13,14. In humans, LFP-based movement type identification and directional tuning have been investigated with intracranial EEG (iEEG) data acquired during pre-surgical evaluations in patients with drug-resistant epilepsy11,15,16,17,18,19. Interestingly, when comparing the decoding power achieved by the different frequency components of the iEEG signal, these studies show that the highest directional tuning is often found in the low-pass filtered signals (<4 Hz) and in the power of the so-called broadband gamma band (~60–140 Hz). This corroborates directional tuning findings reported in monkeys9,16,20 and non-invasively in humans21. The ability to infer movement type and direction from invasive and non-invasive recordings has a direct clinical application for brain–computer interfaces22,23,24.

The above studies provide compelling evidence that limb movement direction can be decoded from spectral power in both invasive and non-invasive motor cortex recordings during movement execution. However, it is still unclear whether other frequency-domain features, such as oscillation phase and cross-frequency interactions, exhibit directional tuning. Furthermore, little is known about the temporal dynamics with which movement direction is represented in the brain during planning. To address these gaps, we investigated whether arm movement directions could be classified using phase, amplitude, and phase–amplitude coupling (PAC) features during both planning and execution. We then asked whether movement directions share common neural representations across both phases. To tackle this question, we trained a classifier during execution and tested whether it could decode movement directions during planning, and vice versa. By investigating cross-temporal generalization in both directions, we aimed to probe similarities between the neural representations of movement directions during planning and execution. Importantly, we were able to explore this question using depth recordings from over 700 cortical sites across six epilepsy patients. Local field potentials were continuously monitored using stereotactic EEG (SEEG) while participants performed a classical delayed center-out motor task. We hypothesized that movement decoding should be possible from the moment movement planning starts, i.e., during the delay period preceding movement onset (0–1500 ms). In addition, based on previous work emphasizing the importance of phase and PAC in motor tasks25,26,27,28, we also hypothesized that these features, alongside spectral power, would display directional tuning.

Our results provide evidence for successful prediction (up to 86%) of intended arm movements in humans, using combinations of oscillatory phase and power features extracted from LFPs in motor and non-motor structures. Single-feature direction decoding revealed the prominent role of alpha oscillations and broadband gamma activity during planning and execution, respectively. Finally, our multi-site electrophysiological decoding framework reveals insights into the temporal dynamics of movement encoding.

Results

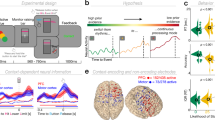

In the following, we present the findings of decoding arm movement directions in human epilepsy patients implanted with intracranial electrodes while performing a center-out motor task (Fig. 1). We begin with (i) the results of single-feature classification of movement directions, followed by (ii) insights into the temporal evolution and generalization of the decoding, and finally (iii) the multivariate classification results. First, however, we illustrate the features probed in this study. Figs. 2–4 show the extracted features for a representative premotor electrode, including their temporal evolution across the (i) pre-stimulus rest, (ii) planning, and (iii) execution windows for the amplitude and phase features. PAC is illustrated using comodulograms for each direction in both the planning and execution periods. These features were computed for all 748 sites from all participants in this study.

a Top, front and right views of the depth-electrode recording sites. b Top, left and right views of the number of recording sites that contribute to each vertex (i.e., spatial density). c SEEG locations per subject. d Experimental design of the delayed center-out motor task. After a 1 s rest period (rest, −1000 to 0 ms), a first cue (Cue 1) instructed subjects to prepare to move their hand (motor planning, 0–1500 ms). Next, a go signal (Cue 2) appeared, prompting participants to execute the movement (motor execution, 1500–3000 ms).

Comodulograms representing PAC as a function of phase and amplitude frequencies during planning (a) and execution (b). Single-trial PAC modulations, per direction, for delta [2, 4 Hz], theta [5, 7 Hz] and alpha [8, 13 Hz] phase coupled with high-gamma [60, 200 Hz] amplitude for the planning (c) and execution (d) windows.

Decoding movement directions: single features findings

Significant decoding of movement direction using power and phase features, during both the planning and execution periods, was prominent in motor areas (i.e., supplementary motor area (SMA), premotor cortex (PMC) and primary motor cortex (M1)) and in parietal brain regions (Fig. 5). During the planning period, the highest decoding accuracy (49.37%; chance level 25%, p < 0.05) was obtained using alpha power in the posterior middle frontal gyrus (pMFG), anterior middle temporal gyrus (aMTG), posterior cingulate and ventral precuneus. The anterior pre-SMA and ventral precuneus also showed significant decoding using alpha power during the execution period. During planning, the spatial distribution of significant decoding using beta power was similar to that obtained with alpha power, but the maximum decoding accuracy was slightly lower (46.25%, p < 0.05). During execution, hand-movement direction classification using beta power reached a maximum of 50.75% (p < 0.05) in the PMC. High-gamma (60–200 Hz) power led to 62.94% (p < 0.05) correct classification during execution in the posterior pre-SMA, clearly surpassing the results obtained in the lower-frequency bands. During execution, high-gamma power also yielded statistically significant decoding in M1. Interestingly, the posterior pre-SMA and the ventral precuneus showed significant decoding during both planning and execution. Among all non-power features, only the VLFC phase (0.1–1.5 Hz) yielded significant decoding in any brain area (the posterior pre-SMA), during execution, with a maximum of 44.38% (p < 0.05).
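The power features decoded above (alpha, beta, high gamma) can be computed in several ways. A minimal sketch, assuming zero-phase Butterworth band-pass filtering followed by the squared Hilbert envelope; the filter design and the 13–30 Hz beta limits are assumptions, not taken from this section:

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, hilbert

# Alpha and high-gamma limits are quoted in the text; beta limits are ASSUMED.
BANDS = {
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "high_gamma": (60.0, 200.0),
}


def band_power(x, sfreq, fmin, fmax, order=4):
    """Instantaneous power of x (trials x times) in the [fmin, fmax] Hz band."""
    sos = butter(order, [fmin, fmax], btype="bandpass", fs=sfreq, output="sos")
    filtered = sosfiltfilt(sos, x, axis=-1)             # zero-phase filtering
    return np.abs(hilbert(filtered, axis=-1)) ** 2      # squared analytic envelope


# Usage on a synthetic 10 Hz "trial" sampled at 1024 Hz, as in the recordings
sfreq = 1024.0
t = np.arange(0, 2.0, 1 / sfreq)
x = np.sin(2 * np.pi * 10 * t)[np.newaxis, :]
alpha = band_power(x, sfreq, *BANDS["alpha"])
gamma = band_power(x, sfreq, *BANDS["high_gamma"])
```

A pure 10 Hz oscillation should carry power in the alpha band and essentially none in the high-gamma band.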

As far as PAC is concerned, we found an increase of alpha-gamma coupling in the dorsal sensorimotor and premotor cortices during movement planning, followed by a decrease during execution. Although subtle differences in PAC were observed across directions, the differences were not sufficient to allow for significant PAC-based movement classification.
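This section does not state which PAC measure underlies the comodulograms. As an illustration only, the sketch below computes the Tort et al. (2010) modulation index, a common choice: it measures how far the phase-binned amplitude distribution departs from uniformity. The filter order, the 18 phase bins and the helper names are assumptions.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, hilbert


def _bandpass(x, sfreq, fmin, fmax, order=3):
    # Zero-phase Butterworth band-pass (design choice is an assumption)
    sos = butter(order, [fmin, fmax], btype="bandpass", fs=sfreq, output="sos")
    return sosfiltfilt(sos, x)


def modulation_index(x, sfreq, f_phase, f_amp, n_bins=18):
    """Tort-style MI between the phase of f_phase and the amplitude of f_amp."""
    phase = np.angle(hilbert(_bandpass(x, sfreq, *f_phase)))
    amp = np.abs(hilbert(_bandpass(x, sfreq, *f_amp)))
    edges = np.linspace(-np.pi, np.pi, n_bins + 1)
    idx = np.clip(np.digitize(phase, edges) - 1, 0, n_bins - 1)
    mean_amp = np.array([amp[idx == k].mean() for k in range(n_bins)])
    p = mean_amp / mean_amp.sum()            # amplitude distribution over phase bins
    # KL divergence from the uniform distribution, normalised by log(n_bins)
    return (np.log(n_bins) + np.sum(p * np.log(p))) / np.log(n_bins)


# Usage: theta (6 Hz) phase modulating 100 Hz amplitude vs. no modulation
sfreq = 1024.0
t = np.arange(0, 10.0, 1 / sfreq)
rng = np.random.default_rng(0)
slow = np.sin(2 * np.pi * 6 * t)
fast = np.sin(2 * np.pi * 100 * t)
noise = 0.1 * rng.standard_normal(t.size)
mi_c = modulation_index(slow + 0.25 * (1 + slow) * fast + noise, sfreq, (5, 7), (60, 200))
mi_u = modulation_index(slow + 0.5 * fast + noise, sfreq, (5, 7), (60, 200))
```

Weak direction-to-direction differences in such an index, as reported above, would translate into overlapping class distributions and hence poor classification.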

Decoding network associated with intended and executed limb movements

Several brain structures allowed direction prediction at various moments during either planning or execution. To explore the spatial and temporal dimensions of the decoding, we computed and visualized, for each time bin and within various regions of interest (ROIs), the number of sites with statistically significant decoding (Fig. 6). This figure was obtained using a univariate Gaussian kernel density estimate (kdeplot from the Python package Seaborn). To isolate features whose decoding was robust across time, we kept only features with significant decoding (p < 0.05, corrected for multiple comparisons) in at least three consecutive time bins. Over the course of the task, alpha power was the first feature to enable decoding during the planning period, starting in the pMFG [150, 1150 ms] and almost simultaneously in the ventral precuneus [250, 450 ms]. Interestingly, the latter is the only structure that also showed a second window of decoding during execution [2250, 2600 ms]. Gamma power then took over, enabling decoding from the end of the planning period in the anterior pre-SMA [1050, 2650 ms], followed by the posterior pre-SMA [1550, 2650 ms]. Notably, around 2000 ms, the density of significant time bins decreased in the anterior pre-SMA while increasing in the posterior pre-SMA. In the PMC, the executed direction was successfully predicted between [1700, 2650 ms] using gamma power and in an almost identical time interval [1800, 2600 ms] using beta power.
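The robustness criterion above (significance in at least three consecutive time bins) amounts to a run-length filter on a boolean significance vector. A minimal sketch, with a hypothetical function name:

```python
import numpy as np


def robust_mask(sig, min_run=3):
    """Keep only runs of >= min_run consecutive True values in a 1-D boolean array."""
    sig = np.asarray(sig, dtype=bool)
    out = np.zeros_like(sig)
    # Pad with False so every run has a detectable start and end
    padded = np.concatenate(([False], sig, [False]))
    diff = np.diff(padded.astype(int))
    starts = np.flatnonzero(diff == 1)    # first index of each run
    stops = np.flatnonzero(diff == -1)    # one past the last index of each run
    for start, stop in zip(starts, stops):
        if stop - start >= min_run:
            out[start:stop] = True
    return out


# Usage: isolated or short significant stretches are discarded
kept = robust_mask([1, 1, 0, 1, 1, 1, 0, 1])
```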

Time-resolved modulations and decoding

To exploit the temporal resolution of the SEEG-based decoding framework, we illustrate the temporal dynamics of modulation and classification across movement directions at three key sites. Figure 7 shows the time-resolved directional tuning and single-feature decoding at these sites, using alpha and gamma power and VLFC phase. Two sites located in the pMFG (Fig. 7a, b) shared the same alpha power pattern: uniform alpha power across the four directions during rest, followed by directional tuning during planning and, finally, a similar alpha desynchronization during execution. Notably, the inter-trial variability of alpha power (assessed by the standard error of the mean) across directions was higher during planning than during execution. For the first pMFG site (Fig. 7a), the four directions were independently modulated from 300 ms after the onset of the planning period (Cue 1). The power difference across directions was maximal around 1050 ms, which was also when the maximal decoding accuracy of 43.6% (p < 0.05) was reached. For the second pMFG site (Fig. 7b), direction-specific modulations were observed earlier, around 200 ms before the onset of planning, and the maximum single-feature decoding of 47.4% was reached around 250 ms. Interestingly, planned horizontal movements (i.e., left and right) were clearly dissociated, with alpha power modulations going in opposite directions. Conversely, alpha power for vertical movements (i.e., up and down) remained stable during planning. In comparison, gamma power in the posterior pre-SMA showed no direction-specific patterns during rest and planning (Fig. 7c). Instead, gamma power allowed directions to be decoded from 200 ms after execution onset (Cue 2), with a maximum decoding accuracy of 62.5% at 2250 ms (i.e., 750 ms after Cue 2).
Interestingly, at this same posterior pre-SMA site, significant direction classification (46.4%, p < 0.05) was also achieved using VLFC phase (Fig. 7d). Like gamma power, the VLFC phase behaved consistently across directions during rest and planning but showed direction-specific modulations during execution. Intriguingly, VLFC phase differences across directions were largest during execution at approximately the same time as for gamma power (around 2245 ms), which led to significant decoding.
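The time-resolved traces in this section rely on sliding-window power estimates. The sketch below follows the window parameters given in the Fig. 7 caption (700 ms windows every 50 ms) and assumes, hypothetically, that each trial is epoched from −1000 to 3000 ms at 1024 Hz (4096 samples) and that instantaneous power is averaged within each window:

```python
import numpy as np


def sliding_windows(n_times, sfreq, win_ms=700.0, step_ms=50.0):
    """Return an (n_windows, 2) array of (start, stop) sample indices."""
    win = int(round(win_ms * sfreq / 1000.0))    # 700 ms -> 717 samples at 1024 Hz
    step = int(round(step_ms * sfreq / 1000.0))  # 50 ms -> 51 samples at 1024 Hz
    starts = np.arange(0, n_times - win + 1, step)
    return np.stack([starts, starts + win], axis=1)


def windowed_power(power, windows):
    """Average an instantaneous power array (trials x times) within each window."""
    return np.stack([power[:, a:b].mean(axis=1) for a, b in windows], axis=1)


# Usage under the assumed epoch of -1000 to 3000 ms at 1024 Hz
windows = sliding_windows(4096, 1024.0)
features = windowed_power(np.ones((5, 4096)), windows)   # 5 hypothetical trials
```

Under these assumptions the epoch yields 67 windows, consistent with the 67 time points reported for the multi-feature analysis.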

(up: red; right: brown; down: blue; left: green) and associated decoding accuracies (purple) using an LDA with 10 times 10-fold cross-validation at three SEEG sites. Power is computed every 50 ms using a 700 ms window. The two vertical lines at 0 and 1500 ms represent, respectively, the onset of the planning phase (Cue 1) and of the execution phase (Go signal, Cue 2). The horizontal solid black line represents the theoretical chance level (4 classes, 25%), and the red dotted line represents the significance level computed from permutations at p < 0.05, corrected for multiple comparisons across time points using maximum statistics. a and b Alpha power [8, 13 Hz] for two electrode contacts located in the posterior middle frontal gyrus (pMFG); c high-gamma power [60, 200 Hz] of a posterior pre-SMA electrode contact; d VLFC phase [0.1, 1.5 Hz] of the same posterior pre-SMA site. Shaded areas represent the SEM.
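The caption above combines an LDA with 10 times 10-fold cross-validation and a permutation threshold corrected across time with maximum statistics. A minimal scikit-learn sketch, assuming features are stored as (trials, features, time bins); the number of permutations and the function names are illustrative choices, not the authors' settings:

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score


def decode_over_time(X, y, n_splits=10, n_repeats=10, seed=0):
    """Mean repeated stratified k-fold accuracy of an LDA at each time bin.

    X : array of shape (n_trials, n_features, n_times); y : direction labels.
    """
    cv = RepeatedStratifiedKFold(n_splits=n_splits, n_repeats=n_repeats,
                                 random_state=seed)
    return np.array([
        cross_val_score(LinearDiscriminantAnalysis(), X[:, :, t], y, cv=cv).mean()
        for t in range(X.shape[-1])
    ])


def max_stat_threshold(X, y, n_perm=100, alpha=0.05, seed=0, **cv_kwargs):
    """Accuracy threshold corrected across time bins via the maximum statistic.

    Labels are shuffled n_perm times (placeholder default); for each permutation
    only the best accuracy over time is kept, and the (1 - alpha) quantile of
    these maxima is returned.
    """
    rng = np.random.default_rng(seed)
    null_max = [decode_over_time(X, rng.permutation(y), **cv_kwargs).max()
                for _ in range(n_perm)]
    return np.quantile(null_max, 1 - alpha)


# Usage on synthetic data: only time bin 2 carries direction information
rng = np.random.default_rng(1)
y = np.repeat(np.arange(4), 20)
X = rng.normal(size=(80, 1, 5))
X[:, 0, 2] += 3 * y
acc = decode_over_time(X, y, n_splits=5, n_repeats=2)
thr = max_stat_threshold(X, y, n_perm=20, n_splits=5, n_repeats=2)
```

Taking the maximum over time of each permuted accuracy curve is what controls the family-wise error across time points; real analyses typically use many more permutations than this toy example.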

Temporal generalization of the decoding of movement directions

We then tested whether movement direction representations were shared between planning and execution. To this end, we used temporal generalization (TG) with either single or multiple power features (e.g., alpha alone or in combination with gamma) to determine whether some SEEG sites could decode directions during execution when the classifier was trained during planning, and vice versa (Fig. 8). Panels a–c show TG using single power features in the pMFG (alpha) and in two distinct motor sites in the posterior pre-SMA (high gamma), respectively, while panel d shows the TG for these three sites and features combined. In the pMFG, classifiers trained during the first 500 ms of planning generalized to data from 0 to 1000 ms after Cue 1, with a maximum decoding of 48.75% using alpha power modulations (Fig. 8a). In the posterior pre-SMA, classifiers generalized within the execution period and reached a maximum of 63.13% (Fig. 8b). This sustained execution-related neural pattern did not share enough representation with the planning period to generalize to it. By contrast, Fig. 8c shows another SEEG site in the posterior pre-SMA for which part of the information about hand direction was shared between planning and execution. Indeed, a classifier trained during execution yielded significant decoding during execution (max. 50.63%) but also during the planning phase (max. 44.38%). Interestingly, training the classifier during planning did not lead to significant decoding during execution (i.e., the generalization was asymmetric). Finally, the significant decoding patterns of the three previous TGs were preserved when features were combined (Fig. 8d): training during execution and testing during planning reached a maximum of 55% (i.e., +4% compared to the posterior pre-SMA site alone).
Moreover, a distinct decoding pattern emerged when the classifier was trained during the planning phase (~200–600 ms) and tested during the execution (~2300–2500 ms).
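Temporal generalization trains a classifier at one time bin and tests it at every other bin, so off-diagonal cells reveal representations shared between planning and execution. A minimal cross-validated sketch with LDA (the fold count and function name are assumptions; unlike Fig. 8, this sketch also fills the diagonal):

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold


def temporal_generalization(X, y, n_splits=5, seed=0):
    """Train at each time bin and test at every time bin (cross-validated).

    X : (n_trials, n_features, n_times). Returns acc[train_time, test_time].
    """
    n_times = X.shape[-1]
    acc = np.zeros((n_times, n_times))
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    for train, test in cv.split(X[:, :, 0], y):
        for t_train in range(n_times):
            clf = LinearDiscriminantAnalysis().fit(X[train, :, t_train], y[train])
            for t_test in range(n_times):
                # Held-out trials only, so the score is not inflated by training data
                acc[t_train, t_test] += clf.score(X[test, :, t_test], y[test])
    return acc / n_splits


# Usage: bins 1 and 2 share the same direction code, bin 0 is pure noise
rng = np.random.default_rng(2)
y = np.repeat(np.arange(4), 20)
X = rng.normal(size=(80, 2, 4))
X[:, 0, 1] += 3 * y
X[:, 0, 2] += 3 * y
acc = temporal_generalization(X, y)
```

Asymmetries such as the one reported for Fig. 8c appear as acc[t_exec, t_plan] differing from acc[t_plan, t_exec]. MNE-Python's `mne.decoding.GeneralizingEstimator` provides a ready-made alternative.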

The vertical and horizontal lines at 0 and 1500 ms mark Cue 1 and Cue 2, respectively. White contours delimit statistically significant decoding at p < 0.01 (binomial test) after Bonferroni correction across time. No decoding is performed on the diagonal. a TG at a pMFG site using alpha [8, 13 Hz] power; b and c TG at two distinct SEEG sites in the posterior pre-SMA using high-gamma [60, 200 Hz] power; d TG of the three combined sites (alpha pMFG + high-gamma posterior pre-SMA).
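The binomial significance threshold used for Fig. 8 depends only on the number of trials, the number of classes and the corrected alpha level. A sketch, assuming a one-sided test against the 1/n_classes chance level and a Bonferroni division of alpha by the number of time points tested (the trial count in the usage line is hypothetical):

```python
from scipy.stats import binom


def binomial_threshold(n_trials, n_classes=4, alpha=0.01, n_tests=1):
    """Smallest accuracy whose one-sided binomial p-value is <= alpha / n_tests."""
    alpha_corr = alpha / n_tests                          # Bonferroni correction
    # ppf gives the largest count still compatible with chance; one more is significant
    k = binom.ppf(1 - alpha_corr, n_trials, 1.0 / n_classes) + 1
    return k / n_trials


# Usage with a hypothetical 160 trials and 67 time points
thr = binomial_threshold(160, n_classes=4, alpha=0.01, n_tests=67)
```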

Decoding results of the multi-feature procedure

To explore the joint relevance of multiple features in predicting movement directions, we extended the decoding process to a multi-feature (MF) framework combining all computed feature types (power, phase and PAC), all frequency bands and all SEEG sites (Fig. 9). MF classification was carried out with feature selection (see the “Methods” section) at each of the 67 time points, leading to a distinct set of features at each time bin. The time-resolved decoding accuracy in subject S1 (Fig. 9a) showed two clear peaks: one during the movement intention phase, with a maximal decoding of 76.12% at 850 ms, and a second one in the middle of the execution phase, with a maximum decoding of 84.25% at 2050 ms. Because the decoding performance at each time bin relied on a distinct set of features, we counted how many times each feature was selected (i.e., its occurrence) over the entire planning and execution periods, and we also grouped these features by Brodmann area (BA) (Fig. 9b). The most frequently selected features were predominantly power-based, but the importance of each frequency band differed between the planning and execution periods. Among the non-power features (i.e., phase and PAC), the VLFC phase and the coupling between delta phase and gamma amplitude (delta–gamma) were the most frequently selected, and were selected during execution only. For planning, slower oscillations (i.e., beta and alpha) were predominantly selected, whereas execution was predominantly decoded using high-frequency power features. We also summarized the maximum decoding reached across subjects for both planning and execution (Fig. 9c), as well as the most frequently selected features across subjects (Fig. 9d). Decoding of both motor planning and execution reached a maximum of 86% (S1, for both hands). In general, decoding accuracy during execution was higher than (or at least equal to) that obtained during planning, except for subject 5.
This subject showed a 14% difference (84% for planning vs. 70% for execution), which probably reflects an SEEG implantation more suitable for intention prediction. Subjects 4 and 6 did not show significant decoding, even with the multi-feature procedure. MF direction decoding during the planning phase was primarily achieved using slower frequencies (i.e., delta, theta, alpha, and beta) in premotor, prefrontal, and parietal areas (BA 6-9-40). During execution, direction decoding more frequently involved high-frequency power (i.e., low and high gamma), especially in premotor and primary motor areas (BAs 4-6-8-11).
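The “Methods” section describing the exact feature selection algorithm is not part of this excerpt, so the sketch below substitutes a univariate ANOVA F-test (`SelectKBest`) nested inside each cross-validation fold, followed by LDA. It illustrates the overall logic (a distinct feature set per time bin plus occurrence counts), not the authors' procedure; `k` and the feature naming scheme are hypothetical.

```python
from collections import Counter

import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline


def multi_feature_decoding(X, y, names, k=10, n_splits=5, seed=0):
    """Time-resolved decoding with feature selection nested in each CV fold.

    X : (n_trials, n_features, n_times); names : one label per feature,
    e.g. "alpha_power@pMFG" (hypothetical naming). k must not exceed n_features.
    """
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    accuracies, occurrence = [], Counter()
    for t in range(X.shape[-1]):
        model = make_pipeline(SelectKBest(f_classif, k=k), LinearDiscriminantAnalysis())
        # Selection is refit inside every training fold, avoiding selection bias
        accuracies.append(cross_val_score(model, X[:, :, t], y, cv=cv).mean())
        # Refit on all trials only to record which features were chosen at bin t
        chosen = model.fit(X[:, :, t], y)[0].get_support()
        occurrence.update(str(n) for n in np.asarray(names)[chosen])
    return np.array(accuracies), occurrence


# Usage: 20 synthetic features, only "f3" carries direction information
rng = np.random.default_rng(3)
y = np.repeat(np.arange(4), 20)
X = rng.normal(size=(80, 20, 3))
X[:, 3, :] += 3 * y[:, None]
names = [f"f{i}" for i in range(20)]
acc, occ = multi_feature_decoding(X, y, names, k=2)
```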

a Time-resolved decoding accuracy and associated deviation using the MF selection for subject S1. Cue 1 and Cue 2 are represented by two vertical solid gray lines. Blue lines indicate the maximum decoding reached during the planning and execution periods, respectively. The horizontal solid gray line represents the theoretical chance level of 25%, and the solid red line is the significance threshold (p < 0.05, corrected using maximum statistics across time points) obtained by randomly shuffling the label vector (permutations). Shaded areas represent the SEM. b Most frequently selected features during planning (blue bars) and execution (red bars). For each bar plot, the y-axis shows the number of times a feature was selected (occurrence), and the x-axis shows the feature type (power, phase and PAC) and the frequency band. c Best decoding accuracies per subject for intention and execution. The solid black line represents the theoretical chance level of a 4-class classification problem (25%) and the dotted red line (~40%) the statistical chance level at p < 0.05 (corrected using maximum statistics across subjects). d Most frequently selected power features during the multi-feature procedure as a function of Brodmann area. For each power frequency band and each Brodmann area, we subtracted the number of features selected during preparation from the number selected during execution. Thus, blue and red indicate that a feature was selected more often during the preparation and the execution of the movement, respectively (specificity). Likewise, white indicates that a feature was selected equally often in both conditions, while black rectangles indicate that no feature was selected.
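The specificity map of Fig. 9d subtracts planning counts from execution counts for each frequency band and Brodmann area. A small sketch of that bookkeeping, assuming the occurrence counts are stored in dictionaries keyed by (band, area); the function name and data layout are hypothetical:

```python
import numpy as np


def specificity_map(prep_counts, exec_counts, bands, areas):
    """Execution-minus-planning selection counts per (band, Brodmann area).

    prep_counts / exec_counts : dicts mapping (band, area) -> occurrence.
    Cells never selected in either period are NaN (drawn black in Fig. 9d).
    """
    diff = np.full((len(bands), len(areas)), np.nan)
    for i, band in enumerate(bands):
        for j, area in enumerate(areas):
            p = prep_counts.get((band, area), 0)
            e = exec_counts.get((band, area), 0)
            if p or e:
                # > 0: execution-specific (red), < 0: planning-specific (blue), 0: white
                diff[i, j] = e - p
    return diff


# Usage with made-up counts
prep = {("alpha", "BA9"): 5, ("beta", "BA6"): 2}
execu = {("gamma", "BA4"): 7, ("beta", "BA6"): 2}
m = specificity_map(prep, execu, ["alpha", "beta", "gamma"], ["BA4", "BA6", "BA9"])
```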

Discussion

The goal of the present study was to expand on the current understanding of how neural oscillations encode movement direction. In particular, we examined whether movements can be predicted from a range of oscillatory features recorded during or even prior to execution (i.e., in the delay period while participants waited for the go cue). To this end, we employed a machine learning approach in which the success rate of each oscillatory brain feature (or combination thereof) in predicting movement direction was taken as a measure of its functional relevance to direction encoding. Alongside well-established spectral power features, our supervised learning framework also probed the predictive strength of phase and phase–amplitude coupling, both of which have received very little attention in the context of movement decoding. A further strength of the present study was the use of SEEG, which provides multi-site LFP depth recordings in humans; this allowed us to probe distributed decoding patterns across time and over widely distributed brain areas, not limited to primary motor regions.

Our findings show that the direction of upcoming movements can be predicted using spectral features extracted during movement planning from widely distributed human LFPs. In fact, the accuracy with which movements were predicted from neural signals acquired during the delay period (up to 86%) was equivalent to the decoding accuracy achieved with data acquired during actual movement execution. However, the anatomical locations and main frequency bands of the LFP features that led to the best classification during execution differed from those that yielded the best predictions during planning. During execution, the best features were high-gamma power in motor and premotor areas, whereas movement intentions were mainly classified through alpha and beta power in premotor, prefrontal, and parietal regions. From a decoding perspective, the highest accuracies were obtained in a multivariate decoding framework combining multiple oscillatory signal features (i.e., power, phase, and phase–amplitude coupling) across multiple intracranial recording sites (e.g., motor and premotor, but also non-motor areas).

The fact that oscillatory power in multiple frequency bands, especially in motor areas, carries directional information is well-established9,21. Additionally, previous research suggests that phase signals can be used to infer hand position, velocity, and acceleration through low-frequency components26,29,30. Yet, to the best of our knowledge, this is the first study to jointly explore the relative importance of amplitude, phase and PAC features for decoding planned or executed limb movement directions in humans. Our results confirm the prominent role of power features in movement direction decoding and show that very low frequency (<1.5 Hz) phase features also led to statistically significant direction prediction, specifically during execution (Fig. 5). Furthermore, we previously reported that PAC varies considerably across motor states, specifically when comparing intention and execution states28 but whether PAC is differentially modulated across individual movement directions was so far unclear. The results in the present study suggest that PAC varies only weakly across directions and that these modulations are not sufficient for PAC-based single-feature classification of movements. This is consistent with a previous study by Yanagisawa et al. (2012) who showed that PAC differs between motor planning and execution, but not across movement type25. Taken together, these data support the idea that PAC in sensorimotor areas may be a large-scale mechanism that constrains gamma activity during rest and planning periods (into slow phasic amplitude fluctuations) and releases it for the purpose of motor execution. Whether other PAC-related metrics (such as the preferred phase, i.e. the phase at which binned amplitude is maximum) might allow for better PAC-based movement decoding still needs to be investigated.
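The preferred phase mentioned above (the phase bin in which the binned amplitude is maximal) is straightforward to compute once phase and amplitude time series are available. A minimal sketch, assuming 18 equal-width phase bins and a hypothetical function name:

```python
import numpy as np


def preferred_phase(phase, amp, n_bins=18):
    """Centre (radians) of the phase bin in which the mean amplitude is maximal."""
    edges = np.linspace(-np.pi, np.pi, n_bins + 1)
    idx = np.clip(np.digitize(phase, edges) - 1, 0, n_bins - 1)
    # Empty bins get 0 so they can never be selected as the maximum
    mean_amp = np.array([amp[idx == k].mean() if np.any(idx == k) else 0.0
                         for k in range(n_bins)])
    centres = (edges[:-1] + edges[1:]) / 2
    return centres[np.argmax(mean_amp)]


# Usage: amplitude peaking at a phase of 1 rad
phase = np.linspace(-np.pi, np.pi, 10000, endpoint=False)
amp = 1 + np.cos(phase - 1.0)
pp = preferred_phase(phase, amp)
```

Because it is a circular quantity, a direction-wise preferred phase would call for circular statistics (or sine/cosine encoding) before being fed to a linear classifier.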

Directional tuning of arm movements in the space of single-unit activity in the motor cortex is widely established textbook knowledge1. Moreover, it has also been shown that multi-unit activity and LFP signals can be used to predict movement directions from monkey motor cortex8,9,10,11,12. Interestingly, further evidence in macaques has revealed task-related gamma-range LFP modulations in the posterior parietal cortex during both planning and execution of arm and eye movements13,14. In humans, movement-type decoding using LFP-based spectral features was also shown in non-primary motor areas19. However, the question of whether population activity recorded in brain areas outside the primary motor cortex also exhibits directional tuning has not received much attention. Our findings indicate that movement direction can be inferred from primary and non-primary motor cortices, as well as from non-motor areas including parietal and prefrontal areas. Using single-feature decoding of movement planning, we first found a strong involvement of the posterior middle frontal gyrus (pMFG, BA6), especially using alpha and beta power (Figs. 5, 6, 7a, b), with a maximum decoding of 49.37%. Almost concurrently and using the same frequencies, planned movement direction could also be decoded from the posterior cingulate/precuneus (Figs. 5, 6), which could be consistent with the involvement of this area in internal self-representation31 and with previous reports suggesting that it encodes motor intentions before complete awareness32,33. Motor execution was decoded essentially through gamma power, mainly in motor-related areas (Figs. 5, 6, 7c, d), reaching a maximum of 62.94%. We also observed that significant decoding via gamma activity in the pre-SMA began first in the anterior part, followed by the posterior part.
Interestingly, significant movement classification via gamma activity in the anterior pre-SMA occurred at the very early stages of execution, around the go signal, before decoding in the primary motor cortex (Fig. 6). Finally, the model achieved a modest but above-chance decoding of 44% using the VLFC phase in the posterior pre-SMA during execution. The VLFC is closely linked to the readiness potential, a brain signal that builds up in motor cortices prior to voluntary or self-paced movements, with an onset time-locked to the movement34,35. Consequently, it is plausible that the observed decoding accuracy using the VLFC was influenced, at least in part, by differences in movement onset times across directions.

Movement planning and execution share a spatially overlapping motor-related network36. To address the dynamics of movement direction representations in the brain during planning and execution, we investigated the ability of classifiers to generalize to temporally distant time points using different features. This was achieved using a temporal generalization procedure37. Our illustrative analysis (Fig. 8) shows how some premotor sites, involved in externally cued movements38, decode directions only during planning or only during execution (Fig. 8a, b), while others generalize across both phases (Fig. 8c). Thus, directional tuning of LFPs during planning and execution shares partly overlapping neural representations, mainly through alpha and gamma oscillations. However, cross-temporal generalization decoding also showed that directional tuning of LFPs during planning and execution reflects a single, sustained process rather than a dynamically changing code39. It has been proposed that the pre-SMA acts as an interface that transforms visual information into the information required for motor planning, and animal studies have revealed that pre-SMA neurons encode the spatial location of the target40. Therefore, the information shared between the execution and planning phases may be explained by target location rather than by movement parameters. Furthermore, extending temporal generalization to multi-feature classifiers (Fig. 8d) illustrates how multi-site feature combinations may lead to models capable of bidirectional generalization (i.e., trained on execution data and generalizing to the planning period, or trained on planning data and generalizing to the execution window).

We also addressed the complementarity of the spectral features for decoding using multivariate classification, in which different features from motor and non-motor areas are combined (Fig. 9). Using feature selection within the classification framework allows us to determine the combinations of features that lead to the highest decoding accuracies. By applying this procedure at each time point, we obtained an example of time-resolved decoding reaching 84.25% during execution and 75.12% during the intention phase (Fig. 9a). Interestingly, in addition to power features, the VLFC phase and delta–gamma coupling were also selected by the algorithm, which suggests that these features provide complementary directional information (Fig. 9b). Such spectral features can also be combined with graph-theoretical measures to further improve decoding performance41. Unsurprisingly, the results of multi-feature classification varied across subjects, highlighting how strongly decoding accuracy depends on each patient's intracranial implantation (Fig. 9c). As a general trend, implantations in the anterior superior frontal gyrus and temporal areas did not allow significant decoding, even with multi-feature classification (S4 and S6), whereas implantations with a majority of middle and medial frontal SEEG sites (S1, S2, S3 and S5) allowed significant discrimination of the four directions, up to 86%, for both planning and execution (Fig. 1c). Finally, the data-driven feature selection procedure highlighted the prominent role of the power of slower oscillations (theta, alpha and beta) in the premotor, prefrontal and parietal cortex (BA 6-9-40) for decoding motor intentions. Our findings of directional tuning in BA9 and BA40 are consistent with their role in motor processes, particularly the planning of movement directions18. BA40, part of the posterior parietal cortex, is also involved in motor planning processes14,42,43,44.
This region is involved in the emulation of sensory information into motor commands, especially during the coding of spatial relationships44,45. For direction classification during movement execution the feature selection algorithm predominantly used the power in higher frequency bands (low and high gamma) in the motor, premotor, frontal, and cingulate areas (BA 4-6-11-32) (Fig. 9d).

The present study has several limitations that need to be acknowledged. First, intracranial recordings provide high-quality signals at the cost of heterogeneous and incomplete brain coverage. Even with more than 500 recording sites, the probed brain areas were not equally represented in our sample of patients. The implantations across the six subjects (see Fig. 1) yielded reasonable coverage of frontal areas (although the right hemisphere was over-represented compared to the left) and central areas, but the parietal cortex was under-represented. Moreover, four of the six patients had unilateral implantations, and the other two had asymmetric implantations. Because of this limitation, which is inherent to invasive recordings, it was not possible to separate contralateral from ipsilateral effects on direction decoding. It would be valuable to examine whether ipsilateral activity also carries directional information46. This could be assessed using group-level statistics on a larger number of patients implanted with intracranial electrodes47, or with EEG or MEG recordings using a similar center-out paradigm. Furthermore, although we systematically excluded electrodes with typical epileptic waveforms (e.g., epileptic spikes), the mere fact that this research was conducted in epilepsy patients may limit generalizability to healthy subjects. Future studies could investigate the feasibility of reconstructing continuous 3D hand position using deep learning models fitted on depth recordings48,49. Finally, we investigated the decoding of only four movement directions, mainly because of the limited time available with the patients. Several studies have addressed the decoding of a larger number of directions during movement execution30,50,51. These studies either decoded 8 movement directions using EEG or ECoG recordings52,53 or predicted continuous movement using ECoG recordings11,26,54,55.
However, predicting a continuous trajectory from movement planning signals remains an open challenge.

Conclusion

The present study investigated the feasibility of decoding planned and executed limb-movement directions from human intracranial recordings, using a wide range of spectral features (i.e., power, phase, and phase–amplitude coupling in multiple frequency bands and brain areas). We found that decoding during the planning phase mainly relied on low-frequency power (i.e., alpha and beta) in the posterior middle frontal cortex and parietal areas. We also found significant decoding during movement execution using high-gamma power in motor and premotor areas, as well as the phase of the very low-frequency component (0.1–1.5 Hz). Together with the demonstration that multi-feature representations of directional tuning generalize across time in the human brain, these findings advance our understanding of the role of the spectral properties of brain activity in movement planning and control. They also open new avenues for next-generation brain–machine interfaces.

Methods

Participants

We collected SEEG recordings from six drug-resistant epilepsy patients (6 females, mean age 22.17 ± 4.6 years, all right-handed). Multi-lead EEG depth electrodes were implanted at the Epilepsy Department of the Grenoble Neurological Hospital (Grenoble, France). Trials containing artefacts or pathological waveforms were systematically excluded through visual inspection in collaboration with the medical staff, as in previous studies28,56,57,58,59. All participants provided written informed consent, and the experimental procedures were approved by the Institutional Review Board and by the National French Science Ethical Committee. The demographic and clinical details of the patients are summarized in Table 1.

Electrode implantation and stereotactic EEG recordings

Stereotactic electroencephalography (SEEG) electrodes (DIXI Medical Instrument®) had a diameter of 0.8 mm and comprised contacts 2 mm in length, spaced 1.5 mm apart. Each electrode consisted of 10 to 15 contacts, depending on the implanted structure. Pooling across patients yielded a total of 748 intracerebral sites (126 sites per patient, except for one patient who had 118 recording sites). At the time of acquisition, a white matter electrode was used as reference, and data were bandpass filtered from 0.1 to 200 Hz and sampled at 1024 Hz. Electrode locations were determined using the stereotactic implantation scheme and the Talairach and Tournoux proportional atlas60. For each subject, electrode locations were standardized in Talairach space (based on the post-implantation CT). The Talairach coordinates of each electrode were then converted into the MNI coordinate system according to standard routines56,61,62 (see Supplementary Table 1). To visualize intracranial recording sites on a standard 3-D MNI brain (Fig. 1a) and to project SEEG data onto the nearest cortical surface (Fig. 1b), we used Visbrain63, an open-access Python visualization toolbox. Each SEEG site is represented by a colored sphere inside the transparent brain. Cortical projections were obtained by taking the intersection between the cortical surface and a 10 mm radius sphere around each site. Sites with non-significant decoding are systematically shown in gray. By convention, the left hemisphere (LH) is shown on the left in all brain visualizations.
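The cortical projection step can be illustrated with a short sketch. It makes the following assumptions:

- Site and vertex coordinates are in millimetres, in the same (MNI) space.
- Each vertex receives the mean value of all sites whose 10 mm sphere contains it.

The averaging rule and the helper name project_sites_to_surface are illustrative assumptions. Visbrain's own projection may weight sites differently.

```python
import numpy as np


def project_sites_to_surface(vertices, site_xyz, site_values, radius=10.0):
    """Project per-site values onto cortical vertices (hypothetical helper).

    vertices    : (n_vertices, 3) surface coordinates in mm (e.g. MNI)
    site_xyz    : (n_sites, 3) SEEG site coordinates in mm
    site_values : (n_sites,) one value per site, e.g. decoding accuracy
    Returns (n_vertices,): mean value of the sites whose sphere of the given
    radius contains the vertex, or NaN where no sphere reaches the vertex.
    """
    # Euclidean distance between every vertex and every site
    dist = np.linalg.norm(vertices[:, None, :] - site_xyz[None, :, :], axis=-1)
    inside = dist <= radius                       # (n_vertices, n_sites) mask
    counts = inside.sum(axis=1)
    summed = inside.astype(float) @ site_values   # sum of nearby site values
    out = np.full(len(vertices), np.nan)
    hit = counts > 0
    out[hit] = summed[hit] / counts[hit]
    return out


# Toy example: two sites, three vertices (the last one is far from both sites)
verts = np.array([[0.0, 0.0, 0.0], [5.0, 0.0, 0.0], [50.0, 0.0, 0.0]])
sites = np.array([[0.0, 0.0, 8.0], [6.0, 0.0, 0.0]])
vals = np.array([0.4, 0.6])
projected = project_sites_to_surface(verts, sites, vals)
```

Vertices left as NaN are natural candidates for the neutral gray used for uninformative regions.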

Experimental design

The experimental paradigm used in this study was a classical delayed center-out motor task28. After a rest period of 1000 ms (−1000 to 0 ms), participants were visually cued to prepare a movement towards a target presented in one of four possible directions: up, down, left, or right (planning phase, 0–1500 ms). After this 1500 ms delay period, a Go signal (a central cue switching from white to black) prompted the subjects to move the cursor towards the target (execution phase, 1500–3000 ms) (Fig. 1d).

Data preprocessing

The data were preprocessed using standard procedures, consistent with our previous intracranial EEG work39,56,58. Data recorded at each SEEG site were bipolarized, i.e., the activity of each site was subtracted from that of its direct neighbor on the same electrode. This procedure removes or reduces artifacts and increases spatial specificity by minimizing the influence of distant sources. Re-referencing each contact to its direct neighbor yields a spatial resolution of ~3 mm56,64,65. Bipolarization led to 580 bipolar derivations across all subjects. Finally, trials contaminated by epileptic activity and electrodes located close to the extra-ocular muscles were removed from the analyses. Removal was based on visual inspection of the time series and time–frequency decompositions and on input from the clinical staff. The final number of trials retained per patient ranged from 120 to 360 (215 ± 77).
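A minimal sketch of bipolar re-referencing follows. It assumes contact labels made of an electrode (shaft) name followed by a contact index, e.g. "A1", "A2", "B'3". The helper name and this labeling convention are hypothetical. Each derivation is the difference between two directly neighboring contacts on the same shaft, so a shaft with n contacts yields n − 1 derivations before any rejection.

```python
import re

import numpy as np


def bipolarize(data, labels):
    """Bipolar re-referencing along each electrode shaft (hypothetical helper).

    data   : (n_contacts, n_times) monopolar signals
    labels : contact names "<shaft><index>", e.g. "A1" or "B'12" (assumed)
    Returns (n_pairs, n_times) derivations and names such as "A2-A1".
    """
    parsed = []
    for row, lab in enumerate(labels):
        m = re.fullmatch(r"([A-Za-z']+)(\d+)", lab)
        if m is None:
            raise ValueError(f"unexpected contact label: {lab}")
        parsed.append((m.group(1), int(m.group(2)), row))
    parsed.sort()  # group by shaft, then order contacts along the shaft
    bip, names = [], []
    for (sh1, n1, r1), (sh2, n2, r2) in zip(parsed[:-1], parsed[1:]):
        # Only subtract direct neighbours that lie on the same shaft
        if sh1 == sh2 and n2 == n1 + 1:
            bip.append(data[r2] - data[r1])
            names.append(f"{sh2}{n2}-{sh1}{n1}")
    return np.array(bip), names


labels = ["A1", "A2", "A3", "B1", "B2"]
data = np.array([[0.0, 0.0], [1.0, 2.0], [3.0, 5.0], [10.0, 10.0], [12.0, 11.0]])
bip_data, bip_names = bipolarize(data, labels)
```

If a contact is removed from data and labels beforehand, the pairs that involved it are skipped automatically, because its former neighbors are no longer adjacent.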

Spectral analyses

A wide range of oscillatory brain features (power, phase, and phase–amplitude coupling) was extracted from the SEEG recordings using the Hilbert transform. To this end, we first filtered the data in each frequency band using a two-pass, zero-phase finite impulse response (FIR) least-squares filter implemented in the EEGLAB toolbox66. Phase and amplitude components were then computed by applying the Hilbert transform to the filtered data.

The following frequency bands were considered:
- very low-frequency component (VLFC) [0.1–1.5 Hz]
- delta (δ) [2–4 Hz]
- theta (θ) [5–7 Hz]
- alpha (α) [8–13 Hz]
- beta (β) [13–30 Hz]
- low gamma (low γ) [30–60 Hz]
- broadband gamma (high γ) [60–200 Hz]

Power features were computed for δ, θ, α, β, low γ, and high γ, while phase values were extracted for VLFC, δ, θ, and α. PAC was computed between the δ, θ, and α phases and the high-γ amplitude. To investigate the time course of decoding performance, each feature was sampled at 67 time points; the temporal windows used for each feature type are described in the respective subsections below. For each SEEG site, 13 features were calculated (6 power, 4 phase, and 3 PAC), each with 67 time points. Across all 580 bipolar SEEG sites, this led to a total of 505,180 ((6 + 4 + 3) × 67 × 580) features to classify.
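The band-limited power and phase extraction can be sketched with SciPy. This is not the authors' EEGLAB code; the assumptions are:

- scipy.signal.firls and filtfilt stand in for EEGLAB's least-squares FIR filter.
- The filter length (three cycles of the lower cutoff) and the 15% transition bands are chosen to resemble EEGLAB defaults.

With this design the very low-frequency band needs an extremely long filter. It should therefore be applied to long continuous recordings rather than to epochs, and a cheaper window-method design may be preferable there.

```python
import numpy as np
from scipy.signal import filtfilt, firls, hilbert

# Frequency bands (Hz) listed in the Spectral analyses paragraph
BANDS = {"vlfc": (0.1, 1.5), "delta": (2, 4), "theta": (5, 7), "alpha": (8, 13),
         "beta": (13, 30), "low_gamma": (30, 60), "high_gamma": (60, 200)}
POWER_BANDS = ["delta", "theta", "alpha", "beta", "low_gamma", "high_gamma"]
PHASE_BANDS = ["vlfc", "delta", "theta", "alpha"]


def bandpass(x, fs, f_lo, f_hi, trans=0.15, n_cycles=3):
    """Zero-phase least-squares FIR band-pass filter (forward-backward).

    Assumption: filter length = 3 cycles of f_lo and 15 % transition bands,
    chosen to resemble EEGLAB eegfilt defaults, not taken from the paper.
    """
    if (1 + trans) * f_hi >= fs / 2:
        raise ValueError("upper transition band exceeds the Nyquist frequency")
    numtaps = int(n_cycles * fs // f_lo) | 1          # firls needs an odd length
    edges = [0, (1 - trans) * f_lo, f_lo, f_hi, (1 + trans) * f_hi, fs / 2]
    b = firls(numtaps, edges, [0, 0, 1, 1, 0, 0], fs=fs)
    return filtfilt(b, [1.0], x, axis=-1)             # two passes -> zero phase


def band_power_phase(x, fs, band):
    """Instantaneous power |z|^2 and phase angle(z) of the analytic signal z."""
    z = hilbert(bandpass(x, fs, *BANDS[band]), axis=-1)
    return np.abs(z) ** 2, np.angle(z)


def high_gamma_power(x, fs, lo=60, hi=200, step=10):
    """High-gamma power as the mean over non-overlapping 10 Hz sub-bands."""
    sub_powers = [np.abs(hilbert(bandpass(x, fs, f, f + step), axis=-1)) ** 2
                  for f in range(lo, hi, step)]
    return np.mean(sub_powers, axis=0)
```

The feature count in the text follows directly from these band lists: (6 + 4 + 3) × 67 × 580 = 505,180.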

Instantaneous power features estimation

From the band-specific Hilbert transform, power modulations were computed as the square of the time-resolved amplitude. For the high-gamma band, the 60–200 Hz range was split into non-overlapping 10 Hz sub-bands. The final high-gamma power was obtained by averaging across these sub-bands, following previous routines56,58,62,67,68,69,70. Power was then averaged within a 700 ms sliding window shifted in 50 ms steps, yielding 67 time points. Classification was applied to unnormalized power; normalization was applied only for visualization (time–frequency maps and single-trial representations, see Fig. 2). For this purpose, in each frequency band, we subtracted and then divided by the mean power of a 500 ms baseline window centered on the pre-stimulus rest period ([−750, −250] ms).
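The windowing arithmetic can be checked with a small sketch. It assumes epochs spanning −1 to 3 s (the rest, planning and execution periods) sampled at 1024 Hz. Under that assumption, 700 ms windows shifted by 50 ms fit exactly 67 times, with centers from −650 ms to 2650 ms. The helper names are hypothetical. The baseline normalization is the visualization-only step described above.

```python
import numpy as np

FS = 1024.0             # sampling rate (Hz)
T0 = -1.0               # assumed epoch start (s); epochs assumed to span -1 to 3 s
WIN, STEP = 0.7, 0.05   # 700 ms windows, 50 ms shift


def window_bounds(n_times, fs=FS, win=WIN, step=STEP):
    """(start, stop) sample indices of every full sliding window in an epoch."""
    width, shift = int(round(win * fs)), step * fs
    bounds, k = [], 0
    while int(round(k * shift)) + width <= n_times:
        start = int(round(k * shift))
        bounds.append((start, start + width))
        k += 1
    return bounds


def window_centers(bounds, fs=FS, t0=T0):
    """Center time (s) of each window, e.g. to label planning vs execution."""
    return np.array([t0 + (a + b) / 2 / fs for a, b in bounds])


def windowed_power(power, bounds):
    """Average power in each window: (..., n_times) -> (..., n_windows)."""
    return np.stack([power[..., a:b].mean(axis=-1) for a, b in bounds], axis=-1)


def baseline_normalize(power, fs=FS, t0=T0, baseline=(-0.75, -0.25)):
    """(P - mean_baseline) / mean_baseline; used for visualization only."""
    i0, i1 = (int(round((t - t0) * fs)) for t in baseline)
    ref = power[..., i0:i1].mean(axis=-1, keepdims=True)
    return (power - ref) / ref
```

For a 4 s epoch (4096 samples), window_bounds returns 67 windows. Decoding would use windowed_power on unnormalized power.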

Instantaneous phase features estimation

For a given frequency band, phase features were extracted as the angle of the Hilbert transform. For consistency with the power features, we selected the instantaneous phase at the center of each of the 700 ms power windows defined above (i.e., one point every 50 ms). This yielded 67 phase points across time, and these instantaneous phase values were used for classification. To assess phase-alignment consistency across trials, we computed the Phase Locking Factor (PLF)71 (Fig. 3), defined as the modulus of the across-trial mean of the unit-length phase vectors, i.e., PLF(t) = |(1/N) Σn exp(iφn(t))| for N trials. We also used Rayleigh's test to identify significant phase modulations71,72,73, using the circular statistics toolbox74.
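The phase-locking factor and Rayleigh test can be sketched in NumPy as follows. The Rayleigh p-value uses the approximation implemented in the CircStat toolbox (circ_rtest). The epoch start at −1 s and the helper names are assumptions for illustration.

```python
import numpy as np


def phase_locking_factor(phases):
    """PLF(t) = |(1/N) * sum_n exp(i * phase_n(t))| across N trials.

    phases : (n_trials, n_times) instantaneous phases in radians.
    Returns values in [0, 1]; 1 means identical phase on every trial.
    """
    return np.abs(np.mean(np.exp(1j * phases), axis=0))


def rayleigh_test(phases):
    """Rayleigh test of circular uniformity across trials, per time point.

    Uses the p-value approximation of CircStat's circ_rtest:
    p = exp(sqrt(1 + 4n + 4(n^2 - R^2)) - (1 + 2n)), with R = n * PLF.
    """
    n = phases.shape[0]
    R = n * phase_locking_factor(phases)
    z = R ** 2 / n
    pval = np.exp(np.sqrt(1 + 4 * n + 4 * (n ** 2 - R ** 2)) - (1 + 2 * n))
    return z, pval


def sample_at_centers(phases, fs=1024.0, t0=-1.0, first=-0.65, step=0.05, n=67):
    """Keep the phase at the 67 window centers (-650 ms to 2650 ms, 50 ms apart).

    Assumption: epochs start at t0 = -1 s, so the centers of 700 ms windows
    shifted by 50 ms fall at -650, -600, ..., 2650 ms.
    """
    idx = np.round((first + step * np.arange(n) - t0) * fs).astype(int)
    return phases[..., idx]
```

The PLF measures consistency across trials. The classifier, by contrast, receives the raw per-trial phase values returned by sample_at_centers.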

Phase–amplitude coupling features estimation

First, the filter order used to extract phase and amplitude was adapted to each band, using three cycles of the slow oscillation (for phase) and six cycles of the fast oscillation (for amplitude)75. PAC can be estimated with a large variety of measures76,77,78,79,80. We tested several of them, mainly the mean vector length (MVL)81 and the Kullback–Leibler divergence (KL)78. Both methods yielded similar results, but a slightly adapted version of the MVL led to better decoding accuracies than the KL method. To improve PAC robustness, we generated surrogates by randomly swapping phase and amplitude trials78. The original PAC value was then z-scored using the mean and standard deviation of 200 surrogates (Fig. 4). All PAC algorithms used in this paper are implemented in Tensorpac82, an open-source Python package. PAC was estimated using the same windows as the power features (700 ms windows shifted every 50 ms), yielding the same 67 time points.
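A minimal NumPy sketch of the MVL with trial-swapped surrogates follows. It computes one PAC value per trial within a window and z-scores it against 200 surrogates, in each of which a trial's phase is paired with another trial's amplitude.

The circular trial shift used to pair trials is an assumed implementation of trial swapping. The paper's adapted MVL is not reproduced; Tensorpac provides tested implementations of these measures. With n trials, only n − 1 distinct surrogate pairings exist per trial, so surrogates repeat when n − 1 < 200.

```python
import numpy as np


def mvl(phase, amp):
    """Mean vector length |mean(A * exp(i * phi))| along the last (time) axis."""
    return np.abs(np.mean(amp * np.exp(1j * phase), axis=-1))


def pac_zscore(phase, amp, n_surr=200, seed=0):
    """Per-trial MVL z-scored against trial-swapped surrogates (sketch).

    phase, amp : (n_trials, n_times) low-frequency phase and high-gamma
                 amplitude within one 700 ms window (n_trials >= 2).
    Surrogates pair each trial's phase with the amplitude of another trial,
    using a random circular shift of the trial order (never a zero shift,
    so a trial is never paired with itself). This is an assumed variant.
    """
    rng = np.random.default_rng(seed)
    n_trials = phase.shape[0]
    true = mvl(phase, amp)
    surr = np.empty((n_surr, n_trials))
    for s in range(n_surr):
        shift = rng.integers(1, n_trials)            # 1 .. n_trials - 1
        surr[s] = mvl(phase, np.roll(amp, shift, axis=0))
    return (true - surr.mean(axis=0)) / surr.std(axis=0)
```

Coupled data, where amplitude follows the trial's own phase, give large positive z-scores. Uncoupled data give z-scores scattered around zero.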

Signal classification

We explored the feasibility of time-resolved direction decoding from human LFPs using two strategies of increasing dimensionality:
(a) a single-feature approach, to evaluate the performance of each feature;
(b) an inter-site and inter-feature approach, in which a feature selection procedure estimates the decoding performance obtained when combining all available intracranial EEG recordings.
Both strategies were applied at each of the 67 time points defined above, indicating which features decode movement direction, where, when, and how reliably. In contrast to brain decoding with non-invasive recordings (e.g., EEG or MEG), inter-subject cross-validation is not straightforward for SEEG because electrode implantations differ across subjects. We therefore performed intra-subject cross-validation28. All classifications were implemented in Python 3 using the scikit-learn package83.

Single feature evaluation

We classified each feature at each of the 67 time windows defined above, for all subjects and all recording sites. We compared the performance of several classification algorithms: linear discriminant analysis (LDA), naïve Bayes (NB), k-nearest neighbors (KNN), and support vector machines (SVM) with linear and radial basis function (RBF) kernels. They all provided similar performance, and we chose LDA for its efficiency. Classifier performance was evaluated as the decoding accuracy (i.e., the percentage of correctly classified samples in the test set), obtained with a standard stratified 10-fold cross-validation repeated 10 times. To assess the statistical significance of decoding performance, we used permutation testing, generating null distributions by randomly shuffling the data labels84,85. Multiple comparisons were corrected for by building a distribution of permutation maxima across time, space, and frequency (i.e., maximum statistics)86,87.
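The single-feature evaluation with maximum-statistic correction can be sketched with scikit-learn as below. Here each column of X stands for one site × feature × time-window combination of one subject. The numbers of permutations and repeats are parameters so the sketch can run quickly. The helper names are hypothetical, and the exact permutation bookkeeping used in the study may differ.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score


def feature_accuracies(X, y, cv):
    """Cross-validated LDA accuracy for each column of X used on its own."""
    return np.array([
        cross_val_score(LinearDiscriminantAnalysis(), X[:, [f]], y,
                        cv=cv, scoring="accuracy").mean()
        for f in range(X.shape[1])
    ])


def single_feature_decoding(X, y, n_perm=200, n_repeats=10, seed=0):
    """Per-feature accuracy with max-statistic permutation p-values (sketch).

    X : (n_trials, n_features), one column per site x feature x window
    y : (n_trials,) movement-direction labels
    """
    rng = np.random.default_rng(seed)
    cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=n_repeats,
                                 random_state=seed)
    acc = feature_accuracies(X, y, cv)
    # Null distribution of the MAXIMUM accuracy across all features
    max_null = np.array([feature_accuracies(X, rng.permutation(y), cv).max()
                         for _ in range(n_perm)])
    # Family-wise corrected p-value: how often the best null feature wins
    pvals = (np.sum(max_null[:, None] >= acc[None, :], axis=0) + 1) / (n_perm + 1)
    return acc, pvals
```

Each observed accuracy is compared with the maximum over all columns under the null. A feature is therefore declared significant only if it beats the best feature obtained by chance, which controls the family-wise error rate.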

Cross-temporal generalization of classification

To evaluate whether a classifier trained during execution can decode movement directions during planning (or vice versa), we used a temporal generalization (TG) procedure37. In this procedure, a classifier is trained at one moment in the task (training-time axis) and tested at every other time (testing-time axis). Note that we performed TG using both single- and multi-feature classification.
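Temporal generalization can be sketched as a loop over training and testing times within each cross-validation fold. This version uses LDA on a (trials × features × times) array, so it covers both single-feature (one feature) and multi-feature inputs. It is an illustrative sketch, not the study's code. MNE-Python's GeneralizingEstimator offers a comparable off-the-shelf implementation.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold


def temporal_generalization(X, y, n_splits=10, seed=0):
    """Train at each time point and test at every time point.

    X : (n_trials, n_features, n_times); y : (n_trials,) labels.
    Returns (n_times, n_times) mean accuracy:
    rows = training time, columns = testing time.
    """
    n_times = X.shape[-1]
    scores = np.zeros((n_times, n_times))
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    for train, test in cv.split(X[..., 0], y):
        for t_train in range(n_times):
            clf = LinearDiscriminantAnalysis().fit(X[train, :, t_train], y[train])
            for t_test in range(n_times):
                scores[t_train, t_test] += clf.score(X[test, :, t_test], y[test])
    return scores / n_splits
```

High accuracy off the diagonal indicates that the same decision rule holds at different times. For example, a classifier trained during planning that also decodes during execution.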

Multi-feature classifications using feature selection

To identify groups of features that jointly lead to higher decoding performance than single features, we used multi-feature (MF) classification. For each time sample, the MF procedure determines the best combination across all feature types (power, phase, and phase–amplitude coupling) and across all SEEG recording sites of a given subject. We combined a wrapper method88,89,90 (select k-best, with k in [1, 10]) with a filter method91,92 (false discovery rate, FDR, with a type I error rate of 5%). These were implemented with the SelectKBest and SelectFdr functions of scikit-learn83, respectively. A linear SVM was used throughout the MF pipeline. As recommended, each attribute was linearly rescaled to zero mean and unit variance93.

Multi-feature pipeline: to estimate MF performance, we implemented the following steps:
(1) a first 10-fold cross-validation was defined to generate training and testing sets;
(2) the training set was used to fit the rescaling parameters and was then rescaled;
(3) the number of features retained by the k-best selection was optimized using a 3-fold cross-validated grid search, and the union of the features selected by k-best and FDR was taken to obtain a reduced training set;
(4) the linear SVM was trained on this reduced training set;
(5) the testing set was rescaled with the parameters fitted on the training set, and the features selected on the training set were retained;
(6) the trained classifier was finally used to predict the labels of this reduced testing set, and the predictions were converted into decoding accuracy.

For statistical evaluation, this whole pipeline was embedded in a loop of 200 iterations in which the label vector was shuffled. These 200 permutations allow p-values as low as 0.005.
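The multi-feature pipeline maps fairly directly onto scikit-learn objects. In the sketch below:

- KBestOrFdr is a hypothetical helper that keeps the union of the features chosen by SelectKBest and SelectFdr, both scored with the ANOVA F-test (an assumption).
- The scaler and selector are fitted on training folds only through the Pipeline.
- k is tuned with a 3-fold grid search.
- permutation_test_score shuffles the labels to build the null distribution.

With its (C + 1)/(n_permutations + 1) p-value, 200 permutations give a smallest attainable p-value of 1/201 ≈ 0.005.

```python
import numpy as np
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.feature_selection import SelectFdr, SelectKBest, f_classif
from sklearn.model_selection import (GridSearchCV, StratifiedKFold,
                                     permutation_test_score)
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


class KBestOrFdr(BaseEstimator, TransformerMixin):
    """Keep the union of features picked by SelectKBest and SelectFdr (hypothetical)."""

    def __init__(self, k=5, alpha=0.05):
        self.k = k
        self.alpha = alpha

    def fit(self, X, y):
        kbest = SelectKBest(f_classif, k=min(self.k, X.shape[1])).fit(X, y)
        fdr = SelectFdr(f_classif, alpha=self.alpha).fit(X, y)
        self.support_ = kbest.get_support() | fdr.get_support()
        return self

    def transform(self, X):
        return X[:, self.support_]


def multi_feature_decoding(X, y, n_perm=200, n_outer=10, seed=0):
    """Nested-CV linear SVM with feature selection and a permutation test."""
    pipe = Pipeline([("scale", StandardScaler()),      # fitted on training folds only
                     ("select", KBestOrFdr()),
                     ("svm", SVC(kernel="linear"))])
    inner = GridSearchCV(pipe, {"select__k": list(range(1, 11))},
                         cv=StratifiedKFold(3))
    outer = StratifiedKFold(n_outer, shuffle=True, random_state=seed)
    score, _, pval = permutation_test_score(inner, X, y, cv=outer,
                                            n_permutations=n_perm,
                                            random_state=seed)
    return score, pval
```

Here X would hold all features of one subject at one time sample, with one column per site × feature type.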

Statistics and reproducibility

All data were analyzed using custom Python code. Statistical analysis was performed using non-parametric tests.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

Raw data cannot be shared due to ethics committee restrictions related to the clinical acquisition setting. Intermediate as well as final processed data that support the findings of this study are available from the corresponding author (E.C.) upon reasonable request.

Code availability

The custom codes used to generate the figures and statistics are available from the lead contact (E.C.) upon request.

References

Georgopoulos, A. P., Kalaska, J. F., Caminiti, R. & Massey, J. T. On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. J. Neurosci. 2, 1527–1537 (1982).

Georgopoulos, A., Schwartz, A. & Kettner, R. Neuronal population coding of movement direction. Science 233, 1416–1419 (1986).

Taylor, D. M., Tillery, S. I. H. & Schwartz, A. B. Direct cortical control of 3D neuroprosthetic devices. Science 296, 1829–1832 (2002).

Heldman, D. A., Wang, W., Chan, S. S. & Moran, D. W. Local field potential spectral tuning in motor cortex during reaching. IEEE Trans. Neural Syst. Rehabil. Eng. 14, 180–183 (2006).

Stark, E. & Abeles, M. Predicting movement from multiunit activity. J. Neurosci. 27, 8387–8394 (2007).

Wang, W., Chan, S. S., Heldman, D. A. & Moran, D. W. Motor cortical representation of position and velocity during reaching. J. Neurophysiol. 97, 4258–4270 (2007).

Tankus, A., Yeshurun, Y., Flash, T. & Fried, I. Encoding of speed and direction of movement in the human supplementary motor area. J. Neurosurg. 110, 1304–1316 (2009).

Mehring, C. et al. Inference of hand movements from local field potentials in monkey motor cortex. Nat. Neurosci. 6, 1253–1254 (2003).

Rickert, J. et al. Encoding of movement direction in different frequency ranges of motor cortical local field potentials. J. Neurosci. 25, 8815–8824 (2005).

Liu, J. & Newsome, W. T. Local field potential in cortical area MT: stimulus tuning and behavioral correlations. J. Neurosci. 26, 7779–7790 (2006).

Schalk, G. et al. Decoding two-dimensional movement trajectories using electrocorticographic signals in humans. J. Neural Eng. 4, 264 (2007).

Chao, Z. C., Nagasaka, Y. & Fujii, N. Long-term asynchronous decoding of arm motion using electrocorticographic signals in monkey. Front. Neuroeng. 3, 3 (2010).

Pesaran, B., Pezaris, J. S., Sahani, M., Mitra, P. P. & Andersen, R. A. Temporal structure in neuronal activity during working memory in macaque parietal cortex. Nat. Neurosci. 5, 805–811 (2002).

Andersen, R. A. & Cui, H. Intention, action planning, and decision making in parietal-frontal circuits. Neuron 63, 568–583 (2009).

Leuthardt, E. C., Schalk, G., Wolpaw, J. R., Ojemann, J. G. & Moran, D. W. A brain–computer interface using electrocorticographic signals in humans. J. Neural Eng. 1, 63 (2004).

Mehring, C. et al. Comparing information about arm movement direction in single channels of local and epicortical field potentials from monkey and human motor cortex. J. Physiol. -Paris 98, 498–506 (2004).

Ball, T., Schulze-Bonhage, A., Aertsen, A. & Mehring, C. Differential representation of arm movement direction in relation to cortical anatomy and function. J. Neural Eng. 6, 016006 (2009).

Gunduz, A. et al. Differential roles of high gamma and local motor potentials for movement preparation and execution. Brain–Comput. Interfaces 3, 88–102 (2016).

Li, G. et al. Assessing differential representation of hand movements in multiple domains using stereo-electroencephalographic recordings. NeuroImage 250, 118969 (2022).

Waldert, S. et al. A review on directional information in neural signals for brain-machine interfaces. J. Physiol. -Paris 103, 244–254 (2009).

Waldert, S. et al. Hand movement direction decoded from MEG and EEG. J. Neurosci. 28, 1000–1008 (2008).

Rezeika, A. et al. Brain–computer interface spellers: a review. Brain Sci. 8, 57 (2018).

Caldwell, D. J., Herron, J. A., Ko, A. L. & Ojemann, J. G. Motor BMIs have entered the clinical realm. In Handbook of Neuroengineering (ed. Thakor, N.V.) 1–37 (Springer, Singapore, 2022).

Tang, X., Shen, H., Zhao, S., Li, N. & Liu, J. Flexible brain–computer interfaces. Nat. Electron. 6, 109–118 (2023).

Yanagisawa, T. et al. Regulation of motor representation by phase-amplitude coupling in the sensorimotor cortex. J. Neurosci. 32, 15467–15475 (2012).

Hammer, J. et al. The role of ECoG magnitude and phase in decoding position, velocity, and acceleration during continuous motor behavior. Front. Neurosci. 7, 200 (2013).

de Hemptinne, C. et al. Exaggerated phase–amplitude coupling in the primary motor cortex in Parkinson disease. Proc. Natl Acad. Sci. USA https://doi.org/10.1073/pnas.1214546110 (2013).

Combrisson, E. et al. From intentions to actions: Neural oscillations encode motor processes through phase, amplitude and phase-amplitude coupling. NeuroImage 147, 473–487 (2017).

Jerbi, K. et al. Coherent neural representation of hand speed in humans revealed by MEG imaging. Proc. Natl Acad. Sci. USA 104, 7676–7681 (2007).

Jerbi, K. et al. Inferring hand movement kinematics from MEG, EEG and intracranial EEG: From brain–machine interfaces to motor rehabilitation. IRBM 32, 8–18 (2011).

Cavanna, A. E. & Trimble, M. R. The precuneus: a review of its functional anatomy and behavioural correlates. Brain 129, 564–583 (2006).

Soon, C. S., Brass, M., Heinze, H.-J. & Haynes, J.-D. Unconscious determinants of free decisions in the human brain. Nat. Neurosci. 11, 543–545 (2008).

Soon, C. S., He, A. H., Bode, S. & Haynes, J.-D. Predicting free choices for abstract intentions. Proc. Natl Acad. Sci. USA 110, 6217–6222 (2013).

Schurger, A., Pak, J. & Roskies, A. L. What is the readiness potential? Trends Cogn. Sci. 25, 558–570 (2021).

Shibasaki, H. & Hallett, M. What is the bereitschaftspotential? Clin. Neurophysiol. 117, 2341–2356 (2006).

Hanakawa, T., Dimyan, M. A. & Hallett, M. Motor planning, imagery, and execution in the distributed motor network: a time-course study with functional MRI. Cereb. Cortex 18, 2775–2788 (2008).

King, J.-R. & Dehaene, S. Characterizing the dynamics of mental representations: the temporal generalization method. Trends Cogn. Sci. 18, 203–210 (2014).

Thut, G. et al. Internally driven vs. externally cued movement selection: a study on the timing of brain activity. Cogn. Brain Res. 9, 261–269 (2000).

Thiery, T. et al. Decoding the neural dynamics of free choice in humans. PLoS Biol. 18, e3000864 (2020).

Hoshi, E. & Tanji, J. Differential roles of neuronal activity in the supplementary and presupplementary motor areas: from information retrieval to motor planning and execution. J. Neurophysiol. 92, 3482–3499 (2004).

Hosseini, S. M., Aminitabar, A. H. & Shalchyan, V. Investigating the application of graph theory features in hand movement directions decoding using EEG signals. Neurosci. Res. 194, 24–35 (2023).

Snyder, L. H., Batista, A. P. & Andersen, R. A. Coding of intention in the posterior parietal cortex. Nature 386, 167–170 (1997).

Andersen, R. A. & Buneo, C. A. Intentional maps in posterior parietal cortex. Annu. Rev. Neurosci. 25, 189–220 (2002).

Buneo, C. A. & Andersen, R. A. The posterior parietal cortex: sensorimotor interface for the planning and online control of visually guided movements. Neuropsychologia 44, 2594–2606 (2006).

Karnath, H.-O. New insights into the functions of the superior temporal cortex. Nat. Rev. Neurosci. 2, 569 (2001).

Gallivan, J. P., McLean, D. A., Flanagan, J. R. & Culham, J. C. Where one hand meets the other: limb-specific and action-dependent movement plans decoded from preparatory signals in single human frontoparietal brain areas. J. Neurosci. 33, 1991–2008 (2013).

Combrisson, E. et al. Group-level inference of information-based measures for the analyses of cognitive brain networks from neurophysiological data. NeuroImage 258, 119347 (2022).

Rao, R. P. Towards neural co-processors for the brain: combining decoding and encoding in brain–computer interfaces. Curr. Opin. Neurobiol. 55, 142–151 (2019).

Śliwowski, M., Martin, M., Souloumiac, A., Blanchart, P. & Aksenova, T. Decoding ECoG signal into 3D hand translation using deep learning. J. Neural Eng. 19, 026023 (2022).

Gunduz, A. & Schalk, G. ECoG-based BCIs. In Brain–Computer Interfaces Handbook 297–322 (2018).

Tam, W., Wu, T., Zhao, Q., Keefer, E. & Yang, Z. Human motor decoding from neural signals: a review. BMC Biomed. Eng. 1, 22 (2019).

Wang, Z. et al. Decoding onset and direction of movements using electrocorticographic (ECoG) signals in humans. Front. Neuroeng. 5, 15 (2012).

Wolpaw, J. R. & McFarland, D. J. Control of a two-dimensional movement signal by a noninvasive brain–computer interface in humans. Proc. Natl Acad. Sci. USA 101, 17849–17854 (2004).

Pistohl, T., Ball, T., Schulze-Bonhage, A., Aertsen, A. & Mehring, C. Prediction of arm movement trajectories from ECoG-recordings in humans. J. Neurosci. Methods 167, 105–114 (2008).

Schalk, G. et al. Two-dimensional movement control using electrocorticographic signals in humans. J. Neural Eng. 5, 75–84 (2008).

Jerbi, K. et al. Task-related gamma-band dynamics from an intracerebral perspective: review and implications for surface EEG and MEG. Hum. Brain Mapp. 30, 1758–1771 (2009).

Jung, J. et al. Brain responses to success and failure: direct recordings from human cerebral cortex. Hum. Brain Mapp. 31, 1217–1232 (2010).

Bastin, J. et al. Direct recordings from human anterior insula reveal its leading role within the error-monitoring network. Cereb. Cortex bhv352 (2016) https://doi.org/10.1093/cercor/bhv352.

Combrisson, E. et al. Neural interactions in the human frontal cortex dissociate reward and punishment learning. eLife https://doi.org/10.7554/eLife.92938.1 (2023).

Talairach, J. & Tournoux, P. Referentially Oriented Cerebral MRI Anatomy: An Atlas of Stereotaxic Anatomical Correlations for Gray and White Matter (Thieme, 1993).

Jerbi, K. et al. Exploring the electrophysiological correlates of the default-mode network with intracerebral EEG. Front. Syst. Neurosci. 4, 27 (2010).

Ossandon, T. et al. Transient suppression of broadband gamma power in the default-mode network is correlated with task complexity and subject performance. J. Neurosci. 31, 14521–14530 (2011).

Combrisson, E. et al. Visbrain: a multi-purpose GPU-accelerated open-source suite for multimodal brain data visualization. Front. Neuroinform. 13, 14 (2019).

Lachaux, J. P., Rudrauf, D. & Kahane, P. Intracranial EEG and human brain mapping. J. Physiol. -Paris 97, 613–628 (2003).

Kahane, P., Landré, E., Minotti, L., Francione, S. & Ryvlin, P. The Bancaud and Talairach view on the epileptogenic zone: a working hypothesis. Epileptic Disord. Int. Epilepsy J. Videotape 8, S16–S26 (2006).

Delorme, A. & Makeig, S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21 (2004).

Perrone-Bertolotti, M. et al. How silent is silent reading? Intracerebral evidence for top-down activation of temporal voice areas during reading. J. Neurosci. 32, 17554–17562 (2012).

Vidal, J. R. et al. Long-distance amplitude correlations in the high gamma band reveal segregation and integration within the reading network. J. Neurosci. 32, 6421–6434 (2012).

Vidal, J. R. et al. Neural repetition suppression in ventral occipito-temporal cortex occurs during conscious and unconscious processing of frequent stimuli. Neuroimage 95, 129–135 (2014).

Hamamé, C. M. et al. Functional selectivity in the human occipitotemporal cortex during natural vision: evidence from combined intracranial EEG and eye-tracking. NeuroImage 95, 276–286 (2014).

Tallon-Baudry, C., Bertrand, O., Delpuech, C. & Pernier, J. Stimulus specificity of phase-locked and non-phase-locked 40 Hz visual responses in human. J. Neurosci. 16, 4240–4249 (1996).

Babiloni, C. et al. Human brain oscillatory activity phase-locked to painful electrical stimulations: a multi-channel EEG study. Hum. Brain Mapp. 15, 112–123 (2002).

Lakatos, P. et al. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J. Neurophysiol. 94, 1904–1911 (2005).

Berens, P. CircStat a MATLAB toolbox for circular statistics. J. Stat. Softw. 31, 1–21 (2009).

Bahramisharif, A. et al. Propagating neocortical gamma bursts are coordinated by traveling alpha waves. J. Neurosci. 33, 18849–18854 (2013).

Jensen, O. & Colgin, L. L. Cross-frequency coupling between neuronal oscillations. Trends Cogn. Sci. 11, 267–269 (2007).

Canolty, R. T. & Knight, R. T. The functional role of cross-frequency coupling. Trends Cogn. Sci. 14, 506–515 (2010).

Tort, A. B. L., Komorowski, R., Eichenbaum, H. & Kopell, N. Measuring phase-amplitude coupling between neuronal oscillations of different frequencies. J. Neurophysiol. 104, 1195–1210 (2010).

Soto, J. L. P. & Jerbi, K. Investigation of cross-frequency phase-amplitude coupling in visuomotor networks using magnetoencephalography. In 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society 1550–1553 (IEEE, San Diego, USA, 2012).

Aru, J. et al. Untangling cross-frequency coupling in neuroscience. Curr. Opin. Neurobiol. 31, 51–61 (2015).

Canolty, R. T. et al. High gamma power is phase-locked to theta oscillations in human neocortex. Science 313, 1626–1628 (2006).

Combrisson, E. et al. Tensorpac: an open-source Python toolbox for tensor-based phase-amplitude coupling measurement in electrophysiological brain signals. PLoS Comput. Biol. 16, e1008302 (2020).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Ojala, M. & Garriga, G. C. Permutation tests for studying classifier performance. J. Mach. Learn. Res. 11, 1833–1863 (2010).

Combrisson, E. & Jerbi, K. Exceeding chance level by chance: the caveat of theoretical chance levels in brain signal classification and statistical assessment of decoding accuracy. J. Neurosci. Methods 250, 126–136 (2015).

Nichols, T. E. & Holmes, A. P. Nonparametric permutation tests for functional neuroimaging: a primer with examples. Hum. Brain Mapp. 15, 1–25 (2002).

Pantazis, D., Nichols, T. E., Baillet, S. & Leahy, R. M. A comparison of random field theory and permutation methods for the statistical analysis of MEG data. NeuroImage 25, 383–394 (2005).

Das, S. Filters, wrappers and a boosting-based hybrid for feature selection. In Proceedings of the International Conference on Machine Learning Vol. 1, 74–81 (Citeseer, 2001).

Guyon, I. & Elisseeff, A. An introduction to variable and feature selection. J. Mach. Learn. Res. 3, 1157–1182 (2003).

Liu, J., Ranka, S. & Kahveci, T. Classification and feature selection algorithms for multi-class CGH data. Bioinformatics 24, i86–i95 (2008).

Yu, L. & Liu, H. Redundancy based feature selection for microarray data. In Proc. 10th ACM SIGKDD international conference on knowledge discovery and data mining 737–742 (ACM, New York, USA, 2004).

Ding, C. & Peng, H. Minimum redundancy feature selection from microarray gene expression data. J. Bioinform. Comput. Biol. 3, 185–205 (2005).

Hsu, C.-W., Chang, C.-C., Lin, C.-J. et al. A Practical Guide To Support Vector Classification. Technical report, Department of Computer Science, National Taiwan University. http://www.csie.ntu.edu.tw/~cjlin/papers/guide/guide.pdf (2003).

Acknowledgements

E.C. was supported in part by a Ph.D. Scholarship from the Ecole Doctorale Inter-Disciplinaire Sciences-Santé (EDISS), Lyon, France, and funding from the Natural Sciences and Engineering Research Council of Canada (NSERC). We acknowledge support from the Brazilian Ministry of Education (CAPES grant 1719-04-1), the Fondation pour la Recherche Médicale (FRM, France), and the Fulbright Commission to J.L.P.S. K.J. was supported by funding from the Canada Research Chairs program and a Discovery Grant (RGPIN-2015-04854) from the Natural Sciences and Engineering Research Council of Canada (NSERC), a New Investigators Award from the Fonds de Recherche du Québec—Nature et Technologies (2018-NC-206005) and an IVADO-Apogée fundamental research project grant. The authors are grateful for the collaboration of the patients and clinical staff at the Epilepsy Department of the Grenoble University Hospital. Computations were made on the supercomputer Guillimin from the University of Montréal, managed by Calcul Québec and Compute Canada. The operation of this supercomputer is funded by the Canada Foundation for Innovation (CFI), the Ministère de l'Économie, de la Science et de l'Innovation du Québec (MESI) and the Fonds de Recherche du Québec—Nature et Technologies (FRQNT).

Author information

Contributions

E.C.: Conceptualization, software, formal analysis, visualization, writing—original draft; F.D.R.: Writing—original draft and review & editing; A.-L.S.: Conceptualization, Software, Methodology; M.P.-B.: Resources; J.L.P.S.: Methodology; P.K.: Resources; J.-P.L.: Resources; A.G.: Conceptualization, supervision, writing—original draft and review & editing; K.J.: Conceptualization, writing—original draft and review & editing, supervision, project administration, funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Biology thanks Muthuraman Muthuraman and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editors: Christian Beste and Joao Valente. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Combrisson, E., Di Rienzo, F., Saive, AL. et al. Human local field potentials in motor and non-motor brain areas encode upcoming movement direction. Commun Biol 7, 506 (2024). https://doi.org/10.1038/s42003-024-06151-3

DOI: https://doi.org/10.1038/s42003-024-06151-3
