Abstract

Fluorescence correlation spectroscopy (FCS) is a single molecule sensitive tool for the quantitative measurement of biomolecular dynamics and interactions. Improvements in biology, computation, and detection technology enable real-time FCS experiments with multiplexed detection even in vivo. These new imaging modalities of FCS generate data at the rate of hundreds of MB/s requiring efficient data processing tools to extract information. Here, we briefly review FCS’s capabilities and limitations before discussing recent directions that address these limitations with a focus on imaging modalities of FCS, their combinations with super-resolution microscopy, new evaluation strategies, especially machine learning, and applications in vivo.

Introduction

The year 2022 marks the golden anniversary of the first paper1 describing the fluctuation-based spectroscopic technique called fluorescence correlation spectroscopy (FCS). FCS is based on the fluctuation dissipation theorem and provides information about equilibrium and reaction kinetics that could previously only be obtained by the various relaxation techniques pioneered by Manfred Eigen2,3. In relaxation techniques, the return to equilibrium of a reaction system whose temperature, pressure or electric field was perturbed provides information about the system’s reaction kinetics (Fig. 1).

a The kinetics of a process in equilibrium is quantified by two different methods: invasively, by intentionally forcing the system away from equilibrium using an external stressor (perturbation analysis), or non-invasively, by analyzing the spontaneous deviation from equilibrium (fluctuation analysis). b In relaxation analysis, one characterizes the time needed for the system to dissipate the effect of the perturbation, i.e., to return to the original equilibrium state, by fitting a theoretical model (smooth solid line) to compute the characteristic time constant. c In equilibrium kinetics, the characteristic time of fluctuations around the equilibrium (left) is typically examined using temporal autocorrelation analysis (right). To this aim, the autocorrelation function is computed for different lag times and plotted as a function of lag time to yield an autocorrelation curve (red), which is then fitted using a theoretical model (black) to determine the characteristic decay time of the autocorrelation curve.

Fluctuations are spontaneous deviations from equilibrium that contain information about the system’s relaxation to equilibrium, and thus their analysis carries the same information as the perturbation experiments. Therefore, without any external perturbation, FCS exploits the information in fluctuations around the equilibrium state to understand the kinetics of the system. Apart from being non-invasive, the use of fluorescence as the fluctuating quantity to monitor the equilibrium provides better specificity and sensitivity than other fluctuation monitoring techniques, including scattering. Detailed descriptions of the theory4, experimental realization5, and statistical accuracy6 of FCS have been published earlier.

Fluctuations in fluorescence intensity are defined mathematically as deviations from the mean fluorescence intensity. For systems in equilibrium, the mean fluctuation in fluorescence intensity is zero and hence is not an informative physical parameter to analyze and interpret. As a result, a convenient way to analyze fluctuations is to use autocorrelation functions, which measure the self-similarity of the fluctuating signal. Typically, while performing an FCS experiment, the autocorrelation function of the detected fluorescence is first computed for different lag times. Then, the computed function is approximated by a suitable theoretical model7 to determine the underlying physical parameters of the fluctuating signal.
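The first step of this procedure can be illustrated with a minimal sketch. It assumes one common normalization, G(τ) = ⟨δF(t) δF(t+τ)⟩ / ⟨F⟩², and linearly spaced lag times; the function name `autocorrelation` and the synthetic test trace are illustrative assumptions and are not taken from any particular FCS software, which would typically use quasi-logarithmic lag spacing.

```python
import numpy as np

def autocorrelation(trace, max_lag):
    """Estimate G(tau) = <dF(t) dF(t+tau)> / <F>^2 for lags 1..max_lag (in samples)."""
    trace = np.asarray(trace, dtype=float)
    mean = trace.mean()
    delta = trace - mean                      # fluctuations around the mean intensity
    lags = np.arange(1, max_lag + 1)
    g = np.empty(max_lag)
    for i, lag in enumerate(lags):
        # average product of fluctuations separated by `lag` samples
        g[i] = np.mean(delta[:-lag] * delta[lag:]) / mean**2
    return lags, g

# Synthetic trace (assumption): exponentially correlated fluctuations on a constant level
rng = np.random.default_rng(0)
n, tau_c = 100_000, 20.0                      # number of samples, correlation time in samples
a = np.exp(-1.0 / tau_c)
noise = rng.normal(size=n)
x = np.empty(n)
x[0] = noise[0]
for t in range(1, n):
    # first-order autoregressive process with unit variance
    x[t] = a * x[t - 1] + np.sqrt(1 - a**2) * noise[t]
trace = 100.0 + 5.0 * x
lags, g = autocorrelation(trace, max_lag=100)
```

The resulting curve `g` decays with lag time; fitting a model to it, as described above, would yield the characteristic decay time of the synthetic process.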

The initial FCS experiments were plagued by high background, limited computational capabilities and long measurement times. The reduction in background by the use of confocal based detection in FCS led to a breakthrough in the field8, which manifested as a ten-fold increase in number of papers per year compared to the preceding decade. The commercialization of FCS by Evotec and Zeiss made it widely available and sparked an increase in FCS applications especially in biology9.

The widespread use of confocal microscopy in biology and the ability to perform FCS in live cells rendered FCS a suitable technique for investigating various physicochemical phenomena observed in cell10 and developmental biology11,12,13. FCS has been used to investigate diffusion, convection, chemical kinetics14, affinities15 for binding to both immobile and mobile structures, concentrations, stoichiometry, or the microscale organization of cell-membranes16. An overview of the versatility of FCS can be gained from various reviews on the topic17,18,19,20. Apart from standard FCS, scanning FCS (line-scan21 or circular22) refers to the group of techniques where the measurement volume is moved across the sample and is very useful for investigating slow diffusion on membranes. The interested reader is referred here for a detailed description of scanning FCS23.

Measurements described so far enabled the quantification of dynamics at only one confocal diffraction-limited spot at a time. Any attempt at covering larger areas required scanning the sample one spot at a time, which proved to be time consuming, led to asynchronous measurements, and was prone to photobleaching. Raster Image Correlation Spectroscopy (RICS) improved on this situation by using fast scanning and by utilizing the intrinsic time structure of the scanning process to combine spatial and temporal correlations24,25. As RICS can be implemented on any available confocal microscope, it is widely accessible26,27. Typically employed as a single point method, current implementations of RICS enable multiplexed detection leading to the creation of diffusion maps27,28,29. Further advances were made using multiple confocal spots with multiple detectors30 or detectors with multiple elements31,32,33, which have culminated in so-called massively parallel FCS34,35,36.

Alternatively, spatial fluctuations were utilized in Image Correlation Spectroscopy (ICS)37,38 to investigate clustering and aggregation of molecules or molecular complexes, even those with sizes well below the diffraction limit, initially not including dynamics. Later, ICS measurements were also collected in time to evaluate the temporal development of spatial correlations39,40.

The true simultaneous analysis of spatial and temporal41 correlations over whole images was made possible by the introduction of fast and sensitive array detectors (EMCCD42,43,44,45, sCMOS31,46,47, and SPAD arrays48,49). The spatiotemporal analysis of image stacks is referred to as spatiotemporal ICS (STICS) or Imaging FCS (Fig. 2).

Correlation-based techniques are classified based on whether they evaluate spatial, temporal, or spatiotemporal correlations. ICS utilized spatial correlations to investigate clustering and aggregation of molecules or molecular complexes. FCS utilizes temporal correlations to investigate diffusion, convection, chemical kinetics, affinities. RICS utilizes the intrinsic time structure of the scanning process to obtain the correlations. Spatiotemporal analysis of image stacks is performed in STICS and Imaging FCS. Current methodologies making use of either point or array detection have accordingly been combined with various super-resolution techniques. DNA-PAINT involves labeling single molecules with fluorescently tagged DNA transiently binding to target sequences on the sample. Sequential detection and precise localization of single molecules result in super-resolved images. STED involves depleting the fluorescence at the fringes of the excitation beam by stimulated emission leading to detection in sub-diffraction limited volumes in the sample. SIM involves illuminating the sample with structured illumination patterns leading to detection of spatial frequencies in an image that would otherwise be below the diffraction limit. Deconvolution microscopy is a technique that reverses the blurring effects of the point spread function of the microscope on the images. SOFI calculates correlation functions, in which the PSF appears in higher powers, resulting in improved resolution. SRRF determines the convergence point of the radial gradients of light distributions to estimate the localization of the source of the emission.

Apart from multiplexing, i.e., the recording of multiple simultaneous measurements, Imaging FCS allows calculating all spatiotemporal correlations between any pixels or groups of pixels50. Therefore, a single measurement contains the data for the analysis of the dynamics over many length scales by pixel binning or by choosing any points of interest and analyzing their relations. It also avoids sampling bias by recording and quantifying the heterogeneity in an entire cell51. Other useful methods to quantify heterogeneity are pair-correlation functions52,53 and differences in the spatial correlation functions of adjacent pixels (ΔCCF)50.

This capability of analyzing fast molecular dynamics over whole images with single molecule sensitivity at physiologically relevant concentrations made FCS an attractive tool for combination with super-resolution techniques, which provide superior structural details but at much lower time resolution. The simultaneous recording of fast dynamics and super-resolution images was exploited by combining correlation spectroscopies with either experimental or computational super-resolution techniques.

Combination of FCS and super-resolution

FCS has been combined with experimental and computational super-resolution techniques, leading to improved simultaneous spatiotemporal resolution. On the experimental super-resolution front, correlation spectroscopies have been integrated with stimulated emission depletion microscopy (STED)54, DNA points accumulation for imaging in nanoscale topography (DNA-PAINT)55, structured illumination microscopy (SIM)56, and airyscan units57.

In STED, fluorophores are selectively depleted at certain regions to improve the resolution providing access to measure diffusion and concentrations on length scales as small as 30 nm54. This was employed to distinguish free and anomalous diffusion due to transient binding. A combination of FCS with DNA-PAINT called localization-based FCS55 (lbFCS) enables the quantification of absolute number/concentration of molecules. SIM, which applies low laser powers with standard fluorophores using patterned light sources in live cells, was combined with STICS56,58,59 to measure transport velocities. As STICS calculates spatial correlations, the better resolution of SIM resulted in better-resolved velocity parametric maps. The combination of STICS with SIM has also been extended to cross-correlations60. By utilizing two different markers localized on cell-membrane and cytoskeleton, correlations of the velocity parametric maps demonstrated coupling between the flows of the cell-membrane and cytoskeleton.

On the computational side, correlation spectroscopies have been combined with super-resolution optical fluctuation imaging (SOFI)47,61,62,63, super-resolved radial fluctuation (SRRF)47, and deconvolution47, with other potential techniques being mean-shift super-resolution (MSSR)64, sparsity-based super-resolution correlation imaging (SPARCOM)65, or multiple signal classification algorithm (MUSICAL)66.

In super-resolved optical fluctuation imaging (SOFI)61, second, fourth, or even higher order autocorrelation functions are calculated on time-traces of fluorescent molecules which show blinking behavior. As a result of higher order autocorrelation analysis, the point spread function (PSF) is reduced leading to an improvement in resolution. Typically, an nth order correlation leads to a \(\sqrt{n}\) improvement in resolution61. Super-resolution radial fluctuations (SRRF)47 microscopy is a super-resolution technique that performs a SOFI analysis on radiality stacks.
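The \(\sqrt{n}\) scaling can be made plausible with a short calculation. As a simplifying assumption that is not stated in the text above, let the PSF be approximated by a Gaussian of width \(\sigma\). Raising it to the nth power, as happens in an nth order correlation, gives

\[
U(r) = \exp\!\left(-\frac{r^{2}}{2\sigma^{2}}\right)
\quad\Longrightarrow\quad
U^{n}(r) = \exp\!\left(-\frac{n\,r^{2}}{2\sigma^{2}}\right)
= \exp\!\left(-\frac{r^{2}}{2\,(\sigma/\sqrt{n})^{2}}\right),
\]

so the effective width becomes \(\sigma_{n} = \sigma/\sqrt{n}\). Under this approximation, a second order analysis narrows the PSF by a factor of about 1.41 and a fourth order analysis by a factor of 2.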

SOFI47,62 and SRRF47 are computational super-resolution techniques, and thus no hardware add-ons need to be installed on the microscope. They can thus be applied to the exact same data as Imaging FCS. However, these techniques need different acquisition strategies. Hence the initial raw imaging data is collected at the best experimentally accessible spatiotemporal resolution. The data is then rescaled in space or time depending on the needs of the individual technique. For instance, the super-resolution techniques (SOFI, SRRF, and deconvolution) record small pixels but typically illuminate longer to reach a certain SNR. In contrast, Imaging FCS uses larger pixels to be able to record fast for the same reason. Therefore, in combinations of super-resolution and Imaging FCS one typically acquires at the best spatiotemporal resolution and then bins in time or space for super-resolution microscopy or FCS, respectively, to reach a sufficient SNR.
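The binning strategy described above can be sketched as follows. The helper `bin_stack`, the stack dimensions, and the bin factors are hypothetical choices for illustration; the sketch only shows how one raw acquisition can serve both analyses, not how any published pipeline is implemented.

```python
import numpy as np

def bin_stack(stack, time_bin=1, space_bin=1):
    """Sum-bin a (frames, rows, cols) stack in time and/or space.

    Frames or pixels that do not fill a complete bin are dropped.
    """
    t, y, x = stack.shape
    t2, y2, x2 = t // time_bin, y // space_bin, x // space_bin
    trimmed = stack[: t2 * time_bin, : y2 * space_bin, : x2 * space_bin]
    reshaped = trimmed.reshape(t2, time_bin, y2, space_bin, x2, space_bin)
    return reshaped.sum(axis=(1, 3, 5))

# Hypothetical raw acquisition: small pixels, short exposure, low counts per pixel
rng = np.random.default_rng(1)
raw = rng.poisson(2.0, size=(1000, 32, 32))

sr_input = bin_stack(raw, time_bin=10)    # bin in time: fewer frames, small pixels kept
fcs_input = bin_stack(raw, space_bin=2)   # bin in space: full frame rate, larger pixels
```

Both derived stacks contain the same total number of counts as the raw data; only the trade-off between spatial and temporal sampling differs.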

Using the strategy described earlier, both SOFI and SRRF have been combined with Imaging FCS on a sample of LifeAct-labeled actin fibers. This allowed correlating the dynamics measured by Imaging FCS to the better localized actin fibers, providing information on how LifeAct interacts with actin18. Interestingly, the FCS data could also be used to remove artefacts from SRRF images via the dynamics data. FCS has also been combined with deconvolution microscopy47, which is a computational technique aimed at reversing the blurring effects of the point spread function of the microscope on the images.

The advantage of computational super-resolution techniques is that they can use the same data as FCS and thus do not need any specialized equipment. This makes these combinations immediately accessible without any modifications. On the other hand, experimental super-resolution techniques, despite requiring specialized equipment, typically reach better spatial resolution. But not all modalities can be readily combined with FCS. Although FCS has single-molecule sensitivity, it measures at concentrations much higher than what would be acceptable for single-molecule localization microscopy techniques. While this can be overcome by using photoswitchable dyes and recording in two colors, one at high, one at very low concentration, as has been demonstrated in the combinations of FCS with single particle tracking (SPT)67, it is difficult to do that in a single color.

Advances in data-handling and data processing in FCS

Developments in optical instrumentation over the last three decades, described in the sections above and leading to improvements in FCS, went together with improvements in the field of computing. Computing power has roughly doubled every two years since the seventies68 as predicted by Moore’s law. The use of a data-parallel approach in FPGAs69 and later GPUs in multiplexed FCS has led to a significant reduction in the time taken to calculate and fit the autocorrelation functions35,47,70, since the same function can be evaluated in every processing element of the GPU. In a further boost to faster evaluation, direct camera readout strategies now enable numerical analysis while the data is being recorded71. As a result, we are at a stage where an experimenter potentially generates data at the rate of hundreds of MB/s. Number-crunching operations on these large datasets are completed within minutes, and the experimenter is faced with the complex task of choosing a suitable theoretical model to fit the autocorrelation data. Approaches to fit the data in FCS include non-linear least squares and maximum-entropy-based fitting routines (MEMFCS)72. The choice of a suitable fitting model is typically framed as a classification problem.

One of the ways of solving this classification problem in FCS is using Bayesian approaches73,74,75,76,77. The utility of other machine learning classifiers, including multilayer perceptrons, random forests, and support vector machines, in FCS is under investigation78. In the next section, we describe how deep learning, which is widely used in fluorescence microscopic image processing79,80,81, is utilized for various applications in FCS.

Use of convolutional neural networks in FCS

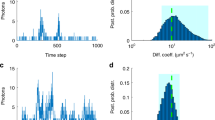

Although publications are sparse, the most common deep learning network architecture used in FCS is the Convolutional Neural Network (CNN)78,82,83,84,85,86,87,88,89. CNNs are powerful analysis tools applied to image and time-series analysis (Fig. 3). The major uses of CNNs in FCS are to decide the fitting model and to estimate the kinetic and mobility parameters. Hence CNNs for FCS are designed to solve classification or regression problems in a supervised manner. Unsupervised learning approaches to cluster data to understand its inherent structure in FCS remain to be explored.

a The input in Imaging FCS is an image stack. b In conventional non-linear least squares fitting approach, the autocorrelations are calculated and then fitted to a theoretical model. The spatially resolved physicochemical parameters are the output from this approach. c In the machine learning approach, there are two possibilities to estimate the physicochemical parameters. Autocorrelations are calculated and used as a feature selection method. They are used to train a convolutional neural network. Otherwise, the raw intensity is used to train a convolutional neural network. After successful training, when any image stack is given as an input to the network, the parametric maps are obtained as output. Another network architecture relevant for Imaging FCS data analysis is the autoencoder. Autoencoders are used for data-compression and denoising. One of the greatest challenges of using machine learning for FCS is the lack of training data. One solution to generate training data is to use a generative adversarial network. These are two neural networks working together to generate data similar to the one given as an input. In all three types of architectures, the input neurons are shown in red, the output neurons are shown in blue, the convolution neurons are shown in yellow, and the hidden neurons are shown in gray.

Briefly, CNNs consist of multiple layers, each of which performs a convolutional operation with a defined kernel and variable weights. Data sets are propagated through the CNN and the output is compared to a desired outcome or ground truth. The weights are then adjusted in defined ways to reduce the difference between the CNN’s output and the ground truth. This operation is repeated with a large training set so that the CNN gradually learns the properties of the data set. There are a number of different CNN architectures but possible starting points to construct CNNs for FCS are ResNet90 and Inception networks91 which can be used to construct deep networks and can evaluate multiple scales in parallel, respectively, to reach a good approximation for FCS. ResNet, for instance, has been used in the construction of U-Net, which is widely used in super-resolution and image segmentation.

The universal approximation theorem states that sufficiently large neural networks can approximate continuous mathematical functions to any desired level of accuracy92. Hence deep CNNs are, in principle, suitable to learn the autocorrelation function from image stacks. The number of layers in a CNN determines its depth. An increase in the number of layers leads to an increased demand for computational resources (memory and time). Hence one must maintain a delicate balance between efficiency and computational demands while designing CNNs suitable for FCS.

While training the CNN, one must exercise caution not to overfit the data. Overfitted networks perform well only for the training data and not for the test data. Overfitting typically occurs when the size of the training dataset is small. Training on very small datasets leads to over-parameterization of the input data and the network tends to memorize the input and the behavior. Hence the training data must be large enough encompassing a large variety of experimental scenarios to sufficiently train the network. As such the first step in any supervised machine learning paradigm is to obtain labeled ground truth data for training.

However, obtaining sufficient data with the corresponding ground truth data in FCS for training is difficult for at least two reasons. First, it is very time consuming to acquire a large set of FCS data that covers the full range of applicable parameters, including diffusion coefficients, concentrations, and signal-to-noise ratios, among others. Second, while accurate estimation of diffusion coefficients of standard samples was made possible by 2f-FCS93, standard samples do not cover the entire range of diffusion coefficients accessible to FCS. Even if measured, the diffusion coefficients will be estimated from a non-linear least squares fit of a model to the autocorrelation function, which has an inherent error associated with the procedure. Although not impossible94, creating experimental training sets would suffer from incomplete parameter coverage and the inherent errors of the measurement system, would require time-consuming experiments that need to be repeated for different instruments and conditions, and would be model-dependent because the data must be fitted to obtain the ground truth.

Instead, one can resort to simulations, with parameters chosen that are matched as closely as possible to the experimental parameters95. In this way, the creation of the training set becomes an automated task. Since any experimental or theoretical illumination and detection geometry and any dynamic processes can be simulated, the CNN becomes (fit) model independent. This way evaluations can be achieved even in cases where no closed-form solution of the correlation function exists, and non-linear least-squares fitting is either not possible or can only be achieved with numerical models that are very time-consuming to evaluate. One should note, though, that a suitable noise model must be incorporated into the simulations to represent experimental conditions. The use of simulations for training has been verified in FCS83,84,85,86 and SPT79. Some software currently available to perform simulations (reviewed here96) are SimFCS24, PAM97, and Imaging FCS98,99. Since CNNs are data-hungry, one of the strategies is to simulate data on the fly without saving it to reduce memory requirements.
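On-the-fly generation of labeled training data can be sketched with a generator that yields one simulated image stack at a time, so nothing needs to be stored. Everything in this sketch is an illustrative assumption rather than the method of any cited software: the function name `simulate_stacks`, the parameter values, the Gaussian PSF evaluated at pixel centres, periodic boundaries, and a noise model reduced to Poisson shot noise plus a constant background.

```python
import numpy as np

def simulate_stacks(n_stacks, n_frames=200, size=16, n_particles=50,
                    d_range=(0.1, 10.0), frame_time=1e-3, pixel_size=0.24,
                    psf_sigma=0.2, brightness=20.0, background=0.5, seed=0):
    """Yield (image_stack, D) pairs; D (um^2/s) is drawn log-uniformly from d_range."""
    rng = np.random.default_rng(seed)
    length = size * pixel_size                       # side length of simulated area (um)
    centers = (np.arange(size) + 0.5) * pixel_size   # pixel centre coordinates (um)
    for _ in range(n_stacks):
        d = np.exp(rng.uniform(np.log(d_range[0]), np.log(d_range[1])))
        pos = rng.uniform(0, length, size=(n_particles, 2))
        step_sd = np.sqrt(2 * d * frame_time)        # per-axis Brownian step per frame
        stack = np.empty((n_frames, size, size), dtype=np.uint16)
        for f in range(n_frames):
            # free 2D diffusion with periodic boundary conditions
            pos = (pos + rng.normal(0, step_sd, pos.shape)) % length
            # separable Gaussian PSF: one row of weights per particle and axis
            gx = np.exp(-(centers[None, :] - pos[:, 0:1]) ** 2 / (2 * psf_sigma**2))
            gy = np.exp(-(centers[None, :] - pos[:, 1:2]) ** 2 / (2 * psf_sigma**2))
            expected = brightness * (gy.T @ gx) + background
            stack[f] = rng.poisson(expected)         # shot noise only (simplified)
        yield stack, d
```

A training loop could consume the generator directly, for example `for stack, d in simulate_stacks(1000): ...`, using `d` as the ground truth label. A realistic application would replace the simplified noise model with one matched to the camera in use.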

The simulations for FCS100,101 are modeled on the fundamental physics of diffusion which is a stationary process. The presence of deviations from stationarity (for instance due to photobleaching or inhomogeneities) in the experimental data will lead to errors of the estimates of physicochemical parameters from the CNNs. Two different strategies can be followed here. Either the simulated training sets include these non-stationary processes, or the experimental data must be corrected (for instance by bleach correction) before being evaluated by CNN.

One of the factors determining the precision of estimates recovered from NLS fitting of FCS data is the length of the time-series of the image stack. Empirically, it was shown that to obtain at most a 20% coefficient of variation101 (standard deviation/mean), the measurement time needs to be at least a hundred times the diffusion time of the molecule being investigated. However, we can train CNNs with many such shorter image-stacks which are typically not sufficient for a good NLS fit. By training the CNN on a large set of these small data sets over a wide parameter range we can obtain a CNN that fits data at significantly reduced measurement time, which an NLS fit could not properly evaluate.

CNNs have been trained on FCS data in two different ways so far83,84,85,86,87 (Fig. 3). Either the CNN is trained on raw imaging data and thus learns the dynamics directly from the intensity traces, or the CNN is trained on pre-computed features, i.e., in our case the correlation functions of interest. The advantage of using computed features is that this approach preselects features for training, reducing the dimensionality of the search space for the optimization. For instance, while training for FCS, one could train the neural networks on the raw images or on the pixelwise autocorrelation functions. Assuming 50,000 frames of 128 × 128 images stored in 16-bit format are used, the size of one training sample is ~1.6 GB. Instead, if one uses the autocorrelation function, which generally has <1000 points, as a pre-computed feature, the size of one training sample is ~65 MB, corresponding to a reduction of training data size by a factor of 25.
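The size comparison can be checked with a few lines of arithmetic. The sketch assumes 1000 lag points per autocorrelation function stored as 32-bit floating point values; both are assumptions chosen here because they reproduce the quoted ~65 MB, and they are not stated explicitly in the text.

```python
frames, height, width = 50_000, 128, 128

# Raw image stack: 16-bit pixels occupy 2 bytes each
raw_bytes = frames * height * width * 2

# Pixelwise autocorrelation functions (assumptions: 1000 lags, 4-byte floats)
acf_points, bytes_per_value = 1_000, 4
acf_bytes = height * width * acf_points * bytes_per_value

print(raw_bytes / 1e9, "GB raw")          # prints 1.6384 GB raw
print(acf_bytes / 1e6, "MB correlated")   # prints 65.536 MB correlated
print(raw_bytes / acf_bytes, "x smaller") # prints 25.0 x smaller
```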

Both strategies have their advantages. When correlation functions are used as training sets, the much smaller size of the training data leads to smaller, more compact CNNs which do not impose huge demands on the computational infrastructure. The use of the raw images, on the other hand, has the advantage that it is not limited by the temporal averaging performed by the correlation functions. Another advantage of using the raw imaging data is that it avoids sampling artefacts due to the multi-tau algorithm used to calculate the autocorrelation function7,100,102.

CNNs have the potential to address several limitations of FCS. First, the measurement time required is dictated by the fact that the autocorrelation function in FCS is a biased estimator103 and it converges only at long measurement times101,104. Once properly trained, CNNs learn the bias and produce more accurate and precise parameter estimates even when using less data83,84,85,86,87.

Second, FCS resolution is limited in distinguishing multiple particles with different diffusion times. Using conventional non-linear least squares fitting on FCS data where the SNR is not a limiting factor and when there is an equal distribution of fast and slow particles, the ratio of the faster particle’s diffusion coefficient to that of the slower particle’s must be at least 1.6 in order to distinguish them as two different particles105. As the proportion or the SNR varies, the minimum ratio of diffusion coefficients necessary to be distinguished as two different diffusing particles increases and can easily exceed a factor 10 in diffusion coefficients (a factor 1000 in mass). CNN-based data analysis88 enabled reliable estimation of diffusion coefficients of fast and slow particles even in situations when the ratio of the faster particle’s diffusion coefficient to that of slower particle was <1.6 in simulations.
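The correspondence between the quoted factors in diffusion coefficient and in mass follows from a standard scaling argument. As an assumption not spelled out in the text, treat the particles as compact spheres of equal density that obey the Stokes-Einstein relation, with hydrodynamic radius \(R\), mass \(M\), solvent viscosity \(\eta\), and thermal energy \(k_{B}T\):

\[
D = \frac{k_{B}T}{6\pi\eta R}, \qquad R \propto M^{1/3}
\quad\Longrightarrow\quad
\frac{D_{\mathrm{fast}}}{D_{\mathrm{slow}}} = \left(\frac{M_{\mathrm{slow}}}{M_{\mathrm{fast}}}\right)^{1/3}.
\]

A factor of 10 in diffusion coefficient therefore corresponds to a factor of \(10^{3} = 1000\) in mass, and the minimum resolvable ratio of 1.6 corresponds to a mass ratio of \(1.6^{3} \approx 4.1\). Non-spherical or unfolded molecules would deviate from this scaling.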

Third, CNN-based analysis also provides considerable improvement in the time taken to estimate the parameters. Sufficiently trained CNNs are faster than the iterative non-linear least-squares fits, which require multiple rounds of calculation to reach the optimal value. This becomes even more evident in cases where no analytical solution is available and numerical models need to be employed95. Fourth, the knowledge gained by any trained network on free diffusion also has the potential to be adapted for novel but similar tasks (referred to as transfer learning). With transfer learning, a network is faster to train for a similar task than a network trained ab initio for the same task. For instance, by transfer learning, networks trained for obtaining mobility estimates could be utilized for spatial investigations such as the presence of diffusion inhomogeneities. Fifth, the effects of systemic behavior affecting the autocorrelation functions (large aggregates passing through the detection area, sample movement, or photobleaching) can be mitigated using deep learning. In principle, networks can be trained to correct for these artefacts and thus lead to more robust and simpler FCS systems.

CNNs have the potential to be used in the classification of the type of diffusion. In biological samples diffusion is often not free, as biomolecules move in a complex matrix and have many opportunities to interact with their surroundings specifically or non-specifically. The type of diffusion is often determined by measuring the diffusion coefficient of a sample in dependence on the area observed, a procedure commonly referred to as diffusion law analysis106. Briefly, if one changes the size of the observation area, e.g., by binning adjacent pixels in Imaging FCS, or by changing the laser spot size in a confocal or STED microscope54,107, the average time taken by molecules undergoing free diffusion to traverse the areas of different size increases linearly with observation area. Any deviations from linearity imply different diffusion modes, which can subsequently be identified from the type and strength of the deviation106. Without calculating and fitting the autocorrelation at different length scales as performed in FCS diffusion law analysis, the spatiotemporal information could be directly extracted from a raw image stack. As a result, CNNs can be trained on raw image stacks of simulations of various diffusion modes and hence can predict the diffusive modes of the particle.
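The conventional diffusion law analysis that such a CNN would bypass can be sketched as a straight-line fit of transit time against observation area. The sketch assumes the commonly used form τ = τ0 + A/(4D), with the observation area A taken as the squared beam waist. The interpretation of the intercept (close to zero for free diffusion, positive for transient trapping in domains, negative for hop diffusion in a meshwork) follows the usual convention in the diffusion law literature and is added here as an assumption. The function name and the numbers are hypothetical.

```python
import numpy as np

def diffusion_law_fit(areas, transit_times):
    """Fit tau = tau0 + slope * area by linear least squares; return (tau0, slope)."""
    areas = np.asarray(areas, dtype=float)
    transit_times = np.asarray(transit_times, dtype=float)
    slope, tau0 = np.polyfit(areas, transit_times, 1)   # highest power first
    return tau0, slope

# Hypothetical data: free diffusion with D = 1 um^2/s, so tau = A / (4 D)
areas = np.array([0.1, 0.2, 0.4, 0.8])    # observation areas in um^2
taus = areas / (4 * 1.0)                  # transit times in s
tau0, slope = diffusion_law_fit(areas, taus)
d_est = 1.0 / (4 * slope)                 # diffusion coefficient from the slope
```

For this noise-free example the intercept `tau0` is zero within numerical precision and `d_est` recovers the input diffusion coefficient; experimental data would additionally require weighting by the uncertainty of each transit time.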

Apart from use of CNNs to classify diffusion, CNNs also have the potential to be used in number and brightness (N&B) analysis108,109. N&B analysis is an offshoot of FCS providing only the aggregation and concentration profiles of the dynamic system under investigation. Typically, in N&B analysis, the observed intensity of the pixel is deconstructed mathematically to reveal the contributions of the concentration of the fluorophore and of the aggregation state of the fluorophore. Current mathematical methods are not efficient in deconstructing the aggregation states when multiple aggregation states of the same molecule are present at the same time. CNNs modeled as a regression problem have the potential to improve the deconvolution of the aggregation states in N&B analysis.
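The mathematical deconstruction used in conventional N&B analysis can be sketched with the first two moments of each pixel's intensity trace. The sketch uses the standard moment definitions of apparent number, N = ⟨k⟩²/σ², and apparent brightness, B = σ²/⟨k⟩, and assumes an ideal photon-counting detector without offset or gain corrections. The function name and the synthetic data are illustrative.

```python
import numpy as np

def number_and_brightness(stack):
    """Per-pixel apparent number N = <k>^2/var and apparent brightness B = var/<k>."""
    stack = np.asarray(stack, dtype=float)
    mean = stack.mean(axis=0)             # temporal mean per pixel
    var = stack.var(axis=0)               # temporal variance per pixel
    with np.errstate(divide="ignore", invalid="ignore"):
        brightness = np.where(mean > 0, var / mean, np.nan)
        number = np.where(var > 0, mean**2 / var, np.nan)
    return number, brightness

# Synthetic stack (assumption): pure Poisson shot noise, i.e. no number fluctuations
rng = np.random.default_rng(2)
immobile = rng.poisson(10.0, size=(5000, 8, 8))
n_map, b_map = number_and_brightness(immobile)
```

For pure shot noise the apparent brightness is close to 1, and any mobile fluorescent species raises it above 1. Because a pixel yields only these two moments, mixtures of several aggregation states cannot be separated uniquely, which is the limitation noted above.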

Apart from CNNs, other deep learning architectures which have potential for FCS are autoencoders110, generative adversarial networks111, and mixture density networks112. Mixture density networks hold great promise in estimating the parameters of combinations of probability distributions similar to the MEMFCS72 approach.

As the name suggests autoencoders learn the important characteristics of the input data and yield a lean representation of the same data. Typically used for dimensionality reduction, autoencoders serve as a denoiser for the raw data. Hence FCS data have the potential to be denoised using an autoencoder before being used for data analysis.

Generative adversarial networks are another class of neural networks which consist of two networks working in tandem. These are typically used to create datasets similar to the dataset given as an input. The first ‘generative’ network creates the dataset while the next ‘adversarial’ network discriminates whether the created dataset is similar or dissimilar to the original dataset. This is very useful for generating data for training with similar noise structures to the experimental data used as an input. This approach overcomes the need to have an analytical noise model to create simulation data for training.

Power and current limitations in live tissues

A field that stands to gain considerably from FCS advances involving super-resolution microscopy and machine learning is the measurement of dynamics in live tissues and organisms. Measurements of cell autonomous processes can be conducted in cell cultures, at least qualitatively. But even here, there are indications that quantitative results differ between cell culture and in vivo measurements. Consequently, there have been a number of investigations in various model organisms, albeit far fewer than FCS applications in cells or in vitro. To date, FCS has mainly been used to study transport45,46,75,98,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130, binding131,132,133,134, and organization135 in live organisms including fish (reviewed here136,137,138), worms, and flies (Table 1).

The major advantage of FCS in live tissues is the power to quantify the dynamics of various biological processes under physiological environmental conditions and in the presence of all relevant interaction partners, which can significantly influence biomolecular interactions. However, the use of FCS in live tissues has been hampered by various challenges. First, tissue movement cannot be avoided under all circumstances (for example, tissue development and growth, heartbeat, or blood flow); it distorts correlation functions and makes data evaluation unreliable. Second, measurements in live tissues often have higher background and therefore a lower SNR than those in cells or purified systems, which limits measurement accuracy. Third, spherical aberrations due to refractive index heterogeneity in the tissue further reduce resolution and SNR.

New developments are addressing at least some, if not all, of these issues. First, direct camera-readout71 and online analysis of FCS data enable the identification of movement artefacts, allowing user intervention and avoiding unnecessary data loss. Alternatively, when analyzing data from live tissues that contain anomalies including drifts, spikes, or unwanted fluctuations103, one can apply a recently developed theoretical framework utilizing temporal segmentation. It remains to be tested whether machine learning can aid in the analysis of data with distortions. Second, it has been shown that CNNs can be constructed that require less data to obtain the same parameter accuracy and better precision compared to standard non-linear least-squares curve fitting. Finally, adaptive optics shows great promise for improving data acquisition in complex environments with heterogeneous refractive index distributions139,140. Coupled with super-resolution and FCS, this could improve the accuracy and precision with which spatiotemporal events are measured, even in complex environments.
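The general idea of temporal segmentation can be illustrated with a minimal sketch. It is not the published framework of ref. 103; it assumes a simple heuristic in which the intensity trace is split into equal segments, the normalized autocorrelation is computed per segment, segments whose mean intensity deviates strongly from the median segment mean are discarded, and the remaining curves are averaged. The function names, the threshold `max_rel_dev`, and the synthetic trace with one artificial spike are hypothetical, and the sketch does not correct the bias that arises when correlations are estimated from short segments.

```python
import random
import statistics


def autocorrelation(trace, max_lag):
    """Normalized ACF G(tau) = <dF(t) dF(t+tau)> / <F>^2 for lags 1..max_lag."""
    mean = sum(trace) / len(trace)
    deltas = [x - mean for x in trace]
    acf = []
    for lag in range(1, max_lag + 1):
        n = len(trace) - lag
        s = sum(deltas[i] * deltas[i + lag] for i in range(n))
        acf.append(s / n / mean ** 2)
    return acf


def segmented_acf(trace, n_segments, max_lag, max_rel_dev=0.2):
    """Average per-segment ACFs, skipping segments with anomalous mean intensity."""
    seg_len = len(trace) // n_segments
    segments = [trace[i * seg_len:(i + 1) * seg_len] for i in range(n_segments)]
    means = [sum(s) / len(s) for s in segments]
    ref = statistics.median(means)
    kept = [s for s, m in zip(segments, means) if abs(m - ref) <= max_rel_dev * ref]
    if not kept:
        raise ValueError("all segments were rejected; relax max_rel_dev")
    curves = [autocorrelation(s, max_lag) for s in kept]
    avg = [sum(c[k] for c in curves) / len(curves) for k in range(max_lag)]
    return avg, len(kept)


def simulate_trace(n_points, spike_start, spike_len, rng=random):
    """Correlated fluctuations around a mean of 100 with one intensity spike."""
    trace, delta = [], 0.0
    for i in range(n_points):
        delta = 0.9 * delta + rng.gauss(0.0, 2.0)  # AR(1) fluctuations
        spike = 200.0 if spike_start <= i < spike_start + spike_len else 0.0
        trace.append(100.0 + delta + spike)
    return trace


if __name__ == "__main__":
    random.seed(0)
    trace = simulate_trace(4000, spike_start=1500, spike_len=500)
    avg, n_kept = segmented_acf(trace, n_segments=8, max_lag=20)
    full = autocorrelation(trace, 20)
    print("segments kept:", n_kept)
    print("G at largest lag, segmented vs full trace:", avg[-1], full[-1])
```

In this toy case the correlation function of the full trace is dominated by the spike and does not decay, whereas the segment-averaged curve reflects the underlying fluctuations. The rejection criterion is deliberately crude; drifts within a segment, for example, would need a different test.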

Outlook

FCS has been widely used to study diffusion and binding in biological systems. However, in its classical confocal modality, FCS has been limited in multiple ways. It provided high intrinsic resolution of molecular dynamics but was diffraction-limited in its analysis. It provided only a single spot or at best a few spots for measurements and did not provide a contiguous image of a sample, making integration with imaging modalities difficult. It required long measurement times to calculate correlations that could be evaluated with sufficient accuracy and precision. Data analysis was also complicated due to a variety of factors including the inhomogeneity of the errors of the correlation functions, the fact that correlation function models could be calculated only for the simplest cases when applying appropriate approximations, the choice of the model for the fitting function and finally the large number of calculations required for multiplexed approaches.

Many of these challenges have been met or are being addressed. STED-FCS achieved sub-diffraction resolution for FCS. Later, the use of fast and sensitive array detectors in FCS enabled multiplexing. Online evaluation of sub-micrometer spatially resolved structural data and sub-millisecond temporally resolved dynamic data is already possible in a variety of biological samples, and parallel processing of data on GPUs with multiple computing elements has dramatically reduced the time taken for data evaluation. The use of machine learning in FCS promises user-independent, model-independent data analysis with increased time resolution. The combination of FCS with super-resolution, deep learning, and more powerful sensors141 permits the creation of new imaging modalities that provide spatial and temporal information on molecular processes with unprecedented resolution in real time and at a repetition rate of seconds. In combination with computational super-resolution techniques, these modalities do not require specialized equipment. The integration of FCS and super-resolution microscopy promises to provide unprecedented spatiotemporal resolution in biological samples, even in vivo. The implementation of machine learning algorithms in combination with GPU processing will allow real-time data analysis, render data evaluation model-independent, and simplify experiments, making these powerful techniques available to non-expert users.

Box 1 – Important developments and future challenges of FCS

Important developments

- FCS in its various forms and its hyphenated techniques with super-resolution microscopy allows long-term observations of various biological processes across a variety of biological scales including cells, tissues, organoids, and whole organisms providing complementary information on dynamics and structure within a single measurement.

- The introduction of online evaluation and GPU-based parallel computing in FCS has led to a reduction in the time taken to analyze the data. The use of machine learning in FCS promises model-independent data analysis. This is especially important in cases where currently no analytic fit function is available.

Future challenges

- Today, deep learning has the capacity to simplify and accelerate data evaluation for the user, making FCS more applicable and easier to use for the wider biological community. Which network architectures in deep learning are useful for FCS analysis is an open question that will need to be addressed in the future. Extensive benchmarking and stress testing need to be performed to understand the advantages of deep learning over conventional regression approaches including NLS fitting. The use of explainable AI or interpretable AI in FCS will lead to a better comprehension of the decision making of AI while estimating the parameters in FCS.

- The combination of FCS with super-resolution has combined dynamics with structure. The next frontier to tackle is to combine functional assays with FCS and super-resolution. From a single measurement, one will then be able to obtain a spatially resolved map of structure, function, and dynamics.

- Bringing these advances into live tissues and organisms will be a major challenge. It will allow obtaining dynamics, structure, and interaction maps in physiologically relevant environments within a single measurement in real time. The simultaneous measurement of these parameters will provide new information and insights not available to date.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

References

Magde, D., Elson, E. & Webb, W. W. Thermodynamic fluctuations in a reacting system-measurement by fluorescence correlation spectroscopy. Phys. Rev. Lett. 29, 705–708 (1972).

Rigler, R. & Widengren, J. Fluorescence-based monitoring of electronic state and ion exchange kinetics with FCS and related techniques: from T-jump measurements to fluorescence fluctuations. Eur. Biophys. J. 47, 479–492 (2018).

Schwille, P. There and back again: from the origin of life to single molecules. Eur. Biophys. J. 47, 493–498 (2018).

Elson, E. L. & Magde, D. Fluorescence correlation spectroscopy. I. Conceptual basis and theory. Biopolymers 13, 1–27 (1974).

Magde, D., Elson, E. L. & Webb, W. W. Fluorescence correlation spectroscopy. II. An experimental realization. Biopolymers 13, 29–61 (1974).

Koppel, D. E. Statistical accuracy in fluorescence correlation spectroscopy. Phys. Rev. A 10, 1938–1945 (1974).

Wohland, T., Maiti, S. & Macháň, R. An Introduction to Fluorescence Correlation Spectroscopy. (IOP Publishing, 2020). This is a comprehensive resource covering various aspects of FCS including mathematical foundations, instrumentation and data analysis.

Rigler, R., Mets, Ü., Widengren, J. & Kask, P. Fluorescence correlation spectroscopy with high count rate and low background: analysis of translational diffusion. Eur. Biophys. J. 22, 169–175 (1993).

Jankowski, T. & Janka, R. In Fluorescence Correlation Spectroscopy: Theory and Applications (eds Rigler, R. & Elson, E. S.) pp 331–345 (Springer Berlin Heidelberg, 2001).

Macháň, R. & Wohland, T. Recent applications of fluorescence correlation spectroscopy in live systems. FEBS Lett. 588, 3571–3584 (2014).

Wang, X., Wohland, T. & Korzh, V. Developing in vivo biophysics by fishing for single molecules. Dev. Biol. 347, 1–8 (2010).

Veerapathiran, S. & Wohland, T. Fluorescence techniques in developmental biology. J. Biosci. 43, 541–553 (2018).

Ng, X. W., Sampath, K. & Wohland, T. Fluorescence correlation and cross-correlation spectroscopy in Zebrafish. Methods Mol. Biol. 1863, 67–105 (2018).

Elson, E. L. Fluorescence correlation spectroscopy measures molecular transport in cells. Traffic 2, 789–796 (2001).

Hwang, L. C. & Wohland, T. Recent advances in fluorescence cross-correlation spectroscopy. Cell Biochem. Biophys. 49, 1–13 (2007).

Chiantia, S., Ries, J. & Schwille, P. Fluorescence correlation spectroscopy in membrane structure elucidation. Biochim. Biophys. Acta 1788, 225–233 (2009).

Krichevsky, O. & Bonnet, G. Fluorescence correlation spectroscopy: the technique and its applications. Rep. Prog. Phys. 65, 251 (2002).

Digman, M. A. & Gratton, E. Lessons in fluctuation correlation spectroscopy. Annu. Rev. Phys. Chem. 62, 645–668 (2011).

Elson, E. L. Fluorescence correlation spectroscopy: past, present, future. Biophys. J. 101, 2855–2870 (2011).

Gupta, A., Sankaran, J. & Wohland, T. Fluorescence correlation spectroscopy: the technique and its applications in soft matter. Phys. Sci. Rev. 4, 20170104 (2019).

Ries, J., Chiantia, S. & Schwille, P. Accurate determination of membrane dynamics with line-scan FCS. Biophys. J. 96, 1999–2008 (2009).

Petrášek, Z., Derenko, S. & Schwille, P. Circular scanning fluorescence correlation spectroscopy on membranes. Opt. Express 19, 25006–25021 (2011).

Petrášek, Z., Ries, J. & Schwille, P. Scanning FCS for the characterization of protein dynamics in live cells. Methods Enzymol. 472, 317–343 (2010).

Rossow, M. J., Sasaki, J. M., Digman, M. A. & Gratton, E. Raster image correlation spectroscopy in live cells. Nat. Protoc. 5, 1761–1774 (2010).

Longfils, M. et al. Raster image correlation spectroscopy performance evaluation. Biophys. J. 117, 1900–1914 (2019).

Brown, C. M. et al. Raster image correlation spectroscopy (RICS) for measuring fast protein dynamics and concentrations with a commercial laser scanning confocal microscope. J. Microsc. 229, 78–91 (2008).

Hendrix, J., Dekens, T., Schrimpf, W. & Lamb, D. C. Arbitrary-region raster image correlation spectroscopy. Biophys. J. 111, 1785–1796 (2016).

Ranjit, S., Lanzano, L. & Gratton, E. Mapping diffusion in a living cell via the phasor approach. Biophys. J. 107, 2775–2785 (2014).

Scipioni, L. et al. Local raster image correlation spectroscopy generates high-resolution intracellular diffusion maps. Commun. Biol. 1, 10 (2018).

Yamamoto, J., Mikuni, S. & Kinjo, M. Multipoint fluorescence correlation spectroscopy using spatial light modulator. Biomed. Opt. Express 9, 5881–5890 (2018).

Gösch, M. et al. Parallel single molecule detection with a fully integrated single-photon 2x2 CMOS detector array. J. Biomed. Opt. 9, 913–921 (2004).

Sisan, D. R., Arevalo, R., Graves, C., McAllister, R. & Urbach, J. S. Spatially resolved fluorescence correlation spectroscopy using a spinning disk confocal microscope. Biophys. J. 91, 4241–4252 (2006).

Needleman, D. J., Xu, Y. & Mitchison, T. J. Pin-hole array correlation imaging: highly parallel fluorescence correlation spectroscopy. Biophys. J. 96, 5050–5059 (2009).

Vitali, M. et al. Avalanche camera for fluorescence lifetime imaging microscopy and correlation spectroscopy. IEEE J. Sel. Top. Quant. Electron. 20, 344–353 (2014).

Krmpot, A. J. et al. Functional fluorescence microscopy imaging: quantitative scanning-free confocal fluorescence microscopy for the characterization of fast dynamic processes in live cells. Anal. Chem. 91, 11129–11137 (2019). The use of GPU for data analysis in this study enabled rapid analysis of FCS data.

Oasa, S. et al. Dynamic cellular cartography: mapping the local determinants of oligodendrocyte transcription factor 2 (OLIG2) function in live cells using massively parallel fluorescence correlation spectroscopy integrated with fluorescence lifetime imaging microscopy (mpFCS/FLIM). Anal. Chem. 93, 12011–12021 (2021).

Petersen, N. O., Höddelius, P. L., Wiseman, P. W., Seger, O. & Magnusson, K. E. Quantitation of membrane receptor distributions by image correlation spectroscopy: concept and application. Biophys. J. 65, 1135–1146 (1993).

Ciccotosto, G. D., Kozer, N., Chow, T. T., Chon, J. W. & Clayton, A. H. Aggregation distributions on cells determined by photobleaching image correlation spectroscopy. Biophys. J. 104, 1056–1064 (2013).

Hebert, B., Costantino, S. & Wiseman, P. W. Spatiotemporal image correlation spectroscopy (STICS) theory, verification, and application to protein velocity mapping in living CHO cells. Biophys. J. 88, 3601–3614 (2005). Spatial and temporal correlations of image stacks enabled the quantification of diffusion coefficients and velocities in living cells.

Wiseman, P. W. Image correlation spectroscopy: principles and applications. Cold Spring Harb. Protoc. 2015, 336–348 (2015).

Bag, N. & Wohland, T. Imaging fluorescence fluctuation spectroscopy: new tools for quantitative bioimaging. Annu. Rev. Phys. Chem. 65, 225–248 (2014).

Kannan, B. et al. Electron multiplying charge-coupled device camera based fluorescence correlation spectroscopy. Anal. Chem. 78, 3444–3451 (2006).

Burkhardt, M. & Schwille, P. Electron multiplying CCD based detection for spatially resolved fluorescence correlation spectroscopy. Opt. Express 14, 5013–5020 (2006).

Kannan, B. et al. Spatially resolved total internal reflection fluorescence correlation microscopy using an electron multiplying charge-coupled device camera. Anal. Chem. 79, 4463–4470 (2007).

Wohland, T., Shi, X., Sankaran, J. & Stelzer, E. H. K. Single Plane Illumination Fluorescence Correlation Spectroscopy (SPIM-FCS) probes inhomogeneous three-dimensional environments. Opt. Express 18, 10627–10641 (2010). This is the introduction of light sheet-based FCS to investigate diffusion in 3D in various samples including live zebrafish embryos.

Struntz, P. & Weiss, M. Multiplexed measurement of protein diffusion in Caenorhabditis elegans embryos with SPIM-FCS. J. Phys. D Appl. Phys. 49, 044002 (2016).

Sankaran, J. et al. Simultaneous spatiotemporal super-resolution and multi-parametric fluorescence microscopy. Nat. Commun. 12, 1748 (2021). FCS was combined with SRRF to investigate Lifeact labeled actin fibers and their link to EGFR.

Buchholz, J. et al. Widefield high frame rate single-photon SPAD imagers for SPIM-FCS. Biophys. J. 114, 2455–2464 (2018).

Slenders, E. et al. Confocal-based fluorescence fluctuation spectroscopy with a SPAD array detector. Light. Sci. Appl. 10, 31 (2021).

Sankaran, J., Manna, M., Guo, L., Kraut, R. & Wohland, T. Diffusion, transport, and cell membrane organization investigated by imaging fluorescence cross-correlation spectroscopy. Biophys. J. 97, 2630–2639 (2009).

Yavas, S., Macháň, R. & Wohland, T. The epidermal growth factor receptor forms location-dependent complexes in resting cells. Biophys. J. 111, 2241–2254 (2016).

Digman, M. A. & Gratton, E. Imaging barriers to diffusion by pair correlation functions. Biophys. J. 97, 665–673 (2009).

Hedde, P. N., Staaf, E., Singh, S. B., Johansson, S. & Gratton, E. Pair correlation analysis maps the dynamic two-dimensional organization of natural killer cell receptors at the synapse. ACS Nano 13, 14274–14282 (2019).

Sezgin, E. et al. Measuring nanoscale diffusion dynamics in cellular membranes with super-resolution STED-FCS. Nat. Protoc. 14, 1054–1083 (2019).

Stein, J. et al. Toward absolute molecular numbers in DNA-PAINT. Nano Lett. 19, 8182–8190 (2019).

Ashdown, G. W. & Owen, D. M. Spatio-temporal image correlation spectroscopy and super-resolution microscopy to quantify molecular dynamics in T cells. Methods 140-141, 112–118 (2018).

Scipioni, L., Lanzanó, L., Diaspro, A. & Gratton, E. Comprehensive correlation analysis for super-resolution dynamic fingerprinting of cellular compartments using the Zeiss Airyscan detector. Nat. Commun. 9, 5120 (2018).

Ashdown, G. W. et al. Molecular flow quantified beyond the diffraction limit by spatiotemporal image correlation of structured illumination microscopy data. Biophys. J. 107, L21–L23 (2014).

Ashdown, G., Pandžić, E., Cope, A., Wiseman, P. & Owen, D. Cortical actin flow in T cells quantified by spatio-temporal image correlation spectroscopy of structured illumination microscopy data. J. Vis. Exp. 106, e53749 (2015).

Ashdown, G. W. et al. Live-cell super-resolution reveals F-actin and plasma membrane dynamics at the T cell synapse. Biophys. J. 112, 1703–1713 (2017).

Dertinger, T., Colyer, R., Iyer, G., Weiss, S. & Enderlein, J. Fast, background-free, 3D super-resolution optical fluctuation imaging (SOFI). Proc. Natl Acad. Sci. USA 106, 22287–22292 (2009).

Kisley, L. et al. Characterization of porous materials by fluorescence correlation spectroscopy super-resolution optical fluctuation imaging. ACS Nano 9, 9158–9166 (2015). This paper describes combining FCS with SOFI and its applications to characterize porous materials.

Grußmayer, K. S. et al. Spectral cross-cumulants for multicolor super-resolved SOFI imaging. Nat. Commun. 11, 3023 (2020).

Torres-García, E. et al. Extending resolution within a single imaging frame. Nat. Commun. 13, 7452 (2022).

Solomon, O., Mutzafi, M., Segev, M. & Eldar, Y. C. Sparsity-based super-resolution microscopy from correlation information. Opt. Express 26, 18238–18269 (2018).

Agarwal, K. & Macháň, R. Multiple signal classification algorithm for super-resolution fluorescence microscopy. Nat. Commun. 7, 13752 (2016).

Harwardt, M.-L. I. E., Dietz, M. S., Heilemann, M. & Wohland, T. SPT and imaging FCS provide complementary information on the dynamics of plasma membrane molecules. Biophys. J. 114, 2432–2443 (2018).

Powell, J. R. The quantum limit to Moore’s law. Proc. IEEE 96, 1247–1248 (2008).

Buchholz, J. et al. FPGA implementation of a 32x32 autocorrelator array for analysis of fast image series. Opt. Express 20, 17767–17782 (2012).

Yoshida, S., Schmid, W., Vo, N., Calabrase, W. & Kisley, L. Computationally-efficient spatiotemporal correlation analysis super-resolves anomalous diffusion. Opt. Express 29, 7616–7629 (2021).

Aik, D. Y. K. & Wohland, T. Microscope alignment using real-time Imaging FCS. Biophys. J. 121, 2663–2670 (2022). Direct camera-readout enables for the first time real-time data analysis in Imaging FCS and is used for microscope alignment.

Sengupta, P., Garai, K., Balaji, J., Periasamy, N. & Maiti, S. Measuring size distribution in highly heterogeneous systems with fluorescence correlation spectroscopy. Biophys. J. 84, 1977–1984 (2003).

Guo, S.-M. et al. Bayesian approach to the analysis of fluorescence correlation spectroscopy data II: application to simulated and in vitro data. Anal. Chem. 84, 3880–3888 (2012).

Guo, S.-M., Bag, N., Mishra, A., Wohland, T. & Bathe, M. Bayesian total internal reflection fluorescence correlation spectroscopy reveals hIAPP-induced plasma membrane domain organization in live cells. Biophys. J. 106, 190–200 (2014).

Sun, G. et al. Bayesian model selection applied to the analysis of fluorescence correlation spectroscopy data of fluorescent proteins in vitro and in vivo. Anal. Chem. 87, 4326–4333 (2015).

Jazani, S. et al. An alternative framework for fluorescence correlation spectroscopy. Nat. Commun. 10, 3662 (2019). This paper introduces a Bayesian non-parametric approach to analyze FCS data with significantly reduced measurement times.

Tavakoli, M. et al. Pitching single-focus confocal data analysis one photon at a time with Bayesian nonparametrics. Phys. Rev. X 10, 011021 (2020).

Uthamacumaran, A. et al. Machine intelligence-driven classification of cancer patients-derived extracellular vesicles using fluorescence correlation spectroscopy: results from a pilot study. Preprint at arXiv:2202.00495 (2022).

Newby, J. M., Schaefer, A. M., Lee, P. T., Forest, M. G. & Lai, S. K. Convolutional neural networks automate detection for tracking of submicron-scale particles in 2D and 3D. Proc. Natl Acad. Sci. USA 115, 9026–9031 (2018).

Weigert, M. et al. Content-aware image restoration: pushing the limits of fluorescence microscopy. Nat. Methods 15, 1090–1097 (2018).

Wang, H. et al. Deep learning enables cross-modality super-resolution in fluorescence microscopy. Nat. Methods 16, 103–110 (2019).

Obert, J. & Ferguson, M. In Deep Time Series Neural Networks and Fluorescence Data Stream Noise Detection, pp 18–32 (Springer International Publishing, 2019).

Sim, S. R., Rollin, A. & Wohland, T. Focus on Microscopy, Online, Convolution Neural Networks for FCS Data Fitting (Focus on microscopy, 2021).

Wohland, T., Hoh Tang, W., Ren Sim, S., Aik, D. & Röllin, A. Deep learning approaches for imaging fluorescence correlation spectroscopy parameter estimation with limited data sets. Biophys. J. 121, 533a (2022).

Wohland, T., Tang, W. H., Sim, S. R., Aik, D. & Rollin, A. Focus on Microscopy, Online, Deep Learning Approaches For Imaging Fluorescence Correlation Spectroscopy Parameter Estimation With Limited Data Sets (Focus on microscopy, 2022).

Wohland, T., Tang, W. H., Sim, S. R., Aik, D. & Rollin, A. Methods And Applications In Fluorescence, Gothenburg, Imaging Fluorescence Correlation Spectroscopy Comes Of Age: Direct Camera Access And Machine Learning For Online Data Evaluation (Methods and Applications in Fluorescence, 2022).

Sim, S. R. Imaging Fluorescence Correlation Spectroscopy Analysis Using Convolutional Neural Networks (National University of Singapore, 2022).

Xibeijia, G. Machine Learning Approach To Fluorescence Correlation Spectroscopy (National University of Singapore, 2018).

Poon, C. S., Long, F. & Sunar, U. Deep learning model for ultrafast quantification of blood flow in diffuse correlation spectroscopy. Biomed. Opt. Express 11, 5557–5564 (2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 27–30 June 2016, pp 770–778 (2016).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 2818–2826 (2016).

Hornik, K., Stinchcombe, M. & White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 2, 359–366 (1989).

Dertinger, T. et al. Two-focus fluorescence correlation spectroscopy: a new tool for accurate and absolute diffusion measurements. ChemPhysChem 8, 433–443 (2007).

Bogawat, Y., Krishnan, S., Simmel, F. C. & Santiago, I. Tunable 2D diffusion of DNA nanostructures on lipid membranes. Biophys. J. 121, 4810–4818 (2022).

Culbertson, M. J. et al. Numerical fluorescence correlation spectroscopy for the analysis of molecular dynamics under nonstandard conditions. Anal. Chem. 79, 4031–4039 (2007). Numerical fluorescence correlation spectroscopy (NFCS) is a model-free approach, matching FCS data to simulations in experimentally determined observation geometries.

Lerner, E. et al. FRET-based dynamic structural biology: challenges, perspectives and an appeal for open-science practices. eLife 10, e60416 (2021).

Schrimpf, W., Barth, A., Hendrix, J. & Lamb, D. C. PAM: a framework for integrated analysis of imaging, single-molecule, and ensemble fluorescence data. Biophys. J. 114, 1518–1528 (2018).

Sankaran, J., Shi, X., Ho, L. Y., Stelzer, E. H. & Wohland, T. ImFCS: a software for imaging FCS data analysis and visualization. Opt. Express 18, 25468–25481 (2010).

Krieger, J. W. et al. Imaging fluorescence (cross-) correlation spectroscopy in live cells and organisms. Nat. Protoc. 10, 1948–1974 (2015).

Wohland, T., Rigler, R. & Vogel, H. The standard deviation in fluorescence correlation spectroscopy. Biophys. J. 80, 2987–2999 (2001).

Sankaran, J., Bag, N., Kraut, R. S. & Wohland, T. Accuracy and precision in camera-based fluorescence correlation spectroscopy measurements. Anal. Chem. 85, 3948–3954 (2013).

Schaetzel, K. & Peters, R. Noise On Multiple-tau Photon Correlation Data. Vol. 1430 (SPIE, 1991).

Kohler, J., Hur, K.-H. & Mueller, J. D. Autocorrelation function of finite-length data in fluorescence correlation spectroscopy. Biophys. J. 122, 241–253 (2023). This study provides a theoretical framework for analyzing FCS data with drifts or spikes in fluorescence which are not amenable to conventional FCS data analysis.

Tcherniak, A., Reznik, C., Link, S. & Landes, C. F. Fluorescence correlation spectroscopy: criteria for analysis in complex systems. Anal. Chem. 81, 746–754 (2009).

Meseth, U., Wohland, T., Rigler, R. & Vogel, H. Resolution of fluorescence correlation measurements. Biophys. J. 76, 1619–1631 (1999).

Wawrezinieck, L., Rigneault, H., Marguet, D. & Lenne, P. F. Fluorescence correlation spectroscopy diffusion laws to probe the submicron cell membrane organization. Biophys. J. 89, 4029–4042 (2005).

Eggeling, C. et al. Direct observation of the nanoscale dynamics of membrane lipids in a living cell. Nature 457, 1159–1162 (2009). This paper introduces STED-FCS with a spot size down to 30 nm to study the dynamics of biomolecules in nanoscale domains in live cell membranes.

Digman, M. A., Dalal, R., Horwitz, A. F. & Gratton, E. Mapping the number of molecules and brightness in the laser scanning microscope. Biophys. J. 94, 2320–2332 (2008).

Unruh, J. R. & Gratton, E. Analysis of molecular concentration and brightness from fluorescence fluctuation data with an electron multiplied CCD camera. Biophys. J. 95, 5385–5398 (2008).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning. (MIT Press, 2016).

Creswell, A. et al. Generative adversarial networks: an overview. IEEE Signal Process. Mag. 35, 53–65 (2018).

Bishop, C. M. Mixture Density Networks (Aston University, 1994).

Korzh, S. et al. Requirement of vasculogenesis and blood circulation in late stages of liver growth in zebrafish. BMC Dev. Biol. 8, 84 (2008).

Shi, X. et al. Probing events with single molecule sensitivity in zebrafish and Drosophila embryos by fluorescence correlation spectroscopy. Dev. Dyn. 238, 3156–3167 (2009).

Yu, S. R. et al. Fgf8 morphogen gradient forms by a source-sink mechanism with freely diffusing molecules. Nature 461, 533–536 (2009). FCS was used to demonstrate that Fgf8 morphogen gradients are maintained by rapid Fgf8 diffusion and receptor endocytosis in living zebrafish embryos.

Nowak, M., Machate, A., Yu, S. R., Gupta, M. & Brand, M. Interpretation of the FGF8 morphogen gradient is regulated by endocytic trafficking. Nat. Cell Biol. 13, 153–158 (2011).

Teh, C., Sun, G., Shen, H., Korzh, V. & Wohland, T. Modulating the expression level of secreted Wnt3 influences cerebellum development in zebrafish transgenics. Development 142, 3721–3733 (2015).

Wang, Y., Wang, X., Wohland, T. & Sampath, K. Extracellular interactions and ligand degradation shape the nodal morphogen gradient. eLife 5, e13879 (2016).

Wang, J. et al. Anosmin1 shuttles Fgf to facilitate its diffusion, increase its local concentration, and induce sensory organs. Dev. Cell 46, 751–766.e12 (2018).

Koh, A. et al. Fluorescence correlation spectroscopy reveals survival motor neuron oligomerization but no active transport in motor axons of a zebrafish model for spinal muscular atrophy. Front. Cell Dev. Biol. 9, 639904 (2021).

Wang, Z., Marcu, O., Berns, M. & Marsh, J. L. In vivo FCS measurements of ligand diffusion in intact tissues. Vol. 5323 (SPIE, 2004).

Abu-Arish, A., Porcher, A., Czerwonka, A., Dostatni, N. & Fradin, C. High mobility of bicoid captured by fluorescence correlation spectroscopy: implication for the rapid establishment of its gradient. Biophys. J. 99, L33–L35 (2010).

Beam, M., Silva, M. C. & Morimoto, R. I. Dynamic imaging by fluorescence correlation spectroscopy identifies diverse populations of polyglutamine oligomers formed in vivo. J. Biol. Chem. 287, 26136–26145 (2012).

Zhao, P. et al. Aurora-A breaks symmetry in contractile actomyosin networks independently of its role in centrosome maturation. Dev. Cell 48, 631–645.e6 (2019).

Dhasmana, D. et al. Wnt3 is lipidated at conserved cysteine and serine residues in zebrafish neural tissue. Front. Cell Dev. Biol. 9, 671218 (2021).

Pan, X., Yu, H., Shi, X., Korzh, V. & Wohland, T. Characterization of flow direction in microchannels and zebrafish blood vessels by scanning fluorescence correlation spectroscopy. J. Biomed. Opt. 12, 014034 (2007).

Pan, X., Shi, X., Korzh, V., Yu, H. & Wohland, T. Line scan fluorescence correlation spectroscopy for three-dimensional microfluidic flow velocity measurements. J. Biomed. Opt. 14, 024049 (2009).

Ries, J., Yu, S. R., Burkhardt, M., Brand, M. & Schwille, P. Modular scanning FCS quantifies receptor-ligand interactions in living multicellular organisms. Nat. Methods 6, 643–645 (2009).

Petrášek, Z. et al. Characterization of protein dynamics in asymmetric cell division by scanning fluorescence correlation spectroscopy. Biophys. J. 95, 5476–5486 (2008).

Petrášek, Z., Hoege, C., Hyman, A. & Schwille, P. Two-photon Fluorescence Imaging And Correlation Analysis Applied To Protein Dynamics In C. elegans Embryo. Vol. 6860 (SPIE, 2008).

Shi, X. et al. Determination of dissociation constants in living zebrafish embryos with single wavelength fluorescence cross-correlation spectroscopy. Biophys. J. 97, 678–686 (2009).

Mattes, B. et al. Wnt/PCP controls spreading of Wnt/β-catenin signals by cytonemes in vertebrates. eLife 7, e36953 (2018).

Veerapathiran, S. et al. Wnt3 distribution in the zebrafish brain is determined by expression, diffusion and multiple molecular interactions. eLife 9, e59489 (2020).

Brunt, L. et al. Vangl2 promotes the formation of long cytonemes to enable distant Wnt/β-catenin signaling. Nat. Commun. 12, 2058 (2021).

Ng, X. W., Teh, C., Korzh, V. & Wohland, T. The secreted signaling protein wnt3 is associated with membrane domains in vivo: a SPIM-FCS study. Biophys. J. 111, 418–429 (2016).

Shi, X., Foo, Y. H., Korzh, V., Ahmed, S. & Wohland, T. Live Imaging in Zebrafish, pp 69–103 (World Scientific Publishing Co. Pte. Ltd., 2010).

Müller, P., Rogers, K. W., Yu, S. R., Brand, M. & Schier, A. F. Morphogen transport. Development 140, 1621–1638 (2013).

Dawes, M. L., Soeller, C. & Scholpp, S. Studying molecular interactions in the intact organism: fluorescence correlation spectroscopy in the living zebrafish embryo. Histochem. Cell Biol. 154, 507–519 (2020).

Leroux, C. E., Wang, I., Derouard, J. & Delon, A. Adaptive optics for fluorescence correlation spectroscopy. Opt. Express 19, 26839–26849 (2011). The authors demonstrate how adaptive optics correct the effect of optical aberrations on FCS measurements.

Barbotin, A., Galiani, S., Urbančič, I., Eggeling, C. & Booth, M. J. Adaptive optics allows STED-FCS measurements in the cytoplasm of living cells. Opt. Express 27, 23378–23395 (2019).

Fossum, E. R., Ma, J., Masoodian, S., Anzagira, L. & Zizza, R. The quanta image sensor: every photon counts. Sensors 16, 1260 (2016).

Perez-Camps, M. et al. Quantitative imaging reveals real-time Pou5f3-Nanog complexes driving dorsoventral mesendoderm patterning in zebrafish. eLife 5, e11475 (2016).

Kesavan, G. et al. Isthmin1, a secreted signaling protein, acts downstream of diverse embryonic patterning centers in development. Cell Tissue Res. 383, 987–1002 (2021).

Zhou, S. et al. Free extracellular diffusion creates the Dpp morphogen gradient of the Drosophila wing disc. Curr. Biol. 22, 668–675 (2012).

Acknowledgements

The authors would like to thank Dr. Wai Hoh Tang for comments on the manuscript. T.W. gratefully acknowledges funding from the Singapore Ministry of Education (MOE2016-T3-1-005).

Author information

Contributions

J.S. and T.W. prepared the layout of the article and wrote the text in this Mini Review.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Biology thanks Steve Presse, Jelle Hendrix, Paul Wiseman, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Manuel Breuer.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sankaran, J., Wohland, T. Current capabilities and future perspectives of FCS: super-resolution microscopy, machine learning, and in vivo applications. Commun Biol 6, 699 (2023). https://doi.org/10.1038/s42003-023-05069-6

DOI: https://doi.org/10.1038/s42003-023-05069-6
