Abstract

Robots and animals both experience the world through their bodies and senses. Their embodiment constrains their experiences, ensuring that they unfold continuously in space and time. As a result, the experiences of embodied agents are intrinsically correlated. Correlations create fundamental challenges for machine learning, as most techniques rely on the assumption that data are independent and identically distributed. In reinforcement learning, where data are directly collected from an agent’s sequential experiences, violations of this assumption are often unavoidable. Here we derive a method that overcomes this issue by exploiting the statistical mechanics of ergodic processes, which we term maximum diffusion reinforcement learning. By decorrelating agent experiences, our approach provably enables single-shot learning in continuous deployments over the course of individual task attempts. Moreover, we prove our approach generalizes well-known maximum entropy techniques and robustly exceeds state-of-the-art performance across popular benchmarks. Our results at the nexus of physics, learning and control form a foundation for transparent and reliable decision-making in embodied reinforcement learning agents.

Data availability

Data supporting the findings of this study are available via Zenodo at https://doi.org/10.5281/zenodo.10723320 (ref. 71).

Code availability

Code supporting the findings of this study is available via Zenodo at https://doi.org/10.5281/zenodo.10723320 (ref. 71).

References

Degrave, J. et al. Magnetic control of tokamak plasmas through deep reinforcement learning. Nature 602, 414–419 (2022).

Won, D.-O., Müller, K.-R. & Lee, S.-W. An adaptive deep reinforcement learning framework enables curling robots with human-like performance in real-world conditions. Sci. Robot. 5, eabb9764 (2020).

Irpan, A. Deep reinforcement learning doesn’t work yet. Sorta Insightful www.alexirpan.com/2018/02/14/rl-hard.html (2018).

Henderson, P. et al. Deep reinforcement learning that matters. In Proc. 32nd AAAI Conference on Artificial Intelligence (eds McIlraith, S. & Weinberger, K.) 3207–3214 (AAAI, 2018).

Ibarz, J. et al. How to train your robot with deep reinforcement learning: lessons we have learned. Int. J. Rob. Res. 40, 698–721 (2021).

Lillicrap, T. P. et al. Continuous control with deep reinforcement learning. In Proc. 4th International Conference on Learning Representations (ICLR, 2016).

Haarnoja, T., Zhou, A., Abbeel, P. & Levine, S. Soft actor-critic: off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proc. 35th International Conference on Machine Learning (eds Dy, J. & Krause, A.) 1861–1870 (PMLR, 2018).

Plappert, M. et al. Parameter space noise for exploration. In Proc. 6th International Conference on Learning Representations (ICLR, 2018).

Lin, L.-J. Self-improving reactive agents based on reinforcement learning, planning and teaching. Mach. Learn. 8, 293–321 (1992).

Schaul, T., Quan, J., Antonoglou, I. & Silver, D. Prioritized experience replay. In Proc. 4th International Conference on Learning Representations (ICLR, 2016).

Andrychowicz, M. et al. Hindsight experience replay. In Proc. Advances in Neural Information Processing Systems 30 (eds Guyon, I. et al.) 5049–5059 (Curran Associates, 2017).

Zhang, S. & Sutton, R. S. A deeper look at experience replay. Preprint at https://arxiv.org/abs/1712.01275 (2017).

Wang, Z. et al. Sample efficient actor-critic with experience replay. In Proc. 5th International Conference on Learning Representations (ICLR, 2017).

Hessel, M. et al. Rainbow: combining improvements in deep reinforcement learning. In Proc. 32nd AAAI Conference on Artificial Intelligence (eds McIlraith, S. & Weinberger, K.) 3215–3222 (AAAI Press, 2018).

Fedus, W. et al. Revisiting fundamentals of experience replay. In Proc. 37th International Conference on Machine Learning (eds Daumé III, H. & Singh, A.) 3061–3071 (JMLR.org, 2020).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015).

Ziebart, B. D., Maas, A. L., Bagnell, J. A. & Dey, A. K. Maximum entropy inverse reinforcement learning. In Proc. 23rd AAAI Conference on Artificial Intelligence (ed. Cohn, A.) 1433–1438 (AAAI, 2008).

Ziebart, B. D., Bagnell, J. A. & Dey, A. K. Modeling interaction via the principle of maximum causal entropy. In Proc. 27th International Conference on Machine Learning (eds Fürnkranz, J. & Joachims, T.) 1255–1262 (Omnipress, 2010).

Ziebart, B. D. Modeling Purposeful Adaptive Behavior with the Principle of Maximum Causal Entropy. PhD thesis, Carnegie Mellon Univ. (2010).

Todorov, E. Efficient computation of optimal actions. Proc. Natl Acad. Sci. USA 106, 11478–11483 (2009).

Toussaint, M. Robot trajectory optimization using approximate inference. In Proc. 26th International Conference on Machine Learning (eds Bottou, L. & Littman, M.) 1049–1056 (ACM, 2009).

Rawlik, K., Toussaint, M. & Vijayakumar, S. On stochastic optimal control and reinforcement learning by approximate inference. In Proc. Robotics: Science and Systems VIII (eds Roy, N. et al.) 353–361 (MIT, 2012).

Levine, S. & Koltun, V. Guided policy search. In Proc. 30th International Conference on Machine Learning (eds Dasgupta, S. & McAllester, D.) 1–9 (JMLR.org, 2013).

Haarnoja, T., Tang, H., Abbeel, P. & Levine, S. Reinforcement learning with deep energy-based policies. In Proc. 34th International Conference on Machine Learning (eds Precup, D. & Teh, Y. W.) 1352–1361 (JMLR.org, 2017).

Haarnoja, T. et al. Learning to walk via deep reinforcement learning. In Proc. Robotics: Science and Systems XV (eds Bicchi, A. et al.) (RSS, 2019).

Eysenbach, B. & Levine, S. Maximum entropy RL (provably) solves some robust RL problems. In Proc. 10th International Conference on Learning Representations (ICLR, 2022).

Chen, M. et al. Top-K off-policy correction for a REINFORCE recommender system. In Proc. 12th ACM International Conference on Web Search and Data Mining (eds Bennett, P. N. & Lerman, K.) 456–464 (ACM, 2019).

Afsar, M. M., Crump, T. & Far, B. Reinforcement learning based recommender systems: a survey. ACM Comput. Surv. 55, 1–38 (2022).

Chen, X., Yao, L., McAuley, J., Zhou, G. & Wang, X. Deep reinforcement learning in recommender systems: a survey and new perspectives. Knowl. Based Syst. 264, 110335 (2023).

Sontag, E. D. Mathematical Control Theory: Deterministic Finite Dimensional Systems (Springer, 2013).

Hespanha, J. P. Linear Systems Theory 2nd edn (Princeton Univ. Press, 2018).

Mitra, D. W-matrix and the geometry of model equivalence and reduction. Proc. Inst. Electr. Eng. 116, 1101–1106 (1969).

Dean, S., Mania, H., Matni, N., Recht, B. & Tu, S. On the sample complexity of the linear quadratic regulator. Found. Comput. Math. 20, 633–679 (2020).

Tsiamis, A. & Pappas, G. J. Linear systems can be hard to learn. In Proc. 60th IEEE Conference on Decision and Control (ed. Prandini, M.) 2903–2910 (IEEE, 2021).

Tsiamis, A., Ziemann, I. M., Morari, M., Matni, N. & Pappas, G. J. Learning to control linear systems can be hard. In Proc. 35th Conference on Learning Theory (eds Loh, P.-L. & Raginsky, M.) 3820–3857 (PMLR, 2022).

Williams, G. et al. Information theoretic MPC for model-based reinforcement learning. In Proc. IEEE International Conference on Robotics and Automation (ed. Nakamura, Y.) 1714–1721 (IEEE, 2017).

So, O., Wang, Z. & Theodorou, E. A. Maximum entropy differential dynamic programming. In Proc. IEEE International Conference on Robotics and Automation (ed. Kress-Gazit, H.) 3422–3428 (IEEE, 2022).

Thrun, S. B. Efficient Exploration in Reinforcement Learning. Technical report (Carnegie Mellon Univ., 1992).

Amin, S., Gomrokchi, M., Satija, H., van Hoof, H. & Precup, D. A survey of exploration methods in reinforcement learning. Preprint at https://arxiv.org/abs/2109.00157 (2021).

Jaynes, E. T. Information theory and statistical mechanics. Phys. Rev. 106, 620–630 (1957).

Dixit, P. D. et al. Perspective: maximum caliber is a general variational principle for dynamical systems. J. Chem. Phys. 148, 010901 (2018).

Chvykov, P. et al. Low rattling: a predictive principle for self-organization in active collectives. Science 371, 90–95 (2021).

Kapur, J. N. Maximum Entropy Models in Science and Engineering (Wiley, 1989).

Moore, C. C. Ergodic theorem, ergodic theory, and statistical mechanics. Proc. Natl Acad. Sci. USA 112, 1907–1911 (2015).

Taylor, A. T., Berrueta, T. A. & Murphey, T. D. Active learning in robotics: a review of control principles. Mechatronics 77, 102576 (2021).

Seo, Y. et al. State entropy maximization with random encoders for efficient exploration. In Proc. 38th International Conference on Machine Learning, Virtual (eds Meila, M. & Zhang, T.) 9443–9454 (ICML, 2021).

Prabhakar, A. & Murphey, T. Mechanical intelligence for learning embodied sensor-object relationships. Nat. Commun. 13, 4108 (2022).

Chentanez, N., Barto, A. & Singh, S. Intrinsically motivated reinforcement learning. In Proc. Advances in Neural Information Processing Systems 17 (eds Saul, L. et al.) 1281–1288 (MIT, 2004).

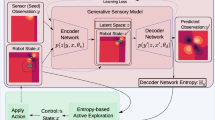

Pathak, D., Agrawal, P., Efros, A. A. & Darrell, T. Curiosity-driven exploration by self-supervised prediction. In Proc. 34th International Conference on Machine Learning (eds Precup, D. & Teh, Y. W.) 2778–2787 (JMLR.org, 2017).

Taiga, A. A., Fedus, W., Machado, M. C., Courville, A. & Bellemare, M. G. On bonus based exploration methods in the Arcade Learning Environment. In Proc. 8th International Conference on Learning Representations (ICLR, 2020).

Wang, X., Deng, W. & Chen, Y. Ergodic properties of heterogeneous diffusion processes in a potential well. J. Chem. Phys. 150, 164121 (2019).

Palmer, R. G. Broken ergodicity. Adv. Phys. 31, 669–735 (1982).

Islam, R., Henderson, P., Gomrokchi, M. & Precup, D. Reproducibility of benchmarked deep reinforcement learning tasks for continuous control. Preprint at https://arxiv.org/abs/1708.04133 (2017).

Moos, J. et al. Robust reinforcement learning: a review of foundations and recent advances. Mach. Learn. Knowl. Extr. 4, 276–315 (2022).

Strehl, A. L., Li, L., Wiewiora, E., Langford, J. & Littman, M. L. PAC model-free reinforcement learning. In Proc. 23rd International Conference on Machine Learning (eds Cohen, W. W. & Moore, A.) 881–888 (ICML, 2006).

Strehl, A. L., Li, L. & Littman, M. L. Reinforcement learning in finite MDPs: PAC analysis. J. Mach. Learn. Res. 10, 2413–2444 (2009).

Kirk, R., Zhang, A., Grefenstette, E. & Rocktäschel, T. A survey of zero-shot generalisation in deep reinforcement learning. J. Artif. Intell. Res. 76, 201–264 (2023).

Oh, J., Singh, S., Lee, H. & Kohli, P. Zero-shot task generalization with multi-task deep reinforcement learning. In Proc. 34th International Conference on Machine Learning (eds Precup, D. & Teh, Y. W.) 2661–2670 (JMLR.org, 2017).

Krakauer, J. W., Hadjiosif, A. M., Xu, J., Wong, A. L. & Haith, A. M. Motor learning. Compr. Physiol. 9, 613–663 (2019).

Lu, K., Grover, A., Abbeel, P. & Mordatch, I. Reset-free lifelong learning with skill-space planning. In Proc. 9th International Conference on Learning Representations (ICLR, 2021).

Chen, A., Sharma, A., Levine, S. & Finn, C. You only live once: single-life reinforcement learning. In Proc. Advances in Neural Information Processing Systems 35 (eds Koyejo, S. et al.) 14784–14797 (NeurIPS, 2022).

Ames, A., Grizzle, J. & Tabuada, P. Control barrier function based quadratic programs with application to adaptive cruise control. In Proc. 53rd IEEE Conference on Decision and Control 6271–6278 (IEEE, 2014).

Taylor, A., Singletary, A., Yue, Y. & Ames, A. Learning for safety-critical control with control barrier functions. In Proc. 2nd Conference on Learning for Dynamics and Control (eds Bayen, A. et al.) 708–717 (PMLR, 2020).

Xiao, W. et al. BarrierNet: differentiable control barrier functions for learning of safe robot control. IEEE Trans. Robot. 39, 2289–2307 (2023).

Seung, H. S., Sompolinsky, H. & Tishby, N. Statistical mechanics of learning from examples. Phys. Rev. A 45, 6056–6091 (1992).

Chen, C., Murphey, T. D. & MacIver, M. A. Tuning movement for sensing in an uncertain world. eLife 9, e52371 (2020).

Song, S. et al. Deep reinforcement learning for modeling human locomotion control in neuromechanical simulation. J. Neuroeng. Rehabil. 18, 126 (2021).

Berrueta, T. A., Murphey, T. D. & Truby, R. L. Materializing autonomy in soft robots across scales. Adv. Intell. Syst. 6, 2300111 (2024).

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction (MIT, 2018).

Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

Berrueta, T. A., Pinosky, A. & Murphey, T. D. Maximum diffusion reinforcement learning repository. Zenodo https://doi.org/10.5281/zenodo.10723320 (2024).

Acknowledgements

We thank A. T. Taylor, J. Weber and P. Chvykov for their comments on early drafts of this work. We acknowledge funding from the US Army Research Office MURI grant no. W911NF-19-1-0233 and the US Office of Naval Research grant no. N00014-21-1-2706. We also acknowledge hardware loans and technical support from Intel Corporation, and T.A.B. is partially supported by the Northwestern University Presidential Fellowship.

Author information

Contributions

T.A.B. derived all theoretical results, performed supplementary data analyses and control experiments, supported RL experiments and wrote the manuscript. A.P. developed and tested RL algorithms, carried out all RL experiments and supported manuscript writing. T.D.M. secured funding and guided the research programme.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks the anonymous reviewers for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Notes 1–4, Tables 1 and 2 and Figs. 1–9.

Supplementary Video 1

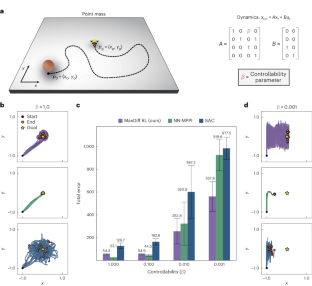

Depicts an application of MaxDiff RL to MuJoCo’s swimmer environment. We explore the effect of the temperature parameter on performance by varying it across three orders of magnitude.

Supplementary Video 2

Depicts an application of MaxDiff RL to MuJoCo’s swimmer environment, with comparisons to NN-MPPI and SAC. The performance of MaxDiff RL does not vary across seeds. This is tested across two different system conditions: one with a light-tailed and more controllable swimmer and one with a heavy-tailed and less controllable swimmer.

Supplementary Video 3

Depicts an application of MaxDiff RL to MuJoCo’s swimmer environment. We perform a transfer learning experiment in which neural representations are learned on a system with a given set of properties and then deployed on a system with different properties. MaxDiff RL remains task-capable across agent embodiments.

Supplementary Video 4

Depicts an application of MaxDiff RL to MuJoCo’s swimmer environment under a substantial modification: agents cannot reset their environment, so they must solve the task in a single deployment. First, representative snapshots of single-shot deployments are shown. Then, a complete playback of an individual MaxDiff RL single-shot learning trial is shown. Playback is staggered such that the first swimmer covers environment steps 1–2,000, the next one 2,001–4,000, and so on, for a total of 20,000 environment steps.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Berrueta, T.A., Pinosky, A. & Murphey, T.D. Maximum diffusion reinforcement learning. Nat Mach Intell 6, 504–514 (2024). https://doi.org/10.1038/s42256-024-00829-3

DOI: https://doi.org/10.1038/s42256-024-00829-3