Abstract

Emerging functional materials such as halide perovskites are intrinsically unstable, which limits the long-term stability of optoelectronic devices made from them. This makes it difficult to capture useful information on device degradation through time-consuming optical characterization in their operating environments. Despite these challenges, understanding the degradation mechanism is crucial for advancing the technology towards commercialization. Here we present a self-supervised machine learning model that uses multi-channel correlation and blind denoising to recover images without high-quality references, enabling fast and low-dose measurements. We perform operando luminescence mapping of various emerging optoelectronic semiconductors, including organic and halide perovskite photovoltaic and light-emitting devices. By tracking the spatially resolved degradation in electroluminescence of mixed-halide perovskite blue-light-emitting diodes, we discovered that lateral ion migration (perpendicular to the external electric field) during device operation triggers the formation of chloride-rich defective regions that emit poorly, a mechanism that would not be resolvable with conventional imaging approaches.

Main

Imaging in low illumination is challenging due to underexposure. Slow camera speeds can reduce noise in dark-scene photography but are not easily applicable to microscopy imaging due to irreversible photobleaching and material degradation1. Emerging functional materials such as halide perovskites for photovoltaic and light-emitting applications are unstable due to their soft, ionic material nature2. The long-term operation of solar cells and light-emitting diodes (LEDs) made from these materials remains a grand challenge, with noticeable changes in optoelectronic properties, particularly for materials with mixed halides, in which halide segregation readily occurs3. Degradation is particularly severe in LEDs, in which high fields and current densities cause very rapid degradation, and the pathways for these processes are poorly understood4. These nascent devices suffer from low photoluminescence (PL) and electroluminescence (EL), hindering imaging of the devices in their operating environments to capture useful information about device degradation. Such insights are critical for addressing stability issues of any emerging devices, and hence for driving these technologies forward.

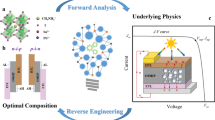

With a limited photon budget, a trade-off must be made between image quality, illumination and imaging speed5,6. To extend the boundary of this trilemma, photon-efficient computational approaches have been developed towards rapid and low-power imaging7 (Fig. 1a). Recently, algorithms based on machine learning (ML) have surpassed human performance in imaging, leading to promising results in image classification8, reconstruction9 and super-resolution10. However, these models often require adequate datasets of matched noisy and high-quality image pairs11, and such datasets are difficult to obtain for emerging functional materials that are easily degraded when exposed to the ambient, bias and/or light source12,13. Synthetically generated datasets have been proposed as an alternative to physically acquired datasets by synthesizing high-quality data through well established physical models14, but such an approach requires a sophisticated understanding of identical or similar samples15. Furthermore, concerns have been raised regarding the trustworthiness of ML-based models when predicting unseen samples that fall outside the distribution of the training data16,17. To address this issue, blind denoising algorithms18 that learn the data distribution directly from the noisy image have been developed. Methods such as Noise2Void19, Noise2Fast20 and Self2Self21 have achieved promising results in biological imaging. However, since these methods are primarily optimized for RGB or even greyscale images, they demand considerable amounts of computational resources to process large three-dimensional (3D) data. This limitation makes them impractical for use in multispectral imaging.

a, An imaging trilemma between quality, illumination and speed. With photon-efficient computational techniques, we can push the instrument’s capability towards lower illumination and faster imaging while maintaining a good SNR. b, A convolutional residual network with a universal noise-level estimator. The noise-level value corresponds to the s.d. of Gaussian noise. c, Non-blind denoising performance of various methods on a publicly available multispectral dataset containing a 3D airborne hyperspectral image of the Washington DC Mall29. Inset: the two-dimensional wavelength-specific images at 400 nm. Methods with names in blue are deep-learning-based algorithms. d, Study of model performance across a range of noise levels, comparing our proposed generalized model with low-/medium-/heavy-noise-specific models that were trained with a given Gaussian noise. e, The PSNR improvement after denoising against the number of channels (n) of the input image (σ = 20). The dataset was prepared by cropping the first n channels from the original data cube. QRNN3D and our approach were trained with multiple noise levels (σ = 10, 20 and 50).

Here, we overcome these challenges by developing a self-supervised ML method that utilizes multi-channel correlation to recover 3D images without high-quality references of the unknown sample, uniquely allowing us to speed up image capture. We develop experimental capabilities to measure hyperspectral in situ PL and in operando EL maps of semiconductor films and devices and generate data from a variety of next-generation halide perovskite and organic materials for photovoltaic and light-emitting applications. Applying our model to these data allows us to visualize dynamic processes and unveil device degradation mechanisms in a way that would not be possible with conventional imaging techniques.

Results

We implemented our approach with convolutional neural networks (CNNs) composed of a noise-level estimator followed by a residually connected U-Net22 architecture (Extended Data Fig. 1). The estimator calculates the extent of noise present in the multi-channel images, and the results are concatenated with the noisy image cube and passed to the feed-forward CNNs to reconstruct noise-suppressed images (Fig. 1b; see Extended Data Fig. 2 for the influence of the noise-level estimator on hidden layers). Our model denoises data efficiently by exploiting cross-spectrum correlations through an additional loss function that optimizes the network to recover noisy pixels with information from neighbouring channels (Methods). Previous works that rely on noise parameters23,24,25,26 or distortion kernels27,28 for noise modelling are difficult to apply in real-world scenarios because this information is hard to obtain (Extended Data Table 1). In contrast, our noise-level estimator (also called the noise-level detector) estimates the noise level automatically, which makes it suitable for a range of scientific imaging applications (see noise-type discussion in Supplementary Note 1, and network interpretation in Supplementary Note 4).

The noise-level detector enables blind denoising based on a self-supervised21 learning strategy. Self-supervised learning is a form of ML where a model learns to make predictions about unlabelled data without explicit human annotations. Instead of relying on manually labelled examples, self-supervised learning leverages the inherent structure or patterns within the data to create training signals. In our method, the learning process is self-supervised as the network trains itself to denoise a specific material sample image by first learning to recover the original input image from noisy versions of it with synthesized noise. With this strategy, the system generates synthesized training image pairs automatically and learns to denoise itself. Through modelling the correlations of relative noise levels, our method can denoise the raw image by sensing the noise levels between the raw image and the desired output with the aid of the trained noise-level detector (Fig. 1b).
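
The pair-synthesis step of this self-supervised strategy can be sketched as follows. This is a minimal NumPy illustration rather than the authors' implementation: the function name `make_training_pairs`, the chosen noise levels and the use of purely additive Gaussian noise are assumptions made for the example. The raw cube itself serves as the training target, and noisier copies of it serve as inputs.

```python
import numpy as np

def make_training_pairs(raw_cube, sigmas=(10.0, 20.0, 50.0), seed=0):
    """Build (noisier input, raw target, sigma) triples from one raw cube.

    raw_cube: float array of shape (W, H, C), assumed scaled to 0-255.
    sigmas:   hypothetical s.d. values of the synthesized Gaussian noise.
    """
    rng = np.random.default_rng(seed)
    pairs = []
    for sigma in sigmas:
        # Corrupt the (already noisy) raw image with extra synthetic noise.
        noise = rng.normal(0.0, sigma, size=raw_cube.shape)
        pairs.append((raw_cube + noise, raw_cube, sigma))
    return pairs

# Toy stand-in for a raw hyperspectral measurement.
rng = np.random.default_rng(1)
raw = rng.uniform(0.0, 255.0, size=(16, 16, 8))
pairs = make_training_pairs(raw)
```

Each triple also carries the synthesized noise level, which is the kind of label a noise-level detector could be trained against.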

We first evaluated the non-blind denoising performance of our model by pretraining it on a publicly available, standard benchmark dataset in remote sensing29, which was a 3D airborne map that contained many wavelength-specific images. An image cube of 32 wavelength channels was selected and corrupted with additive Gaussian noise of σ = 20 (for a data range of 0–255) and then denoised using our strategies, compared with various methods from the literature (Fig. 1c). Our model achieved average improvements of 17.2% and 16.9% in peak signal-to-noise ratio (PSNR) and structural similarity index measure30 (which assesses the visual quality), respectively, over conventional handcrafted algorithms (BM3D23, LLRT24 and BM4D31) and recent ML-based models (HS-Prior32, HSI-DeNet33 and QRNN3D34) (Fig. 1c and Extended Data Fig. 3; see Extended Data Table 2 for all noise levels). Details on the noisy image were accurately restored by our model (Fig. 1c, inset), and our denoised images achieved 21.9% less error against the reference spectrum when compared with the best algorithms (Extended Data Table 2).
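
For reference, the PSNR metric used in this benchmark can be computed as below. This is a generic sketch of the standard definition, not the authors' evaluation script; the random test cube is a placeholder for the benchmark data, and no clipping to the 0–255 range is applied. With additive Gaussian noise of σ = 20 on a 0–255 data range, the noisy input itself sits at roughly 22.1 dB, which is the baseline any denoiser has to improve on.

```python
import numpy as np

def psnr(reference, estimate, data_range=255.0):
    """Peak signal-to-noise ratio in dB between two arrays of equal shape."""
    diff = np.asarray(reference, dtype=np.float64) - np.asarray(estimate, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(data_range ** 2 / mse)

# Placeholder 32-channel cube corrupted with Gaussian noise of sigma = 20.
rng = np.random.default_rng(0)
clean = rng.uniform(0.0, 255.0, size=(64, 64, 32))
noisy = clean + rng.normal(0.0, 20.0, size=clean.shape)
noisy_psnr = psnr(clean, noisy)
```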

We further demonstrate the effectiveness of the noise-level estimator by evaluating models across different light conditions. With a noise-level estimator and multi-noise-level training (training with noisy images of σ = 10, 20 and 50 simultaneously), the model achieved the best PSNR when compared with our best CNN models that had been trained with one specific noise level (Fig. 1d). When we removed the noise-level estimator, the remaining CNN models and other literature models encountered performance drops near low-noise and high-noise extremes (Extended Data Table 3). Our deeply fused noise-level estimator strengthens the robustness of the model against uncontrollable light conditions, leading to improved denoising performance across all noise levels, and thus it overcomes the complexities of learning multiple tasks at the same time (Fig. 1d).

The performance of our model improves as the number of channels in the input image increases, since our loss function leverages information from neighbouring channels (Fig. 1e). The model reaches its optimum performance when the input image contains more than 24 channels. For a 32-channel model, the pretraining step takes 8 h, while denoising a testing image requires only 3.5 s (Supplementary Table 1). This processing step is substantially shorter than that of traditional algorithms such as LLRT, which typically take more than 1 h (Supplementary Table 1).

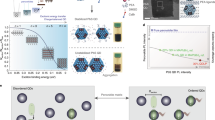

With strong pretraining performance established, the model then fine-tunes itself on the raw image to be denoised using our self-learning approach. We perform blind denoising on hyperspectral luminescence microscopy images of semiconductor films of emerging technologies (Fig. 2). Since the evaluation is challenging for unstable materials due to difficulties in capturing paired noisy–clean data, we started by monitoring the PL of an organic emitter for organic LEDs (OLEDs) with moderate stability, (4s,6s)-2,4,5,6-tetra(9H-carbazol-9-yl)isophthalonitrile (4CzIPN), where we used a wide range of camera settings in dataset collection. As the integration time decreased, features in the film became indiscernible to the human eye (Supplementary Fig. 1). According to the Rose model, an estimated signal-to-noise ratio (SNR) of 5–6 is required for human observers to reach 90% correct responses in detecting disk signals35,36. Therefore, we selected the noisy image taken with a 0.03 s exposure and an average film SNR of 2 at the PL peak wavelength (560 nm) to compare different denoising methods.

a, Visual comparison of each method on a 3D hyperspectral image of organic mCBP (3,3-di(9H-carbazole-9-yl)biphenyl)-doped 4CzIPN films taken with a hyperspectral microscopy set-up. The sample was captured under the excitation of a 405 nm continuous-wave (CW) laser with an intensity of 100 mW cm−2 in air at room temperature. Two-dimensional PL-intensity maps at 560 nm are shown. For the Gaussian filtering method, we used a kernel size of 10. b,c, Normalized PL curves across wavelength channels of the full image (b) and one single pixel (white circle in a) (c). d, Processing speed in kilopixels per second of each method with different input image sizes. A cross indicates that the algorithm has exhausted the computational resources (that is, out of graphics processing unit memory) and failed to process the image during either the training or testing stage. All algorithms were run on a single Tesla P100 graphics processing unit with 16 GB memory. W, H and C are the width, height and number of channels of the 3D image, respectively.

Our model successfully restored the surface features and accurately fitted the PL spectra of 4CzIPN (Fig. 2a; see the bulk spectrum in Fig. 2b and the single-pixel spectrum in Fig. 2c). Traditional handcrafted approaches such as Gaussian filtering and LLRT generated undersmoothed or oversmoothed images (Fig. 2a), while the deep-prior-based blind denoising models, Noise2Void and Noise2Fast, distorted the PL spectra of the film (Fig. 2c). We note that Self2Self, the state-of-the-art blind denoising algorithm, while having slightly better visual results in Fig. 2a, failed to denoise a full-sized 3D image due to its high computational resource demands (Fig. 2d). We demonstrate that our method can handle big 3D hyperspectral data (1,024 × 1,024 × 176, in which the size of channels can be further expanded; Methods) while maintaining considerably higher processing speed when compared with other methods. We attribute the low performance of Noise2Fast to its optimized protocol for greyscale images, which processes individual two-dimensional image slices and misses crucial information from the cross-channel correlation.

We now explore the application of our method to halide perovskites (Fig. 3), emerging semiconductor materials for photovoltaic and light-emitting applications, which are sensitive to light and ambient conditions. We synthesized CsPbBr3 nanoplatelets (NPLs) for sky-blue LEDs37 and captured PL maps of deposited films with the hyperspectral PL microscope (Supplementary Fig. 2). We found spatial inhomogeneity in the PL spectrum, which corresponds to NPLs with various numbers of octahedral monolayers ranging from n = 3 to 6 (Fig. 3a)38. After denoising, we observed NPL clusters with far less visual uncertainty when the SNR of the raw image was below 1 (Fig. 3b), and the model achieved an improvement in SNR of up to twofold across each wavelength (Fig. 3c).

a,b, Normalized PL-intensity maps of a self-assembled CsPbBr3 perovskite NPL film comparing raw (a) with processed (b) mapping from 450 to 530 nm. c, SNR (above) and percentage improvement (below) after image restoration with respect to wavelength-specific images. The statistics were calculated from evenly cropped subzones (sample size n = 100) of the original image in Supplementary Fig. 2. The percentage improvements are presented as median values with the error bars of the lower and upper quartiles. d, Micro-PL peak position estimations based on raw and processed data for regions with high PL intensities (average photon counts of ≥5). Inset: histogram of differences between raw and processed predictions. e, Peak-wavelength estimation based on the raw data (above) and processed data (below) against the manually derived ground truth. f,g, PL images of triple-cation Cs0.05FA0.78MA0.17Pb(I0.83Br0.17)3 perovskite films comparing raw (f) and processed (g) normalized PL-intensity maps of given wavelengths. Arrows highlight local grain regions with distinct emission characteristics, suggesting variations in halide ratios within these regions. h, Local-PL spectra of thermally evaporated wide-gap FA0.7Cs0.3Pb(I0.6Br0.4)3 perovskite films of one camera pixel, comparing the obtained PL spectra of the first scan captured with an integration time of 1 s (slow scan) and 0.1 s (fast scan) per wavelength step, with subsequent denoising on the fast scan results. i, Local-PL evolution of the wide-gap perovskite film captured by fast scan (above) and our proposed method (below) over 20 min. A 405 nm CW laser was used to excite samples with an intensity of 100 mW cm−2.

To evaluate the performance of our approach in restoring the optical properties, we compared the raw and processed data in estimating the micro-PL peak wavelength positions. Our approach accurately modelled the local emission spectrum for each NPL cluster, as demonstrated by the strong agreement between the two estimations in high-PL-intensity regions (with an s.d. difference of only 0.19 nm) (Fig. 3d). We further examined the low-PL-intensity regions by comparing the results with the ground truth derived from a map of the same region, which had lower spatial resolution and better signals (Fig. 3e; see also Extended Data Fig. 4 for mapping and Supplementary Note 7 for method). Our approach reduced the PL peak estimation error by over 40% when compared with an estimation based on raw data (Extended Data Fig. 4). This approach allows us to capture hyperspectral maps with high image quality and minimal damage to the sample from the measurement.

We next imaged perovskite films of a composition widely used for photovoltaic applications. We solution-processed triple-cation Cs0.05FA0.78MA0.17Pb(I0.83Br0.17)3 perovskite films (FA, formamidinium; MA, methylammonium) on top of a SnO2/indium tin oxide (ITO)/glass half device stack39. We resolved individual grains under the PL microscope and observed nanoscale PL inhomogeneities between the grain boundaries and the grain interior (Fig. 3f), which were recently found in MAPbI3 films40. However, even with a long integration time of 1 s per wavelength step and a relatively emissive sample, the PL spectra of individual pixels still contained noticeable noise, and the noise became visually evident in PL maps close to the emission tail (Fig. 3f, at 740 and 850 nm), hindering human visual detection of small features and therefore inhibiting the scientific usefulness of the results35. With our approach, the SNRs of the wavelength-specific maps were improved by over 190% on average across the emission spectra (Extended Data Fig. 5), and we were able to precisely locate the most redshifted regions at the intersection of multiple perovskite grains (Fig. 3g, at 850 nm; see zoomed-in PL tails in Extended Data Fig. 5). Finally, we applied our restoration method to microscope mapping of CsPbBr3 nanosheet films and found that the microsized nanosheet had slightly thinner edges with blueshifted PL spectra when compared with the centre (Supplementary Fig. 3)41.

We have shown that our method can restore information under low illumination while minimizing distortions of the local spectrum. This enables us to actively reduce the camera integration time and push the microscope limit for experiments with extremely low photon budgets. We tracked the in situ local-PL evolution of evaporated wide-gap FA0.7Cs0.3Pb(I0.6Br0.4)3 perovskite films relevant for tandem cells42 with different camera exposure settings, where the PL emission of the film redshifted over time under laser illumination due to halide segregation. Using a standard measurement approach where each wavelength step was integrated for 1 s, the local-PL spectrum of a pixel exhibited strong distortion in the first hyperspectral scan, including a redshifted emission shoulder, whereas the spectrum with a fast integration of 0.1 s contained high levels of noise (Fig. 3h). Our approach restored the spectrum from the noisy observations and generated a PL emission profile with substantially reduced noise. Compared with traditional Gaussian filtering, our method eliminates human error in estimating the s.d. of the Gaussian kernel, where a manual guess that is slightly off the optimum will result in completely different research claims (Supplementary Fig. 4). This enabled us to track the local spectrum evolution at shorter intervals than standard imaging protocols allow (Fig. 3i), which is important for finely capturing the changes and the true images without interference from halide segregation3, which would otherwise give misleading results about the early-time film composition.

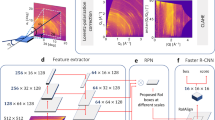

To take the approach even further, we investigated the operando degradation of mixed Br–Cl blue-emitting perovskite LEDs (Fig. 4). Perovskite LEDs with blue emission have developed more slowly than those of other colours due to colour instability caused by halide segregation under device operation43. We fabricated mixed Br–Cl blue perovskite LEDs with a method modified from ref. 44 and acquired both PL and EL maps of the operating encapsulated device (set-ups shown in Fig. 4a; characterization details in Methods). We selected a region of interest near the pixel edge where we could see both inside and outside a device pixel (Fig. 4b). We recorded local EL changes with 25 continuous hyperspectral scans over 10 min of device operation, where each scan was fast enough to track the EL evolution with minimized spectrum distortion. We compare these with local-PL maps taken before and after the bias, when the devices were switched off (Fig. 4c). After 10 min of bias at 6 V, a typical voltage for the device operation, the blue LED degraded rapidly, with its luminance decreasing to 40% of the initial value (Fig. 4g, inset).

a, Schematics of in situ PL (upper) and in operando EL (lower) mapping set-ups for measuring blue perovskite LEDs under the hyperspectral microscope. A 405 nm CW laser was used to excite the perovskite film and an external Keithley source meter was used to apply a bias voltage to the devices. b, Normalized PL-intensity map of a fresh blue perovskite LED pixel at 490 nm with no bias history. Regions both inside (below dashed line) and outside (above dashed line) the pixel are seen. c, PL peak-wavelength maps of blue perovskite LEDs operated for 0 min and 10 min. d,e, Normalized EL intensity evolution of the region in the dashed rectangle in b at 490 nm over 10 min, comparing raw (d) and processed (e) maps for 0–10 min. f, EL evolution of a given pixel at the centre of the circle in b with raw (upper) and processed (lower) spectra before and after Gaussian fitting. Estimated EL peak positions are shown in dotted lines. a.u., arbitrary units. g, The success rate of the curve fits against the average EL counts of a pixel. The inset shows the current density and luminance evolution of blue perovskite LEDs over time with a high voltage bias of 6 V. h, EL peak over time for the defective zones (inset) for five randomly selected local points (upper) and the zoomed-in statistic plot of all data points after denoising (lower). The centreline represents the median of the EL distribution.

Considering the limited photon budget and rapid degradation, the captured EL images contained a large degree of noise due to the short exposure times necessary to track the dynamic changes (Fig. 4d). With our approach, we successfully restored features of the growing defect regions in consecutive EL maps (Fig. 4e and Extended Data Fig. 6). The local EL evolution of a given pixel after denoising had much smoother transitions when compared with the raw data, resulting in a clear trend of spectral shift over time (Fig. 4f; see Extended Data Fig. 6 for EL peak maps). Moreover, as the perovskite LED degraded, lower EL counts were collected, leading to failures in finding the EL peak with Gaussian fitting at these locations in the raw data (Fig. 4g). Our method improved the success rate of fitting by over 20% when the average EL counts were low (Fig. 4g and Supplementary Fig. 5), and successfully reduced the estimation error in spatially resolved EL peak distribution of zones with distinct features (Fig. 4f; Fig. 4h, upper and lower quartiles in blue).
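
The per-pixel peak estimation and its failure mode at low counts can be illustrated with a short sketch. The text does not specify the fitting routine used, so the example below substitutes a simple linearized alternative: a parabola fitted to the logarithm of the counts (a Caruana-style fit), which is exact for a noise-free Gaussian. The function name `fit_gaussian_peak`, the `min_count` threshold and the failure criteria are assumptions for illustration only.

```python
import numpy as np

def fit_gaussian_peak(wavelengths, counts, min_count=1.0):
    """Estimate the peak wavelength of a Gaussian-like spectrum.

    Fits a parabola to log(counts); returns None when the fit "fails"
    (too few usable points, no downward curvature, or peak out of range).
    """
    wavelengths = np.asarray(wavelengths, dtype=np.float64)
    counts = np.asarray(counts, dtype=np.float64)
    mask = counts > min_count          # the logarithm needs positive counts
    if mask.sum() < 3:
        return None
    x = wavelengths[mask]
    x0 = x.mean()                      # centre the axis for numerical stability
    a, b, _ = np.polyfit(x - x0, np.log(counts[mask]), 2)
    if a >= 0:
        return None                    # curvature has the wrong sign: no peak
    peak = x0 - b / (2.0 * a)
    if not (wavelengths.min() <= peak <= wavelengths.max()):
        return None
    return peak

# Noise-free test spectrum centred at 490 nm.
wl = np.linspace(450.0, 530.0, 81)
spectrum = 100.0 * np.exp(-(wl - 490.0) ** 2 / (2.0 * 8.0 ** 2))
peak = fit_gaussian_peak(wl, spectrum)
dark_pixel = fit_gaussian_peak(wl, np.zeros_like(wl))  # no signal: fit fails
```

Counting the fraction of pixels for which such a fit returns a valid peak gives a success rate of the kind plotted against average EL counts.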

These combined improvements led to a precise tracking of the local EL evolutions to the extent that we were able to cross-correlate PL and EL shifts for the local regions over these in operando timescales (Fig. 4h). We first examined the micrometre-sized defective regions with high PL in Fig. 4b but low EL in Fig. 4e, which was probably caused by a thicker perovskite cluster with cracks through the device layout, resulting in strong PL intensity but poor electrical charge injection (and hence EL). After device operation, the PL of the defective regions was blueshifted (Fig. 4c), while the EL of the same defective regions was redshifted (Supplementary Fig. 5). This suggests that different perovskite compositions, one Br-rich (lower bandgap) and one Cl-rich (wider bandgap), were formed under bias at the defect locations. During device operation the injected carriers were funnelled into the lower-energy sites and recombined with each other to produce EL, while upon photoexcitation the generated excitons were able to readily recombine without requiring migration to find other carriers, leading to PL emission from slightly higher-energy sites than for EL. We attribute the blueshifted PL of the defective region to lateral ion migration of Cl ions towards the defective region, as the migration barrier of Cl− is considerably lower than that of Br− due to its smaller ionic radius45. This is probably caused by differences in carrier density concentrations across the lateral direction of the device, leading to more migration of Cl− ions into the central defective region. This mechanism could be considered as akin to the light-soaking behaviour of perovskites, where photo-induced ion migration of perovskite films follows the laser illumination profile from high-carrier-density to low-carrier-density regions46. The defective region acts as a sink for Cl− ions and leads to the outward lateral growth of the defective region under device operation (Fig. 4e). 
The rest of the active region surrounding the defective region lost Cl− and thus became more Br-rich, resulting in redshifts in both PL and EL over time (Fig. 4c,h). We believe that such lateral degradation pathways are also likely to occur at a much smaller scale (~100 nm), as the compositional inhomogeneities of perovskite may induce locally varying electric fields under bias. This is supported by Kelvin probe force microscopy measurements on the perovskite/polyvinylcarbazole (PVK)/ITO device stack, where the local work functions of the perovskite emissive film varied by over 150 mV across a relatively smooth region (roughness of ~2 nm) (Extended Data Fig. 7).

As unveiled by our model, lateral ion migration mediated by the defective regions could contribute to the rapid degradation of the operational blue LED. The cross-correlated PL and EL allowed us to explain the evolution of local chemical, optoelectrical and morphological disorders that severely undermine LED performance. These results enabled by our platform realize a new understanding of degradation pathways that will rationally guide new passivation approaches and the use of precursor additives to control heterogeneities and inhibit such instabilities. We expect this approach to now enable a wider, systematic understanding of degradation pathways in halide perovskite LEDs that will accelerate progress in the field.

Discussion

Future work will further improve the approach by addressing current limitations of our method. First, training the models using synthesized Gaussian noise may not capture the full range of noise found in real images. Incorporating other noise types such as Poisson and stripe noise during training can provide additional knowledge about various noise sources encountered in real-world microscopy. By considering a wider range of noise types, the denoising models could achieve improved performance and generalizability. Second, transitioning to a lightweight neural network with fewer hidden layers could accelerate the training and fine-tuning process, although it may result in a slight decrease in accuracy. This change would also lower the barrier to entry for constructing ML-assisted measuring platforms in the laboratory, making them accessible even without a graphics processing unit.
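
To make the noise types mentioned above concrete, the sketch below synthesizes Gaussian, Poisson and stripe noise on a toy image cube. It is an illustrative assumption of how such augmentation could look, not part of the published method: the function names are hypothetical, the Poisson model treats pixel values directly as expected photon counts, and the stripe model uses one random offset per column and channel.

```python
import numpy as np

def add_gaussian(cube, sigma, rng):
    """Additive, signal-independent Gaussian noise."""
    return cube + rng.normal(0.0, sigma, size=cube.shape)

def add_poisson(cube, rng):
    """Shot noise: each value is treated as an expected photon count."""
    return rng.poisson(np.clip(cube, 0.0, None)).astype(np.float64)

def add_stripes(cube, sigma, rng):
    """Stripe noise: one offset per (axis-1 index, channel), constant along axis 0."""
    offsets = rng.normal(0.0, sigma, size=(1, cube.shape[1], cube.shape[2]))
    return cube + offsets

rng = np.random.default_rng(0)
cube = rng.uniform(0.0, 50.0, size=(32, 32, 8))
gaussian_noisy = add_gaussian(cube, 5.0, rng)
poisson_noisy = add_poisson(cube, rng)
striped = add_stripes(cube, 5.0, rng)
```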

Designing downstream tasks that target specific research questions, such as predicting material properties from the denoised data, has the potential to provide valuable physical insights by interpreting the higher-level visual representation from the hidden layers or extracting the relationships the model has learned. In research questions where known physical equations can be employed, incorporating that knowledge can further enhance the accuracy and robustness of the model.

While we have conducted experiments to ensure that the restored high-resolution information remains consistent with the physical properties of materials after denoising, there is a possibility that our system may generate inaccurate information in local regions that are not well represented in the training data. In our study, we meticulously examined the denoised results against the raw data to ensure that the local spectrum is not distorted. Moving forward, it would be valuable to develop widely used benchmark datasets and validation tasks comprising a large number of materials. This would enable the evaluation of the generalizability and credibility of newly developed models for sample characterization.

We have here demonstrated the model on a range of relevant optoelectronic materials and devices including OLEDs and halide perovskite LEDs and solar cells—generating new insight into the materials and degradation behaviour—and we expect further discoveries to be enabled in these fields through this method. Furthermore, the demonstration here provides a platform for collecting and curating a wide range of datasets from different systems in a number of other fields, translating to scientific insights driven by powerful ML-driven algorithms.

Methods

Algorithm implementation details

Denoising model

A 3D image can be represented by the following additive model:

\[I = X + N\]

where \(I \in \mathbb{R}^{W\times H\times C}\) is the observed image matrix, which contains a clear image matrix \(X\) with true signals, and a noise matrix \(N\) with various noise components.

While conventional algorithms struggle to find the complex correlation between image pairs, a straightforward solution is presented by deep learning models using a nonlinear end-to-end mapping of the corrupted image and the ground-truth image,

where R is an image restoration algorithm, which reconstructs the corrupted input I to predict the ground-truth image. ϕ is a regularization term that can be determined by hand or by the algorithm (in deep learning methods, it can be the additional loss). γ is the trade-off weight of this term.

Physics-aware convolutional neural network (PA-CNN)

Our model is designed to actively fit the emission spectrum of an object instead of purely relying on pixel-wise mean square error, thus avoiding misrepresentation of the emission spectrum of a real-world object. To prevent the model from losing cross-channel information during training, we introduce the spectral gradient regularization \(\phi_{\mathrm{SPEC}}\) as a loss component in PA-CNN:

where \(\theta_U = \{W_{1:L};\ b_{1:L}\}\) represents all the trainable weights and biases of an \(L\)-layer U-Net CNN \(U\).

The PA-CNN architecture is shown in Extended Data Fig. 1. It consists of Conv2D/batch normalization/LeakyReLU layers, which are adopted from a common U-Net architecture that is widely used for image processing tasks such as image classification, denoising and super-resolution. Several residual links connect the layers at the two ends of the network, improving the model training stability and mitigating vanishing gradients.

The optimization target of this model is to find the optimal parameters \({\hat{\theta }}_{U}\) for the CNN:

where α is the trade-off weight between the mean square error and the spectral gradient loss \({L}_{\mathrm{SPEC}}\).
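As a rough illustration of how such a combined objective behaves, the following minimal Python sketch adds a spectral term to the mean square error. The exact form of the spectral gradient loss is not reproduced in this text, so the sketch assumes it is the mean squared difference between the channel-wise finite differences of the prediction and of the target. The function names (`mse`, `spectral_gradient`, `combined_loss`) are hypothetical, and plain lists are used only to keep the example dependency-free.

```python
def mse(pred, target):
    """Mean squared error over two equally sized flat sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def spectral_gradient(spectrum):
    """Finite difference between neighbouring wavelength channels."""
    return [b - a for a, b in zip(spectrum, spectrum[1:])]


def combined_loss(pred_pixels, target_pixels, alpha=0.1):
    """MSE plus alpha times an ASSUMED spectral-gradient term.

    Each argument is a list of per-pixel spectra (lists of C channel values).
    """
    flat_pred = [v for s in pred_pixels for v in s]
    flat_target = [v for s in target_pixels for v in s]
    l_mse = mse(flat_pred, flat_target)

    # Assumption: the spectral term compares channel-wise finite differences.
    grad_pred = [g for s in pred_pixels for g in spectral_gradient(s)]
    grad_target = [g for s in target_pixels for g in spectral_gradient(s)]
    l_spec = mse(grad_pred, grad_target)
    return l_mse + alpha * l_spec


# A spurious spike in one channel is penalized by both terms, whereas a
# uniform intensity offset leaves the assumed spectral term at zero.
spike = combined_loss([[0.0, 1.0, 0.0]], [[0.0, 0.0, 0.0]])
offset = combined_loss([[1.0, 1.0, 1.0]], [[0.0, 0.0, 0.0]])
```

Under this assumption, the spectral term penalizes distortions of the spectral shape specifically, which is the behaviour the regularization is meant to encourage.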

Noise-level-aware image restoration network (PA-Net)

To improve the generalization performance of our model, the network is designed to consist of two consecutive parts: the detector and the CNN (Extended Data Fig. 1). The detector estimates the noise level σ and feeds this information to the CNN, which incorporates the noise level and performs noise-level-specific denoising. Note that, to prevent the noise-level input from making an overwhelming contribution to the network, all noise levels are normalized by the maximum noise level before being fed into the network.

The detector estimates the noise level on the basis of the input image:

The CNN is effectively a modified version of PA-CNN. Apart from processing the image \(I\in {{\mathbb{R}}}^{W\times H\times C}\), the model now utilizes the predicted output σ′ from the detector D as an extra channel of information. Thus, the input matrix becomes

where J is an all-ones matrix of size W × H × 1 and \(X^{\frown }Y\) represents the concatenation of matrix X and matrix Y on the channel dimension. Therefore, the output image is obtained from

The padding strategy is based on the following rationale. The output feature map of the first convolutional layer is computed as follows:

where \({O}_{xyk}\) is a single pixel of the output matrix, B is the bias matrix, s is the filter kernel size, k is the index of convolution filters and \({f}_{{ijc}}^{\widetilde{\,k}}\) is the (i, j)th element of the reversed filter, which works on the cth input channel (that is, \({f}_{{ijc}}^{\widetilde{\,k}}={f}_{\left(s-i\right)\left(s-j\right)c}^{\,k}\)).

Therefore, the padding layer provides an additional activation to the output feature map. Given the LeakyReLU activation function used in the network,

the additional activation can provide a more directional activation strategy for the filters. The filter can either be more activated or suppressed under different noise levels.
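The effect of the extra noise-level channel can be illustrated with a small Python sketch. It appends a constant channel σ′J to a one-channel toy image and shows that, for a single filter, the constant channel shifts the pre-activation by σ′ times the sum of that filter's weights on the extra channel. The helper names, the toy kernels and the LeakyReLU slope of 0.2 are assumptions made for illustration. The sketch uses cross-correlation rather than the reversed filter, which gives the same contribution for a constant channel.

```python
def leaky_relu(x, slope=0.2):
    """LeakyReLU; the negative slope of 0.2 is an assumed value."""
    return x if x >= 0 else slope * x


def response(channels, kernels, bias, x, y):
    """Pre-activation of one filter at position (x, y), no padding.

    channels: list of 2D lists, one per input channel
    kernels:  list of s x s 2D lists, one per input channel
    """
    s = len(kernels[0])
    total = bias
    for chan, kern in zip(channels, kernels):
        for i in range(s):
            for j in range(s):
                total += kern[i][j] * chan[x + i][y + j]
    return total


def with_noise_channel(channels, sigma_norm):
    """Append the constant channel sigma' * J to a list of 2D channels."""
    h, w = len(channels[0]), len(channels[0][0])
    return channels + [[[sigma_norm] * w for _ in range(h)]]


image = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]]  # C = 1
k_image = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
k_sigma = [[-1.0] * 3 for _ in range(3)]   # toy weights on the extra channel
sigma_norm = 20 / 50                       # noise level normalized by the maximum

base = response(image, [k_image], -4.0, 0, 0)
shifted = response(with_noise_channel(image, sigma_norm),
                   [k_image, k_sigma], -4.0, 0, 0)
# The shift equals sigma_norm * sum(k_sigma); with these toy weights it
# pushes the filter into the suppressed (negative) branch of LeakyReLU.
activations = (leaky_relu(base), leaky_relu(shifted))
```

With positive weights on the extra channel the same filter would instead become more strongly activated as the noise level rises, which is one way to read the "directional" activation described above.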

The optimization target of PA-Net is to solve the following equation:

Our training process is demonstrated below. The hyperparameters in our experiments are α = 0.1, β = 0.1 and σ = {5, 10, 20, 50}. An adaptive learning rate method, the Adam optimizer, with parameters lr = 0.0002, β1 = 0.5 and β2 = 0.999, is used to train our network.
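For reference, a single Adam update with the hyperparameters quoted above can be sketched in plain Python. This is the standard Adam rule with bias correction, not code from the authors' repository. The function name `adam_step` and the value `eps = 1e-8` are assumptions.

```python
import math


def adam_step(theta, grad, m, v, t,
              lr=0.0002, beta1=0.5, beta2=0.999, eps=1e-8):
    """One Adam update on a flat list of parameters.

    t is the 1-based step counter; m and v are the running first and
    second moment estimates (lists of the same length as theta).
    """
    m = [beta1 * mi + (1 - beta1) * g for mi, g in zip(m, grad)]
    v = [beta2 * vi + (1 - beta2) * g * g for vi, g in zip(v, grad)]
    # Bias-corrected moment estimates
    m_hat = [mi / (1 - beta1 ** t) for mi in m]
    v_hat = [vi / (1 - beta2 ** t) for vi in v]
    theta = [p - lr * mh / (math.sqrt(vh) + eps)
             for p, mh, vh in zip(theta, m_hat, v_hat)]
    return theta, m, v


# On the first step the update has magnitude close to lr, whatever the
# size of the gradient.
theta, m, v = adam_step([1.0], [3.0], [0.0], [0.0], t=1)
```

A β1 of 0.5 gives the first-moment estimate a shorter memory than the more common default of 0.9, so the update direction follows recent gradients more closely.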

The pseudocode of the described algorithm is as follows.

Algorithm

Framework of PA-Net Training

Require: α, weight of spectral loss \({L}_{\mathrm{SPEC}}^{U}\). β, weight of detector loss \({L}_{\mathrm{MSE}}^{D}\). m, the batch size. Σ = {σ1, σ2, …}, the collection of noise levels being trained. lr, β1, β2, training parameters for the Adam optimizer.

Require: θ0, initial weights of the network.

1. \(t=0\)
2. while training has not completed do
3. \(t\leftarrow t+1\)
4. Sample \(\{X^{\left(i\right)}\}_{i=1}^{m}\) from training data
5. Generate \(\{N^{\left(i\right)}\}_{i=1}^{m}\sim {\mathscr{N}}\left(0,{\sigma }_{\mathrm{rand}}\right)\), where \({\sigma }_{\mathrm{rand}}\) is sampled i.i.d. from Σ
6. \(\{I^{\left(i\right)}\}_{i=1}^{m}=\{X^{\left(i\right)}\}_{i=1}^{m}+\{N^{\left(i\right)}\}_{i=1}^{m}\)
7. \({g}_{t}\leftarrow \nabla \left({L}_{\mathrm{MSE}}^{U}\left({\theta }_{t-1}\right)+\beta {L}_{\mathrm{MSE}}^{D}\left({\theta }_{t-1}\right)+\alpha {L}_{\mathrm{SPEC}}^{U}\left({\theta }_{t-1}\right)\right)\)
8. \({\theta }_{t}\leftarrow {\theta }_{t-1}-\mathrm{ADAM}\left(\mathrm{lr},{\beta }_{1},{\beta }_{2},{g}_{t}\right)\)
9. Get \(\{{X}_{\mathrm{valid}}^{\left(i\right)}\}_{i=1}^{\left|\Sigma \right|}\leftarrow\) validation data
10. for i = 1; i ≤ |Σ| do
11. \({N}_{\mathrm{valid}}^{\left(i\right)}\sim {\mathscr{N}}\left(0,{\sigma }_{i}\right)\), where \({\sigma }_{i}\leftarrow {\Sigma }^{\left(i\right)}\)
12. end for
13. \(\{{I}_{\mathrm{valid}}^{\left(i\right)}\}_{i=1}^{\left|\Sigma \right|}=\{{X}_{\mathrm{valid}}^{\left(i\right)}\}_{i=1}^{\left|\Sigma \right|}+\{{N}_{\mathrm{valid}}^{\left(i\right)}\}_{i=1}^{\left|\Sigma \right|}\)
14. \({L}_{\mathrm{valid}}\left({\theta }_{t}\right)\leftarrow {L}_{\mathrm{MSE}}^{U}\left({\theta }_{t}\right)\)
15. end while
16. \(\hat{\theta }\leftarrow \arg {\min }_{{\theta }_{t}}{L}_{\mathrm{valid}}\left({\theta }_{t}\right)\)
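The control flow of this training procedure can be sketched in a few lines of Python. The sketch is a toy under strong simplifications, not the authors' implementation. The network is replaced by a single gain parameter θ with x̂ = θ·I, Adam is replaced by a plain gradient step, the batch size is 1, the same clean validation signal is reused for every noise level, and only the denoiser MSE term of the loss is kept. All function names are hypothetical.

```python
import random

SIGMAS = [5, 10, 20, 50]   # the collection of noise levels, on a 0-255 scale
SCALE = 2.0 / 255.0        # converts sigma to data normalized to [-1, 1]


def add_noise(clean, sigma, rng):
    """I = X + N, with N drawn from a zero-mean Gaussian of s.d. sigma."""
    return [x + rng.gauss(0.0, sigma * SCALE) for x in clean]


def mse(pred, target):
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def train(train_data, valid_data, steps=300, lr=0.05, seed=0):
    """Toy loop; returns the parameter with the lowest validation loss."""
    rng = random.Random(seed)
    theta = 0.0
    best_theta, best_loss = theta, float("inf")
    for _ in range(steps):
        clean = rng.choice(train_data)                      # step 4
        noisy = add_noise(clean, rng.choice(SIGMAS), rng)   # steps 5-6
        # Step 7: analytic gradient of the MSE with respect to theta
        grad = sum(2 * (theta * i - x) * i
                   for i, x in zip(noisy, clean)) / len(clean)
        theta -= lr * grad                                  # step 8 (plain SGD)
        # Steps 9-14: validate once at every noise level in SIGMAS
        val = 0.0
        for sigma in SIGMAS:
            noisy_v = add_noise(valid_data, sigma, rng)
            val += mse([theta * i for i in noisy_v], valid_data)
        val /= len(SIGMAS)
        if val < best_loss:                                 # step 16
            best_theta, best_loss = theta, val
    return best_theta, best_loss


train_set = [[(k % 10) / 5.0 - 1.0 for k in range(50)]]
valid_set = [((k + 3) % 10) / 5.0 - 1.0 for k in range(50)]
best_theta, best_loss = train(train_set, valid_set)
```

Because the noise level is redrawn at every step, the single parameter settles on a compromise across all levels in Σ, which is the multi-noise-level behaviour the full model is trained for.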

Compared dataset

For non-blind Gaussian denoising, we used a widely adopted, publicly available airborne hyperspectral image captured over the Washington DC Mall in August 1995 using the HYDICE sensor29. The DC Mall dataset consists of a single massive remote-sensing image with dimensions of 1,280 × 303 × 191 pixels (W × H × C). The image was normalized to [−1, 1] and corrupted with additive Gaussian noise of σ = 5, 10, 20, 50 or 100 (with respect to a data range of 0–255), leading to one noisy image for each noise level. An image cube covering the wavelength range from 400 to 530 nm (with a total of 32 wavelength channels) was segmented along the width dimension to prepare the training, validation and testing sets, which occupied 50%, 25% and 25% of the whole image, respectively.
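Two bookkeeping details of this preparation can be sketched in Python: rescaling a noise s.d. quoted on a 0–255 range to data normalized to [−1, 1], and cutting the width axis into 50%, 25% and 25% segments. The function names are hypothetical, and the ordering of the three segments along the width (training first, testing last) is an assumption, as the text does not state it.

```python
def sigma_on_normalized_scale(sigma_255, data_min=-1.0, data_max=1.0):
    """Convert a noise s.d. quoted on a 0-255 range to the normalized range."""
    return sigma_255 * (data_max - data_min) / 255.0


def split_along_width(width, fractions=(0.5, 0.25, 0.25)):
    """Return (start, stop) column ranges for training, validation, testing.

    The last segment absorbs any rounding remainder so that the ranges
    always cover the full width.
    """
    bounds, start = [], 0
    for k, frac in enumerate(fractions):
        if k == len(fractions) - 1:
            stop = width
        else:
            stop = start + int(round(width * frac))
        bounds.append((start, stop))
        start = stop
    return bounds


train_cols, valid_cols, test_cols = split_along_width(1280)
sigma_20 = sigma_on_normalized_scale(20)   # s.d. to apply to data in [-1, 1]
```

Splitting along a single spatial axis keeps the three sets spatially disjoint, so the testing region is never seen during training.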

For blind denoising of real-world multispectral microscopy data, we collected microscale PL images of photostable organic film mCBP-doped 4CzIPN with various camera integration times (Supplementary Fig. 1). The acquired image cubes had dimensions of 1,024 × 1,024 × 176 pixels and covered a wavelength range from 450 to 800 nm. The image data were calibrated to compensate for sensitivity differences resulting from changing the filter at 600 nm. To compare different methods without exceeding computational resources, we used a subsection of the image cube (400 × 400 × 176 pixels) and normalized the data to [−1, 1]. We used one noisy image with a camera exposure of 0.03 s per wavelength channel, along with one ground-truth image captured with a 3 s exposure.

Compared methods

HSSNR, BM3D, BM4D, LRMR, LLRT

We input the noisy image with data renormalized to [0, 1], except for BM3D23, which required renormalization to [0, 255]. HSSNR47 was used as provided, without requiring additional inputs. For BM3D and LLRT24, we provided the noise s.d. as one of the additional inputs. For BM4D31, we set the algorithm to the Gaussian distribution mode with an unknown noise s.d., while leaving other parameters at their default values. For LRMR48, all parameters were set to their recommended values.

Deep HS-Prior, HSI-DeNet

The algorithms were used for single-noise-level non-blind denoising evaluation. For HS-Prior32, the image data were renormalized to [0, 1], and we followed the procedure outlined in the published GitHub notebook by using the testing dataset for both training and testing. We provided the noise s.d. as one of the required inputs, and a maximum of 1,400 iterations was used. For HSI-DeNet33, we used the training, validation and testing datasets accordingly, with a default patch size of 40, a training epoch size of 100 and a batch size of 128.

CBDNet, QRNN3D

The algorithms were used for multi-noise-level non-blind denoising evaluation. To compare the capability of multi-noise-level learning with our method, the data loader selected random noisy–clear image pairs with various noise s.d. values of 10, 20 or 50 to create a training batch. For CBDNet49, we used a patch size of 512, a batch size of 10 and a total epoch size of 10,000. For training of QRNN3D34, we used a patch size of 64, a total epoch size of 500 and 100 steps per epoch with a batch size of 16. For validation and testing, we slightly reduced the height and width of the input image by 7 pixels to avoid 3D convolution errors.

Noise2Void, Noise2Fast, Self2Self

The algorithms were used for blind denoising evaluation. We trained the Noise2Void model19 following their GitHub 3D example notebook, using a default patch size of 64, an epoch size of 100 and a batch size of 4. For Noise2Fast20, we utilized their multi-channel ‘N2F_4D’ method without any further modifications. For Self2Self21, the input image data were renormalized to [0, 1], and we adhered to a default learning rate of 0.0001 and default settings of 150,000 iterations.

PA-CNN, PA-Net

We used a patch size of 64, a batch size of 32 and a total of 20,000 steps during training. For non-blind denoising, we used the as-prepared training, validation and testing datasets accordingly. For blind denoising, we prepared the training data by corrupting the original noisy image with additive Gaussian noise of σ = 5, 10, 20 and 50 (one noisy image for each noise level). For the best performance, we loaded the model pretrained on the previous non-blind denoising task and fine-tuned it on the current dataset. After training, the full noisy image was fed into the model to produce the denoised results. Two real images were therefore used in blind denoising: one from the publicly available remote-sensing dataset and one from the current input.

When denoising the full-sized image, if the channel size of the noisy image C was greater than the channel size of the model n, we segmented the full image into a set of images with n channels (0–n, 1–n + 1, …, (C − n)–C) and denoised them individually. The denoised images were stacked back into C channels and the overlapped channels were averaged to yield the final results.
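The channel-windowing step can be sketched as follows in Python. Each channel is represented as a flat list of pixel values, and `denoise_window` is a hypothetical stand-in for the trained model, taking n channels and returning n denoised channels. The function name `denoise_full_cube` is likewise an assumption.

```python
def denoise_full_cube(channels, n, denoise_window):
    """Denoise a C-channel cube with a model that accepts n channels.

    Windows start at channel 0, 1, ..., C - n; each window is denoised
    separately and overlapping channels are averaged.
    """
    c = len(channels)
    if c < n:
        raise ValueError("the cube has fewer channels than the model expects")
    n_pix = len(channels[0])
    sums = [[0.0] * n_pix for _ in range(c)]
    counts = [0] * c
    for start in range(c - n + 1):
        out = denoise_window(channels[start:start + n])
        for offset, chan in enumerate(out):
            k = start + offset
            counts[k] += 1
            for p, value in enumerate(chan):
                sums[k][p] += value
    return [[s / counts[k] for s in sums[k]] for k in range(c)]


# With an identity "model", the averaged output reproduces the input.
cube = [[float(k), float(k) + 0.5] for k in range(5)]   # C = 5, two pixels
restored = denoise_full_cube(cube, 3, lambda window: [list(ch) for ch in window])
```

Channels near the two ends of the spectrum fall in fewer windows than those in the middle, so they are averaged over fewer predictions.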

The optimal patch size of the model may vary across different images, and choosing the correct patch size that captures relevant local information while also allowing the model to learn larger-scale features is desirable for achieving the best results (Supplementary Table 3).

Blind algorithms that are impractical to use

Noise2Noise50 requires pairs of noisy images of the same sample, assuming that the noise in the two images is independent and identically distributed. In practice, it is impossible to image sensitive, easily degraded samples twice under the same conditions, for two reasons: (1) the optical and morphological properties of the measured material change during the measurement, resulting in two completely different images, and (2) maintaining identical testing conditions is challenging (for example, the stage may move slightly over time). Noisier2Noise51 requires prior knowledge of the noise σ to prepare the noisier/noisy training data, making it non-blind and unsuitable for practical use where the noise level of the image is unknown.

Sample preparation

Materials

N,N-Dimethylformamide (DMF, anhydrous, 99.8%), dimethylsulfoxide (DMSO, anhydrous, 99.9%), isopropanol (anhydrous, 99.9%), chlorobenzene (99.99%), hexane (anhydrous, 95%), 1-octadecene (≥99.0%), oleic acid (≥99%), oleylamine (≥98%), octanoic acid (≥99%), octylamine (≥99.5%), caesium chloride (CsCl, ≥99.999%) and caesium bromide (CsBr, 99.999%, perovskite grade) were purchased from Sigma-Aldrich. Formamidinium iodide (FAI, 99.9%), methylammonium iodide (MAI, 99.9%) and methylammonium bromide (MABr, 99.9%) were purchased from Greatcell Solar Materials. Lead iodide (PbI2, 98%) and lead bromide (PbBr2, 98%) were purchased from TCI. Tin(IV) oxide (SnO2, 15% in water colloidal dispersion) and decon 90 were purchased from Thermo Fisher Scientific. All chemicals were used without further purification.

Organic film fabrication

The substrates were cleaned with ultrapurified water and acetone for 10 min each by sonication, and then dry-cleaned for 15 min by exposure to a UV–ozone ambient. Next, a 50-nm-thick film of 20 wt% 4CzIPN doped with mCBP as an emitting layer was spin-coated from a chlorobenzene solution (15 mg ml−1) at a spin speed of 2,000 r.p.m. and annealed at 100 °C for 10 min in a nitrogen atmosphere.

Perovskite NPL film fabrication

Sky-blue CsPbBr3 perovskite NPLs were synthesized via an injection-based method described by Bohn and co-workers37. The Cs oleate precursor was prepared by dissolving 0.1 M Cs2CO3 in 10 ml oleic acid. PbBr2 complex precursor was prepared by dissolving 0.1 M PbBr2 in a mixed solvent of 10 ml toluene, 100 μl oleylamine and 100 μl oleic acid. Both precursors were stirred at 100 °C until all the solids were dissolved, then cooled to room temperature. Cs oleate precursor (50 μl) was added to 1.2 ml PbBr2-complex solution under vigorous stirring at room temperature, and 2 ml acetone was quickly added afterwards. The solution was shaken using a vortex mixer for 1 min and then centrifuged at 4,000 r.p.m. for 4 min. The supernatant was discarded and the remaining NPLs were redispersed in 4 ml hexane. The NPL solution was deposited on top of glass substrates at 2,000 r.p.m. for 30 s without thermal treatment.

Triple-cation perovskite half device fabrication

The perovskite solar cell devices based on a SnO2 electron transport layer were fabricated according to previously reported procedures with modifications52. The ITO substrates were cleaned with deionized water and decon 90, followed by a rinse with isopropanol. The substrates were dried and subsequently treated with oxygen plasma for 5 min. The SnO2 nanoparticles were diluted in deionized water and then spin-coated on the ITO substrates at 3,000 r.p.m. for 30 s. The substrates were annealed at 180 °C for 30 min. The triple-cation perovskite precursor Cs0.05MA0.16FA0.79Pb(I0.83Br0.17)3 was prepared using previously reported recipes39. PbI2 (1.1 M), PbBr2 (0.22 M), FAI (1 M) and MABr (0.2 M) were dissolved in 1 ml of an anhydrous DMF:DMSO (4:1 v/v) mixed solvent. An additional 5 vol.% of a 1.5 M CsI stock solution was introduced into the perovskite solution, which was then stirred at 100 °C for 30 min. The perovskite solution was cooled to room temperature and spin-coated onto substrates using a two-step spinning process: 1,000 r.p.m. for 10 s, followed by 6,000 r.p.m. for 35 s. Chlorobenzene was dropped on top of the substrates 10 s before the programme ended. The perovskite films were annealed at 100 °C for 40 min in a nitrogen glovebox. The samples were left in ambient conditions for a week, promoting the merging of grains into larger microsized grains.

Perovskite nanosheet film fabrication

1-Octadecene (10 ml), lead (II) bromide (0.013 g), oleic acid (250 µl), oleylamine (250 µl), octanoic acid (500 µl) and octylamine (500 µl) were mixed and dissolved in a 20 ml vial for 20 min at 115 °C. After complete solubilization of the PbBr2 salt, the temperature was increased to 150 °C and 1 ml of caesium oleate solution (0.032 g Cs2CO3 dissolved in 10 ml oleic acid at 120 °C) was swiftly injected. After 5 min, the reaction mixture was slowly cooled to room temperature using a water bath. To collect the nanosheets, 10 ml of hexane was added to the crude solution and then the mixture was centrifuged at 60 g for 5 min. After centrifugation, the supernatant was discarded and the nanosheets were redispersed in hexane.

Wide-gap perovskite film fabrication

The ITO substrates were transferred to a hybrid evaporator (CreaPhys) in a glovebox. The substrate stage was kept at a temperature around 18 °C by in-house cooling water and the chamber wall at −20 °C by a separate cooling system. To achieve the wide-bandgap perovskite, four-source evaporation was carried out with FAI, CsBr, PbI2 and PbBr2. The deposition rates of the precursors were set to 1 Å s−1 for FAI, 0.6 Å s−1 for PbI2, 0.1 Å s−1 for CsBr and 0.2 Å s−1 for PbBr2. Note that the tooling factors of PbI2 and PbBr2 were fine-tuned to 93.6% and 115.2%, respectively, to obtain the desired bandgap. Fresh FAI, PbI2 and PbBr2 powders were used for each evaporation. The chamber pressure was between 1 × 10−6 and 4 × 10−6 mbar during deposition. The perovskite film was annealed at 135 °C for 30 min in a nitrogen-filled glovebox.

Perovskite blue LED fabrication

The perovskite precursor was prepared by dissolving 44 mg PbBr2, 19 mg CsCl, 2.7 mg CsBr and 20 mg phenethylammonium bromide in 1 ml DMSO and stirring for 2 h at room temperature before use. Prepatterned ITO substrates (10–15 Ω sq−1, Kintec) were cleaned in an ultrasonic bath with detergent, water, acetone and isopropanol for 5 min each. The ITO substrates were then dried and treated by oxygen plasma etching (forward power 200 W, reflected power 0 W) for 10 min. Clean ITO substrates were transferred into a nitrogen-filled glovebox for film deposition. PVK (6 mg ml−1 in chlorobenzene) was spin-coated on the ITO substrate at 6,000 r.p.m. and then annealed at 110 °C for 10 min. Subsequently, the perovskite precursor solution was spin-coated at 6,000 r.p.m. for 90 s and then annealed at 80 °C for 5 min. Finally, 35 nm of 1,3,5-tris(N-phenylbenzimidazole-2-yl)benzene, 1 nm of LiF and 100 nm of Al were sequentially evaporated through a shadow mask.

Characterization methods

LED characterizations

A Keithley 2400 source-meter unit was used for the luminance–current density–voltage measurement. All LEDs were measured from zero bias to forward bias at a rate of 0.05 V s−1. The luminance of the LEDs was calculated on the basis of the EL spectrum and the spectral response of the silicon photodiode. External quantum efficiency was then calculated assuming a Lambertian profile. The detailed protocol has been described by Anaya and co-workers53.

Hyperspectral microscopy measurements

The hyperspectral microscopy experiment was conducted following the previously published protocol54. PL maps were collected using a wide-field hyperspectral microscope (IMA VIS™, Photon etc.) with a low-noise silicon CCD (charge-coupled device) camera and a 405 nm CW laser. Images at various wavelengths were gathered using a diffraction grating and then combined to construct a 3D data cube. Within this data cube, the x and y axes functioned as navigation coordinates, while the c axis represented the wavelength dimension. Before each measurement, background signals were recorded and subsequently subtracted from the acquired signals.

For the in operando LED measurements, encapsulated perovskite LEDs were operated with a Keithley 2450 source-meter unit. The drive voltage was held at 6 V over 10 min, and the hyperspectral EL scans were continuously taken with a 1,024 × 1,024 resolution and a 20×/100× objective lens through the glass side of the LEDs. The area covered by each pixel was 330 × 330 nm. Each pixel contained an emission spectrum from 480 to 500 nm with a step size of 2 nm and a camera exposure time of 0.2 s per wavelength step. Gaussian fitting was performed on the local EL curve of each pixel, and the peak position was found at the maximum of the fitted EL spectra. Hyperspectral PL images were taken before and after device operation with a camera exposure time of 1 s per wavelength step.
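The per-pixel peak extraction can be illustrated with a dependency-free Python sketch. Instead of an iterative nonlinear fit, it uses a log-parabola least-squares fit, which recovers the centre of a noise-free Gaussian exactly. This is a swapped-in technique chosen for brevity, not necessarily the fitting routine used in this work. The function name `gaussian_peak` and the synthetic spectrum are assumptions.

```python
import math


def gaussian_peak(wavelengths, intensities):
    """Estimate the Gaussian peak position by fitting ln(y) = a + b*u + c*u^2,
    where u is the wavelength measured from the mean wavelength."""
    pts = [(x, math.log(y)) for x, y in zip(wavelengths, intensities) if y > 0]
    if len(pts) < 3:
        raise ValueError("need at least three positive samples")
    x0 = sum(x for x, _ in pts) / len(pts)   # centre x for better conditioning
    # Normal equations of the quadratic least-squares problem
    s = [sum((x - x0) ** k for x, _ in pts) for k in range(5)]
    t = [sum(ly * (x - x0) ** k for x, ly in pts) for k in range(3)]
    m = [[s[0], s[1], s[2], t[0]],
         [s[1], s[2], s[3], t[1]],
         [s[2], s[3], s[4], t[2]]]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    b = m[1][3] / m[1][1]
    c = m[2][3] / m[2][2]
    if c >= 0:
        raise ValueError("fitted curve has no maximum")
    return x0 - b / (2 * c)   # vertex of the parabola


# Synthetic spectrum on the 480-500 nm grid with a 2 nm step
grid = [480 + 2 * k for k in range(11)]
spectrum = [100 * math.exp(-((x - 489.3) ** 2) / (2 * 6.0 ** 2)) for x in grid]
peak_nm = gaussian_peak(grid, spectrum)
```

An unweighted logarithmic fit gives disproportionate weight to low-intensity samples, so for noisy spectra a weighted or iterative nonlinear fit would be the more robust choice.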

Kelvin probe force microscopy measurements

Atomic force microscopy (to obtain the morphology) and Kelvin probe force microscopy were performed on a wafer-scale Bruker Dimension Icon atomic force microscope. The atomic force microscopy and Kelvin probe force microscopy maps were acquired using 256 × 256 pixels and frequency-modulated Kelvin probe force microscopy. Pt–Ir-coated Si probes (model SCM-PIT) with an average resonant frequency of 75 kHz and a spring constant of 2.8 N m−1 were implemented for this measurement. All data were acquired in the dark and in ambient atmospheric conditions.

Data availability

The data that support the findings of this study are available for download at the University of Cambridge Repository https://doi.org/10.17863/CAM.101509 (ref. 55). Source data are provided with this paper.

Code availability

The Python code that supports the findings of this study is publicly available at https://github.com/KangyuJi/PA-Net (ref. 56). The specific neural network architecture used is described in Methods and Supplementary Information, together with the self-learning training algorithm in pseudocode.

References

Ha, T. & Tinnefeld, P. Photophysics of fluorescent probes for single-molecule biophysics and super-resolution imaging. Annu. Rev. Phys. Chem. 63, 595–617 (2012).

Park, B. & Seok, S. I. Intrinsic instability of inorganic–organic hybrid halide perovskite materials. Adv. Mater. 31, 1805337 (2019).

Barker, A. J. et al. Defect-assisted photoinduced halide segregation in mixed-halide perovskite thin films. ACS Energy Lett. 2, 1416–1424 (2017).

Woo, S.-J., Kim, J. S. & Lee, T.-W. Characterization of stability and challenges to improve lifetime in perovskite LEDs. Nat. Photon. 15, 630–634 (2021).

Scherf, N. & Huisken, J. The smart and gentle microscope. Nat. Biotechnol. 33, 815–818 (2015).

Skylaki, S., Hilsenbeck, O. & Schroeder, T. Challenges in long-term imaging and quantification of single-cell dynamics. Nat. Biotechnol. 34, 1137–1144 (2016).

Altmann, Y. et al. Quantum-inspired computational imaging. Science 361, eaat2298 (2018).

Chen, D. et al. Automating crystal-structure phase mapping by combining deep learning with constraint reasoning. Nat. Mach. Intell. 3, 812–822 (2021).

Zhu, B., Liu, J. Z., Cauley, S. F., Rosen, B. R. & Rosen, M. S. Image reconstruction by domain-transform manifold learning. Nature 555, 487–492 (2018).

Wang, H. et al. Deep learning enables cross-modality super-resolution in fluorescence microscopy. Nat. Methods 16, 103–110 (2019).

Hoffman, D. P., Slavitt, I. & Fitzpatrick, C. A. The promise and peril of deep learning in microscopy. Nat. Methods 18, 131–132 (2021).

Jena, A. K., Kulkarni, A. & Miyasaka, T. Halide perovskite photovoltaics: background, status, and future prospects. Chem. Rev. 119, 3036–3103 (2019).

Fakharuddin, A. et al. Perovskite light-emitting diodes. Nat. Electron. 5, 203–216 (2022).

Weigert, M. et al. Content-aware image restoration: pushing the limits of fluorescence microscopy. Nat. Methods 15, 1090–1097 (2018).

Belthangady, C. & Royer, L. A. Applications, promises, and pitfalls of deep learning for fluorescence image reconstruction. Nat. Methods 16, 1215–1225 (2019).

Park, Y. & Kellis, M. Deep learning for regulatory genomics. Nat. Biotechnol. 33, 825–826 (2015).

Begoli, E., Bhattacharya, T. & Kusnezov, D. The need for uncertainty quantification in machine-assisted medical decision making. Nat. Mach. Intell. 1, 20–23 (2019).

Tian, C. et al. Deep learning on image denoising: an overview. Neural Netw. 131, 251–275 (2020).

Krull, A., Buchholz, T.-O. & Jug, F. Noise2Void—learning denoising from single noisy images. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (eds Davis, L. et al.) 2129–2137 (IEEE, 2019).

Lequyer, J., Philip, R., Sharma, A., Hsu, W.-H. & Pelletier, L. A fast blind zero-shot denoiser. Nat. Mach. Intell. 4, 953–963 (2022).

Quan, Y., Chen, M., Pang, T. & Ji, H. Self2Self with dropout: learning self-supervised denoising from single image. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (eds Boult, T. et al.) 1887–1895 (IEEE, 2020).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015 Vol. 9351 (eds Navab, N. et al.) 234–241 (Springer, 2015).

Dabov, K., Foi, A., Katkovnik, V. & Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 16, 2080–2095 (2007).

Chang, Y., Yan, L. & Zhong, S. Hyper-Laplacian regularized unidirectional low-rank tensor recovery for multispectral image denoising. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (eds Chellappa, R. et al.) 4260–4268 (IEEE, 2017).

Zhang, K., Zuo, W. & Zhang, L. FFDNet: toward a fast and flexible solution for CNN-based image denoising. IEEE Trans. Image Process. 27, 4608–4622 (2018).

Brooks, T. et al. Unprocessing images for learned raw denoising. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (eds Chellappa, R. et al.) 11036–11045 (IEEE, 2019).

Zhang, K., Zuo, W. & Zhang, L. Learning a single convolutional super-resolution network for multiple degradations. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (eds Brown, M. et al.) 3262–3271 (IEEE, 2018).

Zhang, K., Zuo, W. & Zhang, L. Deep plug-and-play super-resolution for arbitrary blur kernels. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (eds Davis, L. et al.) 1671–1681 (IEEE, 2019).

Mitchell, P. A. Hyperspectral digital imagery collection experiment (HYDICE). Proc. SPIE 2587, 70–95 (1995).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612 (2004).

Maggioni, M., Katkovnik, V., Egiazarian, K. & Foi, A. Nonlocal transform-domain filter for volumetric data denoising and reconstruction. IEEE Trans. Image Process. 22, 119–133 (2013).

Sidorov, O. & Hardeberg, J. Y. Deep hyperspectral prior: single-image denoising, inpainting, super-resolution. In Proc. IEEE/CVF International Conference on Computer Vision Workshop (ICCVW) (eds Sato, Y. & Yi, J.) 3844–3851 (IEEE, 2019).

Chang, Y., Yan, L., Fang, H., Zhong, S. & Liao, W. HSI-DeNet: hyperspectral image restoration via convolutional neural network. IEEE Trans. Geosci. Remote Sens. 57, 667–682 (2019).

Wei, K., Fu, Y. & Huang, H. 3-D quasi-recurrent neural network for hyperspectral image denoising. IEEE Trans. Neural Netw. Learn. Syst. 32, 363–375 (2021).

Burgess, A. E. The Rose model, revisited. J. Opt. Soc. Am. A 16, 633–646 (1999).

Burgess, A. E. & Ghandeharian, H. Visual signal detection. II. Signal-location identification. J. Opt. Soc. Am. A 1, 906–910 (1984).

Bohn, B. J. et al. Boosting tunable blue luminescence of halide perovskite nanoplatelets through postsynthetic surface trap repair. Nano Lett. 18, 5231–5238 (2018).

Kumar, S. et al. Efficient blue electroluminescence using quantum-confined two-dimensional perovskites. ACS Nano 10, 9720–9729 (2016).

Saliba, M. et al. Cesium-containing triple cation perovskite solar cells: improved stability, reproducibility and high efficiency. Energy Env. Sci. 9, 1989–1997 (2016).

Du, T. et al. Overcoming nanoscale inhomogeneities in thin-film perovskites via exceptional post-annealing grain growth for enhanced photodetection. Nano Lett. 22, 979–988 (2022).

Shamsi, J. et al. Colloidal synthesis of quantum confined single crystal CsPbBr3 nanosheets with lateral size control up to the micrometer range. J. Am. Chem. Soc. 138, 7240–7243 (2016).

Chiang, Y.-H. et al. Efficient all-perovskite tandem solar cells by dual-interface optimisation of vacuum-deposited wide-bandgap perovskite. Preprint at https://doi.org/10.48550/arxiv.2208.03556 (2022).

Hoye, R. L. Z. et al. Identifying and reducing interfacial losses to enhance color-pure electroluminescence in blue-emitting perovskite nanoplatelet light-emitting diodes. ACS Energy Lett. 4, 1181–1188 (2019).

Yuan, S. et al. Efficient and spectrally stable blue perovskite light-emitting diodes employing a cationic π-conjugated polymer. Adv. Mater. 33, 2103640 (2021).

Rybin, N. et al. Effects of chlorine mixing on optoelectronics, ion migration, and gamma-ray detection in bromide perovskites. Chem. Mater. 32, 1854–1863 (2020).

deQuilettes, D. W. et al. Photo-induced halide redistribution in organic–inorganic perovskite films. Nat. Commun. 7, 11683 (2016).

Othman, H. & Qian, S.-E. Noise reduction of hyperspectral imagery using hybrid spatial–spectral derivative-domain wavelet shrinkage. IEEE Trans. Geosci. Remote Sens. 44, 397–408 (2006).

Zhang, H., He, W., Zhang, L., Shen, H. & Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 52, 4729–4743 (2014).

Guo, S., Yan, Z., Zhang, K., Zuo, W. & Zhang, L. Toward convolutional blind denoising of real photographs. Preprint at https://doi.org/10.48550/arXiv.1807.04686 (2019).

Lehtinen, J. et al. Noise2Noise: learning image restoration without clean data. Preprint at https://doi.org/10.48550/arXiv.1803.04189 (2018).

Moran, N., Schmidt, D., Zhong, Y. & Coady, P. Noisier2Noise: learning to denoise from unpaired noisy data. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (eds Boult, T. et al.) 12061–12069 (IEEE, 2020).

Jiang, Q. et al. Enhanced electron extraction using SnO2 for high-efficiency planar-structure HC(NH2)2PbI3-based perovskite solar cells. Nat. Energy 2, 16177 (2017).

Anaya, M. et al. Best practices for measuring emerging light-emitting diode technologies. Nat. Photon. 13, 818–821 (2019).

Sun, Y. et al. Bright and stable perovskite light-emitting diodes in the near-infrared range. Nature 615, 830–835 (2023).

Ji, K. et al. Research data supporting ‘Self-supervised deep learning for tracking degradation of perovskite LEDs with multispectral imaging’. Apollo https://doi.org/10.17863/CAM.101509 (2023).

Ji, K. KangyuJi/PA-Net: PANet. Zenodo https://doi.org/10.5281/zenodo.8281088 (2023).

Acknowledgements

This project has received funding from the European Research Council (HYPERION, 756962) and Engineering and Physical Sciences Research Council (EPSRC, EP/R023980/1, EP/V027131/1). K.J. thanks the Royal Society for a studentship. W.L. thanks Trinity International Bursaries. W.L. and Y.S. acknowledge funding from China Scholarship Council and Cambridge Trust. L.-S.C. thanks the National Natural Science Foundation of China (52103242) and acknowledges USTC Research Funds of the Double First-Class Initiative. Y.-H.C. thanks the Taiwan Cambridge Scholarship and Rank Prize Funds. E.M.T. thanks Marie Skłodowska-Curie Actions (841265). L.D. thanks the European Research Council (PEROVSCI, 957513). Q.L. thanks the European Research Council Project (949949 gAIa). K.F. acknowledges a George and Lilian Schiff Studentship, a Winton Studentship, an Engineering and Physical Sciences Research Council Studentship, a Cambridge Trust Scholarship and a Robert Gardiner Scholarship. M.A. thanks Marie Skłodowska-Curie Actions (841386). S.D.S. thanks the Royal Society and Tata Group (UF150033). This work used the Research Computing Services of the University of Cambridge HPC platform (https://www.hpc.cam.ac.uk/). For the purpose of open access, the authors have applied a Creative Commons Attribution (CC BY) licence to any Author Accepted Manuscript version arising from this submission.

Author information

Contributions

K.J. conceived the project with support from Q.L. and S.D.S.; K.J. and W.L. developed the algorithms. Y.S., L.-S.C., J.S., Y.-H.C. and L.D. prepared the samples. K.J., K.F. and M.A. collected the hyperspectral microscopy data. K.J. processed the hyperspectral microscopy data. E.M.T. carried out Kelvin probe force microscopy. K.J., W.L., Y.S., J.C. and S.D.S. analysed the experimental data. K.J. and W.L. wrote and all authors edited this manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Trevor Rhone, Laurence Pelletier, Aikaterini Vriza and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Model architecture.

(1) Noise detector, which senses the overall noise level of the input data cube (details in Supplementary Note 4). (2) Detected sigma level forms a padding layer. (3) Deep residual PA-CNN. It takes in a noisy image and produces denoised images.

Extended Data Fig. 2 Influence of the noise-level estimator on the activation of hidden layers.

Activation of the first 32 channels from the model output of the first BN layer using noisy image inputs of various noise levels. a, PA-CNN. b, PA-Net. The activation in b varies across different noise levels, which is desired by our proposed model. The activation features were extracted using methods described in Supplementary Note 4.

Extended Data Fig. 3 Image restoration results of an airborne hyperspectral image - the Washington DC Mall.

Normalized wavelength-specific reflectance images at 400 nm. a, The input image with simulated Gaussian noise of σ = 20. b–h, Denoised images obtained with HSSHR (b), BM3D (c), BM4D (d), LRMR (e), LLRT (f), HSI-DeNet (g) and our method (h). i, High-quality ground truth image.

Extended Data Fig. 4 Local-PL peak map of CsPbBr3 perovskite nanoplatelets.

a, The raw (above) and processed (below) local-PL peak maps of the indicated zone in Supplementary Fig. 4a where the average photon counts per pixel are greater than 1. A Gaussian function was fitted to the local-PL curve of each pixel, and the peak position was taken at the maximum of the fitted spectrum. b, Error in peak prediction of the raw (above) and processed (below) maps against the manually derived ground truth (detailed methods in Supplementary Note 7). c, Statistics of signal-to-noise ratio (SNR), the percentage improvement, and the number of pixels plotted against signal strength (average photon counts). The line represents the median value of the distribution. Scale bars in a and b are 10 μm.
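As an illustration of the per-pixel peak estimation described in this caption, the following is a minimal sketch and not the authors' fitting procedure. Instead of a full least-squares Gaussian fit, it uses a simplified stand-in: a parabola through the logarithm of the three samples around the brightest bin, which recovers the centre exactly for a noise-free single Gaussian. The function name `gaussian_peak_position`, the uniform wavelength grid, and the fallback to the brightest bin are all assumptions made for this example.

```python
import math


def gaussian_peak_position(wavelengths, counts):
    """Estimate the peak wavelength of a single-Gaussian spectrum.

    Assumptions (hypothetical, for illustration only): the wavelength
    grid is uniformly spaced, and a three-point log-parabola stands in
    for a full Gaussian fit of the whole spectrum.
    """
    # Index of the brightest spectral bin.
    i = max(range(len(counts)), key=counts.__getitem__)

    # No neighbours on both sides: fall back to the brightest bin.
    if i == 0 or i == len(counts) - 1:
        return wavelengths[i]

    window = [counts[j] for j in (i - 1, i, i + 1)]
    # The logarithm is undefined for empty bins: fall back as well.
    if min(window) <= 0:
        return wavelengths[i]

    # ln(Gaussian) is a parabola, so fit one through three log-samples.
    a, b, c = (math.log(v) for v in window)
    curvature = a - 2.0 * b + c
    if curvature >= 0:  # not a local maximum in log space
        return wavelengths[i]

    step = wavelengths[i + 1] - wavelengths[i]
    # Vertex of the parabola, in units of the wavelength step.
    return wavelengths[i] + 0.5 * (a - c) / curvature * step


# Synthetic spectrum: Gaussian centred at 512.3 nm, sampled every 2 nm.
grid = [500.0 + 2.0 * k for k in range(16)]
spectrum = [100.0 * math.exp(-((w - 512.3) ** 2) / (2 * 8.0 ** 2)) for w in grid]
peak = gaussian_peak_position(grid, spectrum)
```

On noisy, low-count spectra such as the raw maps, a three-point estimate is sensitive to shot noise, which is one reason a fit over the whole local-PL curve is preferable in practice.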

Extended Data Fig. 5 Local-PL peak map of Cs0.05FA0.78MA0.17Pb(I0.83Br0.17)3 perovskite film on SnO2/ITO/glass substrates.

a, The processed local-PL peak maps of the indicated zone in Fig. 2f. A Gaussian function was fitted to the local-PL curve of each pixel, and the peak position was taken at the maximum of the fitted spectrum. Scale bar is 1 μm. b, Local-PL spectra of the center pixel from three representative regions labeled ‘I’, ‘II’, and ‘III’ in a. ‘I’ is located within the grain, ‘II’ is on the grain boundary between two adjacent grains, and ‘III’ is on the grain boundary between multiple grains. The arrow shows the emission shoulder. c, A zoomed-in plot of b focusing on the PL emission tail towards the near-infrared. The arrow indicates the trace of the most redshifted PL. d, Statistics of SNR (above) and the percentage improvement (below) after image restoration with respect to wavelength. Inset: normalized PL intensity map at 850 nm. Scale bars in a and b are 1 μm, and in d are 2 μm. The statistics were calculated from evenly cropped subzones (sample size n = 100) of the full-size hyperspectral image. The percentage improvements are presented as median values, with error bars showing the lower and upper quartiles. The signal-to-noise ratio was calculated as the mean of the signal intensity divided by the standard deviation of the background region where no perovskite signal was captured.
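The SNR definition given in this caption (mean signal intensity divided by the standard deviation of a signal-free background region) can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, the population standard deviation is an assumed choice, and the percentage improvement is assumed to be the relative change in SNR between the raw and processed images, which the caption does not spell out.

```python
from statistics import fmean, pstdev


def snr(signal_pixels, background_pixels):
    """Mean signal intensity divided by the background standard deviation.

    Assumption: the population standard deviation (pstdev) is used; the
    caption does not say whether a sample or population estimate applies.
    """
    noise = pstdev(background_pixels)
    if noise == 0:
        raise ValueError("background region has zero variance")
    return fmean(signal_pixels) / noise


def percentage_improvement(snr_raw, snr_processed):
    """Relative SNR gain in percent (assumed definition)."""
    return 100.0 * (snr_processed - snr_raw) / snr_raw


# Toy intensities for one cropped subzone (illustrative values only).
raw_snr = snr([10.0, 12.0, 14.0], [1.0, 3.0])
gain = percentage_improvement(4.0, 10.0)
```

For statistics like those in panel d, one such pair of values would be computed per cropped subzone and wavelength, then summarized by the median and quartiles.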

Extended Data Fig. 6 EL mapping for mixed Br/Cl blue perovskite LEDs operated under 6 V bias voltage.

a, Normalized EL intensity maps after denoising at 490 nm. b, EL peak estimation across the red line in a. c, EL peak maps comparing estimation from raw and processed data. The top region outside the LED pixel has been excluded from the mapping results. Pixels for which spectral fitting failed are colored white. Scale bar is 20 μm.

Extended Data Fig. 7 Kelvin probe force microscopy analysis on the mixed Br/Cl blue perovskite film on PVK/ITO substrates.

a, Topography. b, Simultaneous KPFM mapping of the perovskite films. CPD, contact potential difference between the sample and the probe tip.

Supplementary information

Supplementary Information

Supplementary Notes 1–7, Figs. 1–5 and Tables 1–4.

Supplementary Data 1

Statistical source data for Supplementary Fig. 4.

Supplementary Data 2

Statistical source data for Supplementary Fig. 5.

Source data

Source Data Fig. 1

Statistical source data for Fig. 1.

Source Data Fig. 2

Statistical source data for Fig. 2.

Source Data Fig. 3

Statistical source data for Fig. 3.

Source Data Fig. 4

Statistical source data for Fig. 4.

Source Data Extended Data Fig. 2

Statistical source data for Extended Data Fig. 2.

Source Data Extended Data Fig. 4

Statistical source data for Extended Data Fig. 4.

Source Data Extended Data Fig. 5

Statistical source data for Extended Data Fig. 5.

Source Data Extended Data Fig. 6

Statistical source data for Extended Data Fig. 6.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ji, K., Lin, W., Sun, Y. et al. Self-supervised deep learning for tracking degradation of perovskite light-emitting diodes with multispectral imaging. Nat Mach Intell 5, 1225–1235 (2023). https://doi.org/10.1038/s42256-023-00736-z

DOI: https://doi.org/10.1038/s42256-023-00736-z