Abstract

A trust-based relationship between patients and medical professionals has been recognized as one of the most important predictors of treatment success and patients’ satisfaction. We have developed a novel legal, social and regulatory envelopment of medical AI that is explicitly based on the preservation of trust between patients and medical professionals. We require that the envelopment foster reliance on the medical AI by both patients and medical professionals. Focusing on this triangle of desirable attitudes allows us to develop eight envelopment components that will support, strengthen and preserve these attitudes. We then show how each envelopment component can be enacted during different stages of the systems development life cycle and demonstrate that this requires the involvement of medical professionals and patients from the earliest stages of the life cycle. Therefore, this framework requires medical AI start-ups to cooperate with medical professionals and patients throughout.


Introduction

The use of medical AI has increased rapidly throughout the world’s largest healthcare systems. In many cases, medical AI can match, or exceed, the diagnostic performance of human medical professionals, and it offers additional advantages in lowering the costs of medical treatment and supporting patient-led disease management. Therefore, we begin the project described in this paper with the assumption that a further increase in the use of medical AI is both desirable and inevitable. The question we are trying to answer is therefore not whether medical AI should become an integral part of future healthcare but how this can be done in a way that preserves the crucial interests of both patients and medical professionals. To address this question, we propose a framework for the legal, social and regulatory envelopment of medical AI that is based on the need (i) to preserve the trust relationship between medical professionals and patients and (ii) to allow both medical professionals and patients to rely on medical AI.

As Siala & Wang have pointed out, there is currently no comprehensive framework to guide the development and implementation of medical AI1. The framework presented here aims to fill this lacuna. We take the view that the core concern of such a framework should be fostering desirable attitudes between patients, medical professionals and medical AI, rather than fostering general values in healthcare, e.g., Siala & Wang’s SHIFT (Sustainability, Human-Centredness, Inclusivity, Fairness and Transparency)1. In particular, the framework needs to ensure that patients can trust medical professionals and that both medical professionals and patients can rely on medical AI. Our focus on this triangle of attitudes does not mean that other values, such as those captured by SHIFT, should not be given due regard. However, we consider those values to be largely encapsulated in the attitudes of trust and reliance we hope to foster. For example, as we will discuss in Envelopment for Medical AI and Framework for Enacting Medical AI Envelopment, fostering both reliance and trust in this context requires transparency in the development and documentation of the AI as well as fairness in ensuring that the concerns of all patient groups are given equal consideration. Trust between medical professionals and patients has been well established as one of the prime predictors of successful treatment (we make this argument in greater detail in Trust in and Reliance on Medical AI), and we therefore consider centring trust in our framework a correct reflection of its importance. Furthermore, focusing on attitudes rather than abstract values allows us to take an immediately practice-focused approach in the framework and to draw on the considerable body of research on how those attitudes can be fostered.

The methodological approach taken in this project proceeds from the general to the specific. We begin with a consideration of the difference between trust and reliance (Trust and Reliance) and of their importance in the context of healthcare and medical AI in particular (Trust and Reliance in Healthcare and Medical AI). From this we develop a triangle of desirable attitudes between patients, medical professionals and AI: namely, trust between patients and medical professionals; reliance on medical AI by medical professionals; and reliance on medical AI by patients. We use this triangle as the normative basis for the development of an enveloping of medical AI (Envelopment for Medical AI). Enveloping here refers to a process during which any AI is placed in an ‘envelope’ of regulations, laws, practices, norms and guidelines that make it function safely and effectively in a given societal context2. We then derive eight specific components of an enveloping for medical professionals’ reliance on medical AI (Envelopment for medical professionals’ reliance on medical AI); for patients’ trust in medical professionals (Enveloping for Patients’ Trust in Medical Professionals); and for patients’ reliance on medical AI (Enveloping for Patients’ Reliance on Medical AI). In Framework for Enacting Medical AI Envelopment, we then develop a framework based on the product life cycle of a typical medical AI that gives specific guidance on how those enveloping components should be implemented during the development (Development), launch and implementation (Implementation), and widespread application (Application) of a medical AI. Our framework therefore provides specific guidance for the involvement of healthcare providers, medical professionals, developers and manufacturers, and patients’ advocacy groups at each stage of the life cycle of a medical AI. To our knowledge, no framework with the same degree of specificity is currently available.

Before deriving the framework, we briefly introduce the key terms used in this paper and clarify the specific meaning we assign to them. Such a glossary is necessary because many of the terms used here have highly heterogeneous extensions and, consequently, have occasionally been used to refer to widely different things, people and concepts. We use the term healthcare system to describe any set of medical institutions governed by the same regulatory framework, as well as the specific institutions in which such regulatory frameworks are derived. We use the term medical institution to describe any smaller entity operating in a given healthcare system, e.g., a particular medical trust, a hospital or a GP practice. We use the term medical professional to describe any person working in a medical profession, e.g., clinicians, GPs, nurses or therapists. Importantly, we assume that a medical profession is one that, beyond professional standards, requires adherence to a medical creed, e.g., in many cases, First Do No Harm. We use the term medical AI to describe any application that (i) uses advanced learning algorithms and (ii) is employed in a medical context. We further distinguish two important subclasses: clinical medical AI, which is used to aid the diagnostic process, e.g., through the analysis of MRI images or the maintenance of a dynamic databank; and patient-accessible AI, which is directly accessible to the patient and can, in principle, be used largely independently, e.g., self-diagnosis or monitoring applications (we discuss the need for such AI not to replace interactions with medical professionals entirely in Envelopment for Medical AI and Framework for Enacting Medical AI Envelopment). These two classes of medical AI do not cover every single application but allow us to draw a meaningful distinction between the two primary functions of medical AI.

Trust in and reliance on medical AI

We encounter the notion of trust in various everyday situations: paradigmatically, we trust our parents and are likewise trusted by our children; friendship is often viewed as mutual trust. However, unpacking what it means to trust and to be trustworthy has proven more difficult than the ubiquity of the term would suggest3. In particular, there is an ongoing debate on what distinguishes trust from related concepts like reliance and dependence. For the purposes of this paper, we focus on one particular construal of the relationship between trust and reliance (Trust and Reliance), drawing on the accounts of trust and reliance put forward by Baier (1986) and McGeer & Pettit (2017), respectively4,5. We also find these definitions of trust and reliance to be particularly good descriptions of how those concepts manifest in healthcare settings (Trust and Reliance in Healthcare and Medical AI).

Trust and reliance

The definition of reliance we use here requires two components: (i) the ascription of a disposition to the entity one relies on and (ii) the willingness to express belief in this disposition through one’s actions5. Explicitly:

Reliance

Reliance is the belief in a disposition and the willingness to act on this belief.

For example: reliance on the correctness of a clock is the belief that this clock has the disposition to tell the time correctly and the willingness to use the clock to schedule one’s activities; reliance on the postal service is the belief that the service has the disposition to deliver parcels and letters safely and the willingness to use it for one’s own mail; and reliance on the help of a volunteer is the belief that the volunteer has the disposition to carry out the assigned tasks and the willingness to schedule further work that requires those tasks to be completed. It is easy to see that reliance is an attitude that can be extended to a wide range of entities, including inanimate objects and organisations. Furthermore, the fact that it is common in colloquial language to use ‘trust’ and ‘reliance’ interchangeably (e.g., to say that one ‘trusts the postal service’ or ‘trusts a volunteer’) indicates that there is conceptual overlap between the two concepts.

For the purposes of considering trust in the context of healthcare and medical AI, we have found it sufficient to use one particular definition of the relationship between trust and reliance4: trust is reliance on the good will of the trustor towards the trustee. (Throughout this paper, ‘trustee’ denotes the party who trusts and ‘trustor’ the party who is trusted.) Baier then further unpacks ‘good will’ as often involving ‘caring’ and, in particular, caring for (an aspect of) the trustee’s wellbeing. Therefore, we can define trust as:

Trust

Trust is the reliance on the trustor’s care for [an aspect of] the trustee’s wellbeing.

In an attempt to avoid falling into a death spiral of definitions, we will take ‘care’ to have the intuitive meaning of an ascription of particularly high importance to the cared for entity, so that its preservation or improvement acts as a significant motivation for the carer’s actions. In Trust and Reliance in Healthcare and Medical AI, we will see that this definition provides a very good description of trusting relationships in healthcare settings. However, it is also a good fit for other paradigmatic cases of trust: e.g., trusting a parent can be adequately described as a reliance on their particular care for the child’s wellbeing; friendship can be described as mutual care for each other’s wellbeing.

This definition of trust has a number of important implications for its specific properties as an attitude3. Here, we will only discuss the two properties of trust that are particularly important for the discussion of attitudes towards medical AI: (i) the fact that trust puts the trustee in a vulnerable position; and (ii) the fact that trust can only be extended to entities capable of caring for the trustee’s wellbeing.

Aspect (i), the vulnerability introduced by trusting, has been discussed by various authors (most prominently, Baier, 1986)4. It is also immediately obvious if we further expand our definition of trust: trust is the belief in, and willingness to act on, the trustor’s disposition to care for [an aspect of] the trustee’s wellbeing. Therefore, if trust is disappointed, i.e., the trustor does not actually have a disposition to care for the trustee’s wellbeing, then the trustee’s wellbeing has been put at risk or even diminished. Furthermore, by being willing to act on the belief in this disposition, the trustee has potentially forgone other opportunities to ensure or increase their wellbeing. Accordingly, trusting introduces a power imbalance between the trustee and the trustor, in that the latter can harm the former by disappointing their trust.

Aspect (ii) has also been the subject of some debate among philosophers, especially in the context of wellbeing-focused technology or sophisticated, anthropomorphic AI systems. However, there is a general consensus that the definition of trust used here excludes inanimate objects from being trustors. While this seems obvious in the case of ‘low-tech’ objects like a clock, the consensus among ethicists extends to AI technology6,7. The argument underpinning this assumption is broadly the following: being trusted (in the definition used here or in suitably similar definitions) requires the ability to care for the trustee’s wellbeing, which in turn requires a true understanding of the moral and emotional worth of preserving another being’s wellbeing. As it is generally accepted that AI is incapable of gaining true moral and emotional understanding7, it cannot care for another’s wellbeing and, therefore, cannot be trusted. Notably, this does not imply that AI cannot be relied on: we can easily imagine a situation in which a very sophisticated care robot could be relied on to meet an infant’s every need, yet could not be trusted (in the above definition) to look after the infant in the same way that a parent could. This distinction is a qualitative one between the different kinds of attitude one can extend towards a care robot and a human carer and is, as such, independent of the outcome of the attitude extended, i.e., independent of the quality of care the infant receives.

Trust and reliance in healthcare and medical AI

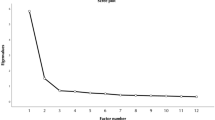

Using the definitions introduced in Trust and Reliance, we can identify the following trust- and reliance-attitudes in typical scenarios of the use of medical AI: (i) the patients’ trust in the medical professionals involved in their treatment; (ii) the medical professionals’ reliance on the medical AI they are using; and (iii) the patients’ reliance on the medical AI they are using, or which is employed in their treatment. In contrast to most existing discussions of trust in medical AI, we therefore do not focus solely on the attitude of patients towards medical AI but view this attitude as part of a triangle of attitudes governing the relationships between patients, medical professionals and medical AI. The triangle of attitudes is illustrated in Fig. 1.

The triangle of attitudes identified here presents the ideal case, i.e., these are the attitudes we think should exist between medical professionals, patients and medical AI. We justify this normative ascription for each of the three attitudes below. Identifying the attitudes that we should foster in a healthcare setting with medical AI will allow us to use them as cornerstones for the epistemic, social and organisational ‘envelope’ for medical AI (Envelopment for Medical AI).

Attitude (i), i.e., the patients’ trust in medical professionals, has been recognised almost unanimously as crucial for patients’ satisfaction with their treatment and for many other health outcome parameters8. A patient’s trust in a medical professional implies (see Trust and Reliance) the patient’s reliance on the medical professional’s care for the patient’s wellbeing. As discussed by Wolfensberger & Wrigley, patients usually find themselves in a situation that confronts them with a high level of epistemic and moral uncertainty9. Trusting the medical professionals who guide them through a shared decision-making process, and/or carry out risky and often epistemically opaque procedures, reduces uncertainty and complexity to a manageable level. In contrast, constantly questioning whether the healthcare professional’s decisions and recommendations are motivated by a particular concern for the patient’s welfare, i.e., an absence of trust, can lead to emotional turmoil, decision fatigue and an inability to commit to the treatment10. Therefore, we take as given that patients must be able to trust medical professionals. Any successful enveloping for medical AI must therefore aim to preserve and foster such trust (Enveloping for Patients’ Trust in Medical Professionals).

With this aim in mind, it is worth discussing which factors can destroy or inhibit trust in medical professionals. From the definition of trust (see Trust and Reliance), it is obvious that a betrayal of trust would involve the display of a lack of care for the patient’s wellbeing, i.e., behaviour indicating that the medical professionals do not view the patient’s wellbeing as an important motivation for their decisions and actions. This is borne out in the substantial literature on factors leading to a lack of trust and to increased patient vulnerability: paradigm examples include prioritising financial or organisational goals over the patient’s wellbeing; a loss of belief in the physician’s expertise and his or her ability to answer emerging questions expertly; or a failure to facilitate the patient’s agency in making healthcare decisions, which is in itself seen as crucial to the patient’s wellbeing (e.g., in the self-management of chronic conditions)11,12. Accordingly, any successful use of medical AI, i.e., one that preserves the trust of patients in medical professionals, must avoid introducing or amplifying these, or similar, detracting factors.

The existing literature on medical AI overwhelmingly focuses on the attitudes of patients towards the AI, often with a focus on patient-accessible medical AI13,14,15,16. However, in most healthcare scenarios, it will predominantly be healthcare professionals who use, prescribe or integrate medical AI into existing medical processes. Therefore, we propose to pay more attention to attitude (ii), the attitude of medical professionals towards the medical AI they use, prescribe or supervise patients in using. As with any medical device, the attitude of medical professionals towards this form of technology should be one of reliance, i.e., according to the definition introduced in Trust and Reliance, the belief in the disposition of the device to carry out its expected tasks and a willingness to act upon this belief, e.g., by using or prescribing the device. The willingness of medical professionals to rely on new technologies is influenced by several factors: studies show fairly consistently that persistent success in carrying out the allotted task, demonstrated through subject-appropriate clinical and laboratory trials, is paramount to medical professionals’ willingness to rely on particular devices17; in other words, the tenets of evidence-based medicine remain key guiding principles in the interaction of medical professionals with medical technology18. However, decisions to adopt new technologies are also influenced by the professionals’ ability to understand the device’s workings and by the practicality of integrating it into existing treatment and working structures19. We maintain that there is no reason to treat AI differently from any other medical technology (e.g., glucose monitors, X-ray machines, etc.) and that the same factors will therefore influence medical professionals’ ability to rely on medical AI.
Accordingly, the enveloping of these technologies (Envelopment for medical professionals’ reliance on medical AI) needs to facilitate subject-appropriate testing and success monitoring, make available appropriate levels of technical understanding and allow medical AI to be integrated into the established structures of medical practice.

As discussed in Trust and Reliance, inanimate objects cannot be trusted in the sense defined there. It follows that attitude (iii), that of patients towards medical AI, cannot be one of trust, but only one of reliance. This is independent of the function of the AI, i.e., even for patient-accessible AI that might present a highly anthropomorphised user interface, true trust is not possible. Extrapolating from studies into the willingness of patients to rely on non-AI technology, their willingness to rely on medical AI will depend significantly on the medical professionals’ willingness to rely on the medical AI, on the patients’ own understanding of the technology and on the degree to which their concerns about the technology and the practicality of using it have been taken into account20. The enveloping of medical AI (Enveloping for Patients’ Reliance on Medical AI) therefore needs to foster these reliance-relevant factors.

Having normatively established the triangle of attitudes surrounding medical AI, we will now develop eight primary components of a successful enveloping of medical AI (Envelopment for Medical AI). In Framework for Enacting Medical AI Envelopment, we will then present a framework for the implementation of those enveloping components that can be adopted by healthcare organisations, patients’ advocacy groups, developers and providers of medical AI.

Envelopment for medical AI

The notion of ‘enveloping’ describes the process of ‘surrounding’ the development and use of AI with an ‘envelope’ of regulations, laws, practices, norms and guidelines that allow the AI to be used successfully in a social, rather than merely technical, sense2,21. The precise nature of this envelope will be determined by the specific social scenario and the social goals the relevant stakeholders wish to achieve. In the case of medical AI, we have identified those goals (Trust in and Reliance on Medical AI) as preserving and fostering the desired attitudes summarised in Fig. 1. In the following subsections, we outline the basic components of an enveloping of medical AI for each of the relevant attitudes.

It has generally been recognised that the construction of an envelopment for AI requires a higher level of technical transparency than is currently available for many AI applications22. Robbins describes this transparency problem as follows2:

The central idea of envelopment is that machines are successful when they are inside an ‘envelope’. This envelop constrains the system in a manner of speaking, allowing it to achieve a desired output given limited capacities. However, to create an envelope for any given AI-powered machine we must have some basic knowledge of that machine—knowledge that we often lack.

This is particularly true for deep-learning applications, which use highly variable algorithms that process enormous amounts of data. Tracking the intermediate computational steps of such an algorithm is therefore practically impossible; in other words, the computation takes place in a metaphorical ‘black box’. However, there are now several computational methods that make the intermediate steps of data-intensive, adaptive algorithms more accessible to developers and users23, i.e., that result in transparent AIs. A successful envelopment of any AI will therefore usually begin with the requirement that such methods are used in the development of the AI. In particular, Robbins requires that sufficient information be made available about the composition and potential biases of the algorithm’s training data; the boundaries of reliably processed input data; the reliability of the algorithm’s success on any given task; and the space of possible outputs2. With respect to medical AI, the amount of knowledge about the algorithm that is necessary to foster attitudes of reliance on medical AI in medical professionals and patients (Trust and Reliance in Healthcare and Medical AI) will differ for each of those groups and for each form of medical AI. In particular, greater knowledge is generally required for AIs involved in high-risk, life-affecting treatments. However, it should be noted that reliance on the AI by medical professionals seems to exclude fully opaque ‘black box’ applications, as professionals need to be able to make professional judgements in the knowledge that they can check the algorithm for errors and biases.
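As an illustration only, the four kinds of information Robbins asks for can be pictured as a structured documentation record that travels with a medical AI. The Python sketch below is a hypothetical example, not a format proposed by Robbins or by any regulator: the class name `EnvelopeDocumentation`, its field names and the `accepts` check are our own assumptions. The check shows one way the documented boundaries of reliably processed input could be used to flag cases that fall outside them.

```python
from dataclasses import dataclass


@dataclass
class EnvelopeDocumentation:
    """Hypothetical documentation record for a medical AI (illustrative only)."""

    # Composition of the training data: share of samples per patient group.
    training_data_composition: dict[str, float]
    # Known or suspected biases in the training data, described in free text.
    known_biases: list[str]
    # Boundaries of reliably processed input: permitted values per input attribute.
    input_boundaries: dict[str, set[str]]
    # Measured success rate per task, as a fraction between 0 and 1.
    task_reliability: dict[str, float]
    # The space of possible outputs the system can return.
    output_space: set[str]

    def accepts(self, case: dict[str, str]) -> bool:
        """Return True only if every documented input attribute of the case
        lies within the documented boundaries; missing attributes fail."""
        return all(
            case.get(attribute) in permitted
            for attribute, permitted in self.input_boundaries.items()
        )


# Placeholder values for illustration; they are not real data.
doc = EnvelopeDocumentation(
    training_data_composition={"group A": 0.9, "group B": 0.1},
    known_biases=["group B under-represented in training images"],
    input_boundaries={"image_type": {"dermoscopic"}},
    task_reliability={"lesion classification": 0.9},
    output_space={"benign", "malignant"},
)
print(doc.accepts({"image_type": "dermoscopic"}))  # True
print(doc.accepts({"image_type": "smartphone"}))   # False
```

In such a scheme, a case rejected by `accepts` is not necessarily untreatable; the rejection simply signals to the medical professional that the AI’s output would fall outside the documented envelope.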

Making decision-guiding information about medical AI available to individual medical professionals and patients constitutes only part of the envelopment. In addition, this information should be used to develop general guidelines and regulations for the safe use of a given medical AI. Such guidelines and regulations are usually enacted at the level of healthcare organisations and systems. As we are focusing on the attitudes between healthcare professionals, patients and medical AI, we will primarily discuss the information that should be directly available to those parties. The provision of such information will likely be governed to some degree by organisational and national regulations and policies. However, we assume that the exact formulation of such policies should be guided by what medical professionals and patients need to preserve the desired attitudes (Trust and Reliance in Healthcare and Medical AI) and should therefore supervene on the parts of the envelopment pertaining directly to the core triangle of attitudes (Fig. 1).

The successful envelopment of medical AI clearly faces challenges, in particular with respect to compliance and the degree to which the interest groups in each scenario are willing to engage with the recommended actions. To be fully successful, the enveloping therefore requires the buy-in of at least the majority of stakeholders, appropriate institutional support and, if necessary, regulatory oversight. In the envelopment components presented below, we have flagged up areas where institutional time and resources should be made available to allow the components to be implemented and where regulatory reviews (usually by an oversight organisation like NICE) should be conducted. In order to foster buy-in and compliance, we recommend that all stakeholder groups are involved from the very early stages of the development of the medical AI and that great emphasis is placed on the accessibility of any information about the AI. In involving stakeholders early, it will be particularly important to take into account each stakeholder’s concerns about the practicality of the enveloping; e.g., enveloping components for a particular medical AI need to take into account the limited time physicians have to engage with documentation or the specific organisational capabilities of the targeted healthcare sector. To ensure accessibility, it is important to work with communication specialists and to elicit feedback from patients and medical professionals on whether they have been able to gain a sufficient understanding of the medical AI. Research on efficient communication about complex medical issues, and about medical AI in particular, is currently in its infancy. We envision that the envelopment will track state-of-the-art developments in this area and potentially even generate data on which communication strategies are effective, to feed back into this process.

The envelopment is not a fail-safe way of ensuring that every scenario of medical AI use turns out optimally with respect to preserving the attitudes summarised in Fig. 1. In particular, clinical settings are replete with complex and changing constraints, be they on the medical professionals’ time or on the patients’ attention span in moments of crisis. Dealing with those constraints is a problem that exceeds the remit of the envelopment for medical AI and of this project. We propose that the best way of acknowledging and minimising these limitations is to require, first, that the implementation of the envelopment complies with state-of-the-art empirical data on how to mitigate these factors, e.g., large amounts of ‘fine print’, as initially deployed in internet safety regulations, should be avoided (Implementation); and, second, that insights gained from implementing the envelopment components for different medical AIs are appropriately recorded and used to learn how to mitigate the difficulties of implementing envelopments in real-life clinical settings. With ongoing clinical implementation of medical AI, established tools like clinical quality management systems, clinical incident reporting systems or root cause analysis techniques, like the London protocol, will help to further shape the envelopment on the organisational level.

In the following, we discuss the enveloping necessary to preserve each of the intended attitudes in the medical professionals-patients-AI triangle. In Framework for Enacting Medical AI Envelopment, we then show how each component should be implemented during different stages of the product life cycle of an AI application.

Envelopment for medical professionals’ reliance on medical AI

As discussed in Trust and Reliance in Healthcare and Medical AI, the acceptance of any medical technology by medical professionals is conditional on the technology passing the success requirements of evidence-based medicine, i.e., that the technology has been demonstrated to have acceptable success rates in rigorous clinical and experimental trials and that its workings can be adequately understood by the medical professional. The latter part of the requirement prescribes the implementation of suitably transparent algorithms and precludes the use of opaque black box AI applications. This is particularly important for diagnostic medical AI, which often uses deep-learning algorithms that are particularly difficult to make transparent. However, there now exists a growing range of tools and programming methods that can make intermediate data-processing steps visible23, and those should be routinely employed in the development of medical AI. This condition implies that some potential medical AIs, namely those that cannot be made explainable, are not suitable for development with the prospect of immediate deployment in a medical setting. We consider this restriction an acceptable trade-off for making sure that medical professionals can gain an adequate understanding of the technology and can therefore develop an attitude of reliance on it while maintaining a trust relationship with their patients. The restriction also leaves open the possibility of developing ‘black box’ applications as technical prototypes, which can then be moved into the regulatory envelope once explainability tools become available.

The use of explainable, rather than opaque, medical AI will also aid the fulfilment of the other part of the requirement, namely the demonstration of the success and reliability of medical AI in rigorous clinical experiments and trials. With respect to medical AI, this requirement roughly corresponds to Robbins’s requirement that the reliability of the outcomes of any task-based AI be comprehensively examined and quantified2. However, two specific problems arise in providing empirical evidence for the success of medical AI: (i) it can be difficult to fix appropriate success standards for different medical AIs; and (ii) success rates can differ wildly between different populations of patients.

With respect to aspect (i), success standards will necessarily differ between kinds of AI. In cases where AI replaces a medical professional in carrying out a given task, e.g., diagnostic AI, the success standard usually is that the AI’s error rate needs to be lower, and its success rate higher, than those of a human carrying out the same task. However, such standards can be more difficult to fix in cases where the AI carries out tasks that are not as easily quantified, e.g., health-monitoring AI or AI that provides more holistic healthcare advice to patients. It is evidently harder to quantify how well such AI performs in improving patients’ health outcomes, and the studies needed to provide empirical data on its success require long-term monitoring of patients. The envelopment of medical AI cannot reduce the difficulties in quantifying the success of such applications. However, it needs to guarantee that medical professionals can access data about the performance of medical AI in clinical trials or in long-term monitoring, and are likewise made aware of any lack of such studies.
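The replacement standard for diagnostic AI can be made concrete with a small calculation. The following Python sketch is a hypothetical illustration: the function name `replacement_standard_status` and its inputs (error counts and case counts for the AI and for human professionals on the same task) are our assumptions. It compares point estimates of the two error rates only; an actual trial analysis would also have to account for statistical uncertainty, for example through confidence intervals. The sketch returns an explicit status when no comparative data exist, reflecting the requirement that professionals be made aware of a lack of studies.

```python
def replacement_standard_status(ai_errors: int, ai_cases: int,
                                human_errors: int, human_cases: int) -> str:
    """Compare AI and human error rates on the same task (hypothetical sketch).

    Uses point estimates only; no statistical test is performed.
    """
    # Without cases on both sides, no comparison is possible: report the gap.
    if ai_cases == 0 or human_cases == 0:
        return "no comparative trial data available"
    ai_error_rate = ai_errors / ai_cases
    human_error_rate = human_errors / human_cases
    # For a task where each case is either correct or an error, a lower error
    # rate is equivalent to a higher success rate.
    if ai_error_rate < human_error_rate:
        return "meets replacement standard"
    return "does not meet replacement standard"


print(replacement_standard_status(5, 100, 10, 100))  # meets replacement standard
print(replacement_standard_status(0, 0, 10, 100))    # no comparative trial data available
```

Returning a status string rather than a bare boolean keeps the "no data" case visible instead of silently treating it as a failure or a pass.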

With respect to aspect (ii), the problem of biases in healthcare provision to minorities and, in particular, biases in the data upon which healthcare practices are based, exists in many healthcare settings24. It can be amplified in the use of medical AI systems, which are heavily data-reliant and often show extreme performance differences between mainstream and minority patient groups25. In particular, the performance of deep-learning AIs – a technology often used for diagnostic medical AI – is crucially dependent on the availability of training data, i.e., of pre-diagnosed samples, and will heavily reflect historical biases in data collection. An often-cited example involving existing technology is the use of deep learning to detect skin cancer from photographs of suspicious skin regions26. The algorithm is trained on existing images of potential lesions. However, skin cancer detection has been notoriously biased towards the detection of lesions on Caucasian skin, and the amount and quality of training data that exist for Caucasian patients are much higher than for other skin types. Accordingly, skin cancer detection AI will work less well for non-Caucasian patients. Furthermore, if a deep-learning application, like a skin cancer detection programme, produces markedly less accurate results for a particular group of patients, then the ‘black box’ nature of the algorithm makes it impossible to pinpoint where in the algorithm the mistakes occur. Explainable AI methods can help to visualise how the images are processed through the algorithm and which features are extracted, and could thereby lead to a more targeted search for better input data for particular patient groups23.

Therefore, in order to judge whether medical AI can be relied upon, medical professionals need to be made aware of the composition of the training data as well as of any differences in the performance of the algorithm for different patient groups. To facilitate this, and to facilitate the identification of biases that are amplified by the algorithm, medical AI should generally be explainable AI (see above). Furthermore, biases in the training data, known error amplification and differences in the success-rates for different patient groups should translate into explicit restrictions on the possible inputs into the AI, i.e., into guidance for the medical professional on which patient groups the AI is safe to use for.
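To make the per-group reporting described above more concrete, the following Python sketch computes the sensitivity of a hypothetical diagnostic AI separately for each patient group in a labelled evaluation set and turns the results into coarse safe-use guidance. The record format, the group labels, the 0.9 sensitivity threshold and the 30-case minimum are illustrative assumptions, not values proposed in this paper or taken from any regulatory standard; sensitivity is also only one of several metrics (e.g., specificity) that a real report would need.

```python
"""Hypothetical sketch: per-group sensitivity reporting for a diagnostic AI.

Record format, group labels and thresholds are illustrative assumptions.
"""
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalRecord:
    group: str           # patient group label used for stratified reporting
    has_condition: bool  # reference-standard (e.g., biopsy-confirmed) diagnosis
    ai_positive: bool    # whether the AI flagged the case as positive


def sensitivity_by_group(records):
    """Return {group: (true_positives, confirmed_cases)} per patient group."""
    counts = defaultdict(lambda: [0, 0])
    for r in records:
        if r.has_condition:          # sensitivity only counts confirmed cases
            counts[r.group][1] += 1
            if r.ai_positive:
                counts[r.group][0] += 1
    return {g: (tp, n) for g, (tp, n) in counts.items()}


def safe_use_guidance(records, min_sensitivity=0.9, min_cases=30):
    """Label each group 'documented', 'below threshold' or 'insufficient data'."""
    guidance = {}
    for group, (tp, n) in sensitivity_by_group(records).items():
        if n < min_cases:
            guidance[group] = "insufficient data"
        elif tp / n < min_sensitivity:
            guidance[group] = "below threshold"
        else:
            guidance[group] = "documented"
    return guidance


if __name__ == "__main__":
    # Synthetic counts for three hypothetical groups (not real study data).
    records = (
        [EvalRecord("group_a", True, True)] * 95
        + [EvalRecord("group_a", True, False)] * 5
        + [EvalRecord("group_b", True, True)] * 28
        + [EvalRecord("group_b", True, False)] * 12
        + [EvalRecord("group_c", True, True)] * 10
        + [EvalRecord("group_a", False, False)] * 50  # ignored by sensitivity
    )
    print(safe_use_guidance(records))
    # {'group_a': 'documented', 'group_b': 'below threshold', 'group_c': 'insufficient data'}
```

In this sketch a group with too few confirmed cases is reported as "insufficient data" rather than silently treated as safe, in line with the requirement that professionals be made aware of missing evidence. Groups with no confirmed cases at all do not appear in the output, which a real report would also need to flag.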

The accessibility of extensive and patient-group-specific data on the performance of the medical AI provides the first component of the envelopment aimed at fostering an attitude of reliance on medical AI by medical professionals. The second is the preservation of a central maxim of modern medicine: First Do No Harm27. This often translates into a precautionary attitude to any medical treatment: what needs to be considered is not just the success-rate of the proposed treatment but also the risks it poses to the patient, and the avoidance of such risks generally takes precedence over the pursuit of success. In order to rely on medical AI, medical professionals need to be able to enact this precautionary principle in their use of the AI. This requires not only having data on performance and biases available but also the freedom to decide whether the use of a given medical AI is appropriate for an individual patient. Therefore, the envelopment needs to guarantee that the medical professional can appropriately oversee the employment of the AI and has the autonomy to override and/or eschew the use of the medical AI.

How can these abstract requirements of the envelopment be implemented in the practical development and use of medical AI? We propose three practical requirements that the development and use of each medical AI should include: (MR1) medical professionals should be involved in the development of the AI, and be internally and externally responsible for the implementation of the envelopment framework; (MR2) each medical AI should come with a standardised factsheet addressing the information requirements about safe uses, biases and performance in clinical trials laid out above; and (MR3) each medical AI should be subject to an ethical assessment at the institutional level, taking into account the information provided on the factsheet and the reports of the medical officers. We provide justifications for each of these recommendations below.

With respect to enveloping component (MR1), the involvement of medical professionals during the development of the AI appears to be the most efficient way to ensure an in-depth understanding of the algorithm by the medical community: beyond the direct experience of the individual ‘liaison’ professionals, they can transmit this understanding to their professional community through targeted reports and advice on accessible documentation of the medical AI. It also means that they can advise during the development phase on which features are needed to allow professionals to use the AI within the ‘First Do No Harm’ maxim outlined above, e.g., the ability to override the medical AI as they see fit and a targeted use of the AI for particular patient groups only. The mandatory involvement of a medical professional in the early stages of the development process also establishes a position (e.g., medical officer, medical supervisor) whose main responsibility is to ensure the implementation and application of the envelopment framework, which means that the position must be equipped with far-reaching rights and veto power over the development of the medical AI.

Enveloping component (MR2), a standardised, comprehensive factsheet for each medical AI, targeted at the medical professionals who will be using the AI in a clinical setting, is the easiest way to transmit the information needed for the safe use of medical AI, as outlined generally by Robbins (2020) and specified for medical AI above. It should contain comprehensive information on the composition of the training data, including biases; on safe input ranges and formats, taking into account training-data biases; on the general workings of the algorithm; on the expected outputs and their interpretation; and on the performance of the AI in field-standard clinical trials. While the kind of information included in the factsheet should be standardised, the presentation of technical details can be advised upon by the medical professionals involved in the medical AI’s development (MR1), thereby ensuring that it is accessible to the community of medical professionals.
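One way to make the standardisation requirement concrete is to treat the factsheet as a fixed schema whose sections must all be either filled in or explicitly marked as unavailable. The Python sketch below is a hypothetical illustration only: the class name, the field names (which paraphrase the categories listed above), the example product and the "NOT AVAILABLE" marker are assumptions, not an existing factsheet standard.

```python
"""Hypothetical sketch of a standardised medical AI factsheet (MR2)."""
from dataclasses import dataclass, field, fields

REQUIRED_SECTIONS = (
    "training_data_composition",
    "safe_input_ranges",
    "algorithm_overview",
    "expected_outputs",
    "clinical_trial_performance",
)


@dataclass
class MedicalAIFactsheet:
    product_name: str
    training_data_composition: str = ""   # sources, demographics, known biases
    safe_input_ranges: str = ""           # input formats and ranges in scope
    algorithm_overview: str = ""          # general workings, explainability tools
    expected_outputs: str = ""            # output types and their interpretation
    clinical_trial_performance: str = ""  # results of field-standard trials
    excluded_groups: list[str] = field(default_factory=list)  # outside safe use

    def missing_sections(self):
        """Required sections that are empty and must be flagged, not hidden."""
        return [name for name in REQUIRED_SECTIONS if not getattr(self, name).strip()]

    def in_scope(self, group):
        """True unless the factsheet excludes this patient group."""
        return group not in self.excluded_groups

    def render(self):
        """Plain-text rendering; empty required sections are marked explicitly."""
        lines = [f"Factsheet: {self.product_name}"]
        for fld in fields(self):
            if fld.name == "product_name":
                continue
            value = getattr(self, fld.name)
            if isinstance(value, list):
                text = ", ".join(value) or "none listed"
            else:
                text = value.strip() or "NOT AVAILABLE"
            lines.append(f"{fld.name}: {text}")
        return "\n".join(lines)


if __name__ == "__main__":
    sheet = MedicalAIFactsheet(
        product_name="ExampleDerm (hypothetical)",
        training_data_composition="Dermoscopic images; lighter skin types over-represented",
        safe_input_ranges="Dermoscopic photographs of single lesions",
        algorithm_overview="Convolutional classifier with saliency maps",
        expected_outputs="Malignancy probability between 0 and 1",
        excluded_groups=["group_c"],
    )
    print(sheet.missing_sections())  # ['clinical_trial_performance']
    print(sheet.in_scope("group_c"))  # False
    print(sheet.render())
```

Marking an empty section rather than omitting it mirrors the earlier point that medical professionals must be made aware of any lack of performance studies, not just of the studies that exist.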

Enveloping component (MR3), a comprehensive, independent ethical evaluation of the medical AI at the institutional level, will draw upon the other two enveloping components, i.e., the reports of the medical professionals involved in the development of the AI and the factsheet on the capabilities and limitations (broadly construed) of the AI. The ethical review will provide a further safeguard ensuring that the use of the AI complies with fundamental patient rights and respects patient autonomy. It should take into account whether the medical AI is right for the anticipated patient population; whether it can safely be integrated into existing clinical practice; and whether medical professionals feel that they have been provided with sufficient information to rely on the medical AI in the sense outlined in Trust and Reliance.

Enveloping for patients’ trust in medical professionals

As described in Trust and Reliance in Healthcare and Medical AI, preserving patients’ trust in medical professionals entails preserving the patients’ reliance on the medical professional’s specific care for their wellbeing (Trust and Reliance). In the context of the use of medical AI, it has been shown that this reliance is inhibited if patients feel either (i) that the medical professional’s decisions are based on restrictions posed by the AI, e.g., if it assumes the position of a virtual decision-maker (“computer says no”) rather than being used in an assisting capacity, or (ii) that the introduction of the medical AI has reduced the possibilities for shared decision-making and dialogue28. Those concerns are consistent with the spelling out of trust as the ascription of a specific concern for one’s wellbeing: in this context, patients cannot trust a medical professional if they believe either (i) that decisions about their treatment are made by an entity that cannot exhibit this specific concern, i.e., the algorithms of the medical AI, or (ii) that they cannot transmit enough relevant information about their wellbeing to the medical professional.

Given the asymmetrical nature of the relationship between medical professional and patient, fostering justified reliance of the medical professional on the medical AI will also foster patients’ trust in the medical professional’s decision to use the AI: as with other medical technology, their trust-relationship will include an assumption that the medical professional can make good judgements about which technology to rely on (Trust and Reliance in Healthcare and Medical AI). Therefore, enveloping components (MR1-3), as discussed in Envelopment for Medical Professionals’ Reliance on Medical AI, will also be crucial to preserving and fostering trust between the medical professional and the patient. In addition, we propose two new enveloping components to address concerns (i) and (ii): (PT1) the involvement of patients and patient advocacy groups at the development stage to identify and address trust-inhibiting treatment restrictions in the AI; and (PT2) the development of targeted, institutional guidelines for the integration of each medical AI into the medical consultation and decision-making process, which preserve the ability of patients and medical professionals to enter into a joint decision-making process.

Enveloping to address the first inhibitor of a trust-based relationship between the patient and the medical professional should aim to provide the medical professional with the knowledge of, and control over, the medical AI that allows them to use the AI as a tool for (rather than a restriction on) the patient’s treatment. Therefore, the enveloping components aimed at allowing the medical professional to rely on the medical AI (Envelopment for Medical Professionals’ Reliance on Medical AI) are also crucial to preserving patients’ trust in the medical professional. In particular, enveloping component (MR1) – the involvement of the medical professional at the development stage – needs to include considerations of how to avoid ‘computer says no’ situations, i.e., situations in which the limitations of the AI, rather than the medical professionals’ opinions, would determine the course of treatment. Medical professionals should proactively identify situations in which the AI might place undue restrictions on treatment options and should be involved in the development of individualisation options and overrides. If medical professionals are involved in the development of the AI, they will not only be able to advise on the necessary individualisation options for the AI, but will also gain the technical mastery and insight to explain their use of the medical AI to patients, thereby enabling the kind of dialogue that fosters trust between patients and medical professionals.

In addition to making sure that the medical AI allows sufficient individualisation options and that concerns about any restrictions of treatment options are addressed (or, at least, recorded), we propose an additional enveloping component: namely, (PT1) the involvement of patients and patients’ advocacy groups in the development of the AI. Involving patients in the development of the AI means that potentially harmful restrictions in treatment options can be addressed before the AI is deployed and that the AI’s algorithm contains suitable individualisation options. Furthermore, patients’ advocacy groups are often best placed to raise concerns about data biases and historic neglect of specific patient-groups, which should be used to identify deficiencies in the training data and determine the limitations and restrictions of the AI. If those restrictions and limitations are recorded on the factsheet (enveloping component MR2), enveloping component (PT1) will also foster the reliance of medical professionals on the medical AI (Envelopment for Medical Professionals’ Reliance on Medical AI). In the case of patient-accessible AI, the input of patients at the development stage will also lead to better user interface design and to better guidelines on when patients should be encouraged to use the medical AI independently.

We also propose a second enveloping component (PT2) to foster and preserve patients’ trust in medical professionals: namely, the development of a comprehensive set of specific guidelines for each medical AI on how it is to be integrated into the diagnostic and treatment process. The development of those guidelines should involve representatives of all stakeholders: different patient groups, medical professionals and the relevant medical institutions. However, particular emphasis should be placed on keeping the interaction between patients and medical professionals a key part of the treatment process and, therefore, on ensuring that sufficient opportunities for dialogue and shared decision-making are preserved. As with other medical technology, patients need to be given sufficient opportunities to discuss the results or recommendations of the medical AI with medical professionals, and institutional routes need to be developed to preserve patients’ ability to make decisions about their own treatment, including forgoing the use of medical AI altogether.

Enveloping for patients’ reliance on medical AI

It is a specific aspect of patient-accessible medical AI that some of its applications will involve use by patients with minimal intervention by medical professionals, e.g., in ‘smart’ health monitoring, through self-diagnostic tools or in the context of interactional therapies like ‘talking therapy bots’. However, both patients16 and medical professionals13 have expressed reluctance to rely (Trust and Reliance) on patient-facing medical AI. In particular, such concerns include (i) the low quality of the interaction with an AI, including the ascription of ‘spookiness’ or ‘uncanniness’ to such applications; (ii) the potential for unintentional misuse or misinterpretation of the medical AI’s recommendations by patients; (iii) the unclear attribution of legal responsibility in case of errors by the medical AI; and (iv) the storage and security of patient data entered into the medical AI, and the legality of its further use. The envelopment of the medical AI therefore needs to address those concerns in order to foster the patients’ ability to rely on the medical AI.

The envelopment components introduced in the previous sections will already address some of those concerns: in particular, (MR3) and (PT2) will ensure that, within an institutional setting, medical AI is not used as a substitute for the interaction between medical professionals and patients but is embedded in a treatment process that fosters these interactions at the appropriate moments. Similarly, (MR1) and (PT1), which require the involvement of patients and medical professionals in the development of medical AI, will allow these groups to provide input on how misuse and misinterpretation of the medical AI can be prevented, and on which user interface components or additional information will reduce reluctance to interact with the medical AI. Envelopment component (PT1), i.e., the involvement of patients and patient advocacy groups in the development of the medical AI, will also empower advocacy groups for different patient groups to effectively communicate the benefits and drawbacks of the AI to their specific client groups, hence enabling patients to make informed decisions about the use of the medical AI.

However, while those existing envelopment components largely address concerns (i) and (ii), addressing concerns (iii) and (iv) requires the introduction of further, targeted envelopment components. We, therefore, propose three further envelopment components: (PR1) the development of comprehensive, specific documentation for each medical AI that allows patients to understand how data is handled by the AI and which security risks to their data exist; (PR2) a set of comprehensive, specific documentation for each medical AI specifying the legal responsibility of the distributors, developers, medical professionals and institutions, including a clear identification of potential ‘grey’ or unregulated areas; (PR3) the development of appropriate legally or institutionally binding safeguards that offer clearly signposted opportunities for the patients to involve medical professionals at appropriate moments in the use of the AI.

Most data-based applications come with extensive small-print documentation of their data usage, which most users ignore because they find it incomprehensible. Given the sensitivity of medical data, the need to make such documentation more accessible to the user is particularly pressing for medical AIs. Accordingly, envelopment component (PR1) should focus on developing documentation that is easily understandable and allows the user to engage actively with questions of data security and usage. This will require the cooperation of developers, distributing companies, medical professionals, communications specialists and patient advocacy groups. The inclusion of the latter two will ensure that the documentation contains information that is important to different patient groups and that it is presented in formats that are accessible to different users. Furthermore, patients’ advocacy groups and medical professionals can use such documentation to inform specific patient groups about the data usage and security of the medical AI in a targeted way and through different media. The aim of envelopment component (PR1) should therefore be that no patient uses the medical AI without having previously considered its data usage and security, thereby empowering patients to make truly informed decisions about the use of the medical AI.

The aims of (PR2) are similar to those of (PR1), but with a focus on the legal framework and on who is legally responsible for the different aspects of the AI, rather than on questions of data security. This documentation should also be developed through comprehensive cooperation between different stakeholders: distributing companies, legal experts, medical professionals, patients’ advocacy groups and communication experts. As with envelopment component (PR1), a strong emphasis should be placed on the accessibility of the conveyed information to different patient groups.

Beyond the difficulty of communicating legal information about the medical AI in an accessible and engaging format, the documentation needs to recognise that the legal framework for AI use in the European Union and the UK is currently still underdeveloped29. The enactment of such a framework is often a question of both national and international law and depends on a large number of institutions beyond the medical profession. As it is unlikely that the large-scale deployment of medical AI will be delayed until the legal framework has caught up, we view existing AI legislation as an external factor which the envelopment must contend with (rather than, e.g., making changes to this legislation part of the envelopment). This means that grey areas and under-regulation need to be accepted as fact. However, they should be clearly identified and flagged in the legal documentation that constitutes (PR2). Making patients and medical professionals aware of such legal lacunae will empower them to make informed decisions about each specific medical AI, including the choice to forgo use or advocacy of the AI if they consider the legal framework to be insufficient.

Lastly, enveloping component (PR3) – legally or institutionally binding safeguards enforcing the involvement of medical professionals at appropriate moments in medical AI use – will bolster efforts to address concerns (i) and (ii). The precise nature of such mandated safeguards will depend on the specific nature of the medical AI and could range from prompts to contact a medical professional to automatic alerts if the AI registers certain results. However, we envision that a guiding factor in developing such safeguards will be the need to ensure that internationally recognized clinical pathways are still followed. Accordingly, it needs to be ensured that patient-accessible medical AI leads patients back onto those pathways. For example, laws and guidelines need to make clear, and suitably enact, what the results of a medical AI enable patients to do in seeking further treatment, e.g., whether the result of a diagnostic AI would enable patients to order prescription medication directly from a pharmacy. Generally, this should only be the case if the recognized clinical pathway allows similarly self-directed action.
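The range of safeguards just described, from prompts to automatic alerts, can be illustrated as a simple escalation rule. The Python sketch below is hypothetical: the risk score, both thresholds, the action labels and the `pathway_allows_self_ordering` flag are assumptions made for illustration, and in practice such values would be set by clinical guidelines and pathways rather than by developers.

```python
"""Hypothetical sketch of escalation safeguards (PR3) for patient-accessible AI."""
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SHOW_RESULT = "show result with routine follow-up advice"
    PROMPT_CONTACT = "prompt patient to contact a medical professional"
    ALERT_CLINICIAN = "send automatic alert to the supervising clinician"


@dataclass(frozen=True)
class SafeguardPolicy:
    prompt_threshold: float = 0.3   # hypothetical score that triggers a prompt
    alert_threshold: float = 0.7    # hypothetical score that triggers an alert
    # Mirrors the recognised clinical pathway; defaults to no self-directed ordering.
    pathway_allows_self_ordering: bool = False

    def __post_init__(self):
        if not 0.0 <= self.prompt_threshold <= self.alert_threshold <= 1.0:
            raise ValueError("thresholds must satisfy 0 <= prompt <= alert <= 1")


def escalate(risk_score: float, policy: SafeguardPolicy) -> Action:
    """Map an AI risk score in [0, 1] to the safeguard action it triggers."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must lie in [0, 1]")
    if risk_score >= policy.alert_threshold:
        return Action.ALERT_CLINICIAN
    if risk_score >= policy.prompt_threshold:
        return Action.PROMPT_CONTACT
    return Action.SHOW_RESULT


def may_order_prescription(policy: SafeguardPolicy) -> bool:
    """Self-directed ordering only where the clinical pathway already allows it."""
    return policy.pathway_allows_self_ordering


if __name__ == "__main__":
    policy = SafeguardPolicy()
    for score in (0.1, 0.5, 0.9):
        print(score, escalate(score, policy).name)
    print(may_order_prescription(policy))
```

Defaulting `pathway_allows_self_ordering` to `False` reflects the principle stated above that self-directed action, such as ordering prescription medication, should only be possible where the recognized clinical pathway permits it.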

Framework for enacting medical AI envelopment

The eight envelopment components derived in Envelopment for Medical AI are summarised in Table 1. In this section, we present a framework for enacting the envelopment components within the product life cycle of a medical AI application. Following an adapted version of the systems development life cycle (SDLC), we distinguish between the development (Development), implementation (Implementation) and widespread application (Application) of medical AIs. For each of those three stages, we identify the envelopment components that should be enacted and briefly outline how this could be done. The stages and the envelopment components they encompass are summarised in Fig. 2.

It is undeniable that enacting the envelopment derived here comes at considerable cost in time, money and effort for all stakeholders. As acknowledged in the introduction, the project is based on the assumption that the use of medical AI is ultimately desirable on social, medical and financial grounds, and that reasonable costs to make its employment socially successful through the envelopment developed here are generally justified. Given the amount of involvement required from all stakeholder groups, these are shared costs between medical institutions, advocacy groups, professional bodies and the developers of medical AI. The exact distribution of those costs will, as in all processes in medical care, be subject to considerable negotiation between all parties. However, as the deployment of medical AI is forecast to lead to considerable efficiency savings in medical settings, we assume that healthcare systems will be willing to invest in enacting an envelopment that will make this deployment socially as well as technically successful. Similarly, given the profitability of AI applications, we are confident that the costs borne by developers will not be prohibitive for the majority of projects, and that successful envelopment will ultimately be routinely included as part of the evaluations in health technology assessment (HTA).

The UK National Institute for Health and Care Research (NIHR) defines an HTA as addressing the following questions: (i) whether the technology works; (ii) for whom; (iii) at what cost; and (iv) how it compares with the alternatives. If AI developers, medical institutions and patient advocacy groups choose to engage with the protocol presented here, then the additional costs of enacting this protocol will have to be included in answering question (iii) and, indirectly, in the comparison with alternatives (iv) or the current gold standard. It is undeniable that the protocol will raise the costs of a medical AI product, and we discuss particular costs in detail below (in Development and Implementation). However, those costs will be shared among all stakeholders (developers, medical institutions, regulatory bodies and patients). Furthermore, in the context of HTAs, the additional costs identified in the answer to (iii) will be offset by gains in the answers to questions (ii) and (iv). Medical professionals and users frequently fear a disruption of their trust-relationship or feel insufficiently informed about the technology, which can hold back adoption. Our protocol is designed to address such objections. Ultimately, this will also improve the cost-benefit balance of the product, as a higher rate of adoption usually raises profitability or lowers break-even points.

Development

It is clear from the description of the different envelopment components (Table 1) that the development phase is the most important phase for enacting the medical AI envelopment. This contrasts with existing practices, where a finished product is often presented to the medical community by developers and manufacturers. It requires opening up the development process to medical professionals and patients and, in turn, these groups taking on responsibilities for the application’s development. As discussed in Envelopment for Medical Professionals’ Reliance on Medical AI, this will involve creating dedicated positions at the institutional level that require both medical and (some) technical expertise, e.g., in the form of a Chief Medical Officer or Medical Information Officer. The following discussion assumes that such positions have been created, and that medical professionals and patient representatives with sufficient time, training and resources are therefore available to take part in the process.

As discussed above, opening up the development phase to medical professionals and patients, and funding the human resources needed, will increase the costs of developing medical AI applications. These are shared costs among all stakeholder groups and, given the anticipated benefits of AI applications (e.g., Bohr and Memarzadeh cite financial gains for the pharmaceutical industry from the widespread introduction of medical AI in excess of USD 100 billion annually26), should be subsidised by oversight bodies, institutions and governments, i.e., they should not be borne solely by the developers of AI. As argued above, we think that these costs are worth the ethical gains achieved by preserving the desired attitudes between patients, professionals and medical AI. Furthermore, while participation in the framework presented here comes with initial extra costs, it might ultimately raise the profitability of the application by making it more acceptable to patients and medical professionals. For example, the widespread adoption of diagnostic tools by medical professionals is often impeded by their feeling insufficiently briefed on how the technology works and insufficiently informed about biases and potential difficulties. The framework presented here is designed to close such gaps and could therefore ultimately increase the profitability of a product.

The enactment of envelopment components (MR1) and (PT1) requires that both medical professionals and patients are involved in discussions about the training data and methodology, including gaining an in-depth understanding of the limitations and difficulties of the algorithm, as well as in the design of the application’s eventual user interface. This requires that developers identify relevant groups of medical professionals and patients before the main phase of the development process and, as explained above, that the corresponding positions are created by medical institutions and patients’ advocacy groups. It is likely that this process can utilise existing representational and advocacy infrastructure; e.g., the developers of an application aimed at diagnosing skin cancer could contact national skin cancer patients’ advocacy groups and professional dermatologists’ organisations in the first instance. However, special care should be taken to identify minority groups within both the patients’ and the medical professionals’ communities. For example, an application to be deployed in different geographical regions should have representation (in the form of expertise and in-depth knowledge, not necessarily in person) from all those regions involved in the development process. We are currently at a stage in medical AI distribution where channels for the routine involvement of medical professionals and patients in the development of medical AI could be established as standard components of the development phase, before the division between commercial developers and users becomes entrenched. It is therefore paramount to begin enacting the envelopment of medical AI as soon as possible, to avoid the calcification of structures that ultimately do not fulfil the aim of enabling a socially successful use of medical AI (Envelopment for Medical AI).

While (MR1) and (PT1) require enactment throughout the development period, (MR2), (MR3) and (PR1) should be enacted in the later part of the medical AI’s development stage. To enact (MR2), once the first development and training phase of the AI application has been concluded, a comprehensive, accessible factsheet describing its limitations, risks and safe-use parameters should be compiled, using input from medical professionals, patients and developers. Given that even patient-accessible AI will still require the supervision of medical professionals, the target audience for this factsheet is the medical professionals who will be using or supervising the use of the medical AI. Once the factsheet is available, medical institutions can begin the enactment of (MR3), i.e., an ethical evaluation of the product using the factsheet as well as testimony from the medical professionals and patients involved in the development process (MR1, PT1). Similarly, towards the end of the product development stage, the enactment of envelopment component (PR1), i.e., the compilation and design of accessible documentation about the data safety and algorithmic limitations of the AI, should begin. This documentation should have patients as its target audience and (as discussed in Enveloping for Patients’ Reliance on Medical AI) a special emphasis should be placed on accessibility. In other words, the familiar practice of presenting users with a wealth of inaccessible small print right before they start using the application should be avoided.

Implementation

At the end of the development phase, medical institutions should be able to gain access to a standardised factsheet (MR2) and a beta version of the medical AI. They can then start implementing the AI into clinical structures and treatment protocols. Ideally, each institution would have a chance to interact directly with the developers, to make particular inquiries and to obtain specific guidance and information. This should enable institutions to enact enveloping component (MR3), i.e., the ethical assessment at the institutional level. It is important to stress that this ethical assessment needs to be conducted before any test phase, and for each institution separately, taking into account the realities of its clinical and organisational structures. Similarly, the information about the medical AI provided to the medical institution must be detailed enough to enable it to judge whether the application can be used for its specific cohort of patients. This requires awareness of biases in the data and algorithm that might affect that patient cohort specifically, e.g., biases in the availability of training data for minority groups, or specific problems in using the application under certain conditions. In some cases, e.g., patient-accessible medical AIs, the ethical evaluation will likely be conducted primarily by an oversight body (e.g., NICE in the UK) rather than by individual institutions.

If the application passes the first ethical assessment, institutions will likely want to beta-test the product, either by using it in a restricted setting or by using the medical AI in addition to established treatment and diagnosis protocols. At this stage, the institution will enact enveloping component (PT2), i.e., the development of guidelines for the integration of medical AI into clinical consultation and treatment processes. To preserve trust between the medical professional and the patient (Enveloping for Patients’ Trust in Medical Professionals), the guidelines need to ensure that patients have ring-fenced opportunities to discuss the results of any medical AI with a medical professional. Similarly, medical professionals need to be given time and resources to gain a sufficient understanding of the AI to conduct such consultations and must feel able to make independent decisions about the use of the AI in specific cases. It should always be possible for clinicians to opt out of using an application, e.g., if they think that it is too opaque for them to rely on or that it is the wrong choice for the treatment of a specific patient. The guidelines therefore need to guarantee that institutional treatment protocols and clinical pathways allow for such decisions to be made, e.g., by requiring a brief written assessment of the suitability of the medical AI before it is incorporated into each treatment plan, or by similar institutional control structures. Most importantly, the guidelines developed under component (PT2) need to ensure that any desire to capitalise on the time-saving potential of medical AI does not lead to a scenario in which medical professionals no longer weigh up the advantages and disadvantages of using the medical AI in each case, or in which patients lack any opportunity to consult with medical professionals about the use and results of a given medical AI.

The latter concern, i.e., the fact that AI applications have the potential to disrupt the trust-based relationship between medical professionals and patients by reducing opportunities for personal consultations, should be addressed through envelopment component (PR3), i.e., the enactment of binding safeguards for the involvement of medical professionals. In particular, the implementation of medical AI into any treatment protocol must include a face-to-face consultation for each patient that is specifically set aside for discussing the use of the medical AI. In the first instance, binding safeguards can be implemented through (international or national) medical, clinical or standard treatment guidelines issued by professional associations and adopted by individual medical institutions. Alternatively, they can become legal requirements enacted by the relevant government authority (e.g., the Medicines and Healthcare products Regulatory Agency in the UK). In practice, binding safeguards are often first implemented as guidelines to be adopted by medical institutions, which are enforced through quality reporting and rating systems and only become legal requirements if this approach fails (e.g., see the recent enactment of ‘Martha’s Rule’ on the patient’s right to a second opinion30). We assume that a similar dynamic approach will be taken in the case of medical AI and that continued engagement with envelopment frameworks like the one presented here will eventually lead to a stable combination of national and international guidelines and legal regulations.

Lastly, during the implementation phase, enveloping component (PR2) needs to be enacted, i.e., legal and best-practice guidelines and documentation for each medical AI need to be made available to patients. As with the data-safety documentation developed under (PR1), particular emphasis should be placed on accessibility for patients. It is not sufficient simply to make the actual legal texts or the medical societies’ guidelines available to patients. Instead, the information needs to be presented such that patients can gain a comprehensive understanding within a reasonable amount of time and with reasonable effort. The development of this documentation should therefore involve both patient advocacy groups and communication specialists. Inspiration could be taken from documentation developed for pharmaceuticals or for more complex medical scenarios such as organ transplantation. However, given the particular challenges of communicating how AI works, this documentation would ideally be developed in consultation with science communication specialists. The amount and format of documentation required will depend on the medical AI and the risk to the patient; it could range from leaflets and medical information sheets to short information films or interactive websites.

In addition to written documentation, medical institutions should also be required to offer opportunities for patients to consult with medical professionals and administrators on the laws and regulations pertaining to the medical AI. As is the case with other complex treatment protocols, patient advocacy groups could also be funded to offer advice, consultations and further documentation of the legal and regulatory framework.

Notably, all enveloping components must be implemented before the mainstream application of the medical AI. In other words, a medical AI should only be released for general use once it is fully enveloped, i.e., once it is embedded in a framework of appropriate guidelines, regulations and legislation (Envelopment for Medical AI). During the final phase of the systems life cycle (Application), the enactment of the envelopment components only needs to be updated to fit the changing use of the product.

Application

As is evident from the discussion above, all enveloping components should be enacted during the first two phases of the systems life cycle. However, during the application phase of the product, it is important that further enactment of the envelopment takes place and that existing enactments are updated continuously. In particular, to enact enveloping component (MR3), i.e., ethical assessment on the institutional level, regular ethical assessments need to be performed during the application phase of the medical AI’s lifecycle. Such assessments should involve eliciting feedback from both medical professionals and patients on the use of the medical AI. Additionally, patient advocacy groups and medical professionals should be consulted regularly on ethical issues that can arise during the mass rollout of the AI and its use in the treatment of a growing number of patient groups. Medical institutions should therefore conduct regular ethical assessments of the use of the medical AI throughout its lifecycle, and particularly during growth periods.

The increased use of a medical AI during the growth period will also generate more feedback on the existing enactments of (PT2), (PR2) and (PR3), i.e., the guidelines for the integration of medical AI into clinical consultations and medical assessment; the legal and regulatory documentation for each medical AI; and the binding safeguards for the involvement of medical professionals, respectively. Oversight bodies, legislators and medical institutions need to make sure that such feedback is regularly collected from both patients and medical professionals, including feedback on the accessibility of the documentation of relevant legislation. To ensure that such updating happens regularly, oversight bodies will likely need to enforce a schedule of regular reviews and audits. The exact spacing of such reviews will depend on the specifics of the medical AI but, at least in the early stages of application or during particular growth periods, reviews should take place at least annually.

Discussion

We developed a framework for the socially successful use of medical AI, based on the assumption that a core feature of such successful use will be the preservation of a trust-based relationship between patients and medical professionals (Trust and Reliance in Healthcare and Medical AI). In addition to fostering trust between patients and medical professionals, we also require that both medical professionals and patients can rely (Trust and Reliance) on the medical AI. By making this triangle of attitudes (Fig. 1) the focal point of our framework, we were able to derive eight envelopment components that are directly aimed at fostering and preserving those attitudes (Table 1). We use the term envelopment to denote the provision of an envelope of guidelines, norms, laws and practices around the AI that allows its socially successful use. Our initial analysis of which attitudes between patients, medical professionals and medical AI have been found to foster treatment success and satisfaction allowed us to capitalise on existing research into those attitudes and to use this research to unpack the notion of ‘socially successful’ in the definition of envelopment. To our knowledge, ours is the first framework to take this relationship- and attitude-focused approach. Similarly, the eight enveloping components we derived are novel in that they are more comprehensive than those proposed in other discussions of the ethics of medical AI.

We then developed a guide for the enactment of those envelopment components at each of the three stages of an AI’s lifecycle (Fig. 2). Our framework is demanding in that it requires close interaction between medical institutions, medical professionals, patients and the developers of medical AI. In particular, it will require the former three groups to take a much more active role during the development and implementation of the medical AI than is currently the case. However, we believe that this is necessary to preserve the trust of patients in medical professionals (often judged to be fragile, see Trust and Reliance in Healthcare and Medical AI) and to enable medical professionals to rely on medical AI as a medical methodology. At the same time, our detailed description of how each component can be enacted demonstrates that the framework can be implemented within the usual sequence of product development. Our project is also novel in bringing together an ethical framework (Envelopment for Medical AI) with an enactment plan based on a product lifecycle. In the future, we hope to partner with developers, medical institutions and patient advocacy groups to enact the framework in real life for new medical AI applications. This paper therefore constitutes the theoretical underpinning of a larger, practice-focused project for the envelopment of medical AI.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

References

Siala, H. & Wang, Y. SHIFTing artificial intelligence to be responsible in healthcare: a systematic review. Soc. Sci. Med. 296, https://doi.org/10.1016/j.socscimed.2022.114782 (2022).

Robbins, S. AI and the path to envelopment: knowledge as a first step towards the responsible regulation and use of AI-powered machines. AI Soc. 35, 391–400 (2020).

Faulkner, P. & Simpson, T. Introduction. In Faulkner, P. & Simpson, T. (eds) The Philosophy of Trust 1–15 (Oxford: Oxford University Press, 2017).

Baier, A. Trust and Antitrust. Ethics 96, 231–260 (1986).

McGeer, V. & Pettit, P. The empowering theory of trust. In Faulkner, P. & Simpson, T. (eds) The Philosophy of Trust 15–34 (Oxford: Oxford University Press, 2017).

Stahl, B. C. Information, ethics & computers: the problem of autonomous moral agents. Minds Mach. 14, 67–83 (2004).

Johnson, D. G. Computer systems: moral entities but not moral agents. Ethics Inf. Technol. 8, 195–204 (2006).

Birkhaeuer, J. et al. Trust in health care professionals and health outcomes: a meta-analysis. PLoS One, https://doi.org/10.1371/journal.pone.0170988 (2017).

Wolfensberger, M. & Wrigley, A. Can we restore trust? In Wolfensberger, M. & Wrigley, A. Trust in Medicine: Its Nature, Justification, Significance, and Decline 207–224 (Cambridge: Cambridge University Press, 2017).

Brown, M. T. et al. Medication adherence: truth and consequences. Am. J. Med. Sci. 351, 387–399 (2016).

Lean, M. et al. Self-management interventions for people with severe mental illness: systematic review and meta-analysis. Br. J. Psychiatry 214, 260–268 (2019).

Massimi, A. et al. Are community-based nurse-led self-management support interventions effective in chronic patients? Results of a systematic review and meta-analysis. PLoS One, https://doi.org/10.1371/journal.pone.0173617 (2017).

Brown, C., Story, G. W., Mourao-Miranda, J. & Baker, J. T. Will artificial intelligence eventually replace psychiatrists? Br. J. Psychiatry 1–4 (2019).

Cumyn, A. et al. Informed consent within a learning health system: a scoping review. Learn. Health Syst. 4, 1–14 (2020).

Carter, M. S. et al. The ethical, legal and social implications of using artificial intelligence systems in breast cancer care. Breast 49, 25–32 (2020).

Morley, J. et al. The ethics of AI in health care: a mapping review. Soc. Sci. Med. 260, 113172 (2020).

Chang, S. & Lee, T. H. Beyond evidence-based medicine. N. Engl. J. Med. 379, 1983–1985 (2018).

Masic, I., Miokovic, M. & Muhamedagic, B. Evidence-based medicine: new approaches and challenges. Acta Inform. Med. 16, 219–252 (2008).

Ammenwerth, E. Technology acceptance models in health informatics: TAM & UTAUT. Stud. Health Technol. Inform. 263, 64–71 (2019).

Kerasidou, C., Kerasidou, A., Buscher, M. & Wilkinson, S. Before and beyond trust: reliance on medical AI. J. Med. Ethics 48, 852–856 (2021).

Floridi, L. Children of the fourth revolution. Philos. Technol. 24, 227–232 (2011).

Kiseleva, A., Kotzinos, D. & De Hert, P. Transparency of AI in healthcare as a multilayered system of accountabilities: between legal requirements and technical limitations. Front. Artif. Intell. 5, 1–21 (2022).

De, T., Giri, P., Mevawala, A., Nemani, R. & Deo, A. Explainable AI: a hybrid approach to generate human-interpretable explanation for deep learning predictions. Procedia Comput. Sci. 168, 40–48 (2020).

Kagawa-Singer, M. Population science is science only if you know the population. J. Cancer Educ. 21, 22–31 (2006).

Chellappa, R. & Niiler, E. Can We Trust AI? (Baltimore: Johns Hopkins University Press, 2022).

Bohr, A. & Memarzadeh, K. (eds) Artificial Intelligence in Healthcare (Academic Press, 2020).

World Medical Association. WMA Declaration of Geneva. 68th WMA General Assembly, https://www.wma.net/policies-post/wma-declaration-of-geneva/ (2017).