Abstract

Advances in digital technologies have allowed remote monitoring and digital alerting systems to gain popularity. Despite this, limited evidence exists to substantiate claims that digital alerting can improve clinical outcomes. The aim of this study was to appraise the evidence on the clinical outcomes of digital alerting systems in remote monitoring through a systematic review and meta-analysis. A systematic literature search, with no language restrictions, was performed to identify studies evaluating healthcare outcomes of digital sensor alerting systems used in remote monitoring across all (medical and surgical) cohorts. The primary outcome was hospitalisation; secondary outcomes included hospital length of stay (LOS), mortality, and emergency department and outpatient visits. Standard, pooled hazard ratio, and proportion-of-means meta-analyses were performed. A total of 33 studies met the eligibility criteria, of which 23 allowed for meta-analysis. A pooled random effects analysis showed a 9.6% mean decrease in hospitalisation favouring digital alerting systems; however, pooled weighted mean differences and hazard ratios did not reproduce this finding. Digital alerting reduced hospital LOS by a mean difference of 1.043 days. A 3% mean decrease in all-cause mortality with digital alerting systems was noted. There was no benefit of digital alerting with respect to emergency department or outpatient visits. Digital alerts can considerably reduce hospitalisation and length of stay for certain cohorts in remote monitoring. Further research is required to confirm these findings and to trial different alerting protocols, so as to understand optimal alerting and guide future widespread implementation.


Introduction

With an ever-ageing population, itself a result of significant improvements in healthcare delivery, medicine, and personal and environmental hygiene, a greater burden is placed on primary and secondary healthcare facilities1. The rising costs of healthcare delivery require novel strategies to improve healthcare service provision2, particularly strategies that prove cost-effective and are widely accepted by citizens.

Telemedicine, a concept dating back to the 1970s, has evolved to become synonymous with terms such as digital health, e-health, m-health, wireless health, and remote monitoring, among others. Indeed, over 100 unique definitions of ‘telemedicine’ have been identified, a variation likely attributable to the progression of these technologies3,4. Remote monitoring allows people to continue living at home rather than in expensive hospital facilities by using non-invasive digital technologies (such as wearable sensors) to collect health data and to support health provider assessment and clinical decision making5. Several randomised trials have demonstrated the potential of remote monitoring to reduce in-hospital visits, the time required for patient follow-up, and hospital costs in individuals fitted with cardiovascular implantable electronic devices6,7,8.

Vital signs, including heart rate (HR), respiratory rate (RR), blood pressure (BP), temperature, and oxygen saturation, are considered a basic component of clinical care and an aid in detecting clinical deterioration; changes in these parameters may occur several hours before an adverse event9,10. Because wearable sensors are lightweight, small, and discreet, they can be powerful diagnostic tools for continuously monitoring important physiological signs. They offer a non-invasive, unobtrusive opportunity for sensor alerting systems to monitor patients remotely, with the potential to improve the timeliness of care and health-related outcomes11.

Feedback loops and alerting mechanisms allow appropriate action to be taken once clinical deterioration is recognised. Current alerting mechanisms for remote monitoring include alert transmission to a mobile device, automated emails to a healthcare professional, video consultation, interactive voice response, and web-based consultation12. Feedback can be relayed to nurses, pharmacists, physicians, and counsellors, but also to patients13. Earlier recognition of deterioration through alerting mechanisms has the potential to improve clinical outcomes such as hospitalisation, length of stay, mortality, and subsequent hospital visits, but it has been inadequately studied.

A recent systematic review reported outcomes of remote monitoring in individuals in the community with chronic diseases (e.g., hypertension, obesity, and heart failure), but many of the included studies were of low quality and underpowered, and its meta-analyses, each consisting of few studies, were limited to obesity-related intervention outcomes (body mass index, weight, waist circumference, body fat percentage, systolic blood pressure, and diastolic blood pressure)13. Additionally, that review did not focus on the feedback loops and alerting mechanisms that follow recognition of abnormal parameters, a pivotal phase in which intervention could influence clinical outcomes. Given that the search was performed in 2006 and that the field has evolved rapidly, an updated systematic review focused on digital alerting mechanisms is warranted, with the inclusion of wider medical and surgical cohorts for generalisability. The aim of this systematic review is to identify studies evaluating digital alerting systems used in remote monitoring and to describe the associated clinical outcomes.

Results

Study characteristics

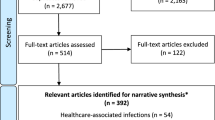

A total of 2417 citations were retrieved through literature searches, and an additional two articles were found through bibliography cross-referencing. Full-text review was performed for 128 articles, with 33 meeting the inclusion criteria for analysis; of these, 21 were randomised controlled trials and the remainder were prospective or retrospective studies. Of the 33 included studies, 23 allowed for meta-analysis. The characteristics of the included studies are shown in Table 1. A PRISMA flow diagram is shown in Fig. 1.

Hospitalisation and inpatient admissions

Six studies demonstrated a mean decrease in hospitalisation/inpatient admissions of 9.6% (95% CI 4.9 to 14.3%, I² = 96.4%, Fig. 2) favouring digital alerting systems in a pooled random effects analysis. However, the pooled WMD from six studies showed no significant change in hospitalisation (WMD 0.061; 95% CI −0.197 to 0.318, I² = 78%)14,15,16,17,18,19. Pooled HRs for all-cause hospitalisation similarly demonstrated no significant difference (HR 0.916; 95% CI 0.781 to 1.074, I² = 0%)20,21.

Six additional studies reporting on cardiovascular-related hospitalisation revealed no significant relationship with digital alerting (mean change −10.1%; 95% CI −24.9 to 4.7%, I² = 95.6%; pooled HR 0.907; 95% CI 0.757 to 1.088, I² = 2.4%)20,22,23,24,25.

Mortality

A total of 16 papers were included; a pooled random effects analysis of 12 studies demonstrated a 3% mean decrease in all-cause mortality with digital alerting systems (95% CI 2 to 3%, I² = 94.4%, Fig. 3), although heterogeneity was high. However, pooled HRs from five studies showed no significant change in all-cause mortality (HR 0.89; 95% CI 0.79 to 1.01, I² = 30.3%)20,21,25,26,27.

A cardiovascular sub-group pooled random effects analysis failed to demonstrate a relationship between cardiovascular mortality and digital alerting (mean decrease 0.9%; 95% CI −0.6 to 2.4%, I² = 25.7%)20,24.

Length of stay

Ten studies were included; digital alerting reduced hospital LOS by a mean difference of 1.043 days (95% CI 0.028 to 2.058 days, p < 0.001, I² = 95.5%)14,15,16,17,18,24,28,29,30,31. Three studies reporting on LOS in chronic obstructive pulmonary disease (COPD) found no benefit of digital alerting (mean difference 0.919 days; 95% CI −1.878 to 3.717 days, p = 0.213, I² = 35.3%)17,30,31.

Emergency department visits

Eight studies were included; a pooled random effects analysis of ED visits demonstrated no statistically significant benefit of digital alerting (mean difference 0.025; 95% CI −0.032 to 0.082, I² = 51.8%)14,16,17,18,19,22,28,32.

Outpatient and office visits

Five studies were included; a pooled random effects analysis demonstrated no benefit of digital alerting (mean difference 0.223; 95% CI −0.412 to 0.858, I² = 95.7%)14,17,18,28,32. Sub-group data from Ringbaek et al.17 (respiratory and non-respiratory visits) and Lewis et al.28 (primary care chest and non-chest-related visits) were combined for this analysis.

Similarly, no statistically significant mean decrease in outpatient visits was noted from three additional studies27,33,34.

Sub-group analysis of a respiratory cohort demonstrated a mean difference of 1.346 in outpatient visits favouring digital alerting (95% CI 0.102 to 2.598, I² = 93.8%)17,28.

Risk of bias assessment

The assessment of risk of bias for included randomised trials is presented in Fig. 4.

Allocation was random across all 20 studies, with 15 adequately stating the method used to generate the random sequence17,18,19,20,21,28,30,31,33,34,35,36,37,38,39. Vianello et al.31 used a dedicated algorithm to check for imbalances in baseline variables and clearly detailed their randomisation sequence methods. However, concealment measures were not mentioned, resulting in a judgement of ‘some concerns’ for risk of bias arising from randomisation. Three additional studies were given the same judgement owing to a lack of concealment descriptions15,19,35. Ringbaek et al.17 clearly described their randomisation method, but information on concealment was not given and baseline demographic differences were noted between groups; randomisation was therefore judged to be at high risk of bias. Similarly, randomisation for Scherr et al.24 was deemed to be at high risk of bias.

Sink et al.39 blinded participants, with digital alerts in the control arm not forwarded to healthcare providers. Participant blinding was possible because their automated telephone intervention collected self-reported symptom data rather than continuously recording physiological parameters through wearable sensors or smart devices, as the other trials did. A low risk of bias was therefore judged.

The risk of attrition bias was deemed low across all included studies, with losses to follow-up clearly reported and judged not to have affected the overall results. Follow-up was complete for most participants.

Insufficient information was provided to assess whether other important risks of bias existed in four studies, so these were judged as ‘some concerns’17,20,23,31. Basch et al.35 divided participants into computer-experienced and computer-inexperienced groups, but numbers across the arms were unequal for selected outcome measures; a judgement of high risk of bias was therefore given. Comparably, Scherr et al.24 performed both intention-to-treat and per-protocol analyses, and only the per-protocol analysis revealed significant results favouring their telemonitoring system.

Overall, seven studies were deemed to be at low risk, ten studies had some concerns, and the remaining were judged as high risk of bias.
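The overall judgements above aggregate domain-level judgements. A minimal sketch of the default aggregation rule described for the RoB 2 tool53 is given below, assuming three judgement levels (‘low’, ‘some concerns’, ‘high’). It is illustrative rather than the authors’ procedure, the function name is hypothetical, and it does not encode the reviewer discretion RoB 2 allows to rate a study as high risk when several domains raise some concerns.

```python
from typing import Iterable

# Judgement levels assumed to follow RoB 2 wording.
LEVELS = ("low", "some concerns", "high")


def overall_risk_of_bias(domain_judgements: Iterable[str]) -> str:
    """Combine domain-level judgements into an overall judgement.

    Simplified rule: any 'high' domain gives 'high'; otherwise any
    'some concerns' domain gives 'some concerns'; otherwise 'low'.
    Reviewer discretion to escalate multiple 'some concerns' is not modelled.
    """
    judgements = list(domain_judgements)
    if not judgements:
        raise ValueError("at least one domain judgement is required")
    unknown = [j for j in judgements if j not in LEVELS]
    if unknown:
        raise ValueError(f"unrecognised judgement(s): {unknown}")
    if "high" in judgements:
        return "high"
    if "some concerns" in judgements:
        return "some concerns"
    return "low"
```

For example, a trial with ‘some concerns’ for randomisation and ‘low’ in every other domain, `overall_risk_of_bias(["some concerns", "low", "low", "low", "low"])`, returns `"some concerns"`.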

Alerting mechanisms and response to alerts

Table 2 summarises the alerting mechanisms used in the included studies. Mechanisms include text messaging, email notifications, and alerts on telemonitoring hubs or web-based platforms, as well as trials of audible alerts delivered to study participants rather than to healthcare professionals.

Discussion

This meta-analysis provides evidence that digital alerting mechanisms used for remote monitoring are associated with reductions in hospitalisation and inpatient admissions. All pooled studies were prospective, and the majority were randomised trials. However, most of the included studies were of low quality (Table 1), and only two had follow-up periods beyond 12 months20,21. The included studies were highly heterogeneous, so the results should be interpreted cautiously, but they may suggest that digital alerting in remote monitoring could be beneficial across a variety of patient cohorts. Pooled mean differences, however, did not reproduce this finding; the studies in that analysis had longer follow-up periods14,15,16,17,18,19. One possible explanation is the difference in the cohorts analysed: the mean difference analysis contained more individuals with chronic medical conditions (e.g., COPD, heart failure), whereas the proportion analysis encompassed acute surgical cohorts with shorter follow-up periods.

A 2016 study reported that avoidable hospitalisation increased by a factor of 1.35 for each additional chronic condition and 1.55 for each additional body system affected40,41. A chronic disease cohort is therefore particularly susceptible to recurrent hospitalisation. While digitisation may play a role in changing healthcare delivery, hospital departmental factors (e.g., seniority of the reviewing clinician, how busy the department is, community service delivery) and external factors (e.g., patient education and activation, behavioural attitudes towards digitisation, available social support) are likely to contribute significantly and may affect the widespread deployment of novel digital technologies42.
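To illustrate how such per-condition factors compound, the sketch below assumes that each additional chronic condition (or body system) multiplies the baseline likelihood of avoidable hospitalisation by the reported factor, as an odds or rate ratio from a log-linear model would. Whether the cited estimates behave exactly this way is an assumption, and the function name is hypothetical.

```python
# Reported per-unit factors (taken from the text); the multiplicative
# compounding across additional conditions is an illustrative assumption.
PER_CONDITION_FACTOR = 1.35
PER_BODY_SYSTEM_FACTOR = 1.55


def compounded_factor(additional_units: int, factor: float) -> float:
    """Multiplier relative to baseline after `additional_units` extra conditions or systems."""
    if additional_units < 0:
        raise ValueError("additional_units must be non-negative")
    return factor ** additional_units


if __name__ == "__main__":
    for k in range(4):
        print(f"{k} additional condition(s): x{compounded_factor(k, PER_CONDITION_FACTOR):.2f}")
```

Under this assumption, three additional chronic conditions correspond to roughly a 2.5-fold baseline likelihood (1.35³ ≈ 2.46), which illustrates why chronic disease cohorts may dominate hospitalisation outcomes.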

Hospital length of stay was found to be reduced with digital alerting. This is likely a result of earlier recognition of deterioration resulting in prompt clinical review and treatment administration; a recent systematic review concluded that digital alerts similarly reduced hospital length of stay in sepsis by 1.3 days43. This review adds further support to the literature demonstrating the benefit of digital alerting in remote settings across medical and surgical cohorts.

A small reduction in all-cause mortality with digital alerting systems was noted. This relationship was not reproduced by pooled hazard ratios, which may be explained by differences in the quality of the studies included in each analysis. Only three of the included studies were of high quality25,26,27, and substantial weighting was given to a 2013 study by Baker et al.26 that used the Health Buddy telemonitoring platform, which has since become obsolete. Early iterations of digital alerting and telemonitoring platforms may have had significant shortcomings that hindered successful use, which could explain the described relationships.

Emergency department visits showed no benefit of digital alerting mechanisms in pooled mean differences. Earlier recognition of deterioration should prevent presentations to emergency departments and inpatient hospitalisations, with non-urgent reviews scheduled as outpatient visits. Despite this, there was no change in overall outpatient or clinic visits. However, respiratory sub-group data did demonstrate a reduction in outpatient visits, though this analysis was based on only two studies. Further randomised trials in specific medical cohorts and conditions may clarify whether digital alerting affects outpatient visits. Additionally, research capturing scheduled and unscheduled presentations to hospital, including emergency department visits, outpatient visits, and hospitalisations, would be vital in determining whether workloads can be altered across these departments.

Despite the significance of the outcomes assessed, our analysis had limitations arising from the variety of methodologies used and the overall study quality, with the majority of studies scoring low. One challenge of this review was its relatively broad scope in studying the effectiveness of digital alerting on clinical outcomes. While this allowed us to examine similarities across various alerting mechanisms, it created significant heterogeneity. This breadth was chosen to assess the effectiveness of alerting tools pragmatically across various cohorts and so determine their overall efficacy as an aid to clinical decision making. Nevertheless, this limitation, largely a result of the paucity of high-quality, robust literature, must be acknowledged, as it limits the conclusions that can be drawn. The included non-randomised studies, owing to their observational nature, are prone to selection bias, particularly pre-post implementation designs, which can be confounded by longitudinal changes in healthcare provision. Moreover, integrated feedback loops and responses to alerts are likely to contribute to the Hawthorne effect44, an additional source of bias. Nonetheless, this review characterises the digital alerting literature comprehensively across a large number of variables, which, to the authors’ knowledge, has not been undertaken previously.

Further research is required to answer several important questions. First, the optimal frequency of alerting: a range of remote monitoring schedules were used for data collection, including continuous15, daily16,17,18,21,22,23,24,25,27,29,30,34,38,39,45,46,47,48,49, only during office working hours (Monday–Friday)28,31,32, and weekly19. Given this diverse methodology, variation in response time would be expected, with the potential to miss early signs of acute deterioration. Less intensive monitoring schedules may suit cohorts less prone to acute deterioration; regardless, less frequent schedules leave a ‘window of opportunity’ in which clinical deterioration may be missed. Second, which team members should be alerted and what type of alert should be used. Alerts were frequently generated when pre-established, often tailorable, thresholds were breached or when symptom questionnaires returned concerning responses, resulting in web-platform notifications, email alerts, telephone calls, text messages, or pager messages sent to members of the healthcare team (Table 2). In contrast, Santini et al.49 used audible alarms to alert patients when thresholds were breached, empowering individuals to contact their responsible physician for further assessment. It is unlikely that one type of alert will suit all individuals, but further work is required to identify the most rapidly acknowledged and actionable alerts, including the exploration of alerts sent to individuals alongside healthcare professionals.
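The threshold-based alerting described above can be sketched as follows. The vital-sign names, limits, and recipient labels are hypothetical placeholders chosen for illustration, not clinical recommendations or values taken from the included studies; the optional patient notification mirrors the patient-directed alerting described for Santini et al.49.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Threshold:
    """Inclusive acceptable range for one parameter (hypothetical values)."""
    low: float
    high: float


@dataclass(frozen=True)
class Alert:
    parameter: str
    value: float
    recipients: Tuple[str, ...]


# Hypothetical default limits; in the reviewed studies thresholds were often tailorable.
DEFAULT_THRESHOLDS: Dict[str, Threshold] = {
    "heart_rate": Threshold(50, 110),
    "respiratory_rate": Threshold(10, 24),
    "spo2": Threshold(92, 100),
    "temperature": Threshold(35.5, 38.0),
}


def check_reading(
    reading: Dict[str, float],
    thresholds: Optional[Dict[str, Threshold]] = None,
    clinicians: Tuple[str, ...] = ("nurse",),
    notify_patient: bool = False,
) -> List[Alert]:
    """Return one alert per monitored parameter that falls outside its range."""
    limits = DEFAULT_THRESHOLDS if thresholds is None else thresholds
    recipients = clinicians + (("patient",) if notify_patient else ())
    alerts = []
    for parameter, value in reading.items():
        limit = limits.get(parameter)
        if limit is None:
            continue  # parameter not monitored for this patient
        if value < limit.low or value > limit.high:
            alerts.append(Alert(parameter, value, recipients))
    return alerts
```

For example, `check_reading({"heart_rate": 125, "spo2": 95})` returns a single heart-rate alert addressed to `("nurse",)`, and passing `notify_patient=True` adds the patient as a recipient. Passing a per-patient `thresholds` mapping is one way to represent tailorable limits.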

In conclusion, this review provides evidence that digital alerts used in remote monitoring can reduce hospital length of stay and mortality and may reduce hospitalisations. Digital technologies continue to evolve and have the capacity to change current healthcare provision, particularly in the COVID-19 era. There is a need for large, robust, multicentre randomised trials studying digital alerting mechanisms in varied cohorts of individuals. Trials should compare different alerting protocols to understand optimal alerting and guide future widespread implementation, not only within secondary and tertiary care settings but, importantly, in primary care, as implementing new technologies in home settings has the potential to truly revolutionise healthcare delivery.

Methods

Search strategy and databases

This systematic review was conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) guidelines50. The review was registered with the International Prospective Register of Systematic Reviews (PROSPERO ID: CRD42020171457).

A systematic search was performed through Ovid in the Medline, EMBASE, Global Health, Health Management Information Consortium (HMIC), and PsycINFO databases without language restriction. Appropriate MeSH terms and free-text all-field searches were combined with Boolean operators for “home”, “monitoring”, “remote sensing”, “self-monitor*”, “self-track*”, “remote monitor*”, “home monitor*”, “biosensing techniques”, “wireless technology”, “telemedicine”, “monitoring, physiologic”, “monitoring, ambulatory”, “home care services”, “ehealth”, “mhealth”, “telehealth”, “digital”, “mobile”, “social networking”, “internet”, “smartphone”, “cell phone”, “wearable electronic devices”, “electronic alert*”, “alert*”, “messag*”, “text messaging”, “inform”, “communicat*”, “communication”, “patient-reported outcome measures”, “outcome and process assessment”, “outcome”, “treatment outcome”, “outcome assessment”, “fatal outcome”, “adverse outcome pathways”, “patient outcome assessment”, “morbidity”, “mortality”, “length of stay”, “patient admission”, “readmission”. Further studies not captured by the search were identified through bibliographic cross-referencing.
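The sketch below illustrates one common way of combining free-text terms with Boolean operators: terms are OR-ed within a concept group and the groups are AND-ed together. The grouping shown uses a subset of the listed terms and is a hypothetical example; the exact structure of the search, and Ovid-specific field tags and syntax, are not reproduced here.

```python
from typing import Dict, List

# Hypothetical concept groups built from a subset of the listed search terms.
CONCEPT_GROUPS: Dict[str, List[str]] = {
    "remote monitoring": ["remote monitor*", "home monitor*", "telemedicine", "wearable electronic devices"],
    "alerting": ["electronic alert*", "alert*", "text messaging"],
    "outcomes": ["mortality", "length of stay", "patient admission", "readmission"],
}


def build_boolean_query(groups: Dict[str, List[str]]) -> str:
    """OR the terms within each concept group, then AND the groups together."""
    clauses = []
    for terms in groups.values():
        if not terms:
            continue  # skip empty groups rather than emitting "()"
        clauses.append("(" + " OR ".join(f'"{term}"' for term in terms) + ")")
    return " AND ".join(clauses)


if __name__ == "__main__":
    print(build_boolean_query(CONCEPT_GROUPS))
```

Grouping by concept keeps the search sensitive within each concept (any synonym matches) while the AND between groups keeps it specific to studies that touch all three concepts.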

All identified studies were uploaded to Covidence, a Cochrane-supported systematic review tool51. Initial screening against the eligibility criteria was conducted by one investigator and verified by a second. Discrepancies were discussed and resolved by consensus. Studies meeting the inclusion criteria underwent full-text screening, and supplemental references were scrutinised for additional relevant articles.

Study selection criteria and outcome measures

Published studies reporting the primary and secondary outcomes listed below were included, with no language restrictions. Included participants were adults (aged 18 years or over) discharged home with a digital alerting system (i.e., wearable sensor, non-invasive wireless technology, telemedicine, or remote monitoring). The last search was performed in October 2019.

Abstracts, conference articles, opinion pieces, editorials, case studies, reviews, and meta-analyses were excluded from the final review. Studies with inadequate published data relating to the primary and secondary outcome measures were additionally excluded.

Data extraction

The primary outcome measure was hospitalisation, including inpatient admissions. Secondary outcome measures were mortality, hospital length of stay (LOS), emergency department visits, and outpatient visits.

All included study characteristics and outcome measures were extracted by one investigator and verified by a second. All full-text reports of studies identified as potentially eligible after title and abstract review were obtained for further review.

Quality assessment (risk of bias)

The methodological quality of randomised controlled trials (RCTs) was assessed with the Jadad scale52. Scores range from 0 to 5; scores <3 were considered low quality and scores ≥3 high quality52. The Cochrane risk of bias tool was used to assess internal validity; it assesses (i) random sequence generation; (ii) allocation concealment; (iii) blinding; (iv) completeness of outcome data; and (v) selective outcome reporting, classifying studies as at low risk of bias, some concerns, or high risk of bias53. Non-randomised studies were assessed using the Newcastle-Ottawa scale54, which comprises three domains: (i) patient selection; (ii) comparability of study groups; and (iii) assessment of outcomes. Scores range from 0 to 9; scores ≤3 were considered low quality, 4–6 moderate quality, and ≥7 high quality. Quality was assessed by one reviewer and validated by a second.
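The score cut-offs above translate directly into a classification rule. A minimal sketch follows; the function names are hypothetical, and only the score ranges and cut-offs are taken from the text.

```python
def jadad_quality(score: int) -> str:
    """Classify a Jadad score (0-5): <3 low quality, >=3 high quality."""
    if not 0 <= score <= 5:
        raise ValueError("Jadad scores range from 0 to 5")
    return "high" if score >= 3 else "low"


def newcastle_ottawa_quality(score: int) -> str:
    """Classify a Newcastle-Ottawa score (0-9): <=3 low, 4-6 moderate, >=7 high."""
    if not 0 <= score <= 9:
        raise ValueError("Newcastle-Ottawa scores range from 0 to 9")
    if score <= 3:
        return "low"
    if score <= 6:
        return "moderate"
    return "high"
```

Validating the score range first means a mis-keyed score raises an error rather than silently landing in a quality band.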

Data analysis

Standard, hazard ratio, and proportion-of-means meta-analyses were performed using Stata (v15.1; StataCorp LLC, TX). Effect sizes were transformed into a common metric (e.g., days for time). Where possible, the percentage change in outcomes between control and intervention arms was calculated. Hospitalisation and inpatient admissions were grouped into one variable.
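Two of the calculations above can be sketched as follows. The definition of percentage change used here (relative change of the intervention arm versus control) is an assumption, since the text does not state whether relative or absolute change was calculated. Hazard ratios are conventionally pooled on the log scale, with the standard error recovered from a reported 95% CI as (ln U − ln L)/(2 × 1.96); this is a standard approach rather than a confirmed description of the authors’ Stata procedure, and the function names are hypothetical.

```python
import math
from typing import Tuple

Z_95 = 1.959963984540054  # two-sided 95% standard normal quantile


def percent_change(control: float, intervention: float) -> float:
    """Relative change of the intervention arm versus control, in percent (assumed definition)."""
    if control == 0:
        raise ValueError("control value must be non-zero")
    return (intervention - control) / control * 100.0


def log_hr_and_se(hr: float, lower: float, upper: float) -> Tuple[float, float]:
    """Log hazard ratio and its standard error recovered from a reported 95% CI."""
    if not 0 < lower <= hr <= upper:
        raise ValueError("expected 0 < lower <= hr <= upper")
    se = (math.log(upper) - math.log(lower)) / (2 * Z_95)
    return math.log(hr), se
```

For example, a control-arm admission rate of 20% and an intervention-arm rate of 18% give `percent_change(20, 18)` of about −10%; the log-scale estimate and standard error from `log_hr_and_se` are what an inverse-variance meta-analysis would weight.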

Continuous variables were compared using weighted mean differences (WMD) with 95% CIs. Where only the median was reported, it was used in place of the mean. Where a range was reported, it was converted to a standard deviation by dividing it by four, which assumes an approximately normal distribution. Forest plots were generated for all included studies.
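The substitution and conversion rules above, together with a single study’s mean difference and its standard error, can be sketched as follows. Function names are hypothetical. The sign convention (control minus intervention, so that positive values indicate a reduction with digital alerting) is an assumption chosen for illustration, and 1.96 is used as the normal quantile for a 95% CI.

```python
import math
from typing import Optional, Tuple


def summary_mean(mean: Optional[float], median: Optional[float]) -> float:
    """Use the reported mean; fall back to the median when only it is available."""
    if mean is not None:
        return mean
    if median is not None:
        return median
    raise ValueError("neither mean nor median reported")


def summary_sd(sd: Optional[float], range_min: Optional[float], range_max: Optional[float]) -> float:
    """Use the reported SD; otherwise approximate it as range / 4 (assumes approximate normality)."""
    if sd is not None:
        return sd
    if range_min is not None and range_max is not None:
        return (range_max - range_min) / 4.0
    raise ValueError("neither SD nor range reported")


def mean_difference(
    mean_control: float, sd_control: float, n_control: int,
    mean_intervention: float, sd_intervention: float, n_intervention: int,
) -> Tuple[float, float, Tuple[float, float]]:
    """Mean difference (control - intervention), its standard error, and a 95% CI."""
    md = mean_control - mean_intervention
    se = math.sqrt(sd_control ** 2 / n_control + sd_intervention ** 2 / n_intervention)
    return md, se, (md - 1.96 * se, md + 1.96 * se)
```

For a study reporting only a median LOS and a range, `summary_mean(None, median)` and `summary_sd(None, range_min, range_max)` supply the inputs to `mean_difference`; the study-level differences and standard errors can then be pooled with inverse-variance weights.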

Data were pooled using a random effects model, and heterogeneity was assessed with the I² statistic. We considered values below 30% to indicate low heterogeneity, 30–60% moderate, and above 60% high.
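The random-effects pooling and heterogeneity classification above can be sketched as follows. The text does not name the between-study variance estimator; the DerSimonian–Laird method-of-moments estimator is assumed here because it is a common default, and the function names are hypothetical. Effects are assumed to be on an additive scale (e.g., mean differences or log hazard ratios).

```python
import math
from typing import List, Tuple


def dersimonian_laird(effects: List[float], ses: List[float]) -> Tuple[float, float, float, float]:
    """Random-effects pooled estimate, its SE, tau^2, and I^2 (%)."""
    k = len(effects)
    if k < 2 or k != len(ses):
        raise ValueError("need at least two studies with matching standard errors")
    w = [1.0 / s ** 2 for s in ses]  # inverse-variance (fixed-effect) weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at zero
    w_re = [1.0 / (s ** 2 + tau2) for s in ses]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se_pooled = math.sqrt(1.0 / sum(w_re))
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, se_pooled, tau2, i2


def heterogeneity_label(i2: float) -> str:
    """Classify I^2 using the cut-offs stated in the text."""
    if i2 < 30:
        return "low"
    if i2 <= 60:
        return "moderate"
    return "high"
```

When studies disagree strongly, tau² grows and the random-effects weights become more equal across studies, which is why a high I² (as in several of the analyses reported in the Results) widens the pooled confidence interval.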

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Majumder, S., Mondal, T. & Deen, M. J. Wearable sensors for remote health monitoring. Sensors (Basel, Switz.) 17, 130 (2017).

Prince, M. J. et al. The burden of disease in older people and implications for health policy and practice. Lancet 385, 549–562 (2015).

Sood, S. et al. What is telemedicine? A collection of 104 peer-reviewed perspectives and theoretical underpinnings. Telemed. e-Health 13, 573–590 (2007).

Vegesna, A., Tran, M., Angelaccio, M. & Arcona, S. Remote patient monitoring via non-invasive digital technologies: a systematic review. Telemed. J. E Health 23, 3–17 (2017).

Martirosyan, M., Caliskan, K., Theuns, D. A. M. J. & Szili-Torok, T. Remote monitoring of heart failure: benefits for therapeutic decision making. Expert Rev. Cardiovasc. Ther. 15, 503–515 (2017).

Crossley, G. H., Boyle, A., Vitense, H., Chang, Y. & Mead, R. H. The CONNECT (Clinical Evaluation of Remote Notification to Reduce Time to Clinical Decision) trial: the value of wireless remote monitoring with automatic clinician alerts. J. Am. Coll. Cardiol. 57, 1181–1189 (2011).

Slotwiner, D. et al. HRS Expert Consensus Statement on remote interrogation and monitoring for cardiovascular implantable electronic devices. Heart Rhythm 12, e69–e100 (2015).

Varma, N., Michalski, J., Stambler, B. & Pavri, B. B. Superiority of automatic remote monitoring compared with in-person evaluation for scheduled ICD follow-up in the TRUST trial-testing execution of the recommendations. Eur. Heart J. 35, 1345–1352 (2014).

DeVita, M. A. et al. “Identifying the hospitalised patient in crisis”–a consensus conference on the afferent limb of rapid response systems. Resuscitation 81, 375–382 (2010).

Smith, G. B. In-hospital cardiac arrest: is it time for an in-hospital ‘chain of prevention’? Resuscitation 81, 1209–1211 (2010).

Agoulmine, N., Deen, M. J., Lee, J.-S. & Meyyappan, M. U-health smart home. IEEE Nanotechnol. Mag. 5, 6–11 (2011).

Kitsiou, S., Paré, G. & Jaana, M. Effects of home telemonitoring interventions on patients with chronic heart failure: an overview of systematic reviews. J. Med. Internet Res. 17, e63 (2015).

Noah, B. et al. Impact of remote patient monitoring on clinical outcomes: an updated meta-analysis of randomized controlled trials. NPJ Digital Med. 1, 20172 (2018).

Chen, Y. H. et al. Clinical outcome and cost-effectiveness of a synchronous telehealth service for seniors and nonseniors with cardiovascular diseases: quasi-experimental study. J. Med. Internet Res. 15, e87 (2013).

Heidbuchel, H. et al. EuroEco (European Health Economic Trial on Home Monitoring in ICD Patients): a provider perspective in five European countries on costs and net financial impact of follow-up with or without remote monitoring. Eur. Heart J. 36, 158–169 (2015).

Martin-Lesende, I. et al. Telemonitoring in-home complex chronic patients from primary care in routine clinical practice: Impact on healthcare resources use. Eur. J. Gen. Pract. 23, 135–142 (2017).

Ringbaek, T. et al. Effect of tele health care on exacerbations and hospital admissions in patients with chronic obstructive pulmonary disease: a randomized clinical trial. Int. J. Chron. Obstruct. Pulmon. Dis. 10, 1801–1808 (2015).

Seto, E. et al. Mobile phone-based telemonitoring for heart failure management: A randomized controlled trial. J. Med. Internet Res. 14, 190–203 (2012).

Yount, S. E. et al. A randomized trial of weekly symptom telemonitoring in advanced lung cancer. J. Pain Symptom Manag. 47, 973–989 (2014).

Bohm, M. et al. Fluid status telemedicine alerts for heart failure: a randomized controlled trial. Eur. Heart J. 37, 3154–3163 (2016).

Kotooka, N. et al. The first multicenter, randomized, controlled trial of home telemonitoring for Japanese patients with heart failure: home telemonitoring study for patients with heart failure (HOMES-HF). Heart Vessels 33, 866–876 (2018).

Luthje, L. et al. A randomized study of remote monitoring and fluid monitoring for the management of patients with implanted cardiac arrhythmia devices. Europace 17, 1276–1281 (2015).

Pedone, C., Rossi, F. F., Cecere, A., Costanzo, L. & Antonelli Incalzi, R. Efficacy of a physician-led multiparametric telemonitoring system in very old adults with heart failure. J. Am. Geriatrics Soc. 63, 1175–1180 (2015).

Scherr, D. et al. Effect of home-based telemonitoring using mobile phone technology on the outcome of heart failure patients after an episode of acute decompensation: randomized controlled trial. J. Med. Internet Res. 11, e34 (2009).

Smeets, C. J. et al. Bioimpedance alerts from cardiovascular implantable electronic devices: observational study of diagnostic relevance and clinical outcomes. J. Med. Internet Res. 19, e393 (2017).

Baker, L. C. et al. Effects of care management and telehealth: A longitudinal analysis using medicare data. J. Am. Geriatrics Soc. 61, 1560–1567 (2013).

Steventon, A., Ariti, C., Fisher, E. & Bardsley, M. Effect of telehealth on hospital utilisation and mortality in routine clinical practice: a matched control cohort study in an early adopter site. BMJ Open 6, 5–7 (2016).

Lewis, K. E. et al. Does home telemonitoring after pulmonary rehabilitation reduce healthcare use in optimized COPD? A pilot randomized trial. COPD: J. Chronic Obstr. Pulm. Dis. 7, 44–50 (2010).

McElroy, I. et al. Use of digital health kits to reduce readmission after cardiac surgery. J. Surg. Res. 204, 1–7 (2016).

Pinnock, H. et al. Effectiveness of telemonitoring integrated into existing clinical services on hospital admission for exacerbation of chronic obstructive pulmonary disease: researcher blind, multicentre, randomised controlled trial. BMJ (Online) 347, 10–16 (2013).

Vianello, A. et al. Home telemonitoring for patients with acute exacerbation of chronic obstructive pulmonary disease: a randomized controlled trial. BMC Pulm. Med. 16, 9–15 (2016).

Steventon, A. et al. Effect of telehealth on use of secondary care and mortality: findings from the Whole System Demonstrator cluster randomised trial. BMJ (Online) 344 (2012).

Del Hoyo, J. et al. A web-based telemanagement system for improving disease activity and quality of life in patients with complex inflammatory bowel disease: pilot randomized controlled trial. J. Med. Internet Res. 20, e11602 (2018).

Mousa, A. Y. et al. Results of telehealth electronic monitoring for post discharge complications and surgical site infections following arterial revascularization with groin incision. Ann. Vasc. Surg. 57, 160–169 (2019).

Basch, E. et al. Symptom monitoring with patient-reported outcomes during routine cancer treatment: a randomized controlled trial. J. Clin. Oncol. 34, 557–565 (2016).

Bekelman, D. B. et al. Primary results of the patient-centered disease management (PCDM) for heart failure study: a randomized clinical trial. JAMA Intern. Med. 175, 725–732 (2015).

Calvo, G. S. et al. A home telehealth program for patients with severe COPD: The PROMETE study. Respiratory Med. 108, 453–462 (2014).

Lee, T. C. et al. Telemedicine based remote home monitoring after liver transplantation: results of a randomized prospective trial. Ann. Surg. 270, 564–572 (2019).

Sink, E. et al. Effectiveness of a novel, automated telephone intervention on time to hospitalisation in patients with COPD: A randomised controlled trial. J. Telemed. Telecare 26, 132–139 (2018).

Ida, S., Kaneko, R., Imataka, K. & Murata, K. Relationship between frailty and mortality, hospitalization, and cardiovascular diseases in diabetes: a systematic review and meta-analysis. Cardiovasc. Diabetol. 18, 81 (2019).

Dantas, I., Santana, R., Sarmento, J. & Aguiar, P. The impact of multiple chronic diseases on hospitalizations for ambulatory care sensitive conditions. BMC Health Serv. Res. 16, 348 (2016).

Pope, I., Burn, H., Ismail, S. A., Harris, T. & McCoy, D. A qualitative study exploring the factors influencing admission to hospital from the emergency department. BMJ Open 7, e011543 (2017).

Joshi, M. et al. Digital alerting and outcomes in patients with sepsis: systematic review and meta-analysis. J. Med. Internet Res. 21, e15166 (2019).

McCambridge, J., Witton, J. & Elbourne, D. R. Systematic review of the Hawthorne effect: new concepts are needed to study research participation effects. J. Clin. Epidemiol. 67, 267–277 (2014).

Godleski, L., Cervone, D., Vogel, D. & Rooney, M. Home telemental health implementation and outcomes using electronic messaging. J. Telemed. Telecare 18, 17–19 (2012).

Licskai, C., Sands, T. W. & Ferrone, M. Development and pilot testing of a mobile health solution for asthma self-management: asthma action plan smartphone application pilot study. Can. Respir. J. 20, 301–306 (2013).

Oeff, M., Kotsch, P., Gosswald, A. & Wolf, U. [Monitoring multiple cardiovascular parameters using telemedicine in patients with chronic heart failure]. Herzschrittmacherther. Elektrophysiol. 16, 150–158 (2005).

Pinto, A. et al. Home telemonitoring of non-invasive ventilation decreases healthcare utilisation in a prospective controlled trial of patients with amyotrophic lateral sclerosis. J. Neurol. Neurosurg. Psychiatry 81, 1238–1242 (2010).

Santini, M. et al. Remote monitoring of patients with biventricular defibrillators through the CareLink system improves clinical management of arrhythmias and heart failure episodes. J. Interv. Card. Electrophysiol. 24, 53–61 (2009).

Moher, D., Liberati, A., Tetzlaff, J. & Altman, D. G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Int. J. Surg. 8, 336–341 (2010).

Veritas Health Innovation. Covidence Systematic Review Software (2017).

Jadad, A. R. et al. Assessing the quality of reports of randomized clinical trials: is blinding necessary? Control. Clin. Trials 17, 1–12 (1996).

Sterne, J. A. et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ 366, l4898 (2019).

Stang, A. Critical evaluation of the Newcastle-Ottawa scale for the assessment of the quality of nonrandomized studies in meta-analyses. Eur. J. Epidemiol. 25, 603–605 (2010).

Biddiss, E., Brownsell, S. & Hawley, M. S. Predicting need for intervention in individuals with congestive heart failure using a home-based telecare system. J. Telemed. Telecare 15, 226–231 (2009).

Denis, F. et al. Prospective study of a web-mediated management of febrile neutropenia related to chemotherapy (Bioconnect). Support Care Cancer 27, 2189–2194 (2019).

Acknowledgements

Infrastructure support for this research was provided by the NIHR Imperial Biomedical Research Centre (BRC) and the NIHR Imperial Patient Safety Translational Research Centre (PSTRC).

Author information

Contributions

F.M.I. drafted the manuscript. F.M.I. and K.L. independently screened and reviewed all included articles and graded the quality of the included studies. H.A. performed the meta-analysis. K.L., H.A., M.J., S.K. and A.D. made significant amendments to the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Iqbal, F.M., Lam, K., Joshi, M. et al. Clinical outcomes of digital sensor alerting systems in remote monitoring: a systematic review and meta-analysis. npj Digit. Med. 4, 7 (2021). https://doi.org/10.1038/s41746-020-00378-0

DOI: https://doi.org/10.1038/s41746-020-00378-0
