Abstract

Photoacoustic Microscopy (PAM) integrates optical and acoustic imaging, offering enhanced penetration depth for detecting optically absorbing components in tissues. Nonetheless, scanning large areas with high spatial resolution remains challenging. Because scanning speed is limited by the laser pulse repetition rate, computational methods have a potential role in accelerating PAM imaging. We propose a novel and highly adaptable algorithm named DiffPam that utilizes diffusion models to speed up the photoacoustic imaging process. We leveraged a diffusion model trained exclusively on natural images and compared its performance with an in-domain trained U-Net model on a dataset of PAM images of mouse brain microvasculature. Our findings indicate that DiffPam performs similarly to a dedicated U-Net model without needing a large dataset. We demonstrate that scanning can be accelerated fivefold with limited information loss. We achieved a \(24.70\%\) increase in peak signal-to-noise ratio and a \(27.54\%\) increase in structural similarity index compared to the baseline bilinear interpolation method. The study also demonstrates that shortened diffusion processes reduce computing time without compromising accuracy. DiffPam stands out from existing methods as it requires neither supervised training nor the detailed parameter optimization typically needed by other unsupervised methods. This study underscores the significance of DiffPam as a practical algorithm for reconstructing undersampled PAM images, particularly for researchers with limited artificial intelligence expertise and computational resources.


Introduction

Photoacoustic microscopy (PAM) is a non-invasive medical imaging modality that combines optical and ultrasound technology1. The principle is based on detecting the ultrasonic waves generated when tissue absorbs pulsed laser light and undergoes rapid thermoelastic expansion. Its penetration depth (\(\sim 1 \,\,\textrm{mm}\)2) is much greater than that of purely optical imaging modalities (\(\sim 10\,\,\upmu\)m3). At the same time, it can detect the optically absorbing components of tissue, such as hemoglobin, lipids, water, and other light-absorbing chromophores4.

Similar to other imaging techniques, PAM involves a trade-off between scanned area, scanning time, and spatial resolution. Scanning large areas with high resolution increases the total acquisition time. Advanced scanning techniques have been developed for high-speed PAM; however, the main factor that restricts scanning speed is the laser pulse repetition rate5. Unless advanced lasers are used, the best option for speeding up PAM imaging is to scan fewer points and reconstruct the full image via computational methods.

Over the past few years, deep learning (DL) has emerged as the state-of-the-art computational approach for various tasks in medical image processing, including reconstruction, enhancement, denoising, and super-resolution6,7,8. Recent studies have highlighted the potential of DL methods to address challenges in PAM imaging.

Reconstructing a degraded PAM image can be considered an inverse problem in image processing, and such problems are naturally ill-posed. Degradation may arise from, but is not limited to, out-of-focus lasers, noisy measurements, undersampling, and low spatial resolution. To accelerate scanning, one can reduce the spatial resolution or undersample the scanned area. Super-resolution techniques aim to enhance image resolution, while image inpainting restores missing pixels; both can be used to reconstruct degraded PAM images. The literature offers various approaches to super-resolution and inpainting, the main ones being interpolation and DL-based methods. In recent years, DL-based approaches have proven superior to interpolation methods. Early DL studies on super-resolution and inpainting employed PSNR-oriented methods, i.e., networks trained with pixel-wise losses that maximize the peak signal-to-noise ratio (PSNR).

Zhao et al.9 used a multi-task residual dense network to speed up optical-resolution PAM with low pulse laser energy. They addressed image denoising, super-resolution, and vascular enhancement simultaneously through multi-supervised learning. Sharma et al.10 employed a supervised fully dense U-Net to enhance out-of-focus acoustic-resolution PAM images. DiSpirito III et al.11 approached the reconstruction of undersampled PAM images as an inpainting problem. They demonstrated that a supervised fully dense U-Net can reconstruct artificially downsampled PAM images. All of these techniques require a large set of PAM images to train the neural network. In contrast, Vu et al.12 used the deep image prior (DIP)13 technique, which uses an untrained neural network as an image prior, to reconstruct undersampled PAM images in a setting similar to that of DiSpirito et al.11. To our knowledge, this is the only PAM study that does not require supervised training.

Today, many super-resolution and inpainting techniques take advantage of deep generative models. The state-of-the-art generative models that are used for super-resolution and inpainting are encoder-decoder networks, generative adversarial networks (GANs)14, flow-based models15, and denoising diffusion probabilistic models (DDPMs).

Deep generative models can perform both conditional and unconditional image generation. Unconditional generative models learn the distribution of natural images and generate images from noise, whereas conditional generation requires a condition in the form of text, class labels, or images. For super-resolution or inpainting, the degraded image can serve as the condition. Conditional generation can be achieved either by using the condition directly during training or by leveraging pre-trained unconditional generative models. Both approaches have demonstrated remarkable performance on inverse problems in image processing16. The former requires a large corpus of high-quality images of interest, a task-specific model design, and a dedicated training process. The latter approach is more robust and flexible.

Recently, a few studies have employed the latter approach for photoacoustic tomography (PAT) images. Tong et al.17 used a score-based generative model with a rotational consistency constraint, controlling the generation process with annealed Langevin dynamics. Dey et al.18 and Song et al.19 employed score-based diffusion models to reconstruct images from limited PAT measurements. These studies demonstrated that diffusion-based methods achieve higher-quality reconstructions than conventional reconstruction methods and U-Net.

Since we are dealing with ill-posed problems, leveraging prior information can be advantageous. Provost et al.20 demonstrated that photoacoustic images are sparse under the wavelet transform, so regularizing the wavelet representation may enhance the quality of reconstructed images. In addition, total variation (TV) regularization21 is a widely used technique in modern computer vision problems22.

Building upon these insights, we propose a novel method that utilizes a pre-trained diffusion model to reconstruct the degraded PAM image with external conditioning and regularization. This approach leverages the effectiveness of neural networks pre-trained on natural image data while eliminating the need for an extensive corpus of PAM images and mitigating the cumbersome training processes. Notably, our methodology demonstrates a degree of adaptability, offering the freedom to select the specific degradation operation, whether super-resolution or inpainting. Furthermore, our proposed framework can integrate and benefit from the existing enhancement techniques.

Method

In this section, the development of the DiffPam algorithm is outlined, detailing the transition from theory to practice. An overview of DDPM and its application in image generation is provided. The conditional generation using an unconditional diffusion model is then explained, with regularization techniques incorporated to enhance image reconstruction. The Come-Closer-Diffuse-Faster technique for accelerating the diffusion process is described. Additionally, the datasets used for training and testing are covered, along with the neural network employed as an approximate solution. Finally, the steps of the DiffPam reconstruction algorithm are presented, and the evaluation metrics for assessing image quality are detailed. This methodology aims to accelerate photoacoustic microscopy imaging by reconstructing undersampled images using diffusion models.

Denoising diffusion probabilistic models

DDPMs were first introduced by Sohl-Dickstein et al.23 and later popularized by Ho et al.24 as parametrized Markov chains trained with variational inference. A Markov process is a stochastic process in which the future state depends only on the present state. Dhariwal and Nichol25 showed that DDPMs can achieve superior image quality and outperform GANs.

The diffusion process in DDPMs is inspired by diffusion in non-equilibrium thermodynamics. A DDPM has a forward and a backward diffusion process. The forward process gradually adds Gaussian noise to an image, and the backward process learns to recover the original image from the noisy one (Fig. 1).
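As a minimal sketch of the forward process, the snippet below samples a noisy image directly from the closed form \(q(x_t \mid x_0) = \mathcal{N}(\sqrt{\bar\alpha_t}\,x_0, (1-\bar\alpha_t)I)\), where \(\bar\alpha_t\) is the cumulative product of \(1-\beta_s\). It assumes a linear noise schedule from \(10^{-4}\) to 0.02 over \(T=1000\) steps, which may differ from the schedule of the checkpoint actually used, and a random array stands in for a normalized PAM image.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)     # assumed linear noise schedule beta_1..beta_T
alpha_bar = np.cumprod(1.0 - betas)    # alpha_bar[t - 1] = prod_{s<=t} (1 - beta_s)

def q_sample(x0, t, rng):
    """Draw x_t ~ q(x_t | x_0) for a 1-based timestep t in [1, T]."""
    ab = alpha_bar[t - 1]
    noise = rng.standard_normal(x0.shape)
    return np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * noise

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(256, 256))   # stand-in for a normalized image in [-1, 1]
x_mid = q_sample(x0, 500, rng)                  # partially noised image
x_T = q_sample(x0, T, rng)                      # almost pure Gaussian noise
print(f"signal weight at t=500: {np.sqrt(alpha_bar[499]):.3f}, at t=T: {np.sqrt(alpha_bar[-1]):.4f}")
```

The backward process undoes this chain one step at a time with a learned noise predictor, which is why generation from \(t=T\) costs T network evaluations.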

For the image generation, the backward process is started from pure Gaussian noise at \(t=T\) and repeated until we obtain \(X_{0}\) at \(t=0\). In the following sections, we use y to represent the measurement, \(X_{ref}\) for the reference (ground truth) image, and \(X_{0}\) for the generated output. This notation aligns with the conventions used in diffusion and score-based model research to ensure consistency and clarity.

Conditional generation from unconditional diffusion model

The sequential nature of diffusion models allows researchers to manipulate the generation process. Several studies have exploited this property to generate conditional images with an unconditional model. In its simplest form, a measured image is modeled as the actual image transformed by a degradation operation and corrupted by noise:

$$\begin{aligned} y = Ax + n \end{aligned}$$

The degradation operator (A) models the relation between the measured value (y) and the real value (x), and (n) represents the measurement noise. The degradation depends on the inverse problem: for super-resolution, the operation is downsampling with Gaussian blurring; for inpainting, it is multiplication by a mask operator.
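To make the measurement model concrete, the sketch below implements two illustrative degradation operators and a transpose for one of them. It substitutes 4×4 block averaging for the Gaussian blurring and downsampling used in the study (an assumption made for simplicity) and checks numerically that the hand-written transpose satisfies \(\langle Ax, v\rangle = \langle x, A^{T}v\rangle\), the property that projection-based conditioning relies on. All function names are hypothetical.

```python
import numpy as np

def downsample(x, s=4):
    """A for super-resolution: s x s block averaging (stand-in for blur + decimation).

    Assumes both image dimensions are divisible by s.
    """
    h, w = x.shape
    return x.reshape(h // s, s, w // s, s).mean(axis=(1, 3))

def downsample_adjoint(v, s=4):
    """A^T: spread each low-resolution value over its s x s block, scaled by 1/s^2."""
    return np.kron(v, np.ones((s, s))) / (s * s)

def mask_op(x, mask):
    """A for inpainting: element-wise multiplication by a binary mask (here A^T = A)."""
    return x * mask

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(256, 256))   # stand-in for X_ref
n = 0.01 * rng.standard_normal((64, 64))      # measurement noise
y = downsample(x) + n                         # y = A x + n, shape (64, 64)

# Adjoint identity <A x, v> = <x, A^T v> for an arbitrary v in measurement space.
v = rng.standard_normal((64, 64))
print(np.isclose(np.sum(downsample(x) * v), np.sum(x * downsample_adjoint(v))))
```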

Choi et al.26 defined a process called Iterative Latent Variable Refinement (ILVR). The proposed algorithm generates \(x'_{t-1}\) from \(x_{t}\) by unconditional generation and then updates the prediction \(x'_{t-1}\) using the condition:

where \(A^{T}\) is the transpose (adjoint) of the degradation operator, which maps a measurement back to image space, e.g., upsampling for super-resolution. This process can be viewed as a projection onto the desired image manifold. The measurement is considered noiseless (\(n=0\)) in this setting.
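For inpainting, where \(A = A^{T} = \mathrm{diag}(\text{mask})\), a projection-style update of the assumed form \(x_{t-1} = x'_{t-1} + A^{T}(y_{t-1} - Ax'_{t-1})\) simply overwrites the measured pixels with a noised copy of the measurement. The sketch below assumes this form, with \(y_{t-1}\) obtained by forward-diffusing y to the noise level of step \(t-1\); it illustrates the projection idea and is not taken from the ILVR code.

```python
import numpy as np

def projection_step(x_prev, y, mask, alpha_bar_prev, rng):
    """Projection-style conditioning for inpainting (illustrative sketch).

    x_prev: unconditional prediction x'_{t-1}
    y: measurement in image space (zero where nothing was sampled)
    mask: 1 where a pixel was measured, 0 elsewhere, so A = A^T = diag(mask)
    alpha_bar_prev: cumulative noise-schedule product at step t-1
    """
    # Bring the noiseless measurement to the same noise level as x'_{t-1}.
    noise = rng.standard_normal(y.shape)
    y_prev = np.sqrt(alpha_bar_prev) * y + np.sqrt(1.0 - alpha_bar_prev) * noise
    # x_{t-1} = x'_{t-1} + A^T (y_{t-1} - A x'_{t-1}); with a binary mask this keeps
    # x'_{t-1} on unmeasured pixels and replaces measured pixels by y_{t-1}.
    return x_prev + mask * (y_prev - mask * x_prev)

rng = np.random.default_rng(0)
mask = np.zeros((256, 256))
mask[::5, :] = 1.0                            # uniform mask: one row in five
x_true = rng.uniform(-1.0, 1.0, size=mask.shape)
y = mask * x_true                             # noiseless measurement (n = 0)
x_prev = rng.standard_normal(mask.shape)      # stand-in for the model's prediction
x_new = projection_step(x_prev, y, mask, alpha_bar_prev=1.0, rng=rng)
```

Because measured pixels are copied into the sample at every step, any noise in y would be copied as well, which is the weakness that gradient-based correction is designed to avoid.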

Chung et al. (a)27 proposed an improvement to projection-based approaches named Manifold Constrained Gradient (MCG) correction. They devised a series of constraints to keep the measurement-guided gradient updates on the data manifold.

Projection-based approaches may perform well in some cases, but they have downsides. Because the predicted image is projected onto the measurement, noise in the measurement is amplified at each iteration. Chung et al. (b)28 developed an alternative approach, Diffusion Posterior Sampling (DPS), that applies a gradient-based correction to the predicted image. In this way, measurement noise is handled within the gradient step instead of being copied into the sample, as in projection-based methods.

\(\eta\) is the DPS scaling factor, which determines the strength of the measurement's influence on the final image. Chung et al. (b)28 found experimentally that \(\eta\) should be between 0.1 and 1. Smaller \(\eta\) values lead to hallucinations in the generated images, while larger values may produce artifacts.
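The DPS correction can be sketched as \(x_{t-1} = x'_{t-1} - \eta\,\nabla_{x_t}\lVert y - A\hat{x}_0(x_t)\rVert_2\), where \(\hat{x}_0\) is the denoised estimate computed from \(x_t\). To keep the example self-contained, the sketch assumes a toy denoiser whose noise prediction is identically zero, so \(\hat{x}_0 = x_t/\sqrt{\bar\alpha_t}\) and the gradient has a closed form; with a real network this gradient would instead be obtained by automatic differentiation through the model. The inpainting mask and all names are illustrative assumptions.

```python
import numpy as np

def dps_step(x_t, x_prev, y, mask, alpha_bar_t, eta):
    """One DPS correction for inpainting under a toy-denoiser assumption.

    x_t: current sample; x_prev: unconditional proposal x'_{t-1}
    y: measurement (zero outside the mask); mask: binary sampling mask
    alpha_bar_t: cumulative schedule product at step t; eta: DPS step size
    """
    x0_hat = x_t / np.sqrt(alpha_bar_t)       # Tweedie estimate when eps_theta == 0
    residual = y - mask * x0_hat              # y - A x0_hat
    norm = np.linalg.norm(residual)
    if norm == 0.0:
        return x_prev                         # already consistent with the measurement
    # d||y - A x0_hat||_2 / d x_t = -A^T residual / (||residual|| * sqrt(alpha_bar_t))
    grad = -(mask * residual) / (norm * np.sqrt(alpha_bar_t))
    return x_prev - eta * grad

rng = np.random.default_rng(0)
mask = (rng.random((256, 256)) < 0.2).astype(float)   # random 20% sampling mask
y = mask * rng.uniform(-1.0, 1.0, size=mask.shape)
x_t = rng.standard_normal(mask.shape)
alpha_bar_t = 0.5
x_new = dps_step(x_t, x_t.copy(), y, mask, alpha_bar_t, eta=0.5)
```

Unlike the projection step, the measurement only nudges the sample along a gradient whose size is set by \(\eta\), so noisy measured pixels are never copied in verbatim.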

The formulation presented in Eq. (4) illustrates our contribution to DPS conditioning, namely regularization with the \(l_{1}\) norm of the wavelet coefficients. In Eq. (4), \(\mathcal {F}\) denotes wavelet decomposition, and \(\lambda _{W}\) is the regularization coefficient for the wavelet term. We set \(\lambda _{W}=10\) experimentally: below this value we do not observe any regularization effect, while above it the regularization becomes excessive and produces a smoothing effect that degrades the outcome. We selected the biorthogonal B-spline wavelet with 3 and 5 vanishing moments (bior3.5) to obtain sparse representations. We perform a level-3 discrete wavelet transform using the \(pytorch\_wavelets\) package29 in Python.
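The wavelet penalty can be illustrated with a dependency-free sketch. It swaps in a three-level orthonormal Haar transform for the bior3.5 transform computed with pytorch_wavelets in the study, and it assumes that only the detail (high-pass) coefficients are penalized, since whether the approximation band is included is not stated above. The helper names are hypothetical; \(\lambda_W = 10\) is the value reported above but was tuned for bior3.5 coefficients and need not transfer to Haar.

```python
import numpy as np

def haar_dwt2(x):
    """One level of the orthonormal 2D Haar transform: (approximation, (d1, d2, d3))."""
    s = np.sqrt(2.0)
    lo = (x[0::2, :] + x[1::2, :]) / s        # low-pass along rows
    hi = (x[0::2, :] - x[1::2, :]) / s        # high-pass along rows
    ll = (lo[:, 0::2] + lo[:, 1::2]) / s      # approximation band
    d1 = (lo[:, 0::2] - lo[:, 1::2]) / s      # detail bands
    d2 = (hi[:, 0::2] + hi[:, 1::2]) / s
    d3 = (hi[:, 0::2] - hi[:, 1::2]) / s
    return ll, (d1, d2, d3)

def wavelet_l1(x, levels=3):
    """l1 norm of the detail coefficients of a `levels`-level Haar decomposition."""
    total, approx = 0.0, np.asarray(x, dtype=float)
    for _ in range(levels):
        approx, details = haar_dwt2(approx)
        total += sum(np.abs(d).sum() for d in details)
    return float(total)

def wavelet_regularized_loss(x0_hat, y, mask, lam_w=10.0):
    """Hypothetical helper: data-fidelity norm plus lam_w times the wavelet l1 penalty."""
    return float(np.linalg.norm(y - mask * x0_hat)) + lam_w * wavelet_l1(x0_hat)

rng = np.random.default_rng(0)
smooth = np.full((256, 256), 0.3)
noisy = smooth + 0.1 * rng.standard_normal((256, 256))
print(wavelet_l1(smooth), wavelet_l1(noisy))   # a flat image has no detail energy
```

In DPS, the gradient of such a loss with respect to \(x_t\) would replace the plain data-fidelity gradient, pushing the estimate toward sparse wavelet coefficients.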

In a similar vein, we have utilized DPS conditioning with TV regularization in Eq. (5), where V denotes the TV of the predicted image \(x'_{0}\), and \(\lambda _{TV}\) serves as the regularization coefficient for TV. To calculate the TV-norm, we utilize the \(total\_variation\) function from the torchmetrics library30. The value of \(\lambda _{TV}\) is experimentally set to \(10^{-4}\).
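For reference, an anisotropic TV penalty can be written as the sum of absolute differences between vertically and horizontally adjacent pixels. The NumPy sketch below follows that definition; to our understanding it matches what torchmetrics' total_variation returns with its default sum reduction for a single-channel image, but that correspondence is an assumption worth verifying against the installed library version. The combined loss is a hypothetical helper showing an assumed data-plus-TV form.

```python
import numpy as np

def total_variation(img):
    """Anisotropic TV: sum of |differences| between vertically and horizontally adjacent pixels."""
    dv = np.abs(np.diff(img, axis=0)).sum()   # vertical neighbours
    dh = np.abs(np.diff(img, axis=1)).sum()   # horizontal neighbours
    return float(dv + dh)

def tv_regularized_loss(x0_hat, y, mask, lam_tv=1e-4):
    """Hypothetical helper: data-fidelity norm plus lam_tv times the TV of x0_hat."""
    return float(np.linalg.norm(y - mask * x0_hat)) + lam_tv * total_variation(x0_hat)

checker = np.array([[0.0, 1.0],
                    [1.0, 0.0]])
print(total_variation(checker))   # 2 vertical + 2 horizontal unit jumps
```

Because TV sums raw pixel differences over the whole image, its magnitude is large compared with the data term, which is consistent with the small coefficient \(\lambda_{TV}=10^{-4}\).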

Come-closer-diffuse-faster

Although pre-trained diffusion models are robust and domain-adaptable, they are computationally expensive; producing even a single image is time-consuming. Chung et al. (c)31 argue that, for inverse problems, starting the generation from pure Gaussian noise is redundant. Given an initial estimate of the image \(x_{0}\), the backward diffusion process can begin at a timestep \(t \ll T\). They refer to this process as Come-Closer-Diffuse-Faster (CCDF). The initial estimate can be as simple as an interpolation or as detailed as the output of a fully trained neural network. The better the initial estimate, the shorter the diffusion process can be31. This method allows us to use existing deep learning models and improve upon their outputs.
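The sketch below illustrates the CCDF starting point under the same assumed linear schedule as a standard DDPM: an initial estimate is forward-diffused to step N, after which the backward process needs only N of the T steps. The constant initial estimate is a placeholder for an interpolated image or a U-Net output.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)      # assumed linear schedule
alpha_bar = np.cumprod(1.0 - betas)

def ccdf_start(x_init, N, rng):
    """Forward-diffuse an initial estimate to timestep N (1-based) to start reverse sampling there."""
    ab = alpha_bar[N - 1]
    return np.sqrt(ab) * x_init + np.sqrt(1.0 - ab) * rng.standard_normal(x_init.shape)

rng = np.random.default_rng(0)
x_init = np.ones((256, 256))            # placeholder for an interpolation or U-Net estimate
for N in (T, 500, 200):
    x_N = ccdf_start(x_init, N, rng)
    print(f"N={N}: keeps {np.sqrt(alpha_bar[N - 1]):.3f} of the estimate, "
          f"runs {N} reverse steps ({T / N:.1f}x speed-up)")
```

Smaller N preserves more of the initial estimate, so N should shrink only as far as the estimate's quality allows.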

Dataset

For all experiments, we employed OpenAI’s unconditional DDPM model32 trained on the ImageNet 256\(\times\)256 dataset33. Since we use a pre-trained diffusion model, training and validating diffusion models is beyond the scope of this study.

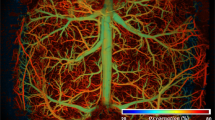

To test the proposed method, the Duke PAM34 dataset is used. Duke PAM is an open-source database acquired with an optical-resolution PAM (OR-PAM) system at a wavelength of 532 nm in the Photoacoustic Imaging Lab at Duke University. This PAM system has a lateral resolution of 5 \(\upmu\)m and an axial resolution of 15 \(\upmu\)m11. The dataset consists primarily of in vivo images of mouse brain microvasculature, from which 20 images are randomly selected as the test set.

The selected diffusion model is trained on 256\(\times\)256 images, so the input images are center-cropped to 256\(\times\)256. The images are then normalized by mapping their intensity range to \([-1,1]\) before being passed to the diffusion model. These normalized images serve as the ground truth images (\(X_{ref}\)).

At this step, the study branches into three paths for generating the input images (y):

1. For super-resolution, a 4\(\times\) downsampling operation with anti-aliasing low-pass filtering is performed using the Resizer Python package developed by Shocher et al.35. The resulting image therefore has 6.25% of the pixels of the original image.

2. For inpainting with uniform undersampling, four out of every five rows are set to zero, leaving 20% of the original pixels.

3. For inpainting with random undersampling, a randomly selected 80% of the pixels are set to zero, leaving 20% of the original pixels.
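The three sampling patterns can be sketched as masks to check their pixel budgets. The row-based uniform mask, the random mask drawn without replacement, and the fixed random seed are illustrative choices. Because 256 is not divisible by 5, keeping rows 0, 5, ..., 255 retains 52 rows (about 20.3%) rather than exactly 20%.

```python
import numpy as np

H = W = 256
rng = np.random.default_rng(0)

# Path 2, uniform undersampling: keep one row out of every five (rows 0, 5, 10, ...).
uniform_mask = np.zeros((H, W))
uniform_mask[::5, :] = 1.0
uniform_mask_y = uniform_mask.T          # the same pattern along the other axis

# Path 3, random undersampling: keep a random 20% of pixels, no fixed pattern.
n_keep = int(round(0.2 * H * W))
random_mask = np.zeros(H * W)
random_mask[rng.choice(H * W, size=n_keep, replace=False)] = 1.0
random_mask = random_mask.reshape(H, W)

# Path 1, super-resolution: a 4x downsampled image keeps (1/4)^2 of the pixels.
sr_fraction = (H // 4) * (W // 4) / (H * W)

print(uniform_mask.mean(), random_mask.mean(), sr_fraction)
```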

In PAM imaging, different step sizes along the fast and slow scanning axes can result in different resolutions along the x and y axes. Random undersampling maintains the same sparsity level without a fixed pattern, thus reducing potential biases arising from the original scanning step sizes. Differences in reconstruction quality between uniform and random undersampling may therefore be attributed to biases inherent in the original images. For a fair comparison with random undersampling, uniform undersampling was performed along the y axis as well as along the x axis.

Each setup corresponds to distinct real-world scenarios, as elaborated in subsequent sections. Researchers are encouraged to adopt the pathway that best aligns with their objectives and experimental setups.

Neural network as an approximate solution

Previous works mainly focused on reconstructing uniformly undersampled PAM images11,12. They approached it as an inpainting problem and used U-Net-shaped neural networks to solve it. In this study, we demonstrate that existing solutions can be improved using our algorithm. To this end, we trained a U-Net model with residual connections, which serves both as a baseline for comparison and as an approximate solution within our proposed algorithmic framework.

We used the 337 samples in the Duke PAM training set for model training. Each image is randomly cropped at 10 different locations during data loading. To regularize training, data augmentation, namely random crops, horizontal and vertical flips with 30% probability, random rotations of up to 20\(^\circ\), and random Gaussian blur, is applied to each image in the training set. The images are then normalized to the range \([-1,1]\) and used as the ground truth. Input images are constructed by applying uniform undersampling to the ground truth image, which sets four out of every five rows to zero, leaving 20% of the original pixels. The empty rows are then filled by bilinear interpolation, and the result is used as input to the U-Net model. Figure 2 illustrates an example image with uniform masking and the resulting bilinear interpolation.
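Because the uniform mask keeps complete rows, filling the missing rows by bilinear interpolation reduces to linear interpolation along each column. A minimal NumPy sketch based on that observation follows (the helper name is hypothetical). On a vertical intensity ramp it recovers the image exactly, whereas real vessels crossing the skipped rows are only approximated.

```python
import numpy as np

def fill_rows_linear(img, kept_rows):
    """Fill skipped rows by linear interpolation along each column.

    np.interp holds values constant beyond the first/last kept row.
    """
    rows = np.arange(img.shape[0])
    out = np.empty(img.shape, dtype=float)
    for j in range(img.shape[1]):
        out[:, j] = np.interp(rows, kept_rows, img[kept_rows, j])
    return out

H, W = 256, 256
kept = np.arange(0, H, 5)                                   # rows retained by the uniform mask
ramp = np.tile(np.linspace(-1.0, 1.0, H)[:, None], (1, W))  # vertical ramp in [-1, 1]
undersampled = np.zeros_like(ramp)
undersampled[kept] = ramp[kept]                             # zero-filled image before interpolation
restored = fill_rows_linear(undersampled, kept)             # interpolated U-Net input
```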

The U-Net model employed in this study comprises convolutional blocks (Conv Blocks) consisting of two-dimensional convolutional layers (Conv2D) followed by Mish activation functions36. The architecture includes three downsampling blocks and corresponding upsampling blocks, with a middle layer incorporating linear attention (Supp. Fig. 1). The network was optimized with the Adam optimizer37 from the PyTorch optim package, using an \(l_{1}\) loss function and a batch size of 8. The parameters of the Adam optimizer were \(\beta _{1}=0.9\), \(\beta _{2}=0.999\), \(\epsilon =10^{-8}\), and weight decay \(=0\) (the PyTorch defaults). We used PyTorch version 1.11. For hyperparameter tuning, 20% of the training data were held out as a validation set. The initial learning rate is set to \(3\times 10^{-4}\), and a step scheduler halves the learning rate every 2000 epochs. Training runs for a total of 5000 epochs, beyond which we did not observe any substantial performance increase.
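The step schedule described above can be checked with a few lines of plain Python. In PyTorch the same decay would typically come from torch.optim.lr_scheduler.StepLR(optimizer, step_size=2000, gamma=0.5) stepped once per epoch; that pairing is our assumption, not a detail taken from the authors' code.

```python
def step_lr(epoch, base_lr=3e-4, step_size=2000, gamma=0.5):
    """Learning rate during a given 0-based epoch under step decay."""
    return base_lr * gamma ** (epoch // step_size)

for epoch in (0, 1999, 2000, 3999, 4000, 4999):
    print(f"epoch {epoch:4d}: lr = {step_lr(epoch):.2e}")
```

Over 5000 epochs the rate therefore takes three values: \(3\times 10^{-4}\), \(1.5\times 10^{-4}\), and \(7.5\times 10^{-5}\) for the final 1000 epochs.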

The DiffPam reconstruction algorithm

The steps of the algorithm are the following:

- The operation (A) transforms the real value (\(X_{ref}\)) into the measured value (y). For super-resolution, the operation is downsampling; for inpainting, it is multiplication by a mask operator.

$$\begin{aligned} y = AX_{ref} \end{aligned}$$(6)

- Optional: an approximate solution to the inverse problem is computed. The better it approximates the real value, the fewer diffusion steps are needed. The appropriate solution depends on the operator A, but in general, interpolation or a simple trained neural network can serve as the approximator. The starting point (\(N<T\)) of the backward diffusion process is chosen according to the quality of the approximate solution.

- Given \(X_{N}\), an unconditional diffusion model is employed to generate \(X_{N-1}\). Regularized DPS conditioning then steers each generation step toward consistency with the measurement, and the process is iterated until \(X_{0}\) is obtained.

Algorithms 1 and 2 illustrate the DiffPam algorithm with or without an approximate solution. Table 1 summarizes the experiments conducted in this study, including the choice of measurement operation (A), approximate solution, and starting point of the diffusion process (N).

The diffusion model that we used in this study is trained with \(T=1000\) diffusion steps. Depending on the quality of the approximate solution, we have selected \(N=500\) for bilinear and bicubic interpolation and \(N=200\) for pre-trained U-Net outputs.

Image generation time scales approximately linearly with the number of backward diffusion steps. Therefore, selecting \(N=T/k\) reduces the computing time by a factor of about k.
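As a worked check, assuming a constant cost per backward step, the time for a run starting at N is the full-run time scaled by N/T. Taking the 18 min average for \(T=1000\) reported in the Results, this gives 9 min for \(N=500\) and 3.6 min for \(N=200\), consistent with the timings reported there.

```python
def reverse_time(full_time_min, N, T=1000):
    """Estimated sampling time (minutes) when only N of T backward steps are run."""
    return full_time_min * N / T

for N in (1000, 500, 200):
    print(f"N={N}: {reverse_time(18.0, N):.1f} min (k = {1000 / N:g})")
```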

Evaluation

The quality of reconstructed images is evaluated using three key metrics: PSNR, the structural similarity index (SSIM), and learned perceptual image patch similarity (LPIPS). PSNR is ten times the base-10 logarithm of the ratio between the squared peak signal value and the mean squared error (MSE) between the reference and reconstructed images. PSNR goes to infinity as the MSE approaches zero.
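A minimal NumPy version of PSNR, \(10\log_{10}(L^{2}/\mathrm{MSE})\) with L the data range, is sketched below. scikit-image provides the same quantity as skimage.metrics.peak_signal_noise_ratio(image_true, image_test, data_range=...); the data range matters, since images normalized to \([-1,1]\) have a range of 2 rather than 255.

```python
import numpy as np

def psnr(reference, reconstruction, data_range):
    """Peak signal-to-noise ratio in dB; returns inf for identical images (MSE = 0)."""
    ref = np.asarray(reference, dtype=float)
    rec = np.asarray(reconstruction, dtype=float)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))

ref = np.full((8, 8), 100.0)
print(psnr(ref, ref + 1.0, data_range=255))   # MSE = 1, so 10 * log10(255**2)
```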

SSIM, on the other hand, is designed to model any image distortion as a combination of three factors: loss of correlation, luminance distortion, and contrast distortion38. Equation (9) gives the specific form of the SSIM index used in this work, where \(\mu\) and \(\sigma\) correspond to the mean and standard deviation of the given image patches39, \(C_{1} = (K_{1}L)^{2}\), and \(C_{2} = (K_{2}L)^{2}\); \(K_{1}\) and \(K_{2}\) are small constants, and L is the dynamic range of the pixel values. SSIM is at most one, which indicates identical images, and values near zero indicate no structural correlation between the images.
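The standard SSIM expression \(\frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2+\mu_y^2+C_1)(\sigma_x^2+\sigma_y^2+C_2)}\), with \(\sigma_{xy}\) the covariance, can be sketched for a single pair of patches as below. This global-statistics version is a simplification: scikit-image's structural_similarity evaluates the index in 7×7 sliding windows and averages the resulting map, so its values will differ. The example also shows that SSIM can fall below zero for anti-correlated patches.

```python
import numpy as np

def ssim_global(x, y, L=255.0, K1=0.01, K2=0.03):
    """SSIM of two equally sized patches from their global means, variances, and covariance."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    C1, C2 = (K1 * L) ** 2, (K2 * L) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + C1) * (2 * cov_xy + C2)
    den = (mu_x ** 2 + mu_y ** 2 + C1) * (var_x + var_y + C2)
    return float(num / den)

rng = np.random.default_rng(0)
patch = rng.integers(0, 256, size=(7, 7)).astype(float)
print(ssim_global(patch, patch))          # identical patches
print(ssim_global(patch, 255.0 - patch))  # intensity-inverted patch
```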

Although PSNR and SSIM are widely used, they do not always align with human perceptual judgments. To address this, LPIPS, developed by Zhang et al.40, uses deep neural network features to provide a measure of image similarity closer to human perception. Unlike PSNR and SSIM, lower LPIPS scores indicate better image quality. In this study, the scikit-image framework41 is employed to calculate PSNR and SSIM, whereas the lpips Python package with AlexNet is used to compute LPIPS scores. For SSIM, image patches of size \(7 \times 7\) are used, with the default parameters \(L=255\), \(K_{1} = 0.01\), and \(K_{2} =0.03\).

In addition to these metrics, we also report the mean absolute error (MAE) to provide further insight into the performance of our method. MAE measures the average magnitude of the absolute differences between predicted and true pixel values, which makes it straightforward to interpret. For an image with n pixels, the MAE is defined as:

$$\begin{aligned} \mathrm{MAE} = \frac{1}{n}\sum _{i=1}^{n} \left| X_{ref,i} - X_{0,i} \right| \end{aligned}$$

Our sample size is relatively small (n = 20), and the data distributions do not satisfy the normality assumption according to the Kolmogorov-Smirnov test. Therefore, hypothesis testing is performed with the non-parametric Wilcoxon signed-rank test, which tests the null hypothesis that two paired samples come from the same distribution42. Paired tests are designed for situations in which each data point in one sample is directly paired with a data point in the other sample, as is the case for our images. The significance level (alpha) is set to 0.05.
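The paired test can be reproduced with scipy.stats.wilcoxon, which by default performs a two-sided Wilcoxon signed-rank test on paired samples. The scores below are synthetic placeholders for 20 paired per-image metrics, not the study's data.

```python
import numpy as np
from scipy.stats import wilcoxon

# Synthetic paired scores for 20 test images (illustration only, not the study's data).
baseline = np.linspace(20.0, 30.0, 20)          # e.g. PSNR of bilinear interpolation
method = baseline + np.linspace(0.5, 3.0, 20)   # e.g. PSNR of a reconstruction method

res = wilcoxon(method, baseline)                # paired, two-sided signed-rank test
alpha = 0.05
print(f"W = {res.statistic}, p = {res.pvalue:.2e}, significant: {res.pvalue < alpha}")
```

Because the test uses only the signs and ranks of the paired differences, it does not require the normality that the Kolmogorov-Smirnov test rejected.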

Results

Producing a single 256\(\times\)256 image with \(T=1000\) sampling steps took 18 min on average on Amazon Web Services (AWS) SageMaker Studio Lab accelerated computing. Starting halfway through the backward process (\(N=500\)) reduces the reconstruction time to 9 min. Starting from the U-Net model output (\(N=200\)) reduces it further, to 3.6 min.

Inpainting

For inpainting, 20 images are randomly selected from the test set and subjected to uniform and random masking, leaving 20% effective pixels. The images are then reconstructed using the pre-trained U-Net and the diffusion model conditioned on the undersampled image (DiffPam). Four DiffPam inpainting experiments are conducted, as described in the “Method” section.

Three of the four inpainting experiments were conducted with uniform masking along the x-axis, using different approximate solutions. Figure 3 shows the ground truth (fully sampled) PAM image, the bilinear interpolation, and the reconstructions from the undersampled version. For simplicity, only the diffusion model initialized from the bilinear interpolation (B-DiffPam) is illustrated in the figure.

Our investigation reveals that both wavelet and TV regularization outperform unregularized DPS (\(p<0.01\)) in terms of PSNR and SSIM. Although there is no statistically significant difference between TV and wavelet regularization in PSNR and SSIM, TV regularization yields better LPIPS scores than wavelet regularization (\(p<0.01\)) (Supplementary Table 1). We therefore present the TV-regularized DiffPam results and refer readers to the supplementary material for a comprehensive examination of the outcomes.

Table 2 presents the results of the reconstruction methods from uniform undersampling in terms of MAE, PSNR, SSIM, and LPIPS. All methods yield better results than interpolation (\(p<0.0001\)). DiffPam methods produce lower PSNR values than the pre-trained U-Net model (\(p<0.05\)), since the U-Net is trained for this objective. Nevertheless, U-Net tends to generate smoother outputs, which the PSNR metric favors more than human observers do. No statistically significant differences in MAE, SSIM, and LPIPS are observed among DiffPam, B-DiffPam, and U-Net. This result demonstrates that diffusion models trained solely on natural images can perform on par with a domain-specifically trained U-Net. U-DiffPam (DiffPam initialized from the U-Net output) achieves higher SSIM and lower MAE and LPIPS scores, all indicating better quality, than the pre-trained U-Net model (\(p=0.044\)).

As an alternative to uniform masking, we propose scanning randomly distributed pixels with the same number of scanned pixels. We reconstructed the selected test images with random masking using \(T=1000\) sampling steps in the same diffusion model and compared the results with uniform masking. Table 3 shows the PSNR, SSIM, and LPIPS of images reconstructed from uniform undersampling along the x and y axes and from random undersampling. Random undersampling, while maintaining the same effective pixel density, consistently outperforms uniform undersampling across all metrics (\(p<0.05\)).

Visual comparison of random and uniform inpainting DiffPam results. (a) Original (ground truth) image; reconstructed image from (b) the uniform mask along the x-axis, (c) the uniform mask along the y-axis, and (d) the random mask. The second row shows a close-up of the upper box, and the third row a close-up of the lower box. (b1) and (b2) contain artifacts in the microvasculature, whereas (c1), (c2), (d1), and (d2) resemble the ground truth.

Upon examining the reconstructed images, we noted certain artifacts in the microvasculature only in images with uniform undersampling along the x-axis; these were absent for the other methods (Fig. 4). However, implementing random undersampling could introduce mechanical instability and require modifications to the existing motor control, which might not be feasible with current hardware configurations. To address these challenges while maintaining mechanical stability, we propose employing uniform undersampling along both axes. This approach can reduce inherent biases caused by differing step sizes in fast and slow scanning, thus enhancing the quality of the reconstructed images.

Super resolution

Another practical application is increasing the spatial resolution of OR-PAM images with the DiffPam algorithm. The corresponding operation in the image processing domain is downsampling and smoothing with a cubic kernel. We experimented with this setting using six images from the test set. On average, DiffPam improved PSNR by 18.2% and SSIM by 31.7% and lowered LPIPS by 54.0%. Figure 5 shows the ground truth, downsampled, and reconstructed images, together with the pixel values along the red line.

The example result of super-resolution from 4\(\times\) downsampled image. (a) Original (ground truth) image, (b) 4\(\times\) downsampled image, (c) reconstructed image by DiffPam, (d) pixel values across the red line in the images. DiffPam is able to reconstruct the downsampled images using prior knowledge of natural images.

Discussion

Key contributions

In this study, we demonstrated that the proposed algorithm, DiffPam, reconstructs undersampled PAM images without requiring a large dataset or the training of a deep learning model. The diffusion model, trained solely on the ImageNet database of natural images, performed on par with a U-Net model trained specifically on a mouse brain microvasculature image database. Reducing the number of diffusion steps further accelerates reconstruction. Furthermore, for users with an existing trained model, our algorithm provides a means to improve its results without modifying the original model.

Pre-training the U-Net on a large-scale dataset such as ImageNet before fine-tuning on the Duke PAM dataset could yield additional insights and improvements. Notably, however, DiffPam inherently leverages knowledge from ImageNet without explicit transfer learning, demonstrating its robustness and adaptability across domains. This highlights the ability of diffusion models to exploit large-scale datasets for a variety of applications.

The reconstruction of undersampled PAM images has recently received attention in two notable studies11,12. DiSpirito et al. conducted a comprehensive investigation by training multiple versions of U-Net in a fully supervised manner. They reported a substantial improvement over the bicubic interpolation baseline, with SSIM and PSNR increases of \(6.42\%\) and \(16.73\%\), respectively, for \(20\%\) effective pixels and uniform undersampling, a strategy akin to ours. In contrast, Vu et al. used DIP, fitting a U-Net model to a single undersampled image. Like our algorithm, the DIP approach requires neither a large dataset nor supervised training, but it requires careful selection of the neural network architecture and a delicate optimization process, which demand extensive knowledge of the subject.

In our investigation, we demonstrate substantial advances over baseline methods. Specifically, we achieved a \(27.54\%\) increase in SSIM, a \(24.70\%\) increase in PSNR, and a \(45.83\%\) decrease in LPIPS compared with our baseline of bilinear interpolation. Discrepancies in results among studies may stem from differences in hyperparameter selection and in the composition of the test set. Nevertheless, our improvements over the baseline method are statistically significant (\(p < 0.0001\)).

Notably, neither study incorporated external regularization similar to wavelet and TV techniques. We demonstrated the significant impact of regularization (\(p < 0.01\)) on external conditioning in diffusion models, highlighting its effectiveness in improving reconstruction outcomes. Given that pixel removal and aliasing result in information loss, generative methods attempting to recover from this may produce inaccurate regions, including biologically improbable structures. While regularization techniques play a crucial role in mitigating these effects, addressing this challenge remains an ongoing endeavor.

A primary result of our study is that the proposed algorithm can be used by researchers with limited artificial intelligence expertise or limited computational resources. The diffusion model employed in our investigation is openly accessible and can be replaced by any other capable diffusion model. Our source code is available in a public GitHub repository (github.com/iremzog/diffpam). For scientists with limited access to accelerated computing, truncating the diffusion process offers a viable way to reduce computation time.

Undersampling strategies

Because the scanning speeds of the two axes in PAM imaging can differ considerably, skipping lines along the slower axis is a convenient way to accelerate image acquisition. Uniform masking of PAM images, which is commonly used in the literature, models this process. Our findings revealed that whether undersampling is applied along the fast or the slow axis significantly affects the results, indicating an inherent directional bias in PAM images that must be taken into account. We recommend that researchers consider undersampling both axes, with either uniform or random sampling, to further improve the quality and accuracy of their reconstructions.
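
The undersampling patterns discussed above can be expressed as binary masks. The sketch below assumes that each image row is one sweep of the fast axis, so that skipping rows corresponds to skipping lines along the slow axis; this orientation is a hypothetical convention and depends on the actual scanner. It builds uniform and random line masks along either axis and a uniform grid that undersamples both axes. The function names are illustrative only.

```python
import numpy as np


def uniform_line_mask(shape, step, axis):
    """Keep every `step`-th line along `axis` (axis=0 skips rows, axis=1 skips columns)."""
    mask = np.zeros(shape, dtype=bool)
    index = [slice(None), slice(None)]
    index[axis] = slice(0, None, step)
    mask[tuple(index)] = True
    return mask


def random_line_mask(shape, keep_fraction, axis, rng):
    """Keep a random subset of lines along `axis` with a fixed sampling budget."""
    n_lines = shape[axis]
    n_keep = max(1, int(round(keep_fraction * n_lines)))
    kept = np.sort(rng.choice(n_lines, size=n_keep, replace=False))
    mask = np.zeros(shape, dtype=bool)
    index = [slice(None), slice(None)]
    index[axis] = kept
    mask[tuple(index)] = True
    return mask


shape = (100, 100)
rng = np.random.default_rng(0)
slow = uniform_line_mask(shape, step=5, axis=0)   # fivefold: every 5th row
fast = uniform_line_mask(shape, step=5, axis=1)   # fivefold: every 5th column
both = uniform_line_mask(shape, 2, 0) & uniform_line_mask(shape, 2, 1)  # 2 x 2 grid
rand = random_line_mask(shape, 0.2, axis=0, rng=rng)
print(slow.mean(), fast.mean(), both.mean(), rand.mean())
```

Here the two single-axis masks and the random mask keep 20% of the pixels, while the 2 x 2 grid keeps 25%; a regular grid with step s on both axes keeps 1/s² of the pixels, so comparable budgets across strategies require choosing the steps accordingly. Reconstructing from `slow` and `fast` masks with the same budget is one way to expose the axis-specific bias described above.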

An alternative strategy is to acquire images with a coarser spatial sampling (a larger pixel size) and then use super-resolution techniques to reconstruct finer details. In this study, our objective was to clarify the limitations inherent in each approach, leaving the choice of methodology to researchers.

Limitations and future work

Our study is limited to a single image dataset of narrow scope, namely mouse brain microvasculature. A larger test set would provide a more robust evaluation, but repeating all experiments on a substantially larger dataset is beyond the scope of this manuscript given our current computational constraints. Future work should apply DiffPam to a larger selection of images from the Duke PAM dataset to ensure the comparability and robustness of the results.

Future research should also use broader datasets, including various tissue types and pathological conditions, to validate and generalize our findings. Involving clinicians in the research process will be crucial for obtaining expert insight and ensuring the clinical relevance of the outcomes. Future work could also explore applying DiffPam to other imaging modalities, such as Raman spectroscopy, where similar challenges with scanning speed and resolution exist. Integrating DiffPam with advanced hardware techniques, optimizing it for real-time imaging, and developing dedicated software tools would further enhance its practical applicability and impact.

Conclusion

In conclusion, our study introduces the DiffPam algorithm and demonstrates its efficiency in reconstructing undersampled PAM images without extensive datasets or deep learning model training. The diffusion model, trained exclusively on a natural image database, performed comparably to an in-domain trained neural network. We also address the escalating demand for computing power in AI research, emphasizing the importance of overcoming barriers to high computational resources for scientific progress. We show that shortening the diffusion process reduces computing time without compromising accuracy. Additionally, our comparison of random and uniform sampling in PAM imaging indicates that random sampling better preserves valuable information. Our study has limitations: it is confined to a specific image dataset and has a relatively small sample size due to resource constraints. Nevertheless, we hope our findings will inspire further research in this domain and offer researchers with limited resources a valuable algorithmic tool for PAM image reconstruction. The source code is available in a public GitHub repository, providing a practical way for researchers to implement and extend our work.

Data availability

The dataset used in this study is available at https://doi.org/10.5281/zenodo.4042171.

Code availability

Our source code is accessible on the GitHub repository https://github.com/iremzog/diffpam.

References

Wang, L. V. & Gao, L. Photoacoustic microscopy and computed tomography: From bench to bedside. Annu. Rev. Biomed. Eng. 16, 155–185 (2014).

Steinberg, I. et al. Photoacoustic clinical imaging. Photoacoustics 14, 77–98. https://doi.org/10.1016/j.pacs.2019.05.001 (2019).

Karunamuni, G. et al. Capturing structure and function in an embryonic heart with biophotonic tools. Front. Physiol. 5, 351. https://doi.org/10.3389/fphys.2014.00351 (2014).

Beard, P. Biomedical photoacoustic imaging. Interface Focus 1, 602–631 (2011).

Cho, S.-W. et al. High-speed photoacoustic microscopy: A review dedicated on light sources. Photoacoustics 24, 100291. https://doi.org/10.1016/j.pacs.2021.100291 (2021).

Shen, D., Wu, G. & Suk, H.-I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 19, 221–248. https://doi.org/10.1146/annurev-bioeng-071516-044442 (2017).

Razzak, M. I., Naz, S. & Zaib, A. Deep Learning for Medical Image Processing: Overview, Challenges and the Future, 323–350 (Springer International Publishing, 2018).

Zhou, S. K. et al. A review of deep learning in medical imaging: Imaging traits, technology trends, case studies with progress highlights, and future promises. Proc. IEEE 109, 820–838. https://doi.org/10.1109/JPROC.2021.3054390 (2021).

Zhao, H. et al. Deep learning enables superior photoacoustic imaging at ultralow laser dosages. Adv. Sci. 8, 2003097. https://doi.org/10.1002/advs.202003097 (2021).

Sharma, A. & Pramanik, M. Convolutional neural network for resolution enhancement and noise reduction in acoustic resolution photoacoustic microscopy. Biomed. Opt. Express 11, 6826–6839 (2020).

DiSpirito, A. et al. Reconstructing undersampled photoacoustic microscopy images using deep learning. IEEE Trans. Med. Imaging 40, 562–570 (2021).

Vu, T. et al. Deep image prior for undersampling high-speed photoacoustic microscopy. Photoacoustics 22, 100266. https://doi.org/10.1016/j.pacs.2021.100266 (2021).

Ulyanov, D. et al. Deep image prior. arXiv:1711.10925 (2017).

Goodfellow, I. et al. Generative adversarial nets. In Advances in Neural Information Processing Systems 2672–2680 (2014).

Rezende, D. J. & Mohamed, S. Variational inference with normalizing flows (2016). arXiv:1505.05770.

Li, X. et al. Diffusion models for image restoration and enhancement—a comprehensive survey (2023). arXiv:2308.09388.

Tong, S., Lan, H., Nie, L., Luo, J. & Gao, F. Score-based generative models for photoacoustic image reconstruction with rotation consistency constraints (2023). arXiv:2306.13843.

Dey, S. et al. Score-based diffusion models for photoacoustic tomography image reconstruction. In ICASSP 2024–2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). https://doi.org/10.1109/icassp48485.2024.10447579 (IEEE, 2024).

Song, X. et al. Sparse-view reconstruction for photoacoustic tomography combining diffusion model with model-based iteration. Photoacoustics 33, 100558. https://doi.org/10.1016/j.pacs.2023.100558 (2023).

Provost, J. & Lesage, F. The application of compressed sensing for photo-acoustic tomography. IEEE Trans. Med. Imaging 28, 585–594 (2008).

Rudin, L. I., Osher, S. & Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D 60, 259–268 (1992).

Estrela, V. V., Magalhães, H. A. & Saotome, O. Total Variation Applications in Computer Vision 41–64 (IGI Global, 2016).

Sohl-Dickstein, J. et al. Deep unsupervised learning using nonequilibrium thermodynamics (2015). arXiv:1503.03585.

Ho, J. et al. Denoising diffusion probabilistic models. arXiv preprint arXiv:2006.11239 (2020).

Dhariwal, P. & Nichol, A. Diffusion models beat GANs on image synthesis (2021). arXiv:2105.05233.

Choi, J. et al. ILVR: Conditioning method for denoising diffusion probabilistic models. arXiv preprint arXiv:2108.02938 (2021).

Chung, H. et al. Improving diffusion models for inverse problems using manifold constraints. Adv. Neural Inf. Process. Syst. 2022, 85 (2022).

Chung, H. et al. Diffusion posterior sampling for general noisy inverse problems. In The Eleventh International Conference on Learning Representations (2023).

Cotter, F. Uses of Complex Wavelets in Deep Convolutional Neural Networks. Ph.D. thesis, Apollo—University of Cambridge Repository (2019). https://doi.org/10.17863/CAM.53748.

Detlefsen, N. S. et al. TorchMetrics—Measuring Reproducibility in PyTorch (2022, accessed 30 Jan 2024). https://doi.org/10.21105/joss.04101.

Chung, H. et al. Come-closer-diffuse-faster: Accelerating conditional diffusion models for inverse problems through stochastic contraction (2022). arXiv:2112.05146.

OpenAI. Guided diffusion (2021). https://github.com/openai/guided-diffusion. Accessed 30 Aug 2023.

Deng, J. et al. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).

Duke, A. D. Duke PAM dataset. https://doi.org/10.5281/zenodo.4042171 (2020).

Shocher, A. Resizer: Only way to resize (2018). https://github.com/assafshocher/resizer. Accessed 30 Aug 2023.

Misra, D. Mish: A self regularized non-monotonic activation function. arXiv preprint arXiv:1908.08681 (2019).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization (2017). arXiv:1412.6980.

Horé, A. & Ziou, D. Image quality metrics: PSNR vs. SSIM. In 2010 20th International Conference on Pattern Recognition 2366–2369. https://doi.org/10.1109/ICPR.2010.579 (2010).

Wang, Z., Bovik, A., Sheikh, H. & Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612. https://doi.org/10.1109/TIP.2003.819861 (2004).

Zhang, R. et al. The unreasonable effectiveness of deep features as a perceptual metric. In CVPR (2018).

van der Walt, S. et al. scikit-image: Image processing in Python. PeerJ 2, e453. https://doi.org/10.7717/peerj.453 (2014).

Wilcoxon, F. Individual Comparisons by Ranking Methods 196–202 (Springer, 1992).

Author information

Contributions

I.L. and M.B.U. conceptualized the research. I.L. conducted the experiments and analysed the results. All authors reviewed the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Loc, I., Unlu, M.B. Accelerating photoacoustic microscopy by reconstructing undersampled images using diffusion models. Sci Rep 14, 16996 (2024). https://doi.org/10.1038/s41598-024-67957-z

DOI: https://doi.org/10.1038/s41598-024-67957-z
