Abstract

Utilization of optimization techniques is a must in the design of contemporary antenna systems. Global search methods are often necessary, yet they are associated with high computational costs when conducted at the level of full-wave electromagnetic (EM) models. In this study, we introduce an innovative method for globally optimizing reflection responses of multi-band antennas. Our approach uses surrogates constructed from response features, which smooth the objective function landscape processed by the algorithm. We begin with initial parameter space screening and surrogate model construction using coarse-discretization EM analysis. Subsequently, the surrogate is iteratively refined into a co-kriging model using accumulated high-fidelity EM simulation results, with the infill criterion focusing on minimizing the predicted objective function. With a particle swarm optimizer (PSO) as the underlying search routine, extensive verification case studies demonstrate the efficiency and superiority of our procedure over benchmark methods. The average optimization cost corresponds to only about ninety high-fidelity EM antenna analyses, with excellent solution repeatability. Leveraging variable-resolution simulations yields up to a seventy percent speedup compared to the single-fidelity algorithm.


Introduction

Development of antennas is a difficult undertaking for a number of reasons. On the one hand, existing and emerging application areas1,2,3,4,5 dictate strict performance requirements related to both electrical and field parameters (broadband and multi-band operation, high gain, circular polarization, beam scanning, or MIMO operation6,7,8,9,10,11), reconfigurability12, and small physical dimensions13,14,15,16. On the other hand, handling the complex antenna geometries developed to fulfil these needs17,18,19,20 is a considerable challenge in itself. For example, parametric studies, still widely employed for dimension adjustment, are insufficient to control multiple variables, let alone several design objectives or constraints. Instead, rigorous numerical optimization methods are recommended21,22. However, accurate evaluation of antenna characteristics requires electromagnetic (EM) analysis, which is computationally expensive. EM-driven optimization often involves numerous antenna evaluations, leading to potentially prohibitive computational expenses. Even local parameter tuning, whether using gradient-23 or stencil-based techniques24, may necessitate dozens or even hundreds of antenna simulations. Global optimization25 and other procedures (multi-criterial design26, statistical analysis27, tolerance optimization28) are incomparably more expensive when executed directly using EM simulation models.

Despite these challenges, global search is increasingly recommended, if not necessary. This is the case for inherently multimodal tasks (array pattern optimization29, frequency-selective surface development30, design of metamaterials31), design of compact antennas based on topological modifications (stubs32, defected ground structures33, shorting pins34) leading to parameter redundancy and parameter space enlargement35, antenna redesign over wide ranges of operating parameters36, or simply the unavailability of a good starting point. Nowadays, global optimization primarily revolves around nature-inspired metaheuristic algorithms37,38,39. These algorithms operate on sets (populations)40 of candidate solutions (individuals, agents)41 rather than single parameter vectors. Their global search capability stems from exchanging information among the population members, either by communicating the most promising parameter space locations42, sharing information within individuals43, or integrating stochastic components. The latter may take the form of partially random selection procedures44 or random modifications of the parameter vectors45. A large variety of nature-inspired procedures is available. Widely used methods encompass genetic/evolutionary algorithms, the firefly algorithm, differential evolution, particle swarm optimizers (PSO), ant systems, grey wolf optimization46,47,48,49,50,51,52,53, and a plethora of others54,55,56,57. Algorithms of this class have a simple structure and are straightforward to handle. However, for EM-based design they are impractical due to poor computational efficiency. An average optimization run entails several thousand evaluations of the objective function, which can be prohibitive, especially for moderately or highly complex antenna structures.
As a result, direct application of nature-inspired optimization methods becomes feasible only when EM analysis is relatively rapid or when hardware and licensing enable parallel processing.

Nowadays, surrogate modeling techniques58,59,60 are among the most popular ways of enabling nature-inspired optimization of expensive simulation models. A standard surrogate-assisted framework operates iteratively, relying on a surrogate model as a predictor to approximate the optimal design61. The simulation data gathered during the process is then used to refine this surrogate. New data points, often termed infill points, are generated based on diverse criteria aimed at enhancing model accuracy across the parameter space (exploration62), pinpointing the optimal design (exploitation63), or balancing exploration and exploitation64. The nature-inspired algorithm is tasked with optimizing either the surrogate model or the predicted modeling error65. The modeling methods often used for this purpose are kriging66, Gaussian Process Regression (GPR)67, or neural networks68. Recently, frameworks of this type have often been referred to as machine learning (ML) procedures69,70. A comprehensive review of ML techniques for antenna design can be found in99. The performance of surrogate-assisted nature-inspired algorithms is hindered by the difficulty of rendering an accurate metamodel, which is particularly troublesome for highly nonlinear antenna responses. The challenge becomes more pronounced when the model must remain valid across broad ranges of design variables and frequencies. Available algorithms are often demonstrated on low-dimensional examples (typically up to six parameters)71,72. Mitigation approaches include performance-driven modeling36,73,74, although its incorporation into global optimization procedures is not straightforward.
Other possibilities are variable-resolution EM simulations (e.g., initial pre-screening executed using the low-fidelity analysis75, or utilization of co-kriging models76), and response feature technology77 (mainly employed for local tuning78, but also generic surrogate modeling frameworks79). The approach involves reframing the problem at hand using system output characteristics (features), such as frequency or level coordinates of resonances. These coordinates have a less nonlinear dependence on design variables compared to the entire frequency characteristics. This strategy offers a significant simplification of the design task80, leading to faster convergence81 or a decrease in the number of training samples necessary to identify a dependable metamodel82.

This work introduces an innovative surrogate-based technique for cost-effective global design enhancement of multi-band antenna devices. The presented approach leverages response feature technology and variable-resolution EM simulations. Initially, a kriging metamodel is constructed based on response features extracted from a set of random observables, allowing determination of attractive regions of the parameter space at low cost through low-fidelity EM analysis. Subsequent surrogate refinement relies on infill points generated by optimizing the metamodel. The optimization is executed using a particle swarm optimizer, minimizing the predicted objective function. Maintaining accuracy entails high-fidelity EM analysis at this stage, constructing the surrogate model through co-kriging. Using response features further reduces the optimization's computational cost. Extensive verification experiments demonstrate the relevance and effects of our framework's algorithmic mechanisms on both reliability and computational efficiency. Comparisons with direct nature-inspired optimization, surrogate-assisted algorithms working with complete antenna responses, and single-resolution feature-based methods confirm significant reduction in runtime without compromising design quality. Depending on the test case, the overall optimization cost corresponds to as few as 120 high-fidelity EM simulations, showing excellent solution repeatability.

Global antenna optimization by response features and variable-resolution simulation models

Here, we present our optimization technique. Section "Design problem formulation" revisits the formulation of the design task, specifically focusing on optimizing multi-band antenna input characteristics. The concept of response features is outlined in Section "Response features". Section "Variable-resolution EM models" delves into variable-resolution EM models, while Section "Kriging interpolation. Co-Kriging Surrogates" formulates kriging and co-kriging modeling, intended for constructing the initial surrogate (low-fidelity) in Section "Parameter space pre-screening. construction of the initial surrogate" and the refined surrogate (high-fidelity) in Section "Generating infill points using PSO. Co-Kriging surrogate". This refined surrogate is involved in generating infill points through the improvement of the predicted merit function, as detailed in Section "Generating infill points using PSO. Co-Kriging surrogate". The optimization engine used in this stage is a particle swarm optimizer. Finally, Section "Optimization framework" encompasses an extensive summary of the complete design procedure.

At this point, it should be reiterated that the proposed optimization methodology relies on surrogate modeling techniques. The main underlying idea is to replace the massive number of EM-simulated antenna evaluations with predictions obtained from a low-cost replacement model (surrogate). Here, data-driven surrogates are utilized, which are obtained by approximating sampled EM data using co-kriging. The resulting surrogate model is fast, so the antenna evaluation cost is negligible compared to EM analysis. This is of fundamental importance, especially in the context of global optimization, which requires a large number of system simulations. EM analysis is only executed occasionally to verify the quality of newly created infill points and to update the surrogate model, which gradually becomes an increasingly accurate representation of the antenna at hand.

Design problem formulation

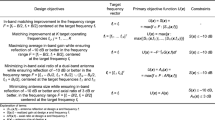

The statement of the design task largely depends on the specific optimization goals. In this study, we concentrate on optimizing the input characteristics of multi-band antennas, aiming to position the resonances at specified (target) frequencies Ft = [ft.1 … ft.K]T and to improve the impedance matching therein (i.e., to minimize the reflection coefficient modulus |S11| at all frequencies ft.j, j = 1, …, K). The problem is usually framed using a minimax objective function U(x), detailed in Table 1 alongside the required notation. Using this terminology, the design task can be expressed as

$$\mathbf{x}^* = \arg\min_{\mathbf{x}} U(\mathbf{x}) \qquad (1)$$
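To make the minimax objective concrete, the sketch below evaluates it from a sampled reflection response. It assumes that the objective of Table 1 is the largest |S11| value (in dB) over the target frequencies ft.j, and that |S11| is available on a frequency grid and linearly interpolated at each target; the function name `minimax_objective` and the toy two-dip response are hypothetical.

```python
import numpy as np

def minimax_objective(freqs_ghz, s11_db, targets_ghz):
    """Worst-case reflection level (dB) over the target frequencies.

    Assumption: |S11| is sampled on an increasing frequency grid and
    linearly interpolated at each target frequency ft.j.
    """
    levels = np.interp(targets_ghz, freqs_ghz, s11_db)
    return float(np.max(levels))

# Toy response with resonant dips near 2.45 GHz and 5.3 GHz (illustrative only)
f = np.linspace(1.0, 7.0, 601)
s11 = -3.0 - 20.0 * np.exp(-((f - 2.45) / 0.1) ** 2) - 15.0 * np.exp(-((f - 5.3) / 0.15) ** 2)
U = minimax_objective(f, s11, [2.45, 5.3])   # governed by the shallower dip
```

Because the objective is a maximum, only the worst-matched target frequency drives it, which is why the landscape becomes flat and multimodal when resonances sit far from the targets.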

The problem (1) can be generalized to handle other types of goals, for example, impedance matching improvement over fractional bandwidths centred at ft.j, maximization of the impedance bandwidth, minimization of axial ratio, improvement of the antenna gain, etc. As mentioned earlier, the specific task considered here is a representative case, which is often dealt with in practice. Here, it is employed to demonstrate the global search framework discussed in the paper. At the same time, it should be emphasized that the considered design problem is a nominal one, i.e., no parameter deviations (e.g., fabrication tolerances or other types of uncertainties) are considered.

Response features

The primary bottleneck in simulation-driven antenna optimization is the extensive computational expense linked to numerous EM analyses, which are essential for numerical search processes. This cost is notably substantial for local optimization and escalates significantly when employing global algorithms.

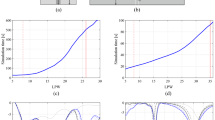

Global search involves exploring the entire parameter space, which is challenging due to its vastness in terms of both dimensionality and parameter ranges. The nonlinear nature of antenna outputs, especially for multi-band antennas, further complicates this task. As shown in Fig. 1 for an exemplary planar antenna, the sharp resonant input characteristics pose particular difficulties: a local search for problem (1) would fail if initiated from most of the designs depicted in Fig. 1b. Additionally, the shape of these resonant responses makes it difficult to create a dependable surrogate model that accurately represents them.

Dual-band antenna and challenges associated with its global optimization: (a) geometry, (b) family of responses corresponding to random designs allocated over the assumed design space, (c) selected characteristics. The target operating frequencies, 2.45 GHz and 5.3 GHz, are indicated by vertical lines. For most of the designs of (b) and (c), local optimization oriented towards matching improvement at the target frequencies would fail. Proper allocation of the antenna resonances requires global search.

One way to address these challenges is through a response feature approach81, which involves reformulating the design task using characteristic points of the antenna outputs78. This method leverages the weakly nonlinear dependency of the characteristic point coordinates (such as frequencies and levels) on antenna geometry parameters77,78,79,80,81. Utilizing this approach regularizes the objective function, facilitating faster convergence of the optimization process81. It also enables quasi-global search capabilities83 and reduces the number of training points required to build a dependable metamodel82.

Feature points must align with the design objectives78. For impedance matching improvement of multi-band devices, the frequency and level coordinates of the antenna resonances are an appropriate choice. This is exemplified in Fig. 2, which also depicts additional feature points corresponding to the –10 dB levels of |S11|; these are useful when the antenna bandwidth is to be enlarged. As shown in Fig. 2(b), the coordinates of feature points display relatively simple patterns, in contrast to complete antenna characteristics (for an in-depth discussion, refer to78,81).
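One simple way such feature points could be extracted from a sampled |S11| response is sketched below. It is not the authors' extractor: resonances are assumed to be the n_res deepest interior local minima of |S11| in dB, and the –10 dB points are linearly interpolated threshold crossings; `extract_features` and the toy response are hypothetical. Returning None when fewer than n_res resonances are found mirrors the case where a feature point does not exist for a given design.

```python
import numpy as np

def extract_features(freqs, s11_db, n_res, level_db=-10.0):
    """Hypothetical extractor: returns (ff, fL, crossings) or None."""
    f = np.asarray(freqs, dtype=float)
    s = np.asarray(s11_db, dtype=float)
    # Interior local minima of |S11| (candidate resonances)
    idx = np.where((s[1:-1] < s[:-2]) & (s[1:-1] <= s[2:]))[0] + 1
    if idx.size < n_res:
        return None                      # features not extractable for this design
    deepest = np.sort(idx[np.argsort(s[idx])[:n_res]])  # keep deepest, order by frequency
    ff = f[deepest]                      # horizontal (frequency) coordinates
    fL = s[deepest]                      # vertical (level) coordinates, dB
    # Crossings of the -10 dB level, linearly interpolated between samples
    d = s - level_db
    k = np.where(np.sign(d[:-1]) != np.sign(d[1:]))[0]
    crossings = f[k] - d[k] * (f[k + 1] - f[k]) / (d[k + 1] - d[k])
    return ff, fL, crossings

# Toy dual-band response (illustrative only)
f = np.linspace(1.0, 7.0, 601)
s11 = -3.0 - 20.0 * np.exp(-((f - 2.45) / 0.1) ** 2) - 15.0 * np.exp(-((f - 5.3) / 0.15) ** 2)
ff, fL, edges = extract_features(f, s11, n_res=2)
```

Real responses may contain shallow ripples; restricting resonances to minima below a chosen level would be a natural refinement.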

Dual-band dipole antenna: (a) response features corresponding to antenna resonances (o) and –10 dB reflection levels (open square); note that some of the feature points may not exist depending on the design (e.g., one of the resonances being outside the simulation frequency range); (b) relationship between the operating frequencies and a selected geometry parameter. A clear trend is visible, as emphasized by a least-squares regression model (gray dots), which demonstrates the weakly nonlinear relationship between the response feature coordinates and geometry parameters.

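In the remaining part of this work, we use fP(x) = [ff(x)T fL(x)T]T to denote a response feature vector, where ff(x) = [ff.1(x) … ff.K(x)]T and fL(x) = [fL.1(x) … fL.K(x)]T are its horizontal (frequency) and vertical (level) coordinates, respectively. The feature-oriented merit function takes the form of

$$U_F(\mathbf{x}) = \max\{f_{L.1}(\mathbf{x}), \ldots, f_{L.K}(\mathbf{x})\} + \beta\,\|\mathbf{f}_f(\mathbf{x}) - \mathbf{F}_t\|^2 \qquad (2)$$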

in which β||ff – Ft||² is a regularization term, implemented to facilitate the adjustment of resonant frequencies towards their intended values. The specific value of β is not critical, but it should be selected so that the regularization term contributes significantly whenever the resonant frequencies are considerably misaligned (here, we set β = 100). Formulation (2) differs from the minimax one of Table 1, yet the optimum solutions with respect to both are equivalent, provided that the requested operating frequencies can be reached (in that case, the resonance levels fL.j coincide with |S11| at the target frequencies).
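A minimal sketch of the feature-based merit function follows, assuming its primary term is the worst (largest) resonance level and that frequencies are in GHz and levels in dB; the units and the name `feature_objective` are assumptions, while β = 100 is the value stated in the text.

```python
import numpy as np

def feature_objective(ff, fL, targets, beta=100.0):
    """Assumed form of merit (2): max resonance level + beta * ||ff - Ft||^2.

    ff, targets: resonant and target frequencies (GHz, assumed units)
    fL: reflection levels at the resonances (dB)
    """
    ff = np.asarray(ff, dtype=float)
    targets = np.asarray(targets, dtype=float)
    return float(np.max(fL) + beta * np.sum((ff - targets) ** 2))

# Resonances exactly on target: only the worse level (-18 dB) remains
U0 = feature_objective([2.45, 5.3], [-23.0, -18.0], [2.45, 5.3])
# A 0.1 GHz misalignment adds roughly 100 * 0.1**2 = 1 dB of penalty
U1 = feature_objective([2.55, 5.3], [-23.0, -18.0], [2.45, 5.3])
```

The quadratic penalty grows smoothly with frequency misalignment, which is what gives the regularized landscape its nearly monotonic character away from the optimum.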

Variable-resolution EM models

Low-fidelity models can accelerate design optimization procedures primarily by reducing the time required for system evaluation. The trade-off is a loss of accuracy (cf. Fig. 3), which has to be compensated for through an appropriate correction (e.g., space mapping84,85). For antenna structures, coarse-discretization EM analysis stands out as a versatile and effective low-fidelity modeling approach86. The actual speedup depends on the particular antenna structure, with acceleration factors ranging from less than three to over ten, assuming that the reduced-resolution model still renders the essential details of the antenna response.

Variable-fidelity models: (a) an exemplary dual-band antenna, (b) its reflection responses evaluated using the low-fidelity EM model (- - -) and the high-fidelity one (—). In the case shown in the picture, the simulation time of the high-fidelity model is about 90 s, whereas the evaluation of the low-fidelity model only takes about 25 s.

In subsequent discussions, the low-resolution model, termed Rc(x), will have a dual role: (i) generating a set of random observables for parameter space pre-screening, and (ii) building the initial surrogate model using kriging (refer to Section "Kriging interpolation. Co-Kriging surrogates"). As the process progresses, low-resolution data will merge with the gathered high-fidelity points to create an enhanced surrogate (utilizing co-kriging, cf. Section "Kriging interpolation. Co-Kriging Surrogates"). We will employ a notation Rf(x) to refer to the high-fidelity model.

Kriging interpolation. Co-Kriging Surrogates

Here, we briefly recall kriging and co-kriging interpolation87, utilized to build replacement models employed as predictors in the optimization procedure developed in this paper.

Let {xBc(k), Rc(xBc(k))}, k = 1, …, NBc, denote the low-resolution dataset consisting of parameter vectors xBc(k) and the corresponding antenna responses evaluated at the low-fidelity EM simulation level. We will also denote by {xBf(k), Rf(xBf(k))}, k = 1, …, NBf, the high-fidelity dataset, obtained through high-fidelity EM analysis at the locations xBf(k).

The details concerning the kriging and co-kriging models sKR(x) and sCO(x) can be found in Table 2. The co-kriging surrogate combines (i) a kriging model sKRc established using the low-resolution data (XBc, Rc(XBc)), and (ii) a kriging model sKRf of the residuals (XBf, r), where r = Rf(XBf) – ρ⋅Rc(XBf), with the scaling factor ρ estimated as part of the Maximum Likelihood Estimation (MLE) of the second model88. If the relevant low-fidelity data is not available, Rc(XBf) can be approximated as Rc(XBf) ≈ sKRc(XBf). Both models use the same correlation function (cf. Table 2).
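The two-level structure of co-kriging can be illustrated with a compact sketch. It makes several simplifying assumptions that differ from a full implementation such as the one in Table 2: a Gaussian correlation is assumed, the correlation parameter θ is fixed rather than tuned by MLE, and ρ is obtained by least squares instead of being part of the MLE. The class names `Kriging` and `CoKriging` are hypothetical. For feature vectors, one such model per feature coordinate would be a natural (assumed) extension.

```python
import numpy as np

class Kriging:
    """Ordinary kriging, Gaussian correlation, fixed theta (no MLE tuning)."""

    def __init__(self, theta=1.0, nugget=1e-10):
        self.theta, self.nugget = theta, nugget

    def _corr(self, A, B):
        d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-self.theta * d2)

    def fit(self, X, y):
        self.X = np.asarray(X, dtype=float)          # shape (n, d)
        y = np.asarray(y, dtype=float)
        R = self._corr(self.X, self.X) + self.nugget * np.eye(len(y))
        ones = np.ones(len(y))
        # Generalized least-squares estimate of the constant trend
        self.mu = (ones @ np.linalg.solve(R, y)) / (ones @ np.linalg.solve(R, ones))
        self.w = np.linalg.solve(R, y - self.mu)
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        return self.mu + self._corr(X, self.X) @ self.w


class CoKriging:
    """Two-level sketch: s(x) = rho * s_c(x) + s_d(x)."""

    def __init__(self, theta_c=1.0, theta_d=1.0):
        self.sc, self.sd = Kriging(theta_c), Kriging(theta_d)

    def fit(self, Xc, yc, Xf, yf):
        self.sc.fit(Xc, yc)                           # low-fidelity model
        yc_at_f = self.sc.predict(Xf)                 # Rc(XBf) ~ sKRc(XBf)
        yf = np.asarray(yf, dtype=float)
        # Least-squares scaling, a stand-in for the MLE estimate of rho
        self.rho = float(yc_at_f @ yf / (yc_at_f @ yc_at_f))
        self.sd.fit(Xf, yf - self.rho * yc_at_f)      # residual model
        return self

    def predict(self, X):
        return self.rho * self.sc.predict(X) + self.sd.predict(X)

# Toy 1-D example: many biased cheap samples, few accurate ones
Xc = np.linspace(0.0, 3.0, 21).reshape(-1, 1)
yc = 0.9 * np.sin(Xc[:, 0]) + 0.1
Xf = np.array([[0.2], [0.9], [1.6], [2.3], [2.9]])
yf = np.sin(Xf[:, 0])
model = CoKriging(theta_c=10.0, theta_d=1.0).fit(Xc, yc, Xf, yf)
```

Because the residual model interpolates its data, the co-kriging prediction reproduces the high-fidelity samples (up to the small nugget), while the low-fidelity model supplies the overall trend between them.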

Parameter space pre-screening. construction of the initial surrogate

In the proposed methodology, the search begins by generating a set of random parameter vectors evaluated with the low-resolution EM model. The eligible samples, i.e., those from which response features can be extracted, are employed to build the initial surrogate model. Because this model operates in the response feature space, the cost function is regularized, which significantly reduces the number of data points required to set up a dependable metamodel.

The metamodel s(0)(x) is built to predict the coordinates of the feature points, i.e., their frequencies and levels, so that s(0)(x) ≈ fP(x) = [ff(x)T fL(x)T]T.

The model s(0) is identified using kriging interpolation89 (cf. Section "Kriging interpolation. Co-Kriging surrogates"). The training dataset consists of the vectors xBc(j), j = 1, …, Ninit, and the corresponding feature vectors fP(xBc(j)), extracted from low-fidelity EM simulation data. The data points are generated sequentially, and only the points with extractable characteristic points (cf. Fig. 4) are included. The number Ninit is set to guarantee sufficient reliability of the metamodel: the acceptance threshold Emax for the relative RMS error73 is a user-defined parameter. Figure 5 summarizes the procedure for generating the training data points. In practice, Ninit is between 50 and 200, depending on the problem complexity. The actual number of random trials (i.e., low-fidelity EM model simulations) required to produce Ninit acceptable samples is two to three times larger than Ninit.
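The sequential pre-screening loop can be sketched as follows. All callables (`simulate_lf`, `extract`, `fit_error`) are hypothetical placeholders for the low-fidelity EM solver, the feature extractor, and the surrogate validation step; the relative RMS error is assumed to be ||y − ŷ|| / ||y||, and the minimum sample count and trial cap are illustrative assumptions.

```python
import numpy as np

def relative_rms_error(y_true, y_pred):
    """Assumed definition: ||y - y_hat|| / ||y||."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.linalg.norm(y_true - y_pred) / np.linalg.norm(y_true))

def prescreen(lower, upper, simulate_lf, extract, fit_error,
              n_min=10, e_max=0.02, n_max=400, rng=None):
    """Sequential random sampling until the surrogate error drops below e_max."""
    rng = np.random.default_rng(rng)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    X, F, trials = [], [], 0
    while trials < n_max:                         # cap on low-fidelity simulations
        x = lower + rng.random(lower.size) * (upper - lower)
        trials += 1
        feat = extract(simulate_lf(x))
        if feat is None:                          # no extractable features: discard
            continue
        X.append(x)
        F.append(np.asarray(feat, dtype=float))
        # Validate the surrogate only once a minimum dataset is available
        if len(X) >= n_min and fit_error(np.array(X), np.array(F)) <= e_max:
            break
    return np.array(X), np.array(F), trials

# Toy usage: "features" exist only when the first parameter is >= 0.3
X, F, trials = prescreen(
    [0.0, 0.0], [1.0, 1.0],
    simulate_lf=lambda x: x,
    extract=lambda r: r if r[0] >= 0.3 else None,
    fit_error=lambda X, F: 0.0 if len(X) >= 20 else 1.0,
    rng=1,
)
```

The ratio trials / len(X) corresponds to the overhead discussed above, where two to three random trials are typically needed per accepted sample.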

Generating infill points using PSO. Co-Kriging surrogate

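As discussed in Section "Parameter space pre-screening. construction of the initial surrogate", the initial metamodel s(0) is identified using Ninit low-resolution data samples obtained through parameter space pre-screening. In the core stage of the search procedure, the metamodel is refined using high-fidelity samples xf(i), i = 1, 2, …, obtained by solving (4), in which merit function (2) is evaluated using the feature coordinates predicted by the current surrogate:

$$\mathbf{x}_f^{(i+1)} = \arg\min_{\mathbf{x}} U_F\big(\mathbf{x}; \mathbf{s}^{(i)}\big), \quad i = 0, 1, \ldots \qquad (4)$$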

where s(j), j = 1, 2, …, are co-kriging surrogates (cf. Section "Kriging interpolation. Co-Kriging surrogates") constructed using the low-resolution dataset {xBc(j), fP(xBc(j))}, j = 1, …, Ninit, as well as the high-fidelity dataset consisting of the high-fidelity samples acquired until the ith iteration, {xf(j), fP(xf(j))}, j = 1, …, i.

The current metamodel s(i) acts as a predictor, which is optimized to yield the subsequent iteration point. The solution to (4) is found in a global sense using a particle swarm optimizer (PSO)91. Given a fast metamodel, the choice of the search routine is of minor significance, and the optimization can be executed with a relatively large computational budget. Formulating the problem using response features facilitates the task even further: the regularization term in objective function (2) promotes a nearly monotonic behaviour with respect to the gap between the current and requested center frequencies of the antenna being designed. In machine learning terms, generating infill points as in (4) amounts to using the predicted improvement of the objective function as the infill criterion92.
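A minimal constriction-factor PSO illustrates the kind of search routine used to solve (4). It assumes the Clerc-Kennedy form with χ = 0.7298 and c1 = c2 = 2.05 (the usual pairing for this χ); the swarm size, iteration count, box handling by clipping, and the name `pso_minimize` are illustrative assumptions rather than the settings of Table 6. In the framework, `fun` would evaluate merit function (2) on feature coordinates predicted by the current surrogate; a quadratic test function stands in for it here.

```python
import numpy as np

def pso_minimize(fun, lower, upper, n_particles=30, n_iter=200,
                 chi=0.7298, c1=2.05, c2=2.05, seed=None):
    """Global-best PSO with Clerc-Kennedy constriction inside box bounds."""
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(lower, float), np.asarray(upper, float)
    d = lo.size
    x = lo + rng.random((n_particles, d)) * (hi - lo)   # random initial swarm
    v = np.zeros_like(x)
    p = x.copy()                                        # personal bests
    p_val = np.array([fun(xi) for xi in x])
    g = p[np.argmin(p_val)].copy()                      # global best
    g_val = float(p_val.min())
    for _ in range(n_iter):
        r1, r2 = rng.random((2, n_particles, d))
        v = chi * (v + c1 * r1 * (p - x) + c2 * r2 * (g - x))
        x = np.clip(x + v, lo, hi)                      # keep particles in the box
        val = np.array([fun(xi) for xi in x])
        better = val < p_val
        p[better], p_val[better] = x[better], val[better]
        if p_val.min() < g_val:
            g_val = float(p_val.min())
            g = p[np.argmin(p_val)].copy()
    return g, g_val

# Stand-in for the surrogate-predicted merit: minimum at x = [0.3, 0.3, 0.3]
g, g_val = pso_minimize(lambda z: float(np.sum((z - 0.3) ** 2)),
                        [-1.0, -1.0, -1.0], [1.0, 1.0, 1.0], seed=0)
```

Since each call to `fun` costs only a surrogate evaluation, generous swarm sizes and iteration counts are affordable, which is why the choice of metaheuristic matters little at this stage.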

Since the proposed optimization framework is intended as a global search engine, it is essential that the infill points be generated globally. In fact, PSO might be replaced by any other bio-inspired algorithm, because the global optimization stage is carried out at the level of the fast surrogate model. Consequently, most metaheuristic algorithms would perform similarly, as there is no practical limit on the computational budget when solving sub-problem (4).

The search process is terminated if the distance between subsequent iteration points becomes sufficiently small (i.e., ||x(i+1) – x(i)|| < ε), or if there has been no improvement of the cost function over the last Nno_improve iterations, whichever occurs first. Both conditions essentially control the resolution of the optimization process. In our verification experiments (cf. Section "Verification and benchmarking"), the termination parameters are set to ε = 10⁻² and Nno_improve = 10. Note that ε = 10⁻² corresponds to a precise allocation of the optimum: for antenna dimensions expressed in millimeters, 0.01 mm is at the level of the fabrication capabilities of standard manufacturing procedures.
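The two stopping rules can be sketched as a small check over the history of iteration points and cost values. The function name `should_stop` is hypothetical, and reading "no improvement over the last Nno_improve iterations" as "the best value among the last Nno_improve iterations is not better than the best value before them" is an assumption.

```python
import numpy as np

def should_stop(x_hist, f_hist, eps=1e-2, n_no_improve=10):
    """Stop on a small step between iterates or a stalled cost function."""
    if len(x_hist) >= 2:
        step = np.linalg.norm(np.asarray(x_hist[-1], float) - np.asarray(x_hist[-2], float))
        if step < eps:                          # ||x(i+1) - x(i)|| < eps
            return True
    if len(f_hist) > n_no_improve:
        best_before = min(f_hist[:-n_no_improve])
        if min(f_hist[-n_no_improve:]) >= best_before:
            return True                         # no improvement in the last window
    return False

small_step = should_stop([[0.0, 0.0], [0.0, 0.005]], [1.0, 0.9])
progressing = should_stop([[0.0, 0.0], [1.0, 1.0]], [1.0, 0.5])
stalled = should_stop([[float(i), 0.0] for i in range(11)], [0.0] + [1.0] * 10)
```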

Optimization framework

The global optimization framework proposed in this study is summarized in this section. Table 3 gathers its control parameters, of which there are only three. Two of them are related to the termination condition and have already been discussed in Section "Generating infill points using PSO. Co-Kriging surrogate". The parameter Emax is the acceptance threshold for the relative RMS error of the initial surrogate. Here, it is set to two percent; however, this value is not critical. Any value below ten percent suffices, because the surrogate is rendered using response features, which results in a relatively regular functional landscape, unlike the more intricate landscape of complete antenna characteristics. Hence, the algorithm setup is straightforward. To emphasize this aspect, identical values of the control parameters are used for all demonstration case studies investigated in Section "Verification and benchmarking".

A summary of the operating steps of the procedure can be found in Fig. 6. Using the input data, as specified in Step 1, the pre-screening stage is executed as discussed in Section "Parameter space pre-screening. construction of the initial surrogate" (Step 2). The initial surrogate model constructed in Step 3 is used as a predictor for generating the first high-fidelity infill point (Step 5). The metamodel is updated (Steps 6 and 8), and the entire infill process is continued until convergence. As a supplementary explanation, Fig. 7 provides the flow diagram of the presented algorithm.

The complete algorithm including all its components (pre-screening, CST-Matlab socket, PSO algorithm, etc.) has been implemented in Matlab98. The kriging and co-kriging models were implemented using Matlab-based SUMO toolbox developed at Ghent University, Belgium99.

Verification and benchmarking

The effectiveness of the algorithm detailed in Section "Global antenna optimization by response features and variable-resolution simulation models" is validated using three distinct multi-band antennas. We aim to gauge how the integration of variable-resolution models and response features influences the algorithm's global search capabilities and computational efficiency. Our benchmarks encompass multiple-start local search, direct EM-driven nature-inspired optimization (using PSO), and two machine learning algorithms: one operating directly with complete antenna responses, the other being a feature-based framework involving the high-resolution computational model. This comparative approach enables us to confirm the multimodal nature of the design tasks and verify the impact and relevance of the mechanisms employed (response features and variable-fidelity simulations). The remainder of this section is organized as follows: the verification cases are highlighted in Section "Verification antennas", Section "Setup and results" discusses the setup of the numerical experiments along with the results, and finally, Section "Discussion" offers an analysis of the results and the algorithm's performance.

It should be emphasized that experimental validation of the antenna structures produced by the proposed and the benchmark algorithms is outside the scope of this study and will not be considered. The reason is that the ultimate antenna representation is the computational model implemented and evaluated using a full-wave EM solver (here, CST Microwave Studio). Experimental validation of antennas generated using any optimization procedure would be equivalent to validating the accuracy of the simulation software rather than the optimization procedures. Interested readers may find experimental results in the source works (e.g.,80,93,94).

Verification antennas

The proposed algorithm's validation centers around the following three microstrip antenna structures:

- A dual-band uniplanar coplanar-waveguide (CPW)-fed dipole antenna93 (Antenna I);
- A triple-band uniplanar CPW-fed dipole80 (Antenna II);
- A triple-band patch antenna with a defected ground94 (Antenna III).

The antenna geometries are shown in Figs. 8, 9, 10, which also provide the essential parameters (design variables, material parameters of the substrate). All EM simulation models are implemented and evaluated in CST Microwave Studio95 using its time-domain solver. All computations have been performed on a micro-server machine with two 2.2 GHz Intel Xeon processors with 20 computing cores (40 logical processors) and 64 GB RAM. To obtain the low-resolution models, the discretization density of the structures is decreased compared to their original high-fidelity representations. Details on the typical mesh density and simulation times are available in Table 4.

Antenna I93: (a) geometry, (b) essential parameters.

Antenna II80: (a) geometry, (b) essential parameters.

Antenna III94: (a) geometry, the light-shade grey denotes a ground-plane slot, (b) essential parameters.

The objective is to align the antenna resonant frequencies with specified targets and enhance impedance matching at those frequencies, as described in Section "Design problem formulation". For more details, refer to Table 5, which offers insights into the parameter spaces. These spaces are notably extensive, particularly regarding parameter ranges. On average, the upper-to-lower bound ratio stands at 4.2, 8.4, and 2.6 for Antennas I, II, and III, respectively.

Setup and results

To enable verification, Antennas I through III were optimized by means of the proposed algorithm, set up as indicated in Table 3 (Emax = 2%, ε = 10⁻², and Nno_improve = 10). Table 6 summarizes the benchmark algorithms utilized in the comparative experiments. These include PSO (as a popular metaheuristic procedure), multiple-start gradient search (utilized to underscore the necessity of global search for the outlined test cases), and two machine learning algorithms. The first one is a surrogate-assisted kriging-based procedure working with complete antenna responses and utilizing the predicted objective function improvement as the infill criterion. The second is essentially the feature-based algorithm of Section "Global antenna optimization by response features and variable-resolution simulation models" but using a single EM simulation model (here, Rf(x)) at all design optimization stages. These algorithms are used to demonstrate the relevance of incorporating response features and variable-resolution models. The control parameter Emax was set to 10% for Algorithm III because constructing a reliable metamodel of antenna frequency characteristics is considerably more challenging than representing feature point coordinates. Also, in this case, a maximum computational budget of 400 initial samples was assumed (in case the Emax threshold cannot be reached).

Each algorithm is executed ten times, and statistics of the results are reported; this is necessary due to the stochastic nature of the search process. The numerical findings are summarized in Tables 7, 8, and 9. The considered performance figures include the design quality, measured using the average objective function value; the computational cost, expressed in terms of the number of equivalent high-fidelity EM simulations; and the success rate, i.e., the number of algorithm runs (out of ten) in which the antenna resonances were allocated at the intended targets.
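A plausible way to compute the equivalent high-fidelity cost, assumed here rather than taken from the paper, is to weight each low-fidelity run by the ratio of low- to high-fidelity simulation times. The times in the example (25 s and 90 s) are the illustrative values quoted for the exemplary antenna of Fig. 3, not the per-antenna values of Table 4, and the sample counts are hypothetical, as are the function names.

```python
def equivalent_hf_cost(n_lf, n_hf, t_lf, t_hf):
    """High-fidelity runs plus low-fidelity runs scaled by the time ratio."""
    return n_hf + n_lf * t_lf / t_hf

def success_rate(flags):
    """Number of runs (e.g., out of ten) that hit the target frequencies."""
    return sum(bool(f) for f in flags)

# Hypothetical run: 200 low-fidelity (25 s) and 40 high-fidelity (90 s) simulations
cost = equivalent_hf_cost(200, 40, 25.0, 90.0)   # 40 + 200 * 25 / 90
```

Under this accounting, cheaper low-fidelity pre-screening only pays off when the time ratio is small enough that the many coarse simulations cost less than the high-fidelity samples they replace.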

Additionally, Figs. 11, 12, 13 showcase the reflection characteristics of Antennas I through III. These figures highlight the designs generated by the proposed framework during specific algorithm runs and demonstrate the evolution of the merit function over the iteration index. Moreover, Fig. 14 depicts the optimization trajectory in the feature space of antenna operating frequencies, emphasizing the same selected runs as those illustrated in Figs. 11, 12, 13. It is worth noting that the specific choice of the infill criterion (minimization of the predicted objective function) results in an increased density of infill points around the optimal design.

Reflection responses of Antenna I at the designs obtained using the proposed global optimization algorithm (top) and evolution of the objective function value (bottom), shown for selected algorithm runs: (a) run 1, (b) run 2. The iteration counter starts after constructing the initial surrogate model. Vertical lines mark the target operating frequencies, here 2.45 GHz and 5.3 GHz.

Reflection responses of Antenna II at the designs obtained using the proposed global optimization algorithm (top) and evolution of the objective function value (bottom), shown for selected algorithm runs: (a) run 1, (b) run 2. The iteration counter starts after constructing the initial surrogate model. Vertical lines mark the target operating frequencies, here 2.45 GHz, 3.6 GHz, and 5.3 GHz.

Fig. 13. Reflection responses of Antenna III at the designs obtained using the proposed global optimization algorithm (top) and evolution of the objective function value (bottom), shown for selected algorithm runs: (a) run 1, (b) run 2. The iteration counter starts after constructing the initial surrogate model. Vertical lines mark the target operating frequencies, here 3.5 GHz, 5.8 GHz, and 7.5 GHz.

Fig. 14. Optimization history in the feature space for: (a) Antenna I, (b) Antenna II, (c) Antenna III. Shown are the two selected runs of the proposed algorithm presented in Figs. 11, 12, and 13 for Antennas I through III, respectively. Black dots represent the initial (low-fidelity) samples, and blue dots represent the infill (high-fidelity) samples. The line segments illustrate the optimization path. The final solution is marked with a large circle. Panels (b) and (c) are two-dimensional projections of the three-dimensional feature space. The frequency ranges were narrowed to show the details of the optimization history in the vicinity of the final solution.

Analysis of the optimization history in the feature space for all considered antennas shows that most of the improvement in the allocation of the operating frequencies occurs during the first few iterations. Afterwards, the frequencies change only slightly, meaning that they are fine-tuned in the final part of the procedure. This is corroborated by the bottom panels of Figs. 11, 12, and 13. There, the objective function value decreases rapidly over the first few iterations, whereas its reduction during the final steps of the optimization procedure is considerably smaller.
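This pattern (rapid initial progress, then fine-tuning, with infill points clustering around the optimum) is typical of an exploitation-only infill rule. The minimal sketch below illustrates that rule on a one-dimensional toy problem. At each iteration it refits a surrogate to all data, takes the minimizer of the surrogate's prediction as the next infill point, and evaluates the expensive model there. It stops when the design stops moving or an iteration limit is reached. This is not the authors' implementation. The quadratic least-squares fit stands in for co-kriging, and the grid search stands in for PSO. The function name and the termination parameters `max_iter` and `tol_x` are hypothetical.

```python
import numpy as np


def infill_optimize(f_high, x_init, lb, ub, max_iter=20, tol_x=1e-3):
    """Minimize f_high on [lb, ub] by repeatedly minimizing a surrogate prediction.

    Stand-ins (assumptions, not the paper's components): a quadratic least-squares
    fit replaces the co-kriging surrogate, and a dense grid search replaces PSO.
    """
    X = [float(x) for x in x_init]
    Y = [f_high(x) for x in X]
    grid = np.linspace(lb, ub, 2001)
    x_prev = X[int(np.argmin(Y))]
    history = [min(Y)]  # best objective value found so far
    for _ in range(max_iter):
        coeffs = np.polyfit(X, Y, deg=2)  # refit the surrogate to all samples
        # Infill criterion: the minimizer of the predicted objective.
        x_new = float(grid[np.argmin(np.polyval(coeffs, grid))])
        X.append(x_new)
        Y.append(f_high(x_new))  # one "high-fidelity" evaluation per iteration
        history.append(min(Y))
        if abs(x_new - x_prev) < tol_x:  # design no longer moves: stop
            break
        x_prev = x_new
    best = int(np.argmin(Y))
    return X[best], Y[best], history


# Usage on a toy 1-D function standing in for the EM-based objective:
x_best, y_best, history = infill_optimize(lambda x: (x - 1.3) ** 2 + 0.5,
                                          x_init=[0.0, 1.0, 2.5], lb=0.0, ub=3.0)
```

All infill points land where the surrogate predicts the optimum. For this reason the samples accumulate near the final design rather than spreading over the whole domain.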

In our work, we use a standard setup of the PSO control parameters, although numerous strategies for selecting their values have been analyzed100,101. We adopt a common approach in which the constriction coefficient χ equals 0.7298 (see Table 6). For this value of χ, it is recommended that the acceleration coefficients sum to ϕ = c1 + c2 = 4.1. Thus, we use c1 = c2 = 2.05. Fine-tuning these parameters is not critical here, because PSO is applied to a fast surrogate model rather than to the EM model. The optimization can therefore be run as long as needed to ensure sufficient solution accuracy.
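The stated parameter values are linked by the Clerc-Kennedy constriction formula, χ = 2/|2 − ϕ − √(ϕ² − 4ϕ)| with ϕ = c1 + c2 > 4. For ϕ = 4.1 it yields χ ≈ 0.7298. The sketch below evaluates this formula and uses the result in a minimal global-best PSO with constriction. It is a generic textbook variant given for illustration only. The swarm size, iteration count, velocity clamping, and function names are assumptions rather than the settings of Table 6.

```python
import math
import random


def constriction_coefficient(phi: float) -> float:
    """Clerc-Kennedy constriction factor chi, defined for phi = c1 + c2 > 4."""
    if phi <= 4.0:
        raise ValueError("phi = c1 + c2 must exceed 4")
    return 2.0 / abs(2.0 - phi - math.sqrt(phi * phi - 4.0 * phi))


def pso_minimize(f, lb, ub, n_particles=20, n_iter=200, c1=2.05, c2=2.05, seed=1):
    """Global-best PSO with constriction; returns (best position, best value)."""
    rng = random.Random(seed)
    chi = constriction_coefficient(c1 + c2)  # about 0.7298 for c1 = c2 = 2.05
    dim = len(lb)
    vmax = [u - l for l, u in zip(lb, ub)]   # clamp velocities to the domain width
    x = [[rng.uniform(l, u) for l, u in zip(lb, ub)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    p = [xi[:] for xi in x]                  # personal best positions
    fp = [f(xi) for xi in x]                 # personal best values
    i_best = min(range(n_particles), key=lambda i: fp[i])
    g, fg = p[i_best][:], fp[i_best]         # global best position and value
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = chi * (v[i][d]
                                 + c1 * r1 * (p[i][d] - x[i][d])
                                 + c2 * r2 * (g[d] - x[i][d]))
                v[i][d] = max(-vmax[d], min(vmax[d], v[i][d]))
                x[i][d] = max(lb[d], min(ub[d], x[i][d] + v[i][d]))  # stay in bounds
            fi = f(x[i])
            if fi < fp[i]:
                p[i], fp[i] = x[i][:], fi
                if fi < fg:
                    g, fg = x[i][:], fi
    return g, fg


# Usage: minimize a cheap test function (a stand-in for the surrogate model).
g, fg = pso_minimize(lambda z: sum(t * t for t in z), lb=[-5.0, -5.0], ub=[5.0, 5.0])
```

In the proposed framework, PSO operates on the fast surrogate. A generous iteration budget therefore adds essentially no EM simulation cost.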

Discussion

The performance of the proposed optimization framework is assessed based on the results reported in Section "Setup and results". The assessment includes a comparison with the benchmark techniques (Algorithms I through IV from Table 6) in terms of the reliability of the optimization process, design quality, and computational efficiency. These aspects are discussed below.

Design reliability: Reliability is measured by the success rate (last column of Tables 7, 8, and 9), i.e., the number of runs (out of ten) in which the algorithm allocated the antenna resonances at the intended targets. In this sense, the proposed algorithm exhibits a perfect success rate, similarly to the other two machine-learning-based procedures (Algorithms III and IV). The remaining methods are considerably worse. In the case of Algorithm II (gradient search), this indicates that the considered design tasks are multimodal. For PSO, the results demonstrate that nature-inspired optimization requires a considerably higher computational budget, which is especially visible in the improvement from the 50-iteration to the 100-iteration version. Furthermore, Algorithm III performs worse for Antenna III, the most complex task, because building a dependable surrogate of the complete antenna characteristics is much more challenging in this case.

Design quality: Design quality is assessed using the mean value of the objective function at the final design. This value is comparable for all algorithms. Differences of one to three decibels are of minor importance given the shape of the reflection responses. The seemingly worse values obtained by Algorithms I and II result from averaging over all runs, including the unsuccessful ones.

Computational efficiency: The data in Tables 7, 8, and 9 demonstrate that, among the global search algorithms considered, the optimization cost is by far the lowest for our procedure. The average cost, expressed as the equivalent number of high-fidelity EM simulations, is only 65, 121, and 90 for Antennas I, II, and III, respectively. Algorithm IV differs from the proposed method only in using high-fidelity models exclusively. The savings with respect to Algorithm IV amount to 30, 52, and 74 percent, respectively. This improvement is attributed to the incorporation of variable-resolution EM simulations: most antenna simulations occur in the first stage of the search procedure, i.e., during parameter space pre-screening and initial surrogate model construction, which rely on the low-fidelity model. Moreover, the computational benefits increase with problem complexity. Compared to Algorithm II (gradient search), the cost of the proposed approach is remarkably close to that of local optimization, which is a significant achievement. Overall, in terms of computational efficiency, our technique outperforms the representative methods from the literature used here as benchmarks. Expediting optimization processes is clearly advantageous for shortening design cycles. It also helps advance the state of the art in antenna design automation and in other areas where CPU-intensive simulation models are ubiquitous.
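Two pieces of arithmetic underlie the figures quoted above. The first is the mean of the three per-antenna costs, which matches the roughly ninety high-fidelity simulations stated in the abstract and the conclusion. The second is the conversion between a relative saving s and the corresponding speedup factor 1/(1 − s). The helper names below are illustrative only.

```python
from statistics import mean

# Average cost in equivalent high-fidelity EM simulations, as reported in the text.
reported_cost = {"Antenna I": 65, "Antenna II": 121, "Antenna III": 90}
# Reported savings of the proposed method relative to Algorithm IV.
reported_savings = {"Antenna I": 0.30, "Antenna II": 0.52, "Antenna III": 0.74}


def relative_savings(cost: float, reference_cost: float) -> float:
    """Fraction of the reference cost that is saved: s = 1 - cost / reference_cost."""
    return 1.0 - cost / reference_cost


def speedup_from_savings(s: float) -> float:
    """Speedup factor reference_cost / cost implied by a saving s."""
    return 1.0 / (1.0 - s)


average_cost = mean(reported_cost.values())  # (65 + 121 + 90) / 3 = 92
speedups = {k: round(speedup_from_savings(s), 2) for k, s in reported_savings.items()}
```

A 74 percent saving thus corresponds to the proposed method running about 3.85 times faster than its single-fidelity counterpart.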

A separate note concerns response features. In the first stage of the optimization process, the proposed algorithm required only 77, 230, and 259 random samples, and it reached the assumed modeling error Emax in all cases. In contrast, Algorithm III, which works with complete antenna responses, was unable to reach the required accuracy. Its surrogate was therefore established using 400 data samples (the allowed computational budget). Leveraging response features thus substantially reduces the computational cost of this stage, which is another advantage of the presented approach.
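Response features replace the full reflection characteristic with a handful of characteristic points, such as the resonant frequencies of a multi-band antenna. As argued in the text, modeling these points rather than the complete response is what reduces the number of samples required. The sketch below shows one simple way to extract such features from a sampled |S11| response. It assumes a uniform frequency grid and a −10 dB acceptance level. The function name and the threshold are assumptions, and the feature definitions used in the paper may differ.

```python
import numpy as np


def resonant_frequencies(freq, s11_db, level_db=-10.0):
    """Return frequencies of local |S11| minima lying below level_db.

    Each minimum is refined by fitting a parabola through the three samples
    around it. Assumes a uniformly spaced frequency grid.
    """
    f = np.asarray(freq, dtype=float)
    s = np.asarray(s11_db, dtype=float)
    inner = s[1:-1]
    is_min = (inner < s[:-2]) & (inner <= s[2:]) & (inner < level_db)
    step = f[1] - f[0]
    result = []
    for k in np.flatnonzero(is_min) + 1:
        y0, y1, y2 = s[k - 1], s[k], s[k + 1]
        curvature = y0 - 2.0 * y1 + y2
        # Vertex of the parabola through the three samples, in grid steps.
        offset = 0.5 * (y0 - y2) / curvature if curvature != 0 else 0.0
        result.append(float(f[k] + offset * step))
    return result


# Synthetic dual-band response with dips at 2.45 and 5.3 GHz (illustrative only):
freq = np.linspace(1.0, 7.0, 601)
s11 = (-2.0 - 23.0 * np.exp(-((freq - 2.45) / 0.1) ** 2)
       - 18.0 * np.exp(-((freq - 5.3) / 0.15) ** 2))
features = resonant_frequencies(freq, s11)
```

A surrogate then maps the geometry parameters to these few numbers instead of to the entire frequency response.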

The performance assessment presented in the preceding paragraphs demonstrates that the proposed algorithm may be a viable alternative to existing global search techniques, at least for multi-band antenna design. Similar performance may be expected for other types of problems, provided they can be reformulated using response features. The three most important advantages of our methodology are design reliability, computational efficiency, and a straightforward setup: the algorithm has only three control parameters, two of which concern the termination criteria.

Conclusion

This article presented an algorithmic framework for cost-efficient global optimization of multi-band antennas. Operating within a machine-learning paradigm, the method works at the level of the antenna's characteristic points and employs variable-resolution electromagnetic simulations. Using response features regularizes the objective function landscape and reduces the amount of data needed for surrogate model construction. Variable-resolution simulations reduce the cost of parameter space exploration, with the low-fidelity model used primarily in the initial stage. The simplification achieved through response features facilitates an infill strategy focused on parameter space exploitation (minimization of the predicted objective function). Extensive numerical validation on three microstrip antennas consistently demonstrates the method's competitive performance, with satisfactory results obtained in every one of the multiple algorithm runs. Benchmarking against leading methods confirms the relevance and benefits of the incorporated algorithmic techniques. The average computational cost of the search process is approximately ninety high-fidelity EM evaluations of the antenna at hand. The technique is therefore a promising, computationally efficient alternative to existing global search algorithms. Future work will extend the method to other antenna responses, such as axial ratio, gain, or bandwidth, and will investigate its scalability to higher-dimensional problems. Finally, applications in other engineering fields (e.g., aerospace) will be considered. These are, however, contingent upon an appropriate definition of field- and problem-dependent response features (the latter being an inherent part of design problem formulation).

Data availability

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Yuan, X.-T., Chen, Z., Gu, T. & Yuan, T. A wideband PIFA-pair-based MIMO antenna for 5G smartphones. IEEE Ant Wireless Propag. Lett. 20(3), 371–375 (2021).

Sun, L., Li, Y. & Zhang, Z. Wideband decoupling of integrated slot antenna pairs for 5G smartphones. IEEE Trans Ant. Prop. 69(4), 2386–2391 (2021).

Lin, X. et al. Ultrawideband textile antenna for wearable microwave medical imaging applications. IEEE Trans Ant. Prop. 68(6), 4238–4249 (2020).

Wang, Y., Zhang, J., Peng, F. & Wu, S. A glasses frame antenna for the applications in internet of things. IEEE Internet of Things J. 6(5), 8911–8918 (2019).

Kapusuz, K. Y., Berghe, A. V., Lemey, S. & Rogier, H. Partially filled half-mode substrate integrated waveguide leaky-wave antenna for 24 GHz automotive radar. IEEE Ant. Wireless Propag. Lett. 20(1), 33–37 (2021).

Cheng, T., Jiang, W., Gong, S. & Yu, Y. Broadband SIW cavity-backed modified dumbbell-shaped slot antenna. IEEE Ant Wireless Propag. Lett. 18(5), 936–940 (2019).

Ameen, M., Thummaluru, S. R. & Chaudhary, R. K. A compact multilayer triple-band circularly polarized antenna using anisotropic polarization converter. IEEE Ant Wireless Propag. Lett. 20(2), 145–149 (2021).

Ali, M. Z. & Khan, Q. U. High gain backward scanning substrate integrated waveguide leaky wave antenna. IEEE Trans Ant. Prop. 69(1), 562–565 (2021).

Aqlan, B., Himdi, M., Vettikalladi, H. & Le-Coq, L. A circularly polarized sub-terahertz antenna with low-profile and high-gain for 6G wireless communication systems. IEEE Access 9, 122607–122617 (2021).

Wen, Z.-Y., Ban, Y.-L., Yang, Y. & Wen, Q. Risley-prism-based dual-circularly polarized 2-D beam scanning antenna with flat scanning gain. IEEE Ant Wireless Propag. Lett. 20(12), 2412–2416 (2021).

Wong, K., Chang, H., Chen, J. & Wang, K. Three wideband monopolar patch antennas in a Y-shape structure for 5G multi-input–multi-output access points. IEEE Ant Wireless Propag. Lett. 19(3), 393–397 (2020).

Shirazi, M., Li, T., Huang, J. & Gong, X. A reconfigurable dual-polarization slot-ring antenna element with wide bandwidth for array applications. IEEE Trans Ant. Prop. 66(11), 5943–5954 (2018).

Sambandam, P., Kanagasabai, M., Natarajan, R., Alsath, M. G. N. & Palaniswamy, S. Miniaturized button-like WBAN antenna for off-body communication. IEEE Trans Ant. Prop. 68(7), 5228–5235 (2020).

Oh, J.-I., Jo, H.-W., Kim, K.-S., Cho, H. & Yu, J.-W. A compact cavity-backed slot antenna using dual mode for IoT applications. IEEE Ant Wireless Propag. Lett. 20(3), 317–321 (2021).

Sun, H., Hu, Y., Ren, R., Zhao, L. & Li, F. Design of pattern-reconfigurable wearable antennas for body-centric communications. IEEE Ant Wireless Propag. Lett. 19(8), 1385–1389 (2020).

Chen, C. A compact wideband endfire filtering antenna inspired by a uniplanar microstrip antenna. IEEE Ant Wireless Propag. Lett. 21(4), 853–857 (2022).

Gutiérrez, A. R., Reyna, A., Balderas, L. I., Panduro, M. A. & Méndez, A. L. Nonuniform antenna array with nonsymmetric feeding network for 5G applications. IEEE Ant Wireless Propag. Lett. 21(2), 346–350 (2022).

Wu, Y. F., Cheng, Y. J., Zhong, Y. C. & Yang, H. N. Substrate integrated waveguide slot array antenna to generate Bessel beam with high transverse linear polarization purity. IEEE Trans Ant. Propag. 70(1), 750–755 (2022).

Guo, Y. J., Ansari, M., Ziolkowski, R. W. & Fonseca, N. J. G. Quasi-optical multi-beam antenna technologies for B5G and 6G mmWave and THz networks: A review. IEEE Open J. Ant. Propag. 2, 807–830 (2021).

Lee, J., Kim, H. & Oh, J. Large-aperture metamaterial lens antenna for multi-layer MIMO transmission for 6G. IEEE Access 10, 20486–20495 (2022).

Zhang, Y.-X., Jiao, Y.-C. & Zhang, L. Antenna array directivity maximization with sidelobe level constraints using convex optimization. IEEE Trans Ant. Propag. 69(4), 2041–2052 (2021).

Zhang, Z., Chen, H. C. & Cheng, Q. S. Surrogate-assisted quasi-Newton enhanced global optimization of antennas based on a heuristic hypersphere sampling. IEEE Trans Ant. Propag. 69(5), 2993–2998 (2021).

Nocedal, J. & Wright, S. J. Numerical Optimization 2nd edn. (Springer, 2006).

Conn, A. R., Scheinberg, K. & Vicente, L. N. Derivative-Free Optimization (Society for Industrial and Applied Mathematics, Philadelphia, 2009).

Xu, Y. et al. A reinforcement learning-based multi-objective optimization in an interval and dynamic environment. Knowl. Based Syst. 280, 111019 (2023).

Zhu, D. Z., Werner, P. L. & Werner, D. H. Design and optimization of 3-D frequency-selective surfaces based on a multiobjective lazy ant colony optimization algorithm. IEEE Trans. Ant. Propag. 65(12), 7137–7149 (2017).

Du, J. & Roblin, C. Stochastic surrogate models of deformable antennas based on vector spherical harmonics and polynomial chaos expansions: Application to textile antennas. IEEE Trans. Ant. Prop. 66(7), 3610–3622 (2018).

Pietrenko-Dabrowska, A., Koziel, S. & Al-Hasan, M. Expedited yield optimization of narrow- and multi-band antennas using performance-driven surrogates. IEEE Access 8, 143104–143113 (2020).

Liang, S. et al. Sidelobe reductions of antenna arrays via an improved chicken swarm optimization approach. IEEE Access 8, 37664–37683 (2020).

Genovesi, S., Mittra, R., Monorchio, A. & Manara, G. Particle swarm optimization for the design of frequency selective surfaces. IEEE Ant Wireless Propag. Lett. 5, 277–279 (2006).

Blankrot, B. & Heitzinger, C. Efficient computational design and optimization of dielectric metamaterial structures. IEEE J. Multiscale Multiphys. Comp. Techn. 4, 234–244 (2019).

Hu, W., Yin, Y., Yang, X. & Fei, P. Compact multiresonator-loaded planar antenna for multiband operation. IEEE Trans Ant. Propag. 61(5), 2838–2841 (2013).

Qian, B. et al. Surrogate-assisted defected ground structure design for reducing mutual coupling in 2 × 2 microstrip antenna array. IEEE Ant Wireless Propag. Lett. 21(2), 351–355 (2022).

Ding, Z., Jin, R., Geng, J., Zhu, W. & Liang, X. Varactor loaded pattern reconfigurable patch antenna with shorting pins. IEEE Trans Ant. Propag. 67(10), 6267–6277 (2019).

Koziel, S. & Pietrenko-Dabrowska, A. Reliable EM-driven size reduction of antenna structures by means of adaptive penalty factors. IEEE Trans. Ant. Propag. 70(2), 1389–1401 (2021).

Koziel, S. & Pietrenko-Dabrowska, A. Expedited acquisition of database designs for reduced-cost performance-driven modeling and rapid dimension scaling of antenna structures. IEEE Trans. Ant. Prop. 69(8), 4975–4987 (2021).

Li, W., Zhang, Y. & Shi, X. Advanced fruit fly optimization algorithm and its application to irregular subarray phased array antenna synthesis. IEEE Access 7, 165583–165596 (2019).

Jia, X. & Lu, G. A hybrid Taguchi binary particle swarm optimization for antenna designs. IEEE Ant Wireless Propag. Lett. 18(8), 1581–1585 (2019).

Lv, Z., Wang, L., Han, Z., Zhao, J. & Wang, W. Surrogate-assisted particle swarm optimization algorithm with Pareto active learning for expensive multi-objective optimization. IEEE/CAA J. Autom. Sin. 6(3), 838–849 (2019).

Choi, K. et al. Hybrid algorithm combing genetic algorithm with evolution strategy for antenna design. IEEE Trans. Magn. 52(3), 1–4 (2016).

Bora, T. C., Lebensztajn, L. & Coelho, L. D. S. Non-dominated sorting genetic algorithm based on reinforcement learning to optimization of broad-band reflector antennas satellite. IEEE Trans Magn. 48(2), 767–770 (2012).

John, M. & Ammann, M. J. Antenna optimization with a computationally efficient multiobjective evolutionary algorithm. IEEE Trans. Ant. Propag. 57(1), 260–263 (2009).

Ding, D. & Wang, G. Modified multiobjective evolutionary algorithm based on decomposition for antenna design. IEEE Trans. Ant. Propag. 61(10), 5301–5307 (2013).

Zhang, H., Bai, B., Zheng, J. & Zhou, Y. Optimal design of sparse array for ultrasonic total focusing method by binary particle swarm optimization. IEEE Access 8, 111945–111953 (2020).

Li, H., Jiang, Y., Ding, Y., Tan, J. & Zhou, J. Low-sidelobe pattern synthesis for sparse conformal arrays based on PSO-SOCP optimization. IEEE Access 6, 77429–77439 (2018).

Goldberg, D. E. & Holland, J. H. Genetic algorithms and machine learning (Springer, 1988).

Michalewicz, Z. Genetic algorithms + data structures = evolution programs (Springer, 1996).

Wang, D., Tan, D. & Liu, L. Particle swarm optimization algorithm: An overview. Soft Comput. 22, 387–408 (2018).

Jiang, Z. J., Zhao, S., Chen, Y. & Cui, T. J. Beamforming optimization for time-modulated circular-aperture grid array with DE algorithm. IEEE Ant Wireless Propag. Lett. 17(12), 2434–2438 (2018).

Zhu, D. Z., Werner, P. L. & Werner, D. H. Design and optimization of 3-D frequency-selective surfaces based on a multiobjective lazy ant colony optimization algorithm. IEEE Trans Ant. Propag. 65(12), 7137–7149 (2017).

Baumgartner, P. et al. Multi-objective optimization of Yagi-Uda antenna applying enhanced firefly algorithm with adaptive cost function. IEEE Trans. Magnetics 54(3), 8000504 (2018).

Houssein, E. H. et al. An efficient discrete rat swarm optimizer for global optimization and feature selection in chemoinformatics. Knowl. Based Syst. 272, 110697 (2023).

Li, X. & Luk, K. M. The grey wolf optimizer and its applications in electromagnetics. IEEE Trans. Ant. Prop. 68(3), 2186–2197 (2020).

Bayraktar, Z., Komurcu, M., Bossard, J. A. & Werner, D. H. The wind driven optimization technique and its application in electromagnetics. IEEE Trans. Antennas Propag. 61(5), 2745–2757 (2013).

Al-Azza, A. A., Al-Jodah, A. A. & Harackiewicz, F. J. Spider monkey optimization: A novel technique for antenna optimization. IEEE Antennas Wirel. Propag. Lett. 15, 1016–1019 (2016).

Mostafa, R. R., Gaheen, M. A., El-Aziz, M. A., Al-Betar, M. A. & Ewees, A. A. An improved gorilla troops optimizer for global optimization problems and feature selection. Knowl. Based Syst. 269, 110462 (2023).

Ram, G., Mandal, D., Kar, R. & Ghoshal, S. P. Cat swarm optimization as applied to time-modulated concentric circular antenna array: Analysis and comparison with other stochastic optimization methods. IEEE Trans. Antennas Propag. 63(9), 4180–4183 (2015).

Queipo, N. V. et al. Surrogate-based analysis and optimization. Prog. Aerosp. Sci. 41(1), 1–28 (2005).

Easum, J. A., Nagar, J., Werner, P. L. & Werner, D. H. Efficient multi-objective antenna optimization with tolerance analysis through the use of surrogate models. IEEE Trans. Ant. Prop. 66(12), 6706–6715 (2018).

Liu, B. et al. An efficient method for antenna design optimization based on evolutionary computation and machine learning techniques. IEEE Trans. Ant. Propag. 62(1), 7–18 (2014).

Jones, D. R., Schonlau, M. & Welch, W. J. Efficient global optimization of expensive black-box functions. J. Global Opt. 13, 455–492 (1998).

Chen, C., Liu, J. & Xu, P. Comparison of infill sampling criteria based on Kriging surrogate model. Sci. Rep. 12, 678 (2022).

Couckuyt, I., Declercq, F., Dhaene, T., Rogier, H. & Knockaert, L. Surrogate-based infill optimization applied to electromagnetic problems. Int. J. RF Microw. Comput. Aided Eng. 20(5), 492–501 (2010).

Forrester, A. I. J. & Keane, A. J. Recent advances in surrogate-based optimization. Prog. Aerospace Sci. 45, 50–79 (2009).

Alzahed, A. M., Mikki, S. M. & Antar, Y. M. M. Nonlinear mutual coupling compensation operator design using a novel electromagnetic machine learning paradigm. IEEE Ant. Wireless Prop. Lett. 18(5), 861–865 (2019).

de Villiers, D. I. L., Couckuyt, I. & Dhaene, T. Multi-objective optimization of reflector antennas using kriging and probability of improvement. In Int. Symp. Ant. Prop., pp. 985–986 (San Diego, USA, 2017).

Jacobs, J. P. Characterization by Gaussian processes of finite substrate size effects on gain patterns of microstrip antennas. IET Microwaves Ant. Prop. 10(11), 1189–1195 (2016).

Dong, J., Qin, W. & Wang, M. Fast multi-objective optimization of multi-parameter antenna structures based on improved BPNN surrogate model. IEEE Access 7, 77692–77701 (2019).

Wu, Q., Wang, H. & Hong, W. Multistage collaborative machine learning and its application to antenna modeling and optimization. IEEE Trans. Ant. Propag. 68(5), 3397–3409 (2020).

Hu, C., Zeng, S. & Li, C. A framework of global exploration and local exploitation using surrogates for expensive optimization. Knowl. Based Syst. 280, 1018 (2023).

Xia, B., Ren, Z. & Koh, C. S. Utilizing kriging surrogate models for multi-objective robust optimization of electromagnetic devices. IEEE Trans. Magn. 50(2), 7104 (2014).

Xiao, S. et al. Multi-objective Pareto optimization of electromagnetic devices exploiting kriging with Lipschitzian optimized expected improvement. IEEE Trans Magn. 54(3), 7001704 (2018).

Koziel, S. & Pietrenko-Dabrowska, A. Performance-driven surrogate modeling of high-frequency structures (Springer, 2020).

Koziel, S. & Pietrenko-Dabrowska, A. Performance-based nested surrogate modeling of antenna input characteristics. IEEE Trans. Ant. Prop. 67(5), 2904–2912 (2019).

Liu, B., Koziel, S. & Zhang, Q. A multi-fidelity surrogate-model-assisted evolutionary algorithm for computationally expensive optimization problems. J. Comp. Sci. 12, 28–37 (2016).

Pietrenko-Dabrowska, A. & Koziel, S. Antenna modeling using variable-fidelity EM simulations and constrained co-kriging. IEEE Access 8(1), 91048–91056 (2020).

Koziel, S. & Pietrenko-Dabrowska, A. Expedited feature-based quasi-global optimization of multi-band antennas with Jacobian variability tracking. IEEE Access 8, 83907–83915 (2020).

Pietrenko-Dabrowska, A. & Koziel, S. Generalized formulation of response features for reliable optimization of antenna input characteristics. IEEE Trans. Ant. Propag. 70(5), 3733–3748 (2021).

Koziel, S. & Bandler, J. W. Reliable microwave modeling by means of variable-fidelity response features. IEEE Trans. Microwave Theory Tech. 63(12), 4247–4254 (2015).

Pietrenko-Dabrowska, A. & Koziel, S. Simulation-driven antenna modeling by means of response features and confined domains of reduced dimensionality. IEEE Access 8, 228942–228954 (2020).

Koziel, S. Fast simulation-driven antenna design using response-feature surrogates. Int. J. RF & Micr. CAE 25(5), 394–402 (2015).

Pietrenko-Dabrowska, A., Koziel, S. & Ullah, U. Reduced-cost two-level surrogate antenna modeling using domain confinement and response features. Sci. Rep. 12, 4667 (2022).

Tomasson, J. A., Koziel, S. & Pietrenko-Dabrowska, A. Quasi-global optimization of antenna structures using principal components and affine subspace-spanned surrogates. IEEE Access 8(1), 50078–50084 (2020).

Cervantes-González, J. C. et al. Space mapping optimization of handset antennas considering EM effects of mobile phone components and human body. Int. J. RF Microwave CAE 26(2), 121–128 (2016).

Koziel, S. & Bandler, J. W. A space-mapping approach to microwave device modeling exploiting fuzzy systems. IEEE Trans. Microwave Theory Tech. 55(12), 2539–2547 (2007).

Koziel, S. & Ogurtsov, S. Simulation-based optimization of antenna arrays (World Scientific, 2019).

Pietrenko-Dabrowska, A. & Koziel, S. Surrogate modeling of impedance matching transformers by means of variable-fidelity EM simulations and nested co-kriging. Int. J. RF Microwave CAE 30(8), e22268 (2020).

Kennedy, M. C. & O’Hagan, A. Predicting the output from complex computer code when fast approximations are available. Biometrika 87, 1–13 (2000).

Koziel, S. & Pietrenko-Dabrowska, A. Recent advances in high-frequency modeling by means of domain confinement and nested kriging. IEEE Access 8, 189326–189342 (2020).

Cawley, G. C. & Talbot, N. L. C. On over-fitting in model selection and subsequent selection bias in performance evaluation. J. Mach. Learn. Res. 11, 2079–2107 (2010).

Vinod Chandra, S. S. & Anand, H. S. Nature inspired meta heuristic algorithms for optimization problems. Computing 104, 251–269 (2022).

Liu, J., Han, Z. & Song, W. Comparison of infill sampling criteria in kriging-based aerodynamic optimization. In 28th Int. Congress of the Aeronautical Sciences, pp. 1–10 (Brisbane, Australia, 23–28 Sept. 2012).

Chen, Y.-C., Chen, S.-Y. & Hsu, P. Dual-band slot dipole antenna fed by a coplanar waveguide. In Proc. IEEE Antennas Propag. Soc. Int. Symp., pp. 3589–3592 (2006).

Consul, P. Triple band gap coupled microstrip U-slotted patch antenna using L-slot DGS for wireless applications. In Communication, Control and Intelligent Systems (CCIS), Mathura, India, pp. 31–34 (2015).

CST Microwave Studio, ver. 2021, Dassault Systemes, France (2021).

Conn, A. R., Gould, N. I. M. & Toint, P. L. Trust Region Methods (MPS-SIAM Series on Optimization, 2000).

Akinsolu, M. O., Mistry, K. K., Liu, B., Lazaridis, P. I. & Excell, P. Machine learning-assisted antenna design optimization: A review and the state-of-the-art. In European Conf. Ant. Propag. (Copenhagen, Denmark, 15–20 March 2020).

Matlab, ver. R2022b, The Mathworks Inc. (2022).

Gorissen, D., Crombecq, K., Couckuyt, I., Dhaene, T. & Demeester, P. A surrogate modeling and adaptive sampling toolbox for computer based design. J. Mach. Learn. Res. 11, 2051–2055 (2010).

Zhang, W., Ma, D., Wei, J. & Liang, H. A parameter selection strategy for particle swarm optimization based on particle positions. Expert Syst. Appl. 41, 3576–3584 (2014).

Trelea, I. C. The particle swarm optimization algorithm: Convergence analysis and parameter selection. Inf. Process. Lett. 85(6), 317–325 (2003).

Acknowledgements

The authors would like to thank Dassault Systemes, France, for making CST Microwave Studio available. This work is partially supported by the Icelandic Research Fund Grant 217771 and by the National Science Centre of Poland Grant 2020/37/B/ST7/01448.

Author information


Contributions

Conceptualization, S.K. and A.P.; methodology, S.K. and A.P.; software, S.K. and A.P.; validation, S.K. and A.P.; formal analysis, S.K.; investigation, S.K. and A.P.; resources, S.K.; data curation, S.K. and A.P.; writing—original draft preparation, S.K. and A.P.; writing—review and editing, S.K. and A.P.; visualization, S.K. and A.P.; supervision, S.K.; project administration, S.K.; funding acquisition, S.K. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Koziel, S., Pietrenko-Dabrowska, A. Fast EM-driven nature-inspired optimization of antenna input characteristics using response features and variable-resolution simulation models. Sci Rep 14, 10081 (2024). https://doi.org/10.1038/s41598-024-60749-5

