Abstract

Recent results have evidenced that spontaneous brain activity signals are organized in bursts with scale free features and long-range spatio-temporal correlations. These observations have stimulated a theoretical interpretation of results inspired in critical phenomena. In particular, relying on maximum entropy arguments, certain aspects of time-averaged experimental neuronal data have been recently described using Ising-like models, allowing the study of neuronal networks under an analogous thermodynamical framework. This method has been so far applied to a variety of experimental datasets, but never to a biologically inspired neuronal network with short and long-term plasticity. Here, we apply for the first time the Maximum Entropy method to an Integrate-and-fire (IF) model that can be tuned at criticality, offering a controlled setting for a systematic study of criticality and finite-size effects in spontaneous neuronal activity, as opposed to experiments. We consider generalized Ising Hamiltonians whose local magnetic fields and interaction parameters are assigned according to the average activity of single neurons and correlation functions between neurons of the IF networks in the critical state. We show that these Hamiltonians exhibit a spin glass phase for low temperatures, having mostly negative intrinsic fields and a bimodal distribution of interaction constants that tends to become unimodal for larger networks. Results evidence that the magnetization and the response functions exhibit the expected singular behavior near the critical point. Furthermore, we also found that networks with higher percentage of inhibitory neurons lead to Ising-like systems with reduced thermal fluctuations. Finally, considering only neuronal pairs associated with the largest correlation functions allows the study of larger system sizes.

Similar content being viewed by others

Introduction

Biological neural networks are highly complex systems, due to the large number of interacting degrees of freedom and the connectivity properties of its constituent elements. Neurons interact by generating action potentials (“firing” or “spiking”), with a biophysical mechanism first explained quantitatively by Hodgkin and Huxley in 19521. Their dynamics can be characterized by discretized time series of these firing patterns in binary notation2. This description suggests an analogy with the binary spin states in Ising models. Indeed, Ising-like descriptions of brain activity can be traced back to fifty years ago, to the work by Little3 and Hopfield4, followed by the work of Amit et al.5, where the authors used a spin glass model to describe neural networks. More recently6,7,8,9,10,11,12,13, generalized Ising models have been used to describe the dynamics of recordings from stimulated neuronal activity, using the so-called Maximum Entropy Modelling (MEM) method14. The method consists in finding the least biased (or maximum entropy) probability distribution that is consistent with a given set of statistical measurements from the system under consideration but otherwise imposes no further constraints15. When this method is applied to describe the individual firing rates and correlation functions between neurons, the resulting statistical description is equivalent to the one of a specific Ising model with frustrated spins6,7. Therefore, the MEM approach can be interpreted as an effective mapping of the dynamics from an out-of-equilibrium, multi-component system into a “Hamiltonian” one9, specified by relatively few parameters when compared to the full set of possible states of the system in question, allowing to apply the framework of thermodynamics. Outside the scope of neuroscience, this method has been used to construct novel statistical models16, and to study geographic distributions of species17, protein structures18,19, gene mutation effects20, the collective behavior of flocks of birds21 and their diversity distribution22, correlations in eye movements while watching videos23 or reading texts24, and to conduct urban-oriented studies such as flood risk assessment25, analyzing urban mobility patterns26 and property valuations in real estate markets27. In turn, within the context of neuroscience, MEM has been mostly used to analyse experimental data of neuronal recordings, such as visual inputs from cells of a salamander6,9,10,28,29 and rat retina30, numerical simulations from a phenomenological model of retinal ganglion cells31, responses from hippocampus place cells in rodent brains11,13, synchronized and desynchronized neuronal activity from the primary visual cortex of anaesthetised cats and awake monkeys32, in vivo and in vitro neuronal activity from cortical tissue of rodents33 and the nervous system of the nematod C. Elegans 12, an organism whose pattern of connectivity between all its 302 neurons is well known34.

Overall, these studies have given insights regarding collective6 and functional characteristics28 of biological neural networks, as well as helping unraveling the information content of neuronal responses9,10 and its analogous thermodynamic properties10. Particularly, it was shown that the thermal fluctuations present in these Ising systems tend to diverge with system size,7,10,12,32,33 a sign of critical behaviour. However, experimental measurements in real networks can pose challenges that may impact the relevance and range of applicability of the conclusions drawn using the MEM method. For instance, contemporary neuronal recording techniques are restricted by the duration and sampling rate of the recordings29,35 and a precise association between which neuron generated which spike is an open problem, known as “spike sorting”36, possibly affecting the accuracy of the measured correlation functions37. Furthermore, precise estimations of the fraction of excitatory and inhibitory neuronal populations in real systems are also often difficult to obtain38. To mitigate these challenges, numerical models can offer a more controlled environment for studying neuronal activity, allowing one to systematically change many parameters of choice, tuning the activity state, make systematic size studies which do not rely on subsampling, and generate many equivalent independent samples, thus producing statistically relevant data. However, this method has never been applied to data generated by biologically inspired neuronal network models reproducing the fundamental features of neuronal activity in the resting state.

In this context, we apply the MEM method to spontaneous neuronal activity generated by an Integrate-and-fire (IF) model implemented on a scale-free network with short- and long-term plasticity39. This IF model implements the main features of neuronal activity, as firing at threshold, refractory period, as well as long- and short-term plasticity for synaptic connections. In particular, short-term plasticity models the recovery of synaptic resources and relies on a tuning parameter that regulates the dynamical state of the system39. At appropriate values of the tuning parameter, the model generates bursts of firing activity with characteristic spatio-temporal statistics, known as neuronal avalanches, first observed in 2003, in acute slices of rat cortex40, displaying sizes S and durations D that are power-law distributed, as \(P(S) \propto S^{-1.5}\) and \(P(D) \propto D^{-2}\), suggesting that biological neural networks operate near a critical point, as proposed in previous numerical and analytical studies41,42, and is consistent with the divergence of thermal energy fluctuations observed in relatively small Ising-like models constructed from experimental neuronal data using the MEM method7,10,12,32. Results suggest that the brain might act between a quiescence-like state and a state of hyperactivity, possibly offering several biological advantages such as optimal information transmission and storage43. Signs of criticality have been observed in a wide variety of animals, including humans44,45,46, monkeys47, cats32, salamanders48, turtles49, worms12, and fish50, a hallmark of universality41.

Our aim here is to apply the MEM approach to neuronal activity data generated by the IF neuronal network in the critical state. In particular, we construct, using the MEM method mentioned above, fully-connected Ising models of frustrated spins with local fields and interaction constants which allow the average spin state to reproduce the average local neuronal activity, as well as the two-point correlation functions in the two models. We will investigate how the parameters of the Ising model and its thermodynamics properties change with the size and connectivity of the neuronal network. Additionally, we examine how different fractions of inhibitory neurons may influence the associated Ising models. We also consider partially-connected spin networks with only a subset of the total pairwise interactions, associated with the strongest neuronal pairwise correlations, to be able to analyse networks of sizes larger than the ones typically considered so far in the literature35. The aim is to implement the mapping of the neuronal model into a thermodynamic framework, which may open the way to novel insights into brain functions.

The manuscript is organized in four sections. The first one gives a general presentation of Results, in particular the firing dynamics generated by the IF model, the mapping of the IF model into an Ising-like model using the MEM method and a study of its associated properties. In the following sections, we present a general Discussion followed by the Conclusions. In the final section, we describe the details of all methods, namely the IF model implementation and the MEM method.

Results

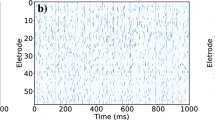

We consider scale-free neural networks of different size \( N \in [ 20 , 500 ] \) with short- and long-term plasticity, as well as a refractory mechanism, where neurons remain inactive for a single timestep immediately after firing (see “Methods”). Together with plastic adaptation, the refractory mechanism has been shown to play a crucial role in the appearance of critical behaviour and in shaping the topology of the network51. All measurements are performed on networks in the critical state. This is done by appropriately tuning the neurotransmitter recovery parameter \( \delta u_{\text {rec}} \) (see “Integrate-and-fire model” in Methods) and verifying that the distributions of neuronal avalanche sizes \(P(S) \propto S^{- \tau _{S}}\) and durations \(P(D) \propto D^{- \tau _{D}}\) decay as power laws with exponents \(\tau _{S} = 1.5\) and \(\tau _{D} = 2\) (see Fig. S1 in Supplementary Information), characteristic of the mean field self-organized branching process52, and consistent with both numerical and experimental observations39,40,46. Initially, we consider only fully-excitatory networks. Later, we will also consider networks with a fraction \( p_{\text {in}} > 0 \) of inhibitory neurons. The time evolution of a system with \(N=120\) neurons in the critical state is shown in the raster plot of Fig. 1.

First, we analyse the firing statistics of the IF model networks. Then, we apply the MEM method to map the dynamics of the IF model networks of size \( N \in [ 20, 120 ] \) into a pairwise Ising model, defined by N “local fields” \( h_ {i} \) and \( N \cdot (N - 1) / 2 \) “interaction constants” \( J_{ij} \), and study its thermodynamical properties. More specifically, this mapping is achieved by using the so-called Boltzmann Machine (BM) algorithm. This learning algorithm searches for the set of parameters \(h_{i}\) and \(J_{ij}\) that best fit the pairwise Ising model to the data of the IF model by comparison with the average local activities \( \langle \sigma _{i} \rangle \) and correlation functions \( C_{ij} \) generated by each model (see “Maximum entropy modelling” in Methods).

Time series for a system at criticality with \(N=120\) neurons. The top raster plot presents the time evolution of the firing states of \( N = 120 \) neurons over a certain number of timesteps, from a fully-excitatory IF network in the critical state. A coloured dot indicates that the respective neuron fired during that timestep, while an absence of a dot means it was inactive. The alternating green and blue colours are just a visual aid to distinguish between different avalanches. The bottom raster plot is a zoom-in of the top one, where the coloured shaded areas indicate avalanches of size \(S > 1\) and duration D.

Firing statistics

To measure the IF network firing statistics, we consider a time bin \(\Delta t_b = 5\) timesteps. We assign a binary value \(\sigma _i ^ {k} \in \{-1,1\}\) to each neuron at each time bin according to the following rule: \(\sigma _i ^ {k} = 1\) if neuron i fired at least once during the k-th time bin, \(\sigma _i ^ {k} = -1\) if the neuron i was inactive during the whole time bin k. We then calculate the average local activity \(\langle \sigma _{i} \rangle \) of the N neurons over \(N_b\) time bins, defined as

as well as the average two-point activity \(\langle \sigma _{i}\sigma _{j} \rangle \) between all \(N \cdot (N - 1) / 2\) pairs of neurons i and j

From these quantities we can also define the two-point correlation functions \(C_{ij}\),

where \(\langle \sigma _{i} \rangle \) is closely related to the firing rate \(r_{i} = \left( \langle \sigma _{i} \rangle + 1 \right) / 2 \Delta t_b\) of neuron i9, whereas \(C_{ij}\) quantifies the tendency for neurons i and j to fire together in the time interval \(\Delta t_b\). Notice that, although likely related, the \(C_{ij}\) are completely distinct from the synaptic strengths \(w_{ij}\). For instance, the \(C_{ij}\) are defined for each pair i and j and are symmetric by definition (\(C_{ij} = C_{ji}\)), whereas \(w_{ij}\) are not. For all simulations we use \(N_b = 10^{7}\).

In Fig. 2 we show the distributions of \(\langle \sigma _{i} \rangle \) and \(C_{ij}\) obtained from \(N_c\) different neural network configurations (see Fig. S1 in Supplementary Information for the respective avalanche statistics), for systems with different number of neurons N. We vary \(N_c\) according to N so that the total number of neurons considered \(N N_c \sim 10{,}000\). The average local activity \(\langle \sigma _{i} \rangle \) is negative for all neurons (Fig. 2a–c), implying that firing is a relatively rare event when considering individual neurons. Moreover, the distribution becomes narrower and shifts towards more negative values as the system size increases. In Fig. 2d–f, we show that the correlation functions are small, but mostly non-zero for all neuron pairs, with their distributions peaked near zero, becoming sharper as the system size N increases. It is also noteworthy that, in experimental studies of the vertebrate retina6, even weak pairwise correlations have been shown to likely become statistically significant in large networks, influencing the dynamics at the scale of the whole network.

Distributions of the average local activity \(\langle \sigma _{i} \rangle \) and of the correlation functions \(C_{ij}\) in IF networks. Average local activity \(\langle \sigma _{i} \rangle \) (a–c) and correlation functions \(C_{ij}\) (d–f), calculated as averages over \( N_{b} = 10^{7} \) time bins. Distributions are obtained for sizes \(N=\{40,120,500\}\), for \(N_{c} \sim 10{,}000 / N\) different fully-excitatory networks.

Another useful quantity that characterizes the firing dynamics is the probability P(K) to observe \(K \in [0,N]\) neurons firing simultaneously within a time bin \(\Delta t_b\),

where \(\delta _{K,K^{k}}\) is the Kronecker delta function and \(K^{k} = \sum _{i}^{N} \left( \sigma _{i} ^ {k} + 1 \right) / 2\) counts the number of neurons firing during the k-th time bin. In Fig. 3 we plot the probability (4) for several networks of different size N. For all N, P(K) is well fitted by an exponential distribution in an intermediate regime. The exponential factor of the distributions consistently decreases as N increases, indicating that it is more likely to observe concurrent firing between neurons in larger networks.

Probability P(K) of observing K neurons firing simultaneously during a time bin \(\Delta t_b = 5\) timesteps. Results are averaged over \( N_{c} \) different fully-excitatory IF networks configurations of size N. \( N_{c} \) varies with N as in Fig. 2. For each configuration, P(K) is estimated by averaging over \(N_b = 10^7\) bins. Error bars are given by the standard error, and most of them are smaller than the symbol size.

Results of the Boltzmann machine learning

Next, we consider Ising models with pairwise interactions for different system sizes \(N=\{20,40,80,120\}\). The interaction constants and local magnetic fields are fitted to reproduce the temporal averages of the correlation functions and local activities generated by the IF model, using the Boltzmann Machine (BM) algorithm (see “Maximum entropy modelling” in Methods). Each spin i can either be in the up-state (\(\sigma _{i} = +1\)) or down-state (\(\sigma _{i} = -1\)), defining a particular spin configuration \( \varvec{\sigma } = \{ \sigma _{1}, \sigma _{2} , ... , \sigma _{N} \} \). The Ising Hamiltonian \( H ( \varvec{\sigma } ) \) is given by

with \(h_i\) and \(J_{ij}\) the fitting parameters, acting as local fields on spin i and interaction constants between spins i and j, respectively. When appropriate, we use the superscript (IF) and (BM) to distinguish between measurements using the IF model and those obtained with the BM using Monte Carlo. More specifically, \( \langle ... \rangle ^ { \text {(IF)} } \) indicates an average over time bins in the IF model while \( \langle ... \rangle ^ { \text {(BM)} } \) is an average over spin configurations of the Ising model.

In Fig. 4 we present the values of \( \{ h_{i} \} \) and the distributions of the interaction constants \( \{ J_{ij} \} \), fitted to reproduce the temporal averages \(\{\langle \sigma _{i} \rangle ^{\text {(IF)}} \}\) and \(\{\langle \sigma _{i}\sigma _{j} \rangle ^{\text {(IF)}} \}\) of fully-excitatory IF networks of size N, tuned to the critical state.

Sets of learned fields \(h_{i}\) and interaction constants \(J_{ij}\) of the Ising model reproducing the time averages of the IF model. Plots of the fields \(h_{i}\) (a–d), sorted by the average local activity \(\langle \sigma _{i} \rangle ^{\text {(IF)}} \) of the associated neuron i, in order of decreasing \(\langle \sigma _{i} \rangle ^{\text {(IF)}} \), and distributions of the interaction constants \(J_{ij}\) (e–h), learned by the BM to reproduce the average local activity \(\langle \sigma _{i} \rangle \) and two-point correlation functions \(C_{ij}\) of a fully-excitatory IF neural network at criticality, with \(N=\{20,40,80,120\}\) neurons.

More specifically, Fig. 4a–d show ranked plots of the fields \(h_i\), where \(h_1\) (\(h_N\)) corresponds to the spin associated with the neuron that fired the most (least) in the IF model. The fields \(h_i\) are mostly negative for all N. This is not surprising considering that a neuron in the IF model fires rarely, as discussed in previous sections, and the negative fields are a consequence of this sparse activity. We note, however, that, as the system size N increases, an increasingly larger fraction of spins have positive \(h_i\), corresponding to the spins associated with the most active neurons. In Fig. 4e–h we plot the distributions of the learned interaction constants \(J_{ij}\). The distributions are bimodal, with the absolute maximum near zero and the second one at positive \(J_{ij}\). However, the height of the second peak appears to decrease with the system size N, suggesting that the limiting distribution when \(N \rightarrow \infty \) becomes consistent with a normal distribution centered around zero. It is interesting to recall that random, normally distributed \(J_{ij}\) characterize the so-called Sherrington-Kirkpatrick (SK) model53,54, an Ising-like model which has been shown to exhibit both ferromagnetic and spin glass phases53. Unlike the SK model, however, the learned Ising models exhibit high heterogeneity in the distribution of the fields \(h_{i}\), and, since the learning technique fits simultaneously both the fields and interaction constants, the inference of the \( \{ J_{ij} \} \) should not be considered numerically decoupled from that of the \( \{ h_{i} \} \).

To test the quality of the learning process, in Fig. 5 we compare the \(\{\langle \sigma _{i} \rangle ^{\text {(BM)}} \}\) and \(\{ C_{ij} ^{\text {(BM)}} \}\) of the Ising-like model with the neural network data of the IF model \(\{\langle \sigma _{i} \rangle ^{\text {(IF)}} \}\) and \(\{ C_{ij} ^{\text {(IF)}} \}\).

Quality test for the BM learning process. Comparison between the average local activity \(\langle \sigma _{i} \rangle \) (a–d) and correlation functions \(C_{ij}\) (e–h) of the Ising-like model (y-axes) and IF model network (x-axes), for system sizes \(N = \{ 20, 40, 80, 120 \}\). The blue dashed lines are the bisector \(y=x\). Results are averages over \(N_{b} = 10^7\) time bins for the IF model (IF), and over \(M_c = 3 \cdot 10^6\) spin configurations for the Ising model (BM). Error bars are given by the standard error, and are smaller or equal to the symbol size.

As described above (by virtue of Eqs. (16) and (17), in the “Methods” section), if the learning is successful, these quantities should be identical since these are precisely the constraints imposed in the learning procedure. The validity of this method is confirmed for both \(\langle \sigma _{i} \rangle \) and \(C_{ij}\), for all N, with all points following closely the bisector \(y=x\).

An interesting quantity to assess the predictive power of the model are the three-point correlation functions \(T_{ijk}\) between all \(N \cdot (N - 1) \cdot (N - 2) / 6\) triplets of neurons i, j and k,

where the averages \(\left\langle ... \right\rangle \) are defined analogously as in Eqs. (1) or (3). In Fig. 6 we compare the triplets between the IF and the Ising models, for systems with \(N=\{20,40,80,120\}\) considered previously.

Predictive capability of the Ising model for the three-point correlation functions \(T_{ijk}\). Comparison between the three-point correlation functions \(T_{ijk}\) of the Ising-like model (y-axes) and the IF model network (x-axes), for system sizes \(N = \{ 20, 40, 80, 120 \}\). The blue dashed lines are the bisector \(y=x\). Results are averages over \(N_{b} = 10^7\) time bins for the IF model (IF), and over \(M_c = 3 \cdot 10^6\) spin configurations for the Ising model (BM). Error bars are given by the standard error, and are smaller or equal to the symbol size.

Even though the \(T_{ijk}\) are not constrained by the learning procedure, the generalized Ising model still captures their systematic qualitative behaviour. Unlike \(\langle \sigma _{i} \rangle \) and \(C_{ij}\), however, the triplets appear to be consistently overestimated by the Ising model, when compared to their IF model counterpart. We note that this behaviour was also seen in experimental recordings from stimulated neurons in a salamander retina7, albeit to a less significant degree.

Another useful measure to assess how well the Ising-like model describes the IF model data is the probability for simultaneous firing P(K) within a small time window, as defined in Eq. (4). In the Ising model, the sum over time bins in Eq. (4) is replaced by a sum over spin configurations generated by Monte Carlo simulation, where K now counts the number of up-spins in a single configuration in the Monte Carlo evolution. We compare this quantity between the IF and Ising models in Fig. 7 for systems of size \(N=\{20,40,80,120\}\).

Predictive capability of the Ising model for the probability of simultaneous firing P(K). Comparison between the simultaneous firing/up-state probability P(K) of the Ising-like model (red) and the IF model network (blue), for system sizes \(N = \{ 20, 40, 80, 120 \}\). Results are averaged over \(N_{b} = 10^7\) time bins for the IF model (IF), and over \(M_c = 3 \cdot 10^6\) spin configurations for the Ising model (BM). Error bars are given by the standard error, and most are smaller or equal to the symbol size.

The Ising model seems to predict the P(K) correctly only when K is very small, of the order \(K/N \lesssim 0.10\), with noticeable discrepancies seen also for this range of K, particularly for the probability of no activity (\(K = 0\)), in systems of size \(N > 20\). For larger K, there is an intermediate range where the Ising model underestimates P(K), followed by a region in which P(K) is greatly overestimated. This effect seems to increase with the system size N. This particular behaviour was also observed when training a pairwise Ising model to reproduce the time averages of stimulated neuronal activity from a salamander retina9.

Thermodynamics of the pairwise Ising models

Having mapped the neural network to a pairwise Ising model, we can start to analyse its properties. Given a system with N spins, for each spin configuration we can measure the magnetization \(M( \varvec{\sigma } ) = \sum _{i}^{N} \sigma _{i}\) and the energy \(E( \varvec{\sigma } ) = - H ( \varvec{\sigma } ) = \sum _{i}^{N} h_i \sigma _i + \sum _{i}^{N} \sum _{j<i}^{N} J_{ij} \sigma _i \sigma _j\). From the fluctuations of these quantities, according to the fluctuation-dissipation theorem, we can calculate the susceptibility \(\chi \) and the specific heat \(C_v\) of the Ising system as a function of temperature T,

where \(\langle ... \rangle \) indicates an average over spin configurations generated by Monte Carlo simulations and the Boltzmann constant \(k_B\) is set equal to one. By changing T, one can probe the thermodynamic properties of these spin systems. We start from a random spin configuration and thermalize it as described in the “Methods” section. Notice, however, that the MEM method only claims that the Ising model is representative of the IF model for \( T = 1 \equiv T_{0} \), since that is the temperature used during the BM learning procedure. Since there is no evident analogy between the control parameter of the learned Ising models, T, and that of the IF model, \( \delta u_{\text {rec}} \), temperatures \( T \ne T_{0} \) have no obvious physical meaning in these learned Ising models, besides being a useful parameter to check if \( T_{0} \) has a particular thermodynamic role10.

In Fig. 8 we plot the average magnetization per spin \(m = \left\langle M \right\rangle /N\), the susceptibility \(\chi \) and the specific heat \(C_v\) as a function of the temperature T for systems of size \(N=\{20,40,80,120\}\). For each N, we consider five Ising systems with distinct parameters learned from different IF networks of identical size at criticality, to assess the sample-to-sample variations for IF networks with different specific connectivities between the neurons.

Thermodynamic functions of Ising models associated with fully-excitatory IF networks of different system sizes N. Average magnetization per spin m (a), susceptibility \(\chi \) (b) and specific heat \(C_v\) (c) as a function of the temperature \(T \in [ 0.1, 3.0 ]\), for systems with \(N=\{20,40,80,120\}\) spins. Different curves with the same colour and symbol correspond to Ising systems with parameters fitted to different IF network configurations with the same size N but different specific connectivities. The vertical dashed lines indicate \( T = T_{0} = 1 \), the “default” temperature used in the BM learning procedure to fit the respective Ising parameters to each IF network. The cloud of random values observed for \( T < 1 \) suggests the presence of a spin-glass phase, where the thermal energy is insufficient to drive the system away from the initial random spin configuration. Results are averages over \( M_c = 3 \cdot 10^6 \) spin configurations. Error bars are given by the standard error and are overall smaller or equal to the symbol size.

The striking result is that all quantities exhibit the behavior expected close to a critical transition. The magnetization takes negative values at low temperatures, because of the majority of negative local fields, and tends to zero for high temperatures with a behavior that becomes steeper for increasing system sizes. The maxima of the susceptibility and specific heat appear near the “default” temperature \( T_{0} = 1 \) associated with the IF model neuronal data, and their height increases with N, indicating that the neural networks tend to maximize thermodynamic fluctuations. This phenomenon, where fluctuations diverge with increasing system size, is a hallmark of a system operating near a critical point55. Looking now at different network configurations of the same size N, we can clearly detect large variations across configurations. Particularly, for different IF networks with the same N, the maximum value of the susceptibility \(\chi \) varies by a factor \( \sim 2 \) for sizes \(N = \{ 20, 40 \}\) and \( \sim 1.5 \) for \(N = \{ 80, 120 \}\).

Another interesting result is the cloud of random values observed for \(T < 1 \) for all thermodynamic quantities. This may be an indication that, for low temperatures, the Ising model transitions into a spin-glass phase. Since this phase usually exhibits complex energy landscapes54, thermal fluctuations in this temperature regime might not be sufficient to drive the system away from the randomly chosen initial configuration and into the ground state. Indeed, starting with all \(\sigma _i = -1\) as initial configuration for the Monte Carlo simulations removes this effect entirely (see Fig. S3 Supplementary Information).

Subnetworks

In experiments, neuronal recordings are usually performed over a subset of neurons out of the total population9,13. As such, it is of practical interest to study how network subsampling might affect the analysis. A very recent study13 shows that the properties of firing statistics change with the spatial distribution of the subsampled neuronal patch. Taking this into account, we will consider two types of subnetworks, also studied in the aforementioned work: a subset of neurons that are spatially close together (Fig. 9a), or picked at random positions (Fig. 9b). For the former case, we select the neurons that are closest to the center of the cubic lattice.

In Fig. 10 we plot the results of the simultaneous firing probability P(K) and triplets \(T_{ijk}\) measured in subnetworks of \(n=40\) neurons in systems with \(N=500\) total neurons, for both spatial distributions described previously. We also compare these quantities with the predictions of the corresponding Ising models. The learning efficiency for the parameters of these models is similar to the cases using full networks, as assessed by inspecting the constrained temporal averages, \(\langle \sigma _{i} \rangle \) and \(C_{ij}\) (see Fig. S4 in Supplementary Information).

Predictive capability of the Ising model for IF model subnetworks. Comparison between Monte Carlo sampling of the Ising model (BM) and the neural network data (IF) of the probability P(K) (a,b) and triplets \(T_{ijk}\) (c,d), for subnetworks with \(n = 40\) neurons, with a local (a,c) and random (b,d) spatial distribution, in a system with \(N=500\) neurons. The blue dashed lines in the bottom plots are the bisector \(y=x\). Results are averaged over \(N_{b} = 10^7\) time bins for the IF model (IF), and over \(M_c = 3 \cdot 10^6\) spin configurations for the Ising model (BM). Error bars are given by the standard error, and are overall smaller or equal to the symbol size.

As in the case of entire networks, the Ising models fail to predict P(K) for large K, with even larger discrepancies (Fig. 10). For the IF network, the P(K) distribution decays exponentially for both subnetworks, as for the entire network case. Analogously, the probability P(K) for the learned Ising models shows an initial exponential decay for low K, for both spatial distributions. Conversely, at \(K/n \approx 0.35\), the behaviour of P(K) for the Ising model is slightly different between the two spatial distributions. For the local case, the probability for observing simultaneously up-spins reaches a second local maximum at \( K/n \approx 0.75\). Notice, however, that this is a small effect, magnified by the logarithmic scale. On the other hand, for the random case, a plateau in P(K) of the Ising model is observed in the intermediate regime of K, followed by a subsequent decay. These differences between the IF and Ising model, compared to the full network case (Fig. 7), could be due to the fact that we are neglecting influences from neurons that are not included in the subnetworks, and therefore not encoded in the Ising model parameters.

The behavior of the triplets is similar to the case of entire networks (Fig. 6), wherein the triplets are systematically overestimated by the Ising model. We note also that overall the triplets have much smaller values, when compared to the ones found for the full network with \(N=40\). Similar results were found for both P(K) and the \(T_{ijk}\) for subnetworks of larger size \(n=80\) in the same IF network with \(N=500\) (see Fig. S5 in Supplementary Information). The fact that both cases of spatial distributions yield Ising models with similar accuracy in reproducing the original IF model data is unexpected, as this is seemingly in contrast to recent experimental studies on mouse brains13, where the generalized Ising model predicted better the results from subgroups of \(\sim 100\) neurons that were spatially clustered together. It should be noted, however, that the experimental study pertains to stimulated neuronal activity due to visual stimuli, whereas our model simulates spontaneous neuronal activity39, in the absence of any external stimulation.

Networks with inhibitory neurons

Inhibitory neurons hamper propagation of neuronal activity, consequently affecting the dynamics of the network56,57. Increasing the percentage \( p_{\text {in}} \) of inhibitory neurons in a neural network moves the system into a subcritical regime57. A way to keep the system close to the critical state when \( p_{\text {in}} >0\) is by increasing the value of the tuning parameter \( \delta u_{\text {rec}} \). Here we consider systems of size \( N = 80 \) and \( p_{\text {in}} > 0\), in the critical state (see Fig. S2 in Supplementary Information), and study how the presence of inhibition might affect the properties of the associated Ising-like models. The agreement of the average local activities and correlation functions between the Ising model and the IF networks with inhibitory neurons is as good as for fully-excitatory ones (see Fig. S6 in Supplementary Information).

In Fig. 11 we show the distributions of fields \(h_i\) and interaction constants \(J_{ij}\) learned from data of IF networks with \( p_{\text {in}} =\{0\%,10\%,20\%\}\) inhibitory neurons.

Distributions of the learned fields \(h_i\) and interaction constants \(J_{ij}\) of a Ising model for neural networks with different fractions of inhibitory neurons \( p_{\text {in}} \). Fields \(h_i\) (a) and interaction constants \(J_{ij}\) (b) associated with IF networks with \( p_{\text {in}} = \{0\%,10\%,20\%\}\) and \(N=80\), obtained after \( N_{\text {BM}} = 60{,}000 \) iterations of the BM.

The distribution of fields \(h_{i}\) (Fig. 11a) for \( p_{\text {in}} > 0 \) exhibits a tail towards negative values, which becomes more pronounced as \( p_{\text {in}} \) increases. Interestingly, these \(h_{i}\) are associated with excitatory neurons (see Fig. S7 in Supplementary Information). A possible explanation is that, in the IF network, the neurons with the smallest average local activity \(\langle \sigma _{i} \rangle \) are not necessarily inhibitory neurons, but rather neurons with incoming connections from inhibitory ones, which in turn are more likely to be excitatory since \( p_{\text {in}} < 50 \% \). This indicates that the \( \{ h_{i} \} \) do not encode the information about whether a neuron is excitatory or inhibitory. In Fig. 11b we see that the peak of the distributions of the interaction constants \( J_{ij} \), decreases with \( p_{\text {in}} \), with a more pronounced tail towards negative \( J_{ij} \) observed for \( p_{\text {in}} = 20 \%\). Interestingly, the presence of the second peak of \( P( J_{ i j } ) \) at positive \( J_{ij} \) seems to be robust with respect to changes in \( p_{\text {in}} \).

In Fig. 12 we plot as a function of the temperature T the thermodynamic functions m, \(\chi \) and \(C_v\) of the Ising systems with the parameters of Fig. 11. For increasing \( p_{\text {in}} \), the magnetization does not go to zero at high T in the observed range of temperatures (Fig. 12a), indicating a higher tendency for spins to be in the down state (\( \sigma _{i} = -1 \)) than for the fully excitatory case in the paramagnetic phase, which could stem from the inhibitory neurons. This is consistent with the observation of stronger negative fields \(h_{i}\) for \( p_{\text {in}} = 20 \% \), as seen in Fig. 11a. In Fig. 12b,c, we see that the values of the maxima of \( \chi \) and \( C_{v} \) decrease with \( p_{\text {in}} \), while their position with respect to the temperature remains unchanged. This suggests that introducing inhibition in the IF networks reduces thermal fluctuations in the associated Ising model.

Thermodynamic functions of Ising-like models associated with IF model networks with different fractions of inhibitory neurons \( p_{\text {in}} \). Average magnetization per spin m (a), susceptibility \(\chi \) (b) and specific heat \(C_v\) (c) as a function of the temperature \(T \in [ 0.1, 3.0 ]\), simulated using the learned parameters shown in Fig. 11 for different fractions of inhibitory neurons \( p_{\text {in}} = \{0\%,10\%,20\%\}\) and \(N=80\). As in Fig. 8, the cloud of random values for \( T < 1 \) suggests the presence of a spin-glass phase. Results are averaged over \(M_c = 3 \cdot 10^6\) spin configurations. Error bars are given by the standard error and are overall smaller or equal to the symbol size.

Partially-connected pairwise Ising models

One of the main disadvantages of BM learning is its intense CPU time demand35,58, restricting the network sizes one could potentially analyse. To try to circumvent this problem, we will consider Ising models with pruned links28, whereby we remove the couplings associated with the weakest correlated pairs of neurons. Specifically, we set \(J_{ij} = 0\) if the corresponding \( C_{ij} ^{\text {(IF)}} \) is below a certain threshold, defined as a fraction of the largest measured correlation function, i.e. if \( C_{ij} ^{\text {(IF)}} < \eta \text {max} \left( C_{ij} ^{\text {(IF)}} \right) \), where \( \eta \in [ 0 , 1 ] \) sets the threshold. Thus, the BM only needs to learn a subset of the total number of couplings \(J_{ij}\), possibly accelerating the convergence process and consequently allowing the study of networks larger than the ones considered so far. Furthermore, an obvious speed-up is also achieved by making use of the fact that a subset of the \( \{ J_{ij} \} \) are zero, and therefore can be disregarded in the double sum of Eq. (5) during the Monte Carlo simulations. We will consider three thresholds \( \eta \in \{ 0.10, 0.15, 0.20 \} \), using a fully-excitatory system of size \( N = 180 \) in the critical state. Computing only a fraction of the interaction terms allowed to obtain the BM results for the \( N = 180 \) system in a less or comparable CPU time with respect to the one required by the \( N = 120 \) systems where we considered the full set \( \{ J_{ij} \} \), with a greater CPU speed-up achieved the larger the threshold \( \eta \) is.

In Fig. 13 we present the comparison between the correlation functions of the IF model and the partially-connected Ising model for the three different thresholds. We see that, even though more than 40% couplings \( J_{ij} \) have been removed in the Ising model for the lowest threshold considered, \( \eta = 0.10 \), and more than \( 70 \% \) for the largest one, \( \eta = 0.20 \), the overall data are still reconstructed by the partially-connected Ising model, with a decrease in quality of the fit for the lowest \( C_{ij} \) (grey dots in the plots) since they were not considered in the learning process of the BM. On the other hand, all \( \langle \sigma _{i} \rangle \) are well predicted by the Ising model (see Fig. S8 in Supplementary Information) since we fit all the \(N = 180\) fields \( \{ h_{i} \} \).

Quality test for the BM learning process with a partially-connected Ising model. Comparison between the correlation functions \(C_{ij}\) of the partially-connected Ising-like model (y-axes) and IF model network (x-axes), for a fully-excitatory system at criticality of size \(N = 180\) and three different thresholds \( \eta = \{ 0.10, 0.15, 0.20 \} \) for the removal of the \( J_{ij} \). If \( C_{ij} ^{\text {(IF)}} < \eta \text {max} ( C_{ij} ^{\text {(IF)}} ) \) (grey dots), the corresponding interaction constant is set to \( J_{ij} = 0 \). The vertical dashed lines indicate the value \( \eta \text {max} ( C_{ij} ^{\text {(IF)}} ) \), with the corresponding approximate percentage of removed \( J_{ij} \) reported at the right of this line. The blue dashed lines are the bisector \(y=x\). Results are averaged over \(N_{b} = 10^7\) time bins for the IF model (IF), and over \(M_c = 3 \cdot 10^7\) spin configurations for the Ising model (BM). Error bars are given by the standard error, and are smaller or equal to the symbol size.

In Fig. 14 we plot the learned fields and the distributions of the learned non-zero interaction constants of the partially-connected Ising model for the three different thresholds \( \eta \). While the set of fields \( \{ h_{i} \} \) remains qualitatively similar for the three thresholds \( \eta \), with a majority of negative fields as in the case of the fully-connected Ising models, the distributions of the non-zero \( J_{ij} \) change significantly with \( \eta \), with the peak at positive \( J_{ij} \) increasing and the one at \( J_{ij} \approx 0 \) decreasing as we remove progressively more \( J_{ij} \). Since we remove only the \( J_{ij} \) associated with the smallest correlation functions \( C_{ij} \), this seemingly indicates that the second peak at \( J_{ij} > 0 \) also seen in the distributions for the fully-connected Ising models (Fig. 4) is associated with the subset of the largest \( C_{ij} \).

Sets of learned fields \(h_{i}\) and non-zero interaction constants \(J_{ij}\) of the partially-connected Ising model. Plots of the fields \(h_{i}\) (a–c), sorted by the average local activity \(\langle \sigma _{i} \rangle ^{\text {(IF)}} \) of the associated neuron i, in order of decreasing \(\langle \sigma _{i} \rangle ^{\text {(IF)}} \), and distributions of the non-zero interaction constants \(J_{ij}\) (d–f), that reproduce the respective data of the Ising model presented in Fig. 13 for the three different thresholds \( \eta = \{ 0.10, 0.15, 0.20 \} \), for a fully-excitatory system at criticality of size \( N = 180 \).

As usual, we can also analyze how the Ising model might predict quantities that are not being constrained by the BM algorithm. In Fig. 15 we present the comparison between the tree-point correlation functions \( T_{ijk} \) measured in the partially-connected Ising model and the IF model. As in the fully-connected case, the Ising model consistently overestimates the values of \( T_{ijk} \). The quality of the fit for the smallest threshold considered, \( \eta = 0.10 \), is comparable to the fully-connected Ising models (Fig. 6). However, as \( \eta \) increases, the quality of the fit worsens, particularly for the smallest \( T_{ijk} ^{\text {(IF)}} \).

Predictive capability of the partially-connected Ising model for the three-point correlation functions \(T_{ijk}\). Comparison between the three-point correlation functions \(T_{ijk}\) of the partially-connected Ising-like model (y-axes) and the IF network (x-axes), for the three thresholds \( \eta = \{ 0.10 , 0.15, 0.20 \} \), for a fully-excitatory system at criticality of size \( N = 180 \). Results are averaged over \(N_{b} = 10^7\) time bins for the IF model (IF), and over \(M_c = 3 \cdot 10^7\) spin configurations for the Ising model (BM). Error bars are given by the standard error, and most are smaller or equal to the symbol size.

Next, we present in Fig. 16 the results for the probability P(K) of simultaneous firing. The predictive capability of the partially-connected Ising model for small K is similar to that of fully-connected ones (Fig. 7), while significant differences can be seen for large K. As the threshold \( \eta \) is increased, the Ising model seems to predict larger simultaneous activity at the scale of the whole network, where \( K \approx N \), indicated by a local maximum in P(K). This seems to indicate that the small \( J_{ij} \) that we are disregarding encode the information concerning the large K regime of P(K) .

Predictive capability of the partially-connected Ising model for the probability of simultaneous firing P(K). Comparison between the simultaneous firing/up-state probability P(K) of the Ising-like model (red) and the IF model network (blue), for the three thresholds \( \eta = \{ 0.10 , 0.15, 0.20 \} \), for a fully-excitatory system at criticality of size \( N = 180 \). Results are averaged over \(N_{b} = 10^7\) time bins for the IF model (IF), and over \(M_c = 3 \cdot 10^7\) spin configurations for the Ising model (BM). Error bars are given by the standard error, and most are smaller or equal to the symbol size.

Finally, in Fig. 17 we plot the thermodynamic functions m, \( \chi \) and \( C_{v} \) versus temperature T for the partially-connected Ising systems, considering the three cases \( \eta = \{ 0.10 , 0.15, 0.20 \} \). For all quantities results are quite robust with respect to \( \eta \), taking into account that there is a difference of \( \approx 31.9 \% \) of removed \( J_{ij} \) between the smallest and largest thresholds \( \eta \) considered. The tendency for the susceptibility \( \chi \) and specific heat \( C_{v} \) to diverge with N is also still clearly visible when comparing to the results for a smaller system size with \( N = 120 \) (black symbols), which in turn considers the full set \( \{ J_{ij} \} \).

Thermodynamic functions of partially-connected Ising models associated with an IF model network with \( N = 180 \). Average magnetization per spin m (a), susceptibility \(\chi \) (b) and specific heat \(C_v\) (c) as a function of the temperature \(T \in [ 0.1, 3.0 ]\), simulated using the learned parameters shown in Fig. 14 for the three different thresholds \( \eta = \{ 0.10, 0.15, 0.20 \} \), for a fully-excitatory IF network at criticality of size \( N = 180 \). The black symbols show the results for a learned Ising model associated with an IF network of size \( N = 120 \), considering the full set \( \{ J_{ij} \} \). As in Figs. 8 and 12, the cloud of random values for \( T < 1 \) suggests the presence of a spin-glass phase. Results are averaged over \(M_c = 3 \cdot 10^6\) spin configurations. Error bars are given by the standard error and are overall smaller or equal to the symbol size.

Discussion

In this study we apply for the first time the Maximum Entropy Modelling (MEM) method to neuronal activity data generated by a numerical model containing the fundamental biological features of living networks and able to tune the system at criticality. Indeed, it has been widely proposed in the literature that the brain could be considered as a system acting close to a critical point, however in the majority of previous papers this feature was not taken in account clearly. The advantage of the numerical study is the possibility to have a clear knowledge of the state of the neuronal system, to control the percentage of inhibitory neurons and to implement artificially, by considering subnetworks, the subsampling limitation in experimental measurements. We mapped the local and pairwise information of IF complex neural networks into Ising models with frustrated spins. Independently of the system size N, the local fields \(h_i\) are mostly negative (Fig. 4a–d) and the distribution of interaction constants \(J_{ij}\) (Fig. 4e–h) is bimodal for small systems, but tends to a normal distribution centered around zero as N increases. These Ising systems display a spin glass phase at low temperatures, independently of N (Fig. 8), and the susceptibility and specific heat tend to diverge with the system size, with a maximum near \(T = T_{0} = 1\), the effective temperature used in the BM learning to fit the IF network neuronal data. This is an indication that the Ising model analogs of the IF networks operate near a critical point. Furthermore, at least for \(N \le 120\), the height of the maximum in the susceptibility is sensible to changes in the details of the connectivity of the network (Fig. 8b). We remark that the Ising models do not predict well unconstrained quantities such as the three-point correlation functions (Fig. 6) and the probability of simultaneous firing even for whole networks (Fig. 7). This discrepancy is enhanced when considering only a subset of the neurons (Fig. 10), likely due to inputs from neurons outside the subnetwork which are not considered in the MEM mapping. This indicates that additional caution should be taken when analysing real neural networks using the MEM approach, which often consider only a subset of the total neuronal population. The presence of inhibitory neurons in the IF networks leads to reduced thermal fluctuations in the associated Ising models, evidenced by a decrease of the maxima near the critical point (Fig. 12).

We have to stress that, as verified in the present case, the potential of the MEM approach is limited by its CPU time demand when considering large system sizes , as the computation time complexity increases as \( \propto N^{2}\) since the Ising model is fully-connected and has high heterogeneity in the distributions of the fields \(h_i\) and interaction constants \(J_{ij}\). To circumvent this issue, we considered a partially-connected Ising model with only a subset of the total pairs of interaction constants \( \{ J_{ij} \} \) obtained considering only the largest correlation functions measured in the IF network, allowing to study a system of size \( N = 180 \) (Figs. 13, 14, 15, 16 and 17). Similar MEM procedures allow the study of much larger sizes, such as the so-called restricted Boltzmann Machine59 or a random projection model60, but the resulting maximum entropy distributions from these approaches are no longer analogous to that of an Ising model, and the thermodynamical interpretation is no longer applicable35. A very recent study61 shows that it might be possible to consider large networks considering only connections which contain the maximum mutual information among all pairs of neurons. As future developments, we remark that the IF model allows the investigation of systems off criticality by appropriately tuning the short-term plasticity parameter. The study of neuronal systems at and off criticality has recently revealed intriguing behavior typical of thermodynamic systems, as the Fluctuation-Dissipation relations62,63. This approach can then be used to study thermodynamic properties of networks in the sub- and supercritical state, brain states associated with pathological conditions64.

Methods

Integrate-and-fire model

The model considers N neurons placed randomly inside a cubic space of side \(L=\root 3 \of {N/\rho }\), where \(\rho = 0.016\)57 is the density of the neurons. Connections are directed and weighted, with dynamic synaptic strengths \(w_{ij} \in [0,1]\) between pre- and post-synaptic neurons i and j. Each neuron has at least one incoming connection, and the distribution of out-going degrees \( k_{\text {out}} \in [2,20]\) is a power law, i.e. \(P( k_{\text {out}} ) \propto k_{\text {out}} ^{-2}\), following experimental measurements in functional networks65. The probability that two neurons are connected decays exponentially with their euclidean distance r, \(P(r) \propto e^{-r/r_0}\), where \(r_0=5\) is a characteristic length66. Neurons can be excitatory or inhibitory, with fraction \( p_{\text {in}} \). We implement synaptic plasticity, in which the strengths \(w_{ij}\) can change dynamically with time. We consider both short-term and long-term plasticity (STP and LTP for short, respectively), so we separate the synaptic strengths \(w_{ij}(t)=u_i(t)g_{ij}\) into the two components \(u_i(t)\) and \(g_{ij}\). STP refers to the modification of synaptic strengths on a short timescale, of the order of milliseconds, and we denote by \(u_i(t) \in [0,1]\) the normalized amount of neurotransmitters available to neuron i at time t, representing the so-called readily releasable pool of neurotransmitter vesicles67. On the other hand, LTP is a Hebbian-like process which strengthens or weakens connections strengths \(g_{ij} \in (0,1]\) depending on their usage over time, acting on much longer timescales, ranging from minutes to hours or even years68, so we regard this term as constant compared to the timescale of the dynamics. Each neuron i is characterized by a membrane potential \(v_i\). A neuron i will fire at some time t when its potential \(v_i\) surpasses a threshold \(v_c = 1\). Activity will then propagate to all post-synaptic neurons j with incoming connections from i according to the following equations39:

where \(+\) and − stands for excitatory and inhibitory pre-synaptic neuron, respectively, and \(\delta u=0.05\) represents the fractional amount of neurotransmitter released at each neuronal firing69. The timestep unit corresponds roughly to the joint interval of synaptic and axonal delay, i.e. the time interval between the generation of the action potential at the pre-synaptic neuron and the membrane potential change at the post-synaptic one, and is of the order of 10 milliseconds70. We set a minimum value \( v_{\text {min}} = -1 \) for the membrane potential of each neuron, to prevent the possibility of a \(v_{i}\) being systematically decreased to overly negative values when \( p_{\text {in}} > 0\). After firing, a neuron enters into a refractory period of a single timestep \(t_r=1\) during which it is incapable of receiving or eliciting any activity. To keep the activity ongoing, we implement a small external stimulation. Namely, at every timestep, even during avalanches, a voltage input \(\delta v = 0.1 v_{c}\) is added to a randomly chosen neuron. From Eq. (10) we see that the amount of synaptic resources gradually depletes as a neuron fires, eventually rendering it incapable of transmitting further signals. In real systems, this is counteracted by a recovery mechanism, where the readily releasable pool is slowly recharged over the span of seconds71. We assume a separation of timescales72, and recharge simultaneously the \(u_i\) of all neurons by a certain amount \( \delta u_{\text {rec}} \) only at the end of every avalanche, \( u_i(t) \rightarrow u_i(t) + \delta u_{\text {rec}} \). By changing the value of \( \delta u_{\text {rec}} \) one can adjust the dynamical state of the system39,57. Before performing any measurements, we let the dynamics evolve for a certain number of timesteps in order to shape the distribution of strengths \(g_{ij}\) by LTP. We start by setting them uniformly distributed in the interval \(g_{ij} \in [0.04, 0.06]\). According to the rules of Hebbian plasticity73,74, if some neuron i frequently stimulates another neuron j, then the synapse from i to j will be strengthened. In this case, we increase the strength of the synapses \(g_{ij}\) proportionally to the voltage variation induced in the post-synaptic neuron j due to i as \( g_{ij}(t+1) = g_{ij}(t) + \delta g_{j} \), where \(\delta g_{j} = \beta |v_{j}(t+1) - v_{j}(t) |\) and \(\beta = 0.04\) sets the rate of this adaptation. On the other hand, synapses that are rarely active tend to weaken over time74. Therefore, at the end of each avalanche, we decrease all terms \(g_{ij}\) by the average increase in strength per synapse, \( g_{ij}(t+1) = g_{ij}(t) - \frac{1}{N_s} \sum { \delta g_{j} } \) where \(N_s\) is the number of synapses. In real networks, synapses that weaken consistently are eventually pruned. To avoid modifying the scale-free structure of the network, We modify the strengths \(g_{ij}\) either for a fixed number \(N_{\text {aval}} = 10^{4}\) of avalanches or until a strength \(g_{ij}\) first reaches a minimum value \(g_{\text {min}} = 10^{-5}\), where we then set that strength to \(g_{ij} = g_{\text {min}}\).

Maximum entropy modelling

The binarization of the IF model firing dynamics introduces the notion of the probability \( P_{\text {IF}}( \varvec{\sigma } ) \) to observe, during a time bin of duration \(\Delta t_b\), any of the \(2^N\) possible patterns of firing states \( \varvec{\sigma } = \{ \sigma _{1}, \sigma _{2},..., \sigma _{N} \} \) in a network of size N, with each \( \sigma _{i} \in \{ -1, 1 \} \). We are interested in defining this \( P_{\text {IF}}( \varvec{\sigma } ) \) in a way that is consistent with the expectation values of the average local activities \(\langle \sigma _{i} \rangle \) and two-point activities \(\langle \sigma _{i}\sigma _{j} \rangle \) measured in the IF model for a given network. This surmounts to finding a probability distribution \( P( \varvec{\sigma } ) \) that maximizes the entropy14 \(\mathcal { S } = - \sum _{ \varvec{\sigma } } P( \varvec{\sigma } ) \ln [ P( \varvec{\sigma } ) ]\), where \( \sum _{ \varvec{\sigma } } \) indicates a sum over all possible outcomes of \( \varvec{\sigma } \), while subject to the constraints \( \langle \sigma _{i} \rangle = \sum _{ \varvec{\sigma } } \sigma _{ i } P( \varvec{\sigma } ) \) and \( \langle \sigma _{i}\sigma _{j} \rangle = \sum _{ \varvec{\sigma } } \sigma _{ i } \sigma _{j} P( \varvec{\sigma } ) \). Solving this problem using the method of Lagrangian multipliers15 (see Supplementary Information for the derivation) yields the distribution of spin states of a generalized Ising model at unit temperature7,

where \( \{ h_{i} \} \) and \( \{ J_{ij} \} \) are the Lagrangian multipliers, \( H ( \varvec{\sigma } ) \) is the Hamiltonian or energy function and Z is the partition function, whose sum runs over all \(2^{N}\) possible configurations of spin states \( \varvec{\sigma } \). This prompts us to interpret this maximum entropy description as a mapping from the temporal averages of the neuronal network to that of a fully-connected spin lattice. Under this conceptual view, \(h_{i}\) is analogous to a local external field acting on spin i, whereas \(J_{ij}\) is an interaction constant between spins i and j. The task is now to find the values of \({ h_{i} }\) and \({ J_{ij} }\) that reproduce the measured expectation values from the IF model. This is a particular example of the inverse Ising problem14, also known as Boltzmann Machine (BM) learning14, which consists in inferring the Hamiltonian of a certain complex multi-component system from its observed statistics. In principle, each parameter \(h_{i}\) and \(J_{ij}\) can be determined from the derivative of the logarithm of the partition function (13), \( \langle \sigma _{i} \rangle = \frac{ \partial }{ \partial h_i } \ln [ Z ] \) and \( \langle \sigma _{i}\sigma _{j} \rangle = \frac{ \partial }{ \partial J_{ij} } \ln [ Z ] \). However, the number of terms in Z grows exponentially with N, as \( 2^{N} \), and an analytical approach becomes intractable when \( N \gtrsim 20 \). To proceed, notice that we are trying to describe an empirical distribution \( P_{\text {IF}}( \varvec{\sigma } ) \), as observed from the measured temporal averages of the IF model, using the analytical description \( P( \varvec{\sigma } ) \), as given by Eq. (12). We want to choose the \( \{ h_{i} \} \) and \( \{ J_{ij} \} \) that minimize the loss in information when using \( P( \varvec{\sigma } ) \) as a proxy for \( P_{\text {IF}}( \varvec{\sigma } ) \). Specifically, this means we want the set of \( \{ h_{i} \} \) and \( \{ J_{ij} \} \) that minimize the so-called Kullback-Leibler divergence14 between these distributions,

From the minimum condition equations one can show that \( \frac{ \partial D_{\text {KL}} }{ \partial h_{i} } = 0 \implies \langle \sigma _{i} \rangle ^{\text {(IF)}} = \langle \sigma _{i} \rangle ^{\text {(BM)}} \) and \( \frac{ \partial D_{\text {KL}} }{ \partial J_{ij} } = 0 \implies \langle \sigma _{i}\sigma _{j} \rangle ^{\text {(IF)}} = \langle \sigma _{i}\sigma _{j} \rangle ^{\text {(BM)}} \), where \( \langle \sigma _{i} \rangle ^{\text {(IF)}} \equiv \sum _{ \varvec{\sigma } } \sigma _{ i } P_{\text {IF}}( \varvec{\sigma } ) \) are the empirical averages measured in the IF model and \( \langle \sigma _{i} \rangle ^{\text {(BM)}} \equiv \sum _{ \varvec{\sigma } } \sigma _{ i } P( \varvec{\sigma } ) \) are the ones estimated from the Ising distribution (12), and analogously for the \( \langle \sigma _{i}\sigma _{j} \rangle \). Therefore, a suitable method to search for the fields \(h_{i}\) and interaction constants \(J_{ij}\) of the Hamiltonian (14) is the following iterative scheme7,

where \(\eta _{h}(x) = 2 \eta _{J}(x) \propto x^{-\alpha }\) are decreasing learning rates, with \( \alpha = 0.4 \) for \( N \le 40 \), \( \alpha = 0.6 \) if \( 40 < N \le 120 \), and \( \alpha = 1.0 \) otherwise. We set a smaller learning rate for the \( \{ J_{ij} \} \) since their number (\( \sim N^{2} \)) is much larger when compared to the \( \{ h_{i} \} \) (N), so we update their values at a slower rate to avoid divergences during the learning procedure. At each iteration x, the data sets \(\{\langle \sigma _{i} \rangle ^{\text {(IF)}} \}\) and \(\{\langle \sigma _{i}\sigma _{j} \rangle ^{\text {(IF)}} \}\) generated by the IF model are compared to those estimated by sampling the distribution (12), \(\{\langle \sigma _{i} \rangle ^{\text {(BM)}} \}\) and \(\{\langle \sigma _{i}\sigma _{j} \rangle ^{\text {(BM)}} \}\), using the sets of fields \( \{ h_{i} (x) \} \) and coupling constants \( \{ J_{ij} (x) \} \). We start with \(h_{i} ( x = 1 ) = \langle \sigma _{i} \rangle ^{\text {(IF)}} \) and \(J_{ij} ( x = 1 ) = 0\) and then iterate Eqs. (16) and (17) typically until \(x = N_{\text {BM}} \sim 60000\). At each iteration, \(\{\langle \sigma _{i} \rangle ^{\text {(BM)}} \}\) and \(\{\langle \sigma _{i}\sigma _{j} \rangle ^{\text {(BM)}} \}\) are estimated from Monte Carlo simulations, using the Metropolis algorithm55, by averaging over \(M_c = 3 \cdot 10^5\) spin configurations. We disregard the first 150N configurations for systems with \( N \le 120 \), or up to \( 10^{5} N^{2} \) configurations for the \( N = 180 \) case, in order to reduce correlations with the initial state, and use only every 2N-th configuration for averaging, to reduce autocorrelations. At the end of the learning routine, we study the generalized Ising models with the set of fitted parameters \(\{ h_{i} \}\) and \(\{ J_{ij} \}\) by sampling the distribution (12), averaging over an increased amount of spin configurations \( M_c = 3 \cdot 10^6 \) for systems with \( N \le 120 \), or up to \( M_c = 3 \cdot 10^7 \) configurations for the system with \( N = 180 \), to reduce error bars.

Data availibility

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Hodgkin, A. L. & Huxley, A. F. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500. https://doi.org/10.1113/jphysiol.1952.sp004764 (1952).

Dayan, P. & Abbott, L. F. Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems (The MIT Press, 2005).

Little, W. A. The existence of persistent states in the brain. Math. Biosci. 19, 101–120. https://doi.org/10.1016/0025-5564(74)90031-5 (1974).

Hopfield, J. J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. 79, 2554–2558. https://doi.org/10.1073/pnas.79.8.2554 (1982).

Amit, D. J., Gutfreund, H. & Sompolinsky, H. Spin-glass models of neural networks. Phys. Rev. A 32, 1007–1018. https://doi.org/10.1103/PhysRevA.32.1007 (1985).

Schneidman, E., Berry, M. J., Segev, R. & Bialek, W. Weak pairwise correlations imply strongly correlated network states in a neural population. Nature 440, 1007–1012. https://doi.org/10.1038/nature04701 (2006).

Tkacik, G., Schneidman, E., Berry II, M. J. & Bialek, W. Spin glass models for a network of real neurons (2009). arXiv:0912.5409 [q-bio].

Tkačik, G. et al. The simplest maximum entropy model for collective behavior in a neural network. J. Stat. Mech. Theory Exp. 2013, P03011. https://doi.org/10.1088/1742-5468/2013/03/P03011 (2013).

Tkačik, G. et al. Searching for Collective Behavior in a Large Network of Sensory Neurons. PLOS Comput. Biol. 10, e1003408. https://doi.org/10.1371/journal.pcbi.1003408 (2014).

Tkačik, G. et al. Thermodynamics and signatures of criticality in a network of neurons. Proc. Natl. Acad. Sci. 112, 11508–11513. https://doi.org/10.1073/pnas.1514188112 (2015).

Meshulam, L., Gauthier, J. L., Brody, C. D., Tank, D. W. & Bialek, W. Collective Behavior of Place and Non-place Neurons in the Hippocampal Network. Neuron 96, 1178-1191.e4. https://doi.org/10.1016/j.neuron.2017.10.027 (2017).

Chen, X., Randi, F., Leifer, A. M. & Bialek, W. Searching for collective behavior in a small brain. Phys. Rev. E 99, 052418. https://doi.org/10.1103/PhysRevE.99.052418 (2019).

Meshulam, L., Gauthier, J. L., Brody, C. D., Tank, D. W. & Bialek, W. Successes and failures of simple statistical physics models for a network of real neurons, https://doi.org/10.48550/arXiv.2112.14735 (2023). arXiv:2112.14735 [physics, q-bio].

Nguyen, H. C., Zecchina, R. & Berg, J. Inverse statistical problems: from the inverse Ising problem to data science. Adv. Phys. 66, 197–261. https://doi.org/10.1080/00018732.2017.1341604 (2017).

Jaynes, E. T. Information Theory and Statistical Mechanics. Phys. Rev. 106, 62–79. https://doi.org/10.1103/PhysRev.106.620 (1957).

Wainwright, M. J. & Jordan, M. I. Graphical Models, Exponential Families, and Variational Inference. Found. Trends Mach. Learn. 1, 1–305. https://doi.org/10.1561/2200000001 (2008).

Phillips, S. J., Anderson, R. P. & Schapire, R. E. Maximum entropy modeling of species geographic distributions. Ecol. Model. 190, 231–259. https://doi.org/10.1016/j.ecolmodel.2005.03.026 (2006).

Morcos, F. et al. Direct-coupling analysis of residue coevolution captures native contacts across many protein families. Proc. Natl. Acad. Sci. 108, E1293–E1301. https://doi.org/10.1073/pnas.1111471108 (2011).

Figliuzzi, M., Barrat-Charlaix, P. & Weigt, M. How Pairwise Coevolutionary Models Capture the Collective Residue Variability in Proteins?. Mol. Biol. Evol. 35, 1018–1027. https://doi.org/10.1093/molbev/msy007 (2018).

Hopf, T. A. et al. Mutation effects predicted from sequence co-variation. Nat. Biotechnol. 35, 128–135. https://doi.org/10.1038/nbt.3769 (2017).

Bialek, W. et al. Social interactions dominate speed control in poising natural flocks near criticality. Proc. Natl. Acad. Sci. 111, 7212–7217. https://doi.org/10.1073/pnas.1324045111 (2014).

Lai, J. et al. Maximum Entropy Analysis of Bird Diversity and Environmental Variables in Nanjing Megapolis, China. Sustainability 16, 2139. https://doi.org/10.3390/su16052139 (2024).

Burleson-Lesser, K., Morone, F., DeGuzman, P., Parra, L. C. & Makse, H. A. Collective Behaviour in Video Viewing: A Thermodynamic Analysis of Gaze Position. PLOS ONE 12, e0168995. https://doi.org/10.1371/journal.pone.0168995 (2017).

Torres, D. et al. Eye-tracking as a proxy for coherence and complexity of texts. PLOS ONE 16, e0260236. https://doi.org/10.1371/journal.pone.0260236 (2021).

Cabrera, J. S. & Lee, H. S. Flood risk assessment for Davao Oriental in the Philippines using geographic information system-based multi-criteria analysis and the maximum entropy model. J. Flood Risk Manag. 13, e12607. https://doi.org/10.1111/jfr3.12607 (2020).

Daniotti, S., Monechi, B. & Ubaldi, E. A maximum entropy approach for the modelling of car-sharing parking dynamics. Sci. Rep. 13, 2993. https://doi.org/10.1038/s41598-023-30134-9 (2023).

De Paola, P. Real Estate Valuations with Small Dataset: A Novel Method Based on the Maximum Entropy Principle and Lagrange Multipliers. Real Estate 1, 26–40. https://doi.org/10.3390/realestate1010003 (2024).

Ganmor, E., Segev, R. & Schneidman, E. The architecture of functional interaction networks in the retina. J. Neurosci. 31, 3044–3054. https://doi.org/10.1523/JNEUROSCI.3682-10.2011 (2011).

Humplik, J. & Tkačik, G. Probabilistic models for neural populations that naturally capture global coupling and criticality. PLOS Comput. Biol. 13, e1005763. https://doi.org/10.1371/journal.pcbi.1005763 (2017).

Delamare, G. & Ferrari, U. Time-dependent maximum entropy model for populations of retinal ganglion cells. https://doi.org/10.1101/2022.07.13.498395 (2022).

Nonnenmacher, M., Behrens, C., Berens, P., Bethge, M. & Macke, J. H. Signatures of criticality arise from random subsampling in simple population models. PLOS Comput. Biol. 13, e1005718. https://doi.org/10.1371/journal.pcbi.1005718 (2017).

Hahn, G. et al. Spontaneous cortical activity is transiently poised close to criticality. PLoS Comput. Biol. 13, e1005543. https://doi.org/10.1371/journal.pcbi.1005543 (2017).

Sampaio Filho, C. I. et al. Ising-like model replicating time-averaged spiking behaviour of in vivo and in vitro neuronal networks (2023). Preprint.

White, J. G., Southgate, E., Thomson, J. N. & Brenner, S. The structure of the nervous system of the nematode Caenorhabditis elegans. Philos. Trans. R. Soc. Lond. B Biol. Sci. 314, 1–340. https://doi.org/10.1098/rstb.1986.0056 (1986).

Gardella, C., Marre, O. & Mora, T. Modeling the correlated activity of neural populations: A review. Neural Comput. 31, 233–269. https://doi.org/10.1162/neco_a_01154 (2019).

Buccino, A. P., Garcia, S. & Yger, P. Spike sorting: new trends and challenges of the era of high-density probes. Prog. Biomed. Eng. 4, 022005. https://doi.org/10.1088/2516-1091/ac6b96 (2022).

Ventura, V. & Gerkin, R. C. Accurately estimating neuronal correlation requires a new spike-sorting paradigm. Proc. Natl. Acade. Sci. 109, 7230–7235. https://doi.org/10.1073/pnas.1115236109 (2012).

Pastore, V. P., Massobrio, P., Godjoski, A. & Martinoia, S. Identification of excitatory-inhibitory links and network topology in large-scale neuronal assemblies from multi-electrode recordings. PLOS Comput. Biol. 14, e1006381. https://doi.org/10.1371/journal.pcbi.1006381 (2018).

Michiels van Kessenich, L., Luković, M., de Arcangelis, L. & Herrmann, H. J. Critical neural networks with short- and long-term plasticity. Phys. Rev. E 97, 032312. https://doi.org/10.1103/PhysRevE.97.032312 (2018).

Beggs, J. M. & Plenz, D. Neuronal Avalanches in Neocortical Circuits. J. Neurosci. 23, 11167–11177. https://doi.org/10.1523/JNEUROSCI.23-35-11167.2003 (2003).

de Arcangelis, L., Perrone-Capano, C. & Herrmann, H. J. Self-organized criticality model for brain plasticity. Phys. Rev. Lett. 96, 028107. https://doi.org/10.1103/PhysRevLett.96.028107 (2006).

Levina, A., Herrmann, J. M. & Geisel, T. Dynamical synapses causing self-organized criticality in neural networks. Nat. Phys. 3, 857–860. https://doi.org/10.1038/nphys758 (2007).

Shew, W. L., Yang, H., Yu, S., Roy, R. & Plenz, D. Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J. Neurosci. 31, 55–63. https://doi.org/10.1523/JNEUROSCI.4637-10.2011 (2011).

Haimovici, A., Tagliazucchi, E., Balenzuela, P. & Chialvo, D. R. Brain organization into resting state networks emerges at criticality on a model of the human connectome. Phys. Rev. Lett. 110, 178101. https://doi.org/10.1103/PhysRevLett.110.178101 (2013).

Priesemann, V., Valderrama, M., Wibral, M. & Quyen, M. L. V. Neuronal avalanches differ from wakefulness to deep sleep–evidence from intracranial depth recordings in humans. PLOS Comput. Biol. 9, e1002985. https://doi.org/10.1371/journal.pcbi.1002985 (2013).

Shriki, O. et al. Neuronal avalanches in the resting MEG of the human brain. J. Neurosci. 33, 7079–7090. https://doi.org/10.1523/JNEUROSCI.4286-12.2013 (2013).

Petermann, T. et al. Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. Proc. Natl. Acad. Sci. 106, 15921–15926. https://doi.org/10.1073/pnas.0904089106 (2009).

Mora, T., Deny, S. & Marre, O. Dynamical criticality in the collective activity of a population of retinal neurons. Phys. Rev. Lett. 114, 078105. https://doi.org/10.1103/PhysRevLett.114.078105 (2015).

Shew, W. L. et al. Adaptation to sensory input tunes visual cortex to criticality. Nat. Phys. 11, 659–663. https://doi.org/10.1038/nphys3370 (2015).

Ponce-Alvarez, A., Jouary, A., Privat, M., Deco, G. & Sumbre, G. Whole-brain neuronal activity displays crackling noise dynamics. Neuron 100, 1446–1459. https://doi.org/10.1016/j.neuron.2018.10.045 (2018).

Michiels van Kessenich, L., de Arcangelis, L. & Herrmann, H. J. Synaptic plasticity and neuronal refractory time cause scaling behaviour of neuronal avalanches. Sci. Rep. 6, 32071. https://doi.org/10.1038/srep32071 (2016).

Zapperi, S., Lauritsen, K. B. & Stanley, H. E. Self-organized branching processes: Mean-field theory for avalanches. Phys. Rev. Lett. 75, 4071–4074. https://doi.org/10.1103/PhysRevLett.75.4071 (1995).

Sherrington, D. & Kirkpatrick, S. Solvable model of a spin-glass. Phys. Rev. Lett. 35, 1792–1796. https://doi.org/10.1103/PhysRevLett.35.1792 (1975).

Mezard, M., Parisi, G. & Virasoro, M. Spin Glass Theory and Beyond: An Introduction to the Replica Method and Its Applications, vol. 9 of World Scientific Lecture Notes in Physics (World Scientific, 1986).

Böttcher, L. & Herrmann, H. J. Computational Statistical Physics (Cambridge University Press, Cambridge, 2021).

Raimo, D., Sarracino, A. & de Arcangelis, L. Role of inhibitory neurons in temporal correlations of critical and supercritical spontaneous activity. Phys. A 565, 125555. https://doi.org/10.1016/j.physa.2020.125555 (2021).

Nandi, M. K., Sarracino, A., Herrmann, H. J. & de Arcangelis, L. Scaling of avalanche shape and activity power spectrum in neuronal networks. Phys. Rev. E 106, 024304. https://doi.org/10.1103/PhysRevE.106.024304 (2022).

Yeh, F.-C. et al. Maximum entropy approaches to living neural networks. Entropy 12, 89–106. https://doi.org/10.3390/e12010089 (2010).

van der Plas, T. L. et al. Neural assemblies uncovered by generative modeling explain whole-brain activity statistics and reflect structural connectivity. eLife 12, e83139. https://doi.org/10.7554/eLife.83139 (2023).

Maoz, O., Tkačik, G., Esteki, M. S., Kiani, R. & Schneidman, E. Learning probabilistic neural representations with randomly connected circuits. Proc. Natl. Acad. Sci. 117, 25066–25073. https://doi.org/10.1073/pnas.1912804117 (2020).

Lynn, C. W., Yu, Q., Pang, R., Bialek, W. & Palmer, S. E. Exactly solvable statistical physics models for large neuronal populations, https://doi.org/10.48550/arXiv.2310.10860 (2023). arXiv:2310.10860 [cond-mat, physics:physics, q-bio].

Sarracino, A., Arviv, O., Shriki, O. & de Arcangelis, L. Predicting brain evoked response to external stimuli from temporal correlations of spontaneous activity. Phys. Rev. Res. 2, 033355. https://doi.org/10.1103/PhysRevResearch.2.033355 (2020).

Nandi, M. K., de Candia, A., Sarracino, A., Herrmann, H. J. & de Arcangelis, L. Fluctuation-dissipation relations in the imbalanced Wilson-Cowan model. Phys. Rev. E107, 064307. https://doi.org/10.1103/PhysRevE.107.064307 (2023).

Berger, D., Varriale, E., van Kessenich, L. M., Herrmann, H. J. & de Arcangelis, L. Three cooperative mechanisms required for recovery after brain damage. Sci. Rep. 9, 15858. https://doi.org/10.1038/s41598-019-50946-y (2019).

Eguíluz, V. M., Chialvo, D. R., Cecchi, G. A., Baliki, M. & Apkarian, A. V. Scale-free brain functional networks. Phys. Rev. Lett. 94, 018102. https://doi.org/10.1103/PhysRevLett.94.018102 (2005).

Roerig, B. & Chen, B. Relationships of local inhibitory and excitatory circuits to orientation preference maps in ferret visual cortex. Cereb. Cortex 12, 187–198. https://doi.org/10.1093/cercor/12.2.187 (2002).

Kaeser, P. S. & Regehr, W. G. The readily releasable pool of synaptic vesicles. Curr. Opin. Neurobiol. 43, 63–70. https://doi.org/10.1016/j.conb.2016.12.012 (2017).

Abraham, W. C. How long will long-term potentiation last? Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 358, 735–744. https://doi.org/10.1098/rstb.2002.1222 (2003).

Ikeda, K. & Bekkers, J. M. Counting the number of releasable synaptic vesicles in a presynaptic terminal. Proc. Natl. Acad. Sci. 106, 2945–2950. https://doi.org/10.1073/pnas.0811017106 (2009).

Lemaréchal, J.-D. et al. A brain atlas of axonal and synaptic delays based on modelling of cortico-cortical evoked potentials. Brain 145, 1653–1667. https://doi.org/10.1093/brain/awab362 (2021).

Markram, H. & Tsodyks, M. Redistribution of synaptic efficacy between neocortical pyramidal neurons. Nature 382, 807–810. https://doi.org/10.1038/382807a0 (1996).

Zeraati, R., Priesemann, V. & Levina, A. Self-Organization Toward Criticality by Synaptic Plasticity. Front. Phys. 9 (2021).

Hebb, D. O. The Organization of Behavior (John Wiley & Sons Inc, New York, 1949).

Bi, G.-q. & Poo, M.-m. Synaptic Modifications in Cultured Hippocampal Neurons: Dependence on Spike Timing, Synaptic Strength, and Postsynaptic Cell Type. J. Neurosci. 18, 10464–10472. https://doi.org/10.1523/JNEUROSCI.18-24-10464.1998 (1998).

Acknowledgements

L.d.A. acknowledges support from the Italian MUR project PRIN2017WZFTZP and from NEXTGENERATIONEU (NGEU) funded by the Ministry of University and Research (MUR), National Recovery and Resilience Plan (NRRP), and project MNESYS (PE0000006)-A multiscale integrated approach to the study of the nervous system in health and disease (DN. 1553 11.10.2022). H.J.H. thanks FUNCAP and INCT-SC for financial support. J.S.A.J. thanks the Brazilian agencies CNPq, CAPES and FUNCAP for financial support.

Author information

Authors and Affiliations

Contributions

T.S.A.N.S. conducted the numerical simulations. T.S.A.N.S. and C.I.N.S.F. developed the software. H.J.H., J.S.A.J. and L.d.A. conceived the research. T.S.A.N.S., H.J.H. and L.d.A. analysed the results and wrote the manuscript. All authors contributed to the review of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note