Abstract

Gaussian boson sampling (GBS) plays a crucial role in demonstrating quantum advantage. As a major imperfection, the limited connectivity of the linear optical network weakens the quantum advantage result in recent experiments. In this work, we introduce an enhanced classical algorithm for simulating GBS processes with limited connectivity. It computes the loop Hafnian of an \(n \times n\) symmetric matrix with bandwidth w in \(O(nw2^w)\) time. This improves on the previous fastest algorithm, which runs in \(O(nw^2 2^w)\) time. This classical algorithm helps clarify how limited connectivity affects the computational complexity of GBS and tightens the boundary for achieving quantum advantage in the GBS problem.

Introduction

Gaussian boson sampling (GBS) is a variant of boson sampling (BS) that was originally proposed to demonstrate the quantum advantage1,2,3,4. In recent years, great progress has been made in experiments on GBS5,6,7,8,9,10,11. Both the total number of optical modes and detected photons in GBS experiments have surpassed several hundred7,8. Moreover, it is experimentally verified that GBS devices can enhance the classical stochastic algorithms in searching some graph features10,11.

The central issue in GBS experiments is to verify the quantum advantage of the result. The time cost of the best known classical algorithm for simulating an ideal GBS process grows exponentially with the system size12. Therefore a quantum advantage result might be achieved when the system size is large enough13,14,15.

However, real quantum setups always have imperfections, which reduce the time cost of the corresponding classical simulation. When the imperfections are too large, classical simulation methods can work efficiently16,17,18. In this situation, there is no quantum advantage even if the system size of the GBS experiment is very large. Therefore, finding better methods to classically simulate imperfect GBS processes is useful for establishing tight criteria for quantum advantage in a GBS experiment.

Recent GBS experiments have been implemented using optical circuits with relatively shallow depths5,7,17,18. A shallow optical circuit might have limited connectivity, and its transform matrix will deviate from the global Haar-random unitary18,19. However, the original GBS protocol2,3 requires that the unitary transform matrix U of the passive linear optical network be randomly chosen from the Haar measure. Classical algorithms can take advantage of the limited connectivity and the deviation from the global Haar-random unitary to realize a speed-up in simulating the whole sampling process17,18. In fact, with limited connectivity in the quantum device, the speed-up of the corresponding classical simulation can be exponential18.

The most time-consuming part of simulating the GBS process with limited connectivity is to calculate the loop Hafnian of banded matrices. A classical algorithm to calculate the loop Hafnian of a banded \(n\times n\) symmetric matrix with bandwidth w in time \(O\left( nw 4^w\right)\) is given in Ref.17. Later, an algorithm that takes time \(O\left( nw^2 2^w\right)\) is given in Ref.18. Here we present a classical algorithm to calculate the loop Hafnian of a banded \(n\times n\) matrix with bandwidth w in time \(O\left( nw 2^w\right)\). We also show that this algorithm can be used to calculate the loop Hafnian of sparse matrices.

Our algorithm reduces the time needed for classically simulating the GBS process with limited connectivity. This is helpful in clarifying how limited connectivity affects the computational complexity of GBS and tightening the boundary of quantum advantage in the GBS problem.

This article is organized as follows. In the “Overview of Gaussian boson sampling and limited connectivity” section, we review the background used later. In the “Simulate the sampling process with limited connectivity” section, we give our improved classical algorithm for calculating the loop Hafnian of banded matrices. Finally, we summarize in the “Summary” section.

Overview of Gaussian boson sampling and limited connectivity

Gaussian boson sampling protocol

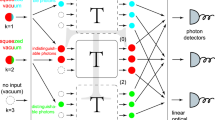

In the GBS protocol, K single-mode squeezed states (SMSSs) are injected into an M-mode passive linear optical network and detected in each output mode by a photon number resolving detector. The detected photon number of each photon number resolving detector forms an output sample which can be denoted as \({\bar{n}}=n_1 n_2 \ldots n_M\). The schematic setup of Gaussian boson sampling is shown in Fig. 1.

Figure 1. A schematic setup of Gaussian boson sampling. In this example, \(K=8\) single-mode squeezed states are injected into a passive linear optical network with \(M=10\) optical modes. Then photon number resolving detectors detect the photon number in each output mode. An output pattern \({\bar{n}}=n_1 n_2\ldots n_M\) is generated according to the detected results.

Before detection, the output quantum state of the passive linear optical network is a Gaussian quantum state20,21,22,23. A Gaussian state is fully determined by its covariance matrix and displacement vector. Denote operator vector \({\hat{\xi }} = ({\hat{a}}_1^\dagger ,\dots ,{\hat{a}}_M^\dagger ,{\hat{a}}_1,\dots ,{\hat{a}}_M)^{T}\), where \({\hat{a}}_i^\dagger\) and \({\hat{a}}_i\) are the creation and annihilation operators in the ith (\(i\in \left\{ 1,2,\dots ,M\right\}\)) optical mode, respectively. The covariance matrix \(\sigma\) and displacement vector \({\bar{r}}\) of the Gaussian state \({\hat{\rho }}\) is defined as

where

Notice that \(({\hat{\xi }}-{\bar{r}}) ({\hat{\xi }}-{\bar{r}})^{\dagger }\) is the outer product of column vector \(({\hat{\xi }}-{\bar{r}})\) and row vector \(({\hat{\xi }}-{\bar{r}})^{\dagger }\) and \(({\hat{\xi }}-{\bar{r}}) ({\hat{\xi }}-{\bar{r}})^{\dagger } \ne (({\hat{\xi }}-{\bar{r}}) ({\hat{\xi }}-{\bar{r}})^{\dagger })^{\dagger }\) as the operators in the vector \({\hat{\xi }}\) might not commute with each other. As an example, assume \(M=1\) so that \({\hat{\xi }}=({\hat{a}}_1^\dagger ,{\hat{a}}_1)^T\), we have

But,

Usually, a matrix (say matrix A) is used to calculate the output probability distribution of a GBS process2,3,14. Denote \(X_{2M} = \left( \begin{array}{cc} 0 &{}\quad I_M \\ I_M &{}\quad 0 \end{array}\right)\), and let \(I_{2M}\) (or \(I_M\)) be the identity matrix of dimension 2M (or M). The matrix A is fully determined by the output Gaussian state as follows:

where

The probability of generating an output sample \({\bar{n}}\) is

where \({\text{lhaf}}(A)=\sum _{M \in {\text{SPM}}(n)} \prod _{(i, j) \in M} A_{i, j}\) is the loop Hafnian of matrix A, and \({\text{SPM}}(n)\) is the set of single-pair matchings12, i.e., the set of perfect matchings of the complete graph with loops on n vertices. The matrix \(A_{{\bar{n}}}\) is obtained from A according to \({\bar{n}}\) as follows14,24: for every \(i = 1,\dots , M\), if \(n_i = 0\), rows and columns i and \(i+M\) are deleted from A; if \(n_i \ne 0\), rows and columns i and \(i+M\) are repeated \(n_i\) times.
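As a reference point for the definition above, the following is a minimal brute-force sketch of the loop Hafnian and of the row and column repetition that builds \(A_{{\bar{n}}}\). The function names `loop_hafnian` and `reduce_matrix` are illustrative choices, not taken from any library, and the sketch uses only the definition stated in the text (it runs in exponential time and is meant for checking small cases only).

```python
def loop_hafnian(A):
    """Brute-force loop Hafnian of a symmetric matrix A (list of lists).

    Every index is either left alone (a loop, contributing A[i][i]) or
    paired with exactly one other index (contributing A[i][j]).
    """
    def rec(free):
        if not free:
            return 1
        i, rest = free[0], free[1:]
        total = A[i][i] * rec(rest)          # index i forms a loop
        for pos, j in enumerate(rest):       # index i is paired with j
            total += A[i][j] * rec(rest[:pos] + rest[pos + 1:])
        return total

    return rec(tuple(range(len(A))))


def reduce_matrix(A, pattern):
    """Build A_nbar from a 2M x 2M matrix A and a pattern (n_1, ..., n_M).

    Rows and columns i and i+M are dropped when n_i = 0 and repeated
    n_i times otherwise (0-based indices are used here).
    """
    M = len(pattern)
    idx = [i for i in range(M) for _ in range(pattern[i])]
    idx += [i + M for i in range(M) for _ in range(pattern[i])]
    return [[A[r][c] for c in idx] for r in idx]


if __name__ == "__main__":
    # For [[a, b], [b, c]] the loop Hafnian is b + a*c.
    print(loop_hafnian([[2, 3], [3, 5]]))
```

For an all-ones matrix the result counts the matchings themselves, which gives a convenient sanity check on small sizes.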

Continuous-variables quantum systems

If a Gaussian state is input into a linear optical network, the output quantum state is also a Gaussian state. Denote the unitary operator corresponding to the passive linear optical network as \({\hat{U}}\). A property of the passive linear optical network is:

where

The output Gaussian state and the input Gaussian state are related by

where \(\sigma _\text{in}\) and \({\bar{r}}_{\text{in}}\) are the covariance matrix and displacement vector of the input Gaussian state. The SMSS in input mode i has squeezing strength \(s_i\) and phase \(\phi _i\). For simplicity and without loss of generality, assume that \(\phi _i = 0\) for \(i = 1,\dots ,M\). The covariance matrix of the input Gaussian state is

The matrix \({\tilde{A}}\) is thus \({\tilde{A}} = {\tilde{B}} \bigoplus {\tilde{B}}^*\), where

If a part of the optical modes in the Gaussian state are measured with photon number resolving detectors and the outcome is not all-zero, the remaining quantum state will be a non-Gaussian state25,26. An M-mode coherent state20,21,22,27 is denoted as \(| \vec {\alpha } \rangle\) (or \(|\vec {\beta }\rangle\)), where \(\vec {\alpha } = \left( \alpha _{1},\dots ,\alpha _{M}\right) ^{\mathrm{T}}\) (or \(\vec {\beta } = \left( \beta _{1},\ldots ,\beta _{M}\right) ^{\mathrm{T}}\)) and \(\alpha _i\) (or \(\beta _{i}\)) for \(i=1,\dots ,M\) are complex variables.

A Gaussian state can be represented in the following form:

where

For our convenience, define the permutation matrix P, such that \({\tilde{\gamma }} = P {\tilde{\lambda }} = \left( \beta _{1}^*,\alpha _{1},\beta _{2}^*,\alpha _{2},\dots ,\beta _{M}^*, \alpha _{M}\right) ^{\mathrm{T}}\). Let \({\tilde{R}} = P^\mathrm{T}{\tilde{A}}P\), \({\tilde{l}} = P {\tilde{y}}\). We then have

Suppose the first N modes of an M-mode Gaussian state are measured and a sample pattern \({\bar{n}}=n_1 n_2 \dots n_N\) is observed. Denote that

where \({\tilde{R}}_{dd}\) is a \(2N\times 2N\) matrix corresponding to modes 1 to N, \({\tilde{R}}_{hh}\) is a \(2(M-N) \times 2(M-N)\) matrix corresponding to modes \(N+1\) to M, and \({\tilde{R}}_{dh}\) is a \(2N \times 2(M-N)\) matrix representing the correlation between modes 1 to N and modes \(N+1\) to M. The remaining non-Gaussian state is26

where

\(|\vec {\beta }_{(N)}\rangle = |\beta _{N+1},\beta _{N+2}\dots ,\beta _{M}\rangle\) and \(|\vec {\alpha }_{(N)}\rangle = |\alpha _{N+1},\alpha _{N+2}\dots ,\alpha _{M}\rangle\) are coherent states, and

If all optical modes of the Gaussian state are measured (N = M), then Eq. (17) gives the probability of obtaining the output sample \({\bar{n}}\). In this case, the result of Eq. (17) coincides with that of Eq. (7).

Limited connectivity

As specified in the Gaussian boson sampling protocol2,3, the transform matrix U of the passive linear optical network should be randomly chosen from the Haar measure. The circuit depth needed to realize an arbitrary unitary transform in a passive linear optical network is O(M), where M is the number of optical modes19,28,29,30. However, due to the photon loss, the circuit depth of the optical network might not be deep enough to meet the requirements of the full connectivity and the global Haar-random unitary19. This is because photon loss rate \(\varepsilon\) will increase exponentially with the depth of the optical network, i.e., \(\varepsilon = \varepsilon _0^D\), where \(\varepsilon _0\) is the photon loss of each layer of the optical network. If the photon loss rate is too high, the quantum advantage result of GBS experiments will be destroyed16,31,32.

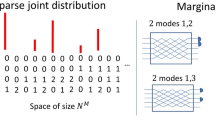

The shallow circuit depth leads to an interferometer with limited connectivity17,18,19. As an example, consider the case where the beam splitters in the optical network are local, meaning that they act on neighbouring modes, assuming a 2D structure. This is shown in Fig. 1. If \(D<M\), the transform matrix U of the passive linear optical network will have a banded structure17, i.e.,

and

where \(w_U= D\) is the bandwidth of the transform matrix U. An example of a matrix with banded structure is shown in Fig. 2. According to Eqs. (10) and (11), the covariance matrix \(\sigma\) of the output Gaussian state will have a banded structure. Thus, if beam splitters are local, the circuit depth D must be no less than M/2 to reach full connectivity. Note that, according to Eq. (12), matrix \({\tilde{B}}\) [and A in Eq. (7)] has bandwidth

Recently, a scheme known as “high-dimensional GBS” has been proposed4. This scheme suggests that by interfering non-adjacent optical modes, the connectivity can be improved while maintaining a relatively shallow circuit depth. However, due to the limited circuit depth, the transformation matrix in this scheme cannot represent an arbitrary unitary matrix. Consequently, the transformation matrix deviates from the global Haar-random unitary. To address this, the scheme introduces a local Haar-random unitary assumption, which says that the transformation matrix corresponding to each individual beam splitter is randomly selected from the Haar measure. Under this local Haar-random unitary assumption, Ref.18 demonstrates that when the circuit depth is too shallow, the high-dimensional GBS process can be approximated by a GBS process with limited connectivity with a small error.

As a result of the limited connectivity and the deviation from the Haar measure, a speed-up can be realized in simulating the corresponding GBS process17,18. The speed-up is attributed to the faster computation of the loop Hafnian for banded matrices compared with general matrices.
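The banded structure discussed in this section can be checked numerically on a small example. The sketch below assumes a brick-wall layout of nearest-neighbour beam splitters with arbitrarily drawn angles (not Haar-distributed), which is only one possible realization of a local shallow network. All function names are hypothetical. It uses the general fact that a product of banded matrices has bandwidth at most the sum of the individual bandwidths, so D layers give a bandwidth of at most D for U and at most 2D for \(U (\bigoplus _i \tanh s_i) U^T\).

```python
import cmath
import math
import random


def beam_splitter_layer(M, offset, rng):
    """One layer of 2x2 unitaries on neighbouring modes (offset 0 or 1)."""
    L = [[1 + 0j if i == j else 0j for j in range(M)] for i in range(M)]
    for a in range(offset, M - 1, 2):
        theta = rng.uniform(0, math.pi / 2)   # arbitrary mixing angle
        phi = rng.uniform(0, 2 * math.pi)     # arbitrary phase
        c, s = math.cos(theta), math.sin(theta)
        L[a][a], L[a][a + 1] = c, -cmath.exp(-1j * phi) * s
        L[a + 1][a], L[a + 1][a + 1] = cmath.exp(1j * phi) * s, c
    return L


def matmul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def bandwidth(X, tol=1e-12):
    """Largest |i - j| over entries whose magnitude exceeds tol."""
    n = len(X)
    return max((abs(i - j) for i in range(n) for j in range(n)
                if abs(X[i][j]) > tol), default=0)


def shallow_network(M, D, seed=0):
    """Transfer matrix of D alternating layers acting on M modes."""
    rng = random.Random(seed)
    U = [[1 + 0j if i == j else 0j for j in range(M)] for i in range(M)]
    for d in range(D):
        U = matmul(beam_splitter_layer(M, d % 2, rng), U)
    return U


def b_tilde(U, squeezing):
    """U diag(tanh s_i) U^T for squeezing strengths s_i."""
    n = len(U)
    return [[sum(U[i][k] * math.tanh(squeezing[k]) * U[j][k]
                 for k in range(n)) for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    U = shallow_network(M=10, D=3)
    B = b_tilde(U, [0.5] * 10)
    print(bandwidth(U), bandwidth(B))
```

Pure-Python matrices keep the sketch dependency-free; for realistic sizes a numerical array library would be the natural choice.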

Classical simulation of GBS

To date, the most efficient classical simulation method for simulating a general GBS process has been presented in Ref.14. This classical algorithm, which samples from an M-mode Gaussian state \({\hat{\rho }}\), operates as follows.

1. If \({\hat{\rho }}\) is a mixed state, it can be decomposed as a classical mixture of pure Gaussian states. We randomly select a pure displaced Gaussian state based on this classical mixture. This can be done in polynomial time14,21. Its covariance matrix and displacement vector are denoted as \(\sigma\) and \({\bar{r}}\), respectively.

2. If \({\hat{\rho }}\) is a pure state, denote its covariance matrix and displacement vector as \(\sigma\) and \({\bar{r}}\), respectively.

For \(k=1,\dots ,M\):

3. If \(k=1\), draw a sample \({\bar{\alpha }}^1 = \left( \alpha _{2},\dots ,\alpha _{M}\right)\) from the probability distribution

$$\begin{aligned} p({\bar{\alpha }}^1) = \frac{1}{\pi ^{M-1}}\left\langle {\bar{\alpha }}^1 \left| {\hat{\rho }}_{d}\right| {\bar{\alpha }}^1 \right\rangle , \end{aligned} \qquad (23)$$

where \({\hat{\rho }}_{d} = {\text{Tr}}_{1} \left[ {\hat{\rho }}\right]\), and \({\text{Tr}}_{1}\left[ {\cdot }\right]\) is the partial trace over mode 1. This process is equivalent to measuring modes 2 to M by heterodyne measurements.

4. Let \(\bar{\alpha}^k = \left(\alpha_{k+1},\dots,\alpha_{M}\right)\). Provided that the heterodyne measurements in modes \(k+1\) to M give \(\bar{\alpha}^k\), compute the conditional covariance matrix \(\sigma _{1-k}^{({\bar{\alpha }}^k)}\) and displacement \({\bar{r}}_{1-k}^{({\bar{\alpha }}^k)}\) of the remaining Gaussian state. If \(k=M\), let \(\sigma _{1-k}^{({\bar{\alpha }}^k)} = \sigma\) and \({\bar{r}}_{1-k}^{({\bar{\alpha }}^k)}={\bar{r}}\). Notice that the conditional quantum state in modes \(1,\dots ,k\) is still Gaussian if modes \(k+1,\dots ,M\) are measured by heterodyne measurements21.

5. Given a cutoff \(N_{\text{max}}\), use Eq. (7) with \(\sigma _{1-k}^{({\bar{\alpha }}^k)}\) and \({\bar{r}}_{1-k}^{({\bar{\alpha }}^k)}\) to calculate \(p\left( n_1,\dots ,n_k\right)\) for \(n_k = 0,1,\dots ,N_{\text{max}}\).

6. Sample \(n_k\) from

$$\begin{aligned} p(n_k) = \frac{p\left( n_1,\dots ,n_k\right) }{p\left( n_1,\dots ,n_{k-1}\right) }. \end{aligned} \qquad (24)$$
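The mode-by-mode structure of steps 5 and 6 can be illustrated with a toy chain-rule sampler. This is a simplified sketch, not the full method of Ref.14: the heterodyne conditioning of steps 3 and 4 is omitted, and `joint_prob` is a hypothetical callable standing in for the evaluation of Eq. (7). The renormalization over the cutoff is an assumption made here so that the truncated conditional distribution sums to one.

```python
import random


def sample_pattern(joint_prob, num_modes, n_max, rng):
    """Draw (n_1, ..., n_M) one mode at a time via Eq. (24).

    joint_prob(prefix) must return p(n_1, ..., n_k) for a tuple prefix and
    is assumed to be positive for at least one value of n_k at each step.
    """
    pattern = ()
    p_prev = 1.0  # probability of the empty prefix
    for _ in range(num_modes):
        joint = [joint_prob(pattern + (n,)) for n in range(n_max + 1)]
        cond = [p / p_prev for p in joint]      # Eq. (24)
        r = rng.random() * sum(cond)            # renormalize over the cutoff
        acc, chosen = 0.0, n_max
        for n, c in enumerate(cond):
            acc += c
            if r < acc:
                chosen = n
                break
        pattern += (chosen,)
        p_prev = joint[chosen]
    return pattern


def toy_joint_prob(prefix, q=0.5):
    """Hypothetical stand-in for Eq. (7): independent geometric counts."""
    p = 1.0
    for n in prefix:
        p *= (1 - q) * q ** n
    return p


if __name__ == "__main__":
    print(sample_pattern(toy_joint_prob, num_modes=5, n_max=6,
                         rng=random.Random(1)))
```

Each mode requires \(N_{\text{max}}+1\) evaluations of the joint probability, which is why the cost of evaluating Eq. (7) dominates the simulation.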

The most time-consuming part of the above classical simulation method is calculating the probability \(p\left( n_1,\dots ,n_k\right)\) of a partial output pattern \(n_1,\dots ,n_k\). According to Eq. (7), we have

where \({\bar{r}}_{{\mathscr {A}}}^{({\mathscr {B}})}\), \((\sigma _Q)_{{\mathscr {A}}}^{({\mathscr {B}})}\) and \(A_{{\mathscr {A}}}^{({\mathscr {B}})}\) are the corresponding conditional displacement vector and matrices for the subsystem containing modes 1 to k (denoted as \({\mathscr {A}}\)) when modes \(k+1\) to M are measured by heterodyne measurements with outcome denoted as \({\mathscr {B}}\). \(A_{{\mathscr {A}}}^{({\mathscr {B}})}\) can be computed by the following equation:

Note that if the Gaussian state \({\hat{\rho }}\) is a pure state, the conditional Gaussian state is still a pure state. So, we have \({\tilde{A}}_{{\mathscr {A}}}^{({\mathscr {B}})} = {\tilde{B}}_{{\mathscr {A}}} \bigoplus {\tilde{B}}_{{\mathscr {A}}}^*\). As pointed out in Ref.18, we have \({\tilde{B}}_{{\mathscr {A}}} = [U (\bigoplus \nolimits _{i} \tanh s_i ) U^T ]_{{\mathscr {A}}}\). This shows that \({\tilde{B}}_{{\mathscr {A}}}\) has the same banded structure as \({\tilde{B}}\). A classical algorithm to calculate the loop Hafnian of a banded matrix is thus needed for the classical simulation of GBS with limited connectivity.

Simulate the sampling process with limited connectivity

Loop Hafnian algorithm for banded matrices

An algorithm to calculate the loop Hafnian of an \(n \times n\) symmetric matrix with bandwidth w in time \(O\left( nw 4^w\right)\) is given in Ref.17. Then, a faster algorithm with time complexity \(O\left( n w_t^2 2^{w_t}\right)\) to calculate the loop Hafnian of an \(n\times n\) symmetric matrix is proposed in Ref.18, where \(w_t\) is the treewidth of the graph corresponding to the matrix33. For a banded matrix, the smallest treewidth \(w_t\) is equal to the bandwidth w, i.e., \(w = w_t\). So the time complexity for this algorithm to calculate the loop Hafnian of an \(n\times n\) symmetric matrix with bandwidth w is \(O\left( n w^2 2^w\right)\). Here we show that the loop Hafnian of an \(n \times n\) symmetric matrix with bandwidth w can be calculated in \(O\left( n w 2^w \right)\).
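To make the comparison of the three bounds concrete, the short sketch below evaluates the leading-order expressions for a chosen n and w. Constant factors hidden by the O-notation are ignored, so the numbers are only indicative, and the function name and dictionary keys are illustrative.

```python
def cost_estimates(n, w):
    """Leading-order operation counts for the three quoted bounds."""
    return {
        "ref17": n * w * 4 ** w,        # O(n w 4^w)
        "ref18": n * w ** 2 * 2 ** w,   # O(n w^2 2^w)
        "this_work": n * w * 2 ** w,    # O(n w 2^w)
    }


if __name__ == "__main__":
    c = cost_estimates(n=100, w=10)
    print(c["ref18"] // c["this_work"], c["ref17"] // c["this_work"])
```

Relative to the \(O(nw 2^w)\) bound, the two earlier bounds are larger by factors of w and \(2^w\), respectively, independent of n.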

Our algorithm to calculate the loop Hafnian for banded matrices is outlined as follows.

Algorithm. To calculate the loop Hafnian of an \(n\times n\) symmetric matrix B with bandwidth w:

1. Let \(C_{\emptyset }^0 = 1\).

For \(t=1,\dots ,n\):

2. Let \(t_w = \min \left( t+w,n\right)\), and \(P(\{t+1,\dots ,t_w\})\) be the set of all subsets of \(\left\{ t+1,\dots ,t_w\right\}\).

3. For every \(Z^t \in P(\{t+1,\dots ,t_w\})\) satisfying \(Z^t \ne \emptyset\) and \(\left| Z^t\right| \le \min \left( t,w\right)\), let

$$\begin{aligned} C^t_{Z^t} = \sum _{x \in Z^t } B_{t,x} C^{t-1}_{Z^t \backslash \{x\}} + C^{t-1}_{Z^t \cup \left\{ t\right\} } + B_{t,t} C^{t-1}_{Z^t}, \end{aligned} \qquad (27)$$

and if \(t_w \in Z^t\), then

$$\begin{aligned} C^t_{Z^t} = B_{t,t_w} C^{t-1}_{Z^t \backslash \{t_w\}}. \end{aligned} \qquad (28)$$

During the above iterations, if \(C^{t-1}_{\{\dots \}}\) is not given in the previous steps, it is treated as 0.

4. Let

$$\begin{aligned} C^t_\emptyset = B_{t,t}C^{t-1}_\emptyset + C^{t-1}_{\{t\}}. \end{aligned} \qquad (29)$$

The loop Hafnian of matrix B is obtained in the final step \(t = n\) by

$$\begin{aligned} {\text{lhaf}}(B) = C^{n}_{\emptyset }. \end{aligned} \qquad (30)$$

An example of calculating the loop Hafnian of a \(4 \times 4\) matrix with bandwidth \(w = 1\) using our algorithm can be found in Appendix A. Note that our algorithm for calculating the loop Hafnian of matrices with banded structure can be easily extended to cases where the matrix is sparse (but not banded). A description of this can be found in Appendix B. The time complexity of the above algorithm is \(O(nw 2^{w})\), as shown in Theorem 1.

Theorem 1

Let B be an \(n\times n\) symmetric matrix with bandwidth w. Then its loop Hafnian can be calculated in \(O(nw 2^{w})\).

Proof

As shown in our algorithm, the number of coefficients (\(C^t_{Z^t}\), \(C^t_\emptyset\) and \(C^t_{\{t\}}\)) that need to be calculated for each \(t \in \{1,\dots ,n\}\) is at most \(2^{w}\). As shown in Eqs. (27)–(30), in each iteration, we need O(w) steps to calculate each coefficient \(C^{t}_{Z^t}\). So, for each t, the algorithm takes \(O(w 2^{w})\) steps. The overall cost is thus \(O\left( n w 2^{w} \right)\). \(\square\)
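The recurrence of steps 1 to 4 can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions rather than a reference implementation: coefficients are stored in a dictionary keyed by frozensets of 1-based vertex labels, only the general update of Eq. (27) is coded (with Eq. (29) as its empty-set case), and missing coefficients are read as 0, which reproduces Eq. (28) whenever \(t_w = t + w\). Entries of B outside the band are assumed to be zero and are never read.

```python
from itertools import combinations


def lhaf_banded(B, w):
    """Loop Hafnian of a symmetric matrix B (list of lists) with bandwidth w."""
    n = len(B)
    # C maps a set Z of labels > t (already paired with a label <= t)
    # to the coefficient C^t_Z. Step 1: C^0_{emptyset} = 1.
    C = {frozenset(): 1}
    for t in range(1, n + 1):
        t_w = min(t + w, n)
        window = range(t + 1, t_w + 1)
        new_C = {}
        for size in range(min(t, w) + 1):
            for Z in map(frozenset, combinations(window, size)):
                # vertex t was already paired with an earlier vertex
                val = C.get(Z | {t}, 0)
                # vertex t forms a loop
                val += B[t - 1][t - 1] * C.get(Z, 0)
                # vertex t is paired with a later vertex x in Z
                for x in Z:
                    val += B[t - 1][x - 1] * C.get(Z - {x}, 0)
                if val != 0:
                    new_C[Z] = val
        C = new_C
    # Eq. (30): the loop Hafnian is the empty-set coefficient at t = n.
    return C.get(frozenset(), 0)


if __name__ == "__main__":
    ones = [[1] * 4 for _ in range(4)]
    # With w = 1 only the tridiagonal part of the matrix is read.
    print(lhaf_banded(ones, 1))
```

At each t the dictionary holds at most \(2^w\) entries and each entry costs O(w) lookups, which mirrors the counting argument in the proof above.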

Combining our algorithm with the classical sampling techniques described in Ref.14, as summarized in “Classical simulation of GBS” section, we get the time complexity for classically simulating a limited connected GBS process as stated in the following Theorem 2.

Theorem 2

A limited connected GBS process with a bandwidth w can be simulated in \(O(M nw2^w)\) time, where M represents the number of optical modes and n denotes the maximum total photon number of the output samples.

Proof

The classical simulation process is similar to that in Ref.14, as summarized in “Classical simulation of GBS” section. In this process, we sequentially sample \(n_k\) for \(k=1,\dots ,M\) according to Eq. (24). As shown in Theorem 1, the computation of Eq. (24) takes at most \(O\left( n w 2^w \right)\) steps, assuming that B has a bandwidth w, where \(n = \sum _{i=1}^{M} n_i\). This is scaled up by at most the total number of modes M. Thus the time complexity for simulating such a GBS process is \(O\left( M n w 2^w \right)\). \(\square\)

Validity of the algorithm

By sequentially computing the states (usually non-Gaussian) of the remaining \(M-k\) modes with the measurement outcome of modes 1 to k (\(k=1,\dots ,M\)) in photon number basis, we can demonstrate the validity of the algorithm introduced in “Loop Hafnian algorithm for banded matrices” section. Recalling matrix \({\tilde{R}}\) defined in Eq. (15), we denote the bandwidth of matrix \({\tilde{R}}\) by w. According to the definition of \({\tilde{R}}\) and \({\tilde{l}}\), we know that

where

Assuming that the measurement results are \(n_i = 1\) for every \(i = 1, \dots , M\), we obtain

Although we make the assumption that R corresponds to a Gaussian state, the subsequent proof remains valid in the more general case as discussed in Appendix C.

For convenience, define \({\tilde{\gamma }}_{d_i} = (\beta _i^*,\alpha _i)^\mathrm{T}\), \({\tilde{\gamma }}_{h_i} = (\beta _{i+1}^*,\alpha _{i+1},\dots ,\beta _{M}^*,\alpha _{M})^\mathrm{T}\), \({\tilde{R}}_{dd}^i\) as a \(2\times 2\) matrix corresponding to mode i, \({\tilde{R}}_{hh}^i\) as a \(2(M-i) \times 2(M-i)\) matrix corresponding to modes \(i+1\) to M, and \({\tilde{R}}_{dh}^i\) as a \(2 \times 2(M-i)\) matrix representing the correlation between mode i and modes \(i+1\) to M.

According to Eq. (17), after measuring the mode 1 in photon number basis, the remaining non-Gaussian quantum state is:

We then have

where \(D^2_x\) is the coefficient for \({\tilde{\gamma }}_x^2\).

Next, we measure mode 2. The remaining non-Gaussian quantum state is:

We have

where \(D^4_{x_1,x_2,x_3}\) is the coefficient for \({\tilde{\gamma }}_{x_1}^2 {\tilde{\gamma }}_{x_2} {\tilde{\gamma }}_{x_3}\).

Repeating this procedure to measure mode 1 to mode M, we eventually find that

Thus we prove

This demonstrates the validity of the algorithm given in “Loop Hafnian algorithm for banded matrices” section.

Intuitively, as shown in the previous derivation, after measuring the first t modes of the output Gaussian state, the state of the remaining modes is:

where \(\mathrm{Poly}^t \left( R,{\tilde{\gamma }}_{h_t} \right)\) represents the sum of polynomial terms formed by complex variables in \({\tilde{\gamma }}_{h_t}\). Specifically, we have

First we consider the case where \(t<M\). Terms \(\{\prod _{x \in Z^{2t}} {\tilde{\gamma }}_x\}\) with different \(Z^{2t}\) influence the constant term \(C^{2M}_\emptyset\) in the subsequent computation. On the other hand, high-order terms containing \(\gamma _x^k\) with \(k\ge 2\) do not affect the final result, as those terms vanish after the partial derivatives are taken. Consequently, the iteration of \(C^t_{Z^t}\) in step 3 of our algorithm is required from \(t=1\) to \(t=2M\) to determine the value of \(\mathrm{lhaf}(R) = C^{2M}_\emptyset\). When all modes of the output state are measured, we have \(t=M\) in Eq. (41), and hence \(\mathrm{Poly}^{M} (R,{\tilde{\gamma }}_{h_M})\) does not contain any complex variables, ensuring that the constant term \(C^{2M}_\emptyset\) equals \(\mathrm{lhaf}(R)\).

Summary

To verify quantum advantage, it is vital to evaluate the concrete cost of classically simulating the corresponding quantum process. Here we present an algorithm to speed up the classical simulation of GBS with limited connectivity. The speed-up arises from the faster calculation of the loop Hafnian of banded (or sparse) matrices. This algorithm runs in \(O\left( nw2^w\right)\) time for \(n\times n\) symmetric matrices with bandwidth w. This result improves on the prior state-of-the-art result of \(O\left( nw^2 2^w\right)\)18.

This classical algorithm helps clarify how limited connectivity reduces the computational resources required for classically simulating GBS processes, thereby tightening the boundary for achieving quantum advantage in the GBS problem.

Data availability

All data generated or analysed during this study are included in this published article.

References

Aaronson, S. & Arkhipov, A. The computational complexity of linear optics. In Proceedings of the Forty-Third Annual ACM Symposium on Theory of Computing, STOC ’11, 333–342 (Association for Computing Machinery, New York, 2011).

Hamilton, C. S. et al. Gaussian boson sampling. Phys. Rev. Lett. 119, 170501 (2017).

Kruse, R. et al. Detailed study of Gaussian boson sampling. Phys. Rev. A 100, 032326 (2019).

Deshpande, A. et al. Quantum computational advantage via high-dimensional Gaussian boson sampling. Sci. Adv. 8, eabi7894 (2022).

Zhong, H.-S. et al. Quantum computational advantage using photons. Science 370, 1460 (2020).

Arrazola, J. M. et al. Quantum circuits with many photons on a programmable nanophotonic chip. Nature 591, 54–60 (2021).

Zhong, H.-S. et al. Phase-programmable Gaussian boson sampling using stimulated squeezed light. Phys. Rev. Lett. 127, 180502 (2021).

Madsen, L. S. et al. Quantum computational advantage with a programmable photonic processor. Nature 606, 75–81 (2022).

Thekkadath, G. et al. Experimental demonstration of Gaussian boson sampling with displacement. PRX Quantum 3, 020336 (2022).

Sempere-Llagostera, S., Patel, R., Walmsley, I. & Kolthammer, W. Experimentally finding dense subgraphs using a time-bin encoded Gaussian boson sampling device. Phys. Rev. X 12, 031045 (2022).

Deng, Y.-H. et al. Solving graph problems using Gaussian boson sampling. Phys. Rev. Lett. 130, 190601 (2023).

Björklund, A., Gupt, B. & Quesada, N. A faster Hafnian formula for complex matrices and its benchmarking on a supercomputer. ACM J. Exp. Algorithm. 24, 1–17 (2019).

Bulmer, J. F. F. et al. The boundary for quantum advantage in Gaussian boson sampling. Sci. Adv. 8, eabl9236 (2022).

Quesada, N. et al. Quadratic speed-up for simulating Gaussian boson sampling. PRX Quantum 3, 010306 (2022).

Brod, D. J. et al. Photonic implementation of boson sampling: A review. Adv. Photon. 1, 034001 (2019).

Qi, H., Brod, D. J., Quesada, N. & García-Patrón, R. Regimes of classical simulability for noisy Gaussian boson sampling. Phys. Rev. Lett. 124, 100502 (2020).

Qi, H. et al. Efficient sampling from shallow Gaussian quantum-optical circuits with local interactions. Phys. Rev. A 105, 052412 (2022).

Oh, C., Lim, Y., Fefferman, B. & Jiang, L. Classical simulation of boson sampling based on graph structure. Phys. Rev. Lett. 128, 190501 (2022).

Russell, N. J., Chakhmakhchyan, L., O’Brien, J. L. & Laing, A. Direct dialling of Haar random unitary matrices. New J. Phys. 19, 033007 (2017).

Wang, X., Hiroshima, T., Tomita, A. & Hayashi, M. Quantum information with Gaussian states. Phys. Rep. 448, 1–111 (2007).

Serafini, A. Quantum Continuous Variables: A Primer of Theoretical Methods (CRC Press, Boca Raton, 2017).

Weedbrook, C. et al. Gaussian quantum information. Rev. Mod. Phys. 84, 621–669 (2012).

Simon, R., Mukunda, N. & Dutta, B. Quantum-noise matrix for multimode systems: U(n) invariance, squeezing, and normal forms. Phys. Rev. A 49, 1567–1583 (1994).

Quesada, N. & Arrazola, J. M. Exact simulation of Gaussian boson sampling in polynomial space and exponential time. Phys. Rev. Res. 2, 023005 (2020).

Quesada, N. et al. Simulating realistic non-Gaussian state preparation. Phys. Rev. A 100, 022341 (2019).

Su, D., Myers, C. R. & Sabapathy, K. K. Conversion of Gaussian states to non-Gaussian states using photon-number-resolving detectors. Phys. Rev. A 100, 052301 (2019).

Scully, M. O. & Zubairy, M. S. Quantum Optics 1st edn. (Cambridge University Press, Cambridge, 1997).

Reck, M., Zeilinger, A., Bernstein, H. J. & Bertani, P. Experimental realization of any discrete unitary operator. Phys. Rev. Lett. 73, 58–61 (1994).

Clements, W. R., Humphreys, P. C., Metcalf, B. J., Kolthammer, W. S. & Walmsley, I. A. Optimal design for universal multiport interferometers. Optica 3, 1460 (2016).

Go, B., Oh, C., Jiang, L. & Jeong, H. Exploring Shallow-Depth Boson Sampling: Towards Scalable Quantum Supremacy. arXiv:2306.10671 (2023).

Martínez-Cifuentes, J., Fonseca-Romero, K. M. & Quesada, N. Classical models may be a better explanation of the Jiuzhang 1.0 Gaussian boson sampler than its targeted squeezed light model. Quantum 7, 1076 (2023).

Oh, C., Liu, M., Alexeev, Y., Fefferman, B. & Jiang, L. Tensor Network Algorithm for Simulating Experimental Gaussian Boson Sampling. arXiv:2306.03709 (2023).

Cifuentes, D. & Parrilo, P. A. An efficient tree decomposition method for permanents and mixed discriminants. Linear Algebra Appl. 493, 45–81 (2016).

Acknowledgements

We acknowledge financial support from the Taishan Scholars Program. We also acknowledge financial support in part from the National Natural Science Foundation of China, Grant Nos. 11974204, 12174215 and 12374473.

Author information

Contributions

All authors equally contributed to the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, TY., Wang, XB. Speeding up the classical simulation of Gaussian boson sampling with limited connectivity. Sci Rep 14, 7680 (2024). https://doi.org/10.1038/s41598-024-58136-1

DOI: https://doi.org/10.1038/s41598-024-58136-1
