Abstract

Hail is a highly destructive weather phenomenon, and its timely identification and forecasting are critical for protecting human lives and property. This research proposes a deep learning algorithm for detecting hail weather, named Dual Attention Module EfficientNet (DAM-EfficientNet), which is built on EfficientNet. DAM-EfficientNet was evaluated using FY-4A satellite imagery and real hail fall records. It achieved a hail-detection accuracy of 98.53%, a probability of detection of 97.92%, a false alarm rate of 2.08%, and a critical success index of 95.92%. DAM-EfficientNet outperforms existing deep learning models in accuracy and detection capability while requiring fewer parameters and less computation. These results validate the effectiveness and superior performance of DAM-EfficientNet in hail weather detection. Case studies indicate that the model can accurately forecast potential hail-affected areas and times. Overall, DAM-EfficientNet is effective in identifying hail weather and offers robust support for weather disaster alerts and prevention. The model could be further improved and applied to more data sources and meteorological parameters, increasing the precision and timeliness of hail forecasting to mitigate hail disasters and improve public safety.


Introduction

Hail is precipitation in the form of ice balls or lumps produced by intense convective updrafts, and it often accompanies heavy rainfall. It significantly harms humans, agricultural fields1,2,3, and structures. Research by Martín Battaglia et al.4 suggests that hail can cause leaf loss in plants, potentially decreasing yields by as much as 30%. In daily life, vehicles parked outdoors are vulnerable to hail, which damages their surfaces on impact. Roman Hohl et al.5 introduced a damage function for cars affected by hailstorms that relates damage to the kinetic energy of hail derived from radar. Bell et al.6 demonstrated the effectiveness of satellite imagery for automatically detecting hail damage to crops. Using NDVI and ground surface temperature data with threshold and anomaly detection methods, they distinguished hail damage across different growing seasons, providing solid support for precise monitoring of hail impacts in agriculture. Moreover, Heinz Jürgen Punge et al.7, in a 14-year satellite study, identified the key hail zones and high-incidence periods in South Africa, offering important data for long-term hail risk assessment. W.J.W. Botzen et al.8 pointed out the direct correlation between hail activity and agricultural losses, with projections indicating that hail-induced damage to open-air farming may increase by 25% to 50%. These instances underscore the pressing need to address hail both locally and globally. Meteorological researchers need fast, effective, and convenient methods to accurately detect hail in cloud formations, enabling timely prevention and minimizing human and financial losses.

In recent decades, there has been notable progress in methods for identifying hail, and many meteorologists have studied the features of hail clouds in depth. One prevalent approach relies on radar data; in particular, many researchers use radar differential reflectivity to determine the presence of hail. Pamela L. Heinselman and Alexander V. Ryzhkov9 streamlined the fuzzy logic hydrometeor classification algorithm (HCA). The HCA uses four radar variables: reflectivity, differential reflectivity, cross-correlation coefficient, and reflectivity texture. It discriminates between seven echo types, including rain and hail. Validation against polarimetric radar and ground observations showed that the HCA surpasses the Hail Detection Algorithm (HDA) in hail detection probability and accuracy. In early hail research, Thomas A. Seliga et al.10 reliably separated hail from rain areas with differential reflectivity radar, exploiting the distinct polarization scattering characteristics of the two. Among satellite-based studies, the work of Ralph Ferraro et al.11 stands out. They developed a probabilistic hail detection method using passive microwave measurements from satellites. By analyzing how hail affects the brightness temperatures observed by satellites, the method distinguishes moderate and severe hailstorms. It has been validated in selected cases across Europe, South America, and the USA, demonstrating its adaptability to various satellite sensors. Additionally, Pablo Melcón et al.12 have significantly improved the precision of hail monitoring. Through field validation in southern France, they demonstrated the efficacy of their hail detection tool (FHDT), based on Meteosat Second Generation (MSG) satellite data, particularly in reducing false positives. These examples underscore the viability and value of satellite data for hail detection.

Rapid advances in machine learning and neural networks have led to their growing application in hail detection research. David John Gagne et al.13 developed a probabilistic machine learning method for hail forecasting that addresses shortcomings of traditional techniques. The method uses object detection and tracking algorithms to pinpoint potential hailstorms and outperforms traditional approaches in the Critical Success Index (CSI). In related studies14,15,16, machine learning algorithms were used to identify hail from C-band radar echoes. Support Vector Machine, Decision Tree, and Naive Bayes models were compared. Their Probability of Detection (POD) and False Alarm Rate (FAR) values were 88.9% and 19.6%, 90.5% and 24.1%, and 67.8% and 10.6%, respectively. A higher POD was often accompanied by a higher FAR, in line with prior findings. Regarding deep learning, Melinda Pullman et al.17 demonstrated in 2019 the capability of deep learning networks to recognize meteorological phenomena, focusing on hailstorm detection. In 2021, Lan et al.18 built a dataset from 11 Doppler weather radars, regional automatic weather stations, lightning location data, and hail station records. They employed deep learning methods, specifically PredRNN++ and ResNet, for automated hail identification, achieving an accuracy of 93.81%. Furthermore, in 2023, Stavros Kolios19 used a Deep Neural Network (DNN) model based on multispectral infrared images from the Meteosat Third Generation (MTG) satellites of the European meteorological satellite system. The model was trained with extensive data from the European Severe Weather Database (ESWD) and yielded highly satisfactory results.
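The verification scores mentioned above (POD, FAR, CSI), together with overall accuracy, are all computed from a 2×2 contingency table of hits, misses, false alarms, and correct negatives. The sketch below assumes that FAR means the false alarm ratio, FP/(TP+FP), which is common in forecast verification. Some studies instead report FP/(FP+TN), so the definition should be checked for each source. The function name and the counts in the usage line are hypothetical.

```python
from typing import Dict


def verification_scores(tp: int, fn: int, fp: int, tn: int) -> Dict[str, float]:
    """Binary hail/no-hail verification scores from a 2x2 contingency table.

    tp: hits (hail observed and detected)
    fn: misses (hail observed but not detected)
    fp: false alarms (hail detected but not observed)
    tn: correct negatives
    """
    total = tp + fn + fp + tn
    return {
        "POD": tp / (tp + fn),        # probability of detection
        "FAR": fp / (tp + fp),        # false alarm ratio (assumed definition)
        "CSI": tp / (tp + fn + fp),   # critical success index
        "ACC": (tp + tn) / total,     # overall accuracy
    }


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    print(verification_scores(tp=40, fn=8, fp=5, tn=83))
```

CSI ignores correct negatives. For a rare event such as hail, this makes it harder to inflate than plain accuracy.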

When identifying hail with machine learning and artificial intelligence, the data must be carefully processed and filtered to ensure high quality and reliability. This minimizes inaccuracies caused by data errors. Kiel L. Ortega et al.20 remotely collected hail data from severe thunderstorms at high temporal and spatial resolution through the Severe Hail Analysis, Verification, and Evaluation (SHAVE) project. Such high-resolution reports improve the effectiveness of hail detection and warning methods. Scott F. Blair et al.21 compiled hail measurements from 73 severe thunderstorms, providing a benchmark for examining meteorological hail databases and hail information in weather warning products. Further research therefore needs to focus on improving data quality, so that studies of hail and other phenomena driven by intense updrafts become more accurate and reliable.

Improving early warning of hail requires a more efficient method for identifying hail clouds. This research therefore uses data from the FY-4A satellite22. We preprocess the raw FY-4A data to generate cloud images and develop a lightweight deep learning model, DAM-EfficientNet (Dual Attention Module EfficientNet). The model is designed for small-sample datasets and identifies the features of hail clouds in FY-4A satellite cloud imagery, opening a new avenue for effective hail detection.

The objective of this research is to provide a more efficient method for the timely detection and early warning of hail, reducing its potential impacts on human lives, agriculture, and transportation systems. Building on advances in meteorological science and artificial intelligence, this study combines FY-4A satellite data with the lightweight deep learning model DAM-EfficientNet. This combination is intended to overcome drawbacks of traditional methods and improve the accuracy of detecting hail clouds. Through detailed analysis of satellite cloud imagery, the research aims to provide a more reliable tool for forecasting and mitigating hail, minimizing the associated human and economic losses. We expect these contributions to advance hail monitoring and prevention in meteorology and to provide more effective safeguards for society.

Related works

Dataset

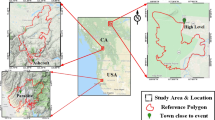

In this study, we used FY-4A satellite data from 2019 to 2022, together with ground hail observation records from Guizhou Province, for hail identification. FY-4A, China’s latest generation of geostationary meteorological satellites, was launched on December 11, 2016. It was initially positioned at 99.5\(^{\circ }\)E above the equator and drifted to 104.7\(^{\circ }\)E on May 25, 2017. FY-4A is the world’s first satellite to combine geostationary imaging with infrared hyperspectral atmospheric vertical sounding. Its primary payload is the Advanced Geostationary Radiation Imager (AGRI). AGRI uses a precise dual-scanning-mirror mechanism for accurate, flexible two-dimensional pointing and rapid scanning of designated areas. Its off-axis three-mirror primary optical system acquires Earth cloud images at high frequency in 14 spectral bands. An onboard blackbody provides high-frequency infrared calibration, ensuring the precision of the observations. The imager is used primarily for cloud imaging and for observing aerosols, snow, water vapor, and clouds of different phases and altitudes. Figure 1 shows a true-color image of China generated from the visible channels of FY-4A, which closely matches how the human eye perceives natural colors. China’s borders are highlighted in yellow, and Guizhou Province is outlined in red. Guizhou is the focus of the hail observations in this research, so its climatic patterns and geographical features are of central importance.
The satellite’s infrared observations provide information on cloud-top temperature and vertical structure, which is crucial for spotting cumulonimbus clouds likely to produce hail. Combining the true-color imagery with infrared data makes it possible to monitor and analyze hail-related weather patterns more effectively.

The ground hail records and the FY-4A satellite data were provided by two organizations: the Hubei Provincial Meteorological Service Center, associated with the corresponding author, and the Guizhou Provincial Office for Artificial Weather Influence, which participated in this study. The records were collected by trained observers and document the details and aftermath of each hail event. The maximum hailstone diameters ranged from 2 to 30 mm, with a mean of 8.3 mm. Approximately 76% of the samples had diameters of 5 mm or more. These detailed measurements provide a robust basis for our analysis and improve the reliability and applicability of the results. From these records, we constructed a dataset of hail events that gives the location, date, and exact start and end times of each occurrence. Guizhou’s distinctive terrain, with steep mountains, extensive hills, and broad basins, favors the development of intense convection. This makes local weather highly variable and facilitates hail formation. In this setting, we examined 172 hail events that occurred from 2019 to 2022 within 25\(^{\circ }\)08’N to 29\(^{\circ }\)04’N and 104\(^{\circ }\)66’E to 108\(^{\circ }\)92’E. The events covered 146 distinct regions of Guizhou Province. Analyzing these data helps characterize hail formation in the region and provides a scientific foundation for future forecasting and disaster prevention.

The Advanced Geostationary Radiation Imager (AGRI) aboard FY-4A has 14 observation channels. Channels 1 to 6 are visible, near-infrared, and short-wave infrared channels, and channels 7 to 14 are eight infrared channels. The infrared channels allow continuous observation both day and night. They have spatial resolutions of 2 to 4 kilometers. The satellite can scan the entire Chinese territory in five minutes, much faster than the traditional 15-minute full-disk scan. This rapid scanning is crucial for capturing subtle changes in hail-related features. The satellite’s performance parameters are listed in Table 1. The table covers visible and near-infrared, short-wave infrared, mid-wave infrared, water vapor, and long-wave infrared channels, each tailored to specific observational purposes. International studies23,24,25,26 have examined the wavelengths and channels of infrared imagers on geostationary satellites. Previous research27 indicates that infrared imagers with a central wavelength of 3.75\(\upmu\)m are suitable for cloud characteristic analysis, hotspot detection, and snow identification. In this study, Channel 7 was chosen as the primary observation channel. Because hail typically forms beneath cold cloud tops, this mid-wave infrared channel helps locate potential hail formation areas. Moreover, hail formation is often associated with the development of convective clouds. Channel 7 data therefore allow more accurate identification of cloud tops, improving hail localization. The 2-kilometer spatial resolution of this channel provides enough detail to distinguish cloud characteristics and potential hail formation areas across regions. This lays a solid foundation for a high-quality hail dataset.

True-color image of China. Note: This image was generated using Python 3.8 with the aid of Satpy 0.29, Cartopy 0.18, Matplotlib 3.3.1, and Geopandas 0.8.1. For more details, visit: https://bit.ly/486tfYA.

Data preprocessing

To keep the data consistent in time and space, this research carefully aligned satellite observations with ground records. FY-4A multi-channel scanning imaging data are timestamped in Coordinated Universal Time (UTC), whereas the ground hail records use Beijing Time (UTC+8). To synchronize the two datasets, 8 hours were added to the satellite timestamps to convert them to Beijing Time.
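Adding 8 hours is safest with timezone-aware arithmetic, because a late-evening UTC scan falls on the next calendar day in Beijing Time. Below is a minimal sketch. It assumes that satellite timestamps have already been parsed into Python `datetime` objects, and the function name is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Beijing Time is a fixed offset of UTC+8 (China does not observe daylight saving time).
BEIJING_TZ = timezone(timedelta(hours=8), name="UTC+8")


def utc_to_beijing(ts: datetime) -> datetime:
    """Convert a satellite timestamp from UTC to Beijing Time."""
    if ts.tzinfo is None:
        # Assumption: naive timestamps are UTC, as FY-4A timestamps are.
        ts = ts.replace(tzinfo=timezone.utc)
    return ts.astimezone(BEIJING_TZ)


if __name__ == "__main__":
    scan = datetime(2019, 6, 1, 22, 30)  # hypothetical scan time in UTC
    print(utc_to_beijing(scan).isoformat())  # 2019-06-02T06:30:00+08:00
```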

Because ground-based hail records are sparse, this study used the cloud features observed by the satellite during the hour preceding each hail event as the key inputs for training the deep learning model. FY-4A scans the China region completely every five minutes, giving about 12 scans per hour. Each satellite image was therefore matched to the ground observations by time point, ensuring precise correlation between the extracted features and the observed events.

During annotation, a strict spatiotemporal synchronization protocol ensured that satellite images were aligned with ground observation records. A spatial window with a 5-kilometer radius was used as the spatial tolerance for hail event identification. The timestamp and geographic coordinates of each satellite file were matched against the ground observations, ensuring that the cloud features captured by the satellite correspond closely to the actual hail sites. For example, suppose hail was observed at a location from 8:00 to 8:30 on a given date. The satellite data from the hour preceding 8:30 (7:30 to 8:30) were then labeled ‘hail present’. Likewise, data files were labeled ‘no hail’ on the basis of the no-hail times and locations provided by the corresponding author. This procedure ensures the scientific integrity and applicability of the annotated data used to train and validate the deep learning model.
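The ‘hail present’ rule combines a time window (the hour before the end of the ground report) with a 5 km distance check. The sketch below rests on several assumptions. Each ground report is stored as a record with a location and Beijing-Time start and end times. Distance is measured with the haversine great-circle formula on a spherical Earth of radius 6371 km. The window boundaries are inclusive. `HailRecord` and `is_hail_sample` are hypothetical names. Scans that fall outside every window are not automatically ‘no hail’, because those labels come from explicit no-hail records.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical approximation)


@dataclass
class HailRecord:
    """Hypothetical container for one ground hail report (Beijing Time)."""
    lat: float
    lon: float
    start: datetime
    end: datetime


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_hail_sample(scan_time: datetime, lat: float, lon: float, rec: HailRecord,
                   radius_km: float = 5.0, lead: timedelta = timedelta(hours=1)) -> bool:
    """True if the scan lies in the hour before the report ends and within radius_km."""
    in_window = rec.end - lead <= scan_time <= rec.end
    nearby = haversine_km(lat, lon, rec.lat, rec.lon) <= radius_km
    return in_window and nearby


if __name__ == "__main__":
    rec = HailRecord(26.5, 106.7, datetime(2020, 5, 1, 8, 0), datetime(2020, 5, 1, 8, 30))
    print(is_hail_sample(datetime(2020, 5, 1, 7, 45), 26.5, 106.7, rec))  # True
    print(is_hail_sample(datetime(2020, 5, 1, 7, 25), 26.5, 106.7, rec))  # False: too early
```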

Next, images of hail occurrence areas were extracted using the satellite files, geographic coordinates, and ground records. Geographic coordinates were converted into pixel coordinates, and the surrounding area was cropped to 256×256 pixels based on a predetermined offset. The collection range was then limited to within 4 kilometers. The corresponding geographic coordinates were converted into a two-dimensional array, forming a 2D image suitable for model learning. Each image was labeled according to its category (‘hail present’ or ‘no hail’). To give the dataset a consistent format, every image was resized to 224×224 pixels. Each image was also inspected for clarity and feature distinctiveness to ensure a high-quality sample set.
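The crop-and-resize steps can be sketched as follows, under one loud assumption. The sketch treats the Channel 7 field as already resampled onto a regular latitude/longitude grid with a known upper-left corner and grid spacing. Raw FY-4A files use the satellite’s geostationary projection, which needs its own navigation formulas. The grid parameters, the clamping at image borders, and the nearest-neighbour resize are illustrative choices, not the paper’s documented settings. All function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]  # indexed as [row][col]


def latlon_to_pixel(lat: float, lon: float, lat0: float, lon0: float,
                    res_deg: float) -> Tuple[int, int]:
    """Row/col on a regular lat/lon grid whose upper-left corner is (lat0, lon0)."""
    row = int(round((lat0 - lat) / res_deg))  # rows increase southward
    col = int(round((lon - lon0) / res_deg))  # columns increase eastward
    return row, col


def crop_centered(img: Image, row: int, col: int, size: int = 256) -> Image:
    """Crop a size x size window centred on (row, col), shifted inward at the borders."""
    height, width = len(img), len(img[0])
    if height < size or width < size:
        raise ValueError("image smaller than crop window")
    half = size // 2
    top = min(max(row - half, 0), height - size)
    left = min(max(col - half, 0), width - size)
    return [r[left:left + size] for r in img[top:top + size]]


def resize_nearest(img: Image, out_h: int = 224, out_w: int = 224) -> Image:
    """Nearest-neighbour resize to out_h x out_w."""
    height, width = len(img), len(img[0])
    return [[img[i * height // out_h][j * width // out_w] for j in range(out_w)]
            for i in range(out_h)]


if __name__ == "__main__":
    # Hypothetical 300 x 300 grid with a 0.04-degree spacing and its corner at (30N, 100E).
    grid = [[float(i * 1000 + j) for j in range(300)] for i in range(300)]
    r, c = latlon_to_pixel(26.0, 106.0, lat0=30.0, lon0=100.0, res_deg=0.04)
    patch = resize_nearest(crop_centered(grid, r, c))
    print(len(patch), len(patch[0]))  # 224 224
```

A library resampler (for example, bilinear interpolation) would normally replace `resize_nearest`. The nearest-neighbour version is used here only because it keeps the original pixel values.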

Data preprocessing yielded the hail dataset used to train the deep learning models. Figure 2 shows curated image samples of hail and non-hail scenes. These are not raw satellite images; they are products of the extraction process described above. Each pixel corresponds to a 4 km ground area, which indicates the spatial extent depicted. Rather than mapping cloud-top temperature directly, the color variations in these images encode hail-related information. Such subtle variations are hard to discern by eye but can be analyzed by Convolutional Neural Networks (CNNs). The layered convolutional structure of a CNN extracts features such as color, shape, and texture that may not be overtly noticeable. Through training, the CNN learns fine details relevant to hail, which demonstrates its ability to recognize slight visual differences.

The dataset used in this study comprises 681 images divided into a training set and a testing set. For each class, 80% of the images were randomly assigned to the training set and the remaining 20% to the testing set. This gives 545 training images and 136 testing images. Details of the dataset composition are provided in Table 2.
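A per-class (stratified) 80/20 split like the one described can be sketched as follows. The seed, the rounding rule, the `(item, label)` sample format, and the function name are assumptions. Because rounding happens separately for each class, the totals may differ slightly from exactly 80% of the whole dataset.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple

Sample = Tuple[str, Hashable]  # (image path or id, class label)


def stratified_split(samples: Sequence[Sample], train_frac: float = 0.8,
                     seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Randomly send train_frac of each class to the training set, the rest to test."""
    by_label = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    train: List[Sample] = []
    test: List[Sample] = []
    for label in sorted(by_label, key=str):
        group = list(by_label[label])
        rng.shuffle(group)
        n_train = round(train_frac * len(group))
        train.extend(group[:n_train])
        test.extend(group[n_train:])
    return train, test


if __name__ == "__main__":
    # Hypothetical class sizes, not the paper's Table 2.
    data = [(f"hail_{i}", "hail") for i in range(100)] + \
           [(f"none_{i}", "no_hail") for i in range(50)]
    tr, te = stratified_split(data)
    print(len(tr), len(te))  # 120 30
```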

Due to the limited availability of FY-4A satellite data and real hail records, this study adopts the lightweight and high-precision EfficientNet model as the baseline network.

EfficientNet

EfficientNet28 is an efficient convolutional neural network architecture proposed by the Google Brain team in 2019. It balances three core dimensions of a convolutional neural network, namely depth, width, and input resolution, achieving better performance with fewer computational resources. Traditional convolutional neural networks usually improve accuracy by scaling only one of these dimensions: depth, input resolution, or number of channels. EfficientNet instead scales them jointly. It first obtains an efficient baseline network through neural architecture search and then scales up its depth, width, and resolution from this baseline. The authors introduced a compound scaling method that uniformly scales width, depth, and resolution using a compound coefficient \(\phi\). The specific calculation formula is as follows:

\[
d = \alpha^{\phi}, \quad w = \beta^{\phi}, \quad r = \gamma^{\phi}, \qquad \text{s.t. } \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \quad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1
\]

In the formula, \(d\), \(w\), and \(r\) are the depth, width, and resolution multipliers. \(\alpha\), \(\beta\), and \(\gamma\) are constants that determine how additional resources are allocated to the network’s depth, width, and image resolution. \(\phi\) is the compound coefficient that controls model expansion, and “s.t.” denotes the constraint. FLOPs grow roughly in proportion to \(d \cdot w^{2} \cdot r^{2}\), so the constraint means that total FLOPs increase by about \(2^{\phi}\).

Compound scaling proceeds in two steps. First, the baseline EfficientNet-B0 is obtained through Neural Architecture Search (NAS). With \(\phi\) fixed at 1, the values of \(\alpha\), \(\beta\), and \(\gamma\) are then determined through a small grid search. Second, with \(\alpha\), \(\beta\), and \(\gamma\) fixed as constants, the baseline is scaled up with different values of \(\phi\), yielding EfficientNet-B1 to EfficientNet-B7.
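The compound scaling rule and the constraint \(\alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2\) can be checked numerically. The sketch below uses the constants \(\alpha\)=1.2, \(\beta\)=1.1, and \(\gamma\)=1.15 and computes the idealized multipliers given by the formula. `candidate_grid` only enumerates triples that satisfy the constraint, using a hypothetical step of 0.05 and a tolerance of 0.1. The accuracy evaluation that a real grid search needs (training each candidate with \(\phi\) fixed at 1) is omitted. All function names are hypothetical.

```python
from itertools import product
from typing import List, Tuple


def compound_multipliers(phi: float, alpha: float = 1.2, beta: float = 1.1,
                         gamma: float = 1.15) -> Tuple[float, float, float]:
    """Depth, width, and resolution multipliers d = alpha^phi, w = beta^phi, r = gamma^phi."""
    return alpha ** phi, beta ** phi, gamma ** phi


def flops_factor(alpha: float, beta: float, gamma: float) -> float:
    """FLOPs growth per unit of phi, roughly proportional to d * w^2 * r^2."""
    return alpha * beta ** 2 * gamma ** 2


def candidate_grid(step: float = 0.05, upper: float = 1.5, target: float = 2.0,
                   tol: float = 0.1) -> List[Tuple[float, float, float]]:
    """Enumerate (alpha, beta, gamma) >= 1 whose FLOPs factor is within tol of target.

    A real search would train and score each candidate; that step is omitted.
    """
    n = int(round((upper - 1.0) / step))
    values = [round(1.0 + i * step, 2) for i in range(n + 1)]
    return [(a, b, g) for a, b, g in product(values, repeat=3)
            if abs(flops_factor(a, b, g) - target) <= tol]


if __name__ == "__main__":
    print(flops_factor(1.2, 1.1, 1.15))  # about 1.92, close to the target of 2
    print(compound_multipliers(phi=1))
    print(len(candidate_grid()), "feasible candidates")
```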

EfficientNet28 provides eight models, B0 to B7, so an appropriate model can be selected according to task requirements and computational resources. Furthermore, EfficientNet29 can be combined with traditional models such as ResNet30 and MobileNet31 to further improve performance.

After experimenting with the dataset, it was found that an image resolution of 256\(\times\)256 achieves optimal results. To choose the best model as the base network for the hail identification task, this study considered both the model’s recognition performance and parameter size. Ultimately, EfficientNet-B1 was selected as the base network, using a specific set of network parameters (\(\phi\)=1, \(\alpha\)=1.2, \(\beta\)=1.1, \(\gamma\)=1.15) for further work.

Approach

Hail clouds and channel selection for FY-4A

In this study, deep learning is applied to FY-4A satellite data, focusing on channel 7 (3.5 to 4.0\(\upmu\)m) of the Fengyun-4A satellite. Spectral analysis shows that channel 7 radiances are highly sensitive to the size distribution, shape, and thermodynamic phase (liquid water or ice) of particles near the cloud top. This sensitivity makes the channel particularly suitable for identifying large ice-phase particles such as hail. Researchers worldwide have used similar channels on other satellites, such as those operated by NOAA, to monitor and identify hail clouds by setting thresholds or defining specific indicators. Drawing on these studies, our model uses channel 7 data from FY-4A for in-depth analysis and identification of hail clouds. Integrating these data into a deep learning model improves the monitoring and identification of hail events, especially in convectively active areas.

Using Convolutional Neural Networks (CNNs), this study analyzes FY-4A satellite data to identify hail events. Atmospheric convection is the primary mechanism for the vertical transport of heat and moisture and has a critical impact on hail formation. The CNN focuses on changes in cloud-top temperature and morphology in the satellite observations, which often signal potential hail formation. These changes reflect the vertical development of clouds during convection and therefore provide important clues for identifying hail events.

Updrafts play a crucial role in the formation of hail. The model indirectly detects the presence of updrafts by analyzing the vertical thickness of clouds and their dynamic changes. Specifically, the model can identify clouds with significant updraft characteristics, such as rapidly developing cumulonimbus clouds, thereby identifying potential hail events.

The deep learning model used in this study does not simulate complex meteorological processes directly. Instead, it learns patterns in satellite images that are indirectly related to these processes. By learning from historical hail and non-hail samples, the model captures cloud patterns closely associated with hail formation. Because these features relate to established meteorological concepts, the model can handle complex meteorological data effectively.

Although deep learning technology excels in analyzing complex meteorological data, understanding and interpreting the model’s output still relies on basic knowledge of meteorology and physics. By combining these concepts with advanced deep learning methods, this study can more accurately interpret the model’s predictive results and provide a scientific basis for a deeper understanding of the physical processes of hail formation.

CBAM

CBAM (Convolutional Block Attention Module)32 is a simple yet effective attention module for feed-forward convolutional neural networks, proposed by Woo et al. in 2018. Unlike the SE (Squeeze-and-Excitation) attention used in EfficientNet, CBAM combines channel attention and spatial attention, so it considers both inter-channel correlations and spatial details. When computing attention weights, the spatial attention mechanism accounts for the importance of different positions in the feature map, which helps the model handle spatial information in images. Moreover, when many informative features are present, CBAM focuses attention on those most relevant to the current task and suppresses unnecessary ones. Internally, CBAM applies the channel attention module first and then the spatial attention module, as shown in Fig. 3.

Channel attention dynamically learns an importance weight for each channel of the input feature map. Weighting the channels in this way captures the crucial information in the input features more effectively and produces a refined intermediate feature map. The channel attention module is illustrated in Fig. 4.

Let the input feature map be \(F \in \mathbb{R}^{C\times H\times W}\). Global max pooling and global average pooling are applied to \(F\) in parallel, each reducing it from C\(\times\)H\(\times\)W to C\(\times\)1\(\times\)1. Both descriptors are fed to a shared Multilayer Perceptron (MLP). The MLP first compresses the channel dimension to C/r (where r is the reduction ratio), applies a ReLU activation, and then expands it back to C. The two MLP outputs are added element-wise, and a sigmoid activation maps the result to [0, 1], giving the channel attention map \(M_c\). The calculation process is given by:

\[
M_c(F) = \sigma\left(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\right)
\]

where

\(\sigma\) represents the sigmoid activation function;

MLP represents the Multilayer Perceptron module;

AvgPool denotes global average pooling;

MaxPool denotes global max pooling.

The output \(M_c\) is multiplied element-wise with the input feature map F, broadcasting each channel weight over its H\(\times\)W map. This yields the intermediate feature map \(F^{'}\) with channel attention:

\[
F^{'} = M_c(F) \otimes F
\]

where \(\otimes\) denotes element-wise multiplication and \(F^{'}\) represents the intermediate feature map output by the channel attention submodule.
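The channel attention step, \(M_c(F) = \sigma(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F)))\) followed by \(F^{'} = M_c(F) \otimes F\), can be written out in plain Python to make the data flow explicit. This is an illustrative sketch, not the authors’ implementation. Feature maps are nested lists indexed as [channel][row][col]. The caller supplies the shared MLP weights `w0` (shape C/r × C) and `w1` (shape C × C/r) instead of learning them, and bias terms are omitted. A practical model would use a deep learning framework.

```python
import math
from typing import List

Tensor3D = List[List[List[float]]]  # [channel][row][col]
Matrix = List[List[float]]


def sigmoid(v: float) -> float:
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def shared_mlp(vec: List[float], w0: Matrix, w1: Matrix) -> List[float]:
    """C -> C/r (ReLU) -> C, using the same weights for both pooled descriptors."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w0]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w1]


def channel_attention(feat: Tensor3D, w0: Matrix, w1: Matrix) -> List[float]:
    """M_c: one weight in (0, 1) per channel."""
    avg = [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in feat]  # global average pooling
    mx = [max(max(r) for r in ch) for ch in feat]                            # global max pooling
    return [sigmoid(a + m) for a, m in zip(shared_mlp(avg, w0, w1), shared_mlp(mx, w0, w1))]


def apply_channel_attention(feat: Tensor3D, w0: Matrix, w1: Matrix) -> Tensor3D:
    """F' = M_c(F) (x) F, broadcasting each channel weight over its H x W map."""
    mc = channel_attention(feat, w0, w1)
    return [[[mc[c] * v for v in row] for row in ch] for c, ch in enumerate(feat)]


if __name__ == "__main__":
    feat = [[[2.0, 2.0], [2.0, 2.0]], [[0.0, 0.0], [0.0, 0.0]]]  # C=2, H=W=2
    # Hypothetical weights with r = 2: C=2 -> 1 -> 2.
    print(channel_attention(feat, w0=[[1.0, 0.0]], w1=[[1.0], [-1.0]]))
```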

In this study, we modified the spatial attention mechanism by replacing the average pooling and max pooling operations that the original spatial attention module applies along the channel axis. Although these operations preserve the texture and background information of the image, they inevitably discard some meaningful features. To address this, we used 1\(\times\)1, 3\(\times\)3, and 5\(\times\)5 convolutions in place of max pooling and average pooling33. Different kernel sizes capture features with different receptive fields: larger kernels extract more global features, whereas smaller kernels capture more local ones.

The feature map \(F^{'}\) passes through these three convolutions, which produce two single-channel weight maps of size [1, H, W]. The two maps are concatenated along the channel dimension into a [2, H, W] spatial weight map. A 7\(\times\)7 convolution then reduces it to [1, H, W]. Each value of the resulting single-channel map represents the importance of the corresponding position on the feature map, with higher values indicating greater significance.

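A Sigmoid function is then applied to this single-channel map to obtain the spatial attention map \(M_s\) of size [1, H, W]. When \(M_s\) is multiplied element-wise with a [C, H, W] feature map, its weights are broadcast across all C channels, so every position of the [H, W] map receives a weight. The expression for \(M_s\) is as follows:

\[
M_s(F^{'}) = \sigma\left(f^{7\times 7}\left([F^{'}_{1}; F^{'}_{2}]\right)\right)
\]

where \(f^{7\times 7}\) represents a convolution with a 7\(\times\)7 kernel and \([\cdot\,;\,\cdot]\) denotes concatenation along the channel dimension. \(F^{'}_{1}\) and \(F^{'}_{2}\) denote the two single-channel maps obtained from the multi-scale convolutions of \(F^{'}\).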

The spatial attention module complements the channel attention module, enhancing the model’s recognition and localization of important targets based on their importance at different locations. The spatial attention module is illustrated in Fig. 5.
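For reference, the standard CBAM spatial attention that the modified module starts from can be sketched in the same plain-Python style. Average and max pooling along the channel axis give two [H, W] maps. These maps are concatenated, convolved with a zero-padded k×k kernel (7×7 in CBAM), and passed through a sigmoid to obtain \(M_s\). This sketch shows the original pooling-based variant, not the multi-scale convolution replacement used in this study, whose exact wiring is not specified here. The `conv2d_same` helper could in principle be applied with 1×1, 3×3, or 5×5 kernels as well. The caller supplies the kernel weights, no bias is used, and all names are hypothetical.

```python
import math
from typing import List

Map2D = List[List[float]]   # [row][col]
Tensor3D = List[Map2D]      # [channel][row][col]


def sigmoid(v: float) -> float:
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def channel_pool(feat: Tensor3D) -> List[Map2D]:
    """Average and max over the channel axis -> two [H, W] maps."""
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    avg = [[sum(feat[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(feat[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    return [avg, mx]


def conv2d_same(maps: List[Map2D], kernel: List[Map2D]) -> Map2D:
    """Multi-input, single-output 2-D cross-correlation with zero padding that keeps
    the H x W size; kernel[m] is an odd-sized k x k filter for input map m."""
    k = len(kernel[0])
    pad = k // 2
    h, w = len(maps[0]), len(maps[0][0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for m, ker in zip(maps, kernel):
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:
                            s += ker[di][dj] * m[ii][jj]
            out[i][j] = s
    return out


def spatial_attention(feat: Tensor3D, kernel: List[Map2D]) -> Map2D:
    """M_s = sigmoid(f^{kxk}([AvgPool(F'); MaxPool(F')])) as one [H, W] map."""
    return [[sigmoid(v) for v in row] for row in conv2d_same(channel_pool(feat), kernel)]


def apply_spatial_attention(feat: Tensor3D, kernel: List[Map2D]) -> Tensor3D:
    """F'' = M_s (x) F', broadcasting the [H, W] weights over every channel."""
    ms = spatial_attention(feat, kernel)
    return [[[ms[i][j] * v for j, v in enumerate(row)] for i, row in enumerate(ch)]
            for ch in feat]
```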

Finally, the spatial attention map \(M_s\) is multiplied element-wise with the channel attention output \(F^{'}\) to obtain the CBAM output \(F^{''}\), as given by:

\[
F^{''} = M_s(F^{'}) \otimes F^{'}
\]

\(F^{''}\) represents the optimized feature map, which has been refined by the channel attention module and spatial attention module, enhancing the model’s capacity to learn key features in the image. This attention mechanism aids the model in effectively focusing on important regions in the spatial domain and suppressing unnecessary features, thereby improving the model’s recognition capability.

ECA

The ECA (Efficient Channel Attention) module34 is an efficient channel attention module. It uses a local cross-channel interaction strategy without dimensionality reduction and adaptively selects the kernel size of a one-dimensional convolution. After global average pooling, ECA applies this one-dimensional convolution directly to the pooled channel descriptor in place of fully connected layers. This avoids dimensionality reduction while still capturing cross-channel interactions. In contrast to the SE block35, the ECA module does not reduce the channel dimension after global average pooling. Instead, it considers each channel together with its k neighbors to capture local cross-channel interaction. The schematic diagram of the ECA module is depicted in Fig. 6.

After global average pooling is applied to the input feature map, the channel attention is generated with a one-dimensional convolution. The kernel size k of this convolution is determined adaptively from the number of channels C in the feature map. k also defines the coverage of local cross-channel interaction: k neighboring channels participate in predicting the attention weight of each channel. The resulting weights are normalized with the Sigmoid function and multiplied element-wise with the original input feature map to produce the weighted feature map. The kernel size of the one-dimensional convolution is computed as:

$$k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{odd}$$

where C represents the number of channels, \(|t|_{odd}\) denotes the odd number nearest to t, and \(\gamma = 2\) and \(b = 1\) adjust the proportion between the number of channels C and the size of the convolution kernel.
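The kernel-size rule and the ECA forward pass can be sketched in NumPy as follows. This is a minimal illustration under assumptions: it processes a single [C, H, W] feature map with no batch axis, the 1D kernel weights are passed in rather than learned, and \(|t|_{odd}\) is read as "round t down, then move up to the next odd integer if the result is even", which is one common implementation choice. The function names are hypothetical.

```python
import math

import numpy as np


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """k = |log2(C)/gamma + b/gamma|_odd (round down, then bump even values to odd)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(x: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """ECA on one feature map x of shape [C, H, W] with a 1D kernel of odd length k."""
    c = x.shape[0]
    k = weights.shape[0]
    y = x.mean(axis=(1, 2))        # global average pooling -> [C]
    y_pad = np.pad(y, k // 2)      # zero padding so the output keeps length C
    # 1D convolution: each channel's score uses itself and its neighbours (k in total).
    z = np.array([np.dot(y_pad[i:i + k], weights) for i in range(c)])
    attn = 1.0 / (1.0 + np.exp(-z))  # Sigmoid -> one weight per channel
    return x * attn[:, None, None]   # reweight channels; shape is unchanged


for c in (16, 64, 256, 1280):
    print(c, eca_kernel_size(c))
# 16 3
# 64 3
# 256 5
# 1280 5
```

The kernel grows only logarithmically with C, so even the 1280-channel maps at the end of the network use a 5-tap kernel under this reading of the rule.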

DAM-EfficientNet

The EfficientNet baseline network consists of lightweight inverted bottleneck convolutional blocks (Mobile Inverted Bottleneck Convolution, MBConv), each incorporating an SE (Squeeze-and-Excitation) module36. The SE module introduces an attention mechanism in the channel dimension, allowing the network to learn the importance of each channel in the feature map automatically. It assigns each channel a weight according to its importance, enabling the network to focus on and enhance the feature channels relevant to the task while suppressing less useful ones. However, the SE module encodes only channel-wise information and disregards positional information, which is crucial for recognition tasks; this may limit the model’s performance in hail identification.

To further enhance the accuracy of the hail image recognition model, this study introduces the ECA (Efficient Channel Attention) and CBAM (Convolutional Block Attention Module) to develop the new DAM-EfficientNet model, aiming to improve the learning of attention mechanisms in hail visual recognition tasks. The DAM-EfficientNet network structure is illustrated in Fig. 7. In comparison to the baseline EfficientNet model, the following enhancements were made in this research:

(1) The CBAM module is integrated through a residual structure after the first convolutional layer of the network. The CBAM module adaptively learns channel and spatial attention weights for the image, so the model can prioritize important regions and channels while suppressing less significant ones. As a result, the model extracts key information more accurately and with stronger expressive power, enhancing the global attention of the CNN. Because of the residual connection, the network is less likely to lose crucial information extracted by the preceding convolution, which improves the model’s ability to distinguish hail images.

(2) The SE module in each MBConv block of the original EfficientNet network is replaced with the ECA module. The ECA module adjusts the weight of each channel, capturing and enhancing useful information and thus improving the discriminative ability of the features. Because ECA replaces the two fully connected layers of the SE module with a single lightweight one-dimensional convolution, it also reduces the parameters and computation of the attention step, allowing useful information to be transmitted in a more focused way and enhancing the model’s recognition capability.
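The parameter saving from swapping SE for ECA can be illustrated with a quick count. This sketch compares a generic SE block with reduction ratio r = 16 (an assumption taken from the common SE default, with biases ignored) against an ECA block whose only weights are the k taps of its 1D kernel. EfficientNet's own SE blocks size their bottleneck from the block's input channels with a ratio of 0.25, so the exact counts inside DAM-EfficientNet will differ; the function names are hypothetical.

```python
import math


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive ECA kernel size (round down, then bump even values to odd)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def se_attention_params(channels: int, reduction: int = 16) -> int:
    """Weights of a generic SE block: FC C -> C/r and FC C/r -> C (biases ignored)."""
    hidden = max(1, channels // reduction)
    return channels * hidden + hidden * channels


def eca_attention_params(channels: int) -> int:
    """Weights of an ECA block: a single 1D kernel of size k (no bias)."""
    return eca_kernel_size(channels)


for c in (64, 256, 1152):
    print(c, se_attention_params(c), eca_attention_params(c))
# 64 512 3
# 256 8192 5
# 1152 165888 5
```

The SE cost grows quadratically with the channel count, while the ECA cost stays at a handful of weights, which is why the swap is essentially free in model size.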

As depicted in Fig. 7, the hail classification process of DAM-EfficientNet is as follows. First, each input hail image is preprocessed into a 224 \(\times\) 224 pixel, 3-channel image and fed into the model. The feature map produced by the first convolution and the attention feature map produced by the CBAM module are combined through a residual connection to obtain a feature map carrying attention information. The features of the hail image are then further extracted by 7 stages of lightweight inverted bottleneck convolution (MBConv) blocks embedded with ECA modules and a final 1 \(\times\) 1 convolution, yielding a 7 \(\times\) 7 pixel \(\times\) 1280-channel feature map. Finally, the hail recognition result is obtained through the fully connected layer.

Structure diagram of DAM-EfficientNet. Note: ECA represents Efficient Channel Attention; CBAM represents Convolutional Block Attention Module; Conv represents convolution; Avg Pool represents average pooling; Max_Avg represents maximum pooling; MBConv represents Mobile Inverted Bottleneck Convolution; ReLU, Sigmoid, and Swish represent non-linear activation functions; k represents the convolution kernel size; Concat represents concatenation; BN represents batch normalization; \(\oplus\) represents channel-wise addition; Dropout represents randomly deactivating nodes.
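The shape bookkeeping of this pipeline can be checked with a short script. It is a sketch that assumes the published EfficientNet-B0 stage widths and strides (EfficientNet-B1 uses the same widths and only repeats blocks more often, which does not change the shapes) and "same" padding. The stage names are descriptive labels, not identifiers from the authors' code, and the script only tracks tensor shapes rather than building a network.

```python
import math

# Published EfficientNet-B0 stage layout (B1 shares it: width coefficient 1.0).
# Each entry: (stage name, output channels, stride of the stage's first block).
STEM = ("stem conv 3x3", 32, 2)
MBCONV_STAGES = [
    ("MBConv1 stage 1", 16, 1),
    ("MBConv6 stage 2", 24, 2),
    ("MBConv6 stage 3", 40, 2),
    ("MBConv6 stage 4", 80, 2),
    ("MBConv6 stage 5", 112, 1),
    ("MBConv6 stage 6", 192, 2),
    ("MBConv6 stage 7", 320, 1),
]
HEAD = ("head conv 1x1", 1280, 1)


def trace_shapes(size: int = 224):
    """Return (layer, H, W, C) after each stage, assuming 'same' padding."""
    h = w = size
    shapes = [("input", h, w, 3)]
    for name, channels, stride in [STEM, *MBCONV_STAGES, HEAD]:
        h, w = math.ceil(h / stride), math.ceil(w / stride)
        shapes.append((name, h, w, channels))
        if name == STEM[0]:
            # The residual CBAM block only reweights features and adds them
            # back, so it keeps the stem's output shape (as do the ECA blocks).
            shapes.append(("residual CBAM", h, w, channels))
    return shapes


for row in trace_shapes():
    print(row)
```

The last row is `('head conv 1x1', 7, 7, 1280)`, matching the 7 \(\times\) 7 \(\times\) 1280 feature map described above: five stride-2 steps reduce 224 by a factor of 32.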

Experiments and analysis

Experimental environment and parameter settings

In this experiment, an AMD Ryzen 7 4800H processor with a clock frequency of 2.6 GHz and an NVIDIA GeForce RTX 2060 graphics card with 6 GB of memory were used. The machine was equipped with 16 GB of RAM and ran the Windows 10 operating system. The deep learning models were implemented in the PyTorch 2.0.1 framework with CUDA 11.0. Unless otherwise noted, the experimental parameters for all network models were set as follows: training ran for a fixed 300 epochs with a batch size of 16. The parameters of the hail recognition models were updated with the Adam optimizer37, using an initial learning rate of 0.0001 and exponential decay rates \(\beta _1\) and \(\beta _2\) of 0.9 and 0.999, respectively. To balance accuracy and speed in hail recognition within the constraints of the experiment, the model’s input image size was set to 224 \(\times\) 224.
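The optimizer settings above correspond to the standard Adam update rule37. The scalar sketch below applies that rule with the stated learning rate and decay rates to a toy quadratic loss; \(\epsilon = 10^{-8}\) is an assumption (the usual default, not stated in the text). In PyTorch the equivalent configuration would typically be `torch.optim.Adam(model.parameters(), lr=1e-4, betas=(0.9, 0.999))`, where `model` is a placeholder for the network.

```python
import math


def adam_steps(grad_fn, theta, steps, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """Run `steps` Adam updates on a scalar parameter and return it."""
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1 - beta1) * g      # first-moment (mean) estimate
        v = beta2 * v + (1 - beta2) * g * g  # second-moment estimate
        m_hat = m / (1 - beta1 ** t)         # bias corrections for early steps
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


def toy_grad(theta):
    """Gradient of the toy loss (theta - 3)^2."""
    return 2 * (theta - 3)


print(adam_steps(toy_grad, 0.0, steps=1))    # close to 1e-4: the first step is about lr
print(adam_steps(toy_grad, 0.0, steps=100))  # close to 0.01 after 100 steps
```

Because Adam normalizes each step by the running gradient magnitude, the step size stays near the learning rate regardless of the gradient's scale, which is why a small initial rate such as 0.0001 gives slow but stable progress.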

Validation and evaluation metrics

In this study, the same hail dataset is divided into training and test subsets, and the model is evaluated through cross-validation. Quantitative evaluation metrics, including accuracy (ACC), probability of detection (POD), false alarm rate (FAR), and critical success index (CSI), are calculated from the confusion matrix.

The accuracy (ACC) metric represents the proportion of correct predictions made by the classification model over the total number of observations and is computed as follows:

$$ACC = \frac{TP + TN}{TP + FP + FN + TN}$$

The probability of detection (POD) metric indicates the ratio of correctly classified hail events to the total number of observed hail events, reflecting how often hail is detected when it is present. If all test images containing hail are correctly classified, POD equals 1; if all of them are misclassified, POD equals 0. The calculation for POD is as follows:

$$POD = \frac{TP}{TP + FN}$$

The false alarm rate (FAR) metric represents the ratio of incorrectly classified non-hail events to the total number of predicted hail events. FAR therefore measures the reliability of the network’s hail predictions: a lower FAR value indicates fewer false alarms. The calculation for FAR is as follows:

$$FAR = \frac{FP}{TP + FP}$$

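The critical success index (CSI) metric combines detection and false alarm information into a single score: it counts correct hail predictions relative to all events that were either observed or predicted as hail, so it penalizes both misses and false alarms. CSI is calculated as follows:

$$CSI = \frac{TP}{TP + FP + FN}$$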

where TP, FP, FN, and TN represent true positive, false positive, false negative, and true negative, respectively.
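The four formulas translate directly into code. In the sketch below the confusion-matrix counts are hypothetical: they were chosen because, on a 136-image test set containing 48 hail images, they reproduce the DAM-EfficientNet scores reported in the abstract. The function does not guard against empty denominators.

```python
def hail_scores(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Return ACC, POD, FAR and CSI (in %) from confusion-matrix counts."""
    return {
        "ACC": 100 * (tp + tn) / (tp + fp + fn + tn),
        "POD": 100 * tp / (tp + fn),
        "FAR": 100 * fp / (tp + fp),
        "CSI": 100 * tp / (tp + fp + fn),
    }


# Hypothetical counts: 47 hits, 1 false alarm, 1 miss, 87 correct rejections.
scores = hail_scores(tp=47, fp=1, fn=1, tn=87)
print({name: round(value, 2) for name, value in scores.items()})
# {'ACC': 98.53, 'POD': 97.92, 'FAR': 2.08, 'CSI': 95.92}
```

Note that TN appears only in ACC: POD, FAR and CSI ignore correct "no hail" predictions, which keeps them informative when non-hail images dominate the dataset.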

Ablation study

In this study, the DAM-EfficientNet model was trained using the aforementioned experimental settings. The experimental dataset consisted of 241 images with hail and 440 images without hail, totaling 681 images. Among these, 545 images were used for training, and 136 images were used for testing. During each training epoch, the results were recorded, and recognition metrics, including accuracy (ACC), probability of detection (POD), false alarm rate (FAR), and critical success index (CSI), were calculated using the confusion matrix.
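The text does not say how the 545/136 split was drawn. One split consistent with those counts is a stratified 80/20 split, sketched below as an assumption: sampling 20% of each class separately yields 48 hail and 88 non-hail test images, which sum to 136. The seed and function name are hypothetical.

```python
import random


def stratified_split(labels, test_frac=0.2, seed=0):
    """Split sample indices per class so each class keeps about test_frac in the test set."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, test = [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_frac)
        test.extend(idxs[:n_test])
        train.extend(idxs[n_test:])
    return sorted(train), sorted(test)


labels = [1] * 241 + [0] * 440  # 1 = hail, 0 = no hail
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx), sum(labels[i] for i in test_idx))  # 545 136 48
```

Stratifying keeps the hail/non-hail ratio (about 35% hail) the same in both subsets, which matters for a small, imbalanced dataset like this one.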

At the end of each training epoch, the current model was compared with the best model saved so far; if it performed better, it replaced the saved model, and otherwise the saved model remained unchanged. This process yielded the final optimal model parameters. Through this training process, the DAM-EfficientNet model was progressively optimized and achieved excellent performance on the test set. These experimental designs and training strategies help ensure a reliable, high-performance recognition model.
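The keep-the-best procedure can be sketched framework-free as below. The scoring metric is not specified in the text, so the score here is a hypothetical "higher is better" value such as accuracy, and the dictionaries stand in for model state. In a PyTorch setup the snapshot would typically be written with `torch.save(model.state_dict(), path)` instead of `copy.deepcopy`.

```python
import copy


def keep_best_checkpoint(epoch_results):
    """Return (epoch, score, state) of the best epoch, copying the state when it improves.

    epoch_results: iterable of (score, model_state) pairs, one per epoch, where a
    higher score is better. Ties keep the earlier epoch.
    """
    best_epoch, best_score, best_state = None, float("-inf"), None
    for epoch, (score, state) in enumerate(epoch_results, start=1):
        if score > best_score:                 # better -> replace the saved model
            best_epoch, best_score = epoch, score
            best_state = copy.deepcopy(state)  # snapshot, like saving to disk
    return best_epoch, best_score, best_state


history = [(0.91, {"w": 1}), (0.96, {"w": 2}), (0.95, {"w": 3}), (0.96, {"w": 4})]
print(keep_best_checkpoint(history))  # (2, 0.96, {'w': 2})
```

The deep copy matters: without it, later training steps would keep mutating the "saved" parameters.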

DAM-EfficientNet is an improvement over the EfficientNet baseline model. To evaluate the effectiveness of the individual modules in the proposed approach, five ablation experiments were conducted on the baseline model. First, the evaluation metrics of the baseline model were measured. Next, the Adam optimizer was applied to the EfficientNet baseline model. Adam is an adaptive learning-rate optimization algorithm that adjusts the step size of each parameter based on running estimates of its gradient moments, which can improve convergence speed and performance. Additionally, the CBAM module combined with a residual structure was introduced into the baseline network. The CBAM module is based on channel and spatial attention mechanisms, enabling the network to automatically learn and focus on important feature information and thereby enhancing the model’s representation capability. The residual structure helps the network better propagate feature and gradient information, improving information transmission and learning ability.

Furthermore, the attention mechanism in the backbone network was replaced with the ECA (Efficient Channel Attention) module, and Adam optimization was applied. The ECA module models the response relationships between channels, further improving attention to different channel features and enhancing representation capability and performance. Finally, the Adam optimizer, the residual CBAM module, and the ECA attention module were combined in the baseline model to obtain the final DAM-EfficientNet network model, which yielded the best results. These five ablation experiments show how each improvement contributes to the performance and accuracy of DAM-EfficientNet: together, the strategies help the model learn important features and optimize its parameters, leading to higher recognition accuracy.

According to the data in Table 3, when the EfficientNet-B1 model was applied to the hail dataset, its accuracy, POD, FAR, and CSI were 93.38%, 91.67%, 10.20%, and 83.02%, respectively. To further improve accuracy, the baseline model’s SGD optimizer was replaced with the Adam optimizer. Accuracy increased by 0.74%, CSI by 2.43%, and POD by 6.24%, so the hit rate for correctly classifying hail improved markedly compared to the baseline model. This comparison indicates that the Adam optimizer is more effective than SGD for this model. However, with the Adam optimizer the FAR increased by 2.76%, indicating a slight negative impact on predicting non-hail data. In EfficientNet-B1-ECA, the improved version of EfficientNet-B1, the ECA attention mechanism was introduced into the backbone network to obtain better attention effects than the original network modules. Compared to the baseline network EfficientNet-B1, EfficientNet-B1-ECA improved accuracy, POD, and CSI by 2.21%, 4.16%, and 3.01%, respectively. Compared to the experiment with the Adam optimizer, the experiment with the ECA attention module reduced the FAR by 2.2%, indicating that the ECA module was more effective in recognizing non-hail data. Additionally, according to the EfficientNet-B1-CBAM results in Table 3, adding the CBAM attention module with residual blocks to the original model increased accuracy from 93.38% to 97.06% and CSI from 83.02% to 91.84%, increased POD slightly by 2.08%, and decreased the FAR from 10.20% to 2.17%. This is a significant improvement over the baseline model and demonstrates the effectiveness of adding the CBAM module after the first convolutional layer.
The combination of channel attention mechanism and spatial attention mechanism in CBAM can improve the model’s feature representation ability and further reduce redundant information, enabling the model to focus more accurately on image content. Finally, when applying the proposed DAM-EfficientNet model, accuracy and CSI improved to 98.53% and 95.92%, respectively, and the FAR decreased to 2.08% from 10.20% in the initial baseline model. This indicates a significant improvement over the baseline model. These results demonstrate the feasibility and effectiveness of the proposed model improvement and network training strategies.

In conclusion, the DAM-EfficientNet model is a lightweight deep network model with excellent recognition accuracy.

Comparing with other state-of-the-art methods

In order to validate the reliability of the proposed DAM-EfficientNet method, this study conducted comparative experiments with other classic classification models, including AlexNet38, ResNet-34, DenseNet-16939, and ShuffleNet40, while keeping the training parameters consistent.

AlexNet is a well-known deep learning model with outstanding performance in classification tasks, characterized by a relatively shallow architecture and low computational cost. ResNet is the classic residual network, which addresses the vanishing gradient problem in deep training through residual connections; the improved model in this study also uses residual modules to avoid vanishing gradients during deep training. DenseNet is a densely connected network in which each layer is connected to all preceding layers, promoting information propagation and feature reuse and aiding the extraction of richer feature information; it was chosen as another strong network for comparison. Lastly, ShuffleNet, like EfficientNet, is a lightweight network that reduces parameters and computational complexity while maintaining good performance through channel shuffling and group convolution. It was chosen for its small model size and low computational complexity, which make it suitable for resource-limited scenarios. The experimental results are shown in Table 4.

As the results show, DAM-EfficientNet achieved the best accuracy, POD, FAR, and CSI on the test set, with clear advantages over all baseline models. ShuffleNet, with the smallest parameter size (8.69M) and FLOPs (0.156G), had a relatively low test accuracy of 92.65%. ResNet-34 and AlexNet performed better than ShuffleNet on the test set, with accuracies of 95.59% and 94.12%, respectively, suggesting that higher-capacity models can achieve higher accuracy on this dataset. DenseNet-169 performed well across the metrics, with a parameter size (32.8M) similar to that of DAM-EfficientNet, but it required considerably more FLOPs (2.88G). Because recognition accuracy is the most critical metric in practical applications, DAM-EfficientNet still holds the advantage.

When benchmarked against these methods on the hail classification dataset, the proposed DAM-EfficientNet model delivered the best results, and its spatial and channel attention modules contribute to this performance. The algorithm showed good generalization and the highest accuracy on the test dataset. Furthermore, DAM-EfficientNet had fewer parameters and lower FLOPs than most of the baseline models; its model size was 25.46MB, only slightly larger than that of the lightweight ShuffleNet network. For a more intuitive performance comparison, recognition accuracy curves for each model were plotted (see Fig. 8). Fig. 8 shows that training converges and stabilizes toward epoch 300, when the accuracy of the other methods also fluctuates less, and that DAM-EfficientNet consistently outperforms the other recognition models in accuracy throughout training.

Verification results

In this study, we further validated the accuracy and reliability of hail weather identification with the DAM-EfficientNet method on two hail events in Heilongjiang and Hubei, which occurred on June 6, 2023, and June 11, 2023, respectively. According to real-time weather observations, hail occurred in Hegang, Heilongjiang, between 10:50 and 11:20 on June 6, and in Huantan Town, Suizhou City, Hubei, around 14:00 on June 11. The identification results for these periods are presented in Figs. 9 and 10, respectively, where weights from 1 to 8 indicate the reliability of the hail detection, with higher weights indicating more reliable results.

We performed hail weather identification on Fengyun-4 satellite imagery using channels 7 to 14 simultaneously and visualized the results as heatmaps. The heatmap’s weight indicates the number of channels in which hail was detected in a particular region, with weights ranging from 0 (no hail detected) to 8 (hail detected in all channels). The colors in the heatmap correspond to the weight value, with higher values indicating a higher likelihood of hail weather in the respective regions.
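The weight described above is a per-location vote count across channels, which can be sketched as follows. This assumes each channel's classification has already been turned into a binary hail/no-hail grid on a common geographic grid; the grid layout, masks, and function name are hypothetical.

```python
def hail_weight_map(channel_masks):
    """Sum per-channel binary hail detections into a 0..N weight grid.

    channel_masks: list of N equally sized 2D grids (lists of 0/1), one per
    AGRI channel (channels 7-14 give N = 8).
    """
    rows, cols = len(channel_masks[0]), len(channel_masks[0][0])
    return [
        [sum(mask[i][j] for mask in channel_masks) for j in range(cols)]
        for i in range(rows)
    ]


# Hypothetical 2 x 3 grid: cell (0, 0) is flagged by all 8 channels,
# cell (1, 2) by three channels, and every other cell by none.
masks = []
for ch in range(8):
    grid = [[0, 0, 0], [0, 0, 0]]
    grid[0][0] = 1
    if ch < 3:
        grid[1][2] = 1
    masks.append(grid)

print(hail_weight_map(masks))  # [[8, 0, 0], [0, 0, 3]]
```

A cell reaches the maximum weight of 8 only when every one of the eight channels independently flags hail there, which is why higher weights are read as more reliable.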

The results show that the DAM-EfficientNet model assigned the highest weight (8) to Mudanjiang, Heilongjiang, and Suizhou City, Hubei, during the specified periods, indicating that these regions were the most likely to experience hail during those timeframes. Overall, the DAM-EfficientNet model effectively used Fengyun-4 satellite data for hail weather identification. However, it is limited in precisely identifying local and small-scale regions.

Hail Prediction Map for China’s Heilongjiang Province on June 6, 2023, from 11:15 to 11:30. The weights range from 1 to 8 and represent the reliability of the hail prediction, with higher weights indicating more reliable results. Note: This map was created with Python 3.8 using Geopandas 0.8.1, Matplotlib 3.3.1, and Pandas 1.1.3. For additional information, visit: https://bit.ly/486tfYA.

Hail Prediction Map for China’s Hubei Province on June 11, 2023, from 13:45 to 14:00. The weights range from 1 to 8 and represent the reliability of the hail prediction, with higher weights indicating more reliable results. Note: This map was created with Python 3.8 using Geopandas 0.8.1, Matplotlib 3.3.1, and Pandas 1.1.3. For additional information, visit: https://bit.ly/486tfYA.

Conclusion

Based on ground hail observation data from Guizhou Province between 2019 and 2022, this research conducted a comprehensive study on hail identification. By employing deep learning methods to classify and train images generated from Fengyun-4 satellite cloud map files, the study achieved a high level of accuracy in hail identification. Moreover, an improved DAM-EfficientNet network structure was proposed, which demonstrated outstanding performance in hail identification. The research findings not only complement existing achievements in the field but also provide strong support for enhancing weather disaster warning and prevention systems.

The research yielded two significant outcomes. Firstly, it achieved remarkable accuracy results through the utilization of deep learning techniques for classifying and training images generated from Fengyun-4 satellite cloud map files. Secondly, the study introduced an enhanced DAM-EfficientNet network structure that exhibited superior performance in hail identification.

In comparison to prior research, this study has two notable advantages in hail identification. First, it relies solely on Fengyun-4 satellite cloud map data for training, eliminating the need to incorporate additional meteorological data; this simpler data acquisition makes hail identification more practical and convenient to apply. Second, it uses the improved DAM-EfficientNet network structure, which outperforms the other models tested and significantly improves identification accuracy and robustness. Therefore, the DAM-EfficientNet model is meaningful in that it requires only FY-4A satellite data to detect the occurrence of hail events.

However, despite achieving commendable results, this research has certain limitations. Primarily, the difficulty of data acquisition restricted the size of the training dataset, potentially limiting the model’s generalization capabilities. Additionally, the study focused solely on Guizhou Province data, neglecting regional variations in other provinces. To address these issues, future research can expand the dataset to include observation data from other provinces, enhancing the model’s adaptability and accuracy in diverse regions. Furthermore, training on temporal sequences of data to predict hail weather in future time periods could advance the research’s practical applications.

Data availability

The satellite data used in this study were sourced from the China Meteorological Science Data Center (https://data.cma.cn/). The hail data in Guizhou Province, China, were provided by the Guizhou Provincial Meteorological Bureau. We have processed and organized the dataset and uploaded it to GitHub (https://github.com/dhn9132/hail_images.git) for download and use. The code that supports the findings of this research is available from the corresponding author on request.

References

Gall, M., Borden, K. A., Emrich, C. T. & Cutter, S. L. The unsustainable trend of natural hazard losses in the United States. Sustainability 3, 2157–2181. https://doi.org/10.3390/su3112157 (2011).

Changnon, S. A. Increasing major hail losses in the U.S. Clim. Change 96, 161–166. https://doi.org/10.1007/s10584-009-9597-z (2009).

Velten, S., Leventon, J., Jager, N. & Newig, J. What is sustainable agriculture? A systematic review. Sustainability 7, 7833–7865. https://doi.org/10.3390/su7067833 (2015).

Battaglia, M., Lee, C., Thomason, W., Fike, J. & Sadeghpour, A. Hail damage impacts on corn productivity: A review. Crop Sci. 59(1), 1–14. https://doi.org/10.2135/cropsci2018.04.0285 (2019).

Hohl, R., Schiesser, H. H. & Knepper, I. The use of weather radars to estimate hail damage to automobiles: An exploratory study in Switzerland. Atmos. Res. 61(3), 215–238. https://doi.org/10.1016/S0169-8095(01)00134-X (2002).

Bell, J. & Molthan, A. Evaluation of approaches to identifying hail damage to crop vegetation using satellite imagery. J. Oper. Meteorol. https://doi.org/10.15191/nwajom.2016.0411 (2016).

Punge, H., Bedka, K., Kunz, M., Bang, S. & Itterly, K. Characteristics of hail hazard in South Africa based on satellite detection of convective storms. Natl. Hazards Earth Syst. Sci. Discuss. https://doi.org/10.5194/nhess-23-1549-2023 (2021).

Botzen, W. J. W., Bouwer, L. M. & Bergh, J. C. J. M. Climate change and hailstorm damage: Empirical evidence and implications for agriculture and insurance. Resource Energy Econ. 32(3), 341–362. https://doi.org/10.1016/j.reseneeco.2009.10.004 (2010).

Heinselman, P. L. & Ryzhkov, A. V. Validation of polarimetric hail detection. Weather Forecast. 21(5), 839–850. https://doi.org/10.1175/WAF956.1 (2006).

Bringi, V. N., Seliga, T. A. & Aydin, K. Hail detection with a differential reflectivity radar. Science 225(4667), 1145–1147. https://doi.org/10.1126/science.225.4667.1145 (1984).

Ferraro, R., Beauchamp, J., Cecil, D. & Heymsfield, G. A prototype hail detection algorithm and hail climatology developed with the Advanced Microwave Sounding Unit (AMSU). Atmos. Res. 163, 24–35. https://doi.org/10.1016/j.atmosres.2014.08.010 (2015).

Melcón, P., Merino, A., Sánchez, J., López, L. & Hermida, L. Satellite remote sensing of hailstorms in France. Atmos. Res. 182, 221–231. https://doi.org/10.1016/j.atmosres.2016.08.001 (2016).

Gagne, D. J. et al. Storm-based probabilistic hail forecasting with machine learning applied to convection-allowing ensembles. Weather Forecast. 32(5), 1819–1840. https://doi.org/10.1175/WAF-D-17-0010.1 (2017).

Liu, X. et al. Classified identification and nowcast of hail weather based on radar products and random forest algorithm. Plateau Meteorol. 40(4), 898–908 (2021).

Yu, Y. Bayesian discrimination of hail clouds based on radar echo parameters. Shandong Meteorol. 4, 22–25. https://doi.org/10.19513/j.cnki.issn1005-0582.1985.04.008 (1985).

Li, B., Tang, X. & He, J. Hail identification based on machine learning methods. J. Meteorol. Sci. 42(05), 581–590. https://doi.org/10.12306/2021jms.0106 (2022).

Pullman, M., Gurung, I., Maskey, M., Ramachandran, R. & Christopher, S. A. Applying deep learning to hail detection: A case study. IEEE Trans. Geosci. Remote Sens. 57(12), 10218–10225. https://doi.org/10.1109/TGRS.2019.2931944 (2019).

Lan, M. et al. Hail automatic recognition based on deep learning method. Hubei Agric. Sci. 60(S2), 376–381. https://doi.org/10.14088/j.cnki.issn0439-8114.2021.S2.099 (2021).

Kolios, S. Hail detection from Meteosat satellite imagery using a deep learning neural network and a new remote sensing index. Adv. Space Res. https://doi.org/10.1016/j.asr.2023.06.016 (2023).

Ortega, K. L. et al. The severe hazards analysis and verification experiment. Bull. Am. Meteor. Soc. 90(10), 1519–1530. https://doi.org/10.1175/2009BAMS2815.1 (2009).

Blair, S. F. et al. High-resolution hail observations: Implications for NWS warning operations. Weather Forecast. 32(3), 1101–1119. https://doi.org/10.1175/WAF-D-16-0203.1 (2017).

Guo, Q., Han, Q. & Xie, L. Research on geostationary orbit FY-4 meteorological satellite simulator. Measurement and Control Technology. https://doi.org/10.19708/j.ckjs.2023.01.208 (2023).

Lindsey, D. T., Hillger, D. W., Grasso, L., Knaff, J. A. & Dostalek, J. F. GOES climatology and analysis of thunderstorms with enhanced 3.9-\(\mu\)m reflectivity. Mon. Weather Rev. 134(9), 2342–2353. https://doi.org/10.1175/MWR3211.1 (2006).

Zhuge, X. & Zou, X. Summertime convective initiation nowcasting over southeastern China based on Advanced Himawari Imager observations. J. Meteorol. Soc. Jpn. Ser. II 96, 337–353. https://doi.org/10.2151/jmsj.2018-041 (2018).

Schmit, T. J. et al. A closer look at the ABI on the GOES-R series. Bull. Am. Meteorol. Soc. 98, 681–698. https://doi.org/10.1175/BAMS-D-15-00230.1 (2017).

Schmetz, J. et al. An introduction to Meteosat Second Generation (MSG). Bull. Am. Meteorol. Soc. 83, 977–992. https://doi.org/10.1175/1520-0477(2002)083<0977:AITMSG>2.3.CO;2 (2002).

Yang, J., Zhang, Z., Wei, C., Lu, F. & Guo, Q. Introducing the new generation of Chinese geostationary weather satellites, Fengyun-4. Bull. Am. Meteorol. Soc. 98, 1637–1658. https://doi.org/10.1175/BAMS-D-16-0065.1 (2017).

Tan, M. & Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning, PMLR, pp. 6105–6114 (2019). https://doi.org/10.48550/arXiv.1905.11946

Atila, Ü., Uçar, M., Akyol, K. & Uçar, E. Plant leaf disease classification using EfficientNet deep learning model. Eco. Inform. 61, 101182. https://doi.org/10.1016/j.ecoinf.2020.101182 (2021).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016). https://doi.org/10.48550/arXiv.1512.03385

Howard, A. G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., Andreetto, M. & Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861 (2017). https://doi.org/10.48550/arXiv.1704.04861

Woo, S., Park, J., Lee, J.-Y. & Kweon, I. S. CBAM: Convolutional block attention module. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 3–19 (2018). https://doi.org/10.48550/arXiv.1807.06521

Xia, Y., Xu, X. & Pu, F. PCBA-Net: Pyramidal convolutional block attention network for synthetic aperture radar image change detection. Remote Sens. 14(22), 5762. https://doi.org/10.3390/rs14225762 (2022).

Wang, Q., Wu, B., Zhu, P., Li, P., Zuo, W. & Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 11534–11542 (2020). https://doi.org/10.1109/CVPR42600.2020.01155

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141 (2018). https://doi.org/10.48550/arXiv.1709.01507

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4510–4520 (2018). https://doi.org/10.48550/arXiv.1801.04381

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014). https://doi.org/10.48550/arXiv.1412.6980

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. https://doi.org/10.1145/3065386 (2012).

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4700–4708 (2017). https://doi.org/10.48550/arXiv.1608.06993

Zhang, X., Zhou, X., Lin, M. & Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6848–6856 (2018). https://doi.org/10.48550/arXiv.1707.01083

Author information

Contributions

R.L.: Conceptualization, Data treating, Formal analysis, Writing-original draft, Visualization. H.D: Conceptualization, Methodology, Formal analysis, Writing-review & editing. Y.C.: Formal analysis, Writing-review & editing. H.Z., D.W., H.L., D.L., and C.Z.: Formal analysis, Visualization. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, R., Dai, H., Chen, Y. et al. A study on the DAM-EfficientNet hail rapid identification algorithm based on FY-4A_AGRI. Sci Rep 14, 3505 (2024). https://doi.org/10.1038/s41598-024-54142-5

DOI: https://doi.org/10.1038/s41598-024-54142-5
