Abstract

We evaluate the diagnostic performance of deep learning artificial intelligence (AI) for bladder cancer, which used white-light images (WLIs) and narrow-band images, and tumor grade prediction of AI based on tumor color using the red/green/blue (RGB) method. This retrospective study analyzed 10,991 cystoscopic images of suspicious bladder tumors using a mask region-based convolutional neural network with a ResNeXt-101-32 × 8d-FPN backbone. The diagnostic performance of AI was evaluated by calculating sensitivity, specificity, and diagnostic accuracy, and its ability to detect cancers was investigated using the dice score coefficient (DSC). Using the support vector machine model, we analyzed differences in tumor colors according to tumor grade using the RGB method. The sensitivity, specificity, diagnostic accuracy and DSC of AI were 95.0%, 93.7%, 94.1% and 74.7%. In WLIs, there were differences in red and blue values according to tumor grade (p < 0.001). According to the average RGB value, the performance was ≥ 98% for the diagnosis of benign vs. low-and high-grade tumors using WLIs and > 90% for the diagnosis of chronic non-specific inflammation vs. carcinoma in situ using WLIs. The diagnostic performance of the AI-assisted diagnosis was of high quality, and the AI could distinguish the tumor grade based on tumor color.

Similar content being viewed by others

Introduction

Bladder tumor diagnosis and operation was usually performed using cystoscope. Among cystoscopic findings for bladder tumor, the prediction of benign, malignancy was depended on the experience of the urologist1. At transurethral resection of bladder tumor (TURBT), an experienced urologist estimated presence of malignancy lesion and tumor grade, and decided whether to perform superficial resection or additional bladder muscle biopsy. However, it is difficult to differentiate between chronic non-specific inflammation (CNI) and carcinoma in situ (CIS) and between low and high grade among urothelial carcinomas, and it is difficult to distinguish even when mass is too small. The national comprehensive cancer network guideline recommended consideration of the second look TURBT if the necessary muscle biopsy is not performed or incomplete TURBT2. The existing white light image (WLI) cystoscope has limitations in effective identifying the characteristics for small bladder tumors, flat tumors such as CIS. To increase diagnostic accuracy, narrow band image (NBI) cystoscope and blue light cystoscope have been developed3,4,5. Based on accurate diagnosis performed through these enhanced cystoscopes, high-quality TURBT without misdiagnosis, under-staging, or incomplete resection is the most important factor in non-muscle invasive urothelial bladder cancer management6. However, still better diagnostic image modality was required for high-quality TURBT.

Tumor grade is an important factor in determining whether or not to perform a muscle biopsy. High grade bladder tumor and low grade bladder tumor are empirically classified according to the shape of the tumor (papillary vs. sessile). However, it is not always clearly differentiated according to the tumor shape, it is difficult to distinguish flat tumors, such as CIS, from benign inflammatory lesions such as CNI. For increasing representativeness and overcoming the limitation of reliance on empirical distinguishing of tumor grades, artificial intelligence (AI) that used in various fields including the medical field was introduced in urological endoscope field. There are still few studies in the field of urology, especially bladder cancer diagnosis using AI. With the development of deep learning, image recognition by AI proved high accuracy in diagnosis7. AI-based image diagnosis is a potential modality to improve bladder cancer detection. For clinical application of AI in bladder tumor diagnosis, it is necessary distinguishing between the tumor grades (high grade vs. low grade, CIS vs. CNI, etc.) as well as determining cancer and non-cancer, however there was no study have been reported.

AI is a model that predicts based on learned data, and authors considered shapes and color as learned data. Several studies using numerical data of color using red/green/blue (RGB) method8 have already been reported in other carcinomas9,10,11, and we also have reported the study using numerical color data for hypoechoic lesions of transrectal ultrasound12. Accordingly, the authors performed deep learning of AI by collecting WLI and NBI cystoscopic images of histopathologically confirmed benign and malignant lesions and confirmed the diagnostic performance and ability to identify tumor contours. And making color data based on the tumor contours identified by AI, it was analyzed whether the tumor grades were able to distinguished according to the color of the tumor.

Results

Bladder cancer diagnostic performance of AI-assisted diagnostic device

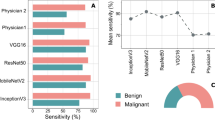

The sensitivity of the AI-assisted diagnostic device was 95.0% (285/300 cases), the 95% confidence interval (CI) was 91.9–97.2, and the specificity was 93.7% (562/600 cases) and the 95% CI was 91.9–97.2. The diagnostic accuracy of the AI-assisted diagnostic device was 94.1% (847/900 cases), and the 95% CI was 92.4–95.6. The area under the receiver characteristic curve was 0.974 and cut-off value was 0.384 (Fig. 1). The accuracy of lesion location recognition of the AI-assisted diagnostic device was confirmed to be 74.7% for dice score coefficient (DSC) and 74.6–74.9 for 95% CI.

Baseline RGB characteristics

We conducted the study only with anonymized cystoscopic images and histopathological pathology, so we could not identify other patient characteristics, including the patient's sex or laboratory values. The characteristics of RGB values according to benign tumor or tumor grade are presented in Table 1. In WLI, there were differences in red and blue values according to tumor grade (p < 0.001). In RGB values measured NBI or WLI by AI, there were no difference between benign vs. low grade and high grade, CNI vs. CIS. But between benign and low grade vs. high grade, there was a difference in red (p < 0.001) and blue (p < 0.001) values in WLI (Table 2).

Diagnostic performance of tumor grade by color measured by AI

The optimal cut-off value and diagnostic performance by support vector machine (SVM) was presented Table 3. Overall, the diagnostic performance was better in WLI than in NBI. In WLI, at diagnosis of benign vs. low grade and high grade, there were excellent results of 98% or more in both sensitivity and specificity and diagnostic accuracy. In distinguishing between CNI and CIS, both sensitivity, specificity, and diagnostic accuracy were more than 90% in WLI. In benign and low grade vs. high grade, although the diagnostic performance was relatively low, the separating hyperplane using SVM was classified two groups in WLI (Fig. 2).

Discussion

We confirmed that the newly developed AI-assisted diagnostic device has good diagnostic performance and ability to identify tumor contours, and tumor grade was distinguished by colors inside tumor contour that recognized by AI. The AUC for benign and low-grade tumors vs. high-grade tumors was relatively low, however, it was distinguished by separating hyperplane using SVM in WLI. Our results help follow-up studies of cystoscopy using AI.

Cystoscopy is the gold standard for bladder cancer diagnosis. Imaging modalities such as abdominal pelvic computed tomography and bladder magnetic resonance imaging are also used to diagnose bladder cancer. These modalities are useful for determining whether bladder cancer has metastasized or invaded the muscle layer or beyond but have limitations in identifying small tumors in the early stages. Additionally, it is more challenging to diagnose bladder tumors if the bladder is empty. Several guidelines recommend the use of cystoscopy when bladder cancer is suspected. However, it is sometimes difficult to differentiate between benign and malignant bladder tumors, and the diagnosis of CIS is challenging. Bladder cancer is prone to recurrence and is characterized by multiple lesions. The accurate identification of tumor margins and complete TURBT are crucial in preventing recurrence. However, although NBI cystoscopy and blue-light cystoscopy can overcome the limitations of white-light cystoscopy, better diagnostic tools are needed. Our results demonstrated the potential of AI models for accurately diagnosing bladder cancer.

In the field of urology, prostate cancer is being extensively studied from diagnosis to treatment using AI13. However, in the field of cystoscopy using AI, to our knowledge, there are only six reports14,15,16,17,18,19. Chan et al. reported a review article on previous studies on bladder cancer diagnosis using AI7. This study reviewed 1 brief correspondence, 1 presentation, and 4 research papers. The training dataset volumes for these reports were between 1680 and 6658 images, and our AI model was trained 8244 images, more than in these previous reports. The combined sensitivity was 89.7% (95% CI 87.5–91.4%) and the integration specificity was 96.1% (95% CI 89.0–98.7%), the diagnostic accuracy was 85.6–96.9%. Only one study was identified that evaluated the ability of AI to identify bladder image using DSC20. The DSC of this previous study was an average of 0.67, which was slightly lower than our result, but there was a difference in evaluating the ability to identify bladder stones and ureteral orifice in addition to tumors. The results of our AI-assisted diagnostic device are similar to or slightly superior to those of previous reports.

Our study is valuable and scalable as it is the first study to bladder lesions using RGB method. There were some previous studies using RGB method to distinguish pulmonary nodule21, esophageal lesions22, and skin lesions23. However, there were no studies using RGB method in the field of cystoscopy, and no studies in the field of urology that machine-learned the average RGB inside AI-identified tumor contours so we could not comparable, but this also presented good results. We have reported a successful study by applying the RGB method in transrectal ultrasound12. The color of bladder mass is different from that of the normal bladder mucosa, we made numerical data that the color of bladder tumor through RGB method. In our previous study, RGB was calculated as the average of three points of hypoechoic lesion on transrectal ultrasound, but in this study, the average RGB value of the area inside the tumor contours recognized by AI was calculated. This improved the representativeness of the RGB values of tumor compared to our previous study.

Our results using RGB in WLI show superior performance in specificity than the results of conventional NBI, especially in distinguishing between CNI and CIS (0.912 [95% CI 0.892–0.933] vs. 0.768 [95% CI 0.730–0.802])24. NBI limited the optical spectrum used in cystoscope by Wlters which allow transmission of light at short wavelengths of 415 nm (blue) and 540 nm (green)24,25. This allows light to penetrate the bladder surface tissue and hemoglobin preferentially absorbs these wavelengths, increasing the visibility of capillaries and submucosal vessels. According to a previous meta-analysis, NBI presented higher diagnostic accuracy than WLI in humans24. However, our results presented a higher AUC in WLI. It is presumed that this is because the limited short wavelength of light limits the difference in intrinsic color according to the grade of the tumor.

We confirmed the effectiveness of the deep learned AI-assisted diagnostic device and at the same time confirmed that the tumor grade was able to classify according to the color of the tumor. Our study is one of the few studies that confirmed the usefulness of AI in cystoscopic image diagnosis and is the first study to classify tumor grades through machine learning of average RGB inside tumor contour recognized by AI. We wonder if better results will be obtained if the deep learned AI additionally learns RGB data of tumor color. The results of these additional studies will be able to check whether the variables that AI learned included color data. Our study is valuable as a pilot study in this field, it had many limitations. First, our study reports that the diagnostic performance is good, but the number of initially trained images may not be sufficiency in the deep learning field. By training more numbers and diverse types of images, higher diagnostic performance and more similar tumor contours identification expected. The DSC for tumor identification is 0.75, and the average RGB value was measured based on images with a higher concordance rate of tumor identification between the physician and AI, but the RGB value may still be different from the RGB value of the actual tumor. Again, if more images are trained, more accurate RGB values were obtained. Second, as our first experience to evaluate the color of the overall tumor, color normalization was not performed because the color space in rendered images would differ significantly from that in real images. Additional considerations for proper color normalization processing are required. Third, since only internal test was performed about diagnostic performance of AI, we need to confirm the results of our study with external validation. In addition, as the study was conducted on per-image-basis, the independence between data sets may have been lower than that if the study was conducted on a per-patient-basis, and this may have affected the results. The multicenter prospective study we are planning will consider this issues. And our color data were divided according to tumor grade, the number of cases was insufficient in some types, including benign lesion images. Therefore, we focused on training rather than training and validation of existing data. Because of this, the possibility of overtraining cannot be ruled out. Additional validation and test is required and may require further training with larger number of color data. Fourth, the initially trained images were prepared by a urologist, it is consistent, but there is a possibility that the lesion may have been missed or underestimated or overestimated. Finally, differences according to urine turbidity and the distance between the target and the endoscope were not considered.

AI is widely used in various medical fields, and in the field of urology, it is applied from diagnosis to treatment for prostate cancer. However, research in the field of cystoscope is still insufficient. We developed an AI-assisted diagnostic device that distinguishes cancer and benign lesions by identifying bladder tumor contour through deep learning and confirmed that tumor grades are classified using SVM according to the color of the tumors obtained thereby. Our study is still in its infancy, but we hope that it will serve as the foundation for the development of a real-time AI-assisted cystoscope in the future.

Methods

Ethic approval

This study was approved by the institutional ethics committee (Yonsei University Health System, Seoul, Korea; approval number: 3-2021-0287) and all procedures were conducted in accordance with the ethical standards of the 1964 Declaration of Helsinki and its later amendments. The informed consent requirement was waived by ethics committee because this study was based on retrospective, anonymous patient data and did not involve patient intervention or the use of human tissue samples.

Images collection and annotated data set

We retrospectively collected data from 1010 consecutive patients who diagnosis bladder cancer by TURBT and 290 consecutive patients who diagnosis benign lesions by TURBT or diagnosis normal by cystoscopic exam between January 2017 and December 2020. Data were obtained from anonymized cystoscopic images and pathologic results. We collected 16,581 cystoscopic images of patients diagnosed with bladder cancer and 3957 cystoscopic images of patients diagnosed with benign lesions. All images were captured using flexible cystoscope (CYF-V2, Olympus Medical Systems Corporation, Tokyo, Japan). Among the acquired images, images of impacted stone in the bladder lesion, difficult to identify due to turbid urine or hematuria or out of focus, tumors except urothelial carcinoma or have no pathologic results were excluded. After screening, in order to secure more images of benign images, only images of clearly benign parts of cancer patients were additional extracted separately by experienced urologists. In the end, 905 patients with bladder cancer (6729 cancer images) and 405 patients with bladder cancer and without bladder cancer (4262 benign images) were classified, and a total of 10,991 images were learned and tested by AI. One urologist with more than 10 years of experience in TURBT identified tumor margins and differentiated between benign from malignant lesions by histopathological analysis. Only one image of each lesion was included in the analysis.

AI-assisted diagnostic device and deep learning

We commissioned Infinyx Corporation to develop an AI-assisted diagnostic device (Robin-Cysto®, Infinyx, Daegu, South Korea) that automated analysis cancer and non-cancer through cystoscopic images. The images that we provided were divided into a deep learning set (10,091 images) and a test set (900 images, 300 benign images of 100 patients and 600 cancer images of 223 patients). The 300 benign images of 100 patients without bladder cancer used in the test set were extracted from the 290 patients without bladder cancer data set and tested. Among the images of patients with cancers, a benign image was not included in test set. The deep learning set was divided into the training set for the predictive model (8244 images, 2742 benign images and 5502 cancer images) and the validation set (1847 images, 1220 benign images and 627 cancer images) by Infinyx Corporation. The training, validation, and testing sets were classified on a per-image-basis.

The AI model consisted of a Mask RCNN with a ResNeXt-101-32 × 8d-FPN backbone. Feature maps were extracted from each layer using a feature pyramid network. For learning, image data was input into the AI model, and the AI model predicted the tumor contour. The AI model compared the real tumor area and the estimated tumor area using loss function, and the model weight was corrected using the extracted loss. With regard to loss functions, classification used log loss, bbox regression used smooth L1 loss, and mask learning used sigmoid cross-entropy loss for each pixel. Total loss was the sum of these three losses. The optimizer applied a momentum of 0.9 and a weight decay of 0.0001 to stochastic gradient descent, and the learning rate (LR) started at 0.001 with 30,000 steps and ended at 0.0001 with 70,000 steps. The accuracy was calculated by inputting the dataset to the AI model and the modified model weights, and the above process was repeated, and the weighted model with the highest accuracy was saved. The final training was conducted with 90,000 steps, and the batch size per step was 6 (Figs. 3, 4). The image size ratio remained unchanged during training, and image height was randomly resized to 480, 640, 720, and 1080 pixels. The code was used Python language (version 3.9.9.; Python Software Foundation, Wilmington, DE), and the PyTorch library was used.

A schematic process for building an AI deep learning model. AI artificial intelligence, bbox bounding box, Conv convolutional layer, DICOM digital imaging and communications in medicine, fc fully connected layer, Mask R-CNN mask region-based convolutional neural networks, ROI resion of interest, RPN region proposal network.

Diagnostic performance of AI-assisted diagnostic device

The deep learned AI reads the digital imaging and communications in medicine format file of the cystoscopy images, and visualizes the contour of bladder tumor on the image with bladder tumor (Fig. 5). Sensitivity, specificity, and diagnostic accuracy were verified with test set (cancer images: 600, benign images: 300), and the tumor area recognized by AI and the tumor area read by physician in the same image were compared with the DSC. The DSC quantifies the overlap between two images of manually segmented lesion and AI recognized lesion26.

Data of tumor color

Among the 8244 images in the training set, color data were collected for the 5568 images in which the concordance rate of tumor identification by physician and AI were higher than DSC (cancer images: 4197, benign images: 1371). Using the embedded function of Robin-Cysto®, data of the color inside the tumor recognized by AI were obtained as the average RGB value from all pixels in the image8.

Study endpoint

The end points were evaluation of diagnostic performance and tumor recognition ability through internal verification of the AI-assisted diagnostic device, and confirmation of difference in tumor grade according to the color inside the tumor contour identified by AI.

Statistical analysis

The distinguishing ability of tumor grade using the RGB value was evaluated by confirming the optimal cut-off value of the prediction probability using the SVM model. SVM is a branch of machine learning for classification that learns the optimal "separation" between the characteristics of each group. This training algorithm finds a separating hyperplane with a maximum margin defined as the distance from each side to the nearest data point27. The optimal cut-off value was based on the Youden index (sensitivity + specificity − 1). In the case of three groups, the cut-off value was not able to confirmed because the probability of belonging to each group was calculated, so benign and cancer lesions were divided into two groups. Continuous variables are expressed as the mean ± standard deviation or median (interquartile range). Categorical variables are reported as number and frequency. Divided two groups were compared using the independent t-test for continuous variables. The results of the ability to classify tumor grade according to RGB values were presented as area under the receiver operating characteristic curve, sensitivity, specificity, and diagnostic accuracy. Statistical analyzes were performed using R Statistical Package (version 4.1.1.; Institute for Statistics and Mathematics, Vienna, Austria). Statistical significance was set at p < 0.05.

Data availability

The datasets used and analyzed during the current study are available from the corresponding author upon reasonable request.

References

Eminaga, O., Eminaga, N., Semjonow, A. & Breil, B. Diagnostic classification of cystoscopic images using deep convolutional neural networks. JCO Clin. Cancer Inform. 2, 1–8 (2018).

Flaig, T. W. et al. Bladder cancer, version 3.2020, NCCN clinical practice guidelines in oncology. J. Natl. Compr. Cancer Netw. 18, 329–354 (2020).

Emerson, R. E. & Cheng, L. Immunohistochemical markers in the evaluation of tumors of the urinary bladder: A review. Anal. Quant. Cytol. Histol. 27, 301–316 (2005).

Goh, A. C. & Lerner, S. P. Application of new technology in bladder cancer diagnosis and treatment. World J. Urol. 27, 301–307. https://doi.org/10.1007/s00345-009-0387-z (2009).

Pradère, B. et al. Two-photon optical imaging, spectral and fluorescence lifetime analysis to discriminate urothelial carcinoma grades. J. Biophotonics 11, e201800065 (2018).

Richards, K. A., Smith, N. D. & Steinberg, G. D. The importance of transurethral resection of bladder tumor in the management of nonmuscle invasive bladder cancer: A systematic review of novel technologies. J. Urol. 191, 1655–1664. https://doi.org/10.1016/j.juro.2014.01.087 (2014).

Chan, E. O., Pradere, B., Teoh, J. Y., European Association of Urology-Young Academic Urologists Urothelial Carcinoma Working, G. The use of artificial intelligence for the diagnosis of bladder cancer: A review and perspectives. Curr. Opin. Urol. 31, 397–403. https://doi.org/10.1097/MOU.0000000000000900 (2021).

Anderson, M., Motta, R., Chandrasekar, S. & Stokes, M. In Color and Imaging Conference. 238–245 (Society for Imaging Science and Technology).

Tsai, C. L. et al. Hyperspectral imaging combined with artificial intelligence in the early detection of esophageal cancer. Cancers (Basel). https://doi.org/10.3390/cancers13184593 (2021).

Zheng, X., Xiong, H., Li, Y., Han, B. & Sun, J. RGB and HSV quantitative analysis of autofluorescence bronchoscopy used for characterization and identification of bronchopulmonary cancer. Cancer Med. 5, 3023–3030. https://doi.org/10.1002/cam4.831 (2016).

Hosking, A. M. et al. Hyperspectral imaging in automated digital dermoscopy screening for melanoma. Lasers Surg. Med. 51, 214–222. https://doi.org/10.1002/lsm.23055 (2019).

Yoo, J. W. & Lee, K. S. Usefulness of grayscale values measuring hypoechoic lesions for predicting prostate cancer: An experimental pilot study. Prostate Int. 10, 28–33 (2021).

Goldenberg, S. L., Nir, G. & Salcudean, S. E. A new era: Artificial intelligence and machine learning in prostate cancer. Nat. Rev. Urol. 16, 391–403. https://doi.org/10.1038/s41585-019-0193-3 (2019).

Shkolyar, E. et al. Augmented bladder tumor detection using deep learning. Eur. Urol. 76, 714–718. https://doi.org/10.1016/j.eururo.2019.08.032 (2019).

Ikeda, A. et al. Support system of cystoscopic diagnosis for bladder cancer based on artificial intelligence. J. Endourol. 34, 352–358. https://doi.org/10.1089/end.2019.0509 (2020).

Teoh, J. et al. A newly developed computer-aided endoscopic diagnostic system for bladder cancer detection. Eur. Urol. Open Sci. 19, e1364–e1365 (2020).

Suarez-Ibarrola, R. et al. A novel endoimaging system for endoscopic 3D reconstruction in bladder cancer patients. Minim. Invasive Ther. Allied Technol. 31, 1–8 (2020).

Yang, R. et al. Automatic recognition of bladder tumours using deep learning technology and its clinical application. Int. J. Med. Robot. Comput. Assisted Surg. 17, e2194 (2021).

Ikeda, A. et al. Cystoscopic imaging for bladder cancer detection based on stepwise organic transfer learning with a pretrained convolutional neural network. J. Endourol. 35, 1030–1035. https://doi.org/10.1089/end.2020.0919 (2021).

Negassi, M., Suarez-Ibarrola, R., Hein, S., Miernik, A. & Reiterer, A. Application of artificial neural networks for automated analysis of cystoscopic images: A review of the current status and future prospects. World J. Urol. 38, 2349–2358. https://doi.org/10.1007/s00345-019-03059-0 (2020).

Chen, S., Han, Y., Lin, J., Zhao, X. & Kong, P. Pulmonary nodule detection on chest radiographs using balanced convolutional neural network and classic candidate detection. Artif. Intell. Med. 107, 101881. https://doi.org/10.1016/j.artmed.2020.101881 (2020).

Yoshida, T. et al. Narrow-band imaging system with magnifying endoscopy for superficial esophageal lesions. Gastrointest. Endosc. 59, 288–295 (2004).

Seidenari, S., Pellacani, G. & Grana, C. Pigment distribution in melanocytic lesion images: A digital parameter to be employed for computer-aided diagnosis. Skin Res. Technol. 11, 236–241 (2005).

Zheng, C., Lv, Y., Zhong, Q., Wang, R. & Jiang, Q. Narrow band imaging diagnosis of bladder cancer: Systematic review and meta-analysis. BJU Int. 110, E680-687. https://doi.org/10.1111/j.1464-410X.2012.11500.x (2012).

Gono, K. et al. Appearance of enhanced tissue features in narrow-band endoscopic imaging. J. Biomed. Opt. 9, 568–577 (2004).

Shu, X. et al. Three-dimensional semantic segmentation of pituitary adenomas based on the deep learning framework-nnU-Net: A clinical perspective. Micromachines (Basel). https://doi.org/10.3390/mi12121473 (2021).

Noble, W. S. What is a support vector machine?. Nat. Biotechnol. 24, 1565–1567 (2006).

Acknowledgements

This study was supported by a faculty research grant from the Yonsei University College of Medicine (6-2017-0085).

Funding

This study was supported by a faculty research grant from the Yonsei University College of Medicine (6-2017-0085).

Author information

Authors and Affiliations

Contributions

Protocol/project development: J.W.Y., S.Y.B., and K.S.L. Data collection and management: J.W.Y., K.C.K., B.H.C., and K.S.L. Data analysis: J.W.Y., S.Y.B., S.J.L., K.H.P. and K.S.L. Manuscript writing/editing: J.W.Y. and K.S.L. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yoo, J.W., Koo, K.C., Chung, B.H. et al. Deep learning diagnostics for bladder tumor identification and grade prediction using RGB method. Sci Rep 12, 17699 (2022). https://doi.org/10.1038/s41598-022-22797-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-22797-7

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.