Abstract

In this work we investigate the problem of simultaneous estimation of phases using generalised three- and four-mode Mach–Zehnder interferometers. In our setup, we assume that a phase is placed in each of the modes of the interferometer, which introduces correlations between the estimators of the phases. These correlations prevent simultaneous estimation of all the phases; however, we show that we can still obtain the Heisenberg-like scaling of precision of joint estimation of any subset of \(d-1\) phases, where d is the number of modes, within a completely fixed experimental setup, namely with the same initial state and the same set of measurements. Our estimation scheme can be applied to the task of quantum-enhanced sensing in three-dimensional interferometric configurations.


Introduction

Multiparameter estimation is a rapidly growing field of quantum metrology1,2,3,4,5,6,7,8, in which an initial quantum state undergoes an evolution that depends on several parameters, and the task is to estimate these parameters from the measurement statistics of the final state with as low a variance as possible. Multiparameter estimation encounters specific difficulties which do not occur in the single-parameter case9,10. One of the most significant is the possible occurrence of correlations between the estimators corresponding to different parameters, which can decrease the overall precision of joint estimation3. In this regard, it is also worth mentioning that Ref.11 showed that the number of simultaneously estimatable parameters is reduced when an external reference mode is absent. The main tool used to evaluate the precision of multiparameter estimation is the generalisation of the Quantum Fisher Information (QFI)12 to the multiparameter case, known as the Quantum Fisher Information Matrix (QFIM)5. For sufficiently uncorrelated parameters the QFIM is invertible, and the covariance matrix of the estimated parameters is bounded from below by the inverse of the QFIM. This bound is the multiparameter version of the Quantum Cramer–Rao bound (QCRB)3. The elements of the QFIM depend on the size of the initial probe state: if the state is N-partite, the elements of the QFIM can depend at most quadratically on N, which is known as the Heisenberg-like (HL) scaling13,14. Several works have shown that the Heisenberg-like scaling of precision of estimation of all the parameters is possible for an entangled input state and a suitable measurement strategy, which in principle demands the use of arbitrary multiports1,4,15,16,17,18.

In this work we state the problem of simultaneous estimation of multiple phases using 3- and 4-port generalised Mach–Zehnder interferometers19. We consider a scenario in which a phase is placed in each of the internal ports of the d-mode interferometer (see Fig. 1a), and the task is to simultaneously estimate any \((d-1)\)-element subset of them, whereas the remaining one is known and serves as a phase reference.

Note that such a configuration implies that the phases are strongly correlated, and the QFIM for all the phases in the interferometer is singular. The singularity of the QFIM reflects the impossibility of simultaneously estimating all the phases without an external reference mode11,20,21.

We show that with a fixed initial entangled probe state and a fixed interferometer one can obtain the Heisenberg-like scaling of precision of simultaneous estimation of any \((d-1)\)-element subset of the phases, without any change in the setup. That is, both the initial state and the set of local measurements are the same when estimating each subset.

In a typical approach to quantum phase estimation one treats the entire unitary part before the phaseshifts as the preparation of the initial state, and the part after them as the implementation of the measurement. In this work we take a different point of view and treat the entire interferometer as a single fixed unitary operation, with the aim of investigating the metrological properties of a generalised Mach–Zehnder interferometer as a whole.

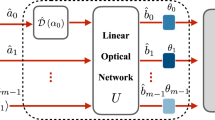

In our setup (see Fig. 1) we use only fixed symmetric multiports and an N-partite entangled state which is equivalent to a GHZ state22 of d-level systems via a local unitary transformation specified by an additional symmetric multiport. To perform a detailed analysis of the precision of estimation, we develop an analytical description, in terms of Heisenberg–Weyl operators, of a generalised 3- and 4-mode Mach–Zehnder (MZ) interferometer19 composed of two symmetric multiports intertwined with single-mode phase shifters. We find that the entire evolution of the quantum state in such an interferometer is generated by generalised unitary Pauli Y operators, in analogy with the original two-mode case. This approach allows us to analytically assess the estimation precision of any \((d-1)\)-element subset of phases by calculating the inverse of the classical Fisher Information Matrix3. We show that even though we use the same initial state and the same measurement for estimating all the subsets of phases, we still obtain the Heisenberg-like scaling of precision of estimation for each of the subsets.

Our setup can be presented in two configurations which are equivalent from the estimation perspective. The first configuration, which we use for the detailed analytical discussion of the estimation precision, consists of N local MZ interferometers in a star-like configuration (see Fig. 1b). We further present a much more experimentally feasible single-interferometer version of the setup (a single interferometer, as depicted in Fig. 1a), which uses the so-called NOON states23 as the initial probe states.

There are several works discussing multiphase estimation with symmetric multiports24,25,26,27,28. They assume that the number of parameters to estimate is lower than the number of modes (in order to perform simultaneous estimation) and that the initial state contains a small, definite number of photons, which precludes a discussion of the scaling of precision with the size of the input state. Our analysis differs from these works in the following points: (i) we assume that a phase is placed in each mode of the interferometer, and demonstrate that the Heisenberg-like scaling of precision of simultaneous estimation of any \((d-1)\)-element subset of the phases can be obtained in a completely fixed interferometric setup; (ii) we do not use the methods of the QCRB, which in our case would not guarantee the achievability of the Heisenberg-like scaling with the same fixed setup; instead we directly use a classical Fisher-information-based analysis of the output probabilities, which does not raise questions of achievability, as no implicit optimisation over measurement procedures is involved; (iii) we assume an input state with an arbitrary number of photons, which allows directly for a discussion of asymptotic scaling.

As a side result, we show that, contrary to previous statements29, it is possible to construct a 5-mode fully symmetric multiport whose evolution is generated by a symmetric Hamiltonian.

(a) A generalised d-mode Mach–Zehnder (MZ) interferometer consisting of symmetric multiports intertwined with d phaseshifts to be estimated. (b) An N-party configuration of the estimation setup, which consists of a central source of GHZ-path-entangled photons and N local stations, each consisting of a generalised MZ interferometer from Fig. 1a.

Results

Analytical description of generalised Mach–Zehnder interferometer

In this section we describe our setup for the estimation of multiple phases. For convenience of presentation we use the standard Hilbert space description, which requires introducing N interferometers; however, as we discuss in detail in the “Single-interferometer optical implementation” section, the entire scenario can equally well be described in the second-quantised framework, in which a single interferometer suffices. The setup (see Fig. 1b) consists of an N-partite GHZ source which sends single photons to N measurement stations, such that a d-mode bundle goes to each of the stations and the basis states are encoded in the path of the photon within the bundle. Therefore the initial state reads:

\[ |\psi _{in}\rangle = U_d^{\otimes N}\,\frac{1}{\sqrt{d}}\sum _{k=0}^{d-1}|k\rangle ^{\otimes N}, \]

in which the preparation symmetric multiport \(U_d\) will be specified later. Each of the N measurement stations then applies a d-mode generalised Mach–Zehnder interferometer involving d phases, the \((d-1)\)-element subsets of which will be estimated. A generalised Mach–Zehnder interferometer19 consists of two symmetric multiports intertwined with a series of phaseshifts on each of the d modes linking the multiports (see Fig. 1a). By a symmetric multiport we mean a d-mode multiport with the property that a single photon entering through any of the d input modes has a uniform probability \(\frac{1}{d}\) of being detected in any of the d output modes (see e.g.19).
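The defining property of a symmetric multiport is easy to check numerically. The following is a minimal sketch in Python with NumPy, assuming the normalised d-mode discrete Fourier transform as an example of a symmetric multiport; the multiports used in the text are specified by the formulas in the following sections and may differ from it by phases. The helper names `dft_multiport` and `is_symmetric_multiport` are hypothetical.

```python
import numpy as np


def dft_multiport(d: int) -> np.ndarray:
    """Normalised d x d discrete Fourier transform, F_jk = omega**(j*k) / sqrt(d).

    Used here only as a hypothetical example of a symmetric multiport."""
    omega = np.exp(2j * np.pi / d)
    idx = np.arange(d)
    return omega ** np.outer(idx, idx) / np.sqrt(d)


def is_symmetric_multiport(u: np.ndarray, atol: float = 1e-12) -> bool:
    """True if u is unitary and every |u_jk|**2 equals 1/d, i.e. a photon
    entering any input mode reaches each output mode with probability 1/d."""
    d = u.shape[0]
    unitary = np.allclose(u.conj().T @ u, np.eye(d), atol=atol)
    uniform = np.allclose(np.abs(u) ** 2, 1.0 / d, atol=atol)
    return bool(unitary and uniform)


for d in range(2, 7):
    print(d, is_symmetric_multiport(dft_multiport(d)))
```

Any unitary with all moduli equal to \(1/\sqrt{d}\) passes this test, so the check only captures the uniform-probability property, not the particular phases of a given multiport.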

For metrological considerations one usually needs a description of an interferometer in the Hamiltonian-like form \(U=e^{-i h_i\alpha _i}\) (summation over i implied), with an explicit form of the generators corresponding to the phaseshifts. The typical choice of the operator basis for finding the generators \(h_i\) is the set of Gell-Mann matrices; however, we found the Heisenberg–Weyl operator basis to be a much more convenient choice for describing interferometers based on symmetric multiports. It is defined as:

where \(\omega = \exp (2i\pi /d)\). In the following we present the explicit form of the generators for \(d=2,3,4\).
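The Heisenberg–Weyl operators can be constructed explicitly. The sketch below (Python with NumPy) assumes the common clock-and-shift convention \(X|j\rangle = |j+1 \bmod d\rangle\), \(Z|j\rangle = \omega ^j|j\rangle \); the convention in (2), including the exact definition of the generalised Y operator, may differ by phases or ordering, so the code only illustrates generic properties: the relation \(ZX=\omega XZ\) and the fact that the \(d^2\) products \(X^aZ^b\) form an orthogonal operator basis under the Hilbert–Schmidt inner product. Function names are hypothetical.

```python
import numpy as np


def weyl_xz(d: int):
    """Generalised X (shift) and Z (clock) operators, under the assumed
    convention X|j> = |j+1 mod d>, Z|j> = omega**j |j>."""
    omega = np.exp(2j * np.pi / d)
    x = np.roll(np.eye(d), 1, axis=0)   # column j has its single 1 in row j+1 (mod d)
    z = np.diag(omega ** np.arange(d))
    return x, z, omega


def weyl_gram(d: int) -> np.ndarray:
    """Hilbert-Schmidt Gram matrix Tr(P^dagger Q) of the d**2 operators X**a Z**b."""
    x, z, _ = weyl_xz(d)
    mp = np.linalg.matrix_power
    ops = [mp(x, a) @ mp(z, b) for a in range(d) for b in range(d)]
    return np.array([[np.trace(p.conj().T @ q) for q in ops] for p in ops])


x3, z3, w3 = weyl_xz(3)
print(np.allclose(z3 @ x3, w3 * x3 @ z3))          # clock-shift relation ZX = omega XZ
print(np.allclose(weyl_gram(3), 3 * np.eye(9)))    # orthogonal basis of 3 x 3 matrices
```

For \(d=2\) these matrices reduce to the Pauli \(\sigma _x\) and \(\sigma _z\), which is the sense in which the two-mode case below recovers the standard Pauli basis.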

Two-mode case

In the two-mode case the Heisenberg–Weyl operators (2) are equivalent to the standard Hermitian Pauli matrix basis. The symmetric two-port can be expressed in the following form:

whereas the phase-imprinting part of the interferometer has the following representation:

The evolution of the entire interferometer can be expressed in a concise way using only Y operators:

The \({\mathscr {U}}_2\) evolution is generated by two Hamiltonians:

the eigenvalues of which read: \(\{0,1\}\).

Three-mode case

The description of the 3-mode case in the Heisenberg–Weyl basis turns out to be completely analogous to the 2-mode one. Firstly, the symmetric multiport has the following representation in terms of generalised X operators:

whereas the phase part depends solely on generalised Z operators:

The entire evolution is, in full analogy to the two-mode case, generated solely by the generalised Y matrices:

The \({\mathscr {U}}_3\) evolution is generated by three Hamiltonians:

The above Hamiltonians fulfill \(h_1+h_2+h_3 = 0\), and their eigenvalues read \(\{2/3,-1/3,-1/3\}\). Note that although in the three-mode case the Heisenberg–Weyl operators (2) are no longer Hermitian, their appropriate combinations give rise to proper Hermitian Hamiltonians (11).
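The spectral properties quoted above can be reproduced under a simple model. Assuming (our assumption, not the explicit form (11)) that the generator of \(\alpha _i\) is \(h_i = V\left( |i\rangle \langle i| - \frac{1}{d}\mathbb {1}\right) V^\dagger \) with V a symmetric multiport (the DFT is used here), the Python sketch below checks Hermiticity, \(h_1+h_2+h_3=0\), the eigenvalues \(\{2/3,-1/3,-1/3\}\) and mutual commutation. It does not reproduce the Y-operator representation itself. Names are hypothetical.

```python
import numpy as np


def dft_multiport(d):
    omega = np.exp(2j * np.pi / d)
    idx = np.arange(d)
    return omega ** np.outer(idx, idx) / np.sqrt(d)


def phase_generators(d, v=None):
    """Hypothetical generators h_i = V (|i><i| - 1/d) V^dagger of the phase alpha_i,
    seen through a symmetric multiport V (the DFT by default, an assumption)."""
    v = dft_multiport(d) if v is None else v
    eye = np.eye(d)
    return [v @ (np.outer(eye[i], eye[i]) - eye / d) @ v.conj().T for i in range(d)]


hs = phase_generators(3)
print(np.allclose(sum(hs), 0))                                    # h_1 + h_2 + h_3 = 0
print([np.round(np.linalg.eigvalsh(h), 6).tolist() for h in hs])  # each {-1/3, -1/3, 2/3}
```

Because the \(h_i\) are unitarily rotated projectors shifted by \(\frac{1}{d}\mathbb {1}\), their eigenvalues are \(\{1-1/d, -1/d,\ldots \}\) for any choice of V; dropping the shift gives projectors with eigenvalues \(\{0,1\}\), as quoted for the two-mode case, and only changes a global phase.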

Plot of the right-hand side of the Cramer–Rao bound (18), \({\text {Tr}}\left( \left( {\mathscr {F}}(\alpha _1, \alpha _2)_{{\mathscr {I}}, {\mathscr {I}}}\right) ^{-1}\right) \), as a function of the jointly estimated phases \(\alpha _1\) and \(\alpha _2\) in a 3-mode Mach–Zehnder interferometer, for the number of photons in the initial state set to \(N=8\). The minimal value of \({\text {Tr}}\left( \left( {\mathscr {F}}(\alpha _1, \alpha _2)_{{\mathscr {I}}, {\mathscr {I}}}\right) ^{-1}\right) \) for the optimal values of the estimated phases reads \(\frac{6+\sqrt{3}}{128}\approx 0.06\), whereas the Monte Carlo estimate of the median, with both phases drawn uniformly, reads 0.65.

Four-mode case

The description of the 4-mode case is somewhat more complicated. The symmetric multiport still has an analogous simple form in terms of the generalised X operator:

whereas the phase-imprinting part can be presented in the following concise way:

where the entries of the vector \(\vec z\) fulfill the relations:

and \(K_{mn} = \omega ^{(m-1)(n-1)}\). The entire evolution is generated by 4 Hamiltonians:

however, their exact form, although still depending only on generalised Y matrices, is much more complicated than in the previous cases:

where the star \(^*\) denotes complex conjugation and, for clarity, we introduce the constants:

The eigenvalues of \(h_i\) are \(\{3/4, -1/4, -1/4, -1/4\}\). As in the previous case, properly defined functions of the non-Hermitian Heisenberg–Weyl operators give rise to Hermitian Hamiltonians.

Precision of estimation of multiple phases with generalised Mach–Zehnder interferometer

In this section we analyse the estimation precision of the proposed scheme for \(d=3,4\) and an arbitrary number N of photons in the initial state. There are two kinds of phases in our experiment: the \((d-1)\)-element subset of unknown phases to be jointly estimated, and one remaining phase, assumed to be fixed and known, which serves as a phase reference. Such a situation, in which the set of all parameters determining the final probability distribution is divided into parameters of interest (the ones we estimate) and additional parameters, is well known in estimation theory30 (see the “Methods” section for a more detailed presentation). In our case the additional parameter is the reference phase. Since we assume an entirely fixed experimental setup with no optimisation of measurements, we evaluate the precision of estimation using classical Fisher Information Matrix techniques. The precision of joint estimation of several parameters in the presence of fixed and known additional parameters is specified by the Cramer–Rao bound based on the inverse of the Fisher Information submatrix corresponding to the parameters of interest (55). In order to describe the precision of estimation by a single quantity, we take the trace of both sides of the Cramer–Rao bound (55)27:

where in our case the matrix \({\mathscr {F}}(\vec \alpha )_{{\mathscr {I}}, {\mathscr {I}}}\) is the Fisher Information submatrix corresponding to the subset of jointly estimated phases (denoted here by the symbol \({\mathscr {I}}\), meaning parameters of interest; see the “Methods” section). Our task is therefore to analyse the behaviour of the quantity \({\text {Tr}}\left( \left( {\mathscr {F}}(\vec \alpha )_{{\mathscr {I}}, {\mathscr {I}}}\right) ^{-1}\right) \) as a function of the number of photons N in order to find the asymptotic scaling of precision.
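The scalar figure of merit \({\text {Tr}}\left( \left( {\mathscr {F}}_{{\mathscr {I}}, {\mathscr {I}}}\right) ^{-1}\right) \) only requires inverting the block of the parameters of interest. The Python sketch below uses a hypothetical 3-phase FIM with zero row sums, mimicking the fact that shifting all phases together is unobservable: the full matrix is then singular, yet each \(2\times 2\) block is invertible. The function name `trace_inv_submatrix` and the matrix entries are illustrative and not taken from the paper.

```python
import numpy as np


def trace_inv_submatrix(fim, interest):
    """Tr((F_{I,I})^{-1}): invert only the block of the parameters of interest,
    treating all remaining parameters as fixed and known."""
    interest = list(interest)
    sub = np.asarray(fim, dtype=float)[np.ix_(interest, interest)]
    return float(np.trace(np.linalg.inv(sub)))


# Hypothetical 3-phase FIM whose rows sum to zero: shifting all phases together
# changes nothing, so the full matrix is singular.
fim = np.array([[2.0, -1.0, -1.0],
                [-1.0, 2.0, -1.0],
                [-1.0, -1.0, 2.0]])
print(np.linalg.matrix_rank(fim))          # 2: the full FIM cannot be inverted
print(trace_inv_submatrix(fim, [0, 1]))    # 4/3, with the third phase as the reference
```

By the permutation symmetry of this example, every choice of reference phase gives the same value, analogous to the reference-independence of the submatrices discussed below.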

In our setup we allow for an optimisation of the initial state; the optimal state depends solely on the dimension of the local multiport. As the optimisation criterion we take the maximisation of the mean QFI per parameter. We use the following inequality:

which follows directly from (50) (see the “Methods” section). Note that the LHS of the above inequality is just the mean QFI per parameter, which can be treated as an approximate measure of the average estimation performance per parameter. The total collective Hamiltonian corresponding to the action of N local interferometers reads:

\[ H_i = \sum _{k=1}^{N} \mathbb {1}^{\otimes (k-1)}\otimes h_i \otimes \mathbb {1}^{\otimes (N-k)}, \tag{20} \]

where \(h_i\) denotes any of the local Hamiltonians from formulas (11) and (16). The inequality (19) implies that the optimal state should be an eigenstate of the operator \(\sum _i H_i^2\).

Three-mode case

Optimal state

The N-qutrit optimal state that maximizes the trace of the QFIM \({\mathscr {F}}^{Q}\) is given by:

where

This can be proven by noticing that the operator \(U_3\) simultaneously diagonalises the local Hamiltonians (11). Indeed, the operators (11) are expressible solely in terms of the operators Y and \(Y^2\), and we have the relations \(U_{3}^{\dagger } Y U_{3} = Z\) and \(U_{3}^{\dagger } Y^2 U_{3} = Z^2\), where Z and \(Z^2\) are diagonal by definition. Consequently, after the action of the collective unitary operation \(U_{3}^{\otimes N}\), the total collective Hamiltonians \(H_i\) (20) are diagonal with the eigenstates \(|0...0\rangle , |1...1\rangle \) and \(|2...2\rangle \), which implies that the operator \(\sum _i H_i^2\) is also diagonal with the same set of eigenstates.
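The Heisenberg-like scaling of the QFIM for this state can be checked numerically for small N. The Python sketch below works in the frame obtained after applying \(U_3^{\otimes N}\) and assumes that there the local generator of \(\alpha _i\) acts as \(|i\rangle \langle i| - \frac{1}{3}\mathbb {1}\) (our assumption, consistent with the eigenvalues \(\{2/3,-1/3,-1/3\}\) quoted above). It evaluates the pure-state QFIM (50) for the GHZ-type state \((|0\ldots 0\rangle +|1\ldots 1\rangle +|2\ldots 2\rangle )/\sqrt{3}\). All function names are hypothetical.

```python
import numpy as np
from functools import reduce


def embed(local, n, site):
    """`local` acting on qudit `site` of an n-qudit register, identity elsewhere."""
    ops = [np.eye(local.shape[0])] * n
    ops[site] = local
    return reduce(np.kron, ops)


def collective_hamiltonians(d, n):
    """H_i = sum_k h_i^{(k)} in the frame where h_i = |i><i| - 1/d (assumed)."""
    eye = np.eye(d)
    local = [np.outer(eye[i], eye[i]) - eye / d for i in range(d)]
    return [sum(embed(h, n, k) for k in range(n)) for h in local]


def ghz(d, n):
    """(|0...0> + |1...1> + ... + |d-1...d-1>) / sqrt(d)."""
    eye = np.eye(d)
    return sum(reduce(np.kron, [eye[k]] * n) for k in range(d)) / np.sqrt(d)


def qfim_pure(psi, hs):
    """F^Q_ij = 4 Re(<H_i H_j> - <H_i><H_j>) for a pure state and commuting generators."""
    means = [np.vdot(psi, h @ psi) for h in hs]
    m = len(hs)
    return np.array([[4 * np.real(np.vdot(psi, hs[i] @ (hs[j] @ psi)) - means[i] * means[j])
                      for j in range(m)] for i in range(m)])


n, d = 4, 3
f_q = qfim_pure(ghz(d, n), collective_hamiltonians(d, n))
print(np.round(f_q * d**2 / (4 * n**2), 6))            # d*delta_ij - 1: a singular matrix
print(np.trace(np.linalg.inv(f_q[:2, :2])) * n**2)    # d(d-1)/2 = 3
```

Under these assumptions the QFIM equals \(\frac{4N^2}{9}(3\delta _{ij}-1)\): it has zero row sums, so it is singular for all three phases, while its \(2\times 2\) block gives \({\text {Tr}}(\cdot ^{-1}) = 3/N^2\). This is only a QFIM-level value; the corresponding classical quantity for a fixed measurement cannot be smaller. For \(N=8\) it gives \(3/64\approx 0.047\), below the minimal classical value \(\frac{6+\sqrt{3}}{128}\approx 0.060\) quoted in the caption of Fig. 2, as it should be.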

Achievable precision

As described in the “Methods” section, in order to calculate the estimation precision via the classical Fisher Information matrix (46) we have to determine the parameter-dependent probability distribution of measurement outcomes \(p(k|\vec {\alpha })\). In our setup the outcomes are labeled by the numbers \(i_k\in \{0,1,2\}\), which denote a detector click in local mode \(i_k\) at measurement station k; therefore the distribution has the form \(p(i_1,\ldots ,i_N|\vec \alpha )\). Further, as we show in the “Methods” section, the final probability distribution (59) depends only on the total numbers of clicks in local modes \(\{0,1,2\}\), specified by the integers z, j, d respectively; therefore \(p(i_1,\ldots ,i_N|\vec \alpha )=p(z,j,d|\vec \alpha )\). Using the final form of the probability distribution (59) we can directly calculate the classical \(2\times 2\) Fisher Information submatrix (46) corresponding to joint estimation of two of the three phases, \(\{\alpha _1,\alpha _2\}={\mathscr {I}}\), whereas the third phase is set to zero and serves as the reference:

where the multinomial coefficient counts the number of distinct detection sequences giving rise to a total of z, j, d clicks in modes \(\{0,1,2\}\). In the above formula we took the third mode as the reference mode; however, the form of the above Fisher information submatrix does not depend on this choice, owing to the symmetry of the final probability distribution (59) with respect to the parameters \(\alpha _i\). Therefore the following analysis holds for the estimation of any pair of phases in the interferometer.
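The mechanics of this calculation (a sum over count vectors with multinomial weights) can be illustrated on a deliberately simplified toy model. This is not the distribution (59): the sketch assumes, hypothetically, that the GHZ state acquires the phases \(e^{-iN\alpha _k}\) and is then measured through a single d-mode discrete Fourier transform, so that one ordered click sequence with counts \(n_m\) has amplitude \(\sum _k e^{-iN\alpha _k}\omega ^{ks}/\sqrt{d^{N+1}}\) with \(s=\sum _m m\,n_m \bmod d\). Python with NumPy; all names are ours.

```python
import numpy as np
from math import factorial


def count_vectors(n, d):
    """All (n_0, ..., n_{d-1}) of non-negative integers summing to n."""
    if d == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in count_vectors(n - first, d - 1):
            yield (first,) + rest


def multinomial(counts):
    out = factorial(sum(counts))
    for c in counts:
        out //= factorial(c)
    return out


def toy_fisher(alphas_of_interest, n, d=3):
    """Classical FIM of the toy model; the last phase is the known reference (= 0).
    Returns (FIM, total probability) so that normalisation can be checked."""
    alphas = np.append(np.asarray(alphas_of_interest, dtype=float), 0.0)
    omega = np.exp(2j * np.pi / d)
    k = np.arange(d)
    fim = np.zeros((d - 1, d - 1))
    total = 0.0
    for counts in count_vectors(n, d):
        s = sum(m * c for m, c in enumerate(counts)) % d
        terms = np.exp(-1j * n * alphas) * omega ** (k * s) / np.sqrt(float(d) ** (n + 1))
        amp = terms.sum()
        p = abs(amp) ** 2                                            # one ordered sequence
        grad = 2 * np.real(np.conj(amp) * (-1j * n * terms[:-1]))   # dp/dalpha_i
        weight = multinomial(counts)                                 # sequences with these counts
        total += weight * p
        if p > 1e-15:
            fim += weight * np.outer(grad, grad) / p
    return fim, total


def trace_inv(fim):
    return float(np.trace(np.linalg.inv(fim)))


f1, _ = toy_fisher([0.5, 1.5], n=1)
f4, _ = toy_fisher([0.125, 0.375], n=4)
print(trace_inv(f1), 16 * trace_inv(f4))   # equal: Tr(F^{-1}) scales as 1/N**2
```

In this toy model the FIM satisfies \({\mathscr {F}}_N(\vec \alpha )=N^2{\mathscr {F}}_1(N\vec \alpha )\), so the trace of its inverse falls off as \(1/N^2\) when the working point is rescaled with N. Like the full model, it is local: the precision depends strongly on where the phases sit.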

The exact analytical expression for the Fisher information submatrix defined above is complicated for arbitrary values of the \(\alpha \)’s; however, we were able to calculate its inverse and find the optimal scaling of the quantity \({\text {Tr}}\left( \left( {\mathscr {F}}(\vec \alpha )_{{\mathscr {I}}, {\mathscr {I}}}\right) ^{-1}\right) \) as a function of the number of photons N. It reads:

for the optimal values of estimated phases:

Assuming that the estimated phases are equal, \(\alpha _1=\alpha _2=\alpha \), the trace of the inverse Fisher information submatrix has the following form:

To visualise how robust our strategy is for arbitrary values of the phases, we plot the value of \({\text {Tr}}\left( \left( {\mathscr {F}}( \alpha _1, \alpha _2)_{{\mathscr {I}}, {\mathscr {I}}}\right) ^{-1}\right) \) as a function of the phases \(\alpha _1,\alpha _2\) for \(N=8\) (see Fig. 2).

The above analysis shows the local character of the Fisher-information-based approach to precision of estimation: the precision strongly depends on the values of the estimated phases. Therefore, in realistic applications one needs some prior knowledge of the phases in order to tune the interferometer so that the unknown phases are close to the optimal values, for which the error is lowest.

Notice that even though we do not allow for optimisation of the final measurements, we still obtain the Heisenberg-like scaling of precision of joint estimation of the parameters around their optimal values.

Four-mode case

Optimal state

In analogy to the previous case the N-ququart state which maximises the trace of the QFIM reads:

where:

The proof of optimality of (27) follows the same logic as that of (21): \(U_{4}\) simultaneously diagonalises the local Hamiltonians \(h_i\) (16), i.e. the transformed Hamiltonians \(U_4^{\dagger } h_i U_4\) are diagonal in the standard basis \(|0\rangle , |1\rangle , |2\rangle , |3\rangle \). Consequently, after the action of the unitary operation \(U_{4}^{\otimes N} \), the total collective Hamiltonians \(H_i\) (20) are diagonal with the eigenstates \(|0...0\rangle , |1...1\rangle , |2...2\rangle \), and \(|3...3\rangle \), which implies that the operator \(\sum _i H_i^2\) is also diagonal with the same set of eigenstates.
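A short worked computation gives a QFIM-level reference value for general d. Assuming, as the quoted eigenvalues suggest, that in the frame obtained after \(U_d^{\otimes N}\) each local generator acts as \(|i\rangle \langle i| - \frac{1}{d}\mathbb {1}\) (our assumption, not a formula quoted from the text), the collective Hamiltonian \(H_i\) has the eigenvalue \(N(\delta _{ik} - 1/d)\) on \(|k\ldots k\rangle \), and the pure-state QFIM (50) of the GHZ-type state follows directly. Here J denotes the \((d-1)\times (d-1)\) all-ones matrix, and the inverse uses \(J^2=(d-1)J\):

```latex
\begin{aligned}
\langle H_i\rangle &= \frac{N}{d}\sum_{k=0}^{d-1}\Big(\delta_{ik}-\frac{1}{d}\Big) = 0,\\
\langle H_i H_j\rangle &= \frac{N^2}{d}\sum_{k=0}^{d-1}\Big(\delta_{ik}-\frac{1}{d}\Big)\Big(\delta_{jk}-\frac{1}{d}\Big)
  = \frac{N^2}{d}\Big(\delta_{ij}-\frac{1}{d}\Big),\\
\mathscr{F}^{Q}_{ij} &= 4\big(\langle H_iH_j\rangle-\langle H_i\rangle\langle H_j\rangle\big)
  = \frac{4N^2}{d^2}\,\big(d\,\delta_{ij}-1\big),\\
\big(\mathscr{F}^{Q}_{\mathscr{I},\mathscr{I}}\big)^{-1} &= \frac{d}{4N^2}\,\big(\mathbb{1}+J\big),
\qquad
\mathrm{Tr}\Big(\big(\mathscr{F}^{Q}_{\mathscr{I},\mathscr{I}}\big)^{-1}\Big) = \frac{d(d-1)}{2N^2}.
\end{aligned}
```

The full \(d\times d\) matrix has zero row sums and is therefore singular, while any \((d-1)\)-element block yields \(3/N^2\) for \(d=3\) and \(6/N^2\) for \(d=4\). Under the stated assumption this is a lower bound for, not a prediction of, the classical trace obtained with the fixed measurements.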

Achievable precision

In analogy to the previous case we have to determine the final probability distribution \(p(k|\vec {\alpha })\). In the current case the outcomes are labeled by the numbers \(i_k\in \{0,1,2,3\}\), which denote a detector click in local mode \(i_k\) at measurement station k; therefore the distribution has the form \(p(i_1,\ldots ,i_N|\vec \alpha )\). As shown in the “Methods” section, the final probability distribution (62) depends only on the total numbers of clicks in local modes \(\{0,1,2,3\}\), specified by the integers z, j, d, t respectively; therefore \(p(i_1,\ldots ,i_N|\vec \alpha )=p(z,j,d,t|\vec \alpha )\). Using the final form of the probability distribution (62) we can directly calculate the classical \(3\times 3\) Fisher Information submatrix corresponding to joint estimation of the three phases \(\{\alpha _1,\alpha _2,\alpha _3\}={\mathscr {I}}\), whereas the fourth phase is set to zero and serves as the reference:

where the multinomial coefficient counts the number of distinct detection sequences giving rise to a total of z, j, d, t clicks in modes \(\{0,1,2,3\}\). As in the previous case, the above Fisher information submatrix has the same form for any choice of the reference mode; therefore the following analysis of precision of estimation holds for estimating any triple of phases chosen from the four.
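Grouping click sequences by count vectors is what keeps these sums tractable: instead of \(d^N\) ordered sequences one sums over \(\binom{N+d-1}{d-1}\) count vectors, each weighted by its multinomial coefficient. A small Python check for \(N=5\), \(d=4\) (function names are hypothetical):

```python
from collections import Counter
from itertools import product
from math import comb, factorial


def group_click_sequences(n, d):
    """Map each count vector (n_0, ..., n_{d-1}) to the number of ordered click
    sequences (i_1, ..., i_n), i_k in {0, ..., d-1}, that produce it."""
    groups = Counter()
    for seq in product(range(d), repeat=n):
        groups[tuple(seq.count(m) for m in range(d))] += 1
    return groups


def multinomial(counts):
    out = factorial(sum(counts))
    for c in counts:
        out //= factorial(c)
    return out


groups = group_click_sequences(n=5, d=4)
print(len(groups), sum(groups.values()))   # 56 count vectors instead of 4**5 = 1024 sequences
```

The brute-force enumeration is used only to confirm the counting; in practice one iterates over count vectors directly.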

Analogously to the previous case we calculated the optimal scaling of the quantity \({\text {Tr}}\left( \left( {\mathscr {F}}(\vec \alpha )_{{\mathscr {I}}, {\mathscr {I}}}\right) ^{-1}\right) \) as a function of the number of photons N. It reads:

for the optimal values of estimated phases:

Assuming that all the estimated phases are equal, \(\alpha _1=\alpha _2=\alpha _3=\alpha \), the trace of the inverse Fisher information submatrix scales with the number of photons N as follows:

In this case we also obtain the Heisenberg-like scaling of precision of joint estimation of any triple of phases around their optimal values. The same remark on the local character of the precision of estimation applies here: one needs prior knowledge of the unknown phases in order to estimate them around the optimal working point specified by the optimal phases (31).

Symmetric 5-mode multiport with symmetric Hamiltonian

We found that symmetric multiports for \(d=5,6\) can also be generated by powers of generalised X operators, in analogy to formulas (3), (8) and (12):

As pointed out in the seminal paper29, it is sometimes advisable to analyse optical multiports from the Hamiltonian perspective. Such an analysis can be necessary in implementations of optical multiports with active optical devices. Let us move to the second-quantisation description, in which we define the symmetric Hamiltonian for a d-mode optical instrument as:

which is a slight generalisation of the definition used in Ref.29 that additionally includes phases. In Ref.29, Sect. III, it is stated that a Hamiltonian of the form (35) cannot generate the evolution of a symmetric multiport for \(d>4\). However, it is easy to check that the evolution (33) of a symmetric 5-port is in fact generated by such a Hamiltonian. To see this, note that the Hamiltonian of the symmetric multiport (33) reads, up to a constant factor:

Using the Jordan–Schwinger map:

which maps matrix operators on \({\mathbb {C}}^d\) to second-quantised operators on the d-mode Fock space, we find that \(H_5\) has the symmetric representation (35) with the following phases:

On the other hand the Hamiltonian:

generating the evolution of the 6-mode symmetric multiport (34) does not have the symmetric representation (35). Instead, it can be represented as:

with the following amplitudes:

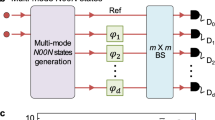
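The Jordan–Schwinger map used above, in its standard form, sends a \(d\times d\) matrix M to \(\sum _{ij} M_{ij}\,a_i^\dagger a_j\). The Python sketch below (our illustration; truncating each mode at one photon is an assumption that is exact for the single-photon checks performed here) verifies that the single-photon block of the image reproduces M and that commutators are preserved. This is why a matrix Hamiltonian on \({\mathbb {C}}^5\) can be read as a second-quantised one. Names are hypothetical.

```python
import numpy as np
from functools import reduce


def annihilators(d, cutoff=1):
    """Truncated bosonic a_0, ..., a_{d-1} on a d-mode Fock space with at most
    `cutoff` photons per mode (mode 0 is the leftmost tensor factor)."""
    dim = cutoff + 1
    a = np.diag(np.sqrt(np.arange(1, dim)), k=1)   # a|n> = sqrt(n)|n-1>
    eye = np.eye(dim)
    return [reduce(np.kron, [a if m == j else eye for m in range(d)]) for j in range(d)]


def jordan_schwinger(mat, ops):
    """Second-quantised image sum_ij M_ij a_i^dagger a_j of a d x d matrix M."""
    d = len(ops)
    return sum(mat[i, j] * (ops[i].conj().T @ ops[j]) for i in range(d) for j in range(d))


def single_photon_block(op, d, cutoff=1):
    """Restrict `op` to the states with exactly one photon, in mode order 0..d-1."""
    dim = cutoff + 1
    idx = [dim ** (d - 1 - m) for m in range(d)]
    return op[np.ix_(idx, idx)]


rng = np.random.default_rng(7)
g = rng.normal(size=(5, 5)) + 1j * rng.normal(size=(5, 5))
m_herm = (g + g.conj().T) / 2                       # arbitrary Hermitian 5 x 5 "Hamiltonian"
ops = annihilators(5)
print(np.allclose(single_photon_block(jordan_schwinger(m_herm, ops), 5), m_herm))
```

Because the map conserves the total photon number, the same matrix also acts consistently on multi-photon sectors; checking those would require a larger per-mode cutoff.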

Single-interferometer optical implementation

Although our scheme in the version presented in Fig. 1 can be directly implemented using optical interferometry, the main difficulty of such an implementation lies in preparing the multiphoton GHZ state. Despite the progress in realising multiphoton entanglement in recent years (cf. e.g.31), it is still challenging to prepare such states for larger numbers of subsystems, which may suggest that the proposed scheme is infeasible. However, by second-quantising the scheme, it can be transformed into a simpler one that is feasible with current optical technology. Note that the final states in our setup are symmetric states of photons which are distinguishable by their path degree of freedom:

The second-quantised version of the above states, which assumes that a state of N indistinguishable photons is sent to a single d-mode interferometer, can be expressed as:

where the \( | \text {NOON}^d \rangle _N\) states are defined by the formula:

The detection probabilities for the second-quantised version of the scheme are identical to the ones for the original scheme (59) and (62); only the meaning of the detection events changes: now the numbers z, j, d (and t for the 4-mode case) denote photon counts in modes \(\{0,1,2\}\) (and in mode 3, respectively) in a single interferometer consisting of the initial-state-correcting multiport \(U_3\) (22) (or \(U_4\) (28)) followed by the generalised Mach–Zehnder interferometer \({\mathscr {U}}_3\) (10) (or \({\mathscr {U}}_4\) (15)). Notice that the equivalence of the original scheme with the second-quantised one can already be seen at the level of the probabilities (59) and (62), since they do not distinguish in which of the N stations there was a click in a given mode, but depend solely on the total numbers of clicks in given modes across all the stations.

The single-interferometer version of the scheme is experimentally feasible with current technology since, in contrast to multiphoton GHZ sources, there are experimental methods to produce N-photon NOON states (44) for higher values of N23. Note that a similar idea of using a fixed multimode Mach–Zehnder interferometer for multiphase estimation has already appeared in several works24,25,26,27,28; however, all these works consider input states with a small, definite number of photons and therefore do not discuss how the precision scales with the size of the initial state.

Discussion

In this work we have investigated the metrological properties of a generalised Mach–Zehnder interferometer with 3 and 4 modes, with emphasis on the possibility of simultaneous estimation of a \((d-1)\)-element subset of phases placed in an arbitrary configuration across the modes. We have shown that each of the subsets can be estimated with Heisenberg-like scaling of precision in an entirely fixed interferometric setup, namely with the same initial state and measurement strategy. To prove the Heisenberg-like scaling of precision we developed an analytical description of the generalised Mach–Zehnder interferometer in terms of Heisenberg–Weyl operators. This approach allows for an analytical calculation of the inverse of the classical Fisher information matrix related to each of the subsets of parameters, which, in contrast to methods based on the Quantum Cramer–Rao or Holevo bounds that implicitly involve optimisation over measurement strategies, provides a realistic limit on the precision attainable with the assumed concrete measurement setup.

Since our scheme allows for estimation of any \((d-1)\)-element subset of unknown phases placed arbitrarily across a fixed interferometer (the remaining phase is assumed to be known), the single-interferometer version of the scheme may be well suited for enhancing the performance of 3-dimensional quantum sensing tasks similar to the ones presented in Ref.24, in which the estimation is performed using only input states with a small, definite number of photons.

Methods

General introduction to multiphase estimation

The standard approach to multiparameter estimation assumes the following scheme2,3,4: an initial multipartite state \(\rho _{in}\) undergoes an evolution \(\Lambda _{\vec \alpha }\) which depends on a vector of unknown parameters \(\vec \alpha =(\alpha _1,\ldots ,\alpha _d)\). Finally, single-particle projective measurements \(\{\Pi \}_k\) with outcomes labeled by k are performed, leading to the final probability distribution:

\[ p(k|\vec \alpha ) = {\text {Tr}}\left( \Pi _k\, \Lambda _{\vec \alpha }(\rho _{in})\right) . \tag{45} \]

Having the parameter-dependent probability distribution \(p(k|\vec \alpha )\), one can construct estimators \(\{A_i\}\) of the unknown parameters \(\{\alpha _i\}\). To quantify the joint accuracy of these estimators one needs a joint measure of the sensitivity of the distribution \(p(k|\vec \alpha )\) to the parameters \(\{\alpha _i\}\). In classical estimation theory such a measure is provided by the Fisher Information Matrix \({\mathscr {F}}\) (FIM), defined as:

\[ {\mathscr {F}}(\vec \alpha )_{ij} = \sum _k \frac{1}{p(k|\vec \alpha )}\,\frac{\partial p(k|\vec \alpha )}{\partial \alpha _i}\,\frac{\partial p(k|\vec \alpha )}{\partial \alpha _j}. \tag{46} \]

If the estimators \(\{A_i\}\) are unbiased, namely their mean values equal \(\{\alpha _i\}\) over the entire range of the \(\alpha \)’s, and the matrix \({\mathscr {F}}\) is invertible, the quality of estimation of \(\{\alpha _i\}\) based on the distribution \(p(k|\vec \alpha )\) is described by the Cramer–Rao bound:

\[ {\text {Cov}}(\{A_i\}) \ge \frac{1}{\nu }\,{\mathscr {F}}(\vec \alpha )^{-1}, \tag{47} \]

where \({\text {Cov}}(\{A_i\})\) is the covariance matrix of the estimators, namely \({\text {Cov}}(\{A_i\})_{mn}={\text {Cov}}(A_m,A_n)\), and \(\nu \) is the number of repetitions of the experiment. The above description of the efficiency of estimation assumes fixed measurements \(\{\Pi \}_k\). In quantum estimation theory one is usually interested in a description of the efficiency of estimating the \(\alpha \)’s from an evolved quantum state \(\rho _{out}(\vec \alpha )=\Lambda _{\vec \alpha }(\rho _{in})\) that includes optimisation over all possible measurements. This idea is encoded in the Quantum Fisher Information Matrix (QFIM), defined in an operator-based way:

\[ {\mathscr {F}}^{Q}(\vec \alpha )_{ij} = \frac{1}{2}{\text {Tr}}\left( \rho _{out}(\vec \alpha )\{L_i,L_j\}\right) , \tag{48} \]

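where the braces denote the anticommutator of operators, and the operators \(L_i\) (the symmetric logarithmic derivatives) are defined implicitly by the equation:

\[ \frac{\partial \rho _{out}(\vec \alpha )}{\partial \alpha _i} = \frac{1}{2}\{L_i,\rho _{out}(\vec \alpha )\}. \tag{49} \]
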
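For a pure input state \( | \psi \rangle \) and a unitary evolution of the form \(U=e^{-iH_i\alpha _i}\) with mutually commuting generators \(H_i\) (as in our setup), the QFIM can be expressed in an explicit form:

\[ {\mathscr {F}}^{Q}_{ij} = 4\,{\text {Re}}\left( \langle \psi |H_iH_j| \psi \rangle - \langle \psi |H_i| \psi \rangle \langle \psi |H_j| \psi \rangle \right) . \tag{50} \]
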
Assuming that the QFIM is invertible, the Quantum Cramer–Rao bound holds:

\[ {\text {Cov}}(\{A_i\}) \ge \frac{1}{\nu }\left( {\mathscr {F}}^{Q}\right) ^{-1}. \tag{51} \]

It is worth mentioning that there exists another version of the Quantum Cramer–Rao bound, namely the Holevo bound3,32,33, which does not use the QFIM. This bound on the precision of estimation is expressed using a cost matrix \({\mathscr {C}}\), which is a positive matrix assigning weights to the uncertainties of different parameters, and the matrix \({\mathscr {V}}\) defined elementwise by the relation \({\mathscr {V}}_{ij}={\text {Tr}}({\mathscr {X}}_i{\mathscr {X}}_j\rho _{out}(\vec \alpha ))\), where the Hermitian matrices \({\mathscr {X}}_i\) fulfill the constraint \({\text {Tr}}(\{{\mathscr {X}}_i,L_j\}\rho _{out}(\vec \alpha ))=2\delta _{ij}\) and the operators \(L_i\) are defined in (49). The Holevo bound then has the following form3:

where the norm on the RHS is the trace norm. The Holevo bound (52) is at least as tight as the QCRB (51). Although the Holevo bound does not use the QFIM, it is also ill-defined when the corresponding QFIM-based Cramer–Rao bound is ill-defined due to singularity of the QFIM33.

Multiparameter Cramer–Rao bound in the presence of additional parameters

Application of both bounds (51) and (52) always raises the question of whether the precision limits found can be saturated by experimentally accessible measurement schemes. Our main aim is to investigate the estimation of multiple phases within a fixed interferometer and a simple, fixed measurement scheme consisting of single-output-mode measurements. Therefore our approach to evaluating the precision of estimation is based on basic tools of estimation theory, namely classical Fisher information matrix techniques. In this way we do not need to consider any optimisation of measurements.
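As a concrete instance of the classical FIM (46), the sketch below (Python with NumPy; function names are hypothetical) computes \({\mathscr {F}}\) from a vector of outcome probabilities and their derivatives, and checks it on the textbook single-photon two-mode Mach–Zehnder interferometer with \(p_0=\cos ^2(\theta /2)\), for which \({\mathscr {F}}=1\) at every working point with \(0<\theta <\pi \):

```python
import numpy as np


def classical_fim(probs, jac, eps=1e-15):
    """F_ij = sum_k (dp_k/dalpha_i)(dp_k/dalpha_j) / p_k, skipping outcomes with p_k <= eps.

    probs: shape (K,); jac: shape (K, P) with jac[k, i] = dp_k/dalpha_i."""
    probs = np.asarray(probs, dtype=float)
    jac = np.asarray(jac, dtype=float)
    keep = probs > eps
    return (jac[keep].T / probs[keep]) @ jac[keep]


def single_photon_mz(theta):
    """Textbook two-mode MZ with one photon: p_0 = cos^2(theta/2), p_1 = sin^2(theta/2)."""
    probs = np.array([np.cos(theta / 2) ** 2, np.sin(theta / 2) ** 2])
    jac = np.array([[-np.sin(theta) / 2], [np.sin(theta) / 2]])
    return probs, jac


for theta in (0.3, 1.0, 2.5):
    print(theta, classical_fim(*single_photon_mz(theta)))   # F = 1 up to rounding
```

With \(\nu \) repetitions the Cramer–Rao bound (47) then gives \({\text {Var}}\ge 1/\nu \), the shot-noise limit for a single photon per run.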

However, another issue concerning our setup remains to be solved. Our task is to estimate multiple phases which constitute a subset of all the parameters on which the final probability distribution depends (namely the phases to be estimated and the reference phase). Such a situation has been discussed extensively in estimation theory, see Refs.30,34. In our case the chosen subset of phases is referred to as the set of parameters of interest, whereas the reference phase is known and fixed. In general, two different cases have to be considered when parameters other than the estimated ones appear in the setup: (i) the additional parameters are fixed and known; (ii) the additional parameters are fixed but unknown (in this context they are called nuisance parameters). Let us denote the division of the set of all parameters into parameters of interest and additional parameters by the vector \((\vec \alpha _{{\mathscr {I}}}, \vec \alpha _{{\mathscr {O}}})\) if the additional parameters are known, and by \((\vec \alpha _{{\mathscr {I}}}, \vec \alpha _{{\mathscr {N}}})\) if they are nuisance parameters. Then the Fisher Information Matrix and its inverse can be expressed in block form with respect to the fixed partition of parameters \((\vec \alpha _{{\mathscr {I}}}, \vec \alpha _{{\mathscr {O}}})\) or \((\vec \alpha _{{\mathscr {I}}}, \vec \alpha _{{\mathscr {N}}})\)30:
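\[F=\begin{pmatrix} F_{{\mathscr {I}}{\mathscr {I}}} & F_{{\mathscr {I}}{\mathscr {O}}}\\ F_{{\mathscr {O}}{\mathscr {I}}} & F_{{\mathscr {O}}{\mathscr {O}}} \end{pmatrix},\qquad F^{-1}=\begin{pmatrix} (F^{-1})_{{\mathscr {I}}{\mathscr {I}}} & (F^{-1})_{{\mathscr {I}}{\mathscr {O}}}\\ (F^{-1})_{{\mathscr {O}}{\mathscr {I}}} & (F^{-1})_{{\mathscr {O}}{\mathscr {O}}} \end{pmatrix},\]
and analogously for the partition with \({\mathscr {N}}\) in place of \({\mathscr {O}}\).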
The Cramer–Rao bound for the parameters of interest, provided that the additional parameters are known, has the following form:
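\[\mathrm{Cov}(\hat{\vec \alpha }_{{\mathscr {I}}}) \geq \left(F_{{\mathscr {I}}{\mathscr {I}}}\right)^{-1},\]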
whereas if they are unknown (i.e. they are nuisance parameters), it reads:
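\[\mathrm{Cov}(\hat{\vec \alpha }_{{\mathscr {I}}}) \geq \left(F^{-1}\right)_{{\mathscr {I}}{\mathscr {I}}}.\]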
In simple terms, the covariance matrix of the estimators of the parameters of interest is bounded from below either by the inverse of the corresponding submatrix of the FIM (when the additional parameters are known) or by the corresponding submatrix of the inverse of the FIM (when they are unknown). It is worth mentioning that this also holds for a single parameter of interest: if the additional parameters are unknown, one still needs to take the entire FIM into account and invert it34.
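The difference between the two bounds can be made concrete with a small, standard-library-only Python sketch. Write F for the FIM. `bound_known` returns the inverse of the FIM submatrix, and `bound_nuisance` returns the submatrix of the inverse FIM, for a chosen list of indices of the parameters of interest. The helper names and the toy 2×2 matrix are illustrative assumptions.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def inverse(m: Sequence[Sequence[float]]) -> Matrix:
    """Gauss-Jordan inversion with partial pivoting (intended for small matrices)."""
    n = len(m)
    a = [[float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(a[r][c]))
        if abs(a[piv][c]) < 1e-12:
            raise ValueError("matrix is singular")
        a[c], a[piv] = a[piv], a[c]
        pv = a[c][c]
        a[c] = [x / pv for x in a[c]]
        for r in range(n):
            if r != c and a[r][c] != 0.0:
                f = a[r][c]
                a[r] = [x - f * y for x, y in zip(a[r], a[c])]
    return [row[n:] for row in a]


def submatrix(m: Sequence[Sequence[float]], idx: Sequence[int]) -> Matrix:
    """Block of m restricted to rows and columns listed in idx."""
    return [[m[i][j] for j in idx] for i in idx]


def bound_known(fim: Sequence[Sequence[float]], interest: Sequence[int]) -> Matrix:
    """Additional parameters known: inverse of the FIM submatrix, (F_II)^{-1}."""
    return inverse(submatrix(fim, interest))


def bound_nuisance(fim: Sequence[Sequence[float]], interest: Sequence[int]) -> Matrix:
    """Additional parameters unknown (nuisance): submatrix of the inverse FIM, (F^{-1})_II."""
    return submatrix(inverse(fim), interest)


if __name__ == "__main__":
    F = [[4.0, 2.0], [2.0, 3.0]]  # toy FIM; parameter 0 is the parameter of interest
    print(bound_known(F, [0]))     # [[0.25]]
    print(bound_nuisance(F, [0]))  # [[0.375]]
```

For the toy matrix, treating the second parameter as a nuisance parameter raises the bound on the first from 0.25 to 0.375. In general \((F^{-1})_{{\mathscr {I}}{\mathscr {I}}}=(F_{{\mathscr {I}}{\mathscr {I}}}-F_{{\mathscr {I}}{\mathscr {N}}}F_{{\mathscr {N}}{\mathscr {N}}}^{-1}F_{{\mathscr {N}}{\mathscr {I}}})^{-1}\) (a Schur complement). The nuisance bound is therefore never smaller than the known-parameter bound, and the two coincide when the off-diagonal block vanishes.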

Probability distributions for the particular outcomes of the experimental setup

Three-mode case

Let \(i_k\in \{0,1,2\}\) denote the measurement outcome at the k-th station, corresponding to a detector click in the \(i_k\)-th local mode. Then the probability distribution of the outcomes, conditioned on the values of the phase shifts, reads:

First, the entire local evolution operator \({\mathscr {U}}_{3}(\alpha _1,\alpha _2,\alpha _3)\cdot U_{3}\) can be written in compact matrix form by direct use of the defining formulas (10) and (22):

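As an illustration of such a compact form, the following Python sketch builds the product of a diagonal phase operator and a symmetric multiport for arbitrary d. Formulas (10) and (22) are not reproduced in this section, so the sketch rests on three assumptions. The multiport takes the discrete-Fourier form \((U_d)_{kl}=\omega^{kl}/\sqrt{d}\) with \(\omega=e^{2\pi i/d}\), which is commonly used for symmetric multiports. The phase operator is \(\mathrm{diag}(e^{i\alpha_1},\dots,e^{i\alpha_d})\), with \(\alpha_{k+1}\) acting on local mode k. The product is taken in the order written in the text. All function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence

CMatrix = List[List[complex]]


def symmetric_multiport(d: int) -> CMatrix:
    """Assumed symmetric d-port: (U_d)_{kl} = exp(2*pi*i*k*l/d) / sqrt(d), k, l = 0..d-1."""
    return [[cmath.exp(2j * math.pi * k * l / d) / math.sqrt(d) for l in range(d)] for k in range(d)]


def phase_shifts(alphas: Sequence[float]) -> CMatrix:
    """Assumed diagonal phase operator diag(exp(i*alpha_1), ..., exp(i*alpha_d))."""
    d = len(alphas)
    return [[cmath.exp(1j * alphas[k]) if k == l else 0j for l in range(d)] for k in range(d)]


def matmul(a: CMatrix, b: CMatrix) -> CMatrix:
    """Plain matrix product a . b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))] for i in range(len(a))]


def local_evolution(alphas: Sequence[float]) -> CMatrix:
    """diag(phases) . U_d, i.e. element (k, l) = exp(i*alpha_{k+1}) * w**(k*l) / sqrt(d)."""
    return matmul(phase_shifts(alphas), symmetric_multiport(len(alphas)))


def is_unitary(m: CMatrix, tol: float = 1e-12) -> bool:
    """Check M M^dagger = identity entry by entry."""
    n = len(m)
    return all(
        abs(sum(m[i][k] * m[j][k].conjugate() for k in range(n)) - (1.0 if i == j else 0.0)) < tol
        for i in range(n)
        for j in range(n)
    )


if __name__ == "__main__":
    V3 = local_evolution([0.1, 0.5, -0.3])
    print(is_unitary(V3))
    for row in V3:
        print([f"{z.real:+.3f}{z.imag:+.3f}j" for z in row])
```

Calling `local_evolution` with four phases gives the analogous d = 4 matrix, so the same sketch covers the four-mode case. If the paper's formula (10) uses a different multiport convention or ordering, only `symmetric_multiport` or the argument order in `local_evolution` would need to change.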
Second, the probability distribution (57) has an important symmetry: it depends only on the total numbers of clicks in the local modes \(\{0,1,2\}\), which we denote by z, j and d, respectively. Using this property, the final form of the probability distribution reads:

From this form it is straightforward to derive compact expressions for the elements of the Fisher Information Matrix.
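The count symmetry can be checked numerically on a toy model. This Python sketch is a hypothetical stand-in for the paper's setup, not its actual distribution. It assumes a GHZ-type input \(\sum_m |m\ldots m\rangle/\sqrt{d}\), a phase \(\alpha_m\) on local mode m, the same discrete-Fourier multiport at each of the N stations, and no reference phase. In this model the probability of an outcome string depends on it only through its click counts. Grouping the \(d^N\) strings by counts therefore reproduces a multinomial weight times a single representative probability. Because the counts are a sufficient statistic, the Fisher-information sums can run over count tuples only. All names are illustrative.

```python
import cmath
import math
from itertools import product
from typing import Dict, Iterator, Sequence, Tuple


def p_string(outcomes: Sequence[int], alphas: Sequence[float]) -> float:
    """Hypothetical toy model: probability of one outcome string i_1...i_N.
    It depends on the string only through sum(outcomes), i.e. through the click counts."""
    n_stations, n_modes = len(outcomes), len(alphas)
    s = sum(outcomes)
    amp = sum(cmath.exp(1j * (n_stations * a + 2 * math.pi * m * s / n_modes)) for m, a in enumerate(alphas))
    return abs(amp) ** 2 / n_modes ** (n_stations + 1)


def compositions(n: int, parts: int) -> Iterator[Tuple[int, ...]]:
    """All tuples of `parts` non-negative integers summing to n, e.g. (z, j, d) for three modes."""
    if parts == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, parts - 1):
            yield (first,) + rest


def multinomial(counts: Sequence[int]) -> int:
    """Number of outcome strings with the given click counts."""
    result = math.factorial(sum(counts))
    for c in counts:
        result //= math.factorial(c)
    return result


def p_counts(counts: Sequence[int], alphas: Sequence[float]) -> float:
    """Probability of the click counts: multinomial weight times one representative string."""
    representative = [mode for mode, c in enumerate(counts) for _ in range(c)]
    return multinomial(counts) * p_string(representative, alphas)


def counts_by_brute_force(n_stations: int, alphas: Sequence[float]) -> Dict[Tuple[int, ...], float]:
    """Sum p_string over all d**N strings, grouped by their click counts (for checking only)."""
    n_modes = len(alphas)
    grouped: Dict[Tuple[int, ...], float] = {}
    for s in product(range(n_modes), repeat=n_stations):
        key = tuple(s.count(m) for m in range(n_modes))
        grouped[key] = grouped.get(key, 0.0) + p_string(s, alphas)
    return grouped


if __name__ == "__main__":
    alphas = [0.3, 1.1, -0.4]
    grouped = counts_by_brute_force(4, alphas)
    for c in compositions(4, 3):
        print(c, round(grouped[c], 6), round(p_counts(c, alphas), 6))
```

In this toy model a common shift of all \(\alpha_m\) only adds a global phase to the amplitude. Only \(d-1\) phase differences are therefore identifiable, in line with the observation that not all d phases can be estimated jointly.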

Four-mode case

Following the same logic as in the three-mode case, the conditional probability of detection events for \(d = 4\) reads:

In full analogy with the previous case, the local evolution operator \({\mathscr {U}}_4(\alpha _1,\alpha _2,\alpha _3,\alpha _4)\cdot U_{4}\) has the compact matrix form:

The final probability distribution again depends only on the total numbers of clicks in the local modes \(\{0,1,2,3\}\), denoted z, j, d and t, respectively:

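Because the four-mode distribution depends only on (z, j, d, t), the Fisher-information sums need to run only over count tuples, each weighted by a multinomial multiplicity. This avoids summing over all \(4^N\) outcome strings. The short Python sketch that follows counts both. The helpers `count_tuples` and `multiplicity` are illustrative names, not taken from the paper.

```python
from itertools import product
from math import comb, factorial
from typing import Iterator, Tuple


def count_tuples(n: int, modes: int = 4) -> Iterator[Tuple[int, ...]]:
    """All click-count tuples (z, j, d, t) with z + j + d + t = n (for modes = 4)."""
    for head in product(range(n + 1), repeat=modes - 1):
        if sum(head) <= n:
            yield head + (n - sum(head),)


def multiplicity(counts: Tuple[int, ...]) -> int:
    """Number of outcome strings i_1...i_N sharing the given click counts (multinomial)."""
    m = factorial(sum(counts))
    for c in counts:
        m //= factorial(c)
    return m


if __name__ == "__main__":
    n = 10
    tuples = list(count_tuples(n))
    print(len(tuples), comb(n + 3, 3), 4 ** n)  # 286 286 1048576
```

For N = 10 stations the count-based sum has 286 terms instead of 1048576, and the multiplicities add up to \(4^N\) by the multinomial theorem.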
References

Yue, J.-D., Zhang, Y.-R. & Fan, H. Quantum-enhanced metrology for multiple phase estimation with noise. Sci. Rep. 4, 5933. https://doi.org/10.1038/srep05933 (2014).

Szczykulska, M., Baumgratz, T. & Datta, A. Multi-parameter quantum metrology. Adv. Phys. X 1, 621–639. https://doi.org/10.1080/23746149.2016.1230476 (2016).

Ragy, S., Jarzyna, M. & Demkowicz-Dobrzanski, R. Compatibility in multiparameter quantum metrology. Phys. Rev. A 94, 052108. https://doi.org/10.1103/PhysRevA.94.052108 (2016).

Gessner, M., Pezzè, L. & Smerzi, A. Sensitivity bounds for multiparameter quantum metrology. Phys. Rev. Lett. 121, 130503. https://doi.org/10.1103/PhysRevLett.121.130503 (2018).

Liu, J., Yuan, H., Lu, X.-M. & Wang, X. Quantum Fisher information matrix and multiparameter estimation. J. Phys. A Math. Theor. 53, 023001. https://doi.org/10.1088/1751-8121/ab5d4d (2019).

Górecki, W., Zhou, S., Jiang, L. & Demkowicz-Dobrzanski, R. Optimal probes and error-correction schemes in multiparameter quantum metrology. Quantum 4, 288. https://doi.org/10.22331/q-2020-07-02-288 (2020).

Sidhu, J. S. & Kok, P. Geometric perspective on quantum parameter estimation. AVS Quantum Sci. 2, 014701. https://doi.org/10.1116/1.5119961 (2020).

Polino, E., Valeri, M., Spagnolo, N. & Sciarrino, F. Photonic quantum metrology. AVS Quantum Sci. 2, 024703. https://doi.org/10.1116/5.0007577 (2020).

Tóth, G. & Apellaniz, I. Quantum metrology from a quantum information science perspective. J. Phys. A Math. Theor. 47, 424006. https://doi.org/10.1088/1751-8113/47/42/424006 (2014).

Demkowicz-Dobrzanski, R., Jarzyna, M. & Kołodynski, J. Quantum limits in optical interferometry. Prog. Opt. 60, 345. https://doi.org/10.1016/bs.po.2015.02.003 (2015).

Goldberg, A. Z. et al. Multiphase estimation without a reference mode. Phys. Rev. A 102, 022230. https://doi.org/10.1103/PhysRevA.102.022230 (2020).

Helstrom, C. Quantum Detection and Estimation Theory (Academic Press, 1976).

Zwierz, M., Pérez-Delgado, C. A. & Kok, P. General optimality of the Heisenberg limit for quantum metrology. Phys. Rev. Lett. 105, 180402. https://doi.org/10.1103/PhysRevLett.105.180402 (2010).

Hall, M. J. W., Berry, D. W., Zwierz, M. & Wiseman, H. M. Universality of the Heisenberg limit for estimates of random phase shifts. Phys. Rev. A 85, 041802. https://doi.org/10.1103/PhysRevA.85.041802 (2012).

Humphreys, P. C., Barbieri, M., Datta, A. & Walmsley, I. A. Quantum enhanced multiple phase estimation. Phys. Rev. Lett. 111, 070403. https://doi.org/10.1103/PhysRevLett.111.070403 (2013).

Knott, P. A. et al. Local versus global strategies in multiparameter estimation. Phys. Rev. A 94, 062312. https://doi.org/10.1103/PhysRevA.94.062312 (2016).

Liu, J., Lu, X.-M., Sun, Z. & Wang, X. Quantum multiparameter metrology with generalized entangled coherent state. J. Phys. A Math. Theor. 49, 115302. https://doi.org/10.1088/1751-8113/49/11/115302 (2016).

Zhang, L. & Chan, K. W. C. Quantum multiparameter estimation with generalized balanced multimode NOON-like states. Phys. Rev. A 95, 032321. https://doi.org/10.1103/PhysRevA.95.032321 (2017).

Brougham, T., Košták, V., Jex, I., Andersson, E. & Kiss, T. Entanglement preparation using symmetric multiports. Eur. Phys. J. D 61, 231–236. https://doi.org/10.1140/epjd/e2010-10337-2 (2011).

Jarzyna, M. & Demkowicz-Dobrzanski, R. Quantum interferometry with and without an external phase reference. Phys. Rev. A 85, 011801. https://doi.org/10.1103/PhysRevA.85.011801 (2012).

Ataman, S. Single- versus two-parameter Fisher information in quantum interferometry. Phys. Rev. A 102, 013704. https://doi.org/10.1103/PhysRevA.102.013704 (2020).

Greenberger, D. M., Horne, M. A. & Zeilinger, A. Going beyond Bell’s theorem. Preprint at http://arXiv.org/0712.0921 (2007).

Zhang, L. & Chan, K. W. C. Scalable generation of multi-mode NOON states for quantum multiple-phase estimation. Sci. Rep. 8, 11440. https://doi.org/10.1038/s41598-018-29828-2 (2018).

Spagnolo, N. et al. Quantum interferometry with three-dimensional geometry. Sci. Rep. 2, 862. https://doi.org/10.1038/srep00862 (2012).

Ciampini, M. A. et al. Quantum-enhanced multiparameter estimation in multiarm interferometers. Sci. Rep. 6, 28881. https://doi.org/10.1038/srep28881 (2016).

You, C. et al. Multiparameter estimation with single photons—linearly-optically generated quantum entanglement beats the shotnoise limit. J. Opt. 19, 124002. https://doi.org/10.1088/2040-8986/aa9133 (2017).

Polino, E. et al. Experimental multiphase estimation on a chip. Optica 6, 288–295. https://doi.org/10.1364/OPTICA.6.000288 (2019).

Li, X., Cao, J.-H., Liu, Q., Tey, M. K. & You, L. Multi-parameter estimation with multi-mode Ramsey interferometry. New J. Phys. 22, 043005. https://doi.org/10.1088/1367-2630/ab7a32 (2020).

Jex, I., Stenholm, S. & Zeilinger, A. Hamiltonian theory of a symmetric multiport. Opt. Commun. 117, 95–101. https://doi.org/10.1016/0030-4018(95)00078-M (1995).

Suzuki, J., Yang, Y. & Hayashi, M. Quantum state estimation with nuisance parameters. J. Phys. A Math. Theor. 53, 453001. https://doi.org/10.1088/1751-8121/ab8b78 (2020).

Hu, X.-M. et al. Experimental creation of multi-photon high-dimensional layered quantum states. NPJ Quantum Inf. 6, 88. https://doi.org/10.1038/s41534-020-00318-6 (2020).

Holevo, A. S. Probabilistic and Statistical Aspects of Quantum Theory (North-Holland, 1982).

Tsang, M., Albarelli, F. & Datta, A. Quantum semiparametric estimation. Phys. Rev. X 10, 031023. https://doi.org/10.1103/PhysRevX.10.031023 (2020).

Gross, J. A. & Caves, C. M. One from many: Estimating a function of many parameters. J. Phys. A Math. Theor. https://doi.org/10.1088/1751-8121/abb9ed (2020).

Acknowledgements

The authors acknowledge discussions with Lukas Knips and Aaron Goldberg. The work is part of the ICTQT IRAP (MAB) project of the Foundation for Polish Science (IRAP project ICTQT, Contract No. 2018/MAB/5, cofinanced by EU via Smart Growth Operational Programme). M.P. acknowledges the support by DFG (Germany) and NCN (Poland) within the joint funding initiative Beethoven2 (Grant No. 2016/23/G/ST2/04273).

Author information


Contributions

All authors contributed equally to this work. M.M. prepared the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Markiewicz, M., Pandit, M. & Laskowski, W. Simultaneous estimation of multiple phases in generalised Mach–Zehnder interferometer. Sci Rep 11, 15669 (2021). https://doi.org/10.1038/s41598-021-95005-7


DOI: https://doi.org/10.1038/s41598-021-95005-7
