Abstract

Physiological signal monitoring and driver behavior analysis have gained increasing attention in both fundamental and applied research. This study analyzes driving behavior using multimodal physiological data collected from 35 participants in a six-degree-of-freedom driving simulator. The data include 59-channel EEG, single-channel ECG, 4-channel EMG, single-channel GSR, and eye movement data. We categorized driving behavior into five groups: smooth driving, acceleration, deceleration, lane changing, and turning. Through extensive experiments, we confirmed that both the physiological and the vehicle data met the quality requirements. Subsequently, we developed classification models, including linear discriminant analysis (LDA), MMPNet, and EEGNet, to demonstrate the correlation between physiological data and driving behaviors. Notably, we propose a multimodal physiological dataset for analyzing driving behavior (MPDB). The MPDB dataset’s scale, accuracy, and multimodality provide unprecedented opportunities for researchers in the autonomous driving field and beyond. With this dataset, we aim to contribute to the field of traffic psychology and behavior.

Background & Summary

According to the National Motor Vehicle Crash Causation Survey (NMVCCS), 94% of traffic crashes are caused by inappropriate driver behaviour1,2. Some drivers may not consistently adhere to traffic regulations, potentially elevating the risk of conflicts3. Individual drivers exhibit distinct driving styles and levels of risk-taking propensity, influenced by factors like age and gender, which affect their perception of hazardous situations. Additionally, specific driving needs can lead to more assertive driving, potentially resulting in errors. These observations suggest a causal link between a driver’s reactions and accidents4,5. While some research exists on the influence of driving state on accidents, there is a pressing need for further investigation into the impact of driving responses. Integrating human elements into traffic models offers a more comprehensive grasp of traffic modeling, control, and safety6. Among these considerations, understanding the cognitive aspects of drivers and the mechanisms governing their decisions is fundamental for improving driver behaviour6,7. More human driver behavior models that adapt to a wide array of scenarios are still required7. Employing physiological signals in experiments can reveal the underlying logic behind human decision-making, providing a solid foundation for modeling human driving behavior8.

An asymptotically homogeneous driving behaviour response dataset for complex dynamic environments will help to detect driver cognitive function based on natural driving behaviour and provide a basis for tracing the causes of accidents. Since driver cognition is an integral component of driving behaviour, driving behaviour must be studied from a cognitive and decision-making perspective, utilizing the knowledge and theory of related fields, such as psychology, physiology, engineering, and behavioural science9,10,11,12,13. Cortical beta power changes measured with EEG can reflect decision dynamics, and beta power, as an indicator of evidence accumulation, is mainly used to study decision making14. To observe human behaviour, psychophysiological studies can analyse the driver’s driving state or driving intentions through physiological signals from different parts of the human body15,16,17,18,19,20. The development of low-power, high-precision wearable devices has driven the study of cognition, leading to an increasing number of studies investigating the cognitive decision-making process of human drivers. Currently, numerous studies delve into the interpretation of EEG signals for behavioral movements. Both low-frequency EEG potentials (<3 Hz), which are referred to as movement-related cortical potentials, and faster EEG activity, such as the sensorimotor rhythm21, have been related to the planning and execution of movements during both motor and imagery tasks22,23,24. Part of the literature has linked anticipatory EEG signals with the contingent negative variation (CNV), a central negative deflection that can last from about 300 ms to several seconds and that was previously related to sensory-motor association and expectancy25,26,27. Using measurements of the CNV from low-frequency EEG, researchers have successfully decoded driver intentions in real-world driving scenarios, demonstrating the ability to anticipate braking and accelerating actions with a lead time of 320±200 ms28,29,30.

To study human responses and decision-making processes during driving tasks, researchers require rich and reproducible datasets. Table 1 summarizes, to the best of our knowledge, the driver-related datasets reported in survey papers. Most existing datasets focus on predicting vehicle trajectories based on previous data or vehicle dynamics. Although vehicle-based driving behavior has been studied extensively, vehicle driving data alone are insufficient to distinguish driver operational errors from potential vehicle performance issues when attributing the cause of an accident.

Consequently, distinguishing driver behavior from vehicle performance problems presents a considerable challenge. Despite the wealth of driving data available in autonomous driving research, users are hesitant to relinquish control of their vehicles, resulting in the underutilization of this capability31,32. In other words, the majority of vehicles on the road remain in a non-autonomous driving state. This underscores the critical importance of human factors in driving behavior research33,34, and a comprehensive examination of driver behavior is therefore imperative. To emulate human behavior, methods like electroencephalography (EEG) and electrocardiography (ECG)35 play an indispensable role in quantification. Such non-invasive signals are easier to acquire than invasive data. Empirical evidence strongly attests to the effectiveness of these multimodal data in extracting driver behavior features.

Relying exclusively on vehicle behavior data proves inadequate in overcoming these challenges. In actual driving, the internal state of the driver at a given moment is often a combination of multiple emotions rather than a single emotion36,37. For instance, when a drowsy driver uses a phone, drowsiness and distraction coexist, and these states may swiftly give way to anger when another driver overtakes. Therefore, we cannot clearly label driving states because they are transient and unmeasurable38,39,40,41. Hussain et al.41 established a mapping between EEG signals and the stationary and driving states. In the driving state, theta and delta waves increase, while the beta and gamma bands decrease, compared with the resting state. This observation does not address the specific internal states emphasized in our article, such as anger, distraction, and fatigue, but it does not contradict our claims. To some extent, it supports the notion that driving behavior can indeed be reflected in EEG signals, highlighting the complexity of the internal state during driving. We want to measure the driver’s current internal, implicit states through the analysis of explicit driving behaviour data, in addition to deliberate experimental design. Mental states must be induced through well-designed experiments42,43,44. Covering all these states is difficult, so we try to reflect the intrinsic states by using multimodal data to measure driving behaviour in real time. Therefore, creating multimodal physiological signal datasets of human driving behaviour is essential for studying the driver cognitive characteristics that affect driving behaviour decisions. However, to our knowledge, there are no publicly available multimodal physiological datasets of human response decisions in driving tasks. EEG signals can support clear physiological analysis during driving, but the internal states of drivers, such as distraction, anger and frustration, change almost instantaneously. They are also complex; for example, anger may quickly turn into frustration45. In summary, driving behaviors are easier to annotate and map than complex emotional states. Although the internal driving state is difficult to calibrate when several states co-occur, we may be able to deduce the driver state from the driver’s driving behavior in future research.

Our dataset is mainly targeted at the neuroscience and traffic psychology domains. Fatigue, emotion, distraction, and driving behavior are studied in these fields and have been important research topics in psychology, physiology, human factors engineering, and ergonomics. Our paper studies the driving behavior of drivers. At present, the common datasets in the field of driving behavior fall into four main categories. 1) Datasets based on vehicle sensors, which study acceleration, deceleration, turning and other aspects of driving behavior46,47,48,49,50. The data collected from vehicle sensors may be subject to inaccuracies and noise. Sensor readings can be affected by factors such as sensor calibration errors, environmental conditions, and wear and tear, leading to potential inaccuracies in the analysis of driving behavior. 2) Datasets based on cameras mounted on the vehicle51,52,53,54. Analysis of driving behavior may be influenced by lighting and weather conditions. Adverse weather, low light, or other visual impediments can affect the quality of images, potentially compromising the accuracy of driving behavior analysis. 3) Datasets based on smartphone sensors55,56,57. Smartphones are often placed in fixed locations, such as pockets or mounts, which might not be ideal for capturing certain driving behaviors accurately. The fixed position could affect the ability to detect nuanced movements, such as steering wheel rotations or pedal usage. 4) Datasets based on physiological signals, which mainly study the fatigue and distraction states of drivers; no relevant dataset directly maps physiological signals to driver behavior58,59,60,61. Physiological responses to fatigue and distraction can vary significantly among individuals. What may be a reliable indicator for one driver may not hold true for another. This variability complicates the development of universal models for detecting fatigue or distraction.

We investigate the development of a dataset that directly maps physiological signals to driver behavior. To our knowledge, no such dataset has been proposed. The advantages of such a dataset include small computational requirements for physiological signal data; relatively stable physiological signal data; a clearer, more direct and more explicit correlation with driving behavior; and collection that is not influenced by the driver’s position. In addition, such a mapping dataset is expected to enhance the vehicle-human interaction interface: the system can intelligently respond to the driver’s physiological needs, providing a more intuitive and user-friendly interaction experience.

Here, we present a driving behaviour dataset of multimodal physiological signals for the first time. The core challenge of this dataset is how to effectively collect the driver’s reaction decisions and behaviour during driving. Therefore, the core work is to obtain multimodal driving behaviour data and analyse different human driving behaviours by designing experiments based on the Event-Related Desynchronization/Synchronization (ERD/ERS) paradigm62,63,64 and combining data from physiological signals. We did not use Event-Related Potentials (ERPs) as the EEG experimental paradigm, because an ERP design requires a single, specific stimulus, such as flashing brake lights65, and such stimuli cannot be reproduced in dynamic driving scenarios. Cortical beta power changes measured with EEG can reflect decision dynamics, and beta power, as an indicator of evidence accumulation, is mainly used to study decision making14.
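For orientation, ERD/ERS is commonly quantified as the percentage change in band power relative to a pre-event baseline. The sketch below illustrates that standard definition only; the function name, the choice of baseline window and the sample values are hypothetical and are not taken from the processing pipeline of this dataset.

```python
from statistics import mean


def erd_ers_percent(baseline_power, event_power):
    """Percentage band-power change relative to a baseline interval.

    Negative values indicate desynchronization (ERD); positive values
    indicate synchronization (ERS).
    """
    reference = mean(baseline_power)
    if reference <= 0:
        raise ValueError("baseline power must be positive")
    return (mean(event_power) - reference) / reference * 100.0


# Hypothetical beta-band power values (arbitrary units), for illustration only.
baseline = [4.0, 4.0, 4.0, 4.0]
after_event = [3.0, 3.0, 3.0, 3.0]
print(erd_ers_percent(baseline, after_event))  # -25.0, i.e. a 25% power decrease (ERD)
```

In practice the band power would come from a time-frequency decomposition of each epoch, which is outside the scope of this sketch.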

First, we designed four different driving tasks in the same scene. Then, we conducted driving experiments on the 51 WORLD driving simulator. The conventional scalp EEG cap we used provides a non-invasive way to record scalp voltage over time. The electroencephalography (EEG), electrocardiogram (ECG) and electromyogram (EMG) devices were supplied by Neuracle, and the eye tracker was a Tobii Glasses 2. The driving simulation software synchronizes all the equipment by sending time stamps to the trigger box. Finally, data preprocessing was mainly carried out using the EEGLAB66 plug-in for MATLAB. The preprocessed data were analysed in the time-frequency domain in MATLAB, and feature dimensionality reduction and classification were carried out using methods such as linear discriminant analysis (LDA).

Methods

Participants

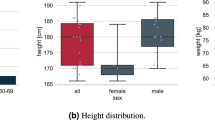

The content and procedures of this study were reviewed and approved by the Medical Ethics Committee of Tsinghua University (approval number: 20230007). Thirty-five voluntary participants (26 males and 9 females; age range = 20–60 years, average age = 25.06 years, SD = 7.90), who were students or faculty members at Tsinghua University, were recruited to participate in 150-minute event-related driving tasks. Participants were required to hold a driving licence of the People’s Republic of China of grade C or above and to have at least one year of driving experience (driving experience range = 1–20 years, average driving experience = 3.03 years, SD = 3.68). Participants were required to ensure adequate rest (no less than 8 hours of sleep) before the experiment and not to stay up late the night before the experiment (going to sleep no later than midnight) to reduce the impact of noise on EEG signals. The participants had not permed their hair or used drugs within the previous three months and did not take any excitatory substances within 48 hours before the experiment, including but not limited to tranquilizers, alcohol, coffee, tea and cigarettes. No participant had a mental disorder, and all were required to complete the experiment according to their actual driving style. A pretest was conducted before the experiment to ensure that all participants understood the experimental task and that no participant experienced physiological discomfort due to the simulated environment. All participants were informed of the experimental requirements before participating, and the payment for an experiment was 90–120 yuan per hour. Each participant completed all five types of driving events, spread across seven or eight sets of driving tasks.

The desired sample size was determined with a G*Power analysis. We used the F-test family with ‘ANOVA: Repeated measures, between factors’ to compute the required sample size. With f = 0.5, α = 0.05, Power = 0.8, Number of groups = 5, and Number of measurements = 40, G*Power produced a recommended sample size of 30 participants. Here, α and Power were chosen based on the basic theory of mathematical statistics67. The number of measurements is the average number of events per subject. The number of groups corresponds to the categories of events. Following previous work68,69,70,71, the effect size f was chosen to represent a large effect, with values ranging from 0.5 to 0.8.

Experimental environment

The experimental environment is mainly composed of a driving simulator and a circular curtain, as shown in Fig. 1a. The driving simulator contains a six-degree-of-freedom motion platform and a control platform. The motion platform has a carrying capacity of 500 kg and can perform both translation and rotation during the experiment. For translation, the maximum stroke is 400 mm, the maximum acceleration is ±0.7 g, and the maximum speed is 400 mm/s. For rotation, the maximum amplitude is ±23°, the maximum acceleration is ±500°/s², and the maximum speed is 40°/s. The steering wheel of the control platform is a real car steering wheel directly driven by a servo motor. The steering force is linearly adjustable, and the peak torque can reach 28.65 Nm. The servo motor communicates with the control system through an encoder. The control system contains a servo driver and a motion control card: the servo driver drives the servo motor, and the motion control card handles the exchange of the torque and steering wheel signals with the computer. The two communicate through an encoder signal.

Fig. 1 Experimental setup of multimodal human driver factor data collection. (a) A brief sketch of the overall simulation scenario. (b) Structural diagram of the simulator with six degrees of freedom. (c) Overall environment. All participants completed the experiment in the same environment. (d) Data collection. The multimodal physiological data to be collected are shown in Fig. 1, and the use of the relevant portraits was authorized by the participants.

The scene used in the experiment, provided by 51world, simulates an actual road in Beijing and is projected onto the circular curtain. An approximately 11 km section of road near Shunbai Road in Shunyi, Beijing, was extracted as the simulation scene to better reproduce the actual driving environment. The experimental route is shown in Fig. 1b. The road scene is relatively rich, including multiple right-angle curves, four-lane straight urban roads, two-lane straight urban roads, etc. The refresh rate of the scene is approximately 60 fps, and the simulation image is shown in Fig. 1c. The main vehicle is controlled through the driving simulator operated by the participant. The static elements and the opponent vehicles are designed to trigger various events according to the location of the main vehicle. The participants needed to pay attention to the road conditions at all times and respond to the events. A variety of physiological signal acquisition devices synchronously recorded the changes in physiological data before and after the participants engaged in driving behaviour.

Experimental paradigm

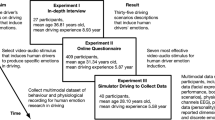

The experiment adopted the event-related behaviour response paradigm to model various driving behaviours using event-related multimodal physiological signals, as shown in Fig. 2. This experimental design covers a relatively comprehensive range of basic driving behaviours, which were carried out by the participants while their multimodal physiological data were collected synchronously. The exact location of the sensors used in the experiment is shown in Fig. 4. The driving behaviours in our experiment are relatively rich, and the collected physiological data have a higher dimension, so the data may contain more information. Specific events were designed to induce different driving behaviours, and the participants were required to respond to these events so that multimodal physiological data could be recorded while they engaged in each behaviour. Specifically, the driving behaviours to be induced were divided into five categories: smooth driving (control group), acceleration, deceleration, lane-change and turning. The selection of these five types of behaviours takes into account the driver’s basic operations, namely, pedal-based operations and steering wheel-based operations. Pedal-based operations include acceleration and deceleration, and steering wheel-based operations include lane changing and turning. Different behaviours are triggered by different events. To keep the classes balanced, the number of samples corresponding to each driving behaviour should be as similar as possible. As shown in Table 2, smooth driving is triggered by normal straight-line driving; acceleration is triggered by overtaking and congestion relief; deceleration is triggered by the sudden braking of the vehicle in front, the sudden cut-in of a vehicle from the side in front, and a pedestrian crossing the road; lane-change is triggered by static obstacles in front; and turning is triggered by right-angle left-turn and right-turn signs. When a trigger event occurs, its time is recorded as a marker, following the event-related potential (ERP) convention, to facilitate data segmentation and analysis, as shown in Fig. 3. The driving behaviours were divided into four different cases (given the actual setting of the cases, smooth driving and turning were in the same case). Each participant completed eight groups of experiments. Notably, the time interval between two adjacent events was 5–15 s, and the participants were required to manoeuvre the vehicle back to the original lane and resume normal driving within this period. In each experiment, the participant needed to drive the entire route. The duration of all driving tasks was approximately 90 min, with rest periods interspersed to ensure that the participants did not enter a fatigued driving state. All operations of the participants and the physiological data during the operations were synchronously recorded.
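The correspondence between trigger events and behaviour classes described above can be written down as a simple lookup, which also makes it easy to check how balanced the classes are. This is a minimal sketch: the event label strings are hypothetical placeholders, and the dataset’s own event codes are those listed in Table 2.

```python
from collections import Counter

# Trigger events and the behaviour class each one induces, as described in the text.
# The label strings are hypothetical; the dataset's actual event codes are in Table 2.
EVENT_TO_BEHAVIOUR = {
    "straight_driving": "smooth driving",
    "overtaking": "acceleration",
    "congestion_relief": "acceleration",
    "front_emergency_brake": "deceleration",
    "side_front_cut_in": "deceleration",
    "pedestrian_crossing": "deceleration",
    "static_obstacle_left": "lane-change",
    "static_obstacle_right": "lane-change",
    "left_turn_sign": "turning",
    "right_turn_sign": "turning",
}


def behaviour_counts(events):
    """Count samples per behaviour class for a sequence of event labels."""
    return Counter(EVENT_TO_BEHAVIOUR[event] for event in events)


def imbalance_ratio(counts):
    """Largest class count divided by the smallest (1.0 means perfectly balanced)."""
    return max(counts.values()) / min(counts.values())


# Illustrative event sequence, not real data.
counts = behaviour_counts(["overtaking", "congestion_relief", "left_turn_sign"])
print(counts["acceleration"], imbalance_ratio(counts))
```

A ratio close to 1.0 would indicate that the behaviour classes contain similar numbers of samples.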

Fig. 2 The overall design framework of the experiment. (a) Trigger event. (b) Signal input. (c) Model selection. (d) Behavioural output. The overall idea is to induce different driving behaviours of human drivers through specific events and synchronously collect multimodal physiological signals, and then use models, such as LDA and EEGNet, to classify multiple driving behaviours.

Fig. 3 Marker used to mark the event occurrence point. With reference to the event-related potential (ERP) paradigm, the interval from 500 ms before to 1500 ms after each event was extracted to analyse the relationship between physiological signals and driving behaviours. To reduce mutual interference between events, there is a time interval between two events.
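The segmentation window described in this caption can be sketched as follows. The example assumes a 1000 Hz sampling rate and a marker position expressed as a sample index; the function names are illustrative and do not correspond to the authors’ preprocessing scripts.

```python
SAMPLING_RATE_HZ = 1000   # assumed sampling rate of the physiological recordings
PRE_EVENT_MS = 500        # window starts 500 ms before the marker
POST_EVENT_MS = 1500      # window ends 1500 ms after the marker


def epoch_bounds(marker_sample, n_samples, fs=SAMPLING_RATE_HZ):
    """Return (start, stop) sample indices around a marker, or None if the window does not fit."""
    start = marker_sample - PRE_EVENT_MS * fs // 1000
    stop = marker_sample + POST_EVENT_MS * fs // 1000
    if start < 0 or stop > n_samples:
        return None
    return start, stop


def extract_epochs(signal, marker_samples, fs=SAMPLING_RATE_HZ):
    """Slice one channel (a sequence of samples) into fixed-length epochs."""
    epochs = []
    for marker in marker_samples:
        bounds = epoch_bounds(marker, len(signal), fs)
        if bounds is not None:  # skip markers too close to the recording edges
            epochs.append(signal[bounds[0]:bounds[1]])
    return epochs


# Synthetic 10 s channel; the marker at sample 200 is dropped because
# fewer than 500 ms of data precede it.
channel = list(range(10_000))
epochs = extract_epochs(channel, [200, 5000])
print(len(epochs), len(epochs[0]))  # 1 2000
```

At 1000 Hz each epoch therefore contains 2000 samples per channel.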

The setting conditions and time mark positions corresponding to various trigger events were different, and are summarized as follows:

1. Smooth driving: Smooth driving serves as the control condition in our experimental design, relative to the event-triggered markers. It refers to the state in which no event occurs and the vehicle is travelling on an empty straight road. When the main vehicle is driving in the normal straight-line driving area defined in the map design, the time mark is made.

2. Acceleration: The acceleration behaviour has two types of trigger conditions. The first is the overtaking scenario, in which the main vehicle encounters a slower-moving vehicle in front of it (speed set to 5 m/s), and the driver needs to complete the overtaking action after noticing the slow-moving vehicle. The time marker is set when the opponent car starts to drive slowly, as shown in Fig. 5d.

Fig. 5 Event setup used to induce human driving behaviours, including acceleration, deceleration, lane-change and turning. (a) The front emergency brake. (b) The side front cut-in. (c) Pedestrian crossing. (d) Overtaking. (e) Congestion relief. (f) Static obstacle on left. (g) Static obstacle on right. (h) Left-turn sign. (i) Right-turn sign.

The second is the congestion relief scenario, in which the main car encounters multiple cars blocking the road (their speed was set to 5 m/s to simulate congestion). After driving slowly for 50 m, the opponent cars speed away to simulate congestion relief. At this point, the driver needs to accelerate, because participants were asked to keep their normal speed at no less than 60 km/h. The time marker is set when the opponent cars accelerate away, as shown in Fig. 5e.

3. Deceleration: There are three scenarios that prompt deceleration. The first involves an emergency braking situation with the vehicle in front. As the main vehicle follows, both vehicles maintain a consistent speed. However, after traveling 50 meters, the vehicle in front suddenly applies the brakes. This leads to a rapid reduction in speed, potentially reaching 0 km/h. Participants were instructed to maintain a speed of no less than 60 km/h and a following distance of only 100 meters. Consequently, they must decelerate swiftly upon encountering such a situation to avert a rear-end collision. The time mark is located when the opponent car in front decelerates to 0, as shown in Fig. 5a.

The second is a sharp lane change by a vehicle on the side in front. While the main vehicle is driving in its specified lane and reaches a fixed position, an opponent vehicle appears on the side in front, driving at 0.7 times the speed of the main vehicle; after driving for 50 m, the opponent vehicle abruptly changes into the main vehicle’s lane. The driver needs to slow down after noticing this change to prevent a rear-end collision. The time mark is placed at the moment of the sharp lane change, as shown in Fig. 5b.

The third is the situation of pedestrians crossing the road. When the main vehicle reaches a fixed position, pedestrians cross the road at a speed of 5 m/s in front of it. The driver needs to respond in time and slow down to prevent hitting pedestrians. The time mark is when pedestrians begin to cross the road, as shown in Fig. 5c.

4. Lane-change: There are two types of trigger conditions for lane change. Both are static obstacles blocking the road ahead, and they differ in where the obstacle is placed (on the left or on the right), so as to guide the driver to perform left and right lane changes. The driver needs to complete the corresponding lane change after observing the static obstacle. The case design delineates a lane-change area whose starting point is approximately 100 m from the static obstacle. This distance was determined through testing as the position at which the obstacle reliably appears on the simulation screen. The time mark is made when the main vehicle drives into the area, as shown in Fig. 5f,g.

5. Turning: There are two kinds of trigger conditions for turning behaviour, both of which are road turn signs: left-turn signs and right-turn signs. They guide the driver to turn left or right so that the main vehicle follows the specified route. The driver needs to complete the corresponding turn after observing the sign. The case design delimits a turning area, similar to the lane-change area, whose starting point is approximately 100 m from the road sign. This distance was determined through testing as the position at which the sign reliably appears on the simulation screen. When the main vehicle drives into the area, the time mark is recorded, as shown in Fig. 5h,i.

Data synchronization

During the experiment, multimodal physiological data were recorded synchronously. Synchronization is achieved by the synchronization trigger box provided by Neuracle in China, which is called the NeuSen TB series multiparameter synchronizer. When the driving simulator data collection computer receives a specific stimulus, it sends a time marker to the physiological data side through the synchronization trigger box, and all these time markers are recorded in a “bdf” file. Through this synchronization mark, the physiological data corresponding to driving behaviour are aligned to realize synchronization of data recording.

EEG and ECG signals

The EEG signals were collected by the physiological data collection system called the NeuSen W Series Wireless EEG Acquisition System, which is provided by Neuracle. The system includes an EEG cap with 64 electrodes, 59 of which were used to collect the EEG signals for subsequent data analysis. The cap was worn on the participant’s head to record the EEG signals, and the EEG signals were recorded in a “bdf” file with a sampling frequency of 1000 Hz.

The electrode positions of EEG acquisition instruments follow a unified standard and are arranged according to the international 10–20 electrode system developed by the EEG Society72. The electrode distribution of this system is shown in Fig. 6. The electrodes are named with letters and numbers: the letters represent the cortical area where the electrode is located, odd numbers indicate the left hemisphere, and even numbers indicate the right hemisphere.
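The naming rule can be illustrated with a small helper that infers the hemisphere from an electrode label. This is a sketch only: the function name is hypothetical, and the handling of midline electrodes (suffix “z”) follows the general 10–20 convention rather than anything stated in the text above.

```python
import re


def electrode_hemisphere(label):
    """Classify a 10-20 style electrode label as 'left', 'right' or 'midline'."""
    match = re.fullmatch(r"([A-Za-z]+?)(\d+|[zZ])", label)
    if match is None:
        raise ValueError(f"unrecognized electrode label: {label!r}")
    suffix = match.group(2)
    if suffix in ("z", "Z"):
        # Midline sites carry 'z' instead of a number (general 10-20 convention).
        return "midline"
    # Odd numbers lie over the left hemisphere, even numbers over the right.
    return "left" if int(suffix) % 2 == 1 else "right"


for name in ("C3", "C4", "Cz", "Fp1"):
    print(name, electrode_hemisphere(name))
```

Such a helper can be useful when grouping channels for lateralized analyses, for example when comparing left and right sensorimotor sites.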

The ECG signal is also collected through the Neuracle system since one of the electrodes in the cap is for ECG recording. The ECG electrode can be applied to the participant’s chest near the heart through an electrode patch to achieve ECG data collection. The sampling frequency was also 1000 Hz and the data were recorded in a “bdf” file.

EMG signals

The EMG signals were acquired by the NeuSen WM Series Wireless EMG Acquisition System provided by Neuracle. The acquisition modules were attached to the tibialis anterior and gastrocnemius muscles of the right leg and to the brachioradialis muscles of both arms, because these muscles are involved in driving operations. The tibialis anterior and gastrocnemius muscles of the right leg are mainly involved in braking and throttle operation73,74, while the brachioradialis muscles of the arms are involved in the control of the steering wheel75,76. The acquisition modules communicate with the device base station through Bluetooth, and the base station and the acquisition computer realize multimodule synchronous acquisition through a wired network connection. The sampling frequency was also 1000 Hz and data were recorded in a “bdf” file.

GSR signal

The GSR signal was collected by the NeuSen W GSR Series Wireless GSR Acquisition System provided by Neuracle, and the collection electrode was adhered to the belly of the participants’ left index finger and middle finger. The sampling frequency was also 1000 Hz and data were recorded in a “bdf” file.

Eye track signals

The eye movement signal was collected by Tobii Glasses 2, which can be configured according to the participant’s required visual correction, thus ensuring that the participant has normal or corrected vision at the time of the experiment. The participant wore the eye tracker, and the raw data it collected contained time-stamp information, gaze point, eye position, pupil diameter, etc. The sampling frequency was 100 Hz and data were recorded in a “ttgp” file.
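Because the eye tracker samples at 100 Hz while the other physiological signals are sampled at 1000 Hz, an event marker expressed as a physiological sample index has to be converted before it can be used to index the eye-tracking stream. The sketch below shows one way to do this under the assumption that both recordings can be referred to a shared clock; the function name and the two start-time parameters are hypothetical.

```python
PHYSIO_RATE_HZ = 1000   # EEG, ECG, EMG and GSR sampling rate
EYE_RATE_HZ = 100       # eye-tracker sampling rate


def physio_to_eye_index(physio_sample, physio_start_s=0.0, eye_start_s=0.0):
    """Map a physiological sample index to the nearest eye-tracker sample index.

    physio_start_s and eye_start_s are the (assumed known) start times of the
    two recordings, in seconds on a shared clock.
    """
    event_time_s = physio_start_s + physio_sample / PHYSIO_RATE_HZ
    return round((event_time_s - eye_start_s) * EYE_RATE_HZ)


# A marker 5 s into the physiological recording, both streams starting together.
print(physio_to_eye_index(5000))  # 500
```

With equal start times the conversion reduces to dividing the sample index by ten and rounding to the nearest integer.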

Data Records

Data recording and storage

In this section, we describe the storage organization of the MPDB dataset, which is publicly accessible on Figshare and includes the raw dataset77, the preprocessed dataset78, and the eye tracking dataset79. The raw dataset contains physiological data of 35 subjects driving for 2 hours each, and the preprocessed dataset contains physiological data samples of the driving behaviour of 35 subjects.

Raw dataset storage

The organization of the dataset folder is shown in Table 3. The directory of each subject includes data from four experiments, each corresponding to a different behaviour: deceleration, turning, acceleration, and lane change. Accordingly, the {event} field of the filename should be replaced with one of {brake, turn, throttle, change}, respectively, to obtain the data for a given behaviour, as shown in the “Raw Dataset” section in Table 3. Users can segment and preprocess the raw data according to their own needs.
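As an illustration of how the {event} field might be filled in programmatically, the sketch below expands a filename template for each experiment. The pairing of behaviours with tokens is inferred from their names, and the template string is a hypothetical placeholder; the actual naming pattern is the one given in Table 3.

```python
# Assumed pairing between behaviours and the {event} tokens named in the text.
BEHAVIOUR_TO_TOKEN = {
    "deceleration": "brake",
    "acceleration": "throttle",
    "turning": "turn",
    "lane change": "change",
}


def event_filenames(template):
    """Fill the {event} field of a filename template for every experiment."""
    return {
        behaviour: template.replace("{event}", token)
        for behaviour, token in BEHAVIOUR_TO_TOKEN.items()
    }


# Hypothetical template, used only to show the substitution.
names = event_filenames("subject01_{event}.bdf")
print(names["deceleration"])  # subject01_brake.bdf
```

Plain string replacement is used instead of `str.format` so that any other braces in a real filename pattern are left untouched.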

Preprocessed dataset storage

We have uploaded both the raw dataset and the preprocessed dataset to the publicly accessible Figshare repository. In the preprocessed dataset, the behaviour samples of each subject are combined into a single file. These samples cover five types of behaviour, and the event types in each behaviour are shown in Table 2. Readers can find these event types in “EEG.events.type” of the EEG structure when using MATLAB to read the datasets. Since EEG and ECG were collected through the same wireless transmission device, the two were separated during preprocessing and the raw data were organized by subject number. For each modality, all behavioural data from each subject were combined into one data file and named using the corresponding modality and subject number, as shown in the “Preprocessed Dataset” section in Table 3.

Technical Validation

In this section, we validate the dataset. Following the conventions of physiological data validation and the design of the experiment, we consider three aspects80: whether the physiological data are usable, whether the vehicle parameters are correct, and whether there is a correlation between physiological data and driving behaviours. To this end, technical validation includes quality validation of physiological variables and vehicle parameters and correlation analysis of physiological variables and behaviours. In the last part, several classification models are used to demonstrate the validity of the data81.

Physiological data validation

This part explains the availability and standard of the physiological data in this dataset. Each kind of physiological data was preprocessed before use, and the preprocessing method conformed to the specifications of physiological data preprocessing, which is described in detail below.

The technical validity of the physiological dataset depends closely on the equipment and the acquisition procedure of the experiment, for example, whether the impedance is within a reasonable range and whether the data processing method is standardized. Table 4 lists the parameters of the experimental equipment; its accuracy meets the needs of physiological signal acquisition.

For each physiological variable collected in the experiment, we plotted its waveform as a function of time for verification. The overall results of each type of data for each subject are shown in Figs. 10–13.

EEG validation

The EEG data for this dataset include 59 channels, the sampling frequency is 1 kHz, and the sample length of each behaviour is 2 s, so each frame contains 2000 × 59 sampling points.

Impedance validation

EEG signals were collected by a head-worn device, so the hair of the participants affects the quality of the signal. In the preparation stage of the experiment, conductive paste must be injected into each electrode to ensure reliable contact with the scalp. Excessive impedance reduces the quality of the signal and increases noise. During the test, we ensured that the impedance of each electrode was lower than 20 kΩ, and each experiment was carried out only after confirming that the data waveform was normal.

Data preprocessing validation

We preprocessed the raw EEG signal to suppress noise, remove artifacts, and extract useful information. The preprocessing steps mainly include bandpass filtering, enframing and artefact removal. According to the useful frequency band of the EEG signal, an IIR bandpass filter with a [0.5 Hz 40 Hz] pass band is used for filtering.

Taking the time of each mark as the reference, we selected the [−0.5 s, 1.5 s] interval of the filtered data as the corresponding behaviour sample, based on the data partitioning method used in event-related potential experiments73. We provide this as a reference for technical validation rather than as a mandatory segmentation. Finally, ICA decomposition is performed on these behaviour samples to remove artefacts. EEGLAB assesses whether each independent component is a useful signal or an artefact based on several objective indicators. We make a comprehensive judgment based on the recommendations of EEGLAB and typical artefact patterns, including obvious eye movement artefacts, muscle movement artefacts and abnormal electrode artefacts82, as shown in Fig. 7. The EEG waveform is shown in Fig. 10. It contains 59 channels of valid data from five categories of driving behaviours. The waveforms show that the EEG signals corresponding to each category of events meet the requirements, with no significant anomalies in the samples.
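
The windowing step described above can be sketched in a few lines. This is a minimal illustration in plain Python rather than the EEGLAB routine used for the dataset; the function names and the list-of-channels layout are assumptions made for the example.

```python
FS_HZ = 1000               # EEG sampling rate stated in the text
PRE_S, POST_S = 0.5, 1.5   # window [-0.5 s, 1.5 s] around each mark


def epoch_bounds(mark_sample, fs=FS_HZ, pre=PRE_S, post=POST_S):
    """Return (start, stop) sample indices for one mark, stop exclusive."""
    start = mark_sample - int(round(pre * fs))
    stop = mark_sample + int(round(post * fs))
    return start, stop


def cut_epochs(signal, marks, fs=FS_HZ):
    """Slice a list of channels (each a list of samples) around each mark.

    Marks whose window would run past either end of the recording are skipped.
    """
    n_samples = len(signal[0])
    epochs = []
    for mark in marks:
        start, stop = epoch_bounds(mark, fs)
        if start < 0 or stop > n_samples:
            continue
        epochs.append([channel[start:stop] for channel in signal])
    return epochs
```

With a 1 kHz sampling rate, each epoch spans 2000 samples per channel, which matches the 2000 × 59 frame size given for the EEG data.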

Physiological structure

In order to analyze the physiological components of driving behaviors embodied in the EEG signals, we extracted the EEG time-frequency domain features of each driving behavior by using the short-time Fourier transform (STFT), and selected 500 ms before the event, 200 ms after the event and 1500 ms after the event as the three observed moments, as shown in Fig. 8. It can be seen from the figure that among the three events that caused the braking behavior, the driver’s EEG signal showed an increase in power at 200 ms, mainly in the parietal and temporal lobes.

Statistical property validation

We analyzed the statistical properties of the EEG data. The EEG power spectral densities under each event are shown in Table 5, which lists the average EEG power spectral densities PSD (dB/Hz) as well as the mean and standard deviation for all participants under each stimulus condition. The boxplot of EEG power spectra for different events is depicted in Fig. 9, which indicates that the power distribution of each group is essentially identical.

EMG, GSR and ECG validation

The dataset also includes EMG, ECG and GSR signals, which can be regarded as discrete time series. The EMG signal consists of four channels with a sampling rate of 1 kHz, collected from both arms and the right leg. The GSR and ECG signals each contain one channel with a sampling rate of 1 kHz. These three signals were all preprocessed to suppress noise and extract useful information. The preprocessing steps include bandpass filtering and removing bad samples. The bandpass filtering ranges are [15, 83] Hz for EMG, [0.01, 200] Hz for ECG, and [0.5, 100] Hz for GSR. The waveforms of the extracted valid EMG, GSR, and ECG data samples for each test are shown in Figs. 11–13.

ECG signals

The ECG signal waveform of each epoch is shown in Fig. 11. Because the impedance conditions may change between experiments, the ECG of each subject must be normalized before subjects are compared. The heart rate shown in the figure is within the normal range and is consistent with the parameter settings of the sensor.
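
The text does not specify which normalization is applied to the ECG. As one common option, the sketch below assumes a per-subject z-score (zero mean, unit variance); the function name is hypothetical.

```python
from statistics import fmean, pstdev


def zscore(samples):
    """Scale one subject's ECG samples to zero mean and unit variance."""
    mean = fmean(samples)
    std = pstdev(samples, mu=mean)
    if std == 0:
        # A flat trace has no scale to normalize; return zeros.
        return [0.0 for _ in samples]
    return [(x - mean) / std for x in samples]
```

Normalizing each subject separately removes amplitude offsets caused by differing contact conditions while preserving the shape of the waveform.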

GSR signals

The GSR signal waveform of each epoch is shown in Fig. 12. It can be seen that there is no obvious abnormal fluctuation in the GSR signal.

EMG signals

Figure 13 shows the waveform of the EMG signals. When the muscles of the driver’s arms and legs act, for example when stepping on the brake or turning the steering wheel, there is an obvious fluctuation in the EMG waveform. Although the EMG signal contains substantial noise interference, we could still extract enough obvious peaks as features.

The non-uniformity of fluctuations in EMG signals arises from the action potentials of different muscles during driving, e.g., the tibialis anterior muscle is more vigorous during pedal pressing and releasing, while the gastrocnemius muscle is relatively flat. For braking events, pressing the brake pedal urgently causes large observable fluctuations in EMG signals in the legs. Each individual also does not react and maneuver in the experiment in exactly the same way, which is one reason for the different EMG signals. In addition, the last two channels of EMG are placed on the arm, and the intensity of the arm muscle action is different from that of the leg.

The above results show that the driving condition of the subjects remained stable most of the time, without excessive stress during simulated driving. Only the stimulation of the emergency braking events involved in this case causes the signals to change substantially.

Correlation analysis of physiology and driving behaviours

Figures 14 and 15 illustrate the Spearman correlation analysis between the five driving behaviours and the mean and variation of the 64-channel physiological signals (EEG, EMG, GSR), with the correlations shown as heat maps ranging from −1 to 1.
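
For readers who wish to reproduce this kind of analysis, Spearman's coefficient is the Pearson correlation computed on ranks. The sketch below is a plain Python illustration; how the behaviours are encoded numerically (for example, as a binary indicator per behaviour) is not stated in the text and is left to the caller.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when one variable is constant
    return cov / sqrt(var_x * var_y)
```

Because the coefficient depends only on rank order, it is bounded by −1 and 1 and is insensitive to monotonic rescaling of either variable.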

Eye tracking variable validation

The eye tracker recorded the x and y coordinates of the subject’s gaze at each point in time during the driving experiment, which show the focus of the subject’s attention. As introduced above, the dataset mainly includes five categories of driving behaviours. In each behaviour, we found that gaze was concentrated on the main objects that induce driving events. Gaze was also concentrated on the instrument panel, where speed and rpm are shown, which indicates that the subjects were attentive to the car’s speed. To show the result, we plotted a scatter diagram of subject 1’s eye movement data for each event, shown in Fig. 16. The scatter points are concentrated at the centre of the event, indicating that the subjects drove normally and without distraction during the experiment. Therefore, the data are valid.

Validation of main vehicle parameters

Simulated driving environment

The simulated driving environment in this experiment is close to the actual vehicle environment. The hardware system of the simulator includes an adjustable real car seat, steering wheel, safety belt and shift lever. The software system of the simulator includes a model of an actual road in Beijing, dashboard, and a vehicle parameter recording system. Therefore, the physiological data collected during the simulated driving experiments can reflect the characteristics of actual driving to a certain extent.

Vehicle parameter verification

Vehicle parameters, such as speed, acceleration, accelerator pedal, brake pedal, engine speed, and gear position, are recorded by the simulator during the simulated driving test and directly reflect the driver’s behaviour. In the experiment, the subjects were asked to drive as smoothly as possible and to avoid collisions, so as to simulate real-world driving behaviour as closely as possible.

To ensure the repeatability of the experiment and the applicability of the dataset across subjects, we set the same route for each subject in the experiment under the same case, and the trigger time and content of events on the route were the same in different trials. Therefore, it can be concluded that the vehicle parameters of each subject in each case should show the same trend.

The curves of the vehicle parameters over time are shown in Fig. 17. Each subfigure in Fig. 17 contains the curves of all 35 subjects, and each curve represents the time waveform of a vehicle parameter during simulated driving. The figure shows that, for each driving behaviour experiment, the trends of the vehicle parameters over time are strongly similar, which confirms the consistency of subject behaviour in each experiment. The behaviours of different subjects are therefore consistent, so the data obtained are repeated samples of five different types of behaviours. This ensures the repeatability of the samples and lays the foundation for subsequent analysis.

Vehicle parameters. (a) Velocity (m/s): the average speed is about 60 km/h, which meets the speed limit for urban roads with centre lines. (b) Throttle pedal: for each driver, the acceleration signal is very strong at the beginning of driving and then decreases until the vehicle speed approaches the speed limit. (c) x-position: the change of vehicle position over time is almost the same across subjects, which ensures that the pre-designed events on the route are triggered in turn.

Correlation validation of physiological data, behaviour and event labels

For this dataset, the most important point to prove is the correlation between physiological data and behaviour, that is, whether the physiological data contain the corresponding information of the driver’s behaviours. This section uses the collected data and the behaviour tags to build a model to illustrate this correlation.

Quality control of information interaction synchronization

The times of the event labels and the physiological data must be synchronized. The data synchronization method was introduced above. The software and hardware system of the 51Sim-One driving simulator writes events into the event storage area of the Neuracle data acquisition software through serial ports according to preset event determination conditions. For example, we set the judgement area at the point where the subject can just see the turning sign. At this point the stimulus for the turning event is deemed to have occurred, and the event is marked in the system. Each event mark has a timestamp and is stored with the dataset. We use these event marks to cut the data into event sample frames (epochs). Experimental analysis showed that the delay of USB serial communication is very low (approximately 10 ms), so synchronization between data and marks can be ensured, which is also the basis of the correlation analysis.

Classification of physiological data

Classification is a powerful illustration of data validity. We use the markers of vehicle parameters as data labels and physiological data as samples to train classifiers. If the data are valid, samples of the same class should share common features and samples of different classes should differ. We first balanced the number of samples so that each category contained roughly the same number, then divided the data into a training set, validation set and test set in a 6:2:2 ratio84. Specifically, we specified stratify = y to ensure that the proportions of the classes in the training and test sets are the same as in the original dataset. Finally, we used the following two models for classification.
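
A stratified 6:2:2 split can be sketched as follows. The `stratify = y` setting mentioned above matches the `stratify` argument of scikit-learn's `train_test_split`, although the text does not name the library; the version below is a standard-library stand-in written for illustration, and its function name and seed are assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratios=(0.6, 0.2, 0.2), seed=0):
    """Return (train, val, test) index lists with per-class 6:2:2 proportions."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(ratios[0] * len(indices))
        n_val = round(ratios[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test
```

Splitting within each class, rather than over the pooled samples, is what keeps the class proportions identical across the three subsets.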

Linear discriminant analysis

Linear Discriminant Analysis (LDA)83 uses a projection of the data to minimize the distance between samples of the same category and maximize the distance between samples of different categories; that is, it achieves dimension reduction and classification at the same time. To use LDA for the five-class classification task, the data must be reduced to no more than four dimensions. We combined EEG and EMG data, trained an LDA classifier to reduce each sample to four dimensions, and then verified the performance of the classifier. The overall classification accuracy of the model is 35.1%.
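
The four-dimension limit follows from the rank of the between-class scatter matrix. A short derivation is given here in standard Fisher LDA notation, which does not appear in the original text: $C$ classes, class sizes $n_c$, class means $\mu_c$, and overall mean $\mu$.

```latex
S_B = \sum_{c=1}^{C} n_c (\mu_c - \mu)(\mu_c - \mu)^{\top},
\qquad
\sum_{c=1}^{C} n_c (\mu_c - \mu) = 0
\;\Longrightarrow\;
\operatorname{rank}(S_B) \le C - 1 .
```

The $C$ mean-difference vectors are linearly dependent, so they span at most $C - 1$ directions; with $C = 5$ behaviours, LDA can therefore yield at most four discriminant dimensions.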

EEGNet

EEGNet is a compact convolutional neural network for EEG feature extraction built on depthwise separable convolutions. It generalizes and performs well when data are limited and can learn a variety of interpretable features across a range of BCI tasks. After data preprocessing, we used EEGNet to classify the EEG epochs, with EEG data as samples and the marks generated by the simulator as labels, split into a training set and a validation set. The overall classification accuracy of the model is 49.8%. The classification results of the two models are similar, which shows that there is indeed a correlation between samples and that it does not depend on the choice of model.

The classification results show that the classification accuracy of the brake category is the highest, followed by throttle and changing_lanes, while the error rate of the turning and stable categories is higher. The reasons may be as follows:

1. For categories with high classification accuracy, throttle and brake involve stepping on the accelerator and brake pedals, and their motor imagery characteristics may be quite pronounced; thus, their sample characteristics are distinct and the classification accuracy is much higher.

2. For categories with low classification accuracy, turning and stable, the main reason is the similarity and ambiguity between behaviours. Specifically, turning is easily misjudged as changing lanes because the driving actions of the two behaviours are similar, and turning may be misjudged as throttle because the accelerator pedal is pressed after turning; thus, the arm actions during turning and lane changing are not distinctive.

3. The stable category, which we designed as the control group, tends to be misjudged as many other categories, especially turning, changing lanes and throttle, possibly because the throttle pedal is also pressed under normal driving conditions, so the differences are not obvious.
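
Per-category accuracy and the dominant misclassification for each category can be read off a confusion matrix, as sketched below. The code assumes rows are true classes and columns are predicted classes; the function names are hypothetical, and no values from the paper are reproduced.

```python
def per_class_recall(confusion, class_names):
    """Recall per class: correct predictions divided by the row total."""
    recall = {}
    for i, name in enumerate(class_names):
        row_total = sum(confusion[i])
        recall[name] = confusion[i][i] / row_total if row_total else float("nan")
    return recall


def most_confused_with(confusion, class_names, target):
    """Name of the class that `target` samples are most often mistaken for."""
    i = class_names.index(target)
    errors = [
        (count, name)
        for j, (count, name) in enumerate(zip(confusion[i], class_names))
        if j != i
    ]
    return max(errors)[1]
```

Inspecting the off-diagonal entries in this way makes patterns such as turning being mistaken for changing lanes directly visible.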

Validation of multimodal data

To improve the resolution of EEG signals, we use multimodal data (e.g., combining EEG with EMG and ECG) as a supplement. Multimodal data effectively lift the EEG data to a higher dimension. Although EMG has only 4 channels compared with the 59 effective channels of the EEG signals, the behavioural information provided by EMG is crucial.

Multimodal physiological Net (MMPNet)

MMPNet is a neural network model specially designed for multimodal physiological data, and its structure is shown in Fig. 2. The overall classification accuracy of MMPNet with multimodal data is 62.6%, while the accuracy of MMPNet using EEG only is 55.7%; the confusion matrices are shown in Fig. 18.

EEGNet

The overall classification accuracy of the EEGNet model with multimodal data is 55%, which is significantly improved compared with the 49.8% obtained with single-modality data.

Overall, the classification accuracy of the models is shown in Table 6. The classification performance of MMPNet exceeds that of the baseline model EEGNet. The comparison of MMPNet on multimodal data and on EEG data shows that the classification accuracy with multimodal data is significantly improved compared with that of single-modality data (p = 1.2956 × 10⁻⁹).

The results show that the accuracy of the model is improved to a certain extent by multimodal data. Compared with the situation where only single mode data are used, EMG provides information on the driver’s arm and leg movements, which makes it easy to distinguish between the three behaviours of acceleration, turning and stability that were easily confused before due to the differences in pedal and steering wheel movements.

To demonstrate the effectiveness of the above classification results, we added zero-mean additive Gaussian noise with gradually increasing variance to the data. Figure 19 illustrates how the performance of the three models varies with noise power. As the variance of the additive Gaussian noise increases, classification performance deteriorates, and once the noise power exceeds a certain limit the classification results become very close to random. Hence, the physiological data have strong separability, which reflects the high quality of the data.
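
The noise-injection procedure can be sketched as below. This is an illustration only: the variance values, seed and function names are assumptions, and the classifier evaluation at each noise level is left out.

```python
import random


def add_gaussian_noise(samples, variance, rng):
    """Return samples plus zero-mean Gaussian noise of the given variance."""
    sigma = variance ** 0.5
    return [x + rng.gauss(0.0, sigma) for x in samples]


def noise_sweep(samples, variances, seed=0):
    """Yield (variance, noisy copy) pairs in order of increasing variance."""
    rng = random.Random(seed)
    for variance in sorted(variances):
        yield variance, add_gaussian_noise(samples, variance, rng)


# Accuracy of random guessing over five balanced classes.
CHANCE_LEVEL = 1 / 5
```

For five balanced classes, accuracy approaching 20% indicates that the noise has removed the class information the models were relying on.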

Usage Notes

The original data and preprocessed data of multimodal physiological signals can be downloaded from Figshare. Users interested in the dataset can register on the website and download the dataset locally. The original dataset and the preprocessed dataset are named as “Driving behaviour multimodal human factors original dataset” and “Driving behaviour multimodal human factors preprocessed dataset”, respectively.

After the dataset is downloaded, users can process EEG through MATLAB’s EEGLAB plug-in. We recommend that researchers use EEGLAB version 2021 and MATLAB R2021b on Windows 10 or Linux. EEGLAB can help complete EEG preprocessing steps such as filtering, segmentation and ICA. The code for batch preprocessing of EEG signals will also be provided in “Code availability”. The file formats of EMG, GSR and ECG signals are consistent with those of the EEG signals, which can also be imported and processed through MATLAB. The batch preprocessing codes of EMG, GSR and ECG signals will also be provided in “Code availability”.

Additionally, we suggest the following data processing steps:

- Download the dataset from the above website and save it locally. Record the save path.

- Check whether the dataset is complete. Each raw recording consists of two parts, namely “data.bdf” and “evt.bdf”.

- Import the EEGLAB plug-in to MATLAB and load the dataset.

- Complete data preprocessing, including but not limited to filtering and segmentation.

- Further analysis and research can be performed using the preprocessed data.
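
The completeness check in the second step can be automated. The sketch below assumes that each recording sits in its own folder containing files named data.bdf and evt.bdf; the exact folder layout is given in Table 3, so the search may need adapting, and the function name is hypothetical.

```python
from pathlib import Path


def find_incomplete_recordings(root):
    """Return folders under `root` holding only one of data.bdf / evt.bdf."""
    root = Path(root)
    # Collect every folder that contains at least one of the two files.
    folders = {
        p.parent
        for name in ("data.bdf", "evt.bdf")
        for p in root.rglob(name)
    }
    return sorted(
        folder for folder in folders
        if not ((folder / "data.bdf").is_file() and (folder / "evt.bdf").is_file())
    )
```

An empty result means every recording folder found under the save path has both parts; any folder listed should be downloaded again before preprocessing.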

Code availability

Readers can access the tutorials and code for our original and preprocessed datasets on GitHub (https://github.com/zwqzwq0/MPDB). The repository contains two folders, preprocessing and classification, which hold MATLAB code for preprocessing and Python code for classification.

References

Han, W. & Zhao, J. Driver behaviour and traffic accident involvement among professional urban bus drivers in china. Transportation research part F: traffic psychology and behaviour 74, 184–197 (2020).

Singh, S. Critical reasons for crashes investigated in the national motor vehicle crash causation survey. Tech. Rep. (2015).

Ye, S., Wang, L., Cheong, K. H. & Xie, N. Pedestrian group-crossing behavior modeling and simulation based on multidimensional dirty faces game. Complexity 2017 (2017).

Paden, B., Čáp, M., Yong, S. Z., Yershov, D. & Frazzoli, E. A survey of motion planning and control techniques for self-driving urban vehicles. IEEE Transactions on intelligent vehicles 1, 33–55 (2016).

Bener, A., Crundall, D., Haigney, D., Bensiali, A. K. & Al-Falasi, A. Driving behaviour, lapses, errors and violations on the road: United arab emirates study. Advances in transportation studies 12, 5–14 (2007).

Sharma, A., Zheng, Z., Bhaskar, A. & Haque, M. M. Modelling car-following behaviour of connected vehicles with a focus on driver compliance. Transportation research part B: methodological 126, 256–279 (2019).

Kolekar, S., de Winter, J. & Abbink, D. Human-like driving behaviour emerges from a risk-based driver model. Nature communications 11, 1–13 (2020).

Pisauro, M. A., Fouragnan, E., Retzler, C. & Philiastides, M. G. Neural correlates of evidence accumulation during value-based decisions revealed via simultaneous eeg-fmri. Nature communications 8, 15808 (2017).

Markkula, G., Uludağ, Z., Wilkie, R. M. & Billington, J. Accumulation of continuously time-varying sensory evidence constrains neural and behavioral responses in human collision threat detection. PLoS Computational Biology 17, e1009096 (2021).

Diederichs, F. et al. Improving driver performance and experience in assisted and automated driving with visual cues in the steering wheel. IEEE Transactions on Intelligent Transportation Systems 23, 4843–4852 (2022).

Wang, X., Liu, Y., Wang, J. & Zhang, J. Study on influencing factors selection of driver’s propensity. Transportation research part D: transport and environment 66, 35–48 (2019).

Zou, B., Xiao, Z. & Liu, M. Driving behavior recognition based on eeg data from a driver taking over experiment on a simulated autonomous vehicle. In Journal of Physics: Conference Series, vol. 1550, 042046 (IOP Publishing, 2020).

Liang, S.-F. et al. Monitoring driver’s alertness based on the driving performance estimation and the eeg power spectrum analysis. In 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, 5738–5741 (IEEE, 2006).

Hunt, L. T. et al. Mechanisms underlying cortical activity during value-guided choice. Nature neuroscience 15, 470–476 (2012).

Karthaus, M., Wascher, E. & Getzmann, S. Distraction in the driving simulator: An event-related potential (erp) study with young, middle-aged, and older drivers. Safety 7, 36 (2021).

Boon-Leng, L., Dae-Seok, L. & Boon-Giin, L. Mobile-based wearable-type of driver fatigue detection by gsr and emg. In TENCON 2015-2015 IEEE Region 10 Conference, 1–4 (IEEE, 2015).

Matsuda, T. & Makikawa, M. Ecg monitoring of a car driver using capacitively-coupled electrodes. In 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 1315–1318 (IEEE, 2008).

Kim, I.-H., Kim, J.-W., Haufe, S. & Lee, S.-W. Detection of braking intention in diverse situations during simulated driving based on eeg feature combination. Journal of neural engineering 12, 016001 (2014).

Zhang, H. et al. Eeg-based decoding of error-related brain activity in a real-world driving task. Journal of neural engineering 12, 066028 (2015).

Wandtner, B., Schömig, N. & Schmidt, G. Effects of non-driving related task modalities on takeover performance in highly automated driving. Human factors 60, 870–881 (2018).

Yuan, H. & He, B. Brain–computer interfaces using sensorimotor rhythms: current state and future perspectives. IEEE Transactions on Biomedical Engineering 61, 1425–1435 (2014).

Birbaumer, N. Slow cortical potentials: Plasticity, operant control, and behavioral effects. The Neuroscientist 5, 74–78 (1999).

Mondini, V., Kobler, R. J., Sburlea, A. I. & Müller-Putz, G. R. Continuous low-frequency eeg decoding of arm movement for closed-loop, natural control of a robotic arm. Journal of Neural Engineering 17, 046031 (2020).

Bhagat, N. A. et al. Neural activity modulations and motor recovery following brain-exoskeleton interface mediated stroke rehabilitation. NeuroImage: Clinical 28, 102502 (2020).

Kropp, P., Kiewitt, A., Göbel, H., Vetter, P. & Gerber, W.-D. Reliability and stability of contingent negative variation. Applied psychophysiology and biofeedback 25, 33–41 (2000).

Kirsch, W. & Hennighausen, E. Erp correlates of linear hand movements: Distance dependent changes. Clinical Neurophysiology 121, 1285–1292 (2010).

Walter, W. G., Cooper, R., Aldridge, V., McCallum, W. & Winter, A. Contingent negative variation: an electric sign of sensori-motor association and expectancy in the human brain. nature 203, 380–384 (1964).

Di Liberto, G. M. et al. Robust anticipation of continuous steering actions from electroencephalographic data during simulated driving. Scientific reports 11, 23383 (2021).

Avanzini, P. et al. The dynamics of sensorimotor cortical oscillations during the observation of hand movements: an eeg study. PloS one 7, e37534 (2012).

Khaliliardali, Z., Chavarriaga, R., Gheorghe, L. A. & Millán, delR. J. Action prediction based on anticipatory brain potentials during simulated driving. Journal of neural engineering 12, 066006 (2015).

Hulse, L. M., Xie, H. & Galea, E. R. Perceptions of autonomous vehicles: Relationships with road users, risk, gender and age. Safety science 102, 1–13 (2018).

Moody, J., Bailey, N. & Zhao, J. Public perceptions of autonomous vehicle safety: An international comparison. Safety science 121, 634–650 (2020).

Mordue, G., Yeung, A. & Wu, F. The looming challenges of regulating high level autonomous vehicles. Transportation research part A: policy and practice 132, 174–187 (2020).

Lee, J., Lee, D., Park, Y., Lee, S. & Ha, T. Autonomous vehicles can be shared, but a feeling of ownership is important: Examination of the influential factors for intention to use autonomous vehicles. Transportation Research Part C: Emerging Technologies 107, 411–422 (2019).

Chang, W. et al. Driving eeg based multilayer dynamic brain network analysis for steering process. Expert Systems with Applications 207, 118121 (2022).

McIntyre, G. & Göcke, R. The composite sensing of affect. In Affect and Emotion in Human-Computer Interaction: From Theory to Applications, 104–115 (Springer, 2008).

Morris, E. A. & Hirsch, J. A. Does rush hour see a rush of emotions? driver mood in conditions likely to exhibit congestion. Travel behaviour and society 5, 5–13 (2016).

Scott-Parker, B. Emotions, behaviour, and the adolescent driver: A literature review. Transportation research part F: traffic psychology and behaviour 50, 1–37 (2017).

Wang, X. et al. Feature extraction and dynamic identification of drivers’ emotions. Transportation research part F: traffic psychology and behaviour 62, 175–191 (2019).

Lang, P. J. & Bradley, M. M. Emotion and the motivational brain. Biological psychology 84, 437–450 (2010).

Hussain, I., Young, S. & Park, S.-J. Driving-induced neurological biomarkers in an advanced driver-assistance system. Sensors 21, 6985 (2021).

Taamneh, S. et al. A multimodal dataset for various forms of distracted driving. Scientific data 4, 1–21 (2017).

Cao, Z., Chuang, C.-H., King, J.-K. & Lin, C.-T. Multi-channel eeg recordings during a sustained-attention driving task. Scientific data 6, 19 (2019).

Li, W. et al. A multimodal psychological, physiological and behavioural dataset for human emotions in driving tasks. Scientific Data 9, 481 (2022).

Carvalho, H. W. et al. The latent structure and reliability of the emotional trait section of the affective and emotional composite temperament scale (afects). Archives of Clinical Psychiatry (São Paulo) 47, 25–29 (2020).

Dingus, T. A. et al. Driver crash risk factors and prevalence evaluation using naturalistic driving data. Proceedings of the National Academy of Sciences 113, 2636–2641 (2016).

Dingus, T. et al. The 100-car naturalistic driving study. Tech. Rep. FHWA-JPO-06-056, United States. Department of Transportation. National Highway Traffic Safety Administration (2006).

Wylie, C., Shultz, T., Miller, J., Mitler, M. & Mackie, R. Commercial motor vehicle driver fatigue and alertness study: Technical summary. Tech. Rep., FHWA-MC-97-001 (1996).

Otmani, S., Pebayle, T., Roge, J. & Muzet, A. Effect of driving duration and partial sleep deprivation on subsequent alertness and performance of car drivers. Physiology & behavior 84, 715–724 (2005).

Feng, R., Zhang, G. & Cheng, B. An on-board system for detecting driver drowsiness based on multi-sensor data fusion using dempster-shafer theory. In 2009 international conference on networking, sensing and control, 897–902 (IEEE, 2009).

D’Orazio, T., Leo, M., Guaragnella, C. & Distante, A. A visual approach for driver inattention detection. Pattern recognition 40, 2341–2355 (2007).

Yan, C., Wang, Y. & Zhang, Z. Robust real-time multi-user pupil detection and tracking under various illumination and large-scale head motion. Computer Vision and Image Understanding 115, 1223–1238 (2011).

Shen, W. et al. Effective driver fatigue monitoring through pupil detection and yawing analysis in low light level environments. International Journal of Digital Content Technology and its Applications 6 (2012).

Jo, J., Lee, S. J., Jung, H. G., Park, K. R. & Kim, J. Vision-based method for detecting driver drowsiness and distraction in driver monitoring system. Optical Engineering 50, 127202–127202 (2011).

Botzer, A., Musicant, O. & Mama, Y. Relationship between hazard-perception-test scores and proportion of hard-braking events during on-road driving–an investigation using a range of thresholds for hard-braking. Accident Analysis & Prevention 132, 105267 (2019).

Ahlström, C., Wachtmeister, J., Nyman, M., Nordenström, A. & Kircher, K. Using smartphone logging to gain insight about phone use in traffic. Cognition, Technology & Work 22, 181–191 (2020).

Albert, G. & Lotan, T. How many times do young drivers actually touch their smartphone screens while driving? IET Intelligent Transport Systems 12, 414–419 (2018).

Kokonozi, A., Michail, E., Chouvarda, I. & Maglaveras, N. A study of heart rate and brain system complexity and their interaction in sleep-deprived subjects. In 2008 Computers in Cardiology, 969–971 (IEEE, 2008).

Distefano, N. et al. Physiological and driving behaviour changes associated to different road intersections. European Transport 77 (2020).

Sriranga, A. K., Lu, Q. & Birrell, S. A systematic review of in-vehicle physiological indices and sensor technology for driver mental workload monitoring. Sensors 23, 2214 (2023).

Hancock, T. O. & Choudhury, C. F. Utilising physiological data for augmenting travel choice models: methodological frameworks and directions of future research. Transport Reviews 1–29 (2023).

Mouraux, A. & Iannetti, G. D. Across-trial averaging of event-related EEG responses and beyond. Magnetic resonance imaging 26, 1041–1054 (2008).

Hu, L. & Zhang, Z. EEG signal processing and feature extraction (Springer, 2019).

Pfurtscheller, G. & Da Silva, F. L. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clinical neurophysiology 110, 1842–1857 (1999).

Haufe, S. et al. EEG potentials predict upcoming emergency brakings during simulated driving. Journal of neural engineering 8, 056001 (2011).

Delorme, A. & Makeig, S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of neuroscience methods 134, 9–21 (2004).

Rice, J. A. Mathematical Statistics and Data Analysis (Duxbury Press, 1994).

Aminov, A., Rogers, J. M., Wilson, P. H., Johnstone, S. J. & Middleton, S. Acute single channel EEG predictors of cognitive function after stroke. International Journal of Stroke 12, 18 (2017).

Kim, J., Kim, M., Jang, M. & Lee, J. The effect of juingong meditation on the theta to alpha ratio in the temporoparietal and anterior frontal EEG recordings. International Journal of Environmental Research and Public Health 19, https://doi.org/10.3390/ijerph19031721 (2022).

Sarmukadam, K., Bitsika, V., Sharpley, C. F., McMillan, M. M. & Agnew, L. L. Comparing different EEG connectivity methods in young males with ASD. Behavioural Brain Research 383, 112482, https://doi.org/10.1016/j.bbr.2020.112482 (2020).

Wei, H. & Zhou, R. High working memory load impairs selective attention: EEG signatures. Psychophysiology 57, https://doi.org/10.1111/psyp.13643 (2020).

Kakisaka, Y. et al. Sensitivity of scalp 10-20 EEG and magnetoencephalography. Epileptic Disorders 15, 27–31, https://doi.org/10.1684/epd.2013.0554 (2013).

Kim, I.-H., Kim, J.-W., Haufe, S. & Lee, S.-W. Detection of braking intention in diverse situations during simulated driving based on EEG feature combination. Journal of Neural Engineering 12, https://doi.org/10.1088/1741-2560/12/1/016001 (2015).

Cresswell, A. G., Loscher, W. N. & Thorstenson, A. Influence of gastrocnemius-muscle length on triceps surae torque development and electromyographic activity in man. Experimental Brain Research 105, 283–290 (1995).

von Werder, S. C. F. A. & Disselhorst-Klug, C. The role of biceps brachii and brachioradialis for the control of elbow flexion and extension movements. Journal of Electromyography and Kinesiology 28, 67–75, https://doi.org/10.1016/j.jelekin.2016.03.004 (2016).

Balasubramanian, V. & Adalarasu, K. EMG-based analysis of change in muscle activity during simulated driving. Journal of Bodywork and Movement Therapies 11, 151–158, https://doi.org/10.1016/j.jbmt.2006.12.005 (2007).

Tao, X. et al. Driving behaviour multimodal human factors raw dataset. figshare. Dataset. https://doi.org/10.6084/m9.figshare.22193119.v3 (2023).

Tao, X. et al. Driving behaviour multimodal human factors preprocessed dataset. figshare. Dataset. https://doi.org/10.6084/m9.figshare.22192831.v3 (2023).

Tao, X. et al. Driving behaviour multimodal human factors eye tracking dataset. figshare. Dataset. https://doi.org/10.6084/m9.figshare.22192963.v2 (2023).

Li, W. et al. A multimodal psychological, physiological and behavioural dataset for human emotions in driving tasks. Scientific Data.

Renton, A. I., Painter, D. R. & Mattingley, J. B. Optimising the classification of feature-based attention in frequency-tagged electroencephalography data. Scientific Data.

Chaumon, M., Bishop, D. V. & Busch, N. A. A practical guide to the selection of independent components of the electroencephalogram for artifact correction. Journal of Neuroscience Methods 250, 47–63, https://doi.org/10.1016/j.jneumeth.2015.02.025. Cutting-edge EEG Methods (2015).

Fisher, R. The use of multiple measurements in taxonomic problems. Annals of Eugenics 7, 179–188 (1936).

Zhou, Z.-H. Machine Learning, 1 edn (Springer Singapore, 2021).

Nacpil, E. J. C., Wang, Z., Yan, Z., Kaizuka, T. & Nakano, K. Surface electromyography-controlled pedestrian collision avoidance: A driving simulator study. IEEE Sensors Journal 21, 13877–13885 (2021).

Ramanishka, V., Chen, Y.-T., Misu, T. & Saenko, K. Toward driving scene understanding: A dataset for learning driver behavior and causal reasoning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 7699–7707 (2018).

Neale, V. L., Dingus, T. A., Klauer, S. G., Sudweeks, J. & Goodman, M. An overview of the 100-car naturalistic study and findings. National Highway Traffic Safety Administration, Paper 5, 0400 (2005).

Zhang, Y., Li, J. & Guo, Y. Vehicle driving behavior. IEEE Dataport https://doi.org/10.21227/qzf7-sj04 (2018).

Yuksel, A. S. & Atmaca, Ş. Driving behavior dataset. Mendeley Data https://doi.org/10.17632/jj3tw8kj6h.3 (2021).

Ma, Y., Li, W., Tang, K., Zhang, Z. & Chen, S. Driving style recognition and comparisons among driving tasks based on driver behavior in the online car-hailing industry. Accident Analysis & Prevention 154, 106096 (2021).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. NSFC62227801, 61925105 and 62306164) and the Xplore Prize 2021 in information and electronics technologies.

Author information

Contributions

X.M.T. designed the study, supervised the work, acquired funding, and edited the manuscript. D.C.G. designed the study, collected and analysed the data, and wrote the manuscript. W.Q.Z. designed the study, collected and organised the data, and wrote the manuscript. T.Q.L. designed the study, analysed the data, and wrote the manuscript. B.D. supervised the study and edited the manuscript. S.H.Z. supervised the study and edited the manuscript. Y.J.Q. supervised the study and edited the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tao, X., Gao, D., Zhang, W. et al. A multimodal physiological dataset for driving behaviour analysis. Sci Data 11, 378 (2024). https://doi.org/10.1038/s41597-024-03222-2

DOI: https://doi.org/10.1038/s41597-024-03222-2