Abstract

The Tactile Internet aims to advance human-human and human-machine interactions that also utilize hand movements in real, digitized, and remote environments. Attention to older generations is necessary to make the Tactile Internet age-inclusive. We present the first age-representative kinematic database consisting of various hand gesturing and grasping movements performed at individualized paces, thus capturing naturalistic movements. We make this comprehensive database of kinematic hand movements across the adult lifespan (CeTI-Age-Kinematic-Hand) publicly available to facilitate a deeper understanding of intra-individual and inter-individual variability in hand kinematics, with a particular focus on age-related differences. The core of the database contains participants’ hand kinematics recorded with wearable resistive bend sensors, individual static 3D hand models, and all instructional videos used during data acquisition. Sixty-three participants aged 20 to 80 years performed six repetitions of 40 different naturalistic hand movements at individual paces. This unique database with data recorded from an adult lifespan sample can be used to advance machine-learning approaches in hand kinematic modeling and movement prediction for age-inclusive applications.


Background & Summary

Hand movements serve prominent functions in how humans interact with the environment and communicate with others. In recent years, humans have increasingly used their hands not only in the real world but also in different types of digitized multimedia environments, such as virtual or augmented reality1,2,3. Because hand movements serve as a crucial interaction interface, hand kinematics need to be well tracked5,6, and in some cases modeled7,8,9 or predicted10,11,12, to make use of them in such scenarios as well as in digitally transmitted remote human-machine or human-human interactions through the Tactile Internet (TI)4. Although several useful databases of hand movements13,14,15,16,17 exist, most come with certain limitations. The present database aims to improve on several of these aspects.

First, to increase generalizability to a broader population of users, the data should be representative, particularly with regard to age inclusion18. To this end, sensor data of various hand movements should be recorded from potential user samples covering a sufficiently wide range of the adult lifespan. Including data from middle-aged and older adults is important because aging research shows that brain aging contributes to age-related impairments in executing and perceiving sensorimotor movements19. Specifically, age-comparative studies found slower movement time20,21, reduced strength, dexterity, and sensation22, as well as reduced movement precision and independence of finger movements23, smaller grasp aperture21, and lower movement stability during reaching24 in older compared to young adults. Furthermore, there are indications that these age-related differences observed in real settings may also carry over into virtual environments25. Given rapid population aging worldwide26, age-adjusted designs of digital devices and software for human-machine or human-human interactions in virtual and remote environments are crucial18. Lastly, although about 90% of the population worldwide is right-handed27,28 and performs manual tasks faster and more precisely with the right hand, data from left-handed participants should also be included16 so as not to neglect applications for left-handed individuals.

Second, in order to map a wide array of hand movements, it is crucial to include basic finger movements, hand postures and wrist movements, as well as different grasp and functional movements. Here, the extensive Ninapro Project database13,17 is notable. Importantly, cross-referencing the grasp movements with established grasp taxonomies29,30 allows for proper cross-validation and ensures the inclusion of a broad range of different grasp types, including distal, cylindrical, spherical, and ring grasps30. Figure 1 depicts the selection of all hand movements included in the present work (the movement naming convention of the Ninapro Project database was maintained13 for consistency across databases).

To improve the performance accuracy of machine-learning algorithms for recognizing, classifying, and predicting natural hand movements, we adapted the acquisition protocol in terms of movement instructions. Previous work confirmed that videos are an adequate modality for instructing hand movements31. However, in order to capture potential intra-individual and inter-individual differences in movements, participants in our database performed each movement naturally at their individual pace after the instruction video was shown, instead of merely mimicking the movement in temporal synchrony with the video. Moreover, it is helpful to also include individual anthropometric measures in the database, which were not available in previous databases. These measures allow for improved motion analysis, synthesis, and animation. Similarly, these data are helpful in accounting for a potential technical source of data variability, namely sensor positioning, which is associated with individual differences in hand anthropometry. Furthermore, the anthropometric data could be used for other research inquiries, such as the ergonomic design of hand-held devices or datagloves, and forensic anthropology.

Taken together, whereas the Ninapro Project database13,17 is currently considered a benchmark of hand-movement data, with the CeTI-Age-Kinematic-Hand database we provide significant extensions to existing datasets in several aspects, by including (i) the first data from underrepresented members of the general population that cover a broad continuous adult age range from 20 to 80 years, (ii) novel anthropometric measures, (iii) stimulus videos for reproducibility, and (iv) an improved acquisition protocol that facilitates recording of naturalistic individual hand movements. These features are instrumental for developing age-inclusive technologies.

Methods

Participants

Data were recorded from sixty-three participants (33 female/30 male, MAge = 47.8 ± 18.7 years, 6 left-handed) in three continuous age groups covering the age range from 20 to 80 years (see Table 1 for details on demographic and anthropometric measurements). All participants provided written informed consent, including consent to the public sharing of the study data. The study was approved by the Ethical Committee of the Technische Universität Dresden (SR-EK-5012021) and was conducted in accordance with the Declaration of Helsinki. The data were pseudonymized, and a unique ID was assigned to each participant. Questionnaire data were collected and managed using the REDCap electronic data capture tools32,33 hosted at Technische Universität Dresden.

All participants reported being healthy, with normal or corrected-to-normal vision and hearing, as well as normal tactile sensation. Participants were cognitively intact, asymptomatic adults without occupational or recreational exposure to highly repetitive or strenuous hand exertions (e.g., repeated forceful dynamic grasping or prolonged static holds). Any individual history of hand, forearm, elbow, neck, or shoulder (i.e., upper limb) problems was reported and recorded. Furthermore, the Edinburgh inventory34 was used to determine each participant’s handedness score.

Acquisition setup

Stimulus videos

The stimulus videos of the hand movements were recorded using a Sony ILCE-6500 camera at a frame rate of 29.97 frames per second (fps) and a spatial resolution of 3840 × 2160 pixels. The camera was mounted on a tripod approximately 1.5 m from the performer. All videos were recorded under the same artificial lighting conditions in front of a white background. The performer wore neutral clothes and performed all movements with the right hand; only the performer’s arm and hand were recorded. The recorded movements belonged to three categories adapted from the Ninapro database13 and described in the Background & Summary (i.e., A: basic finger movements, B: hand postures and wrist movements, C: grasps and functional movements; see Fig. 1 for their actionIDs and static images of the recorded movements, and Table S2 in the Supplementary Material for detailed descriptions). In postproduction, the videos were edited with iMovie versions 10.1.14 and 10.3.5 (https://www.apple.com/imovie/). The videos were cut to a duration of 5 s for categories A and B and 10 s for category C. The sound was removed from the video clips, and a gray filter (preset grayscale template) was applied. Additionally, all videos were flipped along the vertical axis (i.e., mirrored left to right) for use as stimuli for left-handed participants. Thus, instruction videos are available showing each action performed with either the right or the left hand.
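Flipping a video along the vertical axis amounts to reversing the column order of every frame. The sketch below illustrates this with NumPy, assuming the frames have already been decoded into an array of shape (n_frames, height, width[, channels]); decoding and re-encoding the video (the authors used iMovie) are outside its scope, and the function name is hypothetical.

```python
import numpy as np

def mirror_frames(frames):
    """Mirror every frame left to right, i.e., flip it about the vertical axis.

    frames: array of shape (n_frames, height, width) or
            (n_frames, height, width, channels).
    """
    frames = np.asarray(frames)
    # Axis 2 is the image width, so reversing it swaps left and right
    # while leaving the frame order, rows, and color channels untouched.
    return np.flip(frames, axis=2)
```

Applying the function twice returns the original frames, which is a quick sanity check that only the horizontal orientation changes.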

Anthropometric data

Hand anthropometry was recorded for all participants using a light-based 3D scanner (Artec EVA and Artec Studio version 15, www.artec3d.com). The Artec EVA is a portable handheld 3D scanner that captures 16 frames per second with ± 0.1 mm accuracy and 0.2 mm resolution. The positions of the joints and other characteristic hand landmarks were captured in a 3D scan of the participant’s dominant hand, using geometry tracking, in a static flat pose with all fingers spread (flat spread hand; see Fig. 5). For each participant, a final 3D hand model was created with the automatic processing mode and saved in “.stl” format in Artec Studio version 15. The models were then registered with the 3-2-1 method, cropped, and cleaned with Geomagic Wrap version 2017.0.2.18 (https://oqton.com/geomagic-wrap/) and saved as preprocessed models with the suffix _reg.stl. Additionally, key hand anthropometric measurements (i.e., hand length, hand width, and wrist width) were taken manually with a generic measuring tape and a digital caliper (stated accuracy ± 0.2 mm), following the anthropometric measurement template of the KIT Whole-Body Human Motion Database35. Hand length was defined as the distance between the wrist and the tip of the middle finger. Wrist width was measured at the level of the ulnar styloid, and hand width at the first knuckles (metacarpophalangeal joints).

Kinematic data

Kinematic hand movements were recorded for all participants using a 22-sensor CyberGlove III dataglove (CyberGlove Systems LLC, www.cyberglovesystems.com). Depending on the participant’s handedness, either a left or a right glove was used for data recording. Data from the CyberGlove were transmitted via a USB interface once every 100 ms (the highest rate the measurement system could support). Communication with the CyberGlove was implemented through gladius, a purpose-made application with a graphical user interface (GUI) written for x86-64 Debian-based systems using the C++20 standard36 with gtkmm and gstreamermm. The connection to the CyberGlove employed the libglove library version 0.6.2 (https://libglove.sourceforge.net). Due to the legacy nature of the libglove library, it was compiled on an Ubuntu Jaunty Jackalope 9.04 (2009) virtual machine using boost 1.39 and linked to the main application (gladius) as a static library. During measurements, the state of the glove was polled on a separate thread using the local serial interface of the libglove library (LocalCyberGlove) to achieve continuous data retrieval. On the client side, each sensor state was represented as a double-precision floating-point value in degrees. On the hardware side, the CyberGlove acquires each sensor reading as an integer in the range 0–255 via analog-to-digital conversion of the sensor’s output voltage and then converts these values into angles expressed in radians37.
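Polling the glove on a separate thread, as described above, is a producer pattern: a background loop reads the sensors at a fixed period and hands timestamped samples to the consumer through a thread-safe queue. The following Python sketch illustrates the pattern only; it is not the C++/libglove implementation in gladius. `read_sensors` is a hypothetical stand-in for the libglove call, the polling period is a free parameter rather than a device specification, and the monotonic-clock timestamps are an assumption.

```python
import queue
import threading
import time

class GlovePoller:
    """Poll a sensor-reading callable on a background thread."""

    def __init__(self, read_sensors, period_s=0.1):
        self._read_sensors = read_sensors   # hypothetical stand-in for the glove API
        self._period_s = period_s
        self._stop = threading.Event()
        self.samples = queue.Queue()        # thread-safe hand-off to the consumer
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()

    def stop(self):
        self._stop.set()
        self._thread.join()

    def _run(self):
        while not self._stop.is_set():
            timestamp_ms = time.monotonic() * 1000.0
            self.samples.put((timestamp_ms, list(self._read_sensors())))
            # wait() returns early as soon as stop() sets the event,
            # so shutdown does not have to wait a full period.
            self._stop.wait(self._period_s)
```

Keeping acquisition on its own thread means the GUI thread (instructions, timers, video playback) never blocks on the serial read.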

To facilitate the comparability of measurements taken from different individuals, a mandatory calibration procedure was performed for each participant before data collection. During calibration, each participant was prompted to hold a specific gesture for 100 samples (i.e., the palm placed on a flat surface, with fingers extended and the thumb perpendicular to the rest of the fingers; see Fig. 4), after which the average value of each of the 22 sensors was calculated and used as its baseline (i.e., the origin, 0.0). After successful calibration, subsequent readouts of each sensor were offset by this baseline. To ensure consistency, calibration could only be completed if the hand was kept motionless: if any sensor value varied by more than 25 degrees during the 100 calibration samples, the calibration was restarted. In addition to this initial calibration prior to data acquisition, a well-established post-processing calibration method was employed to re-calibrate the data (see the section below).
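The offset calibration described above can be sketched in a few lines of NumPy: average the 100 calibration samples per sensor, reject the calibration if the hand moved, and subtract the averages from later readings. The text does not specify exactly how the 25-degree criterion was computed; this sketch assumes it is the peak-to-peak range of each sensor over the calibration samples. Function names are hypothetical.

```python
import numpy as np

N_CALIB_SAMPLES = 100   # samples held in the calibration pose
MAX_RANGE_DEG = 25.0    # assumed: allowed peak-to-peak range per sensor

def compute_offsets(calib_samples):
    """Return per-sensor offsets, or None if the hand moved (restart calibration).

    calib_samples: (N_CALIB_SAMPLES, n_sensors) readings in degrees.
    """
    calib = np.asarray(calib_samples, dtype=float)
    if calib.shape[0] != N_CALIB_SAMPLES:
        raise ValueError("expected %d calibration samples" % N_CALIB_SAMPLES)
    spread = calib.max(axis=0) - calib.min(axis=0)
    if np.any(spread > MAX_RANGE_DEG):
        return None
    return calib.mean(axis=0)

def apply_offsets(samples, offsets):
    """Express readings relative to the calibration pose (0.0 = baseline)."""
    return np.asarray(samples, dtype=float) - offsets
```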

Post-processing re-calibration of kinematic data

Although our calibration process, performed prior to data collection, is designed to be brief and simple, it is important to acknowledge that it tends to yield results of limited precision (see Supplementary Material Figs. S1–S5). Ideally, a comprehensive, participant-specific calibration procedure incorporating both gain and offset adjustments should be employed. The offset parameter determines the starting position of each joint’s range, representing the baseline sensor output at the resting position, whereas the gain parameter determines the extent of the permissible range of motion for each joint, considering anatomical constraints and individual hand anatomy. However, both the proprietary CyberGlove software and established, verified protocols38 for such detailed calibration entail a complex and cumbersome process, rendering them unsuitable for lengthy data collection protocols or general users. To address this challenge, we implemented a post-processing calibration method using the open-source data and protocol provided by Gracia-Ibáñez and colleagues38,39. Specifically, the mean gain values for each sensor, as well as specific cross-coupling corrections, were derived from the BE-UJI code39 and used to correct the sensor data, yielding coherent and realistic values. By adopting this alternative calibration method, we aim to mitigate the limitations of our brief calibration process and enhance the reliability and validity of the obtained data (see also17,40,41). Within the CeTI-Age-Kinematic-Hand database42, both the offset-only calibrated and the re-calibrated data are published and available to users (see Fig. 3). This approach allows for greater accessibility and ease of use, particularly in the context of extensive data collection protocols, and supports researchers who may want to investigate alternative post-processing approaches.
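Conceptually, a gain and cross-coupling correction of offset-calibrated angles can be written as a linear map: each corrected angle is the sensor’s own reading times a gain, plus weighted contributions from coupled sensors. The sketch below shows only that generic linear form. It is not a transcription of the BE-UJI code39, and the gains and coupling coefficients in the test are hypothetical placeholders, not the published values.

```python
import numpy as np

def recalibrate(angles, gains, coupling=None):
    """Apply per-sensor gains plus linear cross-coupling corrections.

    angles:   (n_samples, n_sensors) offset-calibrated angles in degrees
    gains:    (n_sensors,) multiplicative gains
    coupling: optional {(target, source): k} meaning
              corrected[target] += k * angles[source]
    """
    angles = np.asarray(angles, dtype=float)
    G = np.diag(np.asarray(gains, dtype=float))
    for (target, source), k in (coupling or {}).items():
        G[target, source] += k
    # corrected[n, i] = sum_j G[i, j] * angles[n, j]
    return angles @ G.T
```

Expressing the correction as one matrix makes it cheap to apply to whole recordings and easy to swap in alternative coefficient sets when comparing post-processing approaches.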

Acquisition procedure

Participants sat in a chair at a desk, adjusted for maximum comfort, with their arms resting on the armrests. A PC screen (ASUS VG248QE, 24 inch, max. refresh rate 144 Hz) in front of the participant, connected to the acquisition laptop, displayed visual stimuli (instruction text and videos) for each movement exercise, while the laptop recorded data from the CyberGlove (i.e., the kinematic data acquisition device) worn on the participant’s dominant hand. Before data recording started, participants were familiarized with the CyberGlove and given a general overview of the types of movements they had to perform. They were informed about how the movements and anthropometry of their dominant hand would be recorded.

A recording session with the CyberGlove started with a start panel in the GUI, where the participant-specific ID (participantID), session ID, CyberGlove handedness, file directory, demographic (age, sex), and anthropometric (handedness) information were entered, and the CyberGlove was calibrated. During calibration, participants placed their gloved hand flat on the desk surface with the thumb spread perpendicular to the other fingers (see Fig. 4). After completing the calibration procedure, participants went through a training session, during which all stimulus videos were shown in the same fashion as in the subsequent experimental session. No kinematic data were recorded during training, but participants were encouraged to familiarize themselves with the movements and the stimulus videos. No time restrictions were imposed during training. An experimenter was present in the room to answer any questions in case of uncertainty.

Next, participants were guided through the experimental session block by block. Each block presented one of the three hand movement categories (i.e., A: basic finger movements, B: hand postures and wrist movements, C: grasps and functional movements); that is, each block contained all movements of a given category, performed sequentially across trials in a predefined order (see Fig. 1). Each block contained two repetitions of the movements, and each block was recorded three times, resulting in a total of six repetitions of each movement. Such repetition data provide a basis for understanding age-related and individual differences in intra-individual variability. Between blocks, participants were allowed short rest periods to avoid muscular and cognitive fatigue.
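The repetition arithmetic above (two repetitions per block, three recordings of each block, hence six repetitions per movement) can be made concrete with a small schedule builder. The interleaving used here, where the whole predefined sequence of a category is run twice within a block, and the order of categories are assumptions, as are the short action lists in the test; the authoritative order is the one given in Fig. 1.

```python
from collections import Counter

def build_schedule(actions_by_category, block_runs=3, reps_per_block=2):
    """List (category, block, action) trials.

    Assumption: within a block, the category's predefined action sequence
    is run reps_per_block times in a row.
    """
    trials = []
    for category, actions in actions_by_category.items():
        for block in range(block_runs):          # block index starts at 0
            for _ in range(reps_per_block):
                for action in actions:           # predefined order of the category
                    trials.append((category, block, action))
    return trials
```

Counting the actions in the resulting list, for example with `Counter(action for _, _, action in schedule)`, confirms that every movement occurs 2 × 3 = 6 times.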

The top inset in Fig. 2 depicts the structure of a trial. In each trial, participants were asked to mimic the movement shown in the short instruction video clip of that trial. The temporal structure of a trial was indicated to participants on the computer screen by written instructions and a timer, accompanied by a color-coded horizontal bar that expanded horizontally at the top of the screen (see top inset of Fig. 2). Each trial started with a 1 s rest period (pre-movement rest), followed by the movement instruction video for 5 s for movements of categories A and B (10 s for trials in category C). After the instruction video, a 3 s countdown with a dynamic horizontal bar displayed in the upper center of the screen signaled participants to get ready for movement execution. The horizontal bar then turned green, indicating the start of movement execution (performance); the green bar expanded gradually to indicate the recording duration. After the movement was performed, there was a post-movement rest period of 5 s. Importantly, no stimulus video was shown during movement performance, allowing each participant to execute the movement naturally at an individual pace.
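The trial structure above can be summarized as a list of phase onsets and offsets. The text does not state the length of the movement performance window, so `performance_s` is left as a free parameter; the value used in the test is arbitrary.

```python
def trial_timeline(category, performance_s):
    """Return (phase, start_s, end_s) tuples for one trial.

    performance_s is a free parameter: the recording window length is not
    specified in the text, so any value used here is an assumption.
    """
    video_s = 10.0 if category == "C" else 5.0   # 5 s for A and B, 10 s for C
    phases = [
        ("pre-movement rest", 1.0),
        ("instruction video", video_s),
        ("countdown", 3.0),
        ("movement performance", performance_s),
        ("post-movement rest", 5.0),
    ]
    timeline, t = [], 0.0
    for name, duration in phases:
        timeline.append((name, t, t + duration))
        t += duration
    return timeline
```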

Schematic representation of the experimental setup for movement recording. A PC screen, connected to the data acquisition laptop, displayed the GUI, which guided the participant through the experiment (shown here is the setup for recording from a right-handed participant). Top inset: An instructional video was shown in each trial for the respective movement to be performed. After viewing the instruction video, the participants had to execute the movement at their own individual naturalistic movement speed during the movement performance period while wearing a CyberGlove on their dominant hand (see descriptions in the text for other details).

To ensure consistency, participants were instructed to place their gloved hand at a designated resting position marked on the table during the experiment (see Fig. 2). For movements involving interactions with objects, a second location approximately 20 cm in front of the hand-resting position was marked as the object area. In trials involving grasping or functional movements, participants were asked to lift the given object smoothly to a height of approximately 5 cm above the desk surface and to keep their grasp and lifting movement consistent throughout. The setup and procedure were designed to ensure measurement consistency and quality across trials and participants. For a detailed description of all recorded movements, see also Table S2 in the Supplementary Material.

Data Records

The database adheres to the BIDS43 standard, with our own extensions to accommodate kinematic and anthropometric data. It includes raw and re-calibrated kinematic data (“sensor data”) as well as anthropometric data (“3D data”) and is available in the figshare repository CeTI-Age-Kinematic-Hand42. Following the BIDS convention, the data records have the folder structure shown in Fig. 3, which begins with one subfolder for each of the 63 participants, named with the participant-specific ID (participantID; e.g., S3, S6, S7), together with various metafiles.

Folder structure of the CeTI-Age-Kinematic-Hand database. The left-most column shows the content of the entire database at the highest level. The 2nd column represents the highest level of the data folder structure, the 3rd column represents the level of an individual participant, and the right-most column represents the level of a specific participant’s re-calibrated kinematic data. Color-coded insets show selected motion meta files and sample 3D and kinematic data. The annotated screenshot provides an overview of the organizational hierarchy in the database.

For instance, the participants.tsv file gives a tabular overview of the participant sample (participantID, age group, sex, handedness-glove, etc.), whose columns are, in turn, defined in the participants.json file. The files dataset_description.json and README.txt provide an overview of all relevant database information and instructions, and the file CHANGES.txt contains the database history.
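Because participants.tsv is a tab-separated table, the standard-library `csv` module is enough to load it and tabulate the sample. The column names in the test (`participant_id`, `age_group`, `sex`) are hypothetical; the actual column names should be taken from participants.json.

```python
import csv
from collections import Counter

def read_participants(lines):
    """Parse tab-separated participant rows into a list of dicts.

    lines: an open file object or any iterable of text lines.
    """
    return list(csv.DictReader(lines, delimiter="\t"))

def count_by(rows, column):
    """Count participants per value of one column (e.g., age group)."""
    return Counter(row[column] for row in rows)
```

A typical call would be `with open("participants.tsv", newline="", encoding="utf-8") as f: rows = read_participants(f)`.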

Inside each participant subfolder, there are three further subfolders: kinem (raw kinematic data), kinem_recalibrated (re-calibrated kinematic data), and anthrop (anthropometric data). Additionally, each database entry (participant) has a meta file (e.g., meta-S3.json) containing the participant-specific information collected by the GUI and via REDCap (i.e., demographic and anthropometric data).
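The per-participant layout above maps directly onto a small `pathlib` helper that collects a participant’s files by type. This is a sketch: the function name is hypothetical, and placing the meta file directly inside the participant folder is an assumption based on the description of Fig. 3.

```python
from pathlib import Path

def index_participant(root, participant_id):
    """Collect the files of one participant folder by data type."""
    pdir = Path(root) / participant_id
    return {
        "raw": sorted((pdir / "kinem").glob("*.csv")),
        "recalibrated": sorted((pdir / "kinem_recalibrated").glob("*.csv")),
        "anthrop": sorted((pdir / "anthrop").glob("*.stl")),
        "meta": pdir / f"meta-{participant_id}.json",   # assumed location
    }
```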

Finally, the stimulus video set (“stimulus material”) is provided in a folder called Stimulus, and the custom code used for the Technical Validation in a folder called Code.

Sensor data

Table 2 provides an illustrative example of how the names of the kinematic data files encode the performed hand movement (i.e., actionID in categories A, B, C as shown in Fig. 1), a running counter (trialID, labeled “ex” followed by its numerical value), the data type (offset-calibrated, or “recalibrated”), the block number, and the participantID (labeled “S” followed by the numerical value of the specific ID). Note that indexing for trialID and block starts at 0; that is, the first trial or block has index 0, the second has index 1, and so on. For example, the file name of the first entry in Table 2, A1_ex0-0-S3.csv (see also Fig. 3), signifies that it contains offset-calibrated data from the first trial (‘ex0’) of the first block (‘0’) for action A1 (‘A1’), performed by participant S3 (‘S3’). All files follow this naming scheme, with the labels replaced by the corresponding values for actionID, trialID, datatype (for re-calibrated data only), block, and participantID.
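The naming scheme above lends itself to a regular-expression parser. The offset-calibrated pattern follows the example A1_ex0-0-S3.csv directly; the position of the “recalibrated” label (assumed here to sit between trialID and block, e.g. A1_ex0-recalibrated-0-S3.csv) is an assumption and should be checked against Table 2 before relying on it.

```python
import re

# Assumption: the "recalibrated" label sits between trialID and block.
FILENAME_RE = re.compile(
    r"^(?P<action>[ABC]\d+)_ex(?P<trial>\d+)-"
    r"(?:(?P<datatype>recalibrated)-)?"
    r"(?P<block>\d+)-(?P<participant>S\d+)\.csv$"
)

def parse_kinematic_filename(name):
    """Split a kinematic file name into its labeled parts."""
    m = FILENAME_RE.match(name)
    if m is None:
        raise ValueError(f"unrecognized file name: {name}")
    return {
        "actionID": m.group("action"),
        "trialID": int(m.group("trial")),      # 0-based
        "block": int(m.group("block")),        # 0-based
        "participantID": m.group("participant"),
        "recalibrated": m.group("datatype") is not None,
    }
```

Raising on unrecognized names, rather than silently skipping them, makes it obvious if the assumed pattern does not match the released files.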

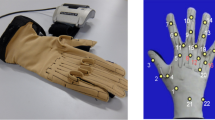

Within a given “*.csv” action motion file, the rows represent samples recorded at 39.6 fps during the movement performance period (see Fig. 2, top inset, “movement performance”). Timestamps were recorded as total elapsed milliseconds together with the date of data acquisition. Columns represent the CyberGlove sensorIDs 1–22 as depicted in Fig. 4. A detailed listing of the motion recording sensors and sensor descriptions can be found in the metafile motion_channels.tsv (see purple inset in Fig. 3) and Table S1 in the Supplementary Material.
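Because timestamps are stored as elapsed milliseconds, the effective sampling rate and the duration of a recording can be checked directly from that column. The sketch assumes the timestamp column has already been extracted as a numeric array; its exact column name should be looked up in the file header or motion_channels.tsv.

```python
import numpy as np

def sampling_rate_hz(timestamps_ms):
    """Estimate the sampling rate from elapsed-millisecond timestamps."""
    t = np.asarray(timestamps_ms, dtype=float)
    if t.size < 2:
        raise ValueError("need at least two timestamps")
    # The median interval is robust to occasional delayed samples.
    return 1000.0 / np.median(np.diff(t))

def recording_duration_s(timestamps_ms):
    """Time between the first and the last sample, in seconds."""
    t = np.asarray(timestamps_ms, dtype=float)
    return (t[-1] - t[0]) / 1000.0
```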

Resting (right) hand position with superimposed CyberGlove III sensorID labels. At the start and end of each trial, participants assumed the resting position, with the hand placed flat on the table, fingers held together, and the thumb perpendicular to the palm. This is also the gesture used for the calibration procedure. The sensor labels corresponding to the 22 columns (1–22; sensorID; abduction sensors in purple and bending sensors in teal) of the sensor data are superimposed.

3D Data

For each participant, there is an anthropometric database entry that contains a preprocessed 3D model (as a “*.stl” file) of the participant’s dominant hand (i.e., the hand used to record the kinematic data with the CyberGlove). For example, S3_reg.stl is the preprocessed 3D model based on the scanned hand anthropometry of participant S3, depicted in Fig. 5.
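STL files can be read without third-party mesh libraries. The sketch below assumes the binary STL variant (an 80-byte header, a little-endian 32-bit triangle count, then 50 bytes per triangle); ASCII STL files would need a different parser. The axis-aligned extent it computes is only a rough sanity check of model size in the model’s own units and is not a substitute for the manual anthropometric measurements.

```python
import struct
import numpy as np

# One binary STL triangle: normal (3 floats), 3 vertices (9 floats), attribute (uint16).
_TRIANGLE = struct.Struct("<12fH")

def read_binary_stl_vertices(fileobj):
    """Return an (n_triangles * 3, 3) array of vertex coordinates."""
    fileobj.read(80)                                   # free-form header
    (n_triangles,) = struct.unpack("<I", fileobj.read(4))
    vertices = np.empty((n_triangles * 3, 3))
    for i in range(n_triangles):
        values = _TRIANGLE.unpack(fileobj.read(_TRIANGLE.size))
        vertices[3 * i:3 * i + 3] = np.reshape(values[3:12], (3, 3))
    return vertices

def bounding_box_extent(vertices):
    """Axis-aligned extent of the mesh along x, y, and z."""
    return vertices.max(axis=0) - vertices.min(axis=0)
```

Usage: `with open("S3_reg.stl", "rb") as f: extent = bounding_box_extent(read_binary_stl_vertices(f))`.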

Stimulus material

Lastly, the stimulus videos used to instruct all 40 hand movements for the right and left hand are also included in the database. Each video file is named according to the respective actionID (see Fig. 1 and Table S2 in Supplementary Material) and handedness (R: right, L: left). For instance, the file A1_R.mp4 contains the stimulus video for the hand movement with actionID A1, flexion of the index finger, performed with the right hand.

Technical Validations

As technical validations of the CeTI-Age-Kinematic-Hand database, we examined the recorded re-calibrated kinematic data with respect to several key experimental conditions and used machine learning to classify the recorded hand movements. The corresponding plots for the unadjusted offset-calibrated data are included in the Supplementary Material.

Hand kinematics by experimental factors and individual differences

This section presents descriptive analyses of the recorded data by experimental condition, as a quality control of the database. For this purpose, hand movement actions, participant age groups, and the range of joint angles assessed with the dataglove as an estimate of hand kinematics (see for example17,44) were used to verify that the recorded kinematic data vary with experimental factors and reflect individual and age differences. Furthermore, fatigue or adaptation to the movements45 may be reflected in natural trial-by-trial fluctuations of the recorded movement data; we therefore also examined the data with respect to trial repetitions. To this end, we used Python46 3.8.3 and seaborn 0.12.247 to create violin plots with a kernel bandwidth of 0.2. The results are displayed as violin plots in Figs. 6–9, with the median and the quartiles (1st and 3rd) shown as long and short dashed lines, respectively.
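A plot of this kind can be sketched with seaborn’s `violinplot`. The helpers below reshape per-repetition angle arrays into the long format seaborn expects and compute the quartiles that `inner="quartile"` draws. They assume the angles for each repetition have already been pooled into one array; the function names are hypothetical. In seaborn 0.12.x a scalar `bw` is a scale factor for the kernel bandwidth; seaborn 0.13 and later replace it with `bw_method` (and `bw_adjust`). The plotting libraries are imported inside the plotting function so the helpers also work without them.

```python
import numpy as np

def long_format(angles_by_rep):
    """Flatten {repetition: angle array} into parallel columns for plotting."""
    reps, values = [], []
    for rep, arr in angles_by_rep.items():
        flat = np.ravel(np.asarray(arr, dtype=float))
        reps.extend([rep] * flat.size)
        values.extend(flat.tolist())
    return {"repetition": reps, "angle": values}

def quartiles(values):
    """1st quartile, median, and 3rd quartile of the pooled values."""
    return np.percentile(np.ravel(values), [25, 50, 75])

def plot_violins(angles_by_rep, ax=None):
    import pandas as pd
    import seaborn as sns
    df = pd.DataFrame(long_format(angles_by_rep))
    # seaborn 0.12.x API: scalar bw scales the kernel bandwidth,
    # inner="quartile" draws the median and 1st/3rd quartile lines.
    return sns.violinplot(data=df, x="repetition", y="angle",
                          bw=0.2, inner="quartile", ax=ax)
```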

Violin plots displaying the angle distribution of the recorded kinematic data across all 22 sensors and participants over repetitions for the three movement categories: basic finger movements (a), hand postures and wrist movements (b), and grasping and functional movements (c). The short dashed lines represent the 1st and 3rd quartiles, whereas the long dashed lines indicate the median of the distributions.

Violin plots displaying the joint angle distributions separately for each of the 22 sensors: aggregated data across all hand movements (a), basic finger movements in category A (b), hand postures and wrist movements in category B (c), and grasping and functional movements in category C (d). The horizontal short and long dashed lines represent the quartiles and the median of the distributions. Global active ranges of motion (AROMs) reported in prior research48 are marked with red lines; sensors without red lines have no previously published AROMs.

Joint angle distributions of kinematic data over movement repetitions

First, we validated the recorded data across all participants and sensors with respect to movement repetitions, separately for each of the three action categories. Based on previous validations of the Ninapro database17,44, we expected no substantial differences in the distributions of joint angles acquired by the CyberGlove over the six movement repetitions. Our acquisition protocol was designed to minimize fatigue and movement adaptation while still obtaining a sensible number of movement repetitions. Of note, unlike previous work13, in which hand movements were recorded while participants simultaneously viewed the stimulus videos, participants in the present database executed the movements only after seeing the respective instruction videos. Thus, the movement executions in the CeTI-Age-Kinematic-Hand database are more individualized and naturalistic. A setup of this kind can increase the generalizability of the recorded data and provide a broader variety of movement patterns for developing hand models or training machine learning (ML) algorithms to classify hand movements. The results are shown in the three panels of Fig. 6: each movement category exhibits a distinct distribution shape that is maintained over all six repetitions. Overall, the range of motion appears wide, showing that participants were not restricted in their movements, and consistent across all repetitions, indicating little change in intrinsic characteristics over time (e.g., adaptation, fatigue) at the group level.

Joint angle distributions of kinematic data over sensors by movements

Second, we validated the data at the level of individual sensors by movement. Given the wide selection of hand movements (see Fig. 1), we expected the joint angle distributions of the recorded kinematic data to differ between movements. By design, the database includes different movement categories, which themselves involve different muscles, different fingers, and manipulations of different objects (see also grasp taxonomies, e.g.,29,30).

The distributions of joint angles aggregated across all movement categories and participants at the sensor level are displayed in Fig. 7a. They show that the joint angles recorded by the 22 sensors varied substantially during the recorded exercises. Because the CyberGlove was calibrated and the neutral posture was maintained at both the start and the end of each movement execution for each participant, the depicted angle distributions are centred around zero. In addition, Fig. 7b–d show the same validation separately for the three movement categories (A, B, and C). These plots show the variability and average movement angles of the joints corresponding to their respective sensors for all movements in the same category. Of note, one can see, for example, a shift in the thumb joint angle distributions as the hand movements change from individual finger flexion exercises (see Fig. 7b) to grasp-centered exercises (see Fig. 7d). Moreover, in the panels depicting individual joint angles (Fig. 7), red horizontal lines mark the average active ranges of motion (AROMs) previously documented in the literature48. Previous studies have demonstrated that these ranges closely align with functional ranges of movement, with a reported deviation of up to 28° during activities of daily living41,48. As evident from these figures, the majority of our recorded and re-calibrated joint angles fall well within these established ranges.
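The visual comparison with published AROMs can be complemented by a simple count: for each sensor, the fraction of samples whose angle lies within the reference range, optionally widened by a tolerance (for example, to reflect the reported deviation of up to 28°). The range limits are inputs to be taken from the literature48; the values in the test are arbitrary, and sensors without a published AROM are passed as NaN and reported as NaN. This sketch does not reproduce any figure in this work.

```python
import numpy as np

def fraction_within_range(angles, lower, upper, tolerance=0.0):
    """Per-sensor fraction of samples inside [lower - tol, upper + tol].

    angles: (n_samples, n_sensors) re-calibrated angles in degrees
    lower, upper: (n_sensors,) range limits; NaN where no AROM is published
    """
    angles = np.asarray(angles, dtype=float)
    lo = np.asarray(lower, dtype=float) - tolerance
    hi = np.asarray(upper, dtype=float) + tolerance
    inside = (angles >= lo) & (angles <= hi)
    frac = inside.mean(axis=0)
    # Sensors without a reference range are reported as NaN, not as 0.
    frac[np.isnan(lo) | np.isnan(hi)] = np.nan
    return frac
```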

More specifically, Fig. 8 shows the distributions of joint angles for each of the 40 individual hand movements (i.e., actionIDs). While the joint angles of basic finger movements (category A) are mostly centered around 0°, the more complex movements (i.e., hand postures and wrist movements, or functional and grasping movements; categories B and C) show increasingly scattered distributions of joint angles. This is in line with our expectation that these movements are more complex and involve the whole hand, and therefore show greater average angle variability across sensors and participants.

Joint angle distributions of kinematic data over sensors by age groups

Third, given that age-related differences in movement variability have been reported in previous studies20,21,23, we examined the variability of hand kinematics at the sensor level by the participants' age group. Fig. 9 presents the joint angle distributions for the movements within each movement category, separately for the 22 sensors corresponding to specific joints of the hand and for each of the three age groups. Whereas the general trends are comparable, the plots also reveal differences in variability between the age groups.
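One way to summarise such group-wise variability numerically is a per-sensor spread statistic for each age group. This is a minimal sketch under assumptions: the long-format layout and the column names 'age_group', 'sensor' and 'angle' are hypothetical (the released files may be organised differently), and standard deviation and interquartile range are chosen here for illustration rather than taken from the paper.

```python
import numpy as np
import pandas as pd


def angle_spread_by_age_group(df):
    """Per-sensor spread of joint angles within each age group.

    Expects a long-format table with the hypothetical columns 'age_group',
    'sensor' and 'angle' (degrees). Returns one row per (age_group, sensor)
    with the sample standard deviation and the interquartile range (IQR).
    """
    grouped = df.groupby(["age_group", "sensor"])["angle"]
    summary = pd.DataFrame({
        "std": grouped.std(),  # sample standard deviation (ddof=1)
        "iqr": grouped.quantile(0.75) - grouped.quantile(0.25),
    })
    return summary.reset_index()


# Usage with synthetic data: the "old" group is given a larger spread on purpose.
rng = np.random.default_rng(0)
demo = pd.DataFrame({
    "age_group": np.repeat(["young", "old"], 500),
    "sensor": "thumb_mcp",  # hypothetical sensor label
    "angle": np.concatenate([rng.normal(0, 10, 500), rng.normal(0, 20, 500)]),
})
print(angle_spread_by_age_group(demo))
```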

Furthermore, Fig. 10 shows exemplary data for one grasp movement performed by two participants of different ages across movement repetitions. One young (S17) and one old (S23) participant each reached towards and picked up an object (1.5 l standard PET water bottle, 8.6 cm diameter) with the large diameter grasp, lifted it about 5 cm off the table, and put it back on the table. The concatenated raw angle trajectories show all six repetitions of the movement. The data of the old participant (bottom panel) exhibited greater movement variability and longer movement durations.
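A plot like Fig. 10 can be assembled by stacking the repetitions end to end and recording where each one starts; the same bookkeeping yields per-repetition durations. This is a hypothetical helper for illustration: the sampling rate is a caller-supplied parameter because it is not given in this section, and the arrays in the usage lines are synthetic.

```python
import numpy as np


def concatenate_repetitions(repetitions, sampling_rate_hz):
    """Concatenate per-repetition trajectories and report their durations.

    repetitions: list of arrays, each of shape (n_samples_i, n_sensors).
    sampling_rate_hz: recording rate supplied by the caller (assumed value).
    Returns the concatenated array, the sample indices where repetitions 2..n
    begin (positions for separator lines), and each repetition's duration in s.
    """
    if not repetitions:
        raise ValueError("need at least one repetition")
    lengths = [len(r) for r in repetitions]
    trajectory = np.concatenate(repetitions, axis=0)
    boundaries = np.cumsum(lengths)[:-1]  # start index of every repetition after the first
    durations_s = np.asarray(lengths, dtype=float) / sampling_rate_hz
    return trajectory, boundaries, durations_s


# Usage: three synthetic repetitions of 2 sensors at an assumed 10 Hz.
reps = [np.zeros((3, 2)), np.ones((5, 2)), np.full((2, 2), 2.0)]
traj, starts, durations = concatenate_repetitions(reps, sampling_rate_hz=10.0)
print(traj.shape, starts, durations)
```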

Exemplary concatenated angle trajectories for a large diameter grasp movement (actionID C1). The data shown here were recorded from the thumb and index finger sensors (see legend for details). In these recordings, one young participant (top panel) and one old participant (bottom panel) performed all six movement repetitions (Rep; separated by vertical lines).

Movement classification

To test whether the recorded data are of sufficient quality to allow movement classification, the multivariate time series of the kinematic data obtained from the CyberGlove sensors were analysed. Specifically, the objective was to determine whether the different movements within a category could be correctly identified as specific hand movements (i.e., by actionID). The selection of feature extraction and classification algorithms was based on prior wearable-sensor ML work44,49,50,51. Feature extraction involved computing the sensor-wise root-mean-square (RMS), empirical variance (var), and mean of each time series. These features were calculated for each of the 22 sensors and combined to form a comprehensive feature set representing the entire hand motion. Movements within each category were discriminated using four machine learning algorithms: Random Forest52, a 5-nearest-neighbor classifier (KNN)53, linear discriminant analysis (LDA)54, and a support vector classifier (SVC)55. All classifiers were implemented using Python46 3.8.3 and Scikit-learn56 1.0.2 with default settings and were evaluated using 20-fold cross-validation. Classification accuracy and F1-score57 were used as performance metrics and are shown in Table 3. In balanced datasets, which contain an equal number of instances in each class, accuracy is a common measure of classification performance; for imbalanced datasets, however, the F1-score is often used57. Our dataset is slightly imbalanced (see Fig. 11 and Supplementary Table S3) because some kinematic sensor data are missing as a result of occasional erroneous movement executions by participants or technical issues during recording. To adequately evaluate classification performance, both accuracy and F1-score are therefore reported.
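The feature extraction and evaluation described above can be sketched with the same libraries. This is a minimal illustration under stated assumptions, not the authors' script (which is in the Code folder of the database): recordings are assumed to be arrays of shape (n_samples, 22); "empirical variance" is taken as the population variance (ddof=0); the F1-score is macro-averaged over actionIDs, since the averaging scheme is not specified here; and a fixed random_state is added to the Random Forest for reproducibility. The data in the usage lines are synthetic.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_validate
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC


def extract_features(recording):
    """Sensor-wise RMS, variance and mean of one recording.

    recording: array of shape (n_samples, n_sensors), e.g. 22 CyberGlove sensors.
    Returns a 1-D feature vector [RMS..., var..., mean...] of length 3 * n_sensors.
    """
    x = np.asarray(recording, dtype=float)
    rms = np.sqrt(np.mean(x ** 2, axis=0))
    var = np.var(x, axis=0)  # population variance (ddof=0), an assumption
    mean = np.mean(x, axis=0)
    return np.concatenate([rms, var, mean])


def evaluate_classifiers(recordings, labels, n_folds=20, seed=0):
    """Mean cross-validated accuracy and macro-F1 for the four classifiers."""
    X = np.vstack([extract_features(r) for r in recordings])
    y = np.asarray(labels)
    models = {
        "RandomForest": RandomForestClassifier(random_state=seed),
        "KNN": KNeighborsClassifier(n_neighbors=5),  # 5 is also the default
        "LDA": LinearDiscriminantAnalysis(),
        "SVC": SVC(),
    }
    results = {}
    for name, model in models.items():
        # An integer cv with a classifier uses stratified k-fold splits.
        scores = cross_validate(model, X, y, cv=n_folds,
                                scoring=("accuracy", "f1_macro"))
        results[name] = (scores["test_accuracy"].mean(),
                         scores["test_f1_macro"].mean())
    return results


# Usage with synthetic data: 3 hypothetical actionIDs x 30 recordings of variable length.
rng = np.random.default_rng(1)
recs, labs = [], []
for action_id, offset in enumerate([0.0, 15.0, 30.0]):
    for _ in range(30):
        n_samples = int(rng.integers(80, 120))
        recs.append(rng.normal(offset, 5.0, size=(n_samples, 22)))
        labs.append(action_id)
demo_results = evaluate_classifiers(recs, labs)
for name, (acc, f1) in demo_results.items():
    print(f"{name}: accuracy={acc:.3f}, macro-F1={f1:.3f}")
```

In this sketch folds are assigned per recording, so recordings from the same participant can appear in both training and test folds; whether that matches the reported evaluation is not stated in this section.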

Notwithstanding the slight imbalance in the number of available instances per class, the results presented in Table 3 indicate that the data are of very good quality for movement classification. The classifiers successfully distinguished between the different hand movements within a category, with mean accuracies between 65.6% and 94.4% and mean F1-scores between 0.659 and 0.944. The Random Forest classifier performed best overall, achieving a mean accuracy of 94.4% for category A, 84.4% for category B, and 79.3% for category C. In general, category A, which had relatively few movements, each involving only one finger or joint moving to one of its two most extreme positions (e.g., flexion or extension), showed the most distinguishable kinematic data patterns and yielded the highest performance. In this category, all classifiers achieved nearly identical results, both in terms of accuracy (range: 90.7% to 94.4%) and F1-score (range: 0.908 to 0.944). For the movements in category B, the classifiers achieved mean accuracies between 75.7% and 84.4% and mean F1-scores between 0.757 and 0.841. Finally, for category C the classifiers identified the actionIDs with accuracies between 65.9% and 79.3% and F1-scores between 0.659 and 0.792. This is as expected, because the grasping and functional movements were much more complex and consisted, at least partially, of similar grasp movements and object interactions.
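For reference, the two metrics can be written as below for a category with K actionIDs and N test recordings, where P_k and R_k are the precision and recall of actionID k. The averaging used for the multi-class F1-score is not stated in this section; the macro average shown here is one common choice and should be read as an assumption.

```latex
\mathrm{Acc} = \frac{1}{N}\sum_{i=1}^{N}\mathbf{1}\left[\hat{y}_i = y_i\right],
\qquad
P_k = \frac{TP_k}{TP_k + FP_k},
\quad
R_k = \frac{TP_k}{TP_k + FN_k},
\qquad
F1_k = \frac{2\,P_k R_k}{P_k + R_k},
\qquad
F1_{\mathrm{macro}} = \frac{1}{K}\sum_{k=1}^{K} F1_k
```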

To visualize the discriminability of the kinematic data records, a 2-dimensional embedding was generated using t-distributed stochastic neighbor embedding (t-SNE)58. To this end, the sensor-wise data of each hand movement recording were concatenated into a single vector, so that the information from all sensors was used in this dimensionality reduction. The t-SNE implementation of Scikit-learn56 1.0.2 was used with the perplexity set to 50. The resulting 2-dimensional vectors, along with the centroids of the embedded data instances belonging to the same actionID, are visualized in Fig. 12.
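A minimal sketch of the embedding step follows, using scikit-learn's TSNE with the perplexity of 50 stated above. The text does not say how recordings of different lengths were turned into vectors of equal size; the hypothetical resample helper linearly interpolates each sensor trajectory to a fixed number of points before concatenation, which is an assumption. The recordings in the usage lines are synthetic.

```python
import numpy as np
from sklearn.manifold import TSNE


def resample(recording, n_points):
    """Linearly interpolate a (n_samples, n_sensors) recording to n_points rows."""
    x = np.asarray(recording, dtype=float)
    old_t = np.linspace(0.0, 1.0, len(x))
    new_t = np.linspace(0.0, 1.0, n_points)
    return np.column_stack([np.interp(new_t, old_t, x[:, s]) for s in range(x.shape[1])])


def tsne_with_centroids(recordings, labels, n_points=50, perplexity=50.0, seed=0):
    """2-D t-SNE embedding of concatenated sensor-wise trajectories plus per-label centroids."""
    # Transpose so that each sensor's full trajectory is laid out before the next sensor's.
    X = np.vstack([resample(r, n_points).T.ravel() for r in recordings])
    y = np.asarray(labels)
    emb = TSNE(n_components=2, perplexity=perplexity, random_state=seed).fit_transform(X)
    centroids = {label: emb[y == label].mean(axis=0) for label in np.unique(y)}
    return emb, centroids


# Usage with synthetic recordings: 3 hypothetical actionIDs x 40 recordings, 22 sensors.
rng = np.random.default_rng(2)
recs, labs = [], []
for action_id, offset in enumerate([0.0, 15.0, 30.0]):
    for _ in range(40):
        recs.append(rng.normal(offset, 5.0, size=(int(rng.integers(80, 120)), 22)))
        labs.append(action_id)
embedding, centroid_by_action = tsne_with_centroids(recs, labs, n_points=20)
print(embedding.shape, len(centroid_by_action))
```

Note that the perplexity must stay below the number of recordings being embedded, which matters when embedding a single small category.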

The t-SNE results broadly mirror the pattern of the classification results (see Table 3), providing a visualization of the high-dimensional kinematic data that goes beyond the standard numerical performance metrics. Specifically, on the one hand, the t-SNE embedding produced more distinguishable clusters of hand movements for categories A and B (see Fig. 12a,b). On the other hand, the clusters of recordings of movements in category C lie closer together and overlap more (see Fig. 12c), making them more difficult to distinguish from each other. The presented projection serves as a useful analytical tool; however, it is important to acknowledge its inherent limitations: such projections are incomplete approximations of the full dataset and may lose or obscure certain aspects of the data. While Fig. 12 illustrates the clustering of movements in a low-dimensional space, it provides limited insight into the factors driving the clustering. Dimensionality reduction is therefore employed only for technical validation purposes in this data descriptor. To ensure the reliability of conclusions drawn from reduced data representations, such as clustering or the identification of kinematic movements, it is imperative to validate them against the original high-dimensional data.

Usage Notes

This novel hand movement database (CeTI-Age-Kinematic-Hand) can be used in a wide range of domains, from virtual reality to robotics and healthcare. We provide valuable data for various use cases and potential applications, for example ML-based classification of grasping or social gestures, or recognition of different types of hand actions. Researchers can also use the data to identify movement patterns and make predictions to enhance human-computer interaction, facilitate rehabilitation and prosthetics, improve security by developing methods for perturbing movement, and enable advanced TI technologies for immersive, multimedia digital environments. Of note, our representative data (incl. chronological age, sex, and hand dominance) naturally offer various sources of variability, such as the natural trial-by-trial variability of the performed movements and inter-subject variability over a wide adult age range in three age cohorts, and thus also potential differences in individual movement experience and fitness. Older adults, for example, have unique limitations when it comes to hand kinematics. Thus, by including this age group in the development and implementation processes, we can ensure that TI technology is accessible, sustainable, and usable for everyone.

Within the CeTI-Age-Kinematic-Hand database42, both the offset-calibrated data and the data re-calibrated based on previous work38 are published. This allows for greater accessibility and ease of use, particularly in the context of extensive data collection protocols or for researchers who may want to investigate alternative post-processing calibration approaches3. The selected hand movements were adapted from the Ninapro Project13 and labeled using the same naming convention, which makes it possible to compare or match datasets. If datasets are combined, the differences in the data acquisition protocol (baseline hand posture; movements not mimicked in temporal synchrony with stimulus videos but performed more naturalistically at the participant's own pace) should be taken into consideration. Furthermore, the CeTI-Age-Kinematic-Hand database includes anthropometric data that can be used to improve classification accuracy and modeling. In this way, the data could also be used for motion analysis and synthesis, as well as animation. Researchers should be aware of limitations in the data due to the use of the CyberGlove III. For example, the glove might lead to problematic results due to size-fit issues. In particular, the distal interphalangeal (DIP) joint sensors are likely to provide reliable output only when a participant’s hand fits the glove properly; smaller hands could result in a poorer fit and consequently only partial results. Additionally, some object interactions were difficult due to decreased tactile feedback, in line with findings that bare hands are more efficient than gloved hands59. Nonetheless, data gloves offer many benefits as whole-hand input devices, because they are relatively natural input devices with high ease of use, especially for gestures and interactions with 3D objects3. Another benefit is that they do not suffer from occlusion like camera-based systems, thus providing participants with a realistic and safe environment for real-world-like hand movements, and researchers with the means to collect large-scale hand movement data.
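For readers exploring alternative post-processing calibrations, the simplest idea, subtracting a per-sensor neutral-posture offset, can be sketched as follows. This is not the calibration used to produce the published offset-calibrated or re-calibrated data. It assumes, hypothetically, that the neutral posture held at the start and end of each movement execution corresponds to the first and last n_edge samples of a recording; n_edge is an illustrative parameter.

```python
import numpy as np


def neutral_offset_calibrate(recording, n_edge=10):
    """Subtract a per-sensor neutral-posture offset from one recording.

    Assumption for illustration: the neutral posture is held during the first and
    last n_edge samples, so their mean serves as each sensor's zero point.
    recording: array of shape (n_samples, n_sensors).
    """
    x = np.asarray(recording, dtype=float)
    if len(x) < 2 * n_edge:
        raise ValueError("recording shorter than the two neutral windows")
    edges = np.concatenate([x[:n_edge], x[-n_edge:]], axis=0)
    offset = edges.mean(axis=0)  # one offset per sensor
    return x - offset


# Usage: one synthetic sensor resting at 5 degrees and flexing to 15 degrees mid-recording.
demo = np.array([[5.0], [5.0], [15.0], [5.0], [5.0]])
calibrated = neutral_offset_calibrate(demo, n_edge=2)
print(calibrated.ravel())
```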

Code availability

The preprocessing of the kinematic data (removal of unused columns in .csv files (see Table S1), checking for corrupt files, removal of sensitive data, and naming and sorting of files into folders), as well as the normalization of the kinematic data, was performed using custom Python46 code, which can be found in the folder called Code within the CeTI-Age-Kinematic-Hand42 database.
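A sketch of the column-removal and corrupt-file check is shown below. It is hypothetical: the column names are placeholders (the actual unused columns are listed in Table S1), and treating unreadable files, missing expected columns, or missing values as "corrupt" is an assumption made for illustration. The authors' own scripts are in the Code folder.

```python
import io

import pandas as pd


def load_kinematic_csv(source, unused_columns, expected_columns=None):
    """Read one kinematic .csv file, drop unused columns, and flag corrupt files.

    source: path or file-like object. unused_columns: names to drop (placeholders).
    expected_columns: optional names that must remain after dropping.
    Returns (DataFrame or None, status string).
    """
    try:
        df = pd.read_csv(source)
    except (pd.errors.EmptyDataError, pd.errors.ParserError) as exc:
        return None, f"unreadable: {exc}"
    df = df.drop(columns=[c for c in unused_columns if c in df.columns])
    if expected_columns is not None:
        missing = set(expected_columns) - set(df.columns)
        if missing:
            return None, f"missing columns: {sorted(missing)}"
    if df.empty or df.isna().any().any():
        return None, "empty or contains missing values"
    return df, "ok"


# Usage with an in-memory file; 'timestamp', 's1' and 's2' are hypothetical names.
good = io.StringIO("timestamp,s1,s2\n0,1.0,2.0\n1,3.0,4.0\n")
df, status = load_kinematic_csv(good, unused_columns=["timestamp"], expected_columns=["s1", "s2"])
print(status, list(df.columns))
```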

The custom Python scripts46,47,56 used for the descriptive analyses and ML-based movement classifications reported in the Technical Validation section can also be found in the Code folder of CeTI-Age-Kinematic-Hand42.

References

Al-Ghaili, A. M. et al. A review of metaverse’s definitions, architecture, applications, challenges, issues, solutions, and future trends. IEEE Access https://doi.org/10.1109/ACCESS.2022.3225638 (2022).

Buckingham, G. Hand tracking for immersive virtual reality: opportunities and challenges. Frontiers in Virtual Reality 140, https://doi.org/10.3389/frvir.2021.728461 (2021).

Kessler, G. D., Hodges, L. F. & Walker, N. Evaluation of the CyberGlove as a whole-hand input device. ACM Transactions on Computer-Human Interaction (TOCHI) 2, 263–283, https://doi.org/10.1145/212430.212431 (1995).

Fitzek, F. H., Li, S.-C., Speidel, S. & Strufe, T. Chapter 1 - tactile internet with human-in-the-loop: New frontiers of transdisciplinary research. In Tactile Internet with Human-in-the-Loop, 1–19, https://doi.org/10.1016/B978-0-12-821343-8.00010-1 (Elsevier, 2021).

Noghabaei, M. & Han, K. Object manipulation in immersive virtual environments: Hand motion tracking technology and snap-to-fit function. Automation in Construction 124, 103594, https://doi.org/10.1016/j.autcon.2021.103594 (2021).

Lin, W., Du, L., Harris-Adamson, C., Barr, A. & Rempel, D. Design of hand gestures for manipulating objects in virtual reality. In Human-Computer Interaction. User Interface Design, Development and Multimodality: 19th International Conference, HCI International 2017, Vancouver, BC, Canada, July 9-14, 2017, Proceedings, Part I 19, 584–592, https://doi.org/10.1007/978-3-319-58071-5_44 (2017).

Lin, J., Wu, Y. & Huang, T. Modeling the constraints of human hand motion. In Proceedings Workshop on Human Motion, 121–126, https://doi.org/10.1109/HUMO.2000.897381 (2000).

Djemal, A. et al. Real-time model for dynamic hand gestures classification based on inertial sensor. In 2022 IEEE 9th International Conference on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA), 1–6, https://doi.org/10.1109/CIVEMSA53371.2022.9853648 (2022).

Hemeren, P., Veto, P., Thill, S., Li, C. & Sun, J. Kinematic-based classification of social gestures and grasping by humans and machine learning techniques. Frontiers in Robotics and AI 8, 699505, https://doi.org/10.3389/frobt.2021.699505 (2021).

Siu, H. C., Shah, J. A. & Stirling, L. A. Classification of anticipatory signals for grasp and release from surface electromyography. Sensors 16, 1782, https://doi.org/10.3390/s16111782 (2016).

Li, Q. et al. Robust human upper-limbs trajectory prediction based on Gaussian mixture prediction. IEEE Access 11, 8172–8184, https://doi.org/10.1109/ACCESS.2023.3239009 (2023).

Ortenzi, V. et al. The grasp strategy of a robot passer influences performance and quality of the robot-human object handover. Frontiers in Robotics and AI 7, 542406, https://doi.org/10.3389/frobt.2020.542406 (2020).

Atzori, M. et al. The Ninapro project database web interface. (Ninapro Repository) datasets. Ninaweb http://ninapro.hevs.ch/node/7 (2014).

Taheri, O., Ghorbani, N., Black, M. J. & Tzionas, D. GRAB: A dataset of whole-body human grasping of objects. In European Conference on Computer Vision, 581–600, https://doi.org/10.1007/978-3-030-58548-8_34 (2020).

Krebs, F., Meixner, A., Patzer, I. & Asfour, T. The KIT bimanual manipulation dataset. In 2020 IEEE-RAS 20th International Conference on Humanoid Robots (Humanoids), 499–506, https://doi.org/10.1109/HUMANOIDS47582.2021.9555788 (2021).

Matran-Fernandez, A., Rodríguez Martínez, I. J., Poli, R., Cipriani, C. & Citi, L. SEEDS, simultaneous recordings of high-density EMG and finger joint angles during multiple hand movements. Scientific Data 6, 186, https://doi.org/10.1038/s41597-019-0200-9 (2019).

Jarque-Bou, N. J., Atzori, M. & Müller, H. A large calibrated database of hand movements and grasps kinematics. Scientific Data 7, 1–10, https://doi.org/10.1038/s41597-019-0349-2 (2020).

Li, S.-C. & Fitzek, F. H. Digitally embodied lifespan neurocognitive development and tactile internet: Transdisciplinary challenges and opportunities. Frontiers in Human Neuroscience https://doi.org/10.3389/fnhum.2023.1116501 (2023).

Landelle, C. et al. Functional brain changes in the elderly for the perception of hand movements: A greater impairment occurs in proprioception than touch. Neuroimage 220, 117056, https://doi.org/10.1016/j.neuroimage.2020.117056 (2020).

Smith, C. D. et al. Critical decline in fine motor hand movements in human aging. Neurology 53, 1458–1458, https://doi.org/10.1212/WNL.53.7.1458 (1999).

Pratt, J., Chasteen, A. L. & Abrams, R. A. Rapid aimed limb movements: age differences and practice effects in component submovements. Psychology and aging 9, 325, https://doi.org/10.1037/0882-7974.9.2.325 (1994).

Bowden, J. L. & McNulty, P. A. The magnitude and rate of reduction in strength, dexterity and sensation in the human hand vary with ageing. Experimental gerontology 48, 756–765, https://doi.org/10.1016/j.exger.2013.03.011 (2013).

Carment, L. et al. Manual dexterity and aging: a pilot study disentangling sensorimotor from cognitive decline. Frontiers in neurology 9, 910, https://doi.org/10.3389/fneur.2018.00910 (2018).

Lin, B.-S. et al. The impact of aging and reaching movements on grip stability control during manual precision tasks. BMC geriatrics 21, 1–12, https://doi.org/10.1186/s12877-021-02663-3 (2021).

Arlati, S., Keijsers, N., Paolini, G., Ferrigno, G. & Sacco, M. Age-related differences in the kinematics of aimed movements in immersive virtual reality: a preliminary study. In 2022 IEEE International Symposium on Medical Measurements and Applications (MeMeA), 1–6, https://doi.org/10.1109/MeMeA54994.2022.9856412 (2022).

United Nations Department of Economic and Social Affairs, Population Division. World population prospects 2022: Summary of results. Tech. Rep. No. 3, UN DESA. https://www.un.org/development/desa/pd/content/World-Population-Prospects-2022 (2022).

Papadatou-Pastou, M. et al. Human handedness: A meta-analysis. Psychological bulletin 146, 481, https://doi.org/10.1037/bul0000229 (2020).

Reiß, M. & Reiß, G. Lateral preferences in a German population. Perceptual and Motor Skills 85, 569–574, https://doi.org/10.2466/pms.1997.85.2.569 (1997).

Feix, T., Romero, J., Schmiedmayer, H.-B., Dollar, A. M. & Kragic, D. The grasp taxonomy of human grasp types. IEEE Transactions on human-machine systems 46, 66–77, https://doi.org/10.1109/THMS.2015.2470657 (2015).

Stival, F. et al. A quantitative taxonomy of human hand grasps. Journal of neuroengineering and rehabilitation 16, 1–17, https://doi.org/10.1186/s12984-019-0488-x (2019).

Fothergill, S., Mentis, H., Kohli, P. & Nowozin, S. Instructing people for training gestural interactive systems. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ‘12, 1737–1746, https://doi.org/10.1145/2207676.2208303 (Association for Computing Machinery, New York, NY, USA, 2012).

Harris, P. A. et al. Research electronic data capture (REDCap)–a metadata-driven methodology and workflow process for providing translational research informatics support. Journal of biomedical informatics 42, 377–381, https://doi.org/10.1016/j.jbi.2008.08.010 (2009).

Harris, P. A. et al. The REDCap consortium: Building an international community of software platform partners. Journal of biomedical informatics 95, 103208, https://doi.org/10.1016/j.jbi.2019.103208 (2019).

Oldfield, R. C. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113, https://doi.org/10.1016/0028-3932(71)90067-4 (1971).

Mandery, C., Terlemez, O., Do, M., Vahrenkamp, N. & Asfour, T. The KIT whole-body human motion database. In 2015 International Conference on Advanced Robotics (ICAR), 329–336, https://doi.org/10.1109/ICAR.2015.7251476 (2015).

ISO. ISO IEC 14882:2020: Programming languages – C++ (International Organization for Standardization, 2020).

Partipilo, S., De Felice, F., Renna, F., Attolico, G. & Distante, A. A natural and effective calibration of the CyberGlove. In Eurographics Italian Chapter Conference, 83–89, https://doi.org/10.2312/LocalChapterEvents/ItalianChapConf2006/083-089 (2006).

Gracia-Ibáñez, V., Vergara, M., Buffi, J. H., Murray, W. M. & Sancho-Bru, J. L. Across-subject calibration of an instrumented glove to measure hand movement for clinical purposes. Computer Methods in Biomechanics and Biomedical Engineering 20, 587–597, https://doi.org/10.1080/10255842.2016.1265950 (2017).

Gracia-Ibáñez, V., Jarque-Bou, N. J., Roda-Sales, A. & Sancho-Bru, J. L. BE-UJI Hand joint angles calculation code. Zenodo https://doi.org/10.5281/zenodo.3357966 (2019).

Jarque-Bou, N. J., Vergara, M., Sancho-Bru, J. L., Gracia-Ibáñez, V. & Roda-Sales, A. A calibrated database of kinematics and EMG of the forearm and hand during activities of daily living. Scientific data 6, 270, https://doi.org/10.1038/s41597-019-0285-1 (2019).

Roda-Sales, A., Vergara, M., Sancho-Bru, J. L., Gracia-Ibáñez, V. & Jarque-Bou, N. J. Human hand kinematic data during feeding and cooking tasks. Scientific data 6, 167, https://doi.org/10.1038/s41597-019-0175-6 (2019).

Muschter, E. et al. Coming in handy: CeTI-Age – A comprehensive database of kinematic hand movements across the lifespan. Datasets, Figshare, https://doi.org/10.6084/m9.figshare.c.6688871.v1 (2023).

Gorgolewski, K. J. et al. The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Scientific Data 3, 1–9, https://doi.org/10.1038/sdata.2016.44 (2016).

Atzori, M. et al. Electromyography data for non-invasive naturally-controlled robotic hand prostheses. Scientific Data 1, 1–13, https://doi.org/10.1038/sdata.2014.53 (2014).

Pale, U., Atzori, M., Müller, H. & Scano, A. Variability of muscle synergies in hand grasps: Analysis of intra-and inter-session data. Sensors 20, 4297, https://doi.org/10.3390/s20154297 (2020).

Python Core Team. Python: A dynamic, open source programming language. Python Software Foundation, https://www.python.org/. Version 3.8.3 (2019).

Waskom, M. L. seaborn: statistical data visualization. Journal of Open Source Software 6, 3021, https://doi.org/10.21105/joss.03021 Version 0.12.2 (2021).

Gracia-Ibáñez, V., Vergara, M., Sancho-Bru, J. L., Mora, M. C. & Piqueras, C. Functional range of motion of the hand joints in activities of the international classification of functioning, disability and health. Journal of Hand Therapy 30, 337–347, https://doi.org/10.1016/j.jht.2016.08.001 (2017).

Atzori, M. et al. Characterization of a benchmark database for myoelectric movement classification. IEEE Transactions on Neural Systems and Rehabilitation Engineering 23, 73–83, https://doi.org/10.1109/TNSRE.2014.2328495 (2015).

Puthenveettil, S., Fluet, G., Qiu, Q. & Adamovich, S. Classification of hand preshaping in persons with stroke using linear discriminant analysis. In 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 4563–4566, https://doi.org/10.1109/EMBC.2012.6346982 (2012).

Hanisch, S., Muschter, E., Hatzipanayioti, A., Li, S.-C. & Strufe, T. Understanding person identification through gait. Proceedings of the Privacy Enhancing Technologies Symposium (PETS) https://doi.org/10.56553/popets-2023-0011 (2023).

Breiman, L. Random forests. Machine learning 45, 5–32, https://doi.org/10.1023/A:1010933404324 (2001).

Cunningham, P. & Delany, S. J. K-nearest neighbour classifiers-a tutorial. ACM Computing Surveys (CSUR) 54, 1–25, https://doi.org/10.1145/3459665 (2021).

Fisher, R. A. The use of multiple measurements in taxonomic problems. Annals of eugenics 7, 179–188, https://doi.org/10.1111/j.1469-1809.1936.tb02137.x (1936).

Hsu, C.-W. et al. A practical guide to support vector classification (2003).

Pedregosa, F. et al. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research 12, 2825–2830, http://scikit-learn.sourceforge.net. Version 1.0.2 (2011).

Fawcett, T. ROC graphs: Notes and practical considerations for researchers. Machine learning 31, 1–38 (2004).

Van der Maaten, L. & Hinton, G. Visualizing data using t-SNE. Journal of machine learning research 9, https://doi.org/10.5555/2987189.2987386 (2008).

Hallbeck, M. & McMullin, D. Maximal power grasp and three-jaw chuck pinch force as a function of wrist position, age, and glove type. International Journal of Industrial Ergonomics 11, 195–206, https://doi.org/10.1016/0169-8141(93)90108-P (1993).

Acknowledgements

Funded by the German Research Foundation (DFG, Deutsche Forschungsgemeinschaft) as part of Germany’s Excellence Strategy – EXC 2050/1 – Project ID 390696704 – Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI) of Technische Universität Dresden. This work is partly supported by the Bundesministerium für Bildung und Forschung (BMBF, Federal Ministry of Education and Research) and DAAD (German Academic Exchange Service) in project 57616814 (SECAI, School of Embedded and Composite AI). The authors would like to thank Adamantini Hatzipanagioti, Clemens Steinke, and Helge Wanta for their help in generating stimuli, later edited and used as stimulus videos. The authors also thank Adamantini Hatzipanagioti for initial discussions about the hand movement taxonomy and the study in general. The authors are grateful to Sebastian Knauff, Isabella Strasser, and Jens Strehle for their support with data acquisition. Finally, the authors thank all the participants for “lending a hand”.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

Conceptualization, E.M. and S.-C.L.; data curation, E.M. and J.S.; formal analysis, E.M. and J.S.; funding acquisition, F.H.P.F., S.S. and S.-C.L.; investigation, E.M., L.H. and L.K.; methodology, E.M., M.T. and S.-C.L.; project administration, E.M.; resources, M.S., S.H., L.H. and S.-C.L.; software, M.T.; supervision, E.M. and M.T.; validation, J.S.; visualization, E.M., J.S. and L.K.; writing–original draft, E.M., J.S. and M.T.; writing–review and editing, E.M., J.S., S.S., F.H.P.F. and S.-C.L. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Muschter, E., Schulz, J., Tömösközi, M. et al. Coming in handy: CeTI-Age — A comprehensive database of kinematic hand movements across the lifespan. Sci Data 10, 826 (2023). https://doi.org/10.1038/s41597-023-02738-3

DOI: https://doi.org/10.1038/s41597-023-02738-3