Abstract

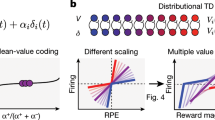

Dopamine prediction error responses are essential components of universal learning mechanisms. However, it is unknown whether individual dopamine neurons reflect the shape of reward distributions. Here, we used symmetrical distributions with differently weighted tails to investigate how the frequency of rewards and reward prediction errors influence dopamine signals. Rare rewards amplified dopamine responses, even when conventional prediction errors were identical, indicating a mechanism for learning the complexities of real-world incentives.


Data availability

The data that support the findings of this study are available from the corresponding author upon request.

Code availability

The code used to analyze these data is available from the corresponding author upon request.

Acknowledgements

We thank A. Bostan for comments and discussion, and J. Breter for animal care and enrichment. This work was supported by the National Institutes of Health (NIMH) grant DP2MH113095 to W.R.S.

Author information

Contributions

K.M.R. and W.R.S. designed the experiment. K.M.R., A.A. and W.R.S. collected data. K.M.R., T.H. and W.R.S. analyzed the data and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Neuroscience thanks the anonymous reviewers for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Dopamine neurons and recording sites.

a, Example dopamine waveform from one of the neurons in our population. b, The population of 40 neurons used for our analyses in the Pavlovian and choice tasks had significant activations following unpredicted rewards, a characteristic feature of dopamine neurons. The gray bar along the x axis indicates the response window used for analysis. c, Recording locations for the left hemisphere of monkey S. The x axis indicates lateral to medial location in the grid in millimeters, relative to midline (0). The right y axis indicates posterior to anterior location in the grid in millimeters, relative to the interaural line (IAL). Each location's color indicates the number of neurons recorded at that location. Black circles surrounding individual locations indicate that neurons recorded there were part of the population of 29 neurons that had steeper response slopes in the normal condition than in the uniform condition. Bar graphs on the left and top axes indicate the proportion of cells in that AP (left) or ML (top) location that were effect positive. The yellow dot corresponds to the location indicated in the MRI scans shown in e and f. d, Recording locations for the left hemisphere of monkey B. Same as panel c. e, Sagittal view MRI of the recording chamber of monkey S. The purple arrow indicates the AP location in the grid (+12 mm from IAL). f, Coronal view MRI of the recording chamber of monkey S. The purple arrow indicates the ML location in the grid (1 mm from midline). The yellow dot in e and f corresponds to the approximate recording grid location in c.

Extended Data Fig. 2 Normal and Uniform reward size distributions have equivalent subjective values.

a, Schematic of the distribution-predicting fractal cues used to represent Normal (N) and Uniform (U) distributions, and safe values for the choice task in b. Three unique cues were used to predict a Normal distribution of rewards, and three unique cues were used to predict a Uniform distribution of rewards. All distribution-predicting cues comprised the same three reward volumes (0.2, 0.4 and 0.6 ml) and thus had the same expected value (EV) of 0.4 ml. Additionally, one fractal cue predicted a sure reward of 0.2 ml, and another fractal cue predicted a sure reward of 0.6 ml. b, Monkeys made saccade-guided choices between Normal distribution-predicting cues, Uniform distribution-predicting cues and safe rewards. c, Bar graphs show the probability of choosing the alternate cue over a Uniform distribution-predicting cue with an EV of 0.4 ml. The alternates from left to right on the x axis are a safe cue predicting 0.2 ml, a Normal distribution-predicting cue with a mean of 0.4 ml, and a safe cue predicting 0.6 ml. Data points are from individual blocks, and error bars represent ±SEM across blocks (between 6 and 18 blocks per condition). d, Same as in c, but showing the probability of choosing an alternate cue over a Normal distribution-predicting cue with an EV of 0.4 ml; the middle alternate option represents Uniform distribution-predicting cues with an EV of 0.4 ml. e, The choice task used to measure subjective value. Animals made saccade-directed choices between a distribution-predicting cue and a safe alternative option. The safe alternative option was a value bar with a minimum of 0 ml at the bottom and a maximum of 0.8 ml at the top. The intersection between the horizontal bar and the scale indicated the volume of juice that would be received if monkeys selected the safe cue. f, Probability of choosing the safe cue as a function of the value of the safe option, when the distribution-predicting cue had an expected value (EV) of 0.4 ml. Dots show the average choice probability for 9 safe value options for monkey B. Solid lines are a logistic fit to the data. Red indicates data from Normal distribution blocks; gray indicates data from Uniform distribution blocks. The dashed horizontal lines indicate subjective equivalence, and the certainty equivalent (CE) for each distribution type is indicated by the dashed vertical lines. g, Same as in f, for monkey S.
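As an illustration of why cues built from the same three reward volumes share an EV of 0.4 ml, the following minimal sketch computes the expected value and the conventional prediction error (reward minus EV) for two symmetric distributions. The outcome probabilities are hypothetical and chosen only to give one distribution light tails and the other equal weights; they are not taken from the caption. The function names are likewise illustrative.

```python
from fractions import Fraction

# Reward volumes from the caption: 0.2, 0.4 and 0.6 ml (exact fractions).
REWARDS_ML = [Fraction(1, 5), Fraction(2, 5), Fraction(3, 5)]

# ASSUMPTION: hypothetical outcome probabilities, for illustration only.
# Any symmetric weighting around 0.4 ml gives the same expected value.
NORMAL_P = [Fraction(2, 15), Fraction(11, 15), Fraction(2, 15)]  # rare tails
UNIFORM_P = [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]     # equal weights


def expected_value(rewards, probs):
    """Probability-weighted mean reward."""
    if sum(probs) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(r * p for r, p in zip(rewards, probs))


def prediction_errors(rewards, probs):
    """Conventional prediction error for each outcome: reward minus EV."""
    ev = expected_value(rewards, probs)
    return [r - ev for r in rewards]


ev_normal = expected_value(REWARDS_ML, NORMAL_P)
ev_uniform = expected_value(REWARDS_ML, UNIFORM_P)
pe_normal = prediction_errors(REWARDS_ML, NORMAL_P)
pe_uniform = prediction_errors(REWARDS_ML, UNIFORM_P)

for reward, p_n, p_u, pe in zip(REWARDS_ML, NORMAL_P, UNIFORM_P, pe_normal):
    print(f"reward={float(reward):.1f} ml  PE={float(pe):+.1f} ml  "
          f"P(Normal)={float(p_n):.3f}  P(Uniform)={float(p_u):.3f}")
```

Under these assumed probabilities, each reward volume yields the same prediction error in both distributions, while the 0.2 ml and 0.6 ml outcomes are rarer in the Normal-like distribution. Only the frequency of the outcome differs between conditions.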

Extended Data Fig. 3 Reward randomization schemes used to determine trial types.

Top, “CS matched” randomization with equal frequencies of Normal and Uniform trials. Bottom, “PE matched” randomization with equal frequencies of 0.2 ml and 0.6 ml reward trials in each distribution. In both graphs, the y axis represents the probability of drawing the trial type (trial types were drawn with replacement). The six trial types are divided according to distribution type (N and U) and reward size (0.2, 0.4 and 0.6 ml). The number of instances in each trial type “stack” indicates the probability of drawing that trial type.
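The two schemes can be sketched as weighted sampling with replacement over the six trial types. The stack counts below are hypothetical, chosen only to satisfy the stated constraints (equal numbers of Normal and Uniform trials for "CS matched"; equal numbers of 0.2 ml and 0.6 ml trials across distributions for "PE matched"). The names `CS_MATCHED`, `PE_MATCHED`, `draw_trials` and `distribution_total` are illustrative and not taken from the study's code.

```python
import random

# Keys are (distribution, reward in ml); values are stack counts.
# ASSUMPTION: all counts are hypothetical and only illustrate the constraints.

# "CS matched": Normal and Uniform stacks sum to the same total (15 each).
CS_MATCHED = {
    ("N", 0.2): 2, ("N", 0.4): 11, ("N", 0.6): 2,
    ("U", 0.2): 5, ("U", 0.4): 5, ("U", 0.6): 5,
}

# "PE matched": 0.2 ml and 0.6 ml stacks are equal across distributions.
PE_MATCHED = {
    ("N", 0.2): 2, ("N", 0.4): 11, ("N", 0.6): 2,
    ("U", 0.2): 2, ("U", 0.4): 2, ("U", 0.6): 2,
}


def draw_trials(stacks, n_trials, seed=0):
    """Draw trial types with replacement, weighted by stack count."""
    rng = random.Random(seed)  # seeded so a session can be reproduced
    trial_types = list(stacks)
    weights = [stacks[t] for t in trial_types]
    return rng.choices(trial_types, weights=weights, k=n_trials)


def distribution_total(stacks, dist):
    """Total stack count for one distribution type ('N' or 'U')."""
    return sum(count for (d, _), count in stacks.items() if d == dist)


session = draw_trials(CS_MATCHED, n_trials=100)
print(session[:5])
```

With these assumed counts, the within-distribution outcome probabilities are the same in both schemes; only the relative frequency of Normal versus Uniform trials changes.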

Extended Data Fig. 4 Amplification effect was robust.

Box and whisker plots show the baseline-subtracted responses to 0.2 and 0.6 ml of juice, as in Fig. 3b, but applied to all 34 neurons that were significantly modulated by value. * indicates p < 0.05, ** indicates p < 0.01, N = 34 neurons, Wilcoxon signed-rank test, Bonferroni corrected for multiple comparisons. Box and whisker plots show median (line), quartiles (boxes), range (whiskers) and outliers (+).
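The Bonferroni step can be sketched as follows: each raw p value is multiplied by the number of comparisons and capped at 1, and the significance markers are then applied to the adjusted values. The raw p values here are hypothetical placeholders standing in for the output of a Wilcoxon signed-rank test, and the assumption of two comparisons (one per reward size) is an illustration, not a detail stated in the caption.

```python
def bonferroni(p_values):
    """Bonferroni-adjust p values: multiply by the number of tests, cap at 1."""
    n_tests = len(p_values)
    return [min(1.0, p * n_tests) for p in p_values]


def significance_marker(p_adjusted):
    """Map an adjusted p value to the markers used in the caption."""
    if p_adjusted < 0.01:
        return "**"
    if p_adjusted < 0.05:
        return "*"
    return "n.s."


# ASSUMPTION: hypothetical raw p values for the 0.2 ml and 0.6 ml comparisons.
raw_p = {"0.2 ml": 0.004, "0.6 ml": 0.02}

adjusted = dict(zip(raw_p, bonferroni(list(raw_p.values()))))
markers = {label: significance_marker(p) for label, p in adjusted.items()}

for label in raw_p:
    print(f"{label}: raw p={raw_p[label]:.3f}, "
          f"adjusted p={adjusted[label]:.3f} {markers[label]}")
```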

About this article

Cite this article

Rothenhoefer, K.M., Hong, T., Alikaya, A. et al. Rare rewards amplify dopamine responses. Nat Neurosci 24, 465–469 (2021). https://doi.org/10.1038/s41593-021-00807-7

DOI: https://doi.org/10.1038/s41593-021-00807-7
