Abstract

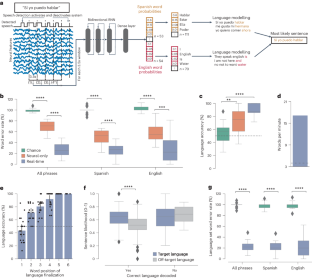

Advancements in decoding speech from brain activity have focused on decoding a single language. Hence, the extent to which bilingual speech production relies on unique or shared cortical activity across languages has remained unclear. Here, we leveraged electrocorticography, along with deep-learning and statistical natural-language models of English and Spanish, to record and decode activity from speech-motor cortex of a Spanish–English bilingual with vocal-tract and limb paralysis into sentences in either language. This was achieved without requiring the participant to manually specify the target language. Decoding models relied on shared vocal-tract articulatory representations across languages, which allowed us to build a syllable classifier that generalized across a shared set of English and Spanish syllables. Transfer learning expedited training of the bilingual decoder by enabling neural data recorded in one language to improve decoding in the other language. Overall, our findings suggest shared cortical articulatory representations that persist after paralysis and enable the decoding of multiple languages without the need to train separate language-specific decoders.

Data availability

The data needed to recreate the main figures are provided as Source Data and are also available on GitHub at https://github.com/asilvaalex4/bilingual_speech_bci. The raw patient data are accessible to researchers from other institutions, but public sharing is restricted pursuant to our clinical trial protocol. Full access to the data will be granted on reasonable request to E.F.C. at edward.chang@ucsf.edu, and a response can be expected in under 3 weeks. Shared data must be kept confidential and not provided to others unless approval is obtained. Shared data will not contain any information that may identify the participant, to protect their anonymity. Source data are provided with this paper.

Code availability

The code required to replicate the main findings of the study is available via GitHub at https://github.com/asilvaalex4/bilingual_speech_bci.

Acknowledgements

We thank our participant ‘Pancho’ for his tireless perseverance, commitment and dedication to the work described in this paper, and his family and caregivers for their incredible support. We also thank members of the Chang lab for feedback on the project; V. Her for administrative support; B. Spidel for imaging reconstruction; T. Dubnicoff for video editing; J. Davidson for help in designing initial bilingual stimuli; C. Kurtz-Miott, V. Anderson and S. Brosler for help with data collection with our participant; and the members of Karunesh Ganguly’s lab for help with the clinical trial. The National Institutes of Health (grant NIH U01 DC018671-01A1) and the William K. Bowes, Jr. Foundation supported authors S.L.M., J.R.L., D.A.M., M.E.D., M.P.S., K.T.L. and E.F.C. A.B.S. was supported by the National Institute of General Medical Sciences (NIGMS) Medical Scientist Training Program (Grant #T32GM007618) and by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health (award number F30DC021872). K.T.L. was supported by the National Science Foundation GRFP. A.T.-C. and K.G. did not have relevant funding for this work.

Author information

Contributions

A.B.S. developed deep-learning classification and language models. J.R.L. developed speech detection models. D.A.M. implemented software for online decoding and data collection. A.B.S. generated figures and performed statistical analyses. A.B.S., along with J.R.L., wrote the manuscript with input from I.B.-G., S.L.M., K.T.L., D.A.M. and E.F.C. A.B.S. and D.A.M., along with J.R.L., S.L.M., I.B.-G. and M.E.D., designed the experiments, utterance sets and analyses. A.B.S., M.E.D. and M.P.S. led data collection with help from J.R.L., S.L.M., K.T.L. and D.A.M. M.P.S., A.T.-C., K.G. and E.F.C. performed regulatory and clinical supervision. E.F.C. conceived and supervised the study.

Ethics declarations

Competing interests

S.L.M., D.A.M., J.R.L. and E.F.C. are inventors on a pending provisional UCSF patent application relevant to the neural-decoding approaches used in this work (Application number: WO2022251472A1, 2022, WIPO PCT - International patent system). G.K.A. and E.F.C. are inventors on patent application PCT/US2020/028926; D.A.M. and E.F.C. are inventors on patent application PCT/US2020/043706; and E.F.C. is an inventor on patent US9905239B2. These patents are broadly relevant to the neural-decoding approaches used in this work. The remaining authors declare no competing interests.

Peer review

Peer review information

Nature Biomedical Engineering thanks Vikash Gilja, Jonas Obleser and Karim Oweiss for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Timing and information flow through the bilingual-sentence decoding system.

Shown is a more detailed schematic overview of the bilingual-sentence decoding system to complement Fig. 1a. Three levels of information are depicted: the neural features, the decoding system, and the output to the participant monitor. To start, the participant makes a speech attempt. The system detects this attempt, which activates an ongoing decoding process. Following activation, a series of 3.5 s windows is cued to the participant. At the end of each window, after the full 3.5 s have passed, the neural features from that window are passed to the decoding process illustrated in Fig. 1a. After the latency needed to run the decoding, the most likely beam from the process in Fig. 1a is displayed on the participant monitor. This process repeats for sequential 3.5 s windows until a window with no detected speech occurs. After such a window, the decoding is finalized and terminated. The system then listens for another speech attempt to activate and repeat the process.
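The control flow in this caption can be sketched as a short loop. This is only an illustration of the described sequence, not the authors' implementation: `run_decoding_session`, `has_speech` and `decode_window` are hypothetical names standing in for the session logic, the speech detector and the classifier-plus-language-model step, and the toy windows are plain strings rather than neural features.

```python
from typing import Callable, Iterable, List

WINDOW_SECONDS = 3.5  # window length stated in the caption


def run_decoding_session(
    windows: Iterable[object],
    has_speech: Callable[[object], bool],
    decode_window: Callable[[object, List[str]], List[str]],
) -> List[str]:
    """Decode sequential windows until one contains no detected speech.

    `decode_window` receives the features of one window and the current
    most likely beam, and returns the updated most likely beam.
    """
    beam: List[str] = []
    for features in windows:
        if not has_speech(features):
            # A window without detected speech finalizes the sentence.
            break
        beam = decode_window(features, beam)
    return beam


# Toy usage: each window is a word, and None marks a silent window.
toy_windows = ["hola", "amigo", None, "ignored"]
decoded = run_decoding_session(
    toy_windows,
    has_speech=lambda w: w is not None,
    decode_window=lambda w, beam: beam + [w],
)
print(decoded)
```

In this sketch the window after the silent one is never processed, mirroring the statement that decoding terminates and the system waits for a new speech attempt.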

Extended Data Fig. 2 Graphical depiction of bilingual-word classification.

Shown is a schematic of the bilingual-word classification process. Neural features (256 total; 128 HGA and 128 LFS time series over 3.5 s) are classified as a word in the bilingual vocabulary. Neural features are first processed by a temporal convolution. Next, the features are passed through three bidirectional GRU layers. The latent state from these layers is then read out by a dense, linear layer that emits probabilities over the 104 words in the bilingual vocabulary. This process is performed by 10 distinct models, each with a different weight initialization and trained on different folds of the data. The probabilities generated across these 10 models are averaged to create one probability vector across the bilingual vocabulary. This vector is finally split by language and the probability for a given word is broadcast to all conjugated forms of the word before being combined with the language model, as shown in Fig. 1a.
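The post-classifier steps described here (averaging across the ensemble, splitting by language, broadcasting to conjugated forms) can be sketched in plain Python. This is a minimal sketch under stated assumptions: the function names, the toy vocabulary and the numbers are hypothetical, and the treatment of shared words (copied into both languages, with no renormalization) is an assumption rather than something the caption specifies.

```python
from typing import Dict, List, Sequence, Set, Tuple


def average_ensemble(prob_vectors: Sequence[Sequence[float]]) -> List[float]:
    """Average per-word probabilities across ensemble members."""
    if not prob_vectors:
        raise ValueError("need at least one probability vector")
    n_models = len(prob_vectors)
    n_words = len(prob_vectors[0])
    return [sum(vec[i] for vec in prob_vectors) / n_models for i in range(n_words)]


def split_by_language(
    probs: Sequence[float],
    vocab: Sequence[str],
    languages: Dict[str, Set[str]],
) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Split one probability vector into English and Spanish dictionaries.

    Assumption: a word tagged with both languages appears in both outputs.
    """
    english: Dict[str, float] = {}
    spanish: Dict[str, float] = {}
    for word, p in zip(vocab, probs):
        if "en" in languages[word]:
            english[word] = p
        if "es" in languages[word]:
            spanish[word] = p
    return english, spanish


def broadcast_to_forms(
    word_probs: Dict[str, float], forms: Dict[str, List[str]]
) -> Dict[str, float]:
    """Copy each base word's probability to all of its conjugated forms."""
    out: Dict[str, float] = {}
    for word, p in word_probs.items():
        for form in forms.get(word, [word]):
            out[form] = p
    return out


# Toy example: 2 models and 3 words (the caption describes 10 models, 104 words).
vocab = ["want", "querer", "no"]
languages = {"want": {"en"}, "querer": {"es"}, "no": {"en", "es"}}
averaged = average_ensemble([[0.5, 0.25, 0.25], [0.25, 0.25, 0.5]])
english, spanish = split_by_language(averaged, vocab, languages)
english_forms = broadcast_to_forms(english, {"want": ["want", "wants", "wanted"]})
```

The per-language dictionaries would then be combined with the corresponding language model, as the caption notes for Fig. 1a.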

Extended Data Fig. 3 Neural-only chance sentence-decoding performance.

Shown are chance sentence-decoding distributions specific to neural-only decoding, alongside the neural-only decoding performance shown in Fig. 1. We computed this chance distribution by shuffling the neural features and passing them through the classifier. The chance error rate was then computed in the same way as for neural-only performance (**** P < 0.0001; two-sided Mann-Whitney U-test with 3-way Holm-Bonferroni correction for multiple comparisons). Distributions are over 21 online phrase-decoding blocks. Box plots in all panels depict the median (horizontal line inside box), 25th and 75th percentiles (box), the 25th percentile minus and the 75th percentile plus 1.5 times the interquartile range (whiskers), and outliers (diamonds).
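For readers unfamiliar with the Holm-Bonferroni correction mentioned in this caption, the standard step-down procedure can be sketched as follows. This is a generic textbook version, not code from the study; the function name is ours and the example P values are made up.

```python
from typing import List, Sequence


def holm_bonferroni(p_values: Sequence[float]) -> List[float]:
    """Return Holm-adjusted p-values in the original input order."""
    m = len(p_values)
    # Visit hypotheses from the smallest to the largest raw p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is scaled by (m - k + 1).
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Three made-up comparisons, matching the "3-way" setting in the caption.
example = holm_bonferroni([0.01, 0.04, 0.03])
print(example)
```

A comparison is then called significant at level alpha when its adjusted value falls below alpha.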

Extended Data Fig. 4 Performance of attempted speech model on silent reading and listening.

For a subset of 10 bilingual words, we collected neural features during attempted speech, passive listening, and silent reading (roughly 250 trials in each paradigm). A model was trained on attempted speech data, using the same procedure used throughout the manuscript, and evaluated on neural features from held-out attempted speech, passive listening, and silent reading trials. Performance was not significantly different from chance when evaluating the attempted speech model on listening or silent reading, in contrast to evaluation on attempted speech. This provides evidence that attempted speech neural features are specific to motor production of speech and do not reflect a process that strongly underlies listening or silent reading. Results are from 10-fold cross validation within each paradigm. Dashed line indicates chance performance (10%). Box plots in all panels depict the median (horizontal line inside box), 25th and 75th percentiles (box), the 25th percentile minus and the 75th percentile plus 1.5 times the interquartile range (whiskers), and outliers (diamonds).

Extended Data Fig. 5 Classification accuracy over the full 104 bilingual-words.

a, Shown is unmasked classification accuracy over the full set of 104 bilingual words. The classifier retained stable performance without retraining (weights frozen at black dotted line) as in Fig. 2b. b, Classification performance before and after a 30-day break in recording without retraining (P = 0.31, two-sided Mann-Whitney U-test). Distributions are over 5 days. c, 10-fold cross validation (CV) accuracy over the unmasked 104 bilingual words using all collected data. Median CV accuracy was 47.24% (99% CI: [45.83, 48.23]%). Distributions are over 10 non-overlapping folds. Box plots in all panels depict the median (horizontal line inside box), 25th and 75th percentiles (box), the 25th percentile minus and the 75th percentile plus 1.5 times the interquartile range (whiskers), and outliers (diamonds).
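The box-plot convention used in these captions can be made concrete with a small helper. This is a sketch of the stated convention only: the function name and the toy accuracies are ours, the quartiles use linear interpolation via `statistics.quantiles(..., method="inclusive")`, and the study's plotting library may compute quartiles or draw whiskers slightly differently (many libraries stop the whisker at the most extreme data point inside the limit).

```python
from statistics import quantiles
from typing import Dict, List, Sequence, Union

Stats = Dict[str, Union[float, List[float]]]


def box_plot_stats(values: Sequence[float]) -> Stats:
    """Compute median, quartiles, 1.5 x IQR whisker limits and outliers."""
    q1, median, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower_limit = q1 - 1.5 * iqr  # 25th percentile minus 1.5 x IQR
    upper_limit = q3 + 1.5 * iqr  # 75th percentile plus 1.5 x IQR
    outliers = [v for v in values if v < lower_limit or v > upper_limit]
    return {
        "median": median,
        "q1": q1,
        "q3": q3,
        "lower_limit": lower_limit,
        "upper_limit": upper_limit,
        "outliers": outliers,
    }


# Toy data (not from the study): one value lies far outside the limits.
stats = box_plot_stats([1.0, 2.0, 3.0, 4.0, 100.0])
print(stats)
```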

Extended Data Fig. 6 Acoustic similarity of words within the English and Spanish bilingual words.

For each word in the English vocabulary, we calculated the mean pairwise mel-cepstral distortion (MCD) to all other English words. We repeated the same procedure for Spanish. Distributions are over 51 English and 50 Spanish words (shared words were excluded). English words have a significantly lower mean pairwise MCD (**** P < 0.0001, two-sided Mann-Whitney U-test). This indicates that English words, on average, are more acoustically confusable with other English words than Spanish words are with other Spanish words. Box plots in all panels depict the median (horizontal line inside box), 25th and 75th percentiles (box), the 25th percentile minus and the 75th percentile plus 1.5 times the interquartile range (whiskers), and outliers (diamonds).
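The mean pairwise MCD computation can be sketched as below, using the commonly cited frame-level definition MCD = (10 / ln 10) · sqrt(2 · Σ_d (c_d − c'_d)²). Several points are assumptions, because the caption does not give them: the sequences are taken to be already time-aligned and of equal length, the caller decides which cepstral coefficients to include, and all names and toy values are hypothetical.

```python
import math
from typing import Dict, List, Sequence

MCD_CONSTANT = 10.0 / math.log(10.0)

Frame = Sequence[float]


def frame_mcd(a: Frame, b: Frame) -> float:
    """MCD in dB between two mel-cepstral coefficient vectors."""
    squared = sum((x - y) ** 2 for x, y in zip(a, b))
    return MCD_CONSTANT * math.sqrt(2.0 * squared)


def sequence_mcd(seq_a: Sequence[Frame], seq_b: Sequence[Frame]) -> float:
    """Mean frame-wise MCD for two aligned, equal-length sequences."""
    if len(seq_a) != len(seq_b) or not seq_a:
        raise ValueError("sequences must be non-empty and time-aligned")
    return sum(frame_mcd(a, b) for a, b in zip(seq_a, seq_b)) / len(seq_a)


def mean_pairwise_mcd(words: Dict[str, List[Frame]]) -> Dict[str, float]:
    """For each word, average its MCD to every other word in the set."""
    result: Dict[str, float] = {}
    for name, seq in words.items():
        distances = [
            sequence_mcd(seq, other)
            for other_name, other in words.items()
            if other_name != name
        ]
        result[name] = sum(distances) / len(distances)
    return result


# Toy vocabulary: one frame per word, two coefficients per frame.
toy_words = {"a": [[0.0, 0.0]], "b": [[1.0, 0.0]], "c": [[0.0, 2.0]]}
toy_result = mean_pairwise_mcd(toy_words)
print(toy_result)
```

A lower value for a word means that, on average, it sits closer to the other words in the same set, which is the sense of "more acoustically confusable" in the caption.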

Extended Data Fig. 7 Effects of re-training models daily during frozen-decoder evaluation.

Shown is a comparison between performance with and without re-calibration. a, Performance without re-calibration, taken from Fig. 2b for reference. b, Performance when re-training the classifier with sequential addition of each day’s data. c, Distributions of accuracy with and without re-training, demonstrating that small improvements may be found by re-training the decoders with each day’s data. Distributions are over 9 days in each box plot (starting after the first day, when re-training becomes possible). Chance is 1.85% for English, 1.89% for Spanish, and 1.87% for all words (masked). Box plots in all panels depict the median (horizontal line inside box), 25th and 75th percentiles (box), the 25th percentile minus and the 75th percentile plus 1.5 times the interquartile range (whiskers), and outliers (diamonds).
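The quoted chance levels are consistent with uniform guessing over each language's vocabulary, although the caption does not state how they were derived. The arithmetic below is one reading, under these assumptions: the 104-word vocabulary consists of 51 English-only and 50 Spanish-only words (the counts in Extended Data Fig. 6) plus 3 shared words, masking restricts predictions to one language's words, and the all-words value is the unweighted mean of the two per-language values.

```python
ENGLISH_ONLY = 51  # from Extended Data Fig. 6
SPANISH_ONLY = 50  # from Extended Data Fig. 6
TOTAL_WORDS = 104  # full bilingual vocabulary
SHARED = TOTAL_WORDS - ENGLISH_ONLY - SPANISH_ONLY  # inferred, not stated


def chance_percent(n_classes: int) -> float:
    """Chance accuracy (in percent) for uniform guessing over n classes."""
    return 100.0 / n_classes


english_chance = chance_percent(ENGLISH_ONLY + SHARED)
spanish_chance = chance_percent(SPANISH_ONLY + SHARED)
# Assumption: simple mean of the two masked chance levels.
all_words_masked = (english_chance + spanish_chance) / 2.0

print(round(english_chance, 2), round(spanish_chance, 2), round(all_words_masked, 2))
```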

Extended Data Fig. 8 Distinct contributions of HGA and LFS to classifier performance.

Shown are plots of electrode contributions for HGA against LFS, separately for English-trained (left) and Spanish-trained (right) models (as in Fig. 2d,e). The dotted lines indicate the 90th percentile of HGA and LFS contributions. Most electrodes that exceed the 90th percentile do so for only one of HGA or LFS.
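The thresholding described here can be sketched as follows: compute the 90th percentile of each feature type's contributions, then count electrodes above one threshold, both, or neither. The function names and the toy contribution values are hypothetical, and the percentile uses linear interpolation (`method="inclusive"`), which may differ from the method used in the study.

```python
from statistics import quantiles
from typing import Dict, Sequence


def percentile_90(values: Sequence[float]) -> float:
    """90th percentile using linear interpolation between data points."""
    return quantiles(values, n=10, method="inclusive")[-1]


def count_threshold_groups(
    hga: Sequence[float], lfs: Sequence[float]
) -> Dict[str, int]:
    """Count electrodes above the 90th percentile for HGA, LFS, or both."""
    hga_threshold = percentile_90(hga)
    lfs_threshold = percentile_90(lfs)
    counts = {"hga_only": 0, "lfs_only": 0, "both": 0, "neither": 0}
    for h, l in zip(hga, lfs):
        above_hga = h > hga_threshold
        above_lfs = l > lfs_threshold
        if above_hga and above_lfs:
            counts["both"] += 1
        elif above_hga:
            counts["hga_only"] += 1
        elif above_lfs:
            counts["lfs_only"] += 1
        else:
            counts["neither"] += 1
    return counts


# Toy contributions for 11 electrodes: HGA rises while LFS falls.
toy_hga = [float(i) for i in range(11)]
toy_lfs = [float(10 - i) for i in range(11)]
toy_counts = count_threshold_groups(toy_hga, toy_lfs)
print(toy_counts)
```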

Extended Data Fig. 9 Full confusion matrix over all bilingual-words.

Full confusion matrix over the 104 bilingual words. Each row was normalized to sum to 1, so each entry is a proportion between 0 and 1. Predictions were generated using 10-fold cross validation over the full 104 bilingual words with no masking (as in Extended Data Fig. 5).
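Row normalization of a confusion matrix can be sketched in a few lines. The function name and the toy counts are ours; leaving a row with no trials as all zeros is an assumption, since the caption does not say how such a row would be handled.

```python
from typing import List, Sequence


def normalize_rows(counts: Sequence[Sequence[float]]) -> List[List[float]]:
    """Scale each row of a count matrix so that it sums to 1."""
    normalized: List[List[float]] = []
    for row in counts:
        total = sum(row)
        if total == 0:
            # Assumption: a row with no trials stays all zeros.
            normalized.append([0.0 for _ in row])
        else:
            normalized.append([value / total for value in row])
    return normalized


# Toy 3-class matrix: rows are true words, columns are predicted words.
toy_confusion = normalize_rows([[3, 1, 0], [0, 0, 0], [2, 2, 4]])
print(toy_confusion)
```

With this convention, the diagonal entry of a row is the proportion of that word's trials that were classified correctly.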

Extended Data Fig. 10 Acoustic coverage of large-bilingual-phrase set.

We quantified the distribution of phonemes and phoneme place of articulation features to ensure the large-bilingual-phrase set covered a broad space in each language. We designed the large-bilingual-phrase set to sample a broad range of English (a) and Spanish (b) phonemes. We ensured that the relative proportion of phoneme place of articulation features was similar between English (c) and Spanish (d).

Supplementary information

Main Supplementary Information

Supplementary Notes, Methods, Figures, Tables, References and Video captions.

Supplementary Video 1

A demonstration of online word-by-word bilingual sentence decoding from the brain of a participant with paralysis.

Supplementary Video 2

A demonstration of online word-by-word bilingual sentence decoding from the brain of a participant with paralysis, using three new sentences.

Supplementary Video 3

The participant using the bilingual speech neuroprosthesis has a conversation with a researcher.

Supplementary Data 1

Source data for Supplementary Fig. 1.

Supplementary Data 2

Source data for Supplementary Fig. 2.

Supplementary Data 3

Source data for Supplementary Fig. 3.

Supplementary Data 4

Source data for Supplementary Fig. 4.

Source data

Source data are provided for Figs. 1–4 and Extended Data Figs. 3–10.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Silva, A.B., Liu, J.R., Metzger, S.L. et al. A bilingual speech neuroprosthesis driven by cortical articulatory representations shared between languages. Nat. Biomed. Eng. (2024). https://doi.org/10.1038/s41551-024-01207-5

DOI: https://doi.org/10.1038/s41551-024-01207-5