Abstract

This article establishes various fixed-point results and introduces the idea of an extended b-suprametric space. We also give several applications pertaining to the existence and uniqueness of the solution to the equations concerning RLC electric circuits. At the end of the article, a few open questions are posed concerning the distortion of Chua’s circuit and the formulation of the Lagrangian for Chua’s circuit.

Background summary and preliminaries

Many mathematical problems involving differential and integral equations can be solved, and the existence of their solutions confirmed, by the well-known contraction principle. To broaden the applicability of this principle to various abstract spaces, efforts continue to explore and extend it [1,2,3,4]. By weakening the triangle inequality, Bakhtin [5] and Czerwik [6] generalized the structure of a metric space and called the result a b-metric space. As a result, a number of papers addressing fixed-point theorems for both single- and multi-valued mappings in b-metric spaces have been published.

Within this framework, Berzig [7] introduced the notion of a b-suprametric space by weakening the triangle inequality even further, while Kamran et al. [8] introduced the notion of an extended b-metric space. The recent development of several extended or modified metric structures, and of their associated results, has attracted a great deal of attention (see [9,10,11,12,13,14,15,16,17,18]).

RLC circuits, comprising resistors (R), inductors (L), and capacitors (C), are fundamental in electrical engineering and electronics, with applications that include signal processing, power distribution, and filtering. The behavior of an RLC circuit is governed by the interplay of these three passive components. Resistors dissipate energy in the form of heat, providing damping in the circuit. Inductors store energy in a magnetic field when current flows through them, resisting changes in current. Capacitors store energy in an electric field, resisting changes in voltage. Together, they combine energy storage and dissipation, giving rise to phenomena such as resonance, where energy transfer between components reaches its peak efficiency, and transient response, where the circuit’s behavior changes over time after a sudden change in input. The components interact through Kirchhoff’s laws, which lead to the differential equations that describe the circuit’s behavior. Analyzing these equations is essential for designing and optimizing circuits in electrical and electronic systems (see [19,20,21,22]).

The analysis of an RLC circuit typically involves solving a system of linear differential equations rather than nonlinear integral equations. However, nonlinear integral equations can arise in certain special cases or when considering more complex circuit elements or behaviors. One scenario where a nonlinear integral equation may arise is when dealing with nonlinear elements such as diodes or transistors within the circuit. In these cases, the behavior of the circuit may not be describable solely through linear differential equations, and integral equations might be needed to model the relationship between voltage and current.

Many problems in the mathematical sciences can be recast as integral equations, so the study of integral equations and of the techniques for solving them is valuable. Integral equations are now used throughout science and engineering, and numerous scholars have devised reliable techniques for handling them. For example, Rahman [23] used Chebyshev polynomials to solve an integral problem. When evaluating definite integrals, hybrid quantification offers a higher level of accuracy, which leads to quicker convergence. For individual parameters, linear symmetric combinations of lower-accuracy rules of Gaussian-Newtonian type have been used to produce a combined quantification with improved accuracy. Combined quantification has recently proved effective both in finite-element methods and in the computational solution of integral equations.

We propose the notion of an extended b-suprametric space as follows.

Definition 1.1

Let \({\mathscr {M}}\) be a non-empty set, let \(\gamma :{\mathscr {M}}\times {\mathscr {M}}\rightarrow [1,\infty )\) be a function, and let \(b\ge 1\). A function \({\mathcal {E}}_{s}:{\mathscr {M}}\times {\mathscr {M}}\rightarrow {\mathbb {R}}^{+}\) is said to be an extended b-suprametric if, for all \(\vartheta ,\varrho ,\omega \in {\mathscr {M}}\), the following properties hold:

(1) \({\mathcal {E}}_{s}(\vartheta ,\varrho )=0 \ \text {iff} \ \vartheta =\varrho ;\)

(2) \({\mathcal {E}}_{s}(\vartheta ,\varrho )={\mathcal {E}}_{s}(\varrho ,\vartheta );\)

(3) \({\mathcal {E}}_{s}(\vartheta ,\varrho )\le b[{\mathcal {E}}_{s}(\vartheta ,\omega )+{\mathcal {E}}_{s}(\omega ,\varrho )] +\gamma (\vartheta ,\varrho ){\mathcal {E}}_{s}(\vartheta ,\omega ) {\mathcal {E}}_{s}(\omega ,\varrho ).\)

An extended b-suprametric space is a pair \(({\mathscr {M}},{\mathcal {E}}_{s})\) (shortly, \({\mathcal {E}}_{s}\)-space), where \({\mathscr {M}}\) is a non-empty set and \({\mathcal {E}}_{s}\) is an extended b-suprametric.
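As an informal illustration of property (3), the following Python sketch spot-checks the inequality on a finite sample of points. It is only a numerical sanity check under stated assumptions, not a proof: the helper name `first_violation`, the sample grid on \([0,\pi ]\), and the candidate distances are choices made for this illustration.

```python
import itertools
import math


def first_violation(points, E, gamma, b, tol=1e-12):
    """Return the first triple (x, y, w) that violates property (3), or None.

    Property (3): E(x, y) <= b*[E(x, w) + E(w, y)] + gamma(x, y)*E(x, w)*E(w, y).
    A small tolerance absorbs floating-point rounding.
    """
    for x, y, w in itertools.product(points, repeat=3):
        lhs = E(x, y)
        rhs = b * (E(x, w) + E(w, y)) + gamma(x, y) * E(x, w) * E(w, y)
        if lhs > rhs + tol:
            return (x, y, w)
    return None


# Hypothetical sample: 21 equally spaced points in [0, pi].
points = [i * math.pi / 20 for i in range(21)]

# Candidate 1: the usual distance, with gamma(x, y) = e^(x + y) + 1 and b = 1.
absolute = lambda x, y: abs(x - y)
gamma_exp = lambda x, y: math.exp(x + y) + 1

# Candidate 2: the squared distance, with constant gamma = 1.
squared = lambda x, y: (x - y) ** 2
gamma_one = lambda x, y: 1.0

check_absolute = first_violation(points, absolute, gamma_exp, b=1)
check_squared_b1 = first_violation(points, squared, gamma_one, b=1)
check_squared_b2 = first_violation(points, squared, gamma_one, b=2)
```

On this sample, the squared distance violates property (3) when \(b=1\) and \(\gamma \equiv 1\), while no violation is found for \(b=2\), in line with the elementary bound \((x+y)^{2}\le 2(x^{2}+y^{2})\).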

Example 1.2

Take \({\mathscr {M}}=\ell _{p}({\mathbb {R}})\) with \(p\in (0,1)\), where \(\ell _{p}({\mathbb {R}})=\{\{\vartheta _{n}\}\subset {\mathbb {R}} \ \text {such that} \ \sum _{n=1}^{\infty }|\vartheta _{n}|^{p}<\infty \}\), and let \({{\mathcal {E}}_{s}}_{\ell _{p}}:{\mathscr {M}}\times {\mathscr {M}}\rightarrow {\mathbb {R}}^{+}\) be given by

\[{{\mathcal {E}}_{s}}_{\ell _{p}}(\vartheta ,\varrho )=\Big (\sum _{n=1}^{\infty }|\vartheta _{n}-\varrho _{n}|^{p}\Big )^{\frac{1}{p}}.\]

Then \(({\mathscr {M}},{{\mathcal {E}}_{s}}_{\ell _{p}})\) is a b-suprametric space with \(b=2^{\frac{1}{p}}\).

Let \({\mathcal {E}}_{s}:{\mathscr {M}}\times {\mathscr {M}}\rightarrow {\mathbb {R}}^{+}\) and \(\gamma :{\mathscr {M}}\times {\mathscr {M}}\rightarrow [1,\infty )\) be defined by \({\mathcal {E}}_{s}(\vartheta ,\varrho )={{\mathcal {E}}_{s}}_{\ell _{p}} (\vartheta ,\varrho )[{{\mathcal {E}}_{s}}_{\ell _{p}}(\vartheta ,\varrho )+1]\) and \(\gamma (\vartheta ,\varrho )=8^{\frac{1}{p}}\in [1,\infty ), \ 0<p<1\), with \(b=4^{\frac{1}{p}}\). Then \(({\mathscr {M}},{\mathcal {E}}_{s})\) is an \({\mathcal {E}}_{s}\)-space.

We next describe the topology generated by an extended b-suprametric.

Definition 1.3

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be an \({\mathcal {E}}_{s}\)-space. For \(r>0\) and \(\vartheta _{0}\in {\mathscr {M}}\), the set \({\mathscr {B}}(\vartheta _{0},r)=\{\vartheta \in {\mathscr {M}}:{\mathcal {E}}_{s}(\vartheta _{0},\vartheta )<r\}\) is called an open ball. A subset \({\mathscr {N}}\) of \({\mathscr {M}}\) is called open if, for every \(\varrho \in {\mathscr {N}}\), there is an \(r>0\) such that \({\mathscr {B}}(\varrho ,r)\subset {\mathscr {N}}\). The collection of all open subsets of \({\mathscr {M}}\) is denoted by \(\tau\).

Proposition 1.4

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be a \({\mathcal {E}}_{s}\)-space. Then each open ball is an open set.

Proposition 1.5

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be an \({\mathcal {E}}_{s}\)-space with \(\gamma (\vartheta ,\varrho )=\beta \in [1,\infty )\) for all \(\vartheta ,\varrho \in {\mathscr {M}}\). If \(\varrho \in {\mathscr {B}}(\vartheta ,r)\) for some \(r>0\), then there exists \(s>0\) such that \({\mathscr {B}}(\varrho ,s)\subseteq {\mathscr {B}}(\vartheta ,r)\).

Proof

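For every \(\vartheta \in {\mathscr {M}}\) and \(r>0\), the ball \({\mathscr {B}}(\vartheta ,r)\) is non-empty, since it contains \(\vartheta\). Let \(\varrho \in {\mathscr {B}}(\vartheta ,r)\). If \(\vartheta =\varrho\), take \(s=r\). Now assume that \(\vartheta \ne \varrho\), so that \({\mathcal {E}}_{s}(\vartheta ,\varrho )\ne 0\). Choose \(s=\frac{r-b{\mathcal {E}}_{s}(\vartheta ,\varrho )}{b+\beta {\mathcal {E}}_{s}(\vartheta ,\varrho )}\) and let \(\omega \in {\mathscr {B}}(\varrho ,s)\). Then, by property (3) of the \({\mathcal {E}}_{s}\)-space, we have

\[{\mathcal {E}}_{s}(\vartheta ,\omega )\le b[{\mathcal {E}}_{s}(\vartheta ,\varrho )+{\mathcal {E}}_{s}(\varrho ,\omega )]+\beta {\mathcal {E}}_{s}(\vartheta ,\varrho ){\mathcal {E}}_{s}(\varrho ,\omega )<b{\mathcal {E}}_{s}(\vartheta ,\varrho )+s[b+\beta {\mathcal {E}}_{s}(\vartheta ,\varrho )]=r,\]

which yields \(\omega \in {\mathscr {B}}(\vartheta ,r)\). Thus, \({\mathscr {B}}(\varrho ,s)\subseteq {\mathscr {B}}(\vartheta ,r)\). Accordingly, each open ball is an open subset of \({\mathscr {M}}\). \(\square\)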

Proposition 1.6

\(\tau\) defines a topology on \(({\mathscr {M}},{\mathcal {E}}_{s})\) and the family of open balls form a base of the topology \(\tau\).

Proof

Let \(\vartheta ,\varrho \in {\mathscr {M}}\) with \(\vartheta \ne \varrho\) and \(r={\mathcal {E}}_{s}(\vartheta ,\varrho )>0\). Denote \({\mathscr {U}}={\mathscr {B}}(\vartheta ,\frac{r}{2})\) and \({\mathscr {V}}={\mathscr {B}}(\varrho ,\frac{r(2-b)}{2b+\beta r})\), where \(1\le b<2\).

We show that \({\mathscr {U}}\cap {\mathscr {V}}=\emptyset\). Otherwise, there is \(\omega \in {\mathscr {U}}\cap {\mathscr {V}}\), so that \({\mathcal {E}}_{s}(\vartheta ,\omega )<\frac{r}{2}\) and \({\mathcal {E}}_{s}(\varrho ,\omega )<\frac{r(2-b)}{2b+\beta r}\), and we obtain

\[r={\mathcal {E}}_{s}(\vartheta ,\varrho )\le b[{\mathcal {E}}_{s}(\vartheta ,\omega )+{\mathcal {E}}_{s}(\omega ,\varrho )]+\beta {\mathcal {E}}_{s}(\vartheta ,\omega ){\mathcal {E}}_{s}(\omega ,\varrho )<\frac{br}{2}+\Big (b+\frac{\beta r}{2}\Big )\frac{r(2-b)}{2b+\beta r}=\frac{br}{2}+\frac{r(2-b)}{2}=r,\]

which is a contradiction.

Hence, \({\mathscr {U}}\cap {\mathscr {V}}=\emptyset\). As a result, \({\mathscr {M}}\) is Hausdorff. \(\square\)

Definition 1.7

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be an \({\mathcal {E}}_{s}\)-space. A sequence \(\{\vartheta _{n}\}_{n\in {\mathbb {N}}}\) of elements of \({\mathscr {M}}\) converges to \(\vartheta \in {\mathscr {M}}\) if, for every \(\epsilon >0\), the ball \({\mathscr {B}}(\vartheta ,\epsilon )\) contains all but a finite number of terms of the sequence. In this case \(\vartheta\) is a limit of \(\{\vartheta _{n}\}_{n\in {\mathbb {N}}}\) and we write \(\lim _{n\rightarrow \infty }{\mathcal {E}}_{s}(\vartheta _{n},\vartheta )=0\).

Proposition 1.8

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be an \({\mathcal {E}}_{s}\)-space. If a sequence \(\{\vartheta _{n}\}_{n\in {\mathbb {N}}}\subset {\mathscr {M}}\) possesses a limit, then this limit is unique.

Proof

One can easily deduce this result by using Hausdorffness. \(\square\)

Definition 1.9

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be an \({\mathcal {E}}_{s}\)-space. A sequence \(\{\vartheta _{n}\}_{n\in {\mathbb {N}}}\subset {\mathscr {M}}\) is a Cauchy sequence if, for all \(\epsilon >0\), there exists some \(\kappa \in {\mathbb {N}}\) such that \({\mathcal {E}}_{s}(\vartheta _{n},\vartheta _{m})<\epsilon\) for all \(n,m\ge \kappa\).

Remark 1.10

If \(\{\vartheta _{n}\}_{n\in {\mathbb {N}}}\) is a Cauchy sequence in \(({\mathscr {M}},{\mathcal {E}}_{s})\) for which there is a \({\mathfrak {q}}\in {\mathscr {M}}\) such that \(\lim _{n\rightarrow \infty }{\mathcal {E}}_{s}(\vartheta _{n},{\mathfrak {q}})=0\), then every subsequence \(\{\vartheta _{n(\kappa )}\}_{\kappa \in {\mathbb {N}}}\) also converges to \({\mathfrak {q}}\).

Definition 1.11

An \({\mathcal {E}}_{s}\)-space \(({\mathscr {M}},{\mathcal {E}}_{s})\) is called complete if every Cauchy sequence is convergent.

Definition 1.12

Let \(\Psi :{\mathscr {S}}\subset {\mathscr {M}}\rightarrow {\mathscr {M}}\) and suppose there is \(\vartheta _{0}\in {\mathscr {S}}\) such that \({\mathscr {O}}(\vartheta _{0})=\{\vartheta _{0},\Psi \vartheta _{0}, \Psi ^{2}\vartheta _{0},\ldots \}\subset {\mathscr {S}}\). The set \({\mathscr {O}}(\vartheta _{0})\) is called the orbit of \(\vartheta _{0}\in {\mathscr {S}}\). A function \({\mathscr {G}}\) from \({\mathscr {S}}\) into the real numbers is called \(\Psi\)-orbitally lower semicontinuous at \(t\in {\mathscr {S}}\) if \(\{\vartheta _{n}\}\subset {\mathscr {O}}(\vartheta _{0})\) and \(\vartheta _{n}\rightarrow t\) imply \({\mathscr {G}}(t)\le \liminf _{n\rightarrow \infty }{\mathscr {G}}(\vartheta _{n})\).

The concept of comparison functions was first introduced by Matkowski [24]. Subsequently, various modifications and extensions of comparison functions have been proposed (see [25,26,27]).

Inspired by the above literature, we introduce the following definition.

Definition 1.13

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be an \({\mathcal {E}}_{s}\)-space. A function \(\psi :{\mathbb {R}}^{+}\rightarrow {\mathbb {R}}^{+}\) is an extended b-supra-comparison function (shortly, \(E_{sC}\)-function) if it is increasing and there exists a mapping \(\Psi :{\mathscr {S}}\subset {\mathscr {M}}\rightarrow {\mathscr {M}}\) such that for some \(\vartheta _{0}\in {\mathscr {S}}\) with \({\mathscr {O}}(\vartheta _{0})\subset {\mathscr {S}}\),

converges. Here \(\vartheta _{n}=\Psi ^{n}\vartheta _{0}\) for all \(n=1,2,3,\ldots\) In this case, \(\psi\) is called an \(E_{sC}\)-function for \(\Psi\) at \(\vartheta _{0}\).

Results on \({\mathcal {E}}_{s}\)-space

Theorem 2.1

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be a complete \({\mathcal {E}}_{s}\)-space and \(\Psi :{\mathscr {M}}\rightarrow {\mathscr {M}}\) be a mapping. Take \(\eta \in [0,1)\) in such a way that

for all \(\vartheta ,\varrho \in {\mathscr {M}}\). Then \(\Psi\) has a unique fixed point, and for every \(\vartheta _{0}\in {\mathscr {M}}\) the iterative sequence defined by \(\vartheta _{n}=\Psi \vartheta _{n-1}, \ \forall n\in {\mathbb {N}}\) converges to this fixed point.

Proof

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be a complete \({\mathcal {E}}_{s}\)-space. Define the sequence \(\{\vartheta _{n}\}\) by \(\vartheta _{n}=\Psi \vartheta _{n-1}, \ \forall n\in {\mathbb {N}}\) for some arbitrary \(\vartheta _{0}\in {\mathscr {M}}\). Now from (2.1), we deduce that

Thus, regardless of the given integer \(\kappa\), the sequence \(\{{\mathcal {E}}_{s}(\vartheta _{n},\vartheta _{n+1})\}\) is non-increasing and meets the following:

Therefore, \(\lim _{n\rightarrow \infty }{\mathcal {E}}_{s}(\vartheta _{n},\vartheta _{n+1})=0,\) so that for every \(\epsilon >0\) there exists \(\kappa \in {\mathbb {N}}\) such that for all \(n\ge \kappa\), we have

We now show that the sequence \(\{\vartheta _{n}\}\) is Cauchy. By using (2.2), (2.3) and the triangle inequality, we have

where,

From the above two inequalities (2.4) and (2.5), we get,

Employing (2.3) in each of the terms in the sum, we can keep proceeding until we get

Since \(\frac{\eta }{b}\in [0,1)\), it follows that

Now, one can easily verify that the series \(\sum _{i=0}^{\infty }U_{i}\) converges by ratio test, where,

Hence we deduce that \({\mathcal {E}}_{s}(\vartheta _{\mathbbm {p}},\vartheta _{\mathbbm {q}})\rightarrow 0\) as \(\mathbbm {p},\mathbbm {q}\) tend to infinity; thus, \(\{\vartheta _{n}\}\) is Cauchy. By the completeness of \({\mathscr {M}}\), \(\{\vartheta _{n}\}\) converges to some \({\mathfrak {q}}\in {\mathscr {M}}\), and it follows that every subsequence \(\{\vartheta _{n(\kappa )}\}_{\kappa \in {\mathbb {N}}}\) converges to \({\mathfrak {q}}\). We now show that \({\mathfrak {q}}\) is a fixed point of \(\Psi\). By using (2.1) and the continuity of \(\Psi\), we get,

Therefore, letting \(\kappa\) tend to infinity, we conclude that \({\mathfrak {q}}=\Psi {\mathfrak {q}}\).

Hence \({\mathfrak {q}}\) is a fixed point of \(\Psi\).

To prove uniqueness, assume that \(\vartheta _{1}\) and \(\vartheta _{2}\) are two distinct fixed points, so that \({\mathcal {E}}_{s}(\vartheta _{1},\vartheta _{2})\ne 0\). From (2.1), we obtain,

which is absurd, and therefore \(\vartheta _{1}=\vartheta _{2}\). \(\square\)
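The iterative scheme \(\vartheta _{n}=\Psi \vartheta _{n-1}\) used in this proof can be sketched numerically as follows. The map `psi` below is a hypothetical contraction on \([0,\pi ]\) chosen only for illustration (it is not a mapping taken from this paper), the helper name `picard_iterate` is likewise an assumption, and distances are measured with the usual absolute value.

```python
import math


def picard_iterate(psi, x0, tol=1e-12, max_iter=1000):
    """Iterate x_n = psi(x_{n-1}) until successive terms differ by less than tol.

    Returns the last iterate and the number of iterations performed.
    """
    x = x0
    for n in range(1, max_iter + 1):
        x_next = psi(x)
        if abs(x_next - x) < tol:
            return x_next, n
        x = x_next
    raise RuntimeError("no convergence within max_iter iterations")


def psi(x):
    # Hypothetical self-map of [0, pi]; |psi'(x)| <= 1/2, so it is a contraction.
    return 0.5 * math.sin(x) + 0.5


fixed_from_left, steps_left = picard_iterate(psi, x0=0.0)
fixed_from_right, steps_right = picard_iterate(psi, x0=math.pi)
```

Starting from either endpoint of the interval, the iteration settles on the same point, which is the behavior one expects for a contraction with a unique fixed point.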

Example 2.2

Let \({\mathscr {M}}=[0,\pi ]\). Define a mapping \({\mathcal {E}}_{s}:{\mathscr {M}}\times {\mathscr {M}}\rightarrow [0,\infty )\) by \({\mathcal {E}}_{s}(\vartheta ,\varrho )=|\vartheta -\varrho |\) for all \(\vartheta ,\varrho \in {\mathscr {M}}\) and \(\gamma :{\mathscr {M}}\times {\mathscr {M}}\rightarrow [1,\infty )\) by \(\gamma (\vartheta ,\varrho )=e^{\vartheta +\varrho }+1\) for all \(\vartheta ,\varrho \in {\mathscr {M}}\). Clearly \(({\mathscr {M}},{\mathcal {E}}_{s})\) is a complete \({\mathcal {E}}_{s}\)-space.

Define a mapping \(\Psi :{\mathscr {M}}\rightarrow {\mathscr {M}}\) by

We now show that \(\Psi\) satisfies (2.1) of Theorem 2.1.

Consider

By using the boundedness of the function \(\sin \vartheta\) in the interval \([0,\pi ]\), there exists a constant \(\Lambda >0\) such that \(\frac{1}{(1+\sin \vartheta )(1+\sin \varrho )}\le \Lambda\). Thus from the above inequality (2.6), we obtain

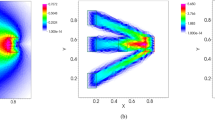

Hence all the conditions of Theorem 2.1 are satisfied with \(\frac{\eta }{b}\in [0,1)\), and 0.9592 is the unique fixed point of \(\Psi\), obtained numerically with MATLAB. Moreover, a comparison of the left-hand and right-hand sides of the contraction (2.1) of Theorem 2.1, computed with MATLAB for this example, is shown in Fig. 1.

Now, we perform a few numerical simulations to estimate \(\Psi\)’s fixed point in Table 1. Additionally, Fig. 2 illustrates how the aforementioned iterations converge.

Convergence behavior for Example 2.2.

Example 2.3

Let \({\mathscr {M}}=[0,\pi ]\). Define a mapping \({\mathcal {E}}_{s}:{\mathscr {M}}\times {\mathscr {M}}\rightarrow [0,\infty )\) by \({\mathcal {E}}_{s}(\vartheta ,\varrho )=\bigg |\frac{\sin (\vartheta -\varrho )}{1+\sin \vartheta }\bigg |\) for all \(\vartheta ,\varrho \in {\mathscr {M}}\) and \(\gamma :{\mathscr {M}}\times {\mathscr {M}}\rightarrow [1,\infty )\) by \(\gamma (\vartheta ,\varrho )=e^{\vartheta +\varrho }\) for all \(\vartheta ,\varrho \in {\mathscr {M}}\). Clearly \(({\mathscr {M}},{\mathcal {E}}_{s})\) is a complete \({\mathcal {E}}_{s}\)-space.

Define a mapping \(\Psi :{\mathscr {M}}\rightarrow {\mathscr {M}}\) by

By following the same pattern as above, we can easily deduce that \(\Psi (\vartheta )\) satisfies Eq. (2.1) of Theorem 2.1. Using a numerical method in MATLAB, we obtain the unique fixed point of \(\Psi\), which is 0.9592 and lies in the interval \([0,\pi ]\). Thus, this example illustrates Theorem 2.1. Moreover, a comparison of the left-hand and right-hand sides of the contraction (2.1) of Theorem 2.1, computed with MATLAB for this example, is shown in Fig. 3.

Theorem 2.4

Let \(({\mathscr {M}},{\mathcal {E}}_{s})\) be a complete \({\mathcal {E}}_{s}\)-space such that \({\mathcal {E}}_{s}\) is continuous. Consider the mapping \(\Psi :{\mathscr {S}}\subset {\mathscr {M}}\rightarrow {\mathscr {M}}\) such that \({\mathscr {O}}(\vartheta _{0})\subset {\mathscr {S}}\). Assume that,

for each \(\vartheta \in {\mathscr {O}}(\vartheta _{0})\), where \(\psi\) is an \(E_{sC}\)-function for \(\Psi\) at \(\vartheta _{0}\). Then \(\Psi ^{n}\vartheta _{0}\rightarrow \delta \in {\mathscr {M}}\). Furthermore, \(\delta\) is a fixed point of \(\Psi\) iff \({\mathscr {G}}(\vartheta )={\mathcal {E}}_{s}(\vartheta ,\Psi \vartheta )\) is \(\Psi\)-orbitally lower semicontinuous at \(\delta\).

Proof

Assume that \(({\mathscr {M}},{\mathcal {E}}_{s})\) is a complete \({\mathcal {E}}_{s}\)-space. For \(\vartheta _{0}\in {\mathscr {M}}\), define the iterative sequence \(\{\vartheta _{n}\}\) by \(\vartheta _{1}=\Psi \vartheta _{0}, \ \vartheta _{2} =\Psi \vartheta _{1}=\Psi ^{2}(\vartheta _{0}), \ \ldots , \ \vartheta _{n}=\Psi ^{n}(\vartheta _{0}), \ \ldots\) for all \(n\in {\mathbb {N}}\).

In the remainder of the proof, we use the shorthand \({{\mathcal {E}}_{s}}_{m,n}={\mathcal {E}}_{s}(\vartheta _{m},\vartheta _{n})\) and \(\gamma _{m,n}=\gamma (\vartheta _{m},\vartheta _{n})\) for all \(m,n\in {\mathbb {N}}\).

By successively applying inequality (2.7), we obtain,

By triangular inequality and (2.8), for \(\mathbbm {p}>\mathbbm {q}\) we have

where

By combining the previous two inequalities (2.9) and (2.10), we obtain

Continuing this process, we obtain

The series

converges for each \(\mathbbm {p},\mathbbm {q}\in {\mathbb {N}}\). Thus, we conclude that \(\{\vartheta _{n}\}\) is Cauchy since \({{\mathcal {E}}_{s}}_{\mathbbm {p},\mathbbm {q}}\rightarrow 0\) as \(\mathbbm {p},\mathbbm {q}\rightarrow \infty\). Since \({\mathscr {M}}\) is complete, \(\vartheta _{n}=\Psi ^{n}\vartheta _{0}\rightarrow \delta \in {\mathscr {M}}\). Assuming that \({\mathscr {G}}\) is \(\Psi\)-orbitally lower semicontinuous at \(\delta \in {\mathscr {M}}\), we have

Conversely, let \(\delta =\Psi \delta\) and \(\vartheta _{n}\in {\mathscr {O}}(\vartheta )\) with \(\vartheta _{n}\rightarrow \delta\).

Then

This completes the proof. \(\square\)

An RLC-electric circuit problem via fixed-point method

Most natural phenomena can be described mathematically, which typically leads to nonlinear differential equations. Many classical theories from various scientific disciplines can be expressed through linear differential equations, which shows that, in a large number of important cases, the model can be linearized without losing its essential features. However, some phenomena cannot be explained or predicted by linearizing the equations that characterize them. In these cases, a solution to the relevant nonlinear differential equations must be found. The study of nonlinear differential equations is therefore becoming increasingly important across multidisciplinary domains.

There has been global interest in developing analytical techniques for solving nonlinear differential equations, and in recent years a number of works surveying these approaches have appeared (see [28,29,30,31]). A review of this literature suggests, however, that few approaches have been developed for the nonlinear problems that arise in real-world situations. This paper applies our main result to electrical circuits and proposes a method for solving nonlinear differential equations that is based on the theory of nonlinear integral equations.

An RLC circuit typically refers to a circuit composed of a resistor (R), inductor (L), and capacitor (C), where the dynamics are governed by linear differential equations. However, when nonlinearities are introduced, such as nonlinear components or nonlinear behavior in the circuit elements, the analysis becomes more complex. In the case of nonlinear integral equations with Green’s functions, the analysis likely involves studying the response of the circuit to time-varying inputs or initial conditions, where the behavior of the circuit elements may not be adequately described by linear models. Green’s functions are useful for solving integral equations and can provide insight into the behavior of the system. Nonlinear integral equations can arise in various contexts within electrical engineering, such as in modeling nonlinear elements like diodes or transistors, or in describing complex behaviors like hysteresis or saturation effects in magnetic components.

The solution to such equations often requires numerical methods due to their complexity, and techniques like finite difference methods, finite element methods, or numerical integration may be employed to approximate the solutions.

Example 3.1

Consider a simple series RLC circuit consisting of a resistor (R), an inductor (L), and a capacitor (C) connected in series to an AC voltage source. The general equation governing the behavior of this circuit is:

Consider a specific example with \(R=50\) ohms, \(L=0.1\) henry, \(C= 100\) microfarads and \(V(t)=10\sin (100t)\) volts. We want to find the current I(t) flowing through the circuit.

We can solve this problem using differential equations, but let’s use a numerical method, such as the Euler method, for simplicity.

The Euler method is a basic numerical technique for approximating solutions of ordinary differential equations. It works as follows:

(A) Start with an initial condition: \(I(0)=0\) (assuming no initial current).

(B) Use the differential equation to find the rate of change of the current at each time step.

(C) Update the current using the rate of change and a small time step.

Now, perform this calculation for a small time step, say \(\Delta {\mathfrak {t}}=0.01\) seconds, from \({\mathfrak {t}}=0\) to \({\mathfrak {t}}=2\) seconds.

Fig. 4 shows how the current in the circuit varies with time. We can observe the transient behavior as the circuit responds to the sinusoidal input voltage. Eventually, the current settles into a sinusoidal waveform determined by the balance between the inductive and capacitive reactances and the resistance.
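Steps (A) to (C) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the computation behind Fig. 4: it assumes the series-circuit model \(L\frac{dI}{dt}+RI+\frac{q}{C}=V(t)\) with \(\frac{dq}{dt}=I\) and an initially uncharged capacitor, \(q(0)=0\). For these component values a stability estimate for the forward Euler scheme suggests a step below roughly 0.005 s, so the sketch uses a hypothetical step of \(10^{-4}\) s. The function names are illustrative.

```python
import math

R = 50.0      # resistance in ohms
L = 0.1       # inductance in henry
C = 100e-6    # capacitance in farads


def source_voltage(t):
    """Sinusoidal source V(t) = 10 sin(100 t) in volts."""
    return 10.0 * math.sin(100.0 * t)


def euler_rlc(dt=1e-4, t_end=2.0):
    """Forward Euler for L dI/dt + R I + q/C = V(t), dq/dt = I.

    Assumes I(0) = 0 and q(0) = 0; dt = 1e-4 is a choice made for stability.
    """
    steps = round(t_end / dt)
    q, i = 0.0, 0.0                      # (A) initial conditions
    times, currents = [0.0], [0.0]
    for k in range(steps):
        t = k * dt
        di_dt = (source_voltage(t) - R * i - q / C) / L   # (B) rate of change
        q, i = q + dt * i, i + dt * di_dt                 # (C) explicit update
        times.append((k + 1) * dt)
        currents.append(i)
    return times, currents


times, currents = euler_rlc()
# Peak current once the transient has died out (last half second).
steady_peak = max(abs(i) for t, i in zip(times, currents) if t >= 1.5)
```

As a rough consistency check, the steady-state amplitude of the linear circuit is \(10/\sqrt{R^{2}+(\omega L-\frac{1}{\omega C})^{2}}\) with \(\omega =100\), that is, about 0.097 A, and `steady_peak` should be close to this value.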

Existence of solution for the integral equation associated with an RLC electrical circuit equation

As a consequence of our results, we establish the existence of a solution for the integral equation associated with the electrical circuit problem described below.

Consider an electrical circuit containing a resistor R, an inductor L, a capacitor C and a total electromotive force \(V({\mathfrak {t}})\), as shown in Fig. 5.

By Kirchhoff’s voltage law, we obtain

where I is the current, and q is the charge.

Equation (3.1) can be written as an initial value problem

The associated Green function is,

in which R and L are used to determine the constant \(\tau >0\).

Let \({\mathscr {M}}=({\mathcal {C}}[0,1],{\mathbb {R}})\) be the set of all continuous real-valued functions defined on [0, 1]. Let us define \({\mathcal {E}}_{s}:{\mathscr {M}}\times {\mathscr {M}}\rightarrow {\mathbb {R}}\) by \({\mathcal {E}}_{s}(\vartheta ({\mathfrak {t}}),\varrho ({\mathfrak {t}})) =\sup _{{\mathfrak {t}}\in [0,1]}\{|\vartheta ({\mathfrak {t}})-\varrho ({\mathfrak {t}})|^{2}e^{-|\tau {\mathfrak {t}}|}\}\).

Note that \(({\mathscr {M}},{\mathcal {E}}_{s})\) is a complete \({\mathcal {E}}_{s}\)-space with \(b=2\) and \(\gamma (\vartheta ({\mathfrak {t}}),\varrho ({\mathfrak {t}})) =e^{\vartheta ({\mathfrak {t}})+\varrho ({\mathfrak {t}})}\) for all \(\vartheta ({\mathfrak {t}}),\varrho ({\mathfrak {t}})\in {\mathscr {M}}\), where \(\gamma :{\mathscr {M}}\times {\mathscr {M}}\rightarrow [1,\infty )\).

The problem mentioned above can be reformulated as the integral equation:

Theorem 3.2

Let \({\mathscr {M}}=({\mathcal {C}}[0,1],{\mathbb {R}})\) and let \({\mathscr {H}}:{\mathscr {M}}\rightarrow {\mathscr {M}}\) be the operator defined as

where \(\kappa :[0,1]\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) is a continuous and non-decreasing function for all \({\mathfrak {t}}\in [0,1]\). Then the problem (3.2) has a unique solution if the following assumptions hold.

(1) \(|\kappa ({\mathfrak {t}},\vartheta )-\kappa ({\mathfrak {t}},\varrho )|\le \tau ^{2}e^{-\tau (1-\frac{{\mathfrak {t}}}{2})}|\vartheta -\varrho |\) for all \(\vartheta ,\varrho \in {\mathscr {M}}\), \({\mathfrak {t}}\in [0,1]\) and \(\tau >0\);

(2) \(\vartheta ({\mathfrak {t}})\le \int _{0}^{1}{\mathscr {G}}({\mathfrak {t}},\varsigma ) \kappa ({\mathfrak {t}},\vartheta (\varsigma ))d\varsigma\) for all \({\mathfrak {t}}\in [0,1]\).

Proof

Let \(\vartheta ({\mathfrak {t}}),\varrho ({\mathfrak {t}})\in ({\mathcal {C}}[0,1],{\mathbb {R}})\). Consider,

which yields that,

Therefore, all the conditions of Theorem 2.1 are satisfied, and \({\mathscr {H}}\) has a unique fixed point. Consequently, the problem (3.2) arising from the RLC electric circuit has a unique solution. \(\square\)
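To illustrate how a fixed point of an integral operator of this kind might be approximated in practice, the following Python sketch applies successive approximations on a uniform grid, using the trapezoidal rule for the integral. Everything specific in it is an assumption made for illustration: the kernel `kernel` is a hypothetical stand-in for the Green function, `kappa` is a hypothetical nonlinearity with Lipschitz constant 1/2, the operator is taken in the form \(\int _{0}^{1}G({\mathfrak {t}},\varsigma )\kappa (\varsigma ,\vartheta (\varsigma ))d\varsigma\), and the stopping rule uses the plain supremum distance rather than \({\mathcal {E}}_{s}\).

```python
import math

TAU = 1.0  # hypothetical positive constant


def kernel(t, s):
    # Hypothetical causal kernel standing in for the Green function.
    return (t - s) * math.exp(-TAU * (t - s)) if s <= t else 0.0


def kappa(s, x):
    # Hypothetical nonlinearity; Lipschitz in x with constant 1/2.
    return 0.5 * math.sin(x) + s


def picard_integral(kernel, kappa, n=101, tol=1e-12, max_iter=200):
    """Successive approximations x_{k+1}(t) = int_0^1 kernel(t, s) kappa(s, x_k(s)) ds."""
    h = 1.0 / (n - 1)
    grid = [j * h for j in range(n)]
    weights = [h] * n                     # trapezoidal weights on [0, 1]
    weights[0] = weights[-1] = h / 2
    x = [0.0] * n                         # start from the zero function
    for k in range(1, max_iter + 1):
        fx = [kappa(s, xs) for s, xs in zip(grid, x)]
        x_new = [
            sum(weights[j] * kernel(t, grid[j]) * fx[j] for j in range(n))
            for t in grid
        ]
        change = max(abs(a - b) for a, b in zip(x_new, x))
        x = x_new
        if change < tol:
            return grid, x, k
    raise RuntimeError("successive approximations did not converge")


grid, solution, iterations = picard_integral(kernel, kappa)
```

With these hypothetical choices the discretized operator is a contraction in the supremum distance, so the iterates converge to a single grid function regardless of the starting guess.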

Existence of solution for the integral equation associated with an RLC electrical circuit equation with a nonlinear element

Consider an RLC circuit with a nonlinear element, such as a diode. The diode’s current-voltage characteristic is typically described by a nonlinear equation, such as the Shockley diode equation.

The Shockley diode equation relates the current (I) through a diode to the voltage (V) across it and is given by:

\[I=I_{s}\Big (e^{\frac{V}{nV_{{\mathfrak {t}}}}}-1\Big ),\]

where I is the diode current, \(I_{s}\) is the reverse saturation current, V is the voltage across the diode, n is the ideality factor, and \(V_{{\mathfrak {t}}}\) is the thermal voltage, approximately 26 mV at room temperature.
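The Shockley relation, \(I=I_{s}(e^{V/(nV_{{\mathfrak {t}}})}-1)\), can be evaluated with a few lines of Python. The default parameter values below (a saturation current of \(10^{-12}\) A and an ideality factor of 1) are hypothetical and serve only to make the sketch runnable; the function name is likewise illustrative.

```python
import math


def shockley_current(v, i_s=1e-12, n=1.0, v_t=0.026):
    """Diode current I = I_s * (exp(V / (n * V_t)) - 1).

    i_s and n are hypothetical defaults; v_t = 0.026 V is the
    room-temperature thermal voltage quoted in the text.
    math.expm1 keeps the result accurate when V is close to zero.
    """
    return i_s * math.expm1(v / (n * v_t))


current_at_zero = shockley_current(0.0)
current_forward = shockley_current(0.6)
current_reverse = shockley_current(-1.0)
```

The strong asymmetry between `current_forward` and `current_reverse` is the nonlinearity that prevents the circuit from being described by a linear differential equation.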

Now, let’s consider an RLC circuit with a voltage source \(V_{s}({\mathfrak {t}})\), a resistor R, an inductor L, a capacitor C, and a diode D connected in series. The voltage across the diode \(V_{D}({\mathfrak {t}})\) can be expressed as:

The current-voltage relation for the diode gives us:

Substituting this expression for I(R) into the equation for \(V_{D}({\mathfrak {t}})\) yields

By Faraday’s law of electromagnetic induction, we know that the voltage across an inductor is given by the rate of change of current with respect to time multiplied by the inductance.

Therefore, we can rewrite \(L\frac{dI}{d{\mathfrak {t}}}\) as \(V_{L}({\mathfrak {t}})\), the voltage across the inductor:

Now, the problem is to solve for \(V_{D}({\mathfrak {t}})\) in terms of an integral equation. We can rewrite the equation in integral form by expressing \(V_{L}({\mathfrak {t}})\) as an integral operator:

This integral equation represents the voltage across the diode in terms of an integral of its own voltage over time, along with the input voltage \(V_{s}({\mathfrak {t}})\) and the nonlinear term involving the diode current.

Let \({\mathscr {M}}\) be the space of continuous functions on a closed interval [0, T]. Let us define \({\mathcal {E}}_{s}:{\mathscr {M}}\times {\mathscr {M}}\rightarrow {\mathbb {R}}\) by \({\mathcal {E}}_{s}(\vartheta ({\mathfrak {t}}),\varrho ({\mathfrak {t}})) =\sup _{{\mathfrak {t}}\in [0,T]}\{|\vartheta ({\mathfrak {t}})-\varrho ({\mathfrak {t}})|\}\).

Note that \(({\mathscr {M}},{\mathcal {E}}_{s})\) is a complete \({\mathcal {E}}_{s}\)-space with \(b=2\) and \(\gamma (\vartheta ({\mathfrak {t}}),\varrho ({\mathfrak {t}})) =e^{\vartheta ({\mathfrak {t}})+\varrho ({\mathfrak {t}})}\) for all \(\vartheta ({\mathfrak {t}}),\varrho ({\mathfrak {t}})\in {\mathscr {M}}\), where \(\gamma :{\mathscr {M}}\times {\mathscr {M}}\rightarrow [1,\infty )\).

Theorem 3.3

Let \({\mathscr {H}}:{\mathscr {M}}\rightarrow {\mathscr {M}}\) be the operator defined as

Then the Eq. (3.5) admits a unique solution if the following assumptions hold:

- \(\delta\) is chosen such that \(|V_{D}-V^{\prime }_{D}|<\delta\);

- \(e^{\frac{\delta }{nV_{{\mathfrak {t}}}}}-1<\frac{1-\frac{T}{L}}{I_{s}R}\).

Proof

Let \(\vartheta ({\mathfrak {t}}),\varrho ({\mathfrak {t}})\in {\mathscr {M}}\). Now consider

Now, let’s define \(k(=\frac{\eta }{b})\) such that:

For \(k<1\), we need,

Now if \(|V_{D}-V^{\prime }_{D}|<\delta\), where \(\delta\) is such that \(e^{\frac{\delta }{nV_{{\mathfrak {t}}}}}-1<\frac{1-\frac{T}{L}}{I_{s}R}\), then \(k<1\), and thus \({\mathscr {H}}\) satisfies Eq. (2.1). Therefore, Theorem 2.1 guarantees the existence and uniqueness of the solution to the integral equation (3.5) associated with an RLC electrical circuit equation with a nonlinear element. \(\square\)
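Solving the condition \(e^{\frac{\delta }{nV_{{\mathfrak {t}}}}}-1<\frac{1-\frac{T}{L}}{I_{s}R}\) for \(\delta\) gives \(\delta <nV_{{\mathfrak {t}}}\ln \big (1+\frac{1-\frac{T}{L}}{I_{s}R}\big )\), which can only hold for some \(\delta >0\) when \(T<L\). The Python sketch below evaluates this bound. The numerical parameter values are hypothetical and chosen only to make the example runnable, and the function name is an assumption.

```python
import math


def delta_upper_bound(n, v_t, T, L, i_s, R):
    """Supremum of delta satisfying exp(delta/(n*v_t)) - 1 < (1 - T/L)/(i_s*R)."""
    bound = (1.0 - T / L) / (i_s * R)
    if bound <= 0.0:
        raise ValueError("the condition cannot hold for delta > 0 unless T < L")
    # log1p(x) = ln(1 + x), evaluated accurately for small and large x.
    return n * v_t * math.log1p(bound)


# Hypothetical values: n = 1, V_t = 0.026 V, T = 0.05, L = 0.1 H,
# I_s = 1e-12 A, R = 50 ohms.
delta_sup = delta_upper_bound(n=1.0, v_t=0.026, T=0.05, L=0.1, i_s=1e-12, R=50.0)
```

Any \(\delta\) strictly below `delta_sup` satisfies the second assumption of Theorem 3.3 for these illustrative parameters.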

Open questions

- What additional conditions are required to prove the existence of a solution, and to estimate the distortion, of Chua’s circuit [32] using the above results in \({\mathcal {E}}_{s}\)-space?

- Prove or disprove the existence of a Lagrangian formulation for Chua’s circuit through distance spaces.

Data availability

The data sets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Bhaskar, G. & Lakshmikantham, V. Fixed point theorems in partially ordered metric spaces and applications. Nonlinear Anal. 65(7), 1379–1393 (2006).

Choudhury, B. S., Metiya, N. & Postolache, M. A generalized weak contraction principle with applications to coupled coincidence point problems. Fixed Point Theory Appl. 2013(152), 1–21 (2013).

Ciric, L. B. A generalization of Banach’s contraction principle. Proc. Amer. Math. Soc. 45, 267–273 (1974).

Suzuki, T. A generalized Banach contraction principle that characterizes metric completeness. Proc. Amer. Math. Soc. 136(5), 1861–1869 (2008).

Bakhtin, I. A. The contraction mapping principle in almost metric spaces. Funct. Anal. 30, 26–37 (1989).

Czerwik, S. Contraction mappings in \(b\)-metric spaces. Acta Math. Inform. Univ. Ostraviensis 1, 5–11 (1993).

Berzig, M. Nonlinear contraction in \(b\)-suprametric spaces. Preprint at https://doi.org/10.48550/arXiv.2304.08507 (2023).

Kamran, T., Samreen, M. & Ain, U. Q. A generalization of \(b\)-metric space and some fixed point theorems. Mathematics 5, 19. https://doi.org/10.3390/math5020019 (2017).

Panda, S. K., Kalla, K. S., Nagy, A. M. & Priyanka, L. Numerical simulations and complex valued fractional order neural networks via \((\varepsilon -\mu )\)-uniformly contractive mappings. Chaos Solitons Fractals 173, 113738 (2023).

Panda, S. K., Karapınar, E. & Atangana, A. A numerical schemes and comparisons for fixed point results with applications to the solutions of Volterra integral equations in dislocated extended \(b\)-metric space. Alex. Eng. J. 59(2), 815–827 (2020).

Panda, S. K., Agarwal, R. P. & Karapınar, E. Extended suprametric spaces and Stone-type theorem. AIMS Math. 8(10), 23183–23199. https://doi.org/10.3934/math.20231179 (2023).

Das, A. et al. An existence result for an infinite system of implicit fractional integral equations via generalized Darbo’s fixed point theorem. Comp. Appl. Math. 40, 143. https://doi.org/10.1007/s40314-021-01537-z (2021).

Panda, S. K. et al. Chaotic attractors and fixed point methods in piecewise fractional derivatives and multi-term fractional delay differential equations. Results Phys. 46, 106313. https://doi.org/10.1016/j.rinp.2023.106313 (2023).

Ravichandran, C. et al. On new approach of fractional derivative by Mittag-Leffler kernel to neutral integro-differential systems with impulsive conditions. Chaos Solitons Fractals 139, 110012 (2020).

Panda, S. K. et al. Enhancing automic and optimal control systems through graphical structures. Sci. Rep. 14, 3139. https://doi.org/10.1038/s41598-024-53244-4 (2024).

Panda, S. K., Atangana, A. & Nieto, J. J. New insights on novel coronavirus 2019-NcoV/Sars-Cov-2 modelling in the aspect of fractional derivatives and fixed points. Math. Biosci. Eng. 18(6), 8683–8726 (2021).

Panda, S. K., Abdeljawad, T. & Ravichandran, C. Novel fixed point approach to Atangana-Baleanu fractional and \(L_{p}\)-Fredholm integral equations. Alex. Eng. J. 59(4), 1959–1970 (2020).

Berzig, M. First results in suprametric spaces with applications. Mediterr. J. Math. 19(5), 226 (2022).

Zorica, D. & Cveticanin, S. M. Dissipative and generative fractional RLC circuits in the transient regime. Appl. Math. Comput. 459, 128227 (2023).

Peelo, D. F. RLC circuits. In Current Interruption Transients Calculation 9–34 (IEEE, 2020). https://doi.org/10.1002/9781119547273.ch2.

Ilchenko, M. Y. et al. Modeling of electromagnetically induced transparency with RLC circuits and metamaterial cell. IEEE Trans. Microw. Theory Tech. 71(12), 5104–5110. https://doi.org/10.1109/TMTT.2023.3275653 (2023).

Naveen, S., Parthiban, V. & Abbas, M. I. Qualitative analysis of RLC circuit described by Hilfer derivative with numerical treatment using the Lagrange polynomial method. Fractal Fract. 7, 804. https://doi.org/10.3390/fractalfract7110804 (2023).

Rahman, M. M., Hakim, M. A., Hasan, M. K., Alam, M. K. & Ali, L. N. Numerical solution of Volterra integral equations of second kind with the help of Chebyshev polynomials. Ann. Pure Appl. Math. 1(2), 158–167 (2012).

Matkowski, J. Integrable solutions of functional equations. Dissertations Math. 127, 1–68 (1975).

Berinde, V. Contractii Generalizate si Aplicatii (Cub Press, 1997).

Berinde, V. Generalized contractions in quasimetric spaces. In Seminar on Fixed Point Theory Vol. 3 (ed. Berinde, V.) 3–9 (Babes-Bolyai University of Cluj-Napoca, 1993).

Rus, I. A. Generalized Contractions and Applications (Cluj University Press, 2001).

Von Karman, T. The engineer grapples with nonlinear problems. Bull. Am. Math. Soc. 46, 6–15 (1940).

Pipes, L. A. A mathematical analysis of a dielectric amplifier. J. Appl. Phys. 23(8), 818–824 (1952).

Hammad, H. A. & De la Sen, M. Fixed-Point results for a generalized almost \((s, q)\)-Jaggi \(F\)-contraction-type on \(b\)-metric-like spaces. Mathematics 8, 63. https://doi.org/10.3390/math8010063 (2020).

Ameer, E., Aydi, H., Arshad, M. & De la Sen, M. Hybrid Ćirić type graphic \(\Upsilon ,\Lambda\)-contraction mappings with applications to electric circuit and fractional differential equations. Symmetry 12, 467. https://doi.org/10.3390/sym12030467 (2020).

Madan, R. N. Chua’s Circuit: A Paradigm for Chaos (World Scientific Publishing Company, 1993).

Author information

Contributions

S.K.P., V.V., I.K., and S.N., collaborated on the design, implementation, investigation, and writing of the research paper in accordance with the results.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Panda, S.K., Velusamy, V., Khan, I. et al. Computation and convergence of fixed-point with an RLC-electric circuit model in an extended b-suprametric space. Sci Rep 14, 9479 (2024). https://doi.org/10.1038/s41598-024-59859-x

DOI: https://doi.org/10.1038/s41598-024-59859-x
