Abstract

With the improving sensitivity of the global network of gravitational-wave detectors, we expect to observe hundreds of transient gravitational-wave events per year. The current methods used to estimate their source parameters employ optimally sensitive but computationally costly Bayesian inference approaches, where typical analyses have taken between 6 h and 6 d. For binary neutron star and neutron star–black hole systems, prompt counterpart electromagnetic signatures are expected on timescales between 1 s and 1 min. However, the current fastest method for alerting electromagnetic follow-up observers provides estimates on a timescale of the order of 1 min, and only for a limited range of key source parameters. Here, we show that a conditional variational autoencoder pretrained on binary black hole signals can return Bayesian posterior probability estimates. The training procedure need only be performed once for a given prior parameter space and the resulting trained machine can then generate samples describing the posterior distribution around six orders of magnitude faster than existing techniques.

Data availability

We provide the input test data waveforms as well as the trained ML model on the Harvard Dataverse at the following publicly available link: https://doi.org/10.7910/DVN/DECSMV

Code availability

We have made the entirety of the code used to produce the results (and Bilby posteriors) publicly available at the following GitHub repository: https://github.com/hagabbar/vitamin_c

References

George, D. & Huerta, E. Deep learning for real-time gravitational wave detection and parameter estimation: results with advanced LIGO data. Phys. Lett. B 778, 64–70 (2018).

Gabbard, H., Williams, M., Hayes, F. & Messenger, C. Matching matched filtering with deep networks for gravitational-wave astronomy. Phys. Rev. Lett. 120, 141103 (2018).

Gebhard, T., Kilbertus, N., Parascandolo, G., Harry, I. & Schölkopf, B. ConvWave: searching for gravitational waves with fully convolutional neural nets. In Workshop on Deep Learning for Physical Sciences (DLPS) at the 31st Conference on Neural Information Processing Systems (NIPS) (eds Angus, R. et al.) 13 (Curran, 2017).

Searle, A. C., Sutton, P. J. & Tinto, M. Bayesian detection of unmodeled bursts of gravitational waves. Class. Quantum Gravity 26, 155017 (2009).

Skilling, J. Nested sampling for general Bayesian computation. Bayesian Anal. 1, 833–859 (2006).

Veitch, J. et al. johnveitch/cpnest: v0.11.3 (2021). https://doi.org/10.5281/zenodo.4470001

Speagle, J. S. dynesty: a dynamic nested sampling package for estimating Bayesian posteriors and evidences. Mon. Not. R. Astron. Soc. 493, 3132–3158 (2020).

Foreman-Mackey, D., Hogg, D. W., Lang, D. & Goodman, J. emcee: the MCMC hammer. Publ. Astron. Soc. Pac. 125, 306–312 (2013).

Vousden, W. D., Farr, W. M. & Mandel, I. Dynamic temperature selection for parallel tempering in Markov chain Monte Carlo simulations. Mon. Not. R. Astron. Soc. 455, 1919–1937 (2016).

Veitch, J. et al. Parameter estimation for compact binaries with ground-based gravitational-wave observations using the LALInference software library. Phys. Rev. D 91, 042003 (2015).

Ashton, G. et al. Bilby: a user-friendly Bayesian inference library for gravitational-wave astronomy. Astrophys. J. Suppl. Ser. 241, 27 (2019).

Zevin, M. et al. Gravity spy: integrating advanced LIGO detector characterization, machine learning, and citizen science. Class. Quantum Gravity 34, 064003 (2017).

Coughlin, M. et al. Limiting the effects of earthquakes on gravitational-wave interferometers. Class. Quantum Gravity 34, 044004 (2017).

Graff, P., Feroz, F., Hobson, M. P. & Lasenby, A. BAMBI: blind accelerated multimodal Bayesian inference. Mon. Not. R. Astron. Soc. 421, 169–180 (2012).

Chua, A. J. K. & Vallisneri, M. Learning Bayesian posteriors with neural networks for gravitational-wave inference. Phys. Rev. Lett. 124, 041102 (2020).

Green, S. R., Simpson, C. & Gair, J. Gravitational-wave parameter estimation with autoregressive neural network flows. Phys. Rev. D 102, 104057 (2020).

Green, S. R. & Gair, J. Complete parameter inference for GW150914 using deep learning. Mach. Learning Sci. Technol. 2, 03LT01 (2021).

Cranmer, K., Brehmer, J. & Louppe, G. The frontier of simulation-based inference. Proc. Natl Acad. Sci. USA 117, 30055–30062 (2020).

Tonolini, F., Radford, J., Turpin, A., Faccio, D. & Murray-Smith, R. Variational inference for computational imaging inverse problems. J. Mach. Learning Res. 21, 1–46 (2020).

Sohn, K., Lee, H. & Yan, X. Learning structured output representation using deep conditional generative models. In Advances in Neural Information Processing Systems 28 (eds Cortes, C. et al.) 3483–3491 (Curran, 2015).

Yan, X., Yang, J., Sohn, K. & Lee, H. Attribute2image: conditional image generation from visual attributes. In Computer Vision—ECCV 2016 (eds Leibe, B. et al.) 776–791 (Springer, Cham, Switzerland, 2016).

Nguyen, A., Clune, J., Bengio, Y., Dosovitskiy, A. & Yosinski, J. Plug & play generative networks: conditional iterative generation of images in latent space. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (eds Agapito, L. et al.) 3510–3520 (IEEE, 2017).

Nazábal, A., Olmos, P. M., Ghahramani, Z. & Valera, I. Handling incomplete heterogeneous data using VAEs. Pattern Recognit. 107, 107501 (2020).

Advanced LIGO Sensitivity Design Curve (accessed 1 June 2019); https://dcc.ligo.org/LIGO-T1800044/public

Khan, S., Chatziioannou, K., Hannam, M. & Ohme, F. Phenomenological model for the gravitational-wave signal from precessing binary black holes with two-spin effects. Phys. Rev. D 100, 024059 (2019).

Abbott, B. P. et al. GW170817: observation of gravitational waves from a binary neutron star inspiral. Phys. Rev. Lett. 119, 161101 (2017).

Abbott, B. P. et al. GW190425: observation of a compact binary coalescence with total mass ~3.4 M☉. Astrophys. J. Lett. 892, L3 (2020).

Abbott, R. et al. Observation of gravitational waves from two neutron star–black hole coalescences. Astrophys. J. Lett. 915, L5 (2021).

Singer, L. P. & Price, L. R. Rapid Bayesian position reconstruction for gravitational-wave transients. Phys. Rev. D 93, 024013 (2016).

Abbott, B. P. et al. Prospects for observing and localizing gravitational-wave transients with Advanced LIGO, Advanced Virgo and KAGRA. Living Rev. Relativ. 21, 3 (2018).

Littenberg, T. B. & Cornish, N. J. Bayesian inference for spectral estimation of gravitational wave detector noise. Phys. Rev. D 91, 084034 (2015).

Smith, R. et al. Fast and accurate inference on gravitational waves from precessing compact binaries. Phys. Rev. D 94, 044031 (2016).

Wysocki, D., O’Shaughnessy, R., Lange, J. & Fang, Y.-L. L. Accelerating parameter inference with graphics processing units. Phys. Rev. D 99, 084026 (2019).

Talbot, C., Smith, R., Thrane, E. & Poole, G. B. Parallelized inference for gravitational-wave astronomy. Phys. Rev. D 100, 043030 (2019).

Pankow, C., Brady, P., Ochsner, E. & O’Shaughnessy, R. Novel scheme for rapid parallel parameter estimation of gravitational waves from compact binary coalescences. Phys. Rev. D 92, 023002 (2015).

Gallinari, P., LeCun, Y., Thiria, S. & Soulie, F. F. Mémoires associatives distribuées: une comparaison [Distributed associative memories: a comparison]. In Proceedings of COGNITIVA 87, Paris, La Villette, May 1987 (eds Carroll, J. et al.) (Cesta-Afcet, 1987).

Pagnoni, A., Liu, K. & Li, S. Conditional variational autoencoder for neural machine translation. Preprint at https://arxiv.org/abs/1812.04405 (2018).

Jones, D. I. Parameter choices and ranges for continuous gravitational wave searches for steadily spinning neutron stars. Mon. Not. R. Astron. Soc. 453, 53–66 (2015).

Wang, Q., Kulkarni, S. R. & Verdu, S. Divergence estimation for multidimensional densities via k-nearest-neighbor distances. IEEE Trans. Inf. Theory 55, 2392–2405 (2009).

Acknowledgements

We acknowledge valuable input from the LIGO–Virgo Collaboration, specifically from W. Farr, T. Dent, J. Kanner, A. Nitz, C. Capano and the parameter estimation and machine-learning working groups. We additionally thank S. Marka for posing this challenge to us. We thank Nvidia for the generous donation of a Tesla V100 GPU used in addition to LIGO–Virgo Collaboration computational resources. We also gratefully acknowledge the Science and Technology Facilities Council of the UK. C.M. and I.S.H. are supported by the Science and Technology Research Council (grant ST/L000946/1) and the European Cooperation in Science and Technology (COST) action CA17137. F.T. acknowledges support from Amazon Research and EPSRC grant EP/M01326X/1, and R.M.-S. acknowledges support from EPSRC grants EP/M01326X/1, EP/T00097X/1 and EP/R018634/1.

Author information

Contributions

All authors contributed equally to the work of this manuscript. The work was primarily supervised by C.M., I.S.H. and R.M.-S.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Physics thanks Danilo Jimenez Rezende, Rory Smith and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

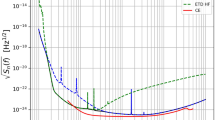

Extended Data Fig. 1 The cost as a function of training epoch.

We show the total cost function (magenta) together with its component parts: the KL-divergence component (purple) and the reconstruction component (blue), which are simply summed to obtain the total. The dark curves correspond to the cost computed on each batch of training data and the lighter curves represent the cost when computed on independent validation data. The close agreement between training and validation cost values indicates that the network is not overfitting to the training data. The change in behaviour of the cost between \(10^{2}\) and \(3\times 10^{2}\) epochs is a consequence of gradually introducing the KL cost term contribution via an annealing process.
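The annealing described in this caption can be illustrated with a minimal sketch. The caption states only that the KL term is introduced gradually between roughly 100 and 300 epochs; the ramp shape used here (linear in the logarithm of the epoch) and the names `kl_weight` and `total_cost` are assumptions made for illustration, not the authors' implementation.

```python
import math


def kl_weight(epoch, start=100, end=300):
    """Hypothetical annealing weight for the KL term.

    Returns 0 up to `start`, 1 from `end` onwards, and rises linearly in
    log10(epoch) in between. The ramp shape is an assumption.
    """
    if epoch <= start:
        return 0.0
    if epoch >= end:
        return 1.0
    span = math.log10(end) - math.log10(start)
    return (math.log10(epoch) - math.log10(start)) / span


def total_cost(reconstruction, kl, epoch):
    """Reconstruction cost plus the annealed KL cost.

    Once the weight reaches 1 the two components are simply summed.
    """
    return reconstruction + kl_weight(epoch) * kl
```

With a schedule of this kind, the total cost tracks the reconstruction term alone during early training and changes behaviour as the KL contribution is switched on, which is consistent with the feature the caption points out.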

Extended Data Table 1 The VItamin network hyper-parameters.

Dashed lines ‘—’ indicate that convolutional layers are shared between all 3 networks. Each column from left to right is representative of the \({r}_{{\theta }_{1}}(z| y)\), \({r}_{{\theta }_{2}}(x| y,z)\) and \({q}_{\phi }(z| x,y)\) networks and each row denotes a different layer. a The shape of the data [one-dimensional dataset length, No. channels]. b One-dimensional convolutional filter with arguments (filter size, No. channels, No. filters). c L2 regularization function applied to the kernel weights matrix. d The activation function used. e Striding layer with arguments (stride length). f Take the multichannel output of the previous layer and reshape it into a one-dimensional vector. g Append the argument to the current dataset. h Fully connected layer with arguments (input size, output size). i The \({r}_{{\theta }_{1}}\) output has size [latent space dimension, No. modes, No. parameters defining each component per dimension]. j Different activations are used for different parameters. For the scaled parameter means we use sigmoids and for log-variances we use negative ReLU functions. k The \({r}_{{\theta }_{2}}\) output has size [physical space dimension+additional cyclic dimensions, No. parameters defining the distribution per dimension]. The additional cyclic dimensions account for the 2 parameters where each cyclic parameter is represented in the abstract 2D plane. l The \({q}_{\phi }\) output has size [latent space dimension, No. parameters defining the distribution per dimension].
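Footnotes j and k can be made concrete with a short sketch. It assumes that "negative ReLU" means the negated ReLU, −max(0, v), so that log-variances are non-positive, and that a cyclic parameter is mapped to the point (cos θ, sin θ) in the 2D plane. Both readings, and all function names below, are illustrative assumptions rather than the authors' code.

```python
import math


def sigmoid(v):
    """Squash a raw output into (0, 1); used here for scaled parameter means."""
    return 1.0 / (1.0 + math.exp(-v))


def negative_relu(v):
    """Assumed form of the 'negative ReLU': -max(0, v), always <= 0."""
    return -max(0.0, v)


def encode_cyclic(angle):
    """Represent an angle in radians as a point on the unit circle."""
    return math.cos(angle), math.sin(angle)


def decode_cyclic(c, s):
    """Recover an angle in [0, 2*pi) from a 2D point (need not be normalised)."""
    return math.atan2(s, c) % (2.0 * math.pi)
```

A representation of this kind removes the artificial discontinuity at the ends of the angular range: averaging the encoded points for angles 0.1 and 2π − 0.1 decodes to an angle at the wrap-around point, whereas averaging the raw angles would give π.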

Extended Data Table 2 Benchmark sampler configuration parameters.

Columns are denoted from left to right as the sampler name and the run configuration parameters for that sampler. Each row is representative of a different sampler. Parameter values were chosen based on a combination of their recommended default parameters (ref. 11) and private communication with the Bilby development team.

About this article

Cite this article

Gabbard, H., Messenger, C., Heng, I.S. et al. Bayesian parameter estimation using conditional variational autoencoders for gravitational-wave astronomy. Nat. Phys. 18, 112–117 (2022). https://doi.org/10.1038/s41567-021-01425-7

DOI: https://doi.org/10.1038/s41567-021-01425-7

This article is cited by

- Advancing space-based gravitational wave astronomy: Rapid parameter estimation via normalizing flows. Science China Physics, Mechanics & Astronomy (2024)

- Deep learning waveform anomaly detector for numerical relativity catalogs. General Relativity and Gravitation (2024)

- Space-based gravitational wave signal detection and extraction with deep neural network. Communications Physics (2023)

- Advances in machine-learning-based sampling motivated by lattice quantum chromodynamics. Nature Reviews Physics (2023)

- Scientific discovery in the age of artificial intelligence. Nature (2023)