Abstract

Random quantum circuits have been utilized in the contexts of quantum supremacy demonstrations, variational quantum algorithms for chemistry and machine learning, and black hole information. The ability of random circuits to approximate random unitaries has consequences for their complexity, expressibility, and trainability. To study this property of random circuits, we develop numerical protocols for estimating the frame potential, which measures the distance between a given ensemble and exact randomness. Our tensor-network-based algorithm has polynomial complexity for shallow circuits and achieves high performance using CPU and GPU parallelism. We study (1) local and parallel random circuits, to verify the linear growth in complexity as stated by the Brown–Susskind conjecture, and (2) hardware-efficient ansätze, to shed light on their expressibility and the barren plateau problem in the context of variational algorithms. Our work shows that large-scale tensor network simulations could provide important hints toward open problems in quantum information science.

Introduction

Quantum computing might significantly improve the computational power available to current information technologies1,2,3. In the noisy intermediate-scale quantum (NISQ) era, an important question for near-term quantum computing is whether quantum devices can realize a strong computational advantage over existing classical devices and solve hard problems that no existing classical computer can solve4. Recently, Google and the University of Science and Technology of China claimed to have realized quantum advantage on their quantum devices, in experiments involving random circuit sampling and boson sampling, respectively5,6, challenging the extended Church–Turing thesis. These experiments are considered milestones toward full-scale quantum computing. Another recent study suggests the possibility of achieving quantum advantage in runtime over specialized state-of-the-art heuristic algorithms for the Maximum Independent Set problem using Rydberg atom arrays7.

Despite the great experimental success of quantum devices, the capability of classical computation is also developing rapidly. It is interesting and important to ask where the boundary of classical computation of the same process lies and to understand the underlying physics of the quantum supremacy experiments through classical simulation5. Tensor network methods are incredibly useful for simulating quantum circuits8,9,10. Originally developed for approximately solving ground states of quantum many-body systems, tensor network methods find approximate solutions when the bond dimension of the contracted tensors and the required entanglement of the system are under control8. Tensor network methods are also widely used for investigating sampling experiments with random quantum architectures, which are helpful for verifying the quantum supremacy experiments11,12,13,14.

In this work, we develop tensor network methods and perform classical random circuit sampling experiments up to 50 qubits. Random circuit sampling experiments are important components of near-term characterizations of quantum advantage15. Ensembles of random circuits could provide implementable constructions of approximate unitary k-designs16,17,18, quantum information scramblers19, solvable many-body physics models20, predictable variational ansätze for quantum machine learning21,22,23, good quantum decouplers for quantum channel and quantum error correction codes24,25, and efficient representatives of quantum randomness. To measure how close a given random circuit ensemble is to Haar-uniform randomness over the unitary group, we develop algorithms to evaluate the frame potential, the 2-norm distance toward full Haar randomness26,27,28. The frame potential is a user-friendly measure of how random a given ensemble is in terms of operator norms: the smaller the frame potential is, the more chaotic and more complicated the ensembles are, and the more easily we can achieve computational advantages29,30. In fact, in certain quantum cryptographic tools, concepts identical or similar to approximate k-designs are used, making use of the exponential separation of complexities between classical and quantum computations31,32,33,34,35,36,37,38.

It is critical to perform simulations of quantum circuits efficiently. To achieve this, we use an efficient tensor network contraction algorithm developed in the QTensor package39,40,41. QTensor is optimized to simulate large quantum circuits on supercomputers. For this project, we implemented a modified tensor network and fully utilized QTensor’s ability to simulate quantum circuits efficiently at scale.

In particular, we show the following applications of our computational tools. First, we evaluate the k-design time of the local and parallel random circuits through the frame potential. A long-term open problem is to prove the linear scrambling property of random circuits, where they approach approximate k-designs at depth \({{{\mathcal{O}}}}(nk)\) with n qudits16,17,18,29,31,42,43,44,45,46,47. Although lower and upper bounds are given, there is no known proof of the k-design time for general local dimension q and k ≥ 318,47. According to Brandão et al.47, the linear increase of the k-design time will lead to a proof of the Brown–Susskind conjecture, a statement where random circuits have linear growth of the circuit complexity with insights from black hole physics48,49. Recently, the complexity statement was proved in ref. 50 for a different definition of circuit complexity compared with ref. 47. Thus, a validation of the k-design time measured in the frame potential will immediately lead to an alternative verification of the Brown–Susskind conjecture, with the complexity defined in ref. 47. Using our tools, we verify the linear scaling of the k-design time up to 50 qubits and q = 2. Our research also provides important data on the prefactors beyond scaling through numerical simulations, which will be helpful to further the understanding of theoretical computer scientists.

Moreover, we use our tools to evaluate the frame potential of randomized hardware-efficient variational ansätze used in ref. 21. Barren plateau is a term referring to the slowness of the variational angle updates during the gradient descent dynamics of quantum machine learning. When the variational ansätze for variational quantum simulation, variational quantum optimization, and quantum machine learning51,52,53,54,55,56,57,58,59,60,61,62,63 are random enough, the gradient descent updates of variational angles will be suppressed by the dimension of Hilbert space, requiring exponential precision to implement quantum control of variational angles23. The quadratic fluctuations considered in ref. 21 will be suppressed under the assumption of a 2-design, which is claimed to be satisfied by their hardware-efficient variational ansätze. For higher moments, higher k-designs are required. A study of how far a given variational ensemble is from a unitary k-design is important to understanding how large the barren plateau is and how to mitigate it through designs of variational circuits. In our work, we verify, up to several values of k, that randomized hardware-efficient ansätze are efficient scramblers: the frame potential decays exponentially in the circuit depth, and non-diagonal entangling gates are more efficient.

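To familiarize the reader with the theoretical framework of our work, we begin with a formal introduction to the frame potential. Given an ensemble \({{{\mathcal{E}}}}\) of unitaries with a probability measure, we are interested in its randomness and closeness to the unitary group. Truly random unitaries from the unitary group are distributed according to the Haar measure. Such closeness is measured by how well the ensemble approximates the first k moments of the unitary group. To this end, a k-fold twirling channel

\[{\Phi }_{{{{\mathcal{E}}}}}^{(k)}(\rho )=\int_{{{{\mathcal{E}}}}}dU\,{U}^{\otimes k}\,\rho \,{({U}^{{\dagger} })}^{\otimes k} \tag{1}\]

is defined for the ensemble. If the unitary ensemble approximates the kth moment of the unitary group, the distance between the k-fold channel defined for the ensemble and that defined for the Haar unitaries (measured by the diamond norm) is bounded by ϵ:

\[{\left\Vert {\Phi }_{{{{\mathcal{E}}}}}^{(k)}-{\Phi }_{{{{\rm{Haar}}}}}^{(k)}\right\Vert }_{\diamond }\le \epsilon . \tag{2}\]

Such \({{{\mathcal{E}}}}\) is said to be an ϵ-approximate k-design. The diamond norm of the channels is not numerically friendly, however. A quantity more suitable for numerical evaluation, which is also discussed in the context of k-designs, is the frame potential \({{{\mathcal{F}}}}\), given by64

\[{{{{\mathcal{F}}}}}_{{{{\mathcal{E}}}}}^{(k)}=\int_{U,V\in {{{\mathcal{E}}}}}dU\,dV\,{\left\vert {{{\rm{Tr}}}}({U}^{{\dagger} }V)\right\vert }^{2k}. \tag{3}\]

Specifically, it relates to the aforementioned definition of ϵ-approximate k-designs as follows18:

\[{\left\Vert {\Phi }_{{{{\mathcal{E}}}}}^{(k)}-{\Phi }_{{{{\rm{Haar}}}}}^{(k)}\right\Vert }_{\diamond }^{2}\le {d}^{2k}\left({{{{\mathcal{F}}}}}_{{{{\mathcal{E}}}}}^{(k)}-{{{{\mathcal{F}}}}}_{{{{\rm{Haar}}}}}^{(k)}\right), \tag{4}\]

where \(d={q}^{n}\) is the Hilbert space dimension, q is the local dimension of the qudits, and \({{{{\mathcal{F}}}}}_{{{{\rm{Haar}}}}}^{(k)}=k!\).

If we obtain the frame potential \({{{{\mathcal{F}}}}}_{{{{\mathcal{E}}}}}^{(k)}\), we are guaranteed to have at least an \({\epsilon }_{\max }\)-approximate k-design, where

\[{\epsilon }_{\max }={d}^{k}\sqrt{{{{{\mathcal{F}}}}}_{{{{\mathcal{E}}}}}^{(k)}-{{{{\mathcal{F}}}}}_{{{{\rm{Haar}}}}}^{(k)}}. \tag{5}\]

Similarly, we have the following condition for the ensemble to be an ϵ-approximate k-design:

\[{{{{\mathcal{F}}}}}_{{{{\mathcal{E}}}}(l)}^{(k)}-{{{{\mathcal{F}}}}}_{{{{\rm{Haar}}}}}^{(k)}\le \frac{{\epsilon }^{2}}{{d}^{2k}}, \tag{6}\]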

where the ensemble \({{{\mathcal{E}}}}(l)\) depends on the number of layers l. Assuming an exponentially decreasing frame potential approaching the Haar value, we have

Under this assumption, A and C could still have n and k dependence. Therefore, in order for l to scale linearly in n and k, A cannot be exponential, and C must be sublinear.
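As a rough numerical illustration of how a frame potential value translates into an ϵ guarantee, the following sketch assumes the standard bound \({\epsilon }^{2}\le {d}^{2k}({{{\mathcal{F}}}}-k!)\) with \(d={q}^{n}\). The function names are hypothetical and are not part of QTensor.

```python
import math


def haar_frame_potential(k: int) -> float:
    """Haar value of the k-th frame potential, k! (valid for k <= d)."""
    return float(math.factorial(k))


def epsilon_max(frame_potential: float, k: int, n: int, q: int = 2) -> float:
    """Largest epsilon compatible with a measured frame potential.

    Assumes the bound epsilon^2 <= d^(2k) * (F - k!) with d = q^n.
    """
    d = q ** n
    gap = max(frame_potential - haar_frame_potential(k), 0.0)
    return d ** k * math.sqrt(gap)


def required_gap(epsilon: float, k: int, n: int, q: int = 2) -> float:
    """Frame potential excess over k! needed for an epsilon-approximate k-design."""
    d = q ** n
    return epsilon ** 2 / d ** (2 * k)


if __name__ == "__main__":
    # For 50 qubits the required excess is far below double precision,
    # which is why the layer count has to be extrapolated from a fit.
    print(required_gap(0.1, k=2, n=50))
```

The tiny value printed for n = 50 shows why the number of layers cannot be read off directly from sampled frame potentials and must instead be inferred from the fitted decay.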

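As an example, the exponential decay of \({{{{\mathcal{F}}}}}^{(2)}\) for the parallel random unitary ansatz is described by the bound18

\[{{{{\mathcal{F}}}}}^{(2)}\le 2{\left(1+{\left(\frac{2q}{{q}^{2}+1}\right)}^{2(l-1)}\right)}^{{n}_{g}-1}, \tag{10}\]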

where \({n}_{g}=\lfloor n/2\rfloor\). This is plotted in Fig. 1. For fixed ϵ, this leads to a linear scaling of l in n, given by

\[l\ge C\left(2n\log q+\log n+\log \frac{1}{\epsilon }\right), \tag{11}\]

where \(C={\left(\log \frac{{q}^{2}+1}{2q}\right)}^{-1}\) is independent of n. We emphasize that linear scaling in n is for fixed ϵ, not fixed \({{{\mathcal{F}}}}\).
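The constant C is easy to evaluate numerically. The short sketch below computes it for a given local dimension and, assuming that the leading term of the layer bound is \(2Cn\log q\) (an assumption consistent with the value 6.2 quoted later for q = 2), the corresponding slope in n. Function names are illustrative only.

```python
import math


def decay_constant(q: int) -> float:
    """C = 1 / log((q^2 + 1) / (2q)), using the natural logarithm."""
    return 1.0 / math.log((q * q + 1) / (2 * q))


def linear_slope(q: int) -> float:
    """Slope of the layer count in n, assuming a leading term 2 * C * n * log(q)."""
    return 2.0 * math.log(q) * decay_constant(q)


if __name__ == "__main__":
    print(f"C(q=2)     = {decay_constant(2):.3f}")
    print(f"slope(q=2) = {linear_slope(2):.3f}")
```

For qubits this gives C ≈ 4.48 and a slope of about 6.2 layers per qubit.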

Results

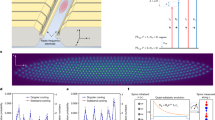

We obtain numerical results for ansätze with local dimension q = 2. Specifically, the frame potential values up to 50 qubits and k = 5 are evaluated. We compute the frame potentials for the local random unitary ansatz, the parallel random unitary ansatz, and hardware-efficient ansätze, all illustrated in Fig. 2.

All ansätze assume 1D nearest-neighbor connectivity. a Parallel random unitary ansatz. Each layer is a wall of two-qudit random unitaries on neighboring qudits, and the next layer is offset by 1 qudit. This creates a brickwork motif, and the gate count scales as O(ln). b Local random unitary ansatz. Each layer is a single two-qudit random unitary between a pair of randomly chosen neighboring qudits. The gate count scales as O(l). c Hardware-efficient ansatz. A wall of RY(π/4) rotations is followed by alternating layers of random Pauli rotations and controlled-NOT gates, all independently parameterized. Circuits with controlled-phase gates are also studied.

Algorithm description

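The unitary ensembles we are interested in are parameterized by a large number of parameters. Therefore, evaluating the integral is a high-dimensional integration problem, and a numerical Monte Carlo approach is suitable. We approximate the frame potential as the sample mean of the trace moments,

\[{{{{\mathcal{F}}}}}_{{{{\mathcal{E}}}}}^{(k)}\approx \frac{1}{N}\mathop{\sum }\limits_{i=1}^{N}{\left\vert {{{\rm{Tr}}}}({U}_{i}^{{\dagger} }{V}_{i})\right\vert }^{2k},\]

where \({U}_{i}\) and \({V}_{i}\) are sampled independently from the ensemble and N is the number of samples.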

Therefore, we need to evaluate the trace of the sampled unitaries on n target qudits.
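The Monte Carlo estimate can be sketched on small dense matrices as follows. This is only an illustration under stated assumptions: it builds full unitaries with NumPy rather than using tensor networks, and it samples Haar unitaries with the QR-based method, which is a stand-in and not the sampling scheme used in this work. The names `haar_unitary` and `estimate_frame_potential` are hypothetical.

```python
import numpy as np


def haar_unitary(dim, rng):
    """Sample a Haar-random unitary via QR of a complex Gaussian matrix."""
    z = (rng.standard_normal((dim, dim))
         + 1j * rng.standard_normal((dim, dim))) / np.sqrt(2)
    qmat, r = np.linalg.qr(z)
    # Fix the phase ambiguity of QR so the result is Haar distributed.
    phases = np.diagonal(r) / np.abs(np.diagonal(r))
    return qmat * phases  # scales column j by phases[j]


def estimate_frame_potential(sampler, k, num_samples, rng):
    """Sample mean and standard error of |Tr(U^dagger V)|^(2k)."""
    values = np.empty(num_samples)
    for i in range(num_samples):
        u = sampler(rng)
        v = sampler(rng)
        values[i] = np.abs(np.trace(u.conj().T @ v)) ** (2 * k)
    mean = values.mean()
    stderr = values.std(ddof=1) / np.sqrt(num_samples)
    return mean, stderr


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    for k in (1, 2):
        mean, err = estimate_frame_potential(
            lambda r: haar_unitary(4, r), k, 4000, rng)
        # For the Haar ensemble the exact value is k! (when k <= dim).
        print(f"k={k}: {mean:.3f} +/- {err:.3f}")
```

Because the summand is heavy-tailed for larger k, the standard error grows quickly with k, which is one reason large sample counts are needed.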

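A quantum circuit unitary \(U={U}_{1}{U}_{2}{U}_{3}\ldots\) is a tensor \({U}_{ijk\ldots }^{\alpha \beta \gamma \ldots }\), where i, j, k are input qudit indices and α, β, γ are output qudit indices. The trace of the unitary is

\[{{{\rm{Tr}}}}(U)=\mathop{\sum}\limits_{ijk\ldots }{U}_{ijk\ldots }^{ijk\ldots }.\]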

This is a tensor contraction operation that can be expressed as the tensor network in Fig. 3a. In this representation, each node is an index, and edges that form cliques are unitaries. This is different from tensor network representations that are more familiar from other works (MPS, MPO, MERA, etc.). For more details on the representation, see refs. 15,40,65. The circuit shown here is a parallel random unitary circuit with 4 qudits. For efficient contraction, when the number of qudits is large, the contraction order is along the direction indicated in Fig. 3 such that the maximum number of exposed indices is minimized.
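The idea of obtaining the trace by contracting gate tensors, without ever forming the full unitary, can be illustrated with `numpy.einsum` on a 4-qubit, two-layer brickwork circuit. This is a minimal sketch and not QTensor's contraction algorithm; the index labels and function names are chosen here for illustration.

```python
import numpy as np


def random_unitary(dim, rng):
    """A random unitary from QR (Haar distribution is not needed here)."""
    z = rng.standard_normal((dim, dim)) + 1j * rng.standard_normal((dim, dim))
    return np.linalg.qr(z)[0]


def trace_by_contraction(ga, gb, gc):
    """Trace of (I x gc x I)(ga x gb) by direct index contraction.

    Each 4x4 gate is reshaped to a tensor [out1, out2, in1, in2].
    Layer 1: ga on qubits (0, 1), gb on qubits (2, 3); layer 2: gc on (1, 2).
    Inputs are labeled a, b, c, d; f and g are the internal wires.
    Taking the trace identifies every output index with its input index.
    """
    ta, tb, tc = (g.reshape(2, 2, 2, 2) for g in (ga, gb, gc))
    return np.einsum("afab,gdcd,bcfg->", ta, tb, tc)


def trace_by_dense_matrix(ga, gb, gc):
    """Reference value from the explicit 16 x 16 circuit unitary."""
    layer1 = np.kron(ga, gb)
    layer2 = np.kron(np.eye(2), np.kron(gc, np.eye(2)))
    return np.trace(layer2 @ layer1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    gates = [random_unitary(4, rng) for _ in range(3)]
    print(trace_by_contraction(*gates), trace_by_dense_matrix(*gates))
```

The contraction touches only small tensors, whereas the dense reference scales exponentially in the number of qubits.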

a Graphical tensor network of the trace of a quantum circuit, where nodes are tensor indices and cliques are tensors. The i indices correspond to qubit inputs, and the o indices correspond to qubit outputs. The curves going above the circuit network are identities. The input and output indices can actually be merged together, but this is harder to illustrate. b Equivalent identity tensor that can be represented by gates, qubit initialization, and measurement. More details at the end of section 2.1. c Graphical tensor network representation of the same quantity using our formulation. d The quantum circuit used to evaluate traces as a single amplitude.

Directly implementing this tensor network requires modification of QTensor. We propose an alternative tensor network in Fig. 3c with similar topology that gives the trace as a single probability amplitude of the form \(\left\langle \psi \right\vert U\left\vert \psi \right\rangle\) for any basis state \(\left\vert \psi \right\rangle\). The quantum circuit to achieve this is illustrated in Fig. 3d, and we proceed with a proof.

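For simplicity, we describe the algorithm for q = 2 qubits. We assign an ancillary qubit to each target qubit. The quantum state of the n ancillary and n target qubits is initialized to the state

\[{\left\vert {{\Psi }}\right\rangle }_{0}={\left\vert 0\right\rangle }_{a}^{\otimes n}\otimes {\left\vert 0\right\rangle }_{t}^{\otimes n},\]

where the subscripts a and t label the ancillary and target registers. After a layer of Hadamard gates on the ancillary qubits, we get

\[\frac{1}{\sqrt{{2}^{n}}}\mathop{\sum}\limits_{\mu }{\left\vert \mu \right\rangle }_{a}\otimes {\left\vert 0\right\rangle }_{t}^{\otimes n},\]

where \(\left\vert \mu \right\rangle\) is the n ancillary qubit basis state in the computational basis. Applying a CNOT gate on all target qubits controlled by their respective ancillary qubits yields

\[\left\vert {{\Psi }}\right\rangle =\mathop{\prod }\limits_{j=1}^{n}{U}_{a = j,t = j}^{{{{\rm{CNOT}}}}}\frac{1}{\sqrt{{2}^{n}}}\mathop{\sum}\limits_{\mu }{\left\vert \mu \right\rangle }_{a}\otimes {\left\vert 0\right\rangle }_{t}^{\otimes n}=\frac{1}{\sqrt{{2}^{n}}}\mathop{\sum}\limits_{\mu }{\left\vert \mu \right\rangle }_{a}\otimes {\left\vert \mu \right\rangle }_{t},\]

where \({U}_{a = j,t = j}^{{{{\rm{CNOT}}}}}\) is a CNOT gate with the jth ancillary qubit as control and the jth target qubit as target. For simplicity, we can combine the aforementioned Hadamard layer and the CNOT layer as a single operator

\[M=\mathop{\prod }\limits_{j=1}^{n}{U}_{a = j,t = j}^{{{{\rm{CNOT}}}}}\mathop{\prod }\limits_{j=1}^{n}{H}_{a = j}.\]

Consider the following probability amplitude:

\[{}_{0}\left\langle {{\Psi }}\right\vert {M}^{{\dagger} }{U}^{{\dagger} }VM{\left\vert {{\Psi }}\right\rangle }_{0},\]

where \({U}^{{\dagger} }V\) acts on the target qubits only. This is simply the probability amplitude of measuring the \({\left\vert {{\Psi }}\right\rangle }_{0}\) state after applying the unitary \({M}^{{\dagger} }{U}^{{\dagger} }VM\) to the initialized \({\left\vert {{\Psi }}\right\rangle }_{0}\) state. Moreover, this probability amplitude is, up to the normalization factor \({2}^{-n}\), the trace of U†V:

\[{}_{0}\left\langle {{\Psi }}\right\vert {M}^{{\dagger} }{U}^{{\dagger} }VM{\left\vert {{\Psi }}\right\rangle }_{0}=\frac{1}{{2}^{n}}\mathop{\sum}\limits_{\mu ,\nu }\left\langle \mu | \nu \right\rangle \left\langle \mu \right\vert {U}^{{\dagger} }V\left\vert \nu \right\rangle =\frac{1}{{2}^{n}}{{{\rm{Tr}}}}({U}^{{\dagger} }V).\]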

Therefore, evaluating the trace becomes evaluating the probability amplitude of obtaining the \({\left\vert {{\Psi }}\right\rangle }_{0}\) state, which QTensor is able to simulate with complexity linear in the number of qubits and exponential in the circuit depth. This is helpful for evaluating the trace of unitaries that can be efficiently represented by shallow circuits, especially those with limited qubit connectivity such as hardware-efficient ansätze.
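The identity between the amplitude and the normalized trace can be checked directly on a small dense example. The sketch below builds the Hadamard and CNOT layers explicitly for n ancillary and n target qubits (ancillary register first) and compares the amplitude with \({{{\rm{Tr}}}}(W)/{2}^{n}\) for a target unitary W. It is a brute-force check with hypothetical helper names, not the tensor network evaluation performed by QTensor.

```python
import numpy as np


def hadamard_layer(n):
    """Tensor product of n single-qubit Hadamard gates."""
    h = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)
    out = np.array([[1.0]])
    for _ in range(n):
        out = np.kron(out, h)
    return out


def cnot_layer(n):
    """CNOTs from ancilla j to target j: |mu>|t> -> |mu>|t XOR mu>."""
    dim = 2 ** n
    perm = np.zeros((dim * dim, dim * dim))
    for mu in range(dim):
        for t in range(dim):
            perm[mu * dim + (t ^ mu), mu * dim + t] = 1.0
    return perm


def trace_as_amplitude(w, n):
    """Amplitude <0|M^dagger (I x W) M|0>, which equals Tr(W) / 2^n."""
    dim = 2 ** n
    m = cnot_layer(n) @ np.kron(hadamard_layer(n), np.eye(dim))
    psi0 = np.zeros(dim * dim)
    psi0[0] = 1.0
    circuit = m.conj().T @ np.kron(np.eye(dim), w) @ m
    return psi0 @ circuit @ psi0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    z = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
    w = np.linalg.qr(z)[0]
    print(trace_as_amplitude(w, 2) * 4, np.trace(w))
```

In the frame potential estimate, W plays the role of U†V for a pair of sampled circuits.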

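For qudits with general local dimension q, the generalization is straightforward. We need to replace the Hadamard gate H with the generalized Hadamard gate \({H}_{q}\), and the CNOT gate with the \({{{{\rm{SUM}}}}}_{q}\) gate66:

\[{H}_{q}\left\vert j\right\rangle =\frac{1}{\sqrt{q}}\mathop{\sum }\limits_{k=0}^{q-1}{\omega }^{jk}\left\vert k\right\rangle ,\quad \omega ={e}^{2\pi i/q},\qquad {{{{\rm{SUM}}}}}_{q}\left\vert i\right\rangle \left\vert j\right\rangle =\left\vert i\right\rangle \left\vert i+j\,({{{\rm{mod}}}}\,q)\right\rangle .\]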

Similar to the qubit case, applying the generalized gates to \({\left\vert {{\Phi }}\right\rangle }_{0}\) yields an entangled uniform superposition \(\left\vert {{\Phi }}\right\rangle\) of all basis states. The expectation value of any target qudit unitary with respect to this state is the trace.
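A single ancilla–target pair of qudits is enough to check the generalized construction numerically. The sketch below assumes the usual conventions, namely that the generalized Hadamard is the normalized discrete Fourier transform and that the SUM gate adds the control value to the target modulo q; helper names are hypothetical.

```python
import numpy as np


def generalized_hadamard(q):
    """Normalized q x q discrete Fourier transform matrix."""
    idx = np.arange(q)
    return np.exp(2j * np.pi * np.outer(idx, idx) / q) / np.sqrt(q)


def sum_gate(q):
    """SUM gate: |c>|t> -> |c>|(c + t) mod q>, control listed first."""
    gate = np.zeros((q * q, q * q))
    for c in range(q):
        for t in range(q):
            gate[c * q + (c + t) % q, c * q + t] = 1.0
    return gate


def qudit_trace_amplitude(w):
    """Expectation of I x W in SUM (H_q x I)|00>, equal to Tr(W) / q."""
    q = w.shape[0]
    m = sum_gate(q) @ np.kron(generalized_hadamard(q), np.eye(q))
    phi = m[:, 0]  # the state M|00>
    return np.vdot(phi, np.kron(np.eye(q), w) @ phi)


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    w = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))
    print(qudit_trace_amplitude(w) * 3, np.trace(w))
```

The check works for any matrix W on the target qudit, unitary or not, because it relies only on the maximally entangled form of the prepared state.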

Graphically, this can be understood by the tensor equivalence shown in Fig. 3b. The gates are

where for \({U}_{kij}^{{{{\rm{CNOT}}}}}\), k is the control qubit index (CNOT does not change the control qubit in the computational basis and therefore has only one index for the control qubit), and i, j are the target qubit output and input indices. The bottom tensor of Fig. 3b evaluates to

Verifying the Brown–Susskind conjecture

Local and parallel random unitaries are commonly discussed in the context of quantum circuit complexity and the Brown–Susskind conjecture. For both ansätze, the constituent random unitaries are drawn from the Haar measure on \(U({d}^{2})\). Results for parallel random unitaries are presented in Figs. 4 and 5. In Fig. 4, the frame potential shows a super-exponential decay in the regime of a few layers and converges to exponential decay as the number of layers increases, just like the theoretical prediction in Fig. 1.

As shown in Fig. 1, we do not expect linear scaling of l in n with fixed \({{{\mathcal{F}}}}\). Error bars show one standard error.

To obtain the layer scaling for reaching ϵ-approximate designs, we fit \({{{{\mathcal{F}}}}}_{{{{\mathcal{E}}}}}^{(k)}-{{{{\mathcal{F}}}}}_{{{{\rm{Haar}}}}}^{(k)}\) to an exponential function according to Eq. (7), and l is estimated using Eq. (9). Note that our numerical results are in the regime of large ϵ, but we are extrapolating to small ϵ values, the validity of which depends on a strictly exponentially decaying \({{{\mathcal{F}}}}\). For robust error analysis, we use bootstrapping to quantify the uncertainties. We randomly sample a subset of computed frame potentials and perform curve fitting to calculate the number of layers needed to reach ϵ < 0.1. This is repeated multiple times to obtain a distribution of layer values. More details on the bootstrapping technique can be found in Supplementary Methods 1.2.
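The fit-and-bootstrap procedure can be sketched as follows. This is a simplified illustration under stated assumptions: the decay is modeled as \(A{e}^{-l/C}\) (the exact parameterization used in the fit is an assumption here), the fit is a straight line in log space, and the bootstrap resamples the (layer, value) points with replacement, which may differ from the procedure in Supplementary Methods 1.2. All function names are hypothetical.

```python
import numpy as np


def layers_for_epsilon(layers, gaps, eps, k, n, q=2):
    """Layers needed so that d^(2k) * A * exp(-l / C) <= eps^2, with d = q^n.

    A and C come from a linear fit of log(gap) against the layer index,
    where gap = F - k! is the excess frame potential.
    """
    slope, intercept = np.polyfit(layers, np.log(gaps), 1)
    c = -1.0 / slope  # decay constant C
    return c * (intercept + 2 * k * n * np.log(q) + 2 * np.log(1.0 / eps))


def bootstrap_layers(layers, gaps, eps, k, n, num_boot=200, seed=0):
    """Median and standard deviation of the layer estimate over resamples."""
    rng = np.random.default_rng(seed)
    layers = np.asarray(layers, dtype=float)
    gaps = np.asarray(gaps, dtype=float)
    estimates = []
    while len(estimates) < num_boot:
        pick = rng.integers(0, len(layers), size=len(layers))
        if np.unique(layers[pick]).size < 2:
            continue  # a line cannot be fitted through a single layer value
        estimates.append(
            layers_for_epsilon(layers[pick], gaps[pick], eps, k, n))
    return float(np.median(estimates)), float(np.std(estimates))


if __name__ == "__main__":
    ls = np.arange(1, 9)
    synthetic = 3.0 * np.exp(-ls / 2.0)  # noise-free synthetic data
    print(bootstrap_layers(ls, synthetic, eps=0.1, k=2, n=4))
```

With noise-free synthetic data every resample returns the same estimate; with real sampled frame potentials the spread of the estimates provides the uncertainty.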

Assuming the validity of extrapolation, the results for ϵ = 0.1 are shown in Fig. 5. Brandão et al.17 established upper bounds on the number of layers needed to approximate k-designs for local and parallel random unitaries, which are quadratic and linear in n, respectively. They further proved that this bound could not be improved by more than polynomial factors as long as ϵ ≤ 1/4. Therefore, to verify linear growth in n, we need to reach ϵ ≤ 1/4, which informed our choice of the ϵ = 0.1 threshold. We observe a linear scaling of the number of needed layers in n, which agrees with the theoretical prediction and non-trivially restricts the \({{{\mathcal{F}}}}\) scale factor A and decay rate C as discussed before.

Solid points are medians of the bootstrap sample, and the vertical shadows represent the sample distribution where the width corresponds to the density. Missing data points are due to insufficient data (see the Supplementary Discussions 3.1 for more details). Dashed lines are linear fits. The inset shows the fitted slopes for different k values. Error bars show one standard deviation.

Further, we compare the theoretical predictions in Eq. (11) against our numerical findings. Figure 5 shows the experimental and fitted k-design layer scaling as a function of the number of qubits. Specifically, we fit a linear curve through the medians of the estimated layers ignoring the \(\log n\) and the constant \(\log 1/\epsilon\) terms. We find a slope of 4.38 in the case of k = 2, which is lower than the theoretical value 6.2 as predicted by Eq. (10). We note, however, that the theoretical value gives an upper bound of the frame potential since there is overcounting in the contributing domain walls18. Therefore, the analytical expression predicts a larger number of layers needed to approximate 2-designs than necessary. This is apparent in the n = 2 case, where 16 layers are needed in Eq. (11) but a single layer is already sampling from the Haar measure. This accounts for the discrepancy between the theoretical values and the experimental values.

In the inset of Fig. 5, we show the slopes of the scaling curves with different k values. It is predicted that there is a linear O(nk) scaling in k for the number of layers l (or \(O({n}^{2}k)\) scaling for the circuit size T) needed to approach k-designs18, and a linear relationship between k and complexity is established in ref. 47. Together, these findings imply that complexity grows linearly in the circuit size47,50. Our results support the linear scaling of T in k, which predicts that the slope grows linearly in k.

Results for local random unitaries are presented in Figs. 6 and 7. Since each layer in the local random circuit has only one gate, we simulate layers proportional to the number of qubits and plot layers/qubits on the x-axis to maintain a linear scaling. We observe that this layers/qubits ratio scales linearly with the number of qubits. This is the same gate count scaling as the parallel random unitary ansatz, both quadratic in n. The scaling in k is close to linear, but the confidence is lower due to a lack of data points for k = 4, 5 at large n. Explanations for missing data points are provided in the Supplementary Discussions 3.1.

Missing data points are due to insufficient data (see the Supplementary Discussions 3.1 for more details). The inset shows the fitted slopes for different k values. Error bars show one standard deviation.

Hardware-efficient ansätze as approximate k-designs

Originally proposed for variational quantum eigensolvers53, hardware-efficient ansätze utilize gates and connectivity readily available on the quantum hardware67,68,69. In addition, a hardware-efficient ansatz is simulated in the context of the barren plateau problem21, where the variance of gradients vanishes exponentially with the number of qubits in sufficiently deep circuits. In fact, the proof of the barren plateau problem assumes that circuits before and after the gate whose derivative we are computing are approximate 2-designs, which is especially suitable for hardware-efficient ansätze because they are believed to be efficient at scrambling. We simulated these circuits with controlled-phase gates and controlled-NOT gates as two-qubit gates, respectively. Figure 8 shows that the controlled-NOT gate-based ansatz approaches the Haar measure sooner, and therefore further analysis is conducted on the CNOT-based ansatz only. Figure 9 shows a linear dependence on the number of qubits, as well as a positive dependence on k.

Missing data points are due to insufficient data (see Supplementary Discussions 3.1 for more details). The inset shows the fitted slopes for different k values. Error bars show one standard deviation.

We note that the CNOT-based hardware-efficient ansatz reaches lower frame potential values with far fewer layers than the parallel random unitary ansatz, despite having far fewer parameters per layer. This result is partially explainable through the observation that each layer in the hardware-efficient ansatz contains two layers of two-qubit gate walls, whereas each layer in the parallel random unitary ansatz contains only one wall. Further, random unitaries from \(U({d}^{2})\) are not all maximally entangling. The hardware-efficient ansatz can therefore generate highly entangled states much more efficiently, exploring a much larger space with fewer parameters.

Further, unlike the previously discussed ansätze where the frame potential decay rate is constant, the hardware-efficient ansatz decay rate increases with n as shown in the inset of Fig. 8. This does not contradict the observed linear scaling as long as the decay rate scaling is sublinear.

This observation confirms that hardware-efficient ansätze are highly expressive, a concept that is crucial to the utility of variational quantum algorithms. Ansätze with higher expressibility are able to better represent the Haar distribution, approximate the target unitary, and minimize the objective. This links the expressibility to the frame potential70. The high expressibility of hardware-efficient ansätze and their close relatives, in addition to the desirable noise properties due to their shallow depths, is precisely the argument in favor of these ansätze over their deeper and more complex problem-aware counterparts71. With the recent discovery of the relation between expressibility and gradient variance72, the analysis of frame potentials can play an important role in theoretically and empirically determining the usefulness of various ansätze for variational algorithms.

Discussion

Evaluating the distance from a given random circuit ensemble to exact Haar randomness is important for understanding several topics in quantum information science, including recent experiments on near-term quantum advantage. Explicitly constructing the unitaries requires memory complexity of \(O({4}^{n})\). A more efficient classical algorithm decomposes a unitary into gates in a universal set (H, T, and CNOT), which allows us to estimate the normalized trace by sampling allowed Feynman paths73. Exact evaluation using this method is NP-complete, and approximation to fixed precision requires a number of Feynman path samples that is exponentially large in the number of Hadamard gates in the circuit. Fortunately, for shallow circuits, tensor-network-based algorithms can obtain the exact trace with linear complexity in n.

In our paper, we simulate large-scale random circuit sampling experiments classically up to 50 qubits, the number of noisy physical qubits we are able to control in the NISQ era, using the QTensor package. As examples, we provide two applications of our computational tools: a numerical verification of the Brown–Susskind conjecture and a numerical estimation relating to barren plateaus in quantum machine learning and randomized hardware-efficient variational ansätze.

Through our examples, we show that classical tensor network simulations are useful for our understanding of open problems in theoretical computer science and numerical examinations of quantum neural network properties for quantum computing applications. We believe that tensor networks and other cutting-edge tools are useful for probing the boundary of classical simulation and improving the understanding of quantum advantage in several subjects of quantum physics, for instance, quantum simulation74,75. Moreover, it will be interesting to connect our algorithms to the current research on classical simulation of boson sampling experiments.

Methods

Tensor network simulator

For all the trace evaluations, we use the QTensorAI library76, originally developed to simulate quantum machine learning with parameterized circuits. This library allows quantum circuits to be simulated in parallel on CPUs and GPUs, which is a highly desirable property for sampling a large number of circuits. The library is based on the QTensor simulator39,40,41, a tensor network-based quantum simulator that represents the network as an undirected graph.

In this method of simulation, the computation is memory bound, and the memory complexity is exponential in the “treewidth,” the largest rank of tensor that needs to be stored during computation. The graphical formalism utilized by QTensor allows the tensor contraction order to be optimized to minimize the treewidth. For shallow quantum circuits, the treewidth is determined mainly by the number of layers in the quantum circuit, and therefore QTensor is particularly well suited for simulating shallow circuits such as those used in the Quantum Approximate Optimization Algorithm (QAOA).

Sampling \(U({d}^{2})\)

The simulation of both parallel and local random unitary circuits requires the use of random two-qubit unitary gates. We implement these gates and sample Haar unitaries according to the scheme proposed for unitary neural networks77, using a PyTorch implementation78. The universality of this decomposition scheme was first proved in the context of optical interferometers79,80. This implementation parameterizes two-qubit unitaries using 16 phase parameters, and uniformly sampling these parameters leads to uniform sampling on the Haar measure. Further, it is fully differentiable, although this property is not used in this work.

High-performance computing

For hardware-efficient and parallel random unitary ansätze, once the number of qubits and the number of layers are chosen, the circuit topology remains the same throughout the ensemble. This is in contrast to the local random unitary ansatz, where a two-qubit gate is applied to random neighboring qubits in each layer, which means that the circuit topologies are very different within an ensemble. For fixed-topology ensembles, the algorithm can optimize the contraction order for all circuits at once. This optimization significantly reduces the computational complexity, and the optimization time is on the order of minutes depending on the circuit size. However, local random unitary circuits cannot benefit from contraction order optimization, since the optimization would have to be repeated for each sample, whereas the actual simulation time is usually much shorter.

Further, for fixed topology circuits, the tensor contraction operations are identical, which is very suitable for single-instruction multi-data parallel executions on GPUs. For ensembles with the smallest tree widths, we can compute the trace values of millions of circuits in parallel on a single GPU. However, local random unitary circuits are not compatible with single-instruction parallel computation and must be simulated in parallel using a CPU cluster.

Data availability

Data containing the bootstrap frame potential values used to generate the figures are available in the GitHub repository https://github.com/sss441803/Frame_Potential, and data for the calculated trace values of sampled random circuits is available upon request from the authors.

Code availability

The code used to generate the data and figures is available in the GitHub repository https://github.com/sss441803/Frame_Potential. The tensor network quantum simulator QTensor and QTensorAI are open source, and available at https://github.com/danlkv/QTensor and https://github.com/sss441803/QTensorAI.

References

Feynman, R. P. Simulating physics with computers. Int. J. Theor. Phys. 21, 467–488 (1982).

Preskill, J. Quantum computing and the entanglement frontier. Preprint at https://arxiv.org/abs/1203.5813 (2012).

Alexeev, Y. et al. Quantum computer systems for scientific discovery. PRX Quantum 2, 017001 (2021).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

Zhong, H.-S. et al. Quantum computational advantage using photons. Science 370, 1460–1463 (2020).

Ebadi, S. et al. Quantum optimization of maximum independent set using Rydberg atom arrays. Science 376, 1209–1215 (2022).

White, S. R. Density matrix formulation for quantum renormalization groups. Phys. Rev. Lett. 69, 2863–2866 (1992).

Rommer, S. & Östlund, S. Class of ansatz wave functions for one-dimensional spin systems and their relation to the density matrix renormalization group. Phys. Rev. B 55, 2164–2181 (1997).

Orus, R. Tensor networks for complex quantum systems. Nat. Rev. Phys. 1, 538–550 (2019).

Noh, K., Jiang, L. & Fefferman, B. Efficient classical simulation of noisy random quantum circuits in one dimension. Quantum 4, 318 (2020).

Huang, C. et al. Classical simulation of quantum supremacy circuits. Preprint at https://arxiv.org/abs/2005.06787 (2020).

Pan, F. & Zhang, P. Simulation of quantum circuits using the big-batch tensor network method. Phys. Rev. Lett. 128, 030501 (2022).

Oh, C., Noh, K., Fefferman, B. & Jiang, L. Classical simulation of lossy boson sampling using matrix product operators. Phys. Rev. A 104, 022407 (2021).

Boixo, S. et al. Characterizing quantum supremacy in near-term devices. Nat. Phys. 14, 595–600 (2018).

Harrow, A. W. & Low, R. A. Random quantum circuits are approximate 2-designs. Commun. Math. Phys. 291, 257–302 (2009).

Brandão, F. G., Harrow, A. W. & Horodecki, M. Local random quantum circuits are approximate polynomial-designs. Commun. Math. Phys. 346, 397–434 (2016).

Hunter-Jones, N. Unitary designs from statistical mechanics in random quantum circuits. Preprint at https://arxiv.org/abs/1905.12053 (2019).

Hayden, P. & Preskill, J. Black holes as mirrors: quantum information in random subsystems. J. High Energy Phys. 2007, 120 (2007).

Nahum, A., Vijay, S. & Haah, J. Operator spreading in random unitary circuits. Phys. Rev. X 8, 021014 (2018).

McClean, J. R., Boixo, S., Smelyanskiy, V. N., Babbush, R. & Neven, H. Barren plateaus in quantum neural network training landscapes. Nat. Commun. 9, 1–6 (2018).

Liu, J., Tacchino, F., Glick, J. R., Jiang, L. & Mezzacapo, A. Representation learning via quantum neural tangent kernels. PRX Quantum 3, 030323 (2022).

Liu, J. et al. An analytic theory for the dynamics of wide quantum neural networks. Preprint at https://arxiv.org/abs/2203.16711 (2022).

Brown, W. & Fawzi, O. Decoupling with random quantum circuits. Commun. Math. Phys. 340, 867–900 (2015).

Liu, J. Scrambling and decoding the charged quantum information. Phys. Rev. Res. 2, 043164 (2020).

Roberts, D. A. & Yoshida, B. Chaos and complexity by design. J. High. Energy Phys. 04, 121 (2017).

Cotler, J., Hunter-Jones, N., Liu, J. & Yoshida, B. Chaos, complexity, and random matrices. J. High. Energy Phys. 11, 048 (2017).

Liu, J. Spectral form factors and late time quantum chaos. Phys. Rev. D 98, 086026 (2018).

Brandão, F. G. S. L. & Horodecki, M. Exponential quantum speed-ups are generic. Quantum Info. Comput. 13, 901–924 (2013).

Harlow, D. & Hayden, P. Quantum computation vs. firewalls. J. High. Energy Phys. 2013, 1–56 (2013).

Brandão, F. G., Harrow, A. W. & Horodecki, M. Efficient quantum pseudorandomness. Phys. Rev. Lett. 116, 170502 (2016).

Ji, Z., Liu, Y.-K. & Song, F. Pseudorandom quantum states. In Advances in Cryptology - CRYPTO 2018 126–152 (2018).

Ananth, P., Qian, L. & Yuen, H. Cryptography from pseudorandom quantum states. In Advances in Cryptology - CRYPTO 2022 208–236 (2022).

Škorić, B. Quantum readout of physical unclonable functions. Int. J. Quantum Inf. 10, 1250001 (2012).

Gianfelici, G., Kampermann, H. & Bruß, D. Theoretical framework for physical unclonable functions, including quantum readout. Phys. Rev. A 101, 042337 (2020).

Kumar, N., Mezher, R. & Kashefi, E. Efficient construction of quantum physical unclonable functions with unitary t-designs. Preprint at https://arxiv.org/abs/2101.05692 (2021).

Doosti, M., Kumar, N., Kashefi, E. & Chakraborty, K. On the connection between quantum pseudorandomness and quantum hardware assumptions. Quantum Sci. Technol. 7, 035004 (2022).

Arapinis, M., Delavar, M., Doosti, M. & Kashefi, E. Quantum physical unclonable functions: possibilities and impossibilities. Quantum 5, 475 (2021).

Lykov, D. et al. Performance evaluation and acceleration of the QTensor quantum circuit simulator on GPUs. In 2021 IEEE/ACM Second International Workshop on Quantum Computing Software (QCS) 27–34 (2021).

Lykov, D. & Alexeev, Y. Importance of diagonal gates in tensor network simulations. Preprint at https://arxiv.org/abs/2106.15740 (2021).

Lykov, D., Schutski, R., Galda, A., Vinokur, V. & Alexeev, Y. Tensor network quantum simulator with step-dependent parallelization. Preprint at https://arxiv.org/abs/2012.02430 (2020).

Diniz, I. T. & Jonathan, D. Comment on “random quantum circuits are approximate 2-designs” by AW Harrow and RA Low (Commun. Math. Phys. 291, 257–302 (2009)). Commun. Math. Phys. 304, 281–293 (2011).

Harrow, A. & Mehraban, S. Approximate unitary t-designs by short random quantum circuits using nearest-neighbor and long-range gates. Preprint at https://arxiv.org/abs/1809.06957 (2018).

Nakata, Y., Hirche, C., Koashi, M. & Winter, A. Efficient quantum pseudorandomness with nearly time-independent Hamiltonian dynamics. Phys. Rev. X 7, 021006 (2017).

Onorati, E. et al. Mixing properties of stochastic quantum Hamiltonians. Commun. Math. Phys. 355, 905–947 (2017).

Lashkari, N., Stanford, D., Hastings, M., Osborne, T. & Hayden, P. Towards the fast scrambling conjecture. J. High. Energy Phys. 04, 022 (2013).

Brandão, F. G. S. L., Chemissany, W., Hunter-Jones, N., Kueng, R. & Preskill, J. Models of quantum complexity growth. PRX Quantum 2, 030316 (2021).

Brown, A. R. & Susskind, L. Second law of quantum complexity. Phys. Rev. D 97, 086015 (2018).

Susskind, L. Black holes and complexity classes. Preprint at https://arxiv.org/abs/1802.02175 (2018).

Haferkamp, J., Faist, P., Kothakonda, N. B., Eisert, J. & Yunger Halpern, N. Linear growth of quantum circuit complexity. Nat. Phys. 18, 528–532 (2022).

Peruzzo, A. et al. A variational eigenvalue solver on a photonic quantum processor. Nat. Commun. 5, 1–7 (2014).

McClean, J. R., Romero, J., Babbush, R. & Aspuru-Guzik, A. The theory of variational hybrid quantum-classical algorithms. New J. Phys. 18, 023023 (2016).

Kandala, A. et al. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549, 242–246 (2017).

Cerezo, M. et al. Variational quantum algorithms. Nat. Rev. Phys. 3, 625–644 (2021).

Farhi, E., Goldstone, J. & Gutmann, S. A quantum approximate optimization algorithm. Preprint at https://arxiv.org/abs/1411.4028 (2014).

Wittek, P. Quantum Machine Learning: What Quantum Computing Means to Data Mining (Academic Press, 2014).

Wiebe, N., Kapoor, A. & Svore, K. M. Quantum deep learning. Quantum Info. Comput. 16, 541–587 (2016).

Biamonte, J. et al. Quantum machine learning. Nature 549, 195–202 (2017).

Schuld, M. & Killoran, N. Quantum machine learning in feature Hilbert spaces. Phys. Rev. Lett. 122, 040504 (2019).

Havlíček, V. et al. Supervised learning with quantum-enhanced feature spaces. Nature 567, 209–212 (2019).

Liu, Y., Arunachalam, S. & Temme, K. A rigorous and robust quantum speed-up in supervised machine learning. Nat. Phys. 17, 1013–1017 (2021).

Liu, J. Does [Richard Feynman] Dream of Electric Sheep? Topics on Quantum Field Theory, Quantum Computing, and Computer Science. Ph.D. thesis, Caltech (2021).

Farhi, E. & Neven, H. Classification with quantum neural networks on near term processors. Preprint at https://arxiv.org/abs/1802.06002 (2018).

Gross, D., Audenaert, K. & Eisert, J. Evenly distributed unitaries: on the structure of unitary designs. J. Math. Phys. 48, 052104 (2007).

Schutski, R., Lykov, D. & Oseledets, I. Adaptive algorithm for quantum circuit simulation. Phys. Rev. A 101, 042335 (2020).

Wang, Y., Hu, Z., Sanders, B. C. & Kais, S. Qudits and high-dimensional quantum computing. Front. Phys. 8, 589504 (2020).

Grossi, M. et al. Finite-size criticality in fully connected spin models on superconducting quantum hardware. Preprint at https://arxiv.org/abs/2208.02731 (2022).

Nakaji, K. & Yamamoto, N. Expressibility of the alternating layered ansatz for quantum computation. Quantum 5, 434 (2021).

Du, Y., Huang, T., You, S., Hsieh, M.-H. & Tao, D. Quantum circuit architecture search for variational quantum algorithms. npj Quantum Inf. 8, 62 (2022).

Sim, S., Johnson, P. D. & Aspuru-Guzik, A. Expressibility and entangling capability of parameterized quantum circuits for hybrid quantum-classical algorithms. Adv. Quantum Technol. 2, 1900070 (2019).

Liu, X. et al. Layer VQE: a variational approach for combinatorial optimization on noisy quantum computers. IEEE Trans. Quantum Eng. 3, 1–20 (2022).

Holmes, Z., Sharma, K., Cerezo, M. & Coles, P. J. Connecting ansatz expressibility to gradient magnitudes and barren plateaus. PRX Quantum 3, 010313 (2022).

Datta, A., Flammia, S. T. & Caves, C. M. Entanglement and the power of one qubit. Phys. Rev. A 72, 042316 (2005).

Yuan, X., Sun, J., Liu, J., Zhao, Q. & Zhou, Y. Quantum simulation with hybrid tensor networks. Phys. Rev. Lett. 127, 040501 (2021).

Milsted, A., Liu, J., Preskill, J. & Vidal, G. Collisions of false-vacuum bubble walls in a quantum spin chain. PRX Quantum 3, 020316 (2022).

Liu, M. et al. Embedding learning in hybrid quantum-classical neural networks. Preprint at https://arxiv.org/abs/2204.04550 (2022).

Jing, L. et al. Tunable efficient unitary neural networks (EUNN) and their application to RNNs. In International Conference on Machine Learning 1733–1741 (PMLR, 2017).

Laporte, F. torch_eunn. https://github.com/flaport/torch_eunn (2020).

Reck, M., Zeilinger, A., Bernstein, H. J. & Bertani, P. Experimental realization of any discrete unitary operator. Phys. Rev. Lett. 73, 58–61 (1994).

Clements, W. R., Humphreys, P. C., Metcalf, B. J., Kolthammer, W. S. & Walmsley, I. A. Optimal design for universal multiport interferometers. Optica 3, 1460–1465 (2016).

Acknowledgements

This material is based upon work supported by the U.S. Department of Energy, Office of Science, National Quantum Information Science Research Centers. This work was completed in part with resources provided by the University of Chicago Research Computing Center. We thank Jens Eisert and Danylo Lykov for useful discussions. M.L. is supported by DoE Q-NEXT. J.L. is supported in part by International Business Machines (IBM) Quantum through the Chicago Quantum Exchange and by the Pritzker School of Molecular Engineering at the University of Chicago through AFOSR MURI (FA9550-21-1-0209). J.L. is also serving as a scientific advisor at qBraid Co. Y.A. acknowledges support from the U.S. Department of Energy, Office of Science, under contract DE-AC02-06CH11357 at Argonne National Laboratory. This research was developed with funding from the Defense Advanced Research Projects Agency (DARPA). The views, opinions, and/or findings expressed are those of the author and should not be interpreted as representing the official views or policies of the Department of Defense or the U.S. Government. L.J. acknowledges support from ARO (W911NF-18-1-0020, W911NF-18-1-0212), ARO MURI (W911NF-16-1-0349, W911NF-21-1-0325), AFOSR MURI (FA9550-19-1-0399, FA9550-21-1-0209), AFRL (FA8649-21-P-0781), DoE Q-NEXT, NSF (OMA-1936118, EEC-1941583, OMA-2137642), NTT Research, and the Packard Foundation (2020-71479).

Author information

Contributions

J.L. conceived the idea and wrote the majority of the introduction and discussion. M.L. developed and performed all numerical simulations and wrote the majority of the introduction to frame potential, results and methods. Y.A. and L.J. contributed ideas and provided numerous scientific and writing improvements to the paper. M.L., J.L., Y.A., and L.J. all participated in discussions that shaped the project in a substantial manner and the understanding of its broader impact.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, M., Liu, J., Alexeev, Y. et al. Estimating the randomness of quantum circuit ensembles up to 50 qubits. npj Quantum Inf 8, 137 (2022). https://doi.org/10.1038/s41534-022-00648-7

DOI: https://doi.org/10.1038/s41534-022-00648-7