Abstract

Most wearable robots, such as exoskeletons and prostheses, can operate with dexterity, yet wearers do not perceive them as part of their bodies. In this perspective, we contend that integrating environmental, physiological, and physical information through multi-modal fusion, incorporating human-in-the-loop control, utilizing neuromuscular interfaces, employing flexible electronics, and acquiring and processing human-robot information with biomechatronic chips should all be leveraged towards building the next generation of wearable robots. These technologies could improve the embodiment of wearable robots. With optimizations in mechanical structure and clinical training, the next generation of wearable robots should better facilitate the reconstruction and enhancement of human motor and sensory functions.


Introduction

Wearable robots are human-centered interdisciplinary systems that draw on diverse technological domains such as machinery, electronics, materials science, computer science, integrated circuits, and control theory. These systems integrate robotic components with the human body, either as exoskeletons worn over the body to enhance its capabilities or as powered prostheses that replace the functionality of a lost limb. They are designed to compensate for deficiencies resulting from neuromuscular diseases or limb loss. Beyond motor dysfunction, such deficiencies encompass sensory loss, which affects an individual's autonomy and social interactions. The requirements and expectations for exoskeletons and prostheses differ: exoskeletons mainly target motor and sensory enhancement, whereas prostheses mainly target motor and sensory reconstruction.

Currently, the available technologies for integrating wearable robots with the human body face numerous limitations, with the result that wearers do not perceive the wearable robot as part of their own bodies. Enhancing the embodiment of wearable robots is therefore necessary. Prosthetic embodiment quantifies a user's combined feeling of ownership and agency1. Serino et al. investigated cortical maps for movement control and touch sensing in individuals with upper limb amputation who had undergone targeted muscle reinnervation, with the aim of prosthesis embodiment2. Rognini et al. combined tactile and visual stimulation in upper limb prosthesis users to increase embodiment and decrease perceived phantom limb distortion3. Fritsch et al. found attenuation of touch sensation in prosthesis users, which could serve as an indicator of prosthesis embodiment4. Likewise, for exoskeletons, Forte et al. argue that devices should be tailored and adjusted to the condition of each patient with spinal cord injury to increase embodiment5. Hybart and Ferris proposed that measures of embodiment such as electroencephalography, reaction time, and proprioceptive drift could serve as metrics for assessing exoskeleton success6. The embodiment of wearable robots thus remains a critical yet insufficiently explored area, particularly regarding how to enhance their integration with the user.

Many challenges in robotics hinder the embodiment of wearable robots. Poor intelligence and functionality are common reasons for device rejection by users7. Human-robot interaction requires perceiving environmental information as well as the human's physical and physiological information, yet existing wearable robots often rely on a single or a limited set of sensor modalities, which may result in inadequate performance8. Although wearable robots are human-centered systems, leaving the human user out of the control loop remains a major concern9. For instance, problems may arise if individuals wearing exoskeletons are pulled or moved against their intentions. Many wearable robots focus mainly on controlling the robot while omitting sensory feedback to the human, so they lack bi-directional interaction between human and robot10. Additionally, artifacts caused by skin movement between the wearable robot and the human body can substantially degrade control accuracy, leading to shorter usage and eventual abandonment11. Indeed, skin stretching during movement makes it challenging to maintain full contact between the human and the robot. Moreover, the current interface for idiodynamics and sensation is limited by deficiencies in information acquisition, transfer, and processing, especially for novel deep-learning-based control algorithms that require high computational power12.

Wearable robots have existed for many years, but significant breakthroughs have occurred only recently. Multi-modal fusion13, human-in-the-loop control14, neuromuscular interfaces15, flexible electronics16, and biomechatronic chips17 are examples of advancements that could profoundly and positively affect the interaction between wearable robots and humans (Table 1). However, owing to their complexity, only a few of these advancements have been tested with users, often with a limited number of participants18. Each of these technologies is available today, but fully integrating them requires collaboration among multidisciplinary experts. These technologies are already transforming traditional wearable robots, and we expect their wider application to drive the improvements needed for these solutions to mature and become the new benchmark. Their widespread adoption in wearable robots is expected to drive optimization and standardization, ensuring the effective embodiment of high-performance wearable robots for motor and sensory reconstruction and enhancement.

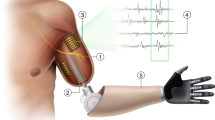

Researchers have extensively reviewed various aspects of wearable robots, including exoskeletons for locomotor assistance19, human-in-the-loop optimization for wearable robots20, and sensory feedback for prostheses10. Unlike those reviews, which summarize the state of the art in specific aspects of wearable robots, we propose a perspective that the cutting-edge technologies of multi-modal fusion, human-in-the-loop control, neuromuscular interfaces, flexible electronics, and biomechatronic chips should be leveraged towards building high-performance wearable robots with embodiment. To narrow the scope to embodiment, we exclude general aspects such as actuators, structures, electronics, and simulations. The embodiment of wearable robots mainly involves embodied sensing, embodied feedback, and embodied control, which led us to select five enabling technologies: multi-modal fusion for embodied sensing; neuromuscular interfaces and flexible electronics for embodied sensing and embodied feedback; and human-in-the-loop control and biomechatronic chips for embodied control. The five technologies are logically organized around the embodiment of wearable robots (Fig. 1). Biomechatronic chips act as the central units for information acquisition, processing, and generation of control commands. Neuromuscular interfaces and flexible electronics are enabling technologies for embodied sensing of human intention and for embodied feedback that improves the feeling of agency. Multi-modal fusion is used to improve the perception of human intention, and human-in-the-loop control integrates humans into the control loop of wearable robots, taking human reactions into account. We analyze how the development of these technologies would facilitate the development and verification of the next generation of wearable robots with a high degree of embodiment.
We propose a focused perspective on research progress and challenges in the five selected technologies for wearable robots, highlight their scientific foundations, and look into potential future directions, to help researchers build high-performance wearable robots with embodiment for motor and sensory reconstruction and enhancement.

Various technologies of wearable robots for motor and sensory enhancement and reconstruction are depicted. Biomechatronic chips127 could serve as the central unit for information acquisition and processing and for generating control commands. Neuromuscular interfaces128 and flexible electronics129 are enabling technologies for sensing human intention, and their signals could be combined through multi-modal fusion13. They also provide sensory feedback that transfers information from the robot to the human to improve the feeling of agency. Human-in-the-loop control14 integrates humans into the control loop of wearable robots, taking human reactions into account during the training process. (In the figure, arrows represent information flow and dashed boxes represent technologies that form the key components of embodiment.)

Motor enhancement

Integrating robotic components with the human body requires both technical and practical considerations, which is challenging when the aim is to enhance motor function. Failure to perceive changes in the human's state or the surrounding environment may result in delayed response or ineffective control. Leaving humans out of the control loop restricts human-robot interaction and reduces the user's participation. To overcome these challenges of integrating wearable robots with the human body, advanced technologies such as multi-modal fusion and human-in-the-loop control are being developed, augmenting the robot's ability to perceive the environment and to take human intent into account.

Multi-modal fusion

Because wearable robots operate in unstructured environments and interact closely with humans, relying solely on information from a single sensor is insufficient21. Incorporating multi-modal sensors enables wearable robots to acquire and process information from diverse sources, leading to more precise and trustworthy motor enhancement, much as human beings use multiple sensory organs to perceive the world. Multi-modal information fusion, implemented with diverse fusion methods, helps wearable robots perceive the environment and recognize human intentions, and thus make the right decisions during motor enhancement to achieve compliant human-machine interaction (Fig. 2).

Single-modal information has performed effectively in capturing human intentions for wearable robot control. For example, high-density EMG provides detailed spatio-temporal information about muscle activity, enabling the decoding of intricate movements such as hand gestures22. A high-throughput electroencephalography-based brain-computer interface (BCI) has decoded movements of individual fingers23. Invasive interfaces have also shown impressive performance in intention decoding: longitudinal intrafascicular electrodes have enabled prosthetic hand control24, and high-density electrocorticography (ECoG) can offer characteristic spatiotemporal neuronal activation patterns to decode hand gestures25 and has enabled exoskeleton control26. Invasive interfaces can acquire high-quality neural signals, although surgical risk and suboptimal long-term reliability hinder their widespread adoption27. Alternatively, many non-invasive approaches are available for intention decoding. Although a single non-invasive signal is inferior to an invasive one, multi-modal information fusion could offer complementary details that facilitate human intention recognition.

A pressure sensor combined with an inertial measurement unit (IMU) could provide information on ground contact and joint angles for wearable robot control28, though control based on these signals inherently lags behind the human's physiological intention. Fusing electromyography (EMG) signals with an IMU has shown potential in prosthesis control; however, EMG signals are susceptible to muscle fatigue, which would reduce control accuracy29. Mechanomyography (MMG) signals, which accompany muscle movement, have been fused with EMG to enhance human-exoskeleton interaction30. Ultrasonography and EMG have also been combined, resulting in higher electrode-skin impedance compared with conventional EMG31; here, the ultrasonography signals provide morphological information about the muscle. Near-infrared spectroscopy (NIRS), which adds information on blood oxygenation during muscle movement, has been used in conjunction with EMG and achieved higher decoding performance in a prosthesis than either method alone32. Sheng et al. integrated EMG, MMG, and NIRS for intention decoding and achieved the highest performance among all possible combinations33, with MMG signals capturing the mechanical aspect of muscle movement. Chen et al. fused EMG, MMG, and ultrasonography to study the rectus femoris muscle during isometric contractions and found that this combination could provide complete information on muscle contraction34.
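One common way to realize an EMG and IMU combination such as those above is feature-level fusion: features are extracted from each modality over the same time window and concatenated before classification. The following Python sketch assumes 4 EMG channels and one joint-angle signal, uses RMS and mean absolute value for EMG and mean angle and mean angle change for the IMU, and uses a nearest-centroid classifier as a stand-in for any decoder. The synthetic data and all function names are hypothetical, not taken from the cited studies.

```python
import numpy as np


def emg_features(emg_window: np.ndarray) -> np.ndarray:
    """Per-channel RMS and mean absolute value (MAV) of an EMG window (samples x channels)."""
    rms = np.sqrt(np.mean(emg_window ** 2, axis=0))
    mav = np.mean(np.abs(emg_window), axis=0)
    return np.concatenate([rms, mav])


def imu_features(angle_window: np.ndarray) -> np.ndarray:
    """Mean joint angle and mean per-sample angle change for each axis (samples x axes)."""
    mean_angle = angle_window.mean(axis=0)
    mean_velocity = np.diff(angle_window, axis=0).mean(axis=0)
    return np.concatenate([mean_angle, mean_velocity])


def fuse(emg_window: np.ndarray, angle_window: np.ndarray) -> np.ndarray:
    """Feature-level fusion: concatenate the feature vectors of both modalities."""
    return np.concatenate([emg_features(emg_window), imu_features(angle_window)])


class NearestCentroid:
    """Minimal classifier on z-scored fused features (a stand-in for any decoder)."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "NearestCentroid":
        self.mu, self.sd = X.mean(axis=0), X.std(axis=0) + 1e-9
        Z = (X - self.mu) / self.sd
        self.labels = np.unique(y)
        self.centroids = np.stack([Z[y == c].mean(axis=0) for c in self.labels])
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        Z = (np.atleast_2d(X) - self.mu) / self.sd
        dists = np.linalg.norm(Z[:, None, :] - self.centroids[None, :, :], axis=2)
        return self.labels[dists.argmin(axis=1)]


rng = np.random.default_rng(0)


def synthetic_window(label: int) -> np.ndarray:
    """Hypothetical data: label 0 = rest (weak EMG, static joint), 1 = flexion."""
    emg = (0.2 if label == 0 else 1.0) * rng.standard_normal((200, 4))
    slope = 0.0 if label == 0 else 0.5
    angle = slope * np.arange(200)[:, None] / 200 + 0.01 * rng.standard_normal((200, 1))
    return fuse(emg, angle)


X = np.stack([synthetic_window(c) for c in [0, 1] * 20])
y = np.array([0, 1] * 20)
clf = NearestCentroid().fit(X, y)
print(clf.predict(synthetic_window(1)))
```

Decision-level fusion, where each modality has its own decoder and only the outputs are combined, is an alternative design that tolerates a missing modality more gracefully.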

The analysis of muscle information may be limited by muscular functionality, as older adults with disabilities often have very weak muscle signals. Electroencephalography (EEG) signals, by contrast, can generally be assumed to be available. However, using EEG signals alone to control a wearable robot is not reliable, because they often have a low signal-to-noise ratio and are easily affected by artifacts. Combining EEG with EMG has shown great potential for improving the accuracy of human intention detection. For example, Kiguchi and Hayashi proposed a method for estimating the user's motion intention to control wearable robots35, in which EEG signals compensate when EMG signals are absent. The combination of EEG and EMG was also employed to reconstruct the hand's position in three dimensions36, with EMG signals offering a wealth of movement-related information and EEG signals providing supplementary data that enhance the reconstruction. Environmental information is also important in human-machine interaction; for example, fusing gaze information from vision with EMG has been shown to enhance end-point control performance in upper-limb prostheses37. However, integrating high-throughput information such as vision can extend processing times, placing a significant burden on the computing unit and potentially increasing latency.
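The compensatory role of EEG described above can be illustrated with decision-level fusion, where each modality has its own decoder and their class probabilities are combined with a weight that reflects EMG reliability. This Python sketch is an illustration under stated assumptions, not the method of Kiguchi and Hayashi: the linear reliability ramp, the `floor` and `full` thresholds, and the example probabilities are all hypothetical.

```python
import numpy as np


def emg_reliability(emg_rms: float, floor: float, full: float) -> float:
    """Map the EMG envelope amplitude to a reliability weight in [0, 1].
    Below `floor` the EMG is treated as absent; above `full` as fully reliable.
    Both thresholds are hypothetical and would need per-user calibration."""
    return float(np.clip((emg_rms - floor) / (full - floor), 0.0, 1.0))


def fuse_intent(p_emg, p_eeg, emg_rms, floor=0.02, full=0.1):
    """Decision-level fusion: reliability-weighted average of the per-class
    probabilities from an EMG decoder and an EEG decoder."""
    w = emg_reliability(emg_rms, floor, full)
    p = w * np.asarray(p_emg, float) + (1.0 - w) * np.asarray(p_eeg, float)
    return p / p.sum()


# Hypothetical decoder outputs for two intents (e.g. "flex" and "extend").
p_emg, p_eeg = [0.9, 0.1], [0.3, 0.7]
strong = fuse_intent(p_emg, p_eeg, emg_rms=0.2)  # strong EMG: EMG decoder dominates
weak = fuse_intent(p_emg, p_eeg, emg_rms=0.01)   # EMG below floor: EEG decoder takes over
print(strong, weak)
```

A smooth weight avoids abrupt switching between decoders when the EMG amplitude hovers near a single threshold.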

Beyond choosing among modalities, employing a suitable information fusion method is essential for enhancing motor function with wearable robots. Transient fusion takes instantaneous information and feeds it into the fusion module after preprocessing8; it is suitable for obtaining the current state of an object or human, for instance in instantaneous gesture recognition38. Sequential fusion feeds sequential data into a model with memory, such as a long short-term memory (LSTM) network used for multi-modal fusion to recognize human activity39. However, because this kind of fusion relies on sequential information stored in memory, fusion may take longer. To reduce fusion time, methods that reuse a previously trained model could play a role; Falco et al. achieved object recognition by fusing tactile sensing with a pre-trained visual model40. Although a pre-trained model could reduce fusion time, it might sacrifice output accuracy if it is not fine-tuned on the new data. It should also be noted that adding modalities increases the likelihood of failure: communication between the modules measuring different modalities becomes a major issue, along with concerns about powering and calibrating them. Thus, despite the achievements made, current multi-modal fusion methods still need to improve time efficiency and accuracy simultaneously to meet the demands of complex wearable robot control tasks.
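The difference between transient and sequential fusion can be sketched in a few lines of Python. Transient fusion decides from the current frame's fused class scores alone, while sequential fusion carries a memory state across frames. Here an exponential moving average stands in for the memory (an LSTM, as in the cited work, would learn its state update instead); the smoothing factor `alpha` and the score stream are hypothetical.

```python
import numpy as np


def transient_decision(scores_t) -> int:
    """Transient fusion: decide from the current frame's fused class scores only."""
    return int(np.argmax(scores_t))


class SequentialFusion:
    """Sequential fusion with a memory state. An exponential moving average is the
    simplest possible memory; a learned recurrent model would replace `update`."""

    def __init__(self, n_classes: int, alpha: float = 0.3):
        self.alpha = alpha
        self.state = np.full(n_classes, 1.0 / n_classes)

    def update(self, scores_t) -> int:
        self.state = (1 - self.alpha) * self.state + self.alpha * np.asarray(scores_t, float)
        return int(np.argmax(self.state))


# Hypothetical fused scores: class 0, one outlier frame, class 0 again, then a real switch to class 1.
frames = [[0.8, 0.2]] * 5 + [[0.2, 0.8]] + [[0.8, 0.2]] * 5 + [[0.2, 0.8]] * 6
seq = SequentialFusion(n_classes=2)
transient = [transient_decision(f) for f in frames]
sequential = [seq.update(f) for f in frames]
print(transient)
print(sequential)
```

With these illustrative values, the memory suppresses the single outlier frame that flips the transient decision, but it also delays the response to a genuine change of intent by one frame, mirroring the trade-off between robustness and fusion time noted above.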

Human-in-the-loop control

As wearable robots are designed to be human-centric systems, incorporating the human in the control loop is essential for effective operation (Fig. 3). However, existing wearable robots lack user interaction, leading to a mismatch between the user's responses and the robot's actions. This disconnect hinders users from fully leveraging the robot's capabilities to enhance their motor functions. Human-in-the-loop control iteratively updates the controller parameters based on a measured user response, such as muscle activity, muscle synergy, metabolic cost, gait symmetry, user preference, or comfort, with the aim of minimizing or maximizing that response20. It has achieved a maximum energy cost reduction of 37.9% with an ankle exoskeleton41. The main drawback of this method is that it tends to require long iteration times, which induce fatigue and increase participant dropout20.

Effective human-robot interaction is critical for optimal performance. A patient-cooperative control strategy termed assist-as-needed provides torque assistance only when there is a large deviation from the intended movement42; it is an early-stage concept of human-in-the-loop control. Human biomechanical parameters such as joint angles, gait symmetry, and movement speed are intuitive responses used in human-in-the-loop control20. However, this approach aims to have wearers achieve normative biomechanical parameters derived from previous clinical analyses43, which may not meet the needs of individuals with different motor and sensory dysfunctions. To address this, Huang et al. developed a dynamic movement primitive model of exoskeleton motion trajectories that is updated iteratively to account for inter-subject preferences44. Metabolic energy cost45 is a physiological parameter and one of the most commonly used human responses in human-in-the-loop control, where wearable robots help humans minimize movement effort. One drawback of this parameter is that it is usually calculated indirectly from oxygen uptake and carbon dioxide output with empirical formulas that require several minutes of breath data, which is a long time for updating control parameters. To address this, Gordon et al. achieved online metabolic cost estimation with a musculoskeletal model to shorten the sampling time46. Besides these parameters, muscle activity47, muscle synergy48, and user preference49 have also played a role in human-in-the-loop control.
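The empirical conversion from respiratory gas exchange to metabolic power is often done with the simplified Brockway equation, which gives power in watts as 16.58 times the O2 uptake plus 4.51 times the CO2 output, both in mL/s. The Python sketch below applies it and averages over a trailing window of breath-by-breath data. The 120 s window, body mass, and gas values are hypothetical, and this is not the musculoskeletal estimation of Gordon et al.

```python
import numpy as np


def brockway_power(vo2_ml_s, vco2_ml_s):
    """Metabolic power (W) from O2 uptake and CO2 output (both mL/s), using the
    simplified Brockway equation without the urinary nitrogen term."""
    return 16.58 * np.asarray(vo2_ml_s, float) + 4.51 * np.asarray(vco2_ml_s, float)


def steady_state_cost(t_s, vo2_ml_s, vco2_ml_s, body_mass_kg, window_s=120.0):
    """Mass-normalized metabolic power (W/kg) averaged over the last `window_s`
    seconds of breath-by-breath data. The window length is an assumption; needing
    minutes of data per estimate is what makes each controller update slow."""
    t = np.asarray(t_s, float)
    mask = t >= t[-1] - window_s
    power = brockway_power(np.asarray(vo2_ml_s, float)[mask], np.asarray(vco2_ml_s, float)[mask])
    return float(power.mean() / body_mass_kg)


# Hypothetical 6-minute trial with one breath every 3 s and constant gas exchange.
t = np.arange(0.0, 360.0, 3.0)
vo2 = np.full_like(t, 15.0)   # mL/s
vco2 = np.full_like(t, 12.0)  # mL/s
cost = steady_state_cost(t, vo2, vco2, body_mass_kg=70.0)
print(f"{cost:.3f} W/kg")
```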

Optimization is another key factor in the effectiveness of human-in-the-loop control. Advances in optimization strategies can provide better cooperation with humans by increasing or decreasing the variables mentioned above. Evolution strategies are frequently used in human-in-the-loop control because they can handle many objective functions41. However, objective functions based on measurements of human performance typically require extensive online and offline calculation, resulting in a lengthy evaluation period50. To improve optimization efficiency, Bayesian optimization has been used in human-in-the-loop control to identify the peak and offset timing of hip extension assistance, ultimately minimizing energy expenditure9. This method is time-efficient because it learns the shape of the objective function to find parameters that move the result towards the global optimum, but its efficiency degrades as the number of objective functions and iterations increases. Reinforcement learning learns by trial and error: good human performance is remembered and rewarded to facilitate human-robot interaction51. However, precisely because of this trial-and-error nature, there is a risk that the robot might apply harmful torque to the wearer52. Despite these achievements, most optimization algorithms are computationally heavy, which places new demands on the computing power of wearable solutions.
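The iterative structure of evolution-strategy-based human-in-the-loop optimization can be sketched as follows. A simulated noisy cost stands in for a measured human response as a function of two normalized assistance parameters (loosely, peak and offset timing); the cost landscape, its optimum at (0.5, 0.3), and all settings are invented for illustration. The sketch uses a basic (μ/μ, λ) evolution strategy with a fixed step-size decay, whereas practical studies often use covariance matrix adaptation, which is omitted here.

```python
import numpy as np

TRUE_OPTIMUM = np.array([0.5, 0.3])  # hypothetical, unknown to the optimizer


def measured_cost(params: np.ndarray, rng: np.random.Generator) -> float:
    """Hypothetical noisy human response (e.g. normalized metabolic cost)."""
    return float(np.sum((params - TRUE_OPTIMUM) ** 2) + 1e-4 * rng.standard_normal())


def evolution_strategy(cost, x0, sigma=0.2, lam=8, mu=4, generations=25, seed=0):
    """Minimal (mu/mu, lambda) evolution strategy: each generation samples `lam`
    candidate parameter sets around the current mean, evaluates them (one walking
    bout each, in a real study), and moves the mean to the average of the best `mu`."""
    rng = np.random.default_rng(seed)
    mean = np.asarray(x0, float)
    for _ in range(generations):
        candidates = mean + sigma * rng.standard_normal((lam, mean.size))
        candidates = np.clip(candidates, 0.0, 1.0)  # keep parameters in the valid range
        costs = np.array([cost(c, rng) for c in candidates])
        elite = candidates[np.argsort(costs)[:mu]]
        mean = elite.mean(axis=0)
        sigma *= 0.9  # fixed step-size decay instead of adaptive step-size control
    return mean


best = evolution_strategy(measured_cost, x0=[0.2, 0.8])
print(best)
```

With λ = 8 candidates over 25 generations, the sketch needs 200 cost evaluations; if each evaluation required about two minutes of walking (an assumption), the optimization would take more than six hours, which illustrates why long iteration time is the main drawback noted above.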

Sensory reconstruction

Sensory reconstruction builds a new pathway for delivering sensory information from the robot to the human. Because motor and sensory functions are fundamentally integrated in human beings, the reconstruction of motor function should not be developed in isolation from sensory reconstruction. An ideal wearable robot should therefore reconstruct and enhance both the motor and sensory functions of the dysfunctional body part. In practice, however, rebuilding sensory feedback is extremely challenging10.

Neuromuscular interface

The neuromuscular interface functions bidirectionally, providing both sensing and feedback. To sense human movement intentions, a sensing neuromuscular interface probes the nerves or muscles directly. A sensory feedback neuromuscular interface transmits information about the external environment or the state of the human body to the nervous system, closing a feedback loop (Fig. 4). The sensory feedback interface is crucial for wearable robots, particularly during tasks requiring physical interaction with objects. For example, a prosthesis equipped with sensory feedback can restore lost perception to an amputee, while an exoskeleton with sensory feedback can convey the contact force with the environment to the wearer.

The sensing interface captures signals on the efferent nerve pathway (downward orange arrow), which could be used to sense human intention, for example via ECoG130, EEG131, electroneurogram (ENG)132, implanted EMG133, and surface EMG134. The feedback interface stimulates the afferent nerve pathway (upward red arrow), which could be used to convey information to humans, for example non-invasively through haptic feedback67 and surface electrical stimulation70, or through implanted approaches such as the agonist-antagonist myoneural interface76, implanted electrodes72, and targeted sensory reinnervation77.

The sensing interface captures signals along the efferent neural pathway to establish a connection between humans and wearable robots. For example, BCIs can use brain signals recorded from the scalp, the cortical surface, or within the cortex to restore movement control to paralyzed people53. ECoG signals have been used for chronic neural recording and stimulation26. Invasive BCIs achieve high control accuracy, but most people would not accept brain surgery, whereas non-invasive BCIs typically have lower accuracy54. Recent years have witnessed significant advancements in non-invasive techniques55, and non-invasive BCIs have demonstrated improvements in motor imagery tasks56. EEG signals have been investigated as an alternative for measuring brain activity and conveying user intention to wearable robots57. The control process typically involves decoding EEG responses elicited by imagining the intended task, such as gait58 or object manipulation59,60. Surface EMG is the most commonly used neuromuscular interface for sensing human intent in wearable robot control29, but it is sensitive to electrode placement and skin conditions. High-density surface EMG can further decode intricate movements such as hand gestures22. Implanted EMG could solve these problems of surface EMG and improve robustness in wearable robot control61, but it is not applicable when, after amputation, too little residual muscle tissue remains for implanting electrodes. ENG is a neural electrical signal62 that requires a nerve implant; it could overcome the above-mentioned problems of both invasive and non-invasive EMG interfaces and has demonstrated promising results in motor intention decoding63. However, ENG suffers from a poor signal-to-noise ratio and limited stability64, and its decoding results may be similar to those of surface EMG65.
Besides, all implants in the brain, nerves, or muscles raise concerns about durability66. Thus, despite the advances, current sensing neuromuscular interfaces still need to improve accuracy for non-invasive approaches and durability for invasive ones.
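Surface EMG is typically turned into a control signal by extracting an amplitude envelope and mapping it to a command. The Python sketch below uses a moving-RMS envelope and a linear map between rest and maximum voluntary contraction (MVC) levels. The window length, calibration levels, torque limit, and synthetic signal are hypothetical, and real systems add band-pass filtering and per-user calibration.

```python
import numpy as np


def emg_envelope(emg, fs, window_s=0.1):
    """Moving-RMS envelope of one raw surface EMG channel (DC offset removed first)."""
    x = np.asarray(emg, float)
    x = x - x.mean()
    n = max(1, int(window_s * fs))
    kernel = np.ones(n) / n
    return np.sqrt(np.convolve(x ** 2, kernel, mode="same"))


def proportional_command(envelope, rest_level, mvc_level, max_torque_nm):
    """Map the envelope linearly from the rest level (0 Nm) to the MVC level
    (max_torque_nm), saturating at both ends."""
    activation = np.clip((envelope - rest_level) / (mvc_level - rest_level), 0.0, 1.0)
    return activation * max_torque_nm


# Hypothetical recording: 1 s of rest followed by 1 s of strong contraction at 1 kHz.
fs = 1000
rng = np.random.default_rng(1)
emg = np.concatenate([0.01 * rng.standard_normal(fs), 0.5 * rng.standard_normal(fs)])
torque = proportional_command(emg_envelope(emg, fs), rest_level=0.01, mvc_level=0.5, max_torque_nm=10.0)
print(torque[200:800].mean(), torque[1200:1800].mean())
```

Because the envelope depends directly on electrode placement and skin impedance, the rest and MVC levels in such a scheme must be recalibrated whenever contact conditions change, which is one reason surface EMG control degrades in daily use.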

In the afferent nerve pathway, feedback plays a vital role in enabling wearable robots to communicate effectively with the user and in facilitating intuitive interactions. Vibration is a commonly used way to generate tactile sensations on the skin and provide haptic feedback67. Although vibration could in theory be modulated in frequency and intensity to map different sensations68, this would impose a cognitive burden on the user to learn the mapping. Iberite et al. used wearable devices to restore natural thermal sensory feedback in individuals with amputation69. Electrical current delivered into the skin can generate electrotactile sensations70; however, users usually describe these sensations as tingling71. External stimulation is inherently less intuitive than internal nerve stimulation, which can activate the same sensory pathway72. Long-term nerve stimulation might decrease sensitivity73 and make it harder to convey information; however, a recent clinical trial has shown the feasibility of six months of hand prosthesis use with intraneural tactile feedback74. Valle et al. demonstrated that intraneural sensory feedback improves sensation naturalness, tactile sensitivity, and prosthesis embodiment75. The agonist–antagonist myoneural interface could naturally convey proprioception to prosthesis users76, although it is restricted by the level of amputation. To address this, targeted sensory reinnervation could evoke sensation by stimulating other parts of the body77. Significant progress has been reported with a self-contained hand prosthesis providing sensory feedback over 3–7 years of use in 4 individuals with transhumeral amputation78. As with sensing, sensory feedback could also benefit from multi-modal integration to improve naturalness, such as combining thermal and tactile feedback69.
Current sensory feedback technology still needs to create a neural pathway that transfers a large amount of sensory information to the nervous system while ensuring an effortless experience for the user.
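A minimal illustration of how a haptic channel might encode a single quantity, such as grip force, is a monotonic map from force to vibration amplitude and frequency. In this Python sketch both parameters rise together (redundant coding), so the user only has to learn one relationship rather than a mapping between several vibration patterns and sensations. The force range, amplitude range, and 80 to 250 Hz frequency band are hypothetical choices, not values from the cited studies.

```python
def force_to_vibration(force_n, f_max_n=10.0, amp_range=(0.1, 1.0), freq_range_hz=(80.0, 250.0)):
    """Map a contact force (N) to a (normalized amplitude, frequency in Hz) command
    for a vibrotactile actuator. No force gives no vibration; forces above f_max_n
    saturate at the top of both ranges. All ranges are hypothetical."""
    if force_n <= 0.0:
        return 0.0, 0.0
    level = min(force_n / f_max_n, 1.0)
    amp = amp_range[0] + level * (amp_range[1] - amp_range[0])
    freq = freq_range_hz[0] + level * (freq_range_hz[1] - freq_range_hz[0])
    return amp, freq


for force in (0.0, 2.5, 5.0, 10.0, 15.0):
    amp, freq = force_to_vibration(force)
    print(f"{force:5.1f} N -> amplitude {amp:.2f}, {freq:.0f} Hz")
```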

Flexible electronics

Traditional rigid sensors have difficulty conforming to human skin or internal organs when measuring neuromuscular signals or delivering the sensory feedback described above, which often results in motion artifacts and low measurement accuracy. As a person moves, the skin stretches, wrinkles, and flexes, and internal organs beat, all with large deformations, making it difficult to maintain contact between the sensors and the body. Because flexible electronics are soft and stretchable, they minimize the physical and mechanical mismatch between skin or neural tissue and the device79, potentially enabling a high-quality interface (Fig. 5).

Flexible electronics can conform to human skin or internal organs to capture signals or deliver stimulation. a Flexible electronics for sensing surface EMG80. b Flexible electronics for sensing EEG85. c Flexible electronics for sensing ECoG86. d Flexible electronics for sensing and feedback93. e Flexible electronics for sensing invasive EMG83. f Flexible electronics for sensing hand movement87. g Flexible electronics for providing electrotactile feedback89. h Flexible electronics for nerve stimulation90. i Flexible electronics for providing haptic feedback88.

Flexible electronics have the potential to form the bidirectional neuromuscular interface discussed previously. For neuromuscular signal sensing, Xie et al. developed a nano-thick, porous, stretchable dry electrode for surface EMG sensing that works even under sweating conditions80. Hydrogels have been commonly used for electrophysiological signals in wearable robot applications because of their skin-like properties and good conductivity81. However, hydrogel sensors depend on a humid environment and cannot withstand long exposure to air, although this characteristic makes them suitable interfaces for implanted electrodes82. Poly(3,4-ethylenedioxythiophene) (PEDOT) has been used to form implanted EMG electrodes83, and liquid metal has enabled implanted ENG recording84. For brain signals, Carneiro et al. developed a headband with conductive stretchable ink for forehead EEG acquisition85, and invasive ECoG has also been recorded with flexible electronics86. Flexible electronics can also sense human mechanical movement with multiple channels87. Spatial resolution is important for the quality of neuromuscular signal sensing. Thus, flexible electronics for wearable robots need to form high-density arrays and overcome wiring difficulties, especially under strain, when connections tend to break80.
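Broken or poorly contacting channels in a stretchable high-density array can be partly mitigated in software by flagging them and interpolating from neighboring electrodes. The Python sketch below flags channels whose RMS is near zero (an open connection) or far above the grid median (a noisy contact) and replaces each with the mean of its valid 4-connected neighbors. The thresholds, grid size, and synthetic data are hypothetical, and interpolation cannot recover information that only the broken electrode carried.

```python
import numpy as np


def repair_grid(frames, low_rms=1e-3, high_ratio=5.0):
    """frames: array (samples, rows, cols) from a high-density electrode grid.
    Flags channels whose RMS is near zero (open connection) or more than
    `high_ratio` times the grid median (noisy contact), then replaces each flagged
    channel with the mean of its valid 4-connected neighbors."""
    x = np.asarray(frames, float).copy()
    rms = np.sqrt((x ** 2).mean(axis=0))
    bad = (rms < low_rms) | (rms > high_ratio * np.median(rms))
    rows, cols = rms.shape
    for r, c in zip(*np.nonzero(bad)):
        neighbors = [
            (r + dr, c + dc)
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1))
            if 0 <= r + dr < rows and 0 <= c + dc < cols and not bad[r + dr, c + dc]
        ]
        if neighbors:  # leave the channel as-is if every neighbor is also flagged
            x[:, r, c] = np.mean([x[:, rr, cc] for rr, cc in neighbors], axis=0)
    return x, bad


# Hypothetical 8 x 8 grid, 500 samples: one open wire and one noisy contact.
rng = np.random.default_rng(2)
grid = 0.1 * rng.standard_normal((500, 8, 8))
grid[:, 3, 4] = 0.0    # connection broken under strain
grid[:, 0, 0] *= 50.0  # poor skin contact
repaired, bad = repair_grid(grid)
print(np.argwhere(bad))
```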

Flexible electronics may also serve as an interactive feedback mechanism, enhancing communication between humans and wearable robots. Chossat et al. developed a soft skin-stretch device with twisted and coiled polymer actuators for generating haptic feedback88. Akhtar et al. used a flexible interface to provide electrotactile touch feedback to prosthesis users89. Flexible electronics have also played a role in in vivo sensory feedback: Lienemann et al. developed cuff electrodes with stretchable gold nanowires and achieved invasive peripheral nerve stimulation90, and Minev et al. developed an electronic dura mater capable of delivering electrical stimulation and even drugs91. As with sensing, feedback also requires high resolution; Zhu et al. achieved large-area pressure feedback with flexible electronics92. To meet the bidirectional requirements of the neuromuscular interface, flexible electronics need to integrate sensing and feedback. Vitale et al. achieved neural stimulation and recording with soft carbon nanotube fiber microelectrodes93. Since many flexible neuromuscular interfaces work in vivo, they must move toward high biocompatibility and long durability to avoid rejection and replacement.
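When sensing and stimulation share one interface, the recording must cope with stimulation artifacts. The simplest software approach is blanking, sketched below in Python: for a short window after each stimulation onset, the recorded samples are replaced by the last pre-stimulus value. The 2 ms window and the synthetic signal are hypothetical. Blanking discards neural data during the window, which is why hardware that can record during stimulation is attractive for bidirectional interfaces.

```python
import numpy as np


def blank_artifacts(recording, stim_onsets, fs, blank_ms=2.0):
    """Sample-and-hold blanking: for `blank_ms` after each stimulation onset
    (given as a sample index), replace the recording with the last pre-stimulus sample."""
    y = np.asarray(recording, float).copy()
    n_blank = int(round(blank_ms * 1e-3 * fs))
    for onset in sorted(stim_onsets):
        hold = y[onset - 1] if onset > 0 else 0.0
        y[onset:onset + n_blank] = hold
    return y


# Hypothetical 0.5 s recording at 10 kHz with two 1 ms stimulation artifacts.
fs = 10_000
t = np.arange(5_000) / fs
neural = np.sin(2 * np.pi * 20 * t)
raw = neural.copy()
for onset in (1_000, 3_000):
    raw[onset:onset + 10] += 100.0
clean = blank_artifacts(raw, [1_000, 3_000], fs)
print(np.abs(raw).max(), np.abs(clean).max())
```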

Biomechatronic chip

For wearable robots, handling human physiological signals involves acquisition, transfer, and processing, and the current human-robot interface for idiodynamics and sensation is limited by deficiencies in these steps. Because human physiological electrical signals are weak, they first need to be amplified to the level required by the following circuit, while addressing electromagnetic interference, artifacts from the human body, and noise. The analog-to-digital converter (ADC) then directly determines whether the data used for signal processing are reliable. Meanwhile, the latest wearable robot control and optimization methods involve large-scale deep learning networks, which are extremely costly to run on conventional computing hardware (Fig. 6). A biomechatronic chip is specifically designed for the acquisition, transfer, and processing of biomechatronic information94, making it very attractive as the central processing unit of a wearable robot.

The signal acquisition part takes in multi-modal information, the ADC prepares processable data, and neuromorphic computing handles on-chip neural network calculation94. The biomechatronic chip serves as the central control unit for the wearable robot.

In wearable robot applications, data acquisition faces challenges from multiple signal modalities, many channels, weak raw signal amplitudes, and noise. To reduce noise in neuromuscular sensing, chopper modulation and current multiplexing techniques have been proposed to modulate input noise when acquiring neural signals95. To amplify weak neuromuscular signals, Ng and Xu applied single-ended CMOS-inverter-based preamplifiers to both the reference and neural signal inputs and achieved low power consumption96. For signals of different modalities, different amplification gains have been achieved with a programmable gain amplifier97. To acquire multi-channel data for higher signal resolution, Luo et al. designed a 16-channel neural-signal acquisition chip with low noise98. In addition, a direct current servo loop has been introduced to suppress the offset voltage of neuromuscular signals99. As the front end, signal acquisition still needs to optimize these parameters simultaneously while keeping power consumption low.
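The principle behind chopper modulation can be checked numerically. The input is multiplied by a ±1 square wave before the amplifier, so the amplifier's own offset is added to a modulated signal; demodulating with the same square wave restores the signal to baseband while moving the offset up to the chopping frequency, where a low-pass filter removes it. This Python sketch models the amplifier's low-frequency error as a constant offset only, with hypothetical values (1 mV input, gain 100, 50 mV offset, 1 kHz chopping at 100 kHz sampling), and uses a one-period moving average as the low-pass filter.

```python
import numpy as np


def chopper_amplify(x, fs, f_chop, gain, offset):
    """Behavioral model of a chopper-stabilized amplifier: modulate, amplify
    (adding offset), demodulate, then low-pass with a one-period moving average."""
    n = np.arange(len(x))
    period = int(round(fs / f_chop))
    square = np.where((n % period) < period // 2, 1.0, -1.0)  # 50% duty, +/-1
    amplified = gain * (square * x) + offset  # offset is added after modulation
    demodulated = square * amplified          # = gain * x + square * offset
    kernel = np.ones(period) / period
    return np.convolve(demodulated, kernel, mode="same")


def plain_amplify(x, fs, f_chop, gain, offset):
    """Same amplifier and filter without chopping, for comparison."""
    period = int(round(fs / f_chop))
    kernel = np.ones(period) / period
    return np.convolve(gain * np.asarray(x, float) + offset, kernel, mode="same")


fs, f_chop, gain, offset = 100_000, 1_000, 100.0, 0.05
t = np.arange(20_000) / fs
x = 1e-3 * np.sin(2 * np.pi * 10 * t)  # 1 mV, 10 Hz test signal
inner = slice(200, -200)               # ignore filter edge effects
err_chop = np.abs(chopper_amplify(x, fs, f_chop, gain, offset)[inner] - gain * x[inner]).max()
err_plain = np.abs(plain_amplify(x, fs, f_chop, gain, offset)[inner] - gain * x[inner]).max()
print(err_chop, err_plain)
```

In this idealized model the offset cancels exactly because every filter window spans one full chopping period; real circuits leave residual ripple and charge-injection errors.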

The ADC is vital for biomechatronic chips in wearable robot applications because it determines the quality of the data used for subsequent signal processing. The primary consideration is conversion accuracy. Ahmed and Kakkar designed an auto-configurable eight-bit successive approximation register (SAR) ADC for neural implants and reported good performance100. However, their design did not consider offset compensation, which is important for an ADC. Wendler et al. developed a two-step incremental delta-sigma ADC with 120-mVpp offset compensation for neural signal recording101. Power consumption and channel count are the two main factors limiting ADC development for wearable robot applications. Gagnon-Turcotte et al. designed a CMOS biomechatronic chip for simultaneous multichannel optogenetics and neural signal recording with a low power consumption of 11.2 µW102. However, chip area grows as the number of channels increases to capture high-density neuromuscular signals. In addition, stimulation artifacts must be rejected to achieve a bidirectional neuromuscular interface. Pazhouhandeh et al. proposed a neural ADC with blind stimulation artifact rejection, allowing a bidirectional CMOS neural interface to capture neural signals during neural stimulation103.
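The successive-approximation principle mentioned above is essentially a binary search. Starting from the most significant bit, each bit is tentatively set, an internal DAC produces the corresponding voltage, and a comparator decides whether to keep the bit. An N-bit conversion therefore takes N comparisons. The sketch below assumes an ideal DAC and comparator. It models offset compensation simply as subtracting a previously calibrated offset `v_offset`, which is a hypothetical parameter. It is not the auto-configurable design of the cited work.

```python
def sar_adc(v_in: float, v_ref: float, n_bits: int, v_offset: float = 0.0) -> int:
    """Idealized successive-approximation conversion of v_in to an n_bits code.

    v_offset is a hypothetical, previously calibrated offset removed before
    conversion; inputs outside [0, v_ref) saturate at 0 or 2**n_bits - 1.
    """
    v = v_in - v_offset
    code = 0
    for bit in reversed(range(n_bits)):        # MSB first
        trial = code | (1 << bit)              # tentatively set this bit
        v_dac = trial * v_ref / (1 << n_bits)  # ideal DAC output for the trial code
        if v >= v_dac:                         # comparator decision
            code = trial                       # keep the bit
    return code


print(sar_adc(0.30, v_ref=1.0, n_bits=8))                 # 76
print(sar_adc(0.35, v_ref=1.0, n_bits=8, v_offset=0.05))  # 76 (offset removed)
```

Because each comparison halves the remaining search interval, the conversion time grows linearly with resolution. This is one reason SAR converters are popular for low-power biosignal front ends.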

Current wearable robot control and optimization involve large-scale deep learning algorithms, which require high computational throughput and even dedicated hardware for neural network calculation. The neuromorphic chip Loihi was proposed to perform such computation efficiently in integrated circuits by mimicking the dynamics of spiking neurons and dynamic synapses104. With recent advances in memristive materials, which retain the state induced by a transient spike, neuromorphic chips could become efficient and compact for neural network computing105. Kreiser et al. demonstrated pose estimation and map formation with spiking neural networks toward neuromorphic simultaneous localization and mapping (SLAM)106, which holds potential for wearable robots that assist visually impaired individuals. Stewart et al. achieved online gesture learning on a neuromorphic chip107, which could suit prosthetic applications. Flexible neuromorphic electronics for neuroprosthetics have also been reported108. Despite these achievements, algorithms develop faster than hardware, so neuromorphic chips remain limited in reconfigurability. It is difficult to construct different network architectures on them to suit different wearable robot applications.
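To make "the dynamics of spiking neurons" concrete, the sketch below simulates a single leaky integrate-and-fire (LIF) neuron. Neuromorphic processors such as Loihi implement variants of this model in hardware. The membrane potential leaks toward rest, integrates input current, and emits a spike and resets when it crosses a threshold. Stronger input therefore yields a higher firing rate. The forward-Euler integration and all parameter values (time step, time constant, threshold) are illustrative assumptions, not Loihi's actual configuration.

```python
def lif_spike_times(input_current, dt=1e-3, tau=20e-3, v_rest=0.0,
                    v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Simulate a leaky integrate-and-fire neuron with forward-Euler steps.

    Returns the indices of the time steps at which the neuron spikes.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # The membrane potential leaks toward v_rest and integrates the input.
        v += (dt / tau) * (-(v - v_rest) + resistance * current)
        if v >= v_threshold:  # threshold crossing emits a spike...
            spikes.append(step)
            v = v_reset       # ...and the membrane potential is reset
    return spikes


weak = lif_spike_times([0.5] * 1000)      # steady state 0.5 < threshold: no spikes
strong = lif_spike_times([2.0] * 1000)
stronger = lif_spike_times([4.0] * 1000)
print(len(weak), len(strong) < len(stronger))  # 0 True
```

Information is carried by when and how often spikes occur rather than by continuous activations. Event-driven hardware can exploit this by computing only when spikes arrive.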

The next generation of wearable robots

We foresee that the next generation of wearable robots will broaden their applications by leveraging breakthroughs in multi-modal fusion, human-in-the-loop control, neuromuscular interfaces, flexible electronics, and biomechatronic chips. With intuitive control and proprioception, next-generation wearable robots should better meet users' requirements for motor and sensory enhancement and reconstruction. Fusing multi-modal information could help ensure reliable perception of the environment and of human intention. Putting the human in the control loop of this human-centered system enables intuitive human-robot interaction. Establishing a bidirectional neuromuscular interface could reconstruct the neural pathway for sensation. Flexible electronics could reduce artifacts caused by relative movement between the wearable robot and the human. Moreover, biomechatronic chips with dedicated information acquisition, transfer, and neural network computation would enable fast signal processing for wearable robots.

Multi-modal fusion will help overcome deficiencies in perceiving environmental information and human intention, especially complex intentions. To exploit the complementary characteristics of multi-modal information obtained from heterogeneous sensors, novel multi-modal feature fusion strategies for human motion intention recognition should be studied109. In addition, an accurate motion intention recognition model that adapts to different individuals with various motor and sensory dysfunctions is of great importance5. Fusing available auxiliary modalities, such as EMG signals and visual images, can enhance the performance of EEG-based BCIs for wearable robots110. Moreover, the accuracy and real-time performance of human motion intention recognition together determine the overall user acceptability of wearable robots. Advances in the fusion of multi-scale, multi-modal information would enable compliant human-machine interaction.
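One simple way to combine heterogeneous modalities is feature-level (early) fusion. Each modality's features are normalized with that modality's own statistics and then concatenated into a single vector for a downstream intention classifier. Without normalization, millivolt-scale EMG features would numerically dominate microvolt-scale EEG features. The sketch below is only an illustration of this one strategy, not the fusion methods of the cited studies, which include more elaborate decision-level and learned schemes. The feature arrays are hypothetical random data. The statistics are fitted once, on training data, and reused at run time.

```python
import numpy as np


def fit_modality_stats(modalities, eps=1e-12):
    """Per-modality, per-feature mean and standard deviation from training data."""
    return [(m.mean(axis=0), m.std(axis=0) + eps) for m in modalities]


def fuse_features(modalities, stats):
    """Early fusion: z-score each modality with its own statistics, then concatenate."""
    normalized = [(m - mean) / std for m, (mean, std) in zip(modalities, stats)]
    return np.concatenate(normalized, axis=1)


rng = np.random.default_rng(0)
emg = rng.normal(loc=2e-3, scale=5e-4, size=(200, 4))  # hypothetical EMG features (V)
eeg = rng.normal(loc=0.0, scale=2e-5, size=(200, 8))   # hypothetical EEG features (V)
stats = fit_modality_stats([emg, eeg])
fused = fuse_features([emg, eeg], stats)
print(fused.shape)  # (200, 12)
```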

The next generation of human-in-the-loop control aims to develop control strategies that are simple and “fit like a glove” for each user, integrating information from multiple human responses to generate coherent control parameter configurations and improve human-robot interaction111. Exoskeletons and prostheses have different needs. Even so, natural and reliable control that minimizes the interaction forces between robot and human, and so improves comfort, is desirable for both types of wearable robots. Discomfort and poor body fit are common causes of device rejection112. A wrong set of control parameters may also cause discomfort and pain113, so personalization may be necessary. Future controllers should take into account user preference and coactive feedback, and combine cost functions with other metrics to optimize the interaction, thereby increasing user acceptance114. Advances in closing the human-robot loop in wearable robot control can benefit different populations with motor and sensory dysfunctions.
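The core of human-in-the-loop optimization is an outer loop. A controller parameter is proposed, a human-derived cost is measured, and the next proposal is chosen from the results. Published studies have used methods such as CMA-ES or Bayesian optimization to cope with noisy, slowly measured costs and several parameters at once. The sketch below substitutes a much simpler golden-section search over a single hypothetical parameter (the timing of peak assistance, as a percentage of the gait cycle). It assumes a noise-free, unimodal cost. The function `hypothetical_metabolic_cost` and its optimum at 52% are invented for illustration. Each call to it stands in for one measurement bout on a real user.

```python
import math
from typing import Callable


def golden_section_search(cost: Callable[[float], float], lower: float,
                          upper: float, iterations: int = 30) -> float:
    """Minimize a unimodal 1-D cost by golden-section search; returns the estimated minimizer."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # about 0.618
    a, b = lower, upper
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    fc, fd = cost(c), cost(d)               # each evaluation = one measurement bout
    for _ in range(iterations):
        if fc < fd:                         # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = cost(c)
        else:                               # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = cost(d)
    return (a + b) / 2.0


def hypothetical_metabolic_cost(peak_timing_pct: float) -> float:
    """Hypothetical user cost with an optimum at 52% of the gait cycle (illustrative only)."""
    return 1.0 + 0.002 * (peak_timing_pct - 52.0) ** 2


best = golden_section_search(hypothetical_metabolic_cost, 40.0, 60.0)
print(round(best, 2))  # 52.0
```

Golden-section search reuses one of its two interior evaluations at every step, so each iteration costs only one new measurement. That economy matters when every measurement is several minutes of walking. It does not, however, tolerate measurement noise, which is why the methods used in practice are more robust.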

Wearable robots involving neuromuscular interfaces have demonstrated significant benefits in rehabilitation, but their widespread adoption still faces significant challenges. For example, although BCIs have shown promising results in detecting users' intentions during wearable robot use, their real-world effectiveness has yet to be fully explored. Invasive brain recording methods provide higher accuracy. However, their acceptance is likely to be limited to a small group of patients because of concerns about the safety and durability of invasive neuromuscular interfaces, which may require additional surgery115. Conversely, non-invasive methods such as EEG contain less information and are more susceptible to noise and artifacts. Therefore, better recording hardware and detection algorithms are still required to prepare EEG-based BCIs for real-world applications. Another challenge of neuromuscular interfaces is the long adaptation time. Because signals vary across sessions and individuals, using the same BCI system without calibration or retraining is nearly impractical. Enhancing the transferability of learned representations is therefore crucial for seamlessly integrating these systems with wearable robots. In addition, neuromuscular interfaces are typically built on the human side. It is also important to build an interface between the robot and the environment, for example, sensors on prostheses that generate sensory feedback for the user116. To achieve a positive user experience, the neuromuscular interface should provide both reliable decoding of user intentions and real-time delivery of sensory feedback. In the future, continued development of bidirectional neuromuscular interfaces will bring leaps in wearable robots for motor and sensory reconstruction.

Flexible electronics offer the advantage of conforming to human skin or tissue. They also scale well and can be fabricated in different shapes to meet the requirements of different wearable robots. The new generation of flexible electronics for wearable robots should be stretchable and self-healing, with low cross-coupling, low-cost processing, and multi-sensor integration117. Self-powering is another direction that could simplify the arrangement of interfaces for wearable robots118. Going one step further, flexible electronics could serve not only as sensors but also as the interface for sensory feedback119. This integration would greatly benefit the bidirectional neuromuscular interfaces discussed above. Advances in flexible electronics will provide new strategies for the research and development of wearable robots.

The next generation of wearable robots should use dedicated biomechatronic chips with high performance in data acquisition, analog-to-digital conversion, and processing. Multi-modal signals span a wide range of amplitudes. Chips with digitally controlled programmable gain amplifiers, which provide multiple amplification ratios to adapt to changes in the input signal, would therefore be highly beneficial120,121. Bit errors are a problem in ADCs. Adding redundant bits to the binary code could improve fault tolerance and reduce the bit-error probability, and introducing digital calibration would improve the effective resolution122. Neuromorphic chips could run neural networks with various topologies and thus support large-scale deep learning algorithms, with neuromorphic hardware enabling continual learning from both training data and experience. Together, these three key components constitute a next-generation biomechatronic chip that could serve as a powerful heart for wearable robots.
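The benefit of a programmable gain amplifier can be shown with a minimal gain-selection rule. Given the expected peak amplitude of the current modality, it picks the largest available gain that keeps the amplified signal within a fraction (the headroom) of the ADC input range. This uses as much of the converter's resolution as possible without clipping. The gain set, the 0.8 headroom factor, the 1 V ADC range, and the example amplitudes (millivolt-level EMG, tens-of-microvolts EEG) are illustrative assumptions. They are not taken from the cited amplifier designs.

```python
from typing import Sequence


def select_pga_gain(peak_amplitude: float, adc_full_scale: float,
                    gains: Sequence[float], headroom: float = 0.8) -> float:
    """Pick the largest gain that keeps the amplified peak within headroom * ADC range.

    Falls back to the smallest gain if every setting would saturate the ADC.
    """
    limit = headroom * adc_full_scale
    fitting = [g for g in gains if peak_amplitude * g <= limit]
    return max(fitting) if fitting else min(gains)


GAINS = (1, 10, 100, 1_000, 10_000)  # hypothetical digitally selectable gain settings
print(select_pga_gain(5e-3, 1.0, GAINS))   # millivolt-level EMG peak: 100
print(select_pga_gain(50e-6, 1.0, GAINS))  # tens-of-microvolt EEG peak: 10000
```

In a chip, the selected setting would typically be written as a digital gain code. The same amplifier could then serve different modalities or track slow changes in signal amplitude.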

Realistically, wider clinical application of multi-modal fusion, human-in-the-loop control, neuromuscular interfaces, flexible electronics, and biomechatronic chips in wearable robots should occur within the next decade. All of these technologies have been validated and shown to benefit people with motor and sensory dysfunction, such as hemiplegic patients and amputees. Exoskeletons and prostheses that combine these cutting-edge technologies will constitute a new generation of wearable robots. We expect them to significantly improve users' quality of life and to pave the way for motor and sensory enhancement and reconstruction.

References

Zbinden, J., Lendaro, E. & Ortiz-Catalan, M. Prosthetic embodiment: systematic review on definitions, measures, and experimental paradigms. J. Neuroeng. Rehabil. 19, 37 (2022).

Serino, A. et al. Upper limb cortical maps in amputees with targeted muscle and sensory reinnervation. Brain 140, 2993–3011 (2017).

Rognini, G. et al. Multisensory bionic limb to achieve prosthesis embodiment and reduce distorted phantom limb perceptions. J. Neurol. Neurosurg. Psychiatry 90, 833–836 (2019).

Fritsch, A., Lenggenhager, B. & Bekrater-Bodmann, R. Prosthesis embodiment and attenuation of prosthetic touch in upper limb amputees – A proof-of-concept study. Conscious Cogn. 88, 103073 (2021).

Forte, G. et al. Exoskeletons for mobility after spinal cord injury: a personalized embodied approach. J. Pers. Med. 12, 380 (2022).

Hybart, R. L. & Ferris, D. P. Embodiment for robotic lower-limb exoskeletons: a narrative review. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 657–668 (2023).

Postol, N. et al. “Are we there yet?” expectations and experiences with lower limb robotic exoskeletons: a qualitative evaluation of the therapist perspective. Disabil Rehabil 1–8 (2023) https://doi.org/10.1080/09638288.2023.2183992.

Xue, T. et al. Progress and prospects of multimodal fusion methods in physical human–robot interaction: A review. IEEE Sens J. 20, 10355–10370 (2020).

Ding, Y., Kim, M., Kuindersma, S. & Walsh, C. J. Human-in-the-loop optimization of hip assistance with a soft exosuit during walking. Sci. Robot. 3, eaar5438 (2018).

Raspopovic, S., Valle, G. & Petrini, F. M. Sensory feedback for limb prostheses in amputees. Nat. Mater. 20, 925–939 (2021).

Martinez-Hernandez, U. et al. Wearable assistive robotics: a perspective on current challenges and future trends. Sensors 21, 6751 (2021).

Pertuz, S. A., Llanos, C., Peña, C. A. & Muñoz, D. A modular and distributed impedance control architecture on a chip for a robotic hand. in 2018 31st Symposium on Integrated Circuits and Systems design (SBCCI) 1–6 (IEEE, 2018).

Quan, Z., Sun, T., Su, M. & Wei, J. Multimodal sentiment analysis based on cross-modal attention and gated cyclic hierarchical fusion networks. Comput. Intell. Neurosci. 2022, 4767437 (2022).

Walsh, C. Human-in-the-loop development of soft wearable robots. Nat. Rev. Mater. 3, 78–80 (2018).

Liu, Y. et al. Intraoperative monitoring of neuromuscular function with soft, skin-mounted wireless devices. NPJ Digit. Med. 1, 19 (2018).

Yuk, H., Wu, J. & Zhao, X. Hydrogel interfaces for merging humans and machines. Nat. Rev. Mater. 7, 935–952 (2022).

Sandamirskaya, Y., Kaboli, M., Conradt, J. & Celikel, T. Neuromorphic computing hardware and neural architectures for robotics. Sci. Robot. 7, eabl8419 (2022).

Farina, D. et al. Toward higher-performance bionic limbs for wider clinical use. Nat. Biomed. Eng. 7, 473–485 (2023).

Siviy, C. et al. Opportunities and challenges in the development of exoskeletons for locomotor assistance. Nat. Biomed. Eng. 7, 456–472 (2022).

Díaz, M. A. et al. Human-in-the-loop optimization of wearable robotic devices to improve human–robot interaction: A systematic review. IEEE Trans. Cybern. 53, 7483–7496 (2022).

Novak, D. & Riener, R. A survey of sensor fusion methods in wearable robotics. Rob. Auton. Syst. 73, 155–170 (2015).

Lara, J. E., Cheng, L. K., Rohrle, O. & Paskaranandavadivel, N. Muscle-specific high-density electromyography arrays for hand gesture classification. IEEE Trans. Biomed. Eng. 69, 1758–1766 (2022).

Lee, H. S. et al. Individual finger movement decoding using a novel ultra-high-density electroencephalography-based brain-computer interface system. Front. Neurosci. 16, 1009878 (2022).

Cheng, J., Yang, Z., Overstreet, C. K. & Keefer, E. Fascicle-specific targeting of longitudinal intrafascicular electrodes for motor and sensory restoration in upper-limb amputees. Hand Clin. 37, 401–414 (2021).

Branco, M. P. et al. Decoding hand gestures from primary somatosensory cortex using high-density ECoG. Neuroimage 147, 130–142 (2017).

Benabid, A. L. et al. An exoskeleton controlled by an epidural wireless brain–machine interface in a tetraplegic patient: a proof-of-concept demonstration. Lancet Neurol. 18, 1112–1122 (2019).

Shen, K., Chen, O., Edmunds, J. L., Piech, D. K. & Maharbiz, M. M. Translational opportunities and challenges of invasive electrodes for neural interfaces. Nat. Biomed. Eng. 7, 424–442 (2023).

Xia, H. et al. Design of A Multi-Functional Soft Ankle Exoskeleton for Foot-Drop Prevention, Propulsion Assistance, and Inversion/Eversion Stabilization. in 2020 8th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob) 118–123 (IEEE, 2020). https://doi.org/10.1109/BioRob49111.2020.9224420.

Fleming, A. et al. Myoelectric control of robotic lower limb prostheses: a review of electromyography interfaces, control paradigms, challenges and future directions. J. Neural Eng. 18, 041004 (2021).

Caulcrick, C., Huo, W., Hoult, W. & Vaidyanathan, R. Human joint torque modelling with MMG and EMG during lower limb human-exoskeleton interaction. IEEE Robot. Autom. Lett. 6, 7185–7192 (2021).

Botter, A., Beltrandi, M., Cerone, G. L., Gazzoni, M. & Vieira, T. M. M. Development and testing of acoustically-matched hydrogel-based electrodes for simultaneous EMG-ultrasound detection. Med. Eng. Phys. 64, 74–79 (2019).

Guo, W., Sheng, X., Liu, H. & Zhu, X. Toward an enhanced human–machine interface for upper-limb prosthesis control with combined EMG and NIRS signals. IEEE Trans. Hum. Mach. Syst. 47, 564–575 (2017).

Sheng, X. et al. Toward an integrated multi-modal sEMG/MMG/NIRS sensing system for human–machine interface robust to muscular fatigue. IEEE Sens J. 21, 3702–3712 (2020).

Chen, X. et al. A multimodal investigation of in vivo muscle behavior: System design and data analysis. in 2014 IEEE International Symposium on Circuits and Systems (ISCAS) 2053–2056 (IEEE, 2014). https://doi.org/10.1109/ISCAS.2014.6865569.

Kiguchi, K. & Hayashi, Y. Motion Estimation Based on EMG and EEG Signals to Control Wearable Robots. in 2013 IEEE International Conference on Systems, Man, and Cybernetics 4213–4218 (IEEE, 2013). https://doi.org/10.1109/SMC.2013.718.

Fernandez-Vargas, J., Kita, K. & Yu, W. Real-time Hand Motion Reconstruction System for Trans-Humeral Amputees Using EEG and EMG. Front. Robot. AI 3, https://doi.org/10.3389/frobt.2016.00050 (2016).

Krausz, N. E. et al. Intent prediction based on biomechanical coordination of EMG and vision-filtered gaze for end-point control of an arm prosthesis. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 1471–1480 (2020).

Wu, D. et al. Deep dynamic neural networks for multimodal gesture segmentation and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 38, 1583–1597 (2016).

Li, K., Zhao, X., Bian, J. & Tan, M. Sequential learning for multimodal 3D human activity recognition with long-short term memory. in 2017 IEEE International Conference on Mechatronics and Automation (ICMA) 1556–1561 (IEEE, 2017).

Falco, P. et al. Cross-modal visuo-tactile object recognition using robotic active exploration. in 2017 IEEE International Conference on Robotics and Automation (ICRA) 5273–5280 (IEEE, 2017).

Zhang, J. et al. Human-in-the-loop optimization of exoskeleton assistance during walking. Science 356, 1280–1284 (2017).

Riener, R. et al. Patient-cooperative strategies for robot-aided treadmill training: first experimental results. IEEE Trans. Neural Syst. Rehabil. Eng. 13, 380–394 (2005).

Nguiadem, C., Raison, M. & Achiche, S. Motion planning of upper-limb exoskeleton robots: a review. Appl. Sci. 10, 7626 (2020).

Huang, R., Cheng, H., Guo, H., Lin, X. & Zhang, J. Hierarchical learning control with physical human-exoskeleton interaction. Inf. Sci. 432, 584–595 (2018).

Selinger, J. C. & Donelan, J. M. Estimating instantaneous energetic cost during non-steady-state gait. J. Appl. Physiol. 117, 1406–1415 (2014).

Gordon, D. F. N., McGreavy, C., Christou, A. & Vijayakumar, S. Human-in-the-loop optimization of exoskeleton assistance via online simulation of metabolic cost. IEEE Trans. Robot. 38, 1410–1429 (2022).

Jackson, R. W. & Collins, S. H. Heuristic-based ankle exoskeleton control for co-adaptive assistance of human locomotion. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 2059–2069 (2019).

Garcia-Rosas, R., Tan, Y., Oetomo, D., Manzie, C. & Choong, P. Personalized online adaptation of kinematic synergies for human-prosthesis interfaces. IEEE Trans. Cybern. 51, 1070–1084 (2019).

Tucker, M. et al. Preference-based learning for exoskeleton gait optimization. in 2020 IEEE International Conference on Robotics and Automation (ICRA) 2351–2357 (IEEE, 2020).

Malcolm, P. et al. Continuous sweep versus discrete step protocols for studying effects of wearable robot assistance magnitude. J. Neuroeng. Rehabil. 14, 1–13 (2017).

Zhang, Y., Li, S., Nolan, K. J. & Zanotto, D. Reinforcement learning assist-as-needed control for robot assisted gait training. in 2020 8th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob) 785–790 (IEEE, 2020).

Salwan, D., Kant, S., Pareek, H. & Sharma, R. Challenges with reinforcement learning in prosthesis. Mater. Today Proc. 49, 3133–3136 (2022).

Collinger, J. L. et al. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet 381, 557–564 (2013).

Courtine, G., Micera, S., DiGiovanna, J. & Millán, J. del R. Brain–machine interface: closer to therapeutic reality? Lancet 381, 515–517 (2013).

Islam, Md. K. & Rastegarnia, A. Recent advances in EEG (non-invasive) based BCI applications. Front. Comput. Neurosci. 17, 1151852 (2023).

Al-Saegh, A., Dawwd, S. A. & Abdul-Jabbar, J. M. Deep learning for motor imagery EEG-based classification: A review. Biomed. Signal Process Control 63, 102172 (2021).

Li, Z. et al. Adaptive neural control of a kinematically redundant exoskeleton robot using brain–machine interfaces. IEEE Trans. Neural Netw. Learn. Syst. 30, 3558–3571 (2019).

López-Larraz, E. et al. Control of an ambulatory exoskeleton with a brain–machine interface for spinal cord injury gait rehabilitation. Front. Neurosci. 10, 359 (2016).

Bandara, D., Arata, J. & Kiguchi, K. A noninvasive brain–computer interface approach for predicting motion intention of activities of daily living tasks for an upper-limb wearable robot. Int. J. Adv. Robot. Syst. 15, 172988141876731 (2018).

Looned, R., Webb, J., Xiao, Z. G. & Menon, C. Assisting drinking with an affordable BCI-controlled wearable robot and electrical stimulation: a preliminary investigation. J. Neuroeng. Rehabil. 11, 51 (2014).

Hahne, J. M., Farina, D., Jiang, N. & Liebetanz, D. A novel percutaneous electrode implant for improving robustness in advanced myoelectric control. Front. Neurosci. 10, 114 (2016).

Grosheva, M., Wittekindt, C. & Guntinas-Lichius, O. Prognostic value of electroneurography and electromyography in facial palsy. Laryngoscope 118, 394–397 (2008).

Cracchiolo, M. et al. Decoding of grasping tasks from intraneural recordings in trans-radial amputee. J. Neural Eng. 17, 026034 (2020).

Micera, S. et al. Decoding of grasping information from neural signals recorded using peripheral intrafascicular interfaces. J. Neuroeng. Rehabil. 8, 1–10 (2011).

Levy, T. J. et al. An impedance matching algorithm for common-mode interference removal in vagus nerve recordings. J. Neurosci. Methods 330, 108467 (2020).

Ortiz-Catalan, M., Håkansson, B. & Brånemark, R. An osseointegrated human-machine gateway for long-term sensory feedback and motor control of artificial limbs. Sci. Transl. Med. 6, 257re6 (2014).

Sun, Z., Zhu, M., Shan, X. & Lee, C. Augmented tactile-perception and haptic-feedback rings as human-machine interfaces aiming for immersive interactions. Nat. Commun. 13, 5224 (2022).

Ninu, A. et al. Closed-loop control of grasping with a myoelectric hand prosthesis: Which are the relevant feedback variables for force control? IEEE Trans. Neural Syst. Rehabil. Eng. 22, 1041–1052 (2014).

Iberite, F. et al. Restoration of natural thermal sensation in upper-limb amputees. Science 380, 731–735 (2023).

Osborn, L. E., Iskarous, M. M. & Thakor, N. V. Sensing and control for prosthetic hands in clinical and research applications. in Wearable Robotics 445–468 (Elsevier, 2020). https://doi.org/10.1016/B978-0-12-814659-0.00022-9.

Zhang, D., Xu, H., Shull, P. B., Liu, J. & Zhu, X. Somatotopical feedback versus non-somatotopical feedback for phantom digit sensation on amputees using electrotactile stimulation. J. Neuroeng. Rehabil. 12, 1–11 (2015).

Roche, A. D. et al. Upper limb prostheses: bridging the sensory gap. J. Hand Surg. 48, 182–190 (2023).

Graczyk, E. L., Delhaye, B. P., Schiefer, M. A., Bensmaia, S. J. & Tyler, D. J. Sensory adaptation to electrical stimulation of the somatosensory nerves. J. Neural Eng. 15, 046002 (2018).

Petrini, F. M. et al. Six‐month assessment of a hand prosthesis with intraneural tactile feedback. Ann. Neurol. 85, 137–154 (2019).

Valle, G. et al. Biomimetic intraneural sensory feedback enhances sensation naturalness, tactile sensitivity, and manual dexterity in a bidirectional prosthesis. Neuron 100, 37–45.e7 (2018).

Clites, T. R. et al. Proprioception from a neurally controlled lower-extremity prosthesis. Sci. Transl. Med. 10, eaap8373 (2018).

Kuiken, T. A. et al. Targeted reinnervation for enhanced prosthetic arm function in a woman with a proximal amputation: a case study. Lancet 369, 371–380 (2007).

Ortiz-Catalan, M., Mastinu, E., Sassu, P., Aszmann, O. & Brånemark, R. Self-contained neuromusculoskeletal arm prostheses. N. Engl. J. Med. 382, 1732–1738 (2020).

Lacour, S. P., Courtine, G. & Guck, J. Materials and technologies for soft implantable neuroprostheses. Nat. Rev. Mater. 1, 16063 (2016).

Xie, R. et al. Strenuous exercise-tolerance stretchable dry electrodes for continuous multi-channel electrophysiological monitoring. npj Flex. Electron. 6, 1–9 (2022).

Yang, C. & Suo, Z. Hydrogel ionotronics. Nat. Rev. Mater. 3, 125–142 (2018).

Yuk, H., Lu, B. & Zhao, X. Hydrogel bioelectronics. Chem. Soc. Rev. 48, 1642–1667 (2019).

Rossetti, N., Kateb, P. & Cicoira, F. Neural and electromyography PEDOT electrodes for invasive stimulation and recording. J. Mater. Chem. C 9, 7243–7263 (2021).

Zhang, J., Sheng, L., Jin, C. & Liu, J. Liquid metal as connecting or functional recovery channel for the transected sciatic nerve. arXiv preprint arXiv:1404.5931 (2014).

Carneiro, M. R., de Almeida, A. T. & Tavakoli, M. Wearable and comfortable e-textile headband for long-term acquisition of forehead EEG signals. IEEE Sens. J. 20, 15107–15116 (2020).

Renz, A. F. et al. Opto‐E‐Dura: a soft, stretchable ECoG array for multimodal, multiscale neuroscience. Adv. Healthc. Mater. 9, 2000814 (2020).

Zhong, C. et al. A flexible wearable e-skin sensing system for robotic teleoperation. Robotica. 41, 1025–1038 (2023).

Chossat, J.-B., Chen, D. K. Y., Park, Y.-L. & Shull, P. B. Soft wearable skin-stretch device for haptic feedback using twisted and coiled polymer actuators. IEEE Trans. Haptics 12, 521–532 (2019).

Akhtar, A., Sombeck, J., Boyce, B. & Bretl, T. Controlling sensation intensity for electrotactile stimulation in human-machine interfaces. Sci. Robot. 3, eaap9770 (2018).

Lienemann, S., Zötterman, J., Farnebo, S. & Tybrandt, K. Stretchable gold nanowire-based cuff electrodes for low-voltage peripheral nerve stimulation. J. Neural Eng. 18, 045007 (2021).

Minev, I. R. et al. Electronic dura mater for long-term multimodal neural interfaces. Science 347, 159–163 (2015).

Zhu, B. et al. Skin‐inspired haptic memory arrays with an electrically reconfigurable architecture. Adv. Mater. 28, 1559–1566 (2016).

Vitale, F., Summerson, S. R., Aazhang, B., Kemere, C. & Pasquali, M. Neural stimulation and recording with bidirectional, soft carbon nanotube fiber microelectrodes. ACS Nano 9, 4465–4474 (2015).

Liu, J., Li, Z., Gu, J., Feng, Y. & Li, G. A Neural Interface System-on-Chip for Nerve Signal Recording and Analysis of Human Gesture. in 2023 International Conference on Advanced Robotics and Mechatronics (ICARM) 79–84 (IEEE, 2023). https://doi.org/10.1109/ICARM58088.2023.10218841.

da Silva Braga, R. A., e Silva, P. M. M. & Karolak, D. B. Are CMOS operational transconductance amplifiers old fashioned? A systematic review. J. Integr. Circuits Syst. 17, 1–7 (2022).

Ng, K. A. & Xu, Y. P. A low-power, high CMRR neural amplifier system employing CMOS inverter-based OTAs with CMFB through supply rails. IEEE J. Solid-State Circuits 51, 724–737 (2016).

Tran, L. & Cha, H.-K. An ultra-low-power neural signal acquisition analog front-end IC. Microelectron. J. 107, 104950 (2021).

Luo, D., Zhang, M. & Wang, Z. A low-noise chopper amplifier designed for multi-channel neural signal acquisition. IEEE J. Solid-State Circuits 54, 2255–2265 (2019).

Sporer, M., Reich, S., Kauffman, J. G. & Ortmanns, M. A direct digitizing chopped neural recorder using a body-induced offset based DC Servo Loop. IEEE Trans. Biomed. Circuits Syst. 16, 409–418 (2022).

Ahmed, S. & Kakkar, V. Modeling and simulation of an eight-bit auto-configurable successive approximation register analog-to-digital converter for cardiac and neural implants. Simulation 94, 11–29 (2018).

Wendler, D. et al. A 0.0046-mm2 two-step incremental delta–sigma analog-to-digital converter neuronal recording front end with 120-mVpp offset compensation. IEEE J. Solid-State Circuits 58, 439–450 (2023).

Gagnon-Turcotte, G., Ethier, C., de Köninck, Y. & Gosselin, B. A 0.13μm CMOS SoC for simultaneous multichannel optogenetics and electrophysiological brain recording. in 2018 IEEE International Solid-State Circuits Conference (ISSCC) 466–468 (IEEE, 2018).

Pazhouhandeh, M. R., Chang, M., Valiante, T. A. & Genov, R. Track-and-zoom neural analog-to-digital converter with blind stimulation artifact rejection. IEEE J. Solid-State Circuits 55, 1984–1997 (2020).

Davies, M. et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Wang, W. et al. Integration and co-design of memristive devices and algorithms for artificial intelligence. iScience 23, 101809 (2020).

Kreiser, R., Renner, A., Sandamirskaya, Y. & Pienroj, P. Pose estimation and map formation with spiking neural networks: towards neuromorphic slam. in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2159–2166 (IEEE, 2018).

Stewart, K., Orchard, G., Shrestha, S. B. & Neftci, E. Online few-shot gesture learning on a neuromorphic processor. IEEE J. Emerg. Sel. Top. Circuits Syst. 10, 512–521 (2020).

Park, H. et al. Flexible neuromorphic electronics for computing, soft robotics, and neuroprosthetics. Adv. Mater. 32, 1903558 (2020).

Li, J. & Wang, Q. Multi-modal bioelectrical signal fusion analysis based on different acquisition devices and scene settings: Overview, challenges, and novel orientation. Inf. Fusion 79, 229–247 (2022).

Kim, M. et al. Visual guidance can help with the use of a robotic exoskeleton during human walking. Sci. Rep. 12, 3881 (2022).

Beckerle, P. et al. A human-robot interaction perspective on assistive and rehabilitation robotics. Front. Neurorobot. 11, 1–6 (2017).

Farina, D. et al. Toward higher-performance bionic limbs for wider clinical use. Nat. Biomed. Eng. (2021) https://doi.org/10.1038/s41551-021-00732-x.

Felt, W., Selinger, J. C., Donelan, J. M. & Remy, C. D. ‘Body-in-the-loop’: Optimizing device parameters using measures of instantaneous energetic cost. PLoS One 10, 1–21 (2015).

Ingraham, K. A., Remy, C. D. & Rouse, E. J. The role of user preference in the customized control of robotic exoskeletons. Sci. Robot. 7, eabj3487 (2022).

Cha, G. D., Kang, D., Lee, J. & Kim, D. Bioresorbable electronic implants: history, materials, fabrication, devices, and clinical applications. Adv. Healthc. Mater. 8, 1801660 (2019).

Gerratt, A., Michaud, H. & Lacour, S. Elastomeric electronic skin for prosthetic tactile sensation. Adv. Funct. Mater. 25, 2287–2295 (2015).

Yu, Y. et al. All-printed soft human-machine interface for robotic physicochemical sensing. Sci. Robot. 7, eabn0495 (2022).

Yu, X. et al. Skin-integrated wireless haptic interfaces for virtual and augmented reality. Nature 575, 473–479 (2019).

Oh, J. Y. & Bao, Z. Second skin enabled by advanced electronics. Adv. Sci. 6, 1900186 (2019).

Xu, Z. et al. A 12-Bit 50 MS/s Split-CDAC-Based SAR ADC Integrating Input Programmable Gain Amplifier and Reference Voltage Buffer. Electronics 11, 1841 (2022).

AbuShawish, I. Y. & Mahmoud, S. A. A programmable gain and bandwidth amplifier based on tunable UGBW rail-to-rail CMOS op-amps suitable for different bio-medical signal detection systems. AEU-Int. J. Electron. Commun. 141, 153952 (2021).

Frounchi, M. et al. Millimeter-wave SiGe radiometer front end with transformer-based Dicke switch and on-chip calibration noise source. IEEE J. Solid-State Circuits 56, 1464–1474 (2021).

Guo, Z. et al. Transferable multi-modal fusion in knee angles and gait phases for their continuous prediction. J. Neural Eng. 20, 036019 (2023).

Li, Z., Li, Q., Huang, P., Xia, H. & Li, G. Human-in-the-loop adaptive control of a soft exo-suit with actuator dynamics and ankle impedance adaptation. IEEE Trans. Cybern. 53, 7920–7932 (2023).

Liu, F. et al. Neuro-inspired electronic skin for robots. Sci. Robot. 7, eabl7344 (2022).

Donati, E. & Valle, G. Neuromorphic hardware for somatosensory neuroprostheses. Nat. Commun. 15, 556 (2024).

Benini, L. A brain in a black box. Nat. Phys. 19, 1391 (2023).

Kawala-Sterniuk, A. et al. Summary of over fifty years with brain-computer interfaces—a review. Brain Sci. 11, 43 (2021).

Su, Y. et al. Mechanics of finger-tip electronics. J. Appl. Phys. 114, 164511 (2013).

Alahi, M. E. E. et al. Recent advancement of electrocorticography (ECoG) electrodes for chronic neural recording/stimulation. Mater. Today Commun. 29, 102853 (2021).

Roohi-Azizi, M., Azimi, L., Heysieattalab, S. & Aamidfar, M. Changes of the brain’s bioelectrical activity in cognition, consciousness, and some mental disorders. Med. J. Islam. Repub. Iran 31, 307–312 (2017).

Carter, M. & Shieh, J. C. Guide to Research Techniques in Neuroscience. (Academic Press, 2015).

Salminger, S. et al. Long-term implant of intramuscular sensors and nerve transfers for wireless control of robotic arms in above-elbow amputees. Sci. Robot. 4, eaaw6306 (2019).

Jiang, Y. et al. Topological supramolecular network enabled high-conductivity, stretchable organic bioelectronics. Science 375, 1411–1417 (2022).

Acknowledgements

This work was supported by the following funding programs: National Key R&D Program of China (No. 2021YFF0501600) and National Natural Science Foundation of China (No. 62203419).

Author information

Contributions

H.X. and Z.L. initiated the paper and developed its outline. H.X., Y.Z., N.R., F.T., Q.Y., D.K., and Z.L. wrote the first draft. Z.L. provided guidance as senior author. D.K. provided a comprehensive review for clarity, accuracy, and consistency.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Solaiman Shokur, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xia, H., Zhang, Y., Rajabi, N. et al. Shaping high-performance wearable robots for human motor and sensory reconstruction and enhancement. Nat Commun 15, 1760 (2024). https://doi.org/10.1038/s41467-024-46249-0
