Abstract

Matrix imaging paves the way towards a next revolution in wave physics. Based on the response matrix recorded between a set of sensors, it enables an optimized compensation of aberration phenomena and multiple scattering events that usually drastically hinder the focusing process in heterogeneous media. Although it gave rise to spectacular results in optical microscopy or seismic imaging, the success of matrix imaging has been so far relatively limited with ultrasonic waves because wave control is generally only performed with a linear array of transducers. In this paper, we extend ultrasound matrix imaging to a 3D geometry. Switching from a 1D to a 2D probe enables a much sharper estimation of the transmission matrix that links each transducer and each medium voxel. Here, we first present an experimental proof of concept on a tissue-mimicking phantom through ex-vivo tissues and then, show the potential of 3D matrix imaging for transcranial applications.


Introduction

The resolution of a wave imaging system can be defined as the ability to discern small details of an object. In conventional imaging, this resolution cannot overcome the diffraction limit of a half wavelength and may be further limited by the maximum collection angle of the imaging device. However, even with a perfect imaging system, the image quality is affected by the inhomogeneities of the propagation medium. Large-scale spatial variations of the wave velocity introduce aberrations as the wave passes through the medium of interest. Strong concentration of scatterers also induces multiple scattering events that randomize the directions of wave propagation, leading to a strong degradation of the image resolution and contrast. Such problems are encountered in all domains of wave physics, in particular for the inspection of biological tissues, whether it be by ultrasound imaging1 or optical microscopy2, or for the probing of natural resources or deep structure of the Earth’s crust with seismic waves3.

To mitigate those problems, the concept of adaptive focusing has been adapted from astronomy where it was developed decades ago4,5. Ultrasound imaging employs arrays of transducers that make it possible to control and record the amplitude and phase of broadband wave fields. Wave-front distortions can be compensated for by adjusting the time delays added to each emitted and/or detected signal in order to focus ultrasonic waves at a certain position inside the medium6,7,8,9. The estimation of those time delays implies an iterative, time-consuming focusing process that should ideally be repeated for each point in the field of view10,11. Such a complex adaptive focusing scheme cannot be implemented in real time since it is extremely sensitive to motion12, whether induced by the operator holding the probe or by the movement of tissues.

Fortunately, this tedious process can now be performed in post-processing13,14 thanks to the tremendous progress made in terms of computational power and memory capacity during the last decade. To optimize the focusing process and image formation, a matrix formalism can be fruitful15,16,17,18. Indeed, once the reflection matrix R of the impulse responses between each transducer is known, any physical experiment can be achieved numerically, either in a causal or anti-causal way, for any incident beam and as many times as desired. More specifically, assuming that the medium remains fixed during the acquisition, multi-scale analysis of the wave distortions can be performed to build an estimator of the transmission matrix T between each transducer of the probe and each voxel inside the medium19. Once the T-matrix is known, a local compensation of aberrations can be performed for each voxel, thereby providing a confocal image of the medium with a close-to-ideal resolution and an optimized contrast everywhere.

Although it gave rise to striking results in optical microscopy20,21,22,23,24 or seismic imaging25,26, the experimental demonstration of matrix imaging has been, so far, less spectacular with ultrasonic waves17,18,27,28. Indeed, the first proof-of-concept experiments employed a linear array of transducers. Yet, aberrations in the human body are 3D-distributed and a 1D control of the wave field is not sufficient for a fine compensation of wave distortions as already shown by previous works29,30,31,32. Moreover, 2D imaging limits the density of independent speckle grains which controls the spatial resolution of the T-matrix estimator28.

In this work, we extend the ultrasound matrix imaging (UMI) framework to 3D using a fully populated matrix array of transducers33,34,35. The overall method is first validated by means of a well-controlled experiment combining ex-vivo pork tissues as aberrating layer on top of a tissue-mimicking phantom. 3D UMI is then applied to a head phantom whose skull induces a strong attenuation, aberration, and multiple scattering of the ultrasonic wave field, phenomena that UMI can quantify independently of each other1,19. Inspired by the CLASS method developed in optical microscopy20,22, aberrations are here compensated by a novel iterative phase reversal algorithm more efficient for 3D UMI than a singular value decomposition16,17,18. In contrast with previous works, the convergence of this algorithm is ensured by investigating the spatial reciprocity between the T-matrices in transmission and reception. Throughout the paper, we will compare the gain in terms of resolution and contrast provided by 3D UMI with respect to its 2D counterpart. In particular, we will demonstrate how 3D UMI can be a powerful tool for optimizing the focusing process inside the brain through the skull.

Results

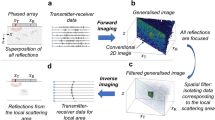

Beamforming the reflection matrix on a focused basis

3D UMI starts with the acquisition of the reflection matrix (see Methods) by means of a 2D array of transducers [32 × 32 elements, see Fig. 1a, b]. It was performed first on a tissue-mimicking phantom with nylon rods through a layer of pork tissue of fat and muscle (obtained from a chop rib piece), acting as an aberrating layer [Fig. 2a], and then on a head phantom including brain and skull-mimicking tissue, to reproduce transcranial imaging (see below). In the first experiment, the reflection matrix Ruu(t) is recorded in the transducer basis [Fig. 1a, c], i.e. by acquiring the impulse responses, R(uin, uout, t), between each transducer (u) of the probe. In the head phantom experiment, skull attenuation imposes a plane wave insonification sequence [Fig. 1b] to improve the signal-to-noise ratio. The reflection matrix Rθu then contains the reflected wave field R(θin, uout, t) recorded by the transducers uout [Fig. 1c] for each incident plane wave of angle θin.

Fig. 1. a–c The R-matrix can be acquired in the (a) transducer or (b) plane-wave basis in transmit and (c) recording the back-scattered wave field on each transducer in receive. d Confocal imaging consists in a simultaneous focusing of waves at input and output. e In UMI, the input (rin) and output (rout) focusing points are decoupled. f x−cross-section of the focused R−matrix. g Four-dimensional structure of the focused R-matrix. h UMI enables a quantification of aberrations by extracting a local RPSF (displayed here in amplitude) from each antidiagonal of Rρρ(z). i UMI then consists in a projection of the focused R-matrix in a correction (here transducer) basis at output. The resulting dual R-matrix connects each focusing point to its reflected wave-front. j UMI then consists in realigning those wave-fronts to isolate their distorted component from their geometrical counterpart, thereby forming the D-matrix. k An iterative phase reversal algorithm provides an estimator of the T-matrix between the correction basis and the mid-point of input focusing points considered in panel (i). l The phase conjugate of the T-matrix provides a focusing law that improves the focusing process at output. m RPSF amplitude after the output UMI process. The ultrasound data shown in this figure corresponds to the pork tissue experiment at depth z = 40 mm.

Fig. 2. a Schematic of the experiment. b Maps of original RPSFs (in amplitude) at depth z = 29 mm. c Aberration phase laws extracted at the different steps of the UMI process. d Corresponding RPSFs after aberration compensation at each step. e, f 3D confocal and UMI images with one longitudinal and transverse cross-section.

Whatever the illumination sequence, the reflectivity of a medium at a given point r can be estimated in post-processing by a coherent compound of incident waves delayed to virtually focus on this point, and coherently summing the echoes recorded by the probe coming from that same point [Fig. 1d]. UMI basically consists in decoupling the input (rin) and output (rout) focusing points [Fig. 1e]. By applying appropriate time delays to the transmission (uin/θin) and reception (uout) channels (see Methods), Ruu(t) and Rθu(t) can be projected at each depth z in a focused basis, thereby forming a broadband focused reflection matrix, Rρρ(z) ≡ [R(ρin, ρout, z)].
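The time delays mentioned above can be illustrated with a minimal receive-side sketch. It assumes a homogeneous medium of speed `c0` (the default value of 1540 m/s is an assumption typical of soft tissue, not a value taken from this paper), transducers lying in the plane z = 0, and nearest-sample interpolation. The function names are hypothetical, and the sketch ignores the transmit path and any apodization.

```python
import math

def focusing_delays(elements, focus, c0=1540.0):
    """Travel time (s) from each transducer at (x, y, 0) to the focus (fx, fy, fz).

    c0 is an assumed homogeneous speed of sound in m/s.
    """
    fx, fy, fz = focus
    return [math.sqrt((x - fx) ** 2 + (y - fy) ** 2 + fz ** 2) / c0
            for (x, y) in elements]

def delay_and_sum(traces, delays, dt):
    """Sum one sample per channel, picked at the rounded focusing delay."""
    total = 0.0
    for trace, tau in zip(traces, delays):
        idx = round(tau / dt)  # nearest-sample lookup, no interpolation
        if 0 <= idx < len(trace):
            total += trace[idx]
    return total
```

Echoes emitted from the focus at t = 0 add up coherently after this realignment, whereas echoes from other points are spread over different samples.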

Since the focal plane is bi-dimensional, each matrix Rρρ(z) has a four-dimension structure: R(ρin, ρout, z) = R({xin, yin}, {xout, yout}, z). Rρρ(z) is thus concatenated in 2D as a set of block matrices to be represented graphically [Fig. 1g]. In such a representation, every sub-matrix of R corresponds to the reflection matrix between lines of virtual transducers located at yin and yout, whereas every element in the given sub-matrix corresponds to a specific couple (xin, xout) [Fig. 1f]. Each coefficient R(xin, yin, xout, yout, z) corresponds to the complex amplitude of the echoes coming from the point rout = (xout, yout, z) in the focal plane when focusing at point rin = (xin, yin, z) (or conversely, since Rρρ(z) is a symmetric matrix due to spatial reciprocity).
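The block layout described above amounts to a simple index mapping. The sketch below is one possible convention (blocks indexed by y, position within a block indexed by x); the function names and the dictionary-based input are illustrative assumptions.

```python
def block_index(ix, iy, nx):
    """Row or column index in the 2D layout: block number iy, offset ix."""
    return iy * nx + ix

def to_block_matrix(r4, nx, ny):
    """Map r4[(xin, yin, xout, yout)] to a 2D list of shape (nx*ny, nx*ny).

    Each (yin, yout) pair selects an nx-by-nx sub-matrix; within it,
    (xin, xout) selects the element.
    """
    n = nx * ny
    mat = [[0j] * n for _ in range(n)]
    for (xin, yin, xout, yout), value in r4.items():
        mat[block_index(xin, yin, nx)][block_index(xout, yout, nx)] = value
    return mat
```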

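As already shown with 2D UMI, the diagonal of Rρρ(z) directly provides the transverse cross-section of the confocal ultrasound image:

\[ \mathcal{I}(\boldsymbol{\rho}, z) \equiv \left| R(\boldsymbol{\rho}, \boldsymbol{\rho}, z) \right|^2, \tag{1} \]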

where ρ = ρin = ρout is the transverse coordinate of the confocal point. The corresponding 3D image is displayed in Fig. 2e for the pork tissue experiment. Longitudinal and transverse cross-sections illustrate the effect of the aberrations induced by the pork layer by highlighting the distortion exhibited by the image of the deepest nylon rod.

Probing the focusing quality

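We now show how to quantify aberrations in ultrasound speckle (without any guide star) by investigating the antidiagonals of Rρρ(z). In the single scattering regime, the focused R-matrix coefficients can be expressed as follows1:

\[ R(\boldsymbol{\rho}_{\rm in}, \boldsymbol{\rho}_{\rm out}, z) = \int d\boldsymbol{\rho}\, H_{\rm in}(\boldsymbol{\rho} - \boldsymbol{\rho}_{\rm in}, \mathbf{r}_{\rm in})\, \gamma(\boldsymbol{\rho}, z)\, H_{\rm out}(\boldsymbol{\rho} - \boldsymbol{\rho}_{\rm out}, \mathbf{r}_{\rm out}), \tag{2} \]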

with Hin/out the input/output point spread function (PSF) and γ the medium reflectivity. This last equation shows that each pixel of the ultrasound image (diagonal elements of Rρρ(z)) results from a convolution between the sample reflectivity and an imaging PSF, which is itself a product of the input and output PSFs. The off-diagonal points in Rρρ(z) can be exploited for a quantification of the focusing quality at any pixel of the ultrasound image by extracting each antidiagonal. Such an operation is mathematically equivalent to a change of variable to express the focused R-matrix in a common midpoint basis1 (see Supplementary Section 2):

\[ R_{\mathcal{M}}(\Delta\boldsymbol{\rho}, \mathbf{r}_{\rm m}) = R(\boldsymbol{\rho}_{\rm m} - \Delta\boldsymbol{\rho}/2,\ \boldsymbol{\rho}_{\rm m} + \Delta\boldsymbol{\rho}/2,\ z), \tag{3} \]

where the subscript \(\mathcal{M}\) stands for the common midpoint basis. \(\mathbf{r}_{\rm m} = \{\boldsymbol{\rho}_{\rm m}, z\} = \{(\boldsymbol{\rho}_{\rm in} + \boldsymbol{\rho}_{\rm out})/2, z\}\) is the common midpoint between the input and output focal spots, with the two separated by a distance Δρ = ρout − ρin.

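In the speckle regime (random reflectivity), this quantity probes the local focusing quality as its ensemble average intensity, which we refer to as the reflection point spread function (RPSF), scales as an incoherent convolution between the input and output PSFs1:

\[ RPSF(\Delta\boldsymbol{\rho}, \mathbf{r}_{\rm m}) = \left\langle \left| R_{\mathcal{M}}(\Delta\boldsymbol{\rho}, \mathbf{r}_{\rm m}) \right|^2 \right\rangle \propto \left[ |H_{\rm in}|^2 \circledast |H_{\rm out}|^2 \right](\Delta\boldsymbol{\rho}, \mathbf{r}_{\rm m}), \tag{4} \]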

where 〈 ⋯ 〉 denotes an ensemble average, which, in practice, is performed by a local spatial average (see Methods).
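As a minimal 1D illustration of this antidiagonal extraction and local averaging, the sketch below works on a focused matrix stored as a nested list `R[in][out]` sampled on a regular grid. The function names are hypothetical, and restricting to integer midpoints means that only even separations (in samples) are reached, a simplification not implied by the paper.

```python
def common_midpoint_profile(R, m):
    """Antidiagonal of R through (m, m).

    Returns {separation in samples: R[m - k][m + k]}, where the separation
    (output index minus input index) equals 2 * k.
    """
    n = len(R)
    kmax = min(m, n - 1 - m)
    return {2 * k: R[m - k][m + k] for k in range(-kmax, kmax + 1)}

def rpsf(R, midpoints, kmax):
    """Mean of |R_M|^2 over a set of midpoints (local spatial average)."""
    n = len(R)
    if min(midpoints) - kmax < 0 or max(midpoints) + kmax >= n:
        raise ValueError("window exceeds the matrix bounds")
    out = {}
    for k in range(-kmax, kmax + 1):
        vals = [abs(R[m - k][m + k]) ** 2 for m in midpoints]
        out[2 * k] = sum(vals) / len(vals)
    return out
```

For a perfectly focused system, `R` is diagonal and the resulting profile is non-zero only at zero separation; aberrations spread energy to larger separations.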

Figure 1h displays the mean RPSF associated with the focused R-matrix displayed in Fig. 1g (pork tissue experiment). It clearly shows a distorted RPSF which spreads well beyond the diffraction limit [black dashed line in Fig. 1h]:

\[ \delta\rho_0(z) = \frac{\lambda_c}{2 \sin\left\{ \arctan\left[ \Delta u / (2z) \right] \right\}}, \tag{5} \]

with Δu the lateral extension of the probe and λc the central wavelength. The RPSF also exhibits a strong anisotropy that could not have been grasped by 2D UMI. As we will see in the next section, this kind of aberration can only be compensated through a 3D control of the wave field.

Adaptive focusing by iterative phase reversal

Aberration compensation in the UMI framework is performed using the distortion matrix concept. Introduced for 2D UMI17,28, the distortion matrix can be obtained by: (i) projecting the focused R-matrix either at input or output in a correction basis [here the transducer basis, see Fig. 1i]; (ii) extracting wave distortions exhibited by R when compared to a reference matrix that would have been obtained in an ideal homogeneous medium of wave velocity c0 [Fig. 1j]. The resulting distortion matrix D = [D(u, r)] contains the aberrations induced when focusing on any point r, expressed in the correction basis.
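Step (ii) can be pictured as removing the geometrical (homogeneous-medium) phase from each recorded wave-front. The sketch below assumes this is done by an element-wise product with the conjugate of a reference matrix `G0[u][r]`; that operation and the function name are illustrative assumptions, since the exact definition is given in the Methods rather than in this section.

```python
import cmath

def distortion_matrix(R, G0):
    """Element-wise product of R[u][r] with the conjugate of G0[u][r].

    R  : dual reflection matrix (correction basis u, focusing point r).
    G0 : assumed reference matrix for a homogeneous medium of speed c0.
    """
    return [[R[u][r] * G0[u][r].conjugate() for r in range(len(R[0]))]
            for u in range(len(R))]
```

If the medium acted as a pure phase screen T(u) on top of unit-modulus reference wave-fronts, every column of the output would reduce to T(u), which is the long-range correlation exploited afterwards.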

This matrix exhibits long-range correlations that can be understood in light of isoplanicity. If, in a first approximation, the pork tissue layer can be considered as a phase screen aberrator, then the input and output PSFs can be considered as spatially invariant: Hin/out(ρ − ρin/out, rin/out) = H(ρ − ρin/out). UMI consists in exploiting those correlations to determine the transfer function T(u) of the phase screen. In practice, this is done by considering the correlation matrix C = D × D†. The correlation between distorted wave fields enables the synthesis of a virtual reflector from the set of output focal spots17 [Fig. 1k]. While, in previous works17,19, an iterative time-reversal process (or equivalently a singular value decomposition of D) was performed to converge towards the incident wavefront that focuses perfectly through the medium heterogeneities onto this virtual scatterer, here an iterative phase reversal algorithm is employed to build an estimator \(\hat{T}(\mathbf{u})\) of the transfer function (see Methods). Supplementary Figure 3 demonstrates the superiority of this algorithm compared to SVD for 3D UMI.
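A minimal sketch of an iterative phase reversal loop is given below. The update rule, T̂ ← exp(i arg(C T̂)) with C = D D†, and the flat initial wave-front are assumptions made for illustration (the actual algorithm is specified in the Methods, outside this section). The product C T̂ is computed as D (D† T̂) so that C is never formed explicitly.

```python
import cmath
import random

def iterative_phase_reversal(D, n_iter=10):
    """Estimate a unit-modulus aberration law t_hat[u] from D[u][r].

    Assumed update: t_hat <- exp(i * arg(C t_hat)), with C = D D^dagger,
    starting from a flat (all-ones) wave-front.
    """
    n_u, n_r = len(D), len(D[0])
    t_hat = [1 + 0j] * n_u
    for _ in range(n_iter):
        # proj[r] = sum_u conj(D[u][r]) * t_hat[u], i.e. D^dagger t_hat
        proj = [sum(D[u][r].conjugate() * t_hat[u] for u in range(n_u))
                for r in range(n_r)]
        # back[u] = sum_r D[u][r] * proj[r], i.e. C t_hat
        back = [sum(D[u][r] * proj[r] for r in range(n_r))
                for u in range(n_u)]
        # keep only the phase: every element has the same weight
        t_hat = [cmath.exp(1j * cmath.phase(b)) for b in back]
    return t_hat
```

Unlike a singular value decomposition, the phase-only update discards amplitude, so weakly illuminated elements of the correction basis contribute as much as strongly illuminated ones. The estimate is defined up to a global phase.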

Iterative phase reversal provides an estimation of the aberration transmittance [Fig. 1k] whose phase conjugate is used to compensate for wave distortions (see Methods). The resulting mean RPSF is displayed in Fig. 1m. Although it shows a clear improvement compared with the initial RPSF, high-order aberrations persist. Because of its 3D nature, the pork tissue layer cannot be fully reduced to an aberrating phase screen in the transducer basis.

Spatial reciprocity as a guide star

The 3D distribution of the speed-of-sound breaks the spatial invariance of input and output PSFs. Figure 2b illustrates this fact by showing a map of local RPSFs (see Methods). The RPSF is more strongly distorted below the fat layer of the pork tissue (cf ≈ 1480 ± 10 m/s36) than below the muscle area (cm ≈ 1560 ± 50 m/s). A full-field compensation of aberrations similar to adaptive focusing does not allow a fine compensation of aberrations [left panel of Fig. 2d]. Access to the transmission matrix T = [T(u, r)] linking each transducer and each medium voxel is required rather than just a simple aberration transmittance T(u).

To that aim, a local correlation matrix C(rp) should be considered around each point rp over a sliding box \(\mathcal{W}(\mathbf{r} - \mathbf{r}_{\rm p})\) (see Methods), commonly called a patch, whose spatial extent w is subject to the following dilemma: on the one hand, the spatial window should be as small as possible to grasp the rapid variations of the PSFs across the field of view; on the other hand, it should be large enough to encompass a sufficient number of independent realizations of disorder16,19. The bias of our T-matrix estimator actually scales as (see Supplementary Section 6):

\[ |\delta T|^2 \sim \frac{1}{\mathcal{C}^2 N_{\mathcal{W}}}. \tag{6} \]

\(\mathcal{C}\) is the so-called coherence factor that is a direct indicator of the focusing quality8 but that also depends on the multiple scattering rate and noise background28. \(N_{\mathcal{W}}\) is the number of diffraction-limited resolution cells in each spatial window.

The validity of the T-matrix estimator in a region \(\mathcal{W}_1\) [Fig. 3c] is investigated by examining the corrected RPSF in a neighboring region \(\mathcal{W}_2\) (yellow box). \(\mathcal{W}_1\) and \(\mathcal{W}_2\) are sufficiently close to assume, in a first approximation, that they belong to the same isoplanatic patch. If the box is too small [left panels of Fig. 3d], our estimator has not converged yet and the correction is not valid, as shown by the degraded quality of the RPSF in \(\mathcal{W}_2\) [left panels of Fig. 3h] compared to its initial value [Fig. 3g]. With sufficient spatial averaging [third panel of Fig. 3d], a valid aberration law can be extracted, as shown by a corrected RPSF that is now close to diffraction-limited [third panel of Fig. 3h].

Fig. 3. a Normalized scalar product Pin/out extracted at a point r1 (c) as a function of the size w1 of the considered spatial window \(\mathcal{W}_1\) for 2D (orange) and 3D (green) imaging. b Corresponding bias intensity estimator, \(|\delta T|^2 = 1 - P_{\rm in/out}\), as a function of the number of resolution cells \(N_{\mathcal{W}}\) contained in the window \(\mathcal{W}_1\). The plot is in log-log scale and the theoretical power law (Eq. (6)) is shown with a dashed black line for comparison. c Cross-section of the confocal volume showing the location of \(\mathcal{W}_1\) in green and \(\mathcal{W}_2\) in yellow. The green box \(\mathcal{W}_1\), centered around the point r1 = (5, −5, 41) mm, denotes the region where the \(\hat{\mathbf{T}}\)-matrix is extracted, while the yellow box \(\mathcal{W}_2\), of fixed size w2 = 2 mm and centered around the point r2 = (5, −5, 45) mm, is the area where the effect of aberration correction is investigated by means of the RPSF. d Spatial windows \(\mathcal{W}_1\) considered for the calculation of C(r1). From left to right: Boxes of dimension w = 0 mm, w = 0.75 mm, w = 1.25 mm, rectangle of dimension w = 1.25 mm. e, f Corresponding input \(\hat{\mathbf{T}}_{\rm in}\) and output \(\hat{\mathbf{T}}_{\rm out}\) aberration laws, respectively. The scalar product Pin/out is displayed in each sub-panel of (f). g, h RPSF associated with the yellow box \(\mathcal{W}_2\) (g) before correction and (h) after correction using the corresponding \(\hat{\mathbf{T}}\)-matrices displayed in panels (e) and (f).

The question that now arises is how we can, in practice, know if the convergence of \(\hat{\mathbf{T}}\) is fulfilled without any a priori knowledge of T. An answer can be found by comparing the estimated input and output aberration phase laws, \(\hat{T}_{\rm in}(\mathbf{u}, \mathbf{r}_{\rm p})\) and \(\hat{T}_{\rm out}(\mathbf{u}, \mathbf{r}_{\rm p})\), at a given point rp as shown in Fig. 3e, f. Spatial reciprocity implies that \(\hat{T}_{\rm in}\) and \(\hat{T}_{\rm out}\) should be equal when the convergence of the estimator is reached [third panel of Fig. 3e, f]. Their normalized scalar product, \(P_{\rm in/out} = N_u^{-1} |\hat{\mathbf{T}}_{\rm in} \hat{\mathbf{T}}_{\rm out}^{\dagger}|\), can thus be used to probe the error made on the aberration phase law, \(|\delta T|^2\). Both quantities are actually related as follows (see Supplementary Section 7):

\[ P_{\rm in/out} = 1 - |\delta T|^2. \tag{7} \]

The normalized scalar product Pin/out is displayed as a function of w and shows the convergence of the iterative phase reversal (IPR) process [Fig. 3a]. For a sufficiently large box [third panel of Fig. 3d], \(\hat{\mathbf{T}}\) is supposed to have converged towards T when \(\hat{\mathbf{T}}_{\rm in}\) and \(\hat{\mathbf{T}}_{\rm out}\) are almost equal [third panel of Fig. 3e, f], while, for a small box [left panels of Fig. 3d], a large discrepancy can be found between them. In the following, the parameter Pin/out will thus be used as a guide star for monitoring the convergence of the UMI process.
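The reciprocity criterion is straightforward to evaluate once both aberration laws are available. The sketch below assumes unit-modulus laws stored as lists of complex numbers; the function name is hypothetical.

```python
def reciprocity_score(t_in, t_out):
    """P_in/out = |sum_u T_in(u) * conj(T_out(u))| / N_u.

    Equals 1 when the two laws agree up to a global phase and drops
    towards 0 as they decorrelate.
    """
    n_u = len(t_in)
    return abs(sum(a * b.conjugate() for a, b in zip(t_in, t_out))) / n_u
```

Following the relation between the bias intensity and Pin/out given in the caption of Fig. 3b, `1 - reciprocity_score(t_in, t_out)` gives an estimate of the bias intensity.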

The scaling law of Eq. (6) with respect to \(N_{\mathcal{W}}\) is checked in Fig. 3b. The inverse scaling of the bias with \(N_{\mathcal{W}}\) shows the advantage of 3D UMI over 2D UMI, since \(N_{\mathcal{W}} \sim w^d\), with d the imaging dimension. This superiority is evident in Fig. 3a, which shows a faster convergence with 3D boxes (green curve) than with 2D patches (orange curve). For a given precision, 3D UMI thus provides a better spatial resolution for our T-matrix estimator, as shown by the right panels of Fig. 3f, where much better agreement between \(\hat{\mathbf{T}}_{\rm in}\) and \(\hat{\mathbf{T}}_{\rm out}\) is observed for a 3D box [third panel of Fig. 3d] than for a 2D patch [right panel of Fig. 3d] of the same dimension w.

Multi-scale compensation of wave distortions

The scaling of the bias intensity \(|\delta T|^2\) with the coherence factor \(\mathcal{C}\) has not been discussed yet. This dependence is however crucial since it indicates that a gradual compensation of aberrations should be favored rather than a direct partition of the field of view into small boxes22 [see Supplementary Fig. 4]. An optimal UMI process should proceed as follows: first, compensate for input and output wave distortions at a large scale to increase the coherence factor \(\mathcal{C}\); then, decrease the spatial window \(\mathcal{W}\) and improve the resolution of the T-matrix estimator. The whole process can be iterated, leading to a multi-scale compensation of wave distortions (see Methods). As explained above, the convergence of the process is monitored using spatial reciprocity (Pin/out > 0.9).

The result of 3D UMI is displayed in Fig. 2. It shows the evolution of the T-matrix at each step [Fig. 2c] and the corresponding local RPSFs [Fig. 2d]. In the most aberrated area (i.e. under the fat), the phase fluctuations of the aberration law correspond to a time delay spread of 56 ns (rms). This value is comparable with past measurements through the human abdominal wall37. The pork tissue layer thus induces a level of aberrations typical of standard ultrasound diagnosis. The comparison with the initial and full-field maps of RPSF highlights the benefit of a local compensation via the T-matrix, with a diffraction-limited resolution reached everywhere. The local aberration phase laws exhibited by \(\hat{\mathbf{T}}\) perfectly match the distribution of muscle and fat in the pork tissue layer. The comparison of the final 3D image [Fig. 2f] and its cross-sections with their initial counterparts [Fig. 2e] shows the success of the UMI process, in particular for the deepest nylon rod, which has retrieved its straight shape. The local RPSF on the top right of Fig. 2 shows a contrast improvement of 4.2 dB and a resolution enhancement by a factor of 2 [see Methods and Supplementary Fig. 5].

Overcoming multiple scattering for trans-cranial imaging

The same UMI process is now applied to the ultrasound data collected on the head phantom [Fig. 4a]. The parameters of the multi-scale analysis are provided in the Methods section [see also Supplementary Fig. 6]. The first difference with the pork tissue experiment lies in our choice of correction basis. Given the multi-layer configuration in this experiment, the D-matrix is investigated in the plane wave basis17.

Fig. 4. a Top and oblique views of the experimental configuration. Image credits: Harryarts and kjpargeter on Freepik. b, c Original and UMI images, respectively. d Aberration laws at 3 different depths. From top to bottom: z = 20 mm, z = 32 mm, z = 60 mm. e Reciprocity criterion Pin/out with or without the use of a confocal filter: Each box chart displays the median, lower and upper quartiles, and the minimum and maximum values. f, g Correlation function of the \(\hat{\mathbf{T}}\)-matrix in the (x, z)-plane (f) and (x, y)-plane (g), respectively. We attribute the sidelobes along the y-axis (g) to the inactive rows separating each block of 256 elements of the matrix array.

The second difference is that our spatial reciprocity criterion Pin/out is very low [see the blue box plot in Fig. 4e]. This is the manifestation of a poor convergence of our T-matrix estimator. The incoherent background exhibited by the original PSFs [Fig. 5c] drastically affects the coherence factor \(\mathcal{C}\)28, which, in turn, gives rise to a strong bias on the T-matrix estimator (Eq. (6)). The incoherent background is due to multiple scattering events in the skull and electronic noise, whose relative weight can be estimated by investigating the spatial reciprocity symmetry of the R-matrix (see Methods). Figure 5b shows the depth evolution of the single and multiple scattering contributions, as well as electronic noise. While single scattering dominates at shallow depths (z < 20 mm), multiple scattering quickly reaches 35% and remains relatively constant until electronic noise increases, so that the three contributions are almost equal at depths of 75 mm.

Fig. 5. a Single scattering (green), multiple scattering (blue) and noise (red) rate at z = 32 mm. b Single scattering, multiple scattering, and noise rates as a function of depth. c, d Maps of local RPSFs (in amplitude) before and after correction, respectively, at three different depths (from top to bottom: z = 20 mm, 32 mm and 60 mm). Black boxes in panels (a) and (c) correspond to the same area. e Resolution δρ(−3dB) as a function of depth. Initial resolution (red line) and its value after UMI (green line) are compared with the ideal (diffraction-limited) resolution (Eq. (5)).

Beyond the depth evolution, 3D imaging even allows the study of multiple scattering in the transverse plane, as shown in Fig. 5a. Two areas are examined, marked with black boxes, corresponding to the RPSFs shown in Fig. 5c (z = 32 mm). In the center, the RPSFs exhibit a low background due to the presence of a spherical target, resulting in a single scattering rate of 90%. The second box on the right, however, is characterized by a much higher background, leading to a multiple-to-single scattering ratio slightly larger than one. This high level of multiple scattering highlights the difficult task of trans-cranial imaging with ultrasonic waves.

In order to overcome these detrimental effects, an adaptive confocal filter can be applied to the focused R-matrix19.

This filter has a Gaussian shape, with a width lc(z) that scales as 3δρ0(z)19. The application of a confocal filter drastically improves the correlation between input and output aberration phase laws [see Fig. 4e and Supplementary Fig. 7], proof that a satisfying convergence towards the T-matrix is obtained.
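The effect of such a filter on the focused R-matrix can be sketched as a Gaussian weighting of each coefficient by the distance between input and output focusing points. The form exp(−|Δρ|² / (2 lc²)) used below, including the factor 2 in the exponent, is an assumption made for illustration, as are the function name and the list-based data layout.

```python
import math

def confocal_filter(R, positions, lc):
    """Weight R[i][j] by exp(-|rho_j - rho_i|^2 / (2 * lc**2)).

    positions : transverse coordinates (x, y) of the focusing points.
    lc        : filter width at the considered depth (same unit as positions).
    The exact normalization of the Gaussian is an assumption.
    """
    n = len(R)
    out = []
    for i in range(n):
        xi, yi = positions[i]
        row = []
        for j in range(n):
            xj, yj = positions[j]
            d2 = (xj - xi) ** 2 + (yj - yi) ** 2
            row.append(R[i][j] * math.exp(-d2 / (2 * lc ** 2)))
        out.append(row)
    return out
```

Confocal coefficients (the diagonal) are left untouched, while coefficients linking distant focusing points, which mostly carry the multiple scattering and noise background, are attenuated.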

Figure 4d shows the T-matrix obtained at different depths in the brain phantom. Its spatial correlation function displayed in Fig. 4f, g provides an estimation of the isoplanatic patch size: 5 mm in the transverse direction [Fig. 4g] and 2 mm in depth [Fig. 4f]. This rapid variation of the aberration phase law across the field of view confirms a posteriori the necessity of a local compensation of aberrations induced by the skull. It also confirms the importance of 3D UMI with a fully sampled 2D array, as previous work recommended that the array pitch should be no more than 50% of the aberrator correlation length to properly sample the corresponding adapted focusing law38.

The phase conjugate of the T-matrix at input and output enables a fine compensation of aberrations. A set of corrected RPSFs are shown in Fig. 5d. The comparison with their initial values demonstrates the success of 3D UMI: a diffraction-limited resolution is obtained almost everywhere [Fig. 5e], whether it be in ultrasound speckle or in the neighborhood of bright targets, at shallow or high depths, which proves the versatility of UMI.

The performance of 3D UMI is also striking when comparing the three-dimensional image of the head phantom before and after UMI [Fig. 4b, c, respectively]. The different targets were initially strongly distorted by the skull, and are now nicely resolved with UMI. In particular, the first target, located at z = 19 mm and originally duplicated, has recovered its true shape. In addition, two targets laterally spaced by 10 mm are observed at 42 mm depth, as expected [Fig. 4a]. The image of the target observed at 54 mm depth is also drastically improved in terms of contrast and resolution but is not found at the expected transverse position. One potential explanation is the size of this target (2 mm diameter) larger than the resolution cell. The guide star is thus far from being point-like, which can induce an uncertainty on the absolute transverse position of the target in the corrected image.

Finally, an isolated target can be leveraged to highlight the gain in contrast provided by 3D UMI with respect to its 2D counterpart. To that aim, a linear 1D array is emulated from the same raw data by collimating the incident beam in the y-direction [Fig. 6]. The ultrasound image is displayed before and after UMI in Fig. 6b, c, respectively. The radial average of the corresponding focal spots is displayed in Fig. 6d. Even though 2D UMI enables a diffraction-limited resolution, the contrast gain G is quite moderate (G2D ~ 8 dB) as it scales with the number N of coherence grains exhibited by the 1D aberration phase law [Fig. 6a]: N2D ~ 6.2. In contrast, as expected, 3D UMI provides a strong enhancement of the target echo [see the comparison between Fig. 6e–g]: G3D ~ 18 dB. The 2D aberration phase law actually provides a much larger number of spatial degrees of freedom than its 1D counterpart: N3D ~ 63. The gain in contrast is accompanied by a drastic improvement of the transverse resolution [>8 × for z > 40 mm in Fig. 5e]. Figure 6 demonstrates the necessity of a 2D ultrasonic probe for trans-cranial imaging. Indeed, the complexity of wave propagation in the skull can only be harnessed with a 3D control of the incident and reflected wave fields.
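The quoted gains are consistent with a simple estimate in which the intensity gain is taken equal to the number N of coherence grains. That unit proportionality constant is an assumption made here for illustration; the text only states that G scales with N.

```python
import math

def gain_db(n_grains):
    """Contrast gain in dB, assuming an intensity gain equal to N."""
    return 10 * math.log10(n_grains)

g2d = gain_db(6.2)   # about 7.9 dB, close to the reported G2D ~ 8 dB
g3d = gain_db(63)    # about 18.0 dB, close to the reported G3D ~ 18 dB
```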

Fig. 6. a Aberration law extracted with 2D UMI for a target located at z = 38 mm. b, c Original and corrected images of the same target with 2D UMI, respectively. d Aberration law extracted with 3D UMI. e, f Original and corrected images of the same target with 3D UMI, respectively. g Imaging PSF before (red) and after (green) 2D (dotted line) and 3D (solid line) UMI. The depth range considered in each panel corresponds to the echo of the target located at z = 38 mm.

Discussion

In this experimental proof-of-concept, we demonstrated the capacity of 3D UMI to correct strong aberrations such as those encountered in trans-cranial imaging. This work is not only a 3D extension of previous studies17,28 since several crucial elements have been introduced to make UMI more robust.

First, the proposed iterative phase reversal algorithm outperforms the SVD for local compensation of aberrations because it can evaluate the aberration law on a larger angular support [see Supplementary Fig. 3], resulting in a sharper compensation of aberrations. Second, the bias of our T-matrix estimator has been expressed analytically (Eq. (6)) as a function of the coherence factor that grasps the detrimental effects of the virtual guide star blurring induced by aberrations, multiple scattering and noise. This led us to define a general strategy for UMI with: (i) a multi-scale compensation of wave distortions to gradually reduce the blurring of the virtual guide star and tackle high-order aberrations associated with small isoplanatic lengths; (ii) the application of an adaptive confocal filter to cope with multiple scattering and noise; (iii) a fine monitoring of the convergence of our estimator by means of spatial reciprocity. The latter is a real asset, as it provides an objective criterion to check the physical significance of the extracted aberration laws and optimize the resolution of our T-matrix estimator.

Although the results presented in this paper are striking, they were obtained in vitro, and some challenges remain for in vivo brain imaging. Until now, UMI has only been applied to a static medium, while biological tissues are usually moving, especially in the case of vascular imaging, where blood flow makes the reflectivity vary quickly over time. A lot of 3D imaging modes are indeed designed to image blood flow, such as transcranial Doppler imaging39 or ULM40,41. These methods are strongly sensitive to aberrations42,43 and their coupling with matrix imaging would be rewarding to increase the signal-to-noise ratio and improve the image resolution, not only in the vicinity of bright reflectors44 but also in ultrasound speckle.

However, due to spatial aliasing, the number of illuminations required for UMI scales with the number of resolution cells covered by the RPSF [see Supplementary Fig. 8]. Because the aberration level through the skull is high, the illumination basis should thus be fully sampled. This limits 3D transcranial UMI to a compounded frame rate of only a few hertz, which is much too slow for ultrafast imaging45. Moreover, a reduced number of illuminations breaks the symmetry of the reflection matrix. It would therefore also affect the accuracy of our monitoring parameter based on spatial reciprocity.

Soft tissues usually exhibit much slower movement, and provide signals several dB higher than blood. Ultrasound imaging of tissues is generally discarded for the brain because of the strong level of aberrations and reverberations. Interestingly, UMI can open a new route towards quantitative brain imaging since a matrix framework can also enable the mapping of physical parameters such as the speed-of-sound1,46,47,48, attenuation and scattering coefficients49,50, or fiber anisotropy51,52. Those various observables can be extremely enlightening for the characterization of cerebral tissues.

Alternatively, a solution to directly implement 3D UMI in vivo for ultrafast imaging would be to design an imaging sequence in which the fully sampled R-matrix is acquired prior to the ultrafast acquisition itself, for which the illumination basis can be drastically downsampled. The \(\hat{\mathbf{T}}\)-matrix obtained from R could then be used to correct the ultrafast images in post-processing.

Interestingly, if an ultrafast 3D UMI acquisition is possible (in cases with weaker aberrations, or at shallow depths), the quickly decorrelating speckle observed in blood flow can be an opportunity since it provides a large number of speckle realizations in a given voxel. A high-resolution T-matrix could thus be, in principle, extracted without spatial averaging and without relying on any isoplanatic assumption53,54.

So far, one limit of UMI concerns the strong aberration regime in which extreme time delay fluctuations can occur. Indeed, our approach relies on a broadband focused reflection matrix that consists of a coherent time gating of singly scattered echoes. If time delay fluctuations are larger than the time resolution δt of our measurement, the angular components of each echo will not necessarily emerge in the same time gate and aberration compensation will be imperfect.

Beyond strong aberrations, another issue for transcranial imaging arises from multiple reflections caused by the skull. While such reverberations are not observed in the pork tissue experiment, their detrimental effects are much greater in a transcranial experiment because of the large impedance mismatch between the skull and brain tissues. In this work, such artefacts are not corrected and they drastically pollute the image at shallow depths (z < 20 mm).

To cope with those issues, a polychromatic approach to matrix imaging is required. Indeed, the aberration compensation scheme proposed in this paper is equivalent to a simple application of time delays on each transmit and receive channel. On the contrary, a full compensation of reverberation requires the tailoring of a complex spatio-temporal adaptive (or even inverse) filter. To that aim, 3D UMI provides an adequate framework to exploit, at best, all the spatio-temporal degrees of freedom provided by a high-dimension array of broadband transducers.

To conclude, 3D UMI is general and can be applied to any insonification sequence (plane wave or virtual source illumination) or array configuration (random or periodic, sparse or dense). Matrix imaging can also be extended to any field of wave physics for which a multi-element technology is available: optical imaging20,21,22, seismic imaging25,26 and also radar55. All the conclusions drawn in this paper can be extended to each of these fields. The matrix formalism is thus a powerful tool for the big data revolution coming in wave imaging.

Methods

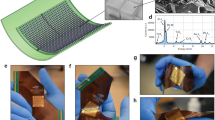

Description of the pork tissue experiment

The first sample under investigation is a tissue-mimicking phantom (speed of sound: c0 = 1540 m/s) composed of a random distribution of unresolved scatterers which generate ultrasonic speckle characteristic of human tissue [Fig. 2a]. The system also contains nylon filaments placed at regular intervals, with a point-like cross-section, and, at a depth of 40 mm, a 10 mm-diameter hyperechoic cylinder, containing a higher density of unresolved scatterers. A 12-mm thick pork tissue layer is placed on top of the phantom. It is immersed in water to ensure its acoustical contact with the probe and the phantom. Since the pork layer contains a part of muscle tissue (cm ~ 1560 m/s) and a part of fat tissue (cf ~ 1480 m/s), it acts as an aberrating layer. This experiment mimics the situation of abdominal in vivo imaging, in which layers of fat and muscle tissues generate strong aberration and scattering at shallow depths.

The acquisition of the reflection matrix is performed using a 2D matrix array of transducers (Vermon) whose characteristics are provided in Table 1. The electronic hardware used to drive the probe was developed by Supersonic Imagine (member of Hologic group) in the context of a collaboration agreement with the Langevin Institute.

The reflection matrix is acquired by recording the impulse response between each transducer of the probe using IQ modulation with a sampling frequency fs = 6 MHz. To that aim, each transducer uin emits successively a sinusoidal burst of three half periods at the central frequency fc. For each excitation uin, the back-scattered wave field is recorded by all probe elements uout over a time length Δt = 139 μs. This set of impulse responses is stored in the canonical reflection matrix Ruu(t) = [R(uin, uout, t)].

Description of the head phantom experiment

In this second experiment, the same probe [Table 1] is placed slightly above the temporal window of a head-mimicking phantom, whose characteristics are described in Table 2. To investigate the performance of UMI in terms of resolution and contrast, the manufacturer (True Phantom Solutions) was asked to place small spherical targets made of bone-mimicking material inside the brain. They are arranged crosswise, evenly spaced in the 3 directions with a distance of 1 cm between two consecutive targets, and their diameter increases with depth: 0.2, 0.5, 1, 2, 3 mm [Fig. 4a]. The skull thickness is ~6 mm on average at the position where the probe is placed. The first spherical target is located at z ≈ 20 mm depth, while the center of the cross is at z ≈ 40 mm depth. The transverse size of the head is ~14 cm.

To improve the signal-to-noise ratio, the R-matrix is here acquired using a set of plane waves56. For each plane wave of angles of incidence θin = (θx, θy), the time-dependent reflected wave field R(θin, uout, t) is recorded by each transducer uout. This set of wave fields forms a reflection matrix acquired in the plane wave basis, \(\mathbf{R}_{\boldsymbol{\theta}\mathbf{u}}(t)=\left[R(\boldsymbol{\theta}_{\rm in},\mathbf{u}_{\rm out},t)\right]\). Since the transducer and plane wave bases are related by a simple Fourier transform at the central frequency, the array pitch δu and probe size Δu dictate the angular pitch δθ and maximum angle θmax necessary to acquire a full reflection matrix in the plane wave basis such that: \(\theta_{\max}=\arcsin[\lambda_c/(2\delta u)] \approx 28^{\circ}\); \(\delta\theta=\arcsin\left[\lambda_c/(2\Delta u_y)\right] \approx 0.8^{\circ}\), with λc = c0/fc the central wavelength and c0 = 1400 m/s the speed-of-sound in the brain phantom. A set of 1225 plane waves is thus generated by applying appropriate time delays Δτ(θin, uin) to each transducer uin = (ux, uy) of the probe:
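As an illustration, the angular sampling and the transmit delays can be sketched as follows. The probe parameters (central frequency, pitch, aperture, 32 × 32 element grid) are placeholder values standing in for Table 1, and the steering law Δτ = (ux sin θx + uy sin θy)/c0 is the usual plane-wave convention, assumed here rather than taken from the text.

```python
import numpy as np

C0 = 1400.0            # speed of sound in the brain phantom (m/s), from the text
FC = 3.0e6             # central frequency (Hz): ASSUMED placeholder for Table 1
PITCH = 0.5e-3         # array pitch delta_u (m): ASSUMED placeholder
APERTURE_Y = 17.5e-3   # probe size Delta_u_y (m): ASSUMED placeholder

lambda_c = C0 / FC
theta_max = np.arcsin(lambda_c / (2 * PITCH))         # maximum steering angle
delta_theta = np.arcsin(lambda_c / (2 * APERTURE_Y))  # angular pitch

def plane_wave_delays(theta_x, theta_y, ux, uy, c0=C0):
    """Transmit delays (s) for one plane wave, ASSUMING the steering law
    dtau = (ux * sin(theta_x) + uy * sin(theta_y)) / c0."""
    return (ux * np.sin(theta_x) + uy * np.sin(theta_y)) / c0

# Hypothetical 32 x 32 grid of element positions centred on the probe axis
coords = (np.arange(32) - 15.5) * PITCH
ux, uy = np.meshgrid(coords, coords, indexing="ij")
delays = plane_wave_delays(np.deg2rad(10.0), 0.0, ux, uy)

print(np.rad2deg(theta_max), np.rad2deg(delta_theta))
```

With these placeholder values the two angles fall close to the 28° and 0.8° quoted above; the actual figures depend on the probe characteristics of Table 1.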

Focused beamforming of the reflection matrix

The focused R-matrix, Rρρ(z) = [R(ρin, ρout, z)], is built in the time domain via a conventional delay-and-sum beamforming scheme that consists in applying appropriate time-delays in order to focus at different points at input rin = (ρin, z) = ({xin, yin}, z) and output rout = (ρout, z) = ({xout, yout}, z):

where i = u or θ accounts for the illumination basis. A is an apodization factor that limits the extent of the synthetic aperture at emission and reception. This synthetic aperture is dictated by the transducers’ directivity \(\theta_{\max} \sim 28^{\circ}\)57.

In the transducer basis, the time of flight τ(u, r) reads:

In the plane wave basis, τ(θ, r) is given by
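A minimal sketch of the two travel-time laws is given below, assuming a homogeneous medium of speed c0 with straight-ray propagation and a probe lying in the plane z = 0. The explicit expressions (Euclidean distance over c0 for a transducer; projection of r onto the direction cosines (sin θx, sin θy, √(1 − sin²θx − sin²θy)) for a plane wave) are standard forms adopted here as assumptions, and the function names are illustrative.

```python
import numpy as np

def tof_transducer(u, r, c0=1540.0):
    """Travel time (s) from transducer u = (ux, uy) at z = 0 to r = (x, y, z)."""
    ux, uy = u
    x, y, z = r
    return np.sqrt((x - ux) ** 2 + (y - uy) ** 2 + z ** 2) / c0

def tof_plane_wave(theta, r, c0=1540.0):
    """Arrival time (s) at r of a plane wave with angles theta = (tx, ty),
    taking the probe centre as time origin."""
    sx, sy = np.sin(theta[0]), np.sin(theta[1])
    x, y, z = r
    return (x * sx + y * sy + z * np.sqrt(1.0 - sx ** 2 - sy ** 2)) / c0

# Example: a point 30 mm deep on the probe axis
print(tof_transducer((0.0, 0.0), (0.0, 0.0, 0.03)))
print(tof_plane_wave((0.0, 0.0), (0.0, 0.0, 0.03)))
```

Both functions accept NumPy arrays for the coordinates, so a full delay table can be obtained by broadcasting over transducers (or angles) and focal points.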

Local average of the reflection point spread function

To probe the local RPSF, the field of view is divided into spatial regions \(\mathcal{W}(\mathbf{r}_{\rm m}-\mathbf{r}_{\rm p})\), defined by their center rp and their extent w = (wρ, wz), where wρ and wz denote the lateral and axial extent, respectively. A local average of the back-scattered intensity can then be performed in each region:

where the symbol 〈 ⋯ 〉 denotes here a spatial average over the variable in the subscript. \(\mathcal{W}(\mathbf{r}_{\rm m}-\mathbf{r}_{\rm p})=1\) for ∣ρm − ρp∣ < wρ/2 and ∣zm − zp∣ < wz/2, and zero otherwise. The dimensions of \(\mathcal{W}\) used for Fig. 2b, d are: w = (wρ, wz) = (3.2, 3) mm. The dimensions of \(\mathcal{W}\) used to obtain Fig. 5c, d are: w = (wρ, wz) = (4, 5.5) mm.
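The window function defined above translates directly into a boolean mask. In the sketch below, ∣ρm − ρp∣ is interpreted as the Euclidean norm of the transverse offset, which is an assumption (a per-axis test would give a square patch instead of a disc); the function names are illustrative.

```python
import numpy as np

def window(rho_m, z_m, rho_p, z_p, w_rho, w_z):
    """Boxcar W(r_m - r_p): 1 inside the patch centred on r_p, 0 outside.
    |rho_m - rho_p| is taken as the Euclidean norm (an ASSUMPTION)."""
    d_rho = np.linalg.norm(np.asarray(rho_m) - np.asarray(rho_p), axis=-1)
    inside = (d_rho < w_rho / 2) & (np.abs(np.asarray(z_m) - z_p) < w_z / 2)
    return inside.astype(float)

def local_average(values, weights):
    """Weighted spatial mean of `values` over the points selected by `weights`."""
    return np.sum(values * weights) / np.sum(weights)

# Three midpoints (mm) tested against a patch centred on (0, 0, 40) mm
rho_m = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 0.0]])
z_m = np.array([40.0, 41.0, 40.0])
w = window(rho_m, z_m, (0.0, 0.0), 40.0, w_rho=3.2, w_z=3.0)
```

Here the first two midpoints fall inside the (3.2, 3) mm patch and the third one is rejected.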

Distortion matrix in 3D UMI

The first step consists in projecting the focused R-matrix Rρρ(z) [Fig. 1e] onto a dual basis c at output [Fig. 1i]:

where the symbol × stands for the matrix product. Gρc(z) is the propagation matrix predicted by the homogeneous propagation model between the focused basis (ρ) and the correction basis (c) at each depth z. c can be either the plane wave, the transducer, or any other correction basis suitable for a particular experiment23,58,59.

In the transducer basis (c = u), the coefficients of Gρu(z) correspond to the z-derivative of the Green’s function19:

where kc is the wavenumber at the central frequency. In the Fourier basis (c = k), Gρk simply corresponds to the Fourier transform operator17:

At each depth z, the reflected wave-fronts contained in Rρc are then decomposed into the sum of a geometric component Gρc, that would be ideally obtained in absence of aberrations, and a distorted component that corresponds to the gap between the measured wave-fronts and their ideal counterparts [Fig. 1j]17,19:

where the symbol ○ stands for a Hadamard product. Drc = Dρc(z) = [D({ρin, z}, cout)] is the so-called distortion matrix, here expressed at the output. Note that the same operations can be performed by exchanging input and output to obtain the input distortion matrix Dcr = [D(cin, rout)] = [D(cin, {ρout, z})].
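The two operations described above (matrix product with the propagator, then Hadamard product removing the geometric component) can be sketched in the Fourier basis as follows. The sign convention exp(i k·ρ) for Gρk and the use of the complex conjugate of G in the Hadamard product are assumptions consistent with the description, not a transcription of the equations; array layouts and function names are illustrative.

```python
import numpy as np

def fourier_propagator(rho, k):
    """G_rho_k sampled at focal points rho (N_rho, 2) and transverse
    wave vectors k (N_k, 2); sign convention exp(+i k.rho) is ASSUMED."""
    return np.exp(1j * rho @ k.T)

def distortion_matrix(R_focused, G):
    """Project the focused R-matrix onto the dual basis, then remove the
    geometric (ideal) wave-front by a Hadamard product with conj(G)."""
    R_dual = R_focused @ G        # R_rho_c = R_rho_rho x G_rho_c
    return R_dual * np.conj(G)    # D = R_rho_c o conj(G_rho_c)

# Sanity check: perfect focusing (R = identity) gives undistorted wave-fronts
rng = np.random.default_rng(0)
rho = rng.uniform(-5e-3, 5e-3, size=(6, 2))
k = rng.uniform(-2e3, 2e3, size=(4, 2))
G = fourier_propagator(rho, k)
D = distortion_matrix(np.eye(6), G)
```

For an aberration-free focused matrix, every coefficient of D has unit modulus and zero phase, which is the expected flat distortion law.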

Local correlation analysis of the D-matrix

The next step is to exploit local correlations in Drc to extract the T-matrix. To that aim, a set of output correlation matrices Cout(rp) shall be considered between distorted wave-fronts in the vicinity of each point rp in the field of view:

An equivalent operation can be performed in input in order to extract a local correlation matrix Cin(rp) from the input distortion matrix Dcr.
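A possible implementation of the local correlation matrix is sketched below. It assumes that Cout is the windowed sum of products D(rin, cout) D*(rin, c′out) over the focal points rin; the normalization and the exact windowing used by the authors are not specified here, so both are assumptions.

```python
import numpy as np

def output_correlation(D, weights=None):
    """C_out[c, c'] = sum_r w(r) * D[r, c] * conj(D[r, c']).
    D: (N_r, N_c) distortion matrix; weights: (N_r,) spatial window."""
    if weights is None:
        weights = np.ones(D.shape[0])
    return (D * weights[:, None]).T @ np.conj(D)

# Isoplanatic toy model: every focal point sees the same aberration law t
rng = np.random.default_rng(1)
t = np.exp(1j * rng.uniform(-np.pi, np.pi, size=8))
s = rng.normal(size=50) + 1j * rng.normal(size=50)   # random reflectivity
C = output_correlation(np.outer(s, t))
```

In this toy model C is proportional to the rank-one matrix t t†, so the aberration law can be read from its phase.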

Iterative phase reversal algorithm

The iterative phase reversal algorithm is a computational process that provides an estimator of the transmission matrix,

where the superscript ⊤ stands for matrix transpose. Tout = [T(cout, rp)] links each point cout in the dual basis and each voxel rp of the medium to be imaged [Fig. 1k]. Mathematically, the algorithm is based on the following recursive relation:

where \(\hat{\mathbf{T}}_{\rm out}^{(n)}\) is the estimator of Tout at the nth iteration of the phase reversal process. \(\hat{\mathbf{T}}_{\rm out}^{(0)}\) is an arbitrary wave-front that initiates the iterative phase reversal process (typically a flat phase law) and \(\hat{\mathbf{T}}_{\rm out}=\lim_{n\to\infty}\hat{\mathbf{T}}_{\rm out}^{(n)}\) is the result of this iterative phase reversal process.

This iterative phase reversal algorithm, repeated for each point rp, yields an estimator \(\hat{\mathbf{T}}_{\rm out}\) of the T-matrix. Its digital phase conjugation enables a local compensation of aberrations [Fig. 1l]. The focused R-matrix can be updated as follows:

where the symbol † stands for transpose conjugate. The same process is then applied to the input correlation matrix Cin for the estimation of the input transmission matrix, \(\mathbf{T}_{\rm in}(z)=\mathbf{G}_{\boldsymbol{\rho}\mathbf{c}}^{\top}(z)\times\mathbf{H}_{\rm in}(z)\).
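The iteration can be sketched as a phase-only fixed-point scheme. The update rule used below, T ← exp(i arg{C × T}), is an assumed form of the recursive relation (it keeps only the phase of the back-propagated wave-front at each step); the function name and the default number of iterations are illustrative.

```python
import numpy as np

def iterative_phase_reversal(C, n_iter=20, t0=None):
    """Phase-only fixed-point iteration T <- exp(i * arg(C @ T)).
    C: (N_c, N_c) correlation matrix; t0: initial wave-front (flat by default)."""
    n = C.shape[0]
    t = np.ones(n, dtype=complex) if t0 is None else np.asarray(t0, dtype=complex)
    for _ in range(n_iter):
        t = np.exp(1j * np.angle(C @ t))
    return t

# Toy check on a rank-one correlation matrix built from a known phase law
rng = np.random.default_rng(2)
t_true = np.exp(1j * rng.uniform(-1.0, 1.0, size=16))
C = np.outer(t_true, np.conj(t_true))
t_hat = iterative_phase_reversal(C)
overlap = np.abs(np.vdot(t_true, t_hat)) / t_true.size
```

On this noiseless example the estimate matches the true law up to a global phase, so the overlap is equal to one.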

Multi-scale analysis of wave distortions

To ensure the convergence of the IPR algorithm, several iterations of the aberration correction process are performed while reducing the size of the patches \(\mathcal{W}\) with an overlap of 50% between them. Three correction steps are performed in the pork tissue experiment, whereas six are performed in the head phantom experiment [as described in Table 3]. At each step, the correction is performed both at input and output and reciprocity between input and output aberration laws is checked. The correction process is stopped if the normalized scalar product Pin/out does not reach 0.9.
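The reciprocity test can be sketched as follows, assuming that Pin/out is the modulus of the scalar product between the input and output aberration laws divided by the product of their norms. This definition and the function names are assumptions; only the 0.9 threshold comes from the text.

```python
import numpy as np

def reciprocity_score(t_in, t_out):
    """Normalized scalar product between input and output aberration laws."""
    num = np.abs(np.vdot(t_in, t_out))
    return num / (np.linalg.norm(t_in) * np.linalg.norm(t_out))

def keep_refining(t_in, t_out, threshold=0.9):
    """True while the two laws are reciprocal enough to move to smaller patches."""
    return reciprocity_score(t_in, t_out) >= threshold

rng = np.random.default_rng(3)
law = np.exp(1j * rng.uniform(-np.pi, np.pi, size=1000))
unrelated = np.exp(1j * rng.uniform(-np.pi, np.pi, size=1000))
```

Two laws differing only by a global phase give a score of one, whereas two unrelated random phase laws give a score far below the threshold.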

Synthesize a 1D linear array

To estimate the benefits of 3D imaging compared to 2D UMI, a simulation of a 1D array is performed on experimental ultrasound data acquired with our 2D matrix array. To that aim, cylindrical time delays are applied at input and output:

with s = x or y, depending on our focus plane choice.

The focused R-matrix is still built in the time domain but using this time the following delay-and-sum beamforming:

The images displayed in Fig. 6b, c are obtained by synthesizing input and output beams collimated in the (y, z)-plane by focusing on a line located at (xf = 0 mm, zf = 37.25 mm), thereby mimicking the beamforming process by a conventional linear array of transducers.

Estimation of contrast and resolution

Contrast and resolution are evaluated by means of the RPSF. Equivalent to the full width at half maximum commonly used in 2D UMI, the transverse resolution δρ is assessed in 3D based on the area \(\mathcal{A}_{(-3\,{\rm dB})}\) at half maximum of the RPSF amplitude:

The contrast, \(\mathcal{F}\), is computed locally by decomposing the normalized RPSF as the sum of three components28:

αS is the single scattering rate that corresponds to the confocal peak. αM is a multiple scattering rate that gives rise to a diffuse halo; αN corresponds to the electronic noise rate which results in a flat plateau. A local contrast can then be deduced from the ratio between αS and the incoherent background αB = αM + αN,
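Written out from the sentence above, and taking the ratio in linear units (an assumption, since the text only specifies a ratio), the local contrast would read:

```latex
\mathcal{F} = \frac{\alpha_S}{\alpha_B} = \frac{\alpha_S}{\alpha_M + \alpha_N}
```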

Single and multiple scattering rates

The single scattering, multiple scattering and noise rates can be directly computed from the decomposition of the RPSF (Eq. (26)). However, at large depths, multiple scattering and noise are difficult to discriminate since they both give rise to a flat plateau in the RPSF. In that case, the spatial reciprocity symmetry can be invoked to differentiate their contribution. The multiple scattering component actually gives rise to a symmetric R-matrix while electronic noise is associated with a fully random matrix. The relative part of the two components can thus be estimated by computing the degree of anti-symmetry β in the R-matrix. To that aim, the R-matrix is first projected onto its anti-symmetric subspace at each depth:

where the superscript ⊤ stands for matrix transpose. In a common midpoint representation, (Eq. (28)) re-writes:

A local degree of anti-symmetry β is then computed as follows:

where \(\mathcal{D}(\Delta\boldsymbol{\rho})\) is a de-scanned window function that eliminates the confocal peak such that the computation of β is only made by considering the incoherent background. Typically, we chose \(\mathcal{D}(\Delta\boldsymbol{\rho})=1\) for Δρ > 6δρ0(z), and zero otherwise. Assuming equi-partition of the electronic noise between its symmetric and anti-symmetric subspace, the multiple scattering rate αM and noise ratio αN can then be deduced (see Supplementary Section 11):
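The symmetry argument can be illustrated numerically. The sketch below assumes that β is the fraction of the background energy carried by the anti-symmetric part (R − R⊤)/2 of the focused R-matrix at a given depth; this energy-ratio definition, the optional mask standing in for the window function, and the function name are assumptions.

```python
import numpy as np

def antisymmetry_degree(R, mask=None):
    """Energy fraction of R carried by its anti-symmetric part (R - R^T)/2.
    mask: optional 0/1 array (same shape as R) playing the role of the
    de-scanned window that discards the confocal peak."""
    R_anti = 0.5 * (R - R.T)
    if mask is None:
        mask = np.ones(R.shape)
    return np.sum(mask * np.abs(R_anti) ** 2) / np.sum(mask * np.abs(R) ** 2)

rng = np.random.default_rng(4)
A = rng.normal(size=(200, 200)) + 1j * rng.normal(size=(200, 200))
beta_reciprocal = antisymmetry_degree(A + A.T)   # symmetric, like multiple scattering
beta_noise = antisymmetry_degree(A)              # fully random, like electronic noise
```

A symmetric matrix yields β = 0, while a fully random matrix yields β close to 1/2, in line with the equi-partition assumption invoked above.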

In the head phantom experiment [Fig. 5b], these rates are estimated at each depth by averaging over a window of size w = (wρ, wz) = (20, 5.5) mm.

Computational insights

While the UMI process is close to real-time for 2D imaging (i.e. for linear, curved or phased array probes), 3D UMI (using a fully populated matrix array of transducers) is still far from it (see Table 4) as it involves the processing of much more ultrasound data. While computing a confocal 3D image only requires a few minutes, building the focused R-matrix from the raw data takes a few hours (on GPU with CUDA) and one step of aberration correction only lasts a few minutes. All the post-processing was performed with Matlab (R2021a) on a workstation with 2 processors @2.20 GHz, 128 GB of RAM, and a GPU with 48 GB of dedicated memory.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The ultrasound data generated in this study is available at Zenodo60 (https://zenodo.org/record/8159177).

Code availability

Codes used to post-process the ultrasound data within this paper are available from the corresponding author upon request.

References

Lambert, W., Cobus, L. A., Couade, M., Fink, M. & Aubry, A. Reflection matrix approach for quantitative imaging of scattering media. Phys. Rev. X 10, 021048 (2020).

Ntziachristos, V. Going deeper than microscopy: the optical imaging frontier in biology. Nat. Methods 7, 603 (2010).

Yilmaz, O. Seismic Data Analysis (Society of Exploration Geophysicists, 2001).

Babcock, H. W. The possibility of compensating astronomical seeing. Publ. Astron. Soc. Pac. 65, 229 (1953).

Roddier, F. ed. Adaptive Optics in Astronomy (Cambridge University Press, 1999).

O’Donnell, M. & Flax, S. Phase-aberration correction using signals from point reflectors and diffuse scatterers: Measurements. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 35, 768 (1988).

Nock, L., Trahey, G. E. & Smith, S. W. Phase aberration correction in medical ultrasound using speckle brightness as a quality factor. J. Acoust. Soc. Am. 85, 1819 (1989).

Mallart, R. & Fink, M. Adaptive focusing in scattering media through sound-speed inhomogeneities: the van Cittert Zernike approach and focusing criterion. J. Acoust. Soc. Am. 96, 3721 (1994).

Ali, R. et al. Aberration correction in diagnostic ultrasound: A review of the prior field and current directions. Z. Med. Phys. 33, 267 (2023).

Måsøy, S.-E., Varslot, T. & Angelsen, B. Iteration of transmit-beam aberration correction in medical ultrasound imaging. J. Acoust. Soc. Am. 117, 450 (2005).

Montaldo, G., Tanter, M. & Fink, M. Time reversal of speckle noise. Phys. Rev. Lett. 106, 054301 (2011).

Pernot, M., Tanter, M. & Fink, M. 3-D real-time motion correction in high-intensity focused ultrasound therapy. Ultrasound Med. Biol. 30, 1239 (2004).

Jaeger, M., Robinson, E., Akarçay, H. G. & Frenz, M. Full correction for spatially distributed speed-of-sound in echo ultrasound based on measuring aberration delays via transmit beam steering. Phys. Med. Biol. 60, 4497 (2015).

Chau, G., Jakovljevic, M., Lavarello, R. & Dahl, J. A locally adaptive phase aberration correction (LAPAC) method for synthetic aperture sequences. Ultrason. Imaging 41, 3 (2019).

Varslot, T., Krogstad, H., Mo, E. & Angelsen, B. A. Eigenfunction analysis of stochastic backscatter for characterization of acoustic aberration in medical ultrasound imaging. J. Acoust. Soc. Am. 115, 3068 (2004).

Robert, J.-L. & Fink, M. Green’s function estimation in speckle using the decomposition of the time reversal operator: Application to aberration correction in medical imaging. J. Acoust. Soc. Am. 123, 866 (2008).

Lambert, W., Cobus, L. A., Frappart, T., Fink, M. & Aubry, A. Distortion matrix approach for ultrasound imaging of random scattering media. Proc. Nat. Acad. Sci. USA 117, 14645 (2020).

Bendjador, H., Deffieux, T. & Tanter, M. The SVD beamformer: Physical principles and application to ultrafast adaptive ultrasound. IEEE Trans. Med. Imag. 39, 3100 (2020).

Lambert, W., Robin, J., Cobus, L. A., Fink, M. & Aubry, A. Ultrasound matrix imaging-Part I: The focused reflection matrix, the F-factor and the role of multiple scattering. IEEE Trans. Med. Imag. 41, 3907 (2022).

Kang, S. et al. High-resolution adaptive optical imaging within thick scattering media using closed-loop accumulation of single scattering. Nat. Commun. 8, 2157 (2017).

Badon, A. et al. Distortion matrix concept for deep optical imaging in scattering media. Sci. Adv. 6, eaay7170 (2020).

Yoon, S., Lee, H., Hong, J. H., Lim, Y.-S. & Choi, W. Laser scanning reflection-matrix microscopy for aberration-free imaging through intact mouse skull. Nat. Commun. 11, 5721 (2020).

Kwon, Y. et al. Computational conjugate adaptive optics microscopy for longitudinal through-skull imaging of cortical myelin. Nat. Commun. 14, 105 (2023).

Najar, U. et al. Non-invasive retrieval of the time-gated transmission matrix for optical imaging deep inside a multiple scattering medium. Preprint at https://arxiv.org/abs/2303.06119 (2023).

Blondel, T., Chaput, J., Derode, A., Campillo, M. & Aubry, A. Matrix approach of seismic imaging: application to the Erebus Volcano, Antarctica. J. Geophys. Res.: Solid Earth 123, 10936 (2018).

Touma, R., Blondel, T., Derode, A., Campillo, M. & Aubry, A. A distortion matrix framework for high-resolution passive seismic 3-D imaging: Application to the San Jacinto fault zone, California. Geophys. J. Int. 226, 780 (2021).

Sommer, T. I. & Katz, O. Pixel-reassignment in ultrasound imaging. Appl. Phys. Lett. 119, 123701 (2021).

Lambert, W., Cobus, L. A., Robin, J., Fink, M. & Aubry, A. Ultrasound matrix imaging-Part II: The distortion matrix for aberration correction over multiple isoplanatic patches. IEEE Trans. Med. Imag. 41, 3921 (2022).

Ivancevich, N. M., Dahl, J. J., Trahey, G. E. & Smith, S. W. Phase-aberration correction with a 3-D ultrasound scanner: Feasibility study. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 53, 1432 (2006).

Lacefield, J. & Waag, R. Time-shift estimation and focusing through distributed aberration using multirow arrays. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 48, 1606 (2001).

Lindsey, B. D. & Smith, S. W. Pitch-catch phase aberration correction of multiple isoplanatic patches for 3-D transcranial ultrasound imaging. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 60, 463 (2013).

Liu, D.-L. & Waag, R. Estimation and correction of ultrasonic wavefront distortion using pulse-echo data received in a two-dimensional aperture. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 45, 473 (1998).

Ratsimandresy, L., Mauchamp, P., Dinet, D., Felix, N. & Dufait, R. A 3 MHz two dimensional array based on piezocomposite for medical imaging. In: 2002 IEEE Ultrasonics Symposium, 2002. Proceedings. Vol. 2, 1265–1268 (IEEE, Munich, Germany, 2002) https://doi.org/10.1109/ULTSYM.2002.1192524.

Provost, J. et al. 3D ultrafast ultrasound imaging in vivo. Phys. Med. Biol. 59, L1 (2014).

Provost, J. et al. 3-D ultrafast Doppler imaging applied to the noninvasive mapping of blood vessels in vivo. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 62, 1467 (2015).

Goss, S. A., Johnston, R. L. & Dunn, F. Compilation of empirical ultrasonic properties of mammalian tissues. II. J. Acoust. Soc. Am. 68, 93 (1980).

Hinkelman, L. M., Liu, D., Metlay, L. A. & Waag, R. C. Measurements of ultrasonic pulse arrival time and energy level variations produced by propagation through abdominal wall. J. Acoust. Soc. Am. 95, 530 (1994).

Lacefield, J. & Waag, R. Examples of design curves for multirow arrays used with time-shift compensation. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 49, 1340 (2002).

Ivancevich, N. M. et al. Real-time 3-D contrast-enhanced transcranial ultrasound and aberration correction. Ultrasound Med. Biol. 34, 1387 (2008).

Bertolo, A. et al. Whole-brain 3D activation and functional connectivity mapping in mice using transcranial functional ultrasound imaging. J. Vis. Exp. 168, e62267 (2021).

Chavignon, A. et al. 3D transcranial ultrasound localization microscopy in the rat brain with a multiplexed matrix probe. IEEE Trans. Biomed. Eng. 69, 2132 (2022).

Demené, C. et al. Transcranial ultrafast ultrasound localization microscopy of brain vasculature in patients. Nat. Biomed. Eng. 5, 219 (2021).

Soulioti, D. E., Espindola, D., Dayton, P. A. & Pinton, G. F. Super-resolution imaging through the human skull. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 67, 25 (2020).

Robin, J. et al. In vivo adaptive focusing for clinical contrast-enhanced transcranial ultrasound imaging in human. Phys. Med. Biol. 68, 025019 (2023).

Tanter, M. & Fink, M. Ultrafast imaging in biomedical ultrasound. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 61, 102 (2014).

Jaeger, M. et al. Computed ultrasound tomography in echo mode for imaging speed of sound using pulse-echo sonography: Proof of principle. Ultrasound Med. Biol. 41, 235 (2015).

Imbault, M. et al. Robust sound speed estimation for ultrasound-based hepatic steatosis assessment. Phys. Med. Biol. 62, 3582 (2017).

Jakovljevic, M. et al. Local speed of sound estimation in tissue using pulse-echo ultrasound: Model-based approach. J. Acoust. Soc. Am. 144, 254 (2018).

Aubry, A. & Derode, A. Multiple scattering of ultrasound in weakly inhomogeneous media: Application to human soft tissues. J. Acoust. Soc. Am. 129, 225 (2011).

Brütt, C., Aubry, A., Gérardin, B., Derode, A. & Prada, C. Weight of single and recurrent scattering in the reflection matrix of complex media. Phys. Rev. E 106, 025001 (2022).

Papadacci, C., Tanter, M., Pernot, M. & Fink, M. Ultrasound backscatter tensor imaging (BTI): Analysis of the spatial coherence of ultrasonic speckle in anisotropic soft tissues. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 61, 986 (2014).

Rodriguez-Molares, A., Fatemi, A., Lovstakken, L. & Torp, H. Specular beamforming. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 64, 1285 (2017).

Zhao, D., Bohs, L. N. & Trahey, G. E. Phase aberration correction using echo signals from moving targets: I. Description and theory. Ultrason. Imaging 14, 97 (1992).

Osmanski, B.-F., Montaldo, G., Tanter, M. & Fink, M. Aberration correction by time reversal of moving speckle noise. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 59, 1575 (2012).

Berland, F. et al. Microwave photonic MIMO radar for short-range 3D imaging. IEEE Access 8, 107326 (2020).

Montaldo, G., Tanter, M., Bercoff, J., Benech, N. & Fink, M. Coherent plane-wave compounding for very high frame rate ultrasonography and transient elastography. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 56, 489 (2009).

Perrot, V., Polichetti, M., Varray, F. & Garcia, D. So you think you can DAS? A viewpoint on delay-and-sum beamforming. Ultrasonics 111, 106309 (2021).

Fink, M. & Dorme, C. Aberration correction in ultrasonic medical imaging with time-reversal techniques. Int. J. Imaging Syst. Technol. 8, 110 (1997).

Mertz, J., Paudel, H. & Bifano, T. G. Field of view advantage of conjugate adaptive optics in microscopy applications. Appl. Opt. 54, 3498 (2015).

Bureau, F. et al. Ultrasound matrix imaging [data]. Zenodo https://doi.org/10.5281/zenodo.8159177 (2023).

Acknowledgements

The authors wish to thank L. Marsac for providing initial ultrasound acquisition sequences. The authors are grateful for the funding provided by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement 819261, REMINISCENCE project, AA).

Author information

Contributions

A.A. and M.F. initiated the project. A.A. supervised the project. F.B. and A.L.B. coded the ultrasound acquisition sequences. F.B. and J.R. performed the experiments. F.B., A.L.B., and W.L. developed the post-processing tools. F.B., J.R., and A.A. analyzed the experimental results. A.A. performed the theoretical study. F.B. prepared the figures. F.B., J.R., and A.A. prepared the manuscript. F.B., J.R., A.L.B., W.L., M.F., and A.A. discussed the results and contributed to finalizing the manuscript.

Ethics declarations

Competing interests

A.A., M.F., and W.L. are inventors of a patent related to this work held by CNRS (no. US11346819B2, published May 2022). W.L. had his PhD funded by the SuperSonic Imagine company and is now an employee of this company. All authors declare that they have no other competing interests.

Peer review

Peer review information

Nature Communications thanks Gianmarco Pinton and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bureau, F., Robin, J., Le Ber, A. et al. Three-dimensional ultrasound matrix imaging. Nat Commun 14, 6793 (2023). https://doi.org/10.1038/s41467-023-42338-8

DOI: https://doi.org/10.1038/s41467-023-42338-8
