Abstract

Next-generation sequencing (NGS) technologies are now established in clinical laboratories as a primary testing modality in genomic medicine. These technologies have reduced the cost of large-scale sequencing by several orders of magnitude. It is now cost-effective to analyze an individual with disease-targeted gene panels, exome sequencing, or genome sequencing to assist in the diagnosis of a wide array of clinical scenarios. While clinical validation and use of NGS are established in many settings, challenges continue as technologies and the associated informatics evolve. To assist clinical laboratories with the validation of NGS methods and platforms, the ongoing monitoring of NGS testing to ensure quality results, and the interpretation and reporting of variants found using these technologies, the American College of Medical Genetics and Genomics (ACMG) has developed the following technical standards.


A. INTRODUCTION

Since the ACMG first published guidance for laboratories performing next-generation sequencing (NGS) testing in 2013,1 the field has witnessed a rapid expansion in the use of this technology. NGS throughput, cost, and accuracy for single-nucleotide variant (SNV) detection are often superior to Sanger sequencing.2 This document describes standards for clinical laboratories that use NGS to perform diagnostic gene panel testing, exome sequencing (ES), and genome sequencing (GS) for constitutional variants. While this document attempts to cover issues essential for the development of any NGS test, it does not address specific technologies in detail, and may not cover all issues relevant to each test application or platform-specific characteristics. Testing for somatic variants, cell-free DNA analysis, infectious disease detection, and RNA applications of NGS are beyond the scope of this document.

A.1. Method for standards development

As part of the 5-year review program of ACMG laboratory guidance documents, a new workgroup was established to review and update the existing document.1 To engage the ACMG membership in the review and development process, the workgroup formulated a survey to query those performing NGS testing for constitutional disorders in clinical laboratories. In February 2018, the survey was sent to 512 individuals identified by ACMG as members board certified in Clinical Molecular Genetics by the American Board of Medical Genetics and Genomics (ABMGG) or Canadian College of Medical Geneticists (CCMG), or in Molecular Genetic Pathology by the American Board of Pathology, or as self-identified laboratory genetic counselors. A total of 80 responses were received during the 17-day response window. Survey responses were presented in an open forum session attended by >100 individuals at the ACMG annual meeting in April 2018, during which additional comments were collected from attendee discussion. During the revision of these clinical laboratory standards, the workgroup considered the opinions of the survey respondents; guidance documents issued by government agencies such as the Food and Drug Administration (FDA), the Centers for Disease Control and Prevention (CDC), and the New York State Department of Health; and guidance from other professional societies such as the Association for Molecular Pathology (AMP), the College of American Pathologists (CAP), and the CCMG. This document was finalized through iterative review and editing between the workgroup and the molecular genetics subcommittee of the ACMG Laboratory Quality Assurance Committee, and was accepted by the ACMG Laboratory Quality Assurance Committee. A draft document was posted on the ACMG website and an email link was sent inviting ACMG members to provide comments. All comments were assessed by the authors, and changes were made when appropriate to address them. Both member comments and workgroup responses were reviewed by a representative of the ACMG Laboratory Quality Assurance Committee and by the ACMG Board of Directors. The final document was approved by the ACMG Board of Directors.

A.2. Definitions

A.2.1. Gene panels examine a curated set of genes associated with a particular phenotype, such as hearing loss, or indication, such as procreative management. The sensitivity and specificity of diagnostic gene panels depend, in part, on the sequence coverage of targeted regions and the types of variants that can be detected. Focusing on a limited set of genes reduces the cost of achieving appropriate coverage through efficient utilization of sequencing capacity and reduced computational and data storage requirements. Panel tests can maximize clinical sensitivity by not only evaluating the coding and clinically relevant noncoding regions of targeted genes by NGS, but also incorporating ancillary assays, such as Sanger sequencing to fill in missing content, or other methods to detect copy-number variants (CNVs), predefined complex rearrangements, or other specific variant types3 (section D.1.1.3). The ACMG provides guidance on gene inclusion and technical and reporting considerations for diagnostic gene panels.4 The Clinical Genome Resource (ClinGen; www.clinicalgenome.org) framework for gene–disease associations provides additional guidance. The PanelApp website is a crowdsourcing tool that allows gene panels to be shared, downloaded, viewed, and evaluated by the scientific community (https://panelapp.genomicsengland.co.uk/panels/).

A.2.2. Exome sequencing (ES) examines the coding and adjacent intronic regions across the genome and requires enrichment of these regions by capture or amplification methods. The exome is estimated to comprise approximately 1–2% of the genome, yet it contains the majority of SNVs and small insertions/deletions currently recognized to cause Mendelian diseases. Because the depth of coverage across an exome is not uniform, the analytical sensitivity of ES may be lower than that of some disease-targeted gene panels; however, ES is still expected to have a higher overall diagnostic yield. While Sanger sequencing and other technologies are commonly used to supplement gene panels, this approach is impractical for ES. Analytical sensitivity and specificity may be compromised by inadequate coverage or quality in certain regions.

A.2.3. Genome sequencing (GS) examines over 90% of the genome and has a number of advantages over diagnostic gene panels and ES. In contrast to ES, GS does not require enrichment methods prior to sequencing; therefore, GS produces more even coverage across the exome. GS data can be produced more rapidly than ES data. GS has increased capacity to simultaneously detect SNVs and CNVs, as well as complex variants such as balanced/unbalanced structural rearrangements (e.g., translocations, inversions, and insertions) and repeat expansions.5 There is value in the coverage of noncoding regions for certain pharmacogenetic variants, and increasing numbers of variants in noncoding regions will likely be found to cause monogenic and complex diseases,6 or become part of polygenic risk scores.7 Mitochondrial genome sequence data are also produced and can be analyzed and interpreted. While coverage is more even with GS, the read depth is generally lower than diagnostic gene panels and ES, and may therefore limit the detection of mosaicism.8 The cost of data generation and storage is higher for GS than for ES.

B. CLINICAL USE OF NGS-BASED TESTING

Choosing an appropriate NGS-based test is the responsibility of the ordering health-care provider. Given the large number of tests (https://www.ncbi.nlm.nih.gov/gtr/) available to the clinician, the clinical laboratory often provides critical advice in test selection. Ordering providers must weigh considerations of sensitivity, specificity, cost, and turnaround time for each clinical situation. The clinical sensitivity and diagnostic yield of these testing approaches continue to be compared.9,10,11,12,13,14,15 Laboratories should be available for phone consultation; provide test definition and intended use for each of their tests, as well as general test-ordering guidance on their website; and publish relevant experience in diagnostic detection rates.

Diagnostic gene panels are optimal for well-defined clinical presentations that are genetically heterogeneous (e.g., congenital hearing loss), for which pathogenic variants in disease-associated genes account for a significant fraction of cases. Secondary/incidental findings are generally not expected, although broad panels (e.g., epilepsy or pan-cancer panels) may identify clinically significant findings unrelated to the test indication. By limiting the test to those genes relevant to a given disease, the laboratory can optimize the panel to maximize coverage of relevant regions of the gene(s).4 If a disease-targeted panel contains genes for multiple overlapping phenotypes, laboratories may provide the option to restrict analysis to a subset of genes associated with a specific phenotype (e.g., hypertrophic cardiomyopathy genes within a broad cardiomyopathy gene panel) to minimize the number of variants of uncertain significance (VUS) reported. Assessing the clinical significance of many VUS is challenging, and the number of variants with potential clinical relevance is roughly proportional to the size of the target region analyzed.

In contrast to panels, ES or GS provide a broad approach to match detected variants with the clinical phenotype assessed by the laboratory and health-care provider.16 ES may be performed with the intention of restricting interpretation and reporting to variants in genes with specific disease associations with an option to expand the analysis to the rest of the exome if the initial analysis is nondiagnostic. ES/GS approaches are most appropriate in the following scenarios: (1) when the phenotype is complex and genetically heterogeneous; (2) when the phenotype has unusual features, an atypical clinical course, or unexpected age of onset; (3) when the phenotype is associated with recently described disease genes for which disease-targeted testing is unavailable; (4) when focused testing has been performed and was nondiagnostic; (5) when sequential testing could cause therapeutic delays; or (6) when the phenotype does not match an identified genetic condition, suggesting the possibility of more than one genetic diagnosis, which has been documented in 4–7% of positive cases.17,18,19,20 When ES/GS does not establish a diagnosis, the data can be reanalyzed (section E.6). The potential impact of secondary findings with ES/GS should also be considered (section E.3).

C. NEXT-GENERATION SEQUENCING TECHNOLOGY

C.1. Sample preparation

The NGS process begins with the extraction of genomic DNA from a sample. Any validated sample type can be used as long as the quality and quantity of the resulting DNA are sufficient. The laboratory should specify the allowed sample types and quantities of DNA required (section D.2.1).

C.2. Library generation

At present, short-read platforms are used in most clinical laboratories. A library of short DNA fragments (100–500 bp) flanked by platform-specific adapters is the required input for most of the short-read NGS platforms. Fragmentation of genomic DNA is achieved through a variety of methods, each having strengths and weaknesses. Adapter sequences are ligated to both ends of the fragments. Adapters are complementary to platform-specific sequencing primer(s). Notably, not all technologies rely on a complementary primer.21 Polymerase chain reaction (PCR) amplification of the library may be necessary prior to sequencing.

C.3. Indexing

Indexing refers to the molecular tagging of samples with unique sequence-based codes, which enables pooling of samples and thereby reduces the per-sample sequencing cost. In addition, the same sample can be distributed across several lanes or instruments, ameliorating the effects of lane-to-lane and instrument-to-instrument variability. Indexes can be part of the adapters or can be added as part of a PCR enrichment step. Dual indexing is typically recommended to reduce the likelihood of index misassignment.22
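To illustrate why dual indexing limits misassignment, the following minimal sketch demultiplexes reads by requiring that both the i7 and i5 index reads match the same sample. The sample sheet, index sequences, function names, and the one-mismatch tolerance are hypothetical choices for illustration, not a vendor's demultiplexing algorithm.

```python
from typing import Dict, Optional, Tuple

# Hypothetical sample sheet: (i7 index, i5 index) -> sample ID.
# Index sequences are illustrative placeholders, not a specific kit.
SAMPLE_SHEET: Dict[Tuple[str, str], str] = {
    ("ATTACTCG", "TATAGCCT"): "sample_A",
    ("TCCGGAGA", "ATAGAGGC"): "sample_B",
}


def hamming(a: str, b: str) -> int:
    """Count mismatched positions between two equal-length index reads."""
    return sum(x != y for x, y in zip(a, b))


def assign_read(i7: str, i5: str, max_mismatch: int = 1) -> Optional[str]:
    """Assign a read to a sample only if BOTH of its indexes match that sample.

    Reads whose index pair matches no sample, or more than one sample,
    are returned as None (undetermined) instead of being guessed.
    """
    hits = [
        sample
        for (s7, s5), sample in SAMPLE_SHEET.items()
        if hamming(i7, s7) <= max_mismatch and hamming(i5, s5) <= max_mismatch
    ]
    return hits[0] if len(hits) == 1 else None


# A read carrying sample_A's i7 but sample_B's i5 (e.g., after index hopping)
# is rejected rather than assigned to either sample.
print(assign_read("ATTACTCG", "TATAGCCT"))  # sample_A
print(assign_read("ATTACTCG", "ATAGAGGC"))  # None
```

With single indexing, the second read would have been assigned to sample_A on the basis of its i7 index alone.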

C.4. Target enrichment

For gene panels and ES, the genes or regions of interest must be enriched prior to sequencing. The targets can range from a relatively small number of genes chosen for a disease-targeted gene panel to the entire exome. Target enrichment approaches include amplicon-based and solid or in-solution oligonucleotide hybridization-based (capture) strategies. Amplicon-based approaches do not scale beyond a limited number of targets. Strategies for target enrichment have been reviewed.23,24,25,26

An analysis of intronic variants in the ClinVar database (Supplemental Table 1) showed that coverage encompassing the −16 position at the splice acceptor site and the +5 position at the splice donor site would detect >97% of the pathogenic and likely pathogenic intronic variants in ClinVar; the bias of these data toward reported variants and current sequencing practices is acknowledged. Targeted regions should minimally include coding exons with sufficient intronic coverage to allow analysis of positions −1_−16 and +1_+5, as well as other regions with reported pathogenic variants (e.g., splice sites of noncoding exons, deep intronic variants). It is suggested that laboratories define pathogenic content outside of the standard coding and intronic flanking regions and design probes to ensure coverage of these regions.
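The minimum flanking window above can be expressed as a simple check on HGVS coding (c.) intronic offsets. This is a minimal sketch assuming simple descriptions such as `c.123+4A>G` relative to a coding exon; the function name is hypothetical, and only the first position of a range is examined.

```python
import re
from typing import Optional

DONOR_MAX = 5       # analyze donor-side positions +1 to +5
ACCEPTOR_MAX = 16   # analyze acceptor-side positions -1 to -16

# Simple intronic HGVS c. positions such as "c.123+4A>G" or "c.124-16del".
# Only the first position of a range is examined (a simplifying assumption).
INTRONIC = re.compile(r"^c\.(\d+)([+-])(\d+)")


def in_minimum_flank(hgvs_c: str) -> Optional[bool]:
    """True/False if an intronic position falls inside/outside the minimum flank;
    None if the description is not a simple intronic position (e.g., exonic)."""
    match = INTRONIC.match(hgvs_c)
    if match is None:
        return None
    sign, offset = match.group(2), int(match.group(3))
    return offset <= (DONOR_MAX if sign == "+" else ACCEPTOR_MAX)


for variant in ("c.123+5G>A", "c.123+6T>C", "c.124-16A>G", "c.124-17A>G", "c.100A>G"):
    print(variant, in_minimum_flank(variant))
```

Variants that fall outside the window (here `c.123+6T>C` and `c.124-17A>G`) would be detectable only if the laboratory deliberately targets that additional content.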

C.5. Sequencing platforms and methods

Current commercial platforms use a variety of processes including sequencing by synthesis or sequencing by ligation with reversible terminators, bead capture, ion sensing, and nucleotide sensing through a nanopore.27,28 Each has the capacity to sequence millions of DNA fragments in parallel. Sequencing platform performance varies in clinically important ways (section D.1.1). Table 1 lists some of the considerations required to choose an appropriate platform.

C.6. Data generation

Extensive bioinformatic support and hardware infrastructure are required for analysis. NGS data generation can be divided into four primary operations: base calling, read alignment, variant calling, and variant annotation. Base calling is the identification of the specific nucleotide present at each position in a single sequencing read; this is typically integrated into the instrument software given the technology-specific nature of the process. Read alignment involves correctly positioning DNA sequence reads in relation to a reference sequence. Variant calling is the identification of sequence differences between the sample and a reference genome. Variant annotation associates the variant with relevant contextual information and annotates zygosity (section D.1.3.3).

D. TEST DEVELOPMENT AND VALIDATION

D.1. General considerations

Test development must consider the variant types that will be detected in the genes or regions of the genome interrogated. The test validation process for an NGS test has been outlined.29 Various combinations of instruments, reagents, and analytical pipelines may be used. A limited number of clinical NGS assays are approved by the FDA (https://www.fda.gov/MedicalDevices/ProductsandMedicalProcedures/InVitroDiagnostics/ucm330711.htm); therefore, most tests will be laboratory-developed tests (LDTs) that require a full validation.30 The laboratory director or equivalent may validate a test using commercially available testing components labeled as analyte specific reagent (ASR), investigational use only (IUO), or research use only (RUO). Depending on the intended clinical application of the components, each may be subject to different levels of validation.30

Clinical laboratories should consider the strengths and limitations of their chosen technology. Short-read advantages include SNV and insertion/deletion (indel) calling accuracy,31,32,33 broad use in current clinical practice, a choice of mature analysis software, availability of control samples and associated short-read data for validation, lower cost per base than long-read technology, and the option to produce a rapid (<48 hours) genome. By estimating the anticipated gigabases required, a laboratory can choose a sequencer with suitable capacity.
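The gigabase estimate is simple arithmetic: target size multiplied by mean depth, inflated to allow for reads that are lost to duplicates, low quality, or off-target capture. The sketch below assumes a ~3.1 Gb genome, 30× mean depth, 80% usable yield, and a hypothetical 1,000 Gb run; none of these values is a recommendation.

```python
import math


def gigabases_needed(target_bp: float, mean_depth: float, usable_fraction: float) -> float:
    """Raw sequence (Gb) needed so that the usable fraction yields mean_depth over target_bp."""
    return target_bp * mean_depth / usable_fraction / 1e9


def samples_per_run(run_output_gb: float, per_sample_gb: float) -> int:
    """Whole samples that fit in one run of the given output."""
    return math.floor(run_output_gb / per_sample_gb)


# Illustrative assumptions: ~3.1 Gb genome, 30x mean depth, 80% of bases usable,
# and a hypothetical sequencer run yielding 1,000 Gb.
per_genome = gigabases_needed(3.1e9, 30, 0.8)
print(round(per_genome, 2))               # 116.25
print(samples_per_run(1000, per_genome))  # 8
```

The same calculation with an exome or panel target size shows how much more multiplexing a given instrument supports for targeted tests.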

Short-read sequencing may be performed as single-end sequencing (genomic DNA fragments are sequenced at one end only) or paired-end sequencing (both ends are sequenced). Paired-end sequencing increases unambiguous read mapping, particularly in repetitive regions, and has the added advantage of increasing coverage and stringency of the assay, as bidirectional sequencing of each DNA fragment is performed. A variation of paired-end sequencing is mate-pair sequencing, which can be useful for structural variant detection.32 Although not ideally suited to base calling in repetitive or nonunique regions, technologies and software are being developed to assess these regions using short-read data.34,35,36

Long-read technology is superior to short-read technology in phasing of variants, which is required to establish whether variants for a recessive disorder are in cis or in trans and to determine pharmacogenomic diplotypes. In addition, long reads allow detection of repeat expansion disorders (e.g., fragile X syndrome), CNVs, structural variants such as insertion–translocations, and variants in regions of high homology containing clinically important genes such as CYP2D6, GBA, PMS2, STRC, and the HLA genes.31,34,35,37,38,39,40 See Table 2 for additional comparisons between short-read and long-read sequencing.

D.1.1. Limitations and alternatives

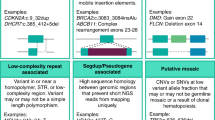

D.1.1.1. Detection of different variant types

Detection of large CNVs, genomic rearrangements, repeat expansions, mitochondrial heteroplasmy, and mosaicism by NGS requires specialized bioinformatic pipelines and highly reproducible, uniform data. Some disease mechanisms such as abnormal methylation require ancillary technologies. It is important to recognize sequence characteristics that may complicate testing or interpretation and when supplementary technologies may be needed to adequately cover the spectrum of pathogenic variants. Detection of mosaicism and heteroplasmic mitochondrial variants requires higher sequence coverage compared to the coverage needed to detect constitutional variants.
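Why mosaic and heteroplasmic variants need more coverage can be seen with a simple binomial sampling model: the probability of observing enough variant-supporting reads rises steeply with depth when the allele fraction is low. This sketch ignores sequencing error, capture bias, and duplicate reads, and the minimum of five supporting reads is a hypothetical caller threshold.

```python
from math import exp, lgamma, log, log1p


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p), computed in log space to avoid overflow."""
    log_pmf = (
        lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
        + k * log(p) + (n - k) * log1p(-p)
    )
    return exp(log_pmf)


def detection_probability(depth: int, allele_fraction: float, min_alt_reads: int) -> float:
    """Probability that at least min_alt_reads of depth reads carry the variant allele.

    Assumes 0 < allele_fraction < 1 and ignores sequencing error, capture bias,
    and duplicate reads (simplifying assumptions).
    """
    return sum(binomial_pmf(k, depth, allele_fraction) for k in range(min_alt_reads, depth + 1))


# A 10% mosaic variant, requiring at least 5 supporting reads:
for depth in (30, 100, 500):
    print(depth, round(detection_probability(depth, 0.10, 5), 3))
```

At depths typical for constitutional heterozygous calls, a 10% variant is frequently missed, whereas it is detected almost always at several hundred reads.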

D.1.1.2. Regions with technical difficulty

The accuracy of sequence alignment and variant calling can be diminished or biased in genomic regions of high homology or low complexity, as well as repetitive or hypervariable regions.41,42 Hybridization-based enrichment methods used in gene panels and ES cannot avoid capturing homologous regions of targeted genes. The limited length of NGS sequence reads generated by short-read technology can lead to false positive or false negative variant calls when reads are incorrectly aligned to a homologous region. Resources annotating many known regions with high homology have been created (https://www.ncbi.nlm.nih.gov/books/NBK535152/).43 Methods being developed to allow sequencing in problematic regions include:

- Local realignment after a global alignment strategy
- Paired-end sequencing to help correct misalignment
- Informatics tools to force alignment to the region of interest combined with modified variant calling.

Confirmation of variant calls using gene-specific ancillary technology may be necessary. Appropriate caveats specifically addressing potential false negative and false positive results should be reported. Workflows that address accurate, comprehensive diagnostic analysis of genes with known homology issues are available.44,45

D.1.1.3. Ancillary technologies

Clinically relevant genomic regions that cannot be assayed reliably by NGS (e.g., areas with homology, low complexity, methylation) should be considered for testing by ancillary assays.46 Disease-targeted gene panels that include these areas should include appropriate additional methodologies to maximize the clinical sensitivity of the test.4

Sanger sequencing can be used to fill in areas where NGS coverage or quality is insufficient to call variants confidently, but may also be limited by inherent sequencing difficulties (see section D.1.1.2). For ES/GS tests, complete coverage is not expected; however, for gene panel tests the laboratory director or equivalent has discretion to judge the need for Sanger fill-in based on the intended purpose of the test. For diagnostic gene panels, the ACMG provides guidance4 on non-NGS aspects including:

- Handling of low coverage regions by Sanger fill-in
- Need for ancillary assays (e.g., CNVs, methylation, repeat expansion) based on the spectrum of pathogenic variants expected for a genetic disorder
- Disclosures needed at reporting

Projected turnaround times should take into account the time required for these ancillary technologies.

Confirmation of reportable variants using ancillary technologies has been standard practice. Several studies have attempted to determine which metrics could be used to establish parameters for ES/GS approaches, which, when met, would obviate the need for confirmatory testing of SNVs:47

- Read depth
- Allele balance
- Multiple quality scores
- Strand bias
- Variant class (e.g., SNV, indel, CNV)
- Other variant calls nearby
- Genomic context of the variant (e.g., areas of segmental duplication, homopolymer regions).
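A laboratory policy built on these metrics can be sketched as a rule set that returns the reasons a call requires orthogonal confirmation. The field names and every threshold below are hypothetical placeholders; a real policy would derive its criteria per variant class from the laboratory's own validation data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VariantCall:
    variant_class: str      # e.g., "SNV", "indel", "CNV"
    depth: int              # read depth at the site
    allele_balance: float   # fraction of reads supporting the variant allele
    quality: float          # caller-reported quality score
    strand_bias_p: float    # p-value of a strand-bias test (hypothetical field)
    nearby_calls: int       # other variant calls within a small window
    difficult_region: bool  # segmental duplication, homopolymer, etc.


# Hypothetical thresholds for illustration only.
MIN_DEPTH = 20
HET_BALANCE = (0.30, 0.70)
HOM_BALANCE = 0.90
MIN_QUALITY = 100.0
MIN_STRAND_BIAS_P = 0.01


def confirmation_reasons(call: VariantCall) -> List[str]:
    """Return reasons a call should be orthogonally confirmed; an empty list means none."""
    reasons = []
    if call.variant_class != "SNV":
        reasons.append("variant class not covered by the validated policy")
    if call.depth < MIN_DEPTH:
        reasons.append("low read depth")
    ab = call.allele_balance
    if not (HET_BALANCE[0] <= ab <= HET_BALANCE[1] or ab >= HOM_BALANCE):
        reasons.append("allele balance outside expected ranges")
    if call.quality < MIN_QUALITY:
        reasons.append("low quality score")
    if call.strand_bias_p < MIN_STRAND_BIAS_P:
        reasons.append("strand bias")
    if call.nearby_calls > 0:
        reasons.append("other variant calls nearby")
    if call.difficult_region:
        reasons.append("difficult genomic context")
    return reasons


clean = VariantCall("SNV", 45, 0.48, 900.0, 0.6, 0, False)
risky = VariantCall("indel", 12, 0.22, 60.0, 0.001, 1, True)
print(confirmation_reasons(clean))  # []
print(confirmation_reasons(risky))
```

Returning reasons rather than a yes/no answer also documents why a given variant was sent for confirmation.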

Guidance from New York State (NYS) allows SNVs to be reported without orthogonal confirmation once ten SNVs within a gene have been confirmed (https://www.wadsworth.org/regulatory/clep/clinical-labs/obtain-permit/test-approval). However, variants confirmed as true positive (TP) in some samples can be observed as false positive (FP) in subsequent samples, arguing that prior confirmation alone is insufficient.47 Laboratories should establish, and make available, a confirmatory testing policy for each variant class, based on a workflow that identifies variants requiring confirmation using laboratory-specific quality metrics drawn from a large and diverse data set, together with visual inspection of read alignments. In the absence of a validated approach, laboratories should continue orthogonal confirmation. For testing involving low allelic fraction variants, such as heteroplasmic mitochondrial variants or germline mosaic variants, other approaches such as replicate testing or testing of additional tissues may be necessary. Identification of large structural variants, such as inversions or gross rearrangements, may require chromosome analysis or fluorescence in situ hybridization (FISH). If the laboratory deviates from its standard practice for a particular variant, the exception should be noted in the main body of the clinical report.

Laboratory policies should also address instances when the confirmatory test does not support the NGS finding. Confirmatory testing methods such as Sanger sequencing have limitations (e.g., limit of detection, allele dropout), so a negative confirmatory result should not be considered definitive evidence that the NGS call is a FP.2 Clinical correlation and a second orthogonal test should be considered.

D.1.2. Reference materials

Reference materials (RMs) are used by clinical laboratories for test validation, quality control (QC), and proficiency testing (PT). Initiatives have produced genome-wide RMs for sequence and CNVs. The CDC maintains a list of available RMs at https://wwwn.cdc.gov/clia/Resources/GETRM/sources.aspx. The Genome in a Bottle Consortium, supported by the National Institute of Standards and Technology (NIST), released standardized data for multiple Coriell samples (e.g., NA12878, NA24385, NA24143, NA24149, and NA24631) and two Personal Genome Project trios of differing ethnicities.48,49 Additional reference data are appearing regularly.50,51 Long-read sequencing has added to the accuracy of these RMs.37 By comparing internally produced data for these RMs to external data sets, laboratories can more accurately evaluate their test performance. Internal samples assessed by orthogonal methods may also be used as RMs.
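Comparing a laboratory's calls for an RM against its published truth set reduces to counting true positives, false positives, and false negatives within the confident regions distributed with the truth data. The sketch below uses naive exact matching on (chromosome, position, REF, ALT); dedicated benchmarking tools additionally normalize variant representation, which this hypothetical `benchmark` function does not attempt.

```python
from typing import Dict, List, Set, Tuple

Variant = Tuple[str, int, str, str]          # (chrom, 1-based POS, REF, ALT)
Regions = Dict[str, List[Tuple[int, int]]]   # chrom -> BED-style 0-based, half-open intervals


def in_regions(variant: Variant, regions: Regions) -> bool:
    chrom, pos = variant[0], variant[1]
    # The 0-based position pos - 1 lies in [start, end) exactly when start < pos <= end.
    return any(start < pos <= end for start, end in regions.get(chrom, []))


def benchmark(calls: Set[Variant], truth: Set[Variant], regions: Regions) -> Dict[str, float]:
    """Exact-match comparison of calls to a truth set within confident regions."""
    calls_in = {v for v in calls if in_regions(v, regions)}
    truth_in = {v for v in truth if in_regions(v, regions)}
    tp = len(calls_in & truth_in)
    fp = len(calls_in - truth_in)
    fn = len(truth_in - calls_in)
    return {
        "TP": tp,
        "FP": fp,
        "FN": fn,
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "precision": tp / (tp + fp) if tp + fp else float("nan"),
    }


calls = {("chr1", 100, "A", "G"), ("chr1", 200, "C", "T"), ("chr1", 900, "G", "A")}
truth = {("chr1", 100, "A", "G"), ("chr1", 300, "T", "C"), ("chr1", 950, "A", "C")}
print(benchmark(calls, truth, {"chr1": [(0, 500)]}))
# {'TP': 1, 'FP': 1, 'FN': 1, 'sensitivity': 0.5, 'precision': 0.5}
```

Calls and truth variants outside the confident regions (positions 900 and 950 here) are excluded rather than counted as errors.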

While the available RM data are suitable for assessing SNV and small indel performance, robust genome-wide RM data for CNVs, nucleotide repeats, and other genomic variant types are only now becoming available (https://www.biorxiv.org/content/10.1101/664623v3). A laboratory needs to obtain RMs that represent all appropriate variants or variant classes assessed by its assay.

Note that the usefulness of cell lines as RMs can be limited by genomic instability over time. Genomic DNA extracted from blood is stable, but gathering enough from one individual for long-term, and potentially multilab, use is challenging. Cell lines used as standard RMs for NGS will need to be monitored over time and across passages to understand the extent to which instability or the use of amplification techniques affects the samples. Electronic sequences have been computationally generated and can also be used as RMs. Simulated sequences are typically designed to address a known issue, such as repetitive sequences, known indels, or SNVs, for which a sample is not available.

D.1.3. Bioinformatic pipelines

Analysis of data generated by NGS platforms is complex and typically requires a multistage data handling and processing pipeline. A wide variety of tools are available and under constant development to improve this process. Analytical pipelines should be developed and optimized separately from the wet lab processes during initial test development by analyzing data containing known sequence variants of various types (e.g., SNVs, small indels, intragenic or large CNVs, structural variants). The optimized pipeline can then be deployed in each end-to-end test validation. When commercially developed software is used, the laboratory should document any validation data provided by the vendor, but must also perform an independent validation of the software. In addition, the laboratory must establish that the bioinformatic pipeline can accurately track sample identity.
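One common way to verify sample identity, not prescribed by this document, is to compare NGS genotypes at a small panel of polymorphic sites with genotypes obtained independently from the same specimen. The sketch below computes genotype concordance; the site IDs, genotypes, and the 0.95 acceptance threshold are hypothetical.

```python
from typing import Dict, Tuple

Genotypes = Dict[str, str]  # site ID -> genotype string such as "0/1"


def normalize_genotype(gt: str) -> Tuple[str, ...]:
    """Treat "0/1", "1/0", and "0|1" as the same unphased genotype."""
    return tuple(sorted(gt.replace("|", "/").split("/")))


def concordance(ngs: Genotypes, independent: Genotypes) -> float:
    """Fraction of shared sites where the NGS genotype matches the independent genotype."""
    shared = [site for site in independent if site in ngs]
    if not shared:
        return float("nan")
    matches = sum(normalize_genotype(ngs[s]) == normalize_genotype(independent[s]) for s in shared)
    return matches / len(shared)


IDENTITY_THRESHOLD = 0.95  # hypothetical acceptance threshold

ngs_calls = {"site1": "0/1", "site2": "1/1", "site3": "0/0", "site4": "0|1"}
same_person = {"site1": "1/0", "site2": "1/1", "site3": "0/0", "site4": "0/1"}
other_person = {"site1": "0/0", "site2": "0/1", "site3": "1/1", "site4": "0/1"}
print(concordance(ngs_calls, same_person) >= IDENTITY_THRESHOLD)   # True
print(concordance(ngs_calls, other_person) >= IDENTITY_THRESHOLD)  # False
```

In practice, the panel would need enough informative sites for chance agreement between unrelated individuals to be very unlikely.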

The development of standard metrics (section D.3) and well-established RMs (section D.1.2) for performance testing has significantly improved the capability to perform accurate cross-platform comparisons.48,52 In a joint recommendation, AMP and CAP drafted standards and guidelines to aid in the validation of bioinformatic pipelines; however, each laboratory must design the appropriate pipeline(s) optimized for its intended clinical use.53 For traceability, the laboratory should document all hardware, software, databases, and internally developed systems used in the validated pipeline, including versions and any modifications.54 Basic concepts in the analysis of NGS data are outlined below.

D.1.3.1. Base calling

Each NGS platform has specific sequencing biases that affect the type and rates of errors that can occur, including signal intensity decay between the beginning and end of the read and erroneous insertions and deletions in homopolymeric stretches.55 Base-calling software that accounts for technology-specific biases can help address platform-specific issues. The best practice is to utilize a base-calling package that is designed to reduce specific platform-related errors. Generally, an appropriate, platform-specific base-calling algorithm is embedded within the sequencing instrument. Each base call is associated with a quality metric providing an evaluation of the certainty of each call. This is usually reported as a Phred-like score (although some software packages use a different quality metric and measure slightly different variables).
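A Phred score Q relates to the estimated error probability P by Q = −10 log10(P), so Q20 corresponds to a 1% error probability and Q30 to 0.1%. The sketch below converts between the two and decodes a FASTQ quality string, assuming the common Phred+33 ASCII encoding (some older FASTQ variants used an offset of 64).

```python
import math
from typing import List


def phred_to_error(q: float) -> float:
    """Error probability implied by a Phred score: P = 10^(-Q/10)."""
    return 10 ** (-q / 10)


def error_to_phred(p: float) -> float:
    """Phred score for an error probability: Q = -10 * log10(P)."""
    return -10 * math.log10(p)


def decode_fastq_quality(qual: str, offset: int = 33) -> List[int]:
    """Decode a FASTQ quality string; offset 33 is the common Phred+33 encoding."""
    return [ord(char) - offset for char in qual]


scores = decode_fastq_quality("I5+")
print(scores)  # [40, 20, 10]
print([phred_to_error(q) for q in scores])
```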

D.1.3.2. Read alignment

Various algorithms for aligning reads have been developed that differ in accuracy and processing speed. Depending upon the types of variants expected, the laboratory should choose one or more read alignment tools to apply to the data. Several commercial and open-source read alignment tools are available; they use a variety of alignment algorithms and may be more efficient for certain types of data than for others.56,57,58,59 Alignments are typically stored in the standard binary alignment map (BAM) format, although newer compressed and/or secured file formats (CRAM/SECRAM) are also available.60 Proper alignment can be challenging when the captured regions include homologous sequences, but it is improved by longer or paired-end reads. In addition, alignment to the full reference genome should be performed, even for ES and disease-targeted testing, to reduce mismapping of reads from off-target capture, unless appropriate methods are used to ensure unique selection of targets.

D.1.3.3. Variant calling and annotation

Increasing sequencing depth and removing duplicate reads increase the accuracy of single or multiple base variant calling. Specific algorithms may be required to detect insertions and deletions (indels), intragenic or large CNVs, repeat expansions, variants in regions of high homology, mitochondrial variants, and structural chromosomal rearrangements (e.g., translocations, inversions). Local realignment after a global alignment strategy can help call indel variants more accurately.61,62 Large deletions and duplications can be detected by comparing the actual read depth of a region to the expected read depth, by paired-end read mapping (independent reads that are associated with the same library fragment), or from skewed allelic ratios or apparent non-Mendelian transmission of variants. Paired-end and mate-pair (joined fragments brought from long genomic distances) mapping can also be used to identify translocations and other structural rearrangements.
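The read-depth approach to detecting deletions and duplications can be sketched as a per-target ratio between a sample's normalized depth and the median normalized depth of reference samples assumed to be copy-number normal. The function names and the 0.75/1.25 cut-offs are hypothetical; production CNV callers also correct for GC content, mappability, and batch effects, which this sketch omits.

```python
from statistics import median
from typing import Dict, List

Depths = Dict[str, float]  # target (e.g., exon) -> mean read depth


def normalize_depths(depths: Depths) -> Depths:
    """Scale per-target depth by the sample's total so samples sequenced to different depths compare."""
    total = sum(depths.values())
    return {target: d / total for target, d in depths.items()}


def depth_ratios(sample: Depths, references: List[Depths]) -> Depths:
    """Ratio of the sample's normalized depth to the median of reference samples, per target."""
    s = normalize_depths(sample)
    refs = [normalize_depths(r) for r in references]
    return {t: s[t] / median([r[t] for r in refs]) for t in s}


def classify(ratio: float, del_max: float = 0.75, dup_min: float = 1.25) -> str:
    # Hypothetical cut-offs: a heterozygous deletion is expected near 0.5,
    # a heterozygous duplication near 1.5.
    if ratio < del_max:
        return "possible deletion"
    if ratio > dup_min:
        return "possible duplication"
    return "normal"


references = [{f"exon{i}": 100.0 for i in range(1, 11)} for _ in range(3)]
sample = {f"exon{i}": (50.0 if i == 3 else 100.0) for i in range(1, 11)}
ratios = depth_ratios(sample, references)
print({t: classify(r) for t, r in ratios.items() if classify(r) != "normal"})
# {'exon3': 'possible deletion'}
```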

Variant annotation software uses the fraction of reads supporting each allele to differentiate between heterozygous and homozygous sequence variants; however, an unambiguous zygosity call is not always possible, and an unexpected allele fraction could reflect mosaicism (section D.2.4). Software annotates the variant with relevant information such as the genomic coordinates, coding sequence nomenclature, protein nomenclature, and position relative to gene(s) (e.g., untranslated region, exon, intron). Ideally, the annotation will also include additional information from external resources (as discussed in section E) that facilitates determination of its analytic validity and clinical significance, such as quality metrics and allele frequency in internal and external data sets. This information may also include the degree of evolutionary conservation of the encoded amino acid and a prediction of its potential impact on protein function, gene regulation, or RNA splicing using in silico algorithms.
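A zygosity call from read fractions can be sketched as a classification of the variant allele fraction (VAF), with fractions between the expected heterozygous and homozygous ranges flagged for review rather than forced into a genotype. All thresholds are hypothetical, and the sketch assumes autosomal diploid sites; hemizygous and mitochondrial sites need different logic.

```python
def classify_zygosity(
    alt_reads: int,
    total_reads: int,
    het_range: tuple = (0.35, 0.65),
    hom_min: float = 0.85,
    min_depth: int = 20,
) -> str:
    """Rough zygosity call from the variant allele fraction (VAF).

    Thresholds are hypothetical; autosomal diploid sites are assumed.
    """
    if total_reads < min_depth:
        return "insufficient depth"
    vaf = alt_reads / total_reads
    if vaf >= hom_min:
        return "homozygous"
    if het_range[0] <= vaf <= het_range[1]:
        return "heterozygous"
    return "ambiguous: review for mosaicism or artifact"


for alt, total in ((48, 100), (98, 100), (15, 100), (3, 5)):
    print(alt, total, classify_zygosity(alt, total))
```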

D.1.3.4. Phase determination

Two variants that are adjacent or close together are particularly challenging to annotate. While each substitution is typically tabulated separately, the consequence of the two substitutions must be considered together. For example, two changes in the same codon may have a different combined effect on the encoded amino acid than either change considered separately. Bioinformatic pipelines may be adjusted to account for these scenarios, but manual curation may be required. When more than one variant is identified in the same gene, it is important to determine whether they are located on the same chromosome (in cis) or on opposite chromosomes (in trans). If the distance between the variants is less than the read length, phase can likely be determined from standard NGS reads. Phase has traditionally been established by targeted testing of parents or other first-degree relatives or by trio analysis, but newer technologies are available for determining phase from a single individual based on short-read sequencing.63
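Both points can be illustrated in a few lines. The sketch translates a codon with the standard genetic code to show that two substitutions in one codon give a different amino acid together than separately (which applies only if they are in cis), and counts reads spanning both sites as cis or trans evidence. The codon, read observations, and function names are hypothetical examples.

```python
from typing import Dict, List, Optional, Tuple

BASES = "TCAG"
# Standard genetic code (NCBI translation table 1); codons ordered TTT, TTC, TTA, TTG, TCT, ... GGG.
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"


def translate_codon(codon: str) -> str:
    """One-letter amino acid for an uppercase DNA codon ('*' = stop)."""
    i, j, k = (BASES.index(base) for base in codon)
    return AMINO_ACIDS[16 * i + 4 * j + k]


def apply_changes(codon: str, changes: Dict[int, str]) -> str:
    """Apply substitutions given as {0-based position in codon: new base}."""
    bases = list(codon)
    for position, base in changes.items():
        bases[position] = base
    return "".join(bases)


def phase_evidence(reads: List[Tuple[Optional[str], Optional[str]]]) -> Tuple[int, int]:
    """Count reads supporting cis vs. trans for two variant sites.

    Each read is (allele at site 1, allele at site 2) with values "ref", "alt",
    or None when the read does not cover that site.
    """
    cis = sum(1 for a, b in reads if a == "alt" and b == "alt")
    trans = sum(1 for a, b in reads if {a, b} == {"ref", "alt"})
    return cis, trans


codon = "GCT"  # Ala
print(translate_codon(apply_changes(codon, {0: "A"})))          # T (Thr)
print(translate_codon(apply_changes(codon, {1: "A"})))          # D (Asp)
print(translate_codon(apply_changes(codon, {0: "A", 1: "A"})))  # N (Asn), only if in cis
print(phase_evidence([("alt", "alt"), ("ref", "ref"), ("alt", None)]))  # (1, 0)
```

Reads that cover only one of the two sites, such as the last read above, carry no phase information and are ignored.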

D.1.3.5. File formats

Many different formats have existed for the export of raw variants and their annotations. Each variant file format typically includes a definition of the file structure and the organization of the data, specification of the coordinate system being used as part of the file generated (e.g., the reference genome to which the coordinates correspond, whether numbering is 0-based or 1-based, and the method of numbering coordinates for different classes of variants), and the ability to interconvert to other variant formats and software. If sequence read data are provided as the product of an NGS test, they should conform to one of the widely used formats (e.g., BAM files for alignments, FASTQ files for sequence reads) or be readily convertible to a standard format. The variant call format (VCF) is a structured text file format that conveys data about specific positions in the genome as well as meta-information about a given data set. It should be noted that the VCF file format is typically limited to variant calls. Many advocate including reference calls in a genomic VCF (gVCF) file to distinguish the absence of data from reference sequence. Even within this prescribed format, ambiguities can arise when representing complex genetic variants. These ambiguities should be resolved to enable automated duplicate removal and/or variant filtering.64 The CDC has spearheaded an effort to develop a consensus gVCF file format, with specific recommendations regarding reference sequence alignment, variant caller settings, use of genomic coordinates, and gene and variant naming conventions, with the goal of reducing ambiguity in the description of sequence variants and facilitating genomic data sharing.65 Many genomic file formats are now maintained by GA4GH as international standards (https://www.ga4gh.org/genomic-data-toolkit/).
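The coordinate-system point is easy to get wrong in practice: VCF positions are 1-based, whereas BED-style intervals are 0-based and half-open. The minimal parser below reads only the eight fixed VCF columns and converts a record's span to a BED-style interval, using the INFO `END` key for gVCF reference blocks. The function names are hypothetical, and the `<NON_REF>` symbolic allele in the example is illustrative; callers differ in how they mark reference blocks.

```python
from typing import Any, Dict, Tuple, Union


def parse_vcf_line(line: str) -> Dict[str, Any]:
    """Parse the eight fixed VCF columns of one data line (sample columns are ignored)."""
    chrom, pos, _id, ref, alt, _qual, _filter, info = line.rstrip("\n").split("\t")[:8]
    info_fields: Dict[str, Union[str, bool]] = {}
    for field in info.split(";"):
        if "=" in field:
            key, value = field.split("=", 1)
            info_fields[key] = value
        elif field and field != ".":
            info_fields[field] = True  # flag-type INFO entry
    return {"chrom": chrom, "pos": int(pos), "ref": ref, "alts": alt.split(","), "info": info_fields}


def to_bed_interval(record: Dict[str, Any]) -> Tuple[str, int, int]:
    """Convert a record's span to a 0-based, half-open (BED-style) interval.

    VCF POS is 1-based. For a gVCF reference block, INFO END gives the last
    1-based position covered by the block.
    """
    start = record["pos"] - 1
    info = record["info"]
    end = int(info["END"]) if "END" in info else start + len(record["ref"])
    return record["chrom"], start, end


snv = parse_vcf_line("chr1\t100\t.\tA\tG\t50\tPASS\tDP=30")
block = parse_vcf_line("chr1\t1000\t.\tA\t<NON_REF>\t.\t.\tEND=1099")
print(to_bed_interval(snv))    # ('chr1', 99, 100)
print(to_bed_interval(block))  # ('chr1', 999, 1099)
```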

D.1.3.6. Variant filtering processes

Variant filtering pipelines use a variety of approaches to streamline and automate the identification of the reportable variants required for a given test. Initial variant filtration processes should maximize analytical sensitivity and minimize false negatives. Subsequent filtration can then be used to increase specificity. To this end, a laboratory should establish a series of variant inclusion and exclusion filters (i.e., filter in versus filter out) based on conditional properties that will accurately and reliably identify the reportable variants for a given test. While these processes can be automated, it is recommended that a manual process exist for reviewing variants that were filtered out when necessary. Suggestions regarding filtering relative to variant interpretation and specificity are discussed in section E.2.1.
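A filter stage that keeps excluded variants available for manual review can be sketched as follows. The `Variant` fields, filter names, and thresholds are hypothetical placeholders; each laboratory defines its own properties and cutoffs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Variant:
    # Hypothetical minimal record; real pipelines carry many more fields.
    gene: str
    depth: int
    qual: float
    pop_af: float  # population allele frequency, 0.0 if absent


@dataclass
class FilterResult:
    kept: List[Variant] = field(default_factory=list)
    # Excluded variants are retained together with the filters they failed,
    # so that they can be reviewed manually when necessary.
    excluded: List[Tuple[Variant, List[str]]] = field(default_factory=list)


def run_filters(variants,
                filters: Dict[str, Callable[[Variant], bool]]) -> FilterResult:
    """Apply named pass/fail filters; keep a variant only if all pass."""
    result = FilterResult()
    for v in variants:
        failed = [name for name, passes in filters.items() if not passes(v)]
        if failed:
            result.excluded.append((v, failed))
        else:
            result.kept.append(v)
    return result
```

Running a permissive quality stage first and a specificity-oriented stage second mirrors the sensitivity-then-specificity ordering described above; recording every failed filter, rather than only the first, makes later review of excluded variants easier.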

D.1.4. Staff qualifications

Given the technical and interpretive complexity of NGS, reporting and oversight of clinical NGS-based testing should be performed by individuals with appropriate professional training and certification. The laboratory director or qualified designee should have ABMGG certification in Clinical Molecular Genetics and Genomics or Laboratory Genetics and Genomics or American Board of Pathology certification in Molecular Genetic Pathology, or a foreign equivalent. Directors should have extensive experience in the evaluation of sequence variation and evidence for disease causation as well as technical expertise in sequencing technologies and bioinformatics. For laboratories offering ES/GS services, the laboratory should have access to broad clinical genetics expertise to evaluate the relationships between genes, variants, and disease phenotypes.

Expertise within the laboratory must include a detailed working knowledge of the analytical procedures, data interpretation, bioinformatics methods, and data management. For in-house developed assays and analysis pipelines, this breadth of knowledge is required to develop and validate a test. If commercial assay designs or analysis software are used, the laboratory must be able to critically evaluate the data produced.

Many laboratories utilize additional staff to assist in curation of literature and other evidence used in variant assessment before the data are reviewed by the laboratory director or designee. These individuals often have postgraduate education (e.g., PhD, master’s degree in genetic counseling or a related field) and/or additional training in gene–disease association and variant interpretation. Ongoing competency assessment of technical and interpretive staff is recommended. The laboratory director is ultimately responsible for the technical evaluation of the data and the professional interpretation of the variants in the context of the subject’s phenotype.

D.2. Analyzing and optimizing data before validation

Once the scope and method of testing have been chosen, iterative cycles of performance optimization typically follow until all assay conditions and data analysis settings are optimized. Differences in specimens, platforms, and pipelines can produce variability in the resulting data. During optimization, the laboratory must first determine, through a systematic evaluation of the NGS assay, the parameters and minimum thresholds for coverage, base quality, and other test quality metrics that define an acceptable-quality sequencing run for each sample type. This may include analyses at intermediate points during the sequencing run as well as at the completion of the run (e.g., real-time error rate, % of target captured, % of reads aligned, fraction of duplicate reads, average coverage depth, range of insert size). In this phase, the laboratory should track these data over a series of runs that include well-established reference samples with or without synthetic variants. This information is used to establish minimum thresholds for depth of coverage and allelic fraction for variant calling, because both influence analytical sensitivity and specificity. If pooling of samples is planned, the laboratory should also determine the number of samples that can be pooled per sequencing run to achieve these thresholds. Failure to achieve the quality thresholds and other nontechnical design expectations (e.g., baseline costs, turnaround time projections) of an assay should prompt the laboratory to determine whether supplemental procedures or a different assay are required. Before proceeding to test validation, a validation plan and standard operating procedure(s) for the entire workflow should be established.
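As one hypothetical way to turn metrics tracked across optimization runs into provisional acceptance limits, the sketch below uses mean ± k standard deviations, a common statistical QC convention. It assumes the metric is roughly normally distributed across runs, and the choice of `k` is an assumption left to the laboratory. One-sided metrics (e.g., a minimum coverage) would use only the relevant bound.

```python
import statistics


def acceptance_range(values, k: float = 3.0):
    """Provisional (low, high) limits for one QC metric: mean +/- k * SD.

    values: the metric observed across optimization runs (at least two).
    """
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation
    return mean - k * sd, mean + k * sd


def out_of_range(values, limits):
    """Indices of runs whose metric falls outside the limits."""
    low, high = limits
    return [i for i, v in enumerate(values) if not low <= v <= high]
```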

D.2.1. Specimen requirements

NGS may be performed on any specimen that yields DNA (e.g., peripheral blood, saliva, fresh or frozen tissues, cultured cells, formalin-fixed paraffin-embedded [FFPE] tissues, prenatal specimens). However, performance may vary by sample type (e.g., saliva-derived DNA performs more poorly on GS compared with capture-based NGS due to contaminating bacterial DNA; FFPE samples perform poorly on long-read sequencing methods). The laboratory needs to establish the types of specimens and the minimum amount of input DNA required for the particular NGS assay and platform that will be used. The quality of DNA and variant detection requirements will likely differ by specimen type and as such, the laboratory will need to determine acceptable parameters for each type (e.g., volume, amount of tissue, collection device).

Previously extracted genomic DNA may be accepted for testing; however, the original source of the DNA (e.g., blood, saliva) should be determined given the potential differential impact on testing as described above.

D.2.2. DNA requirements and processing

The laboratory should establish the minimum DNA requirements to perform the test. Considerations include the amount of DNA that may be needed for confirmatory and follow-up procedures. The laboratory should have written protocols for DNA extraction and quantification (e.g., fluorometry, spectrophotometry) to obtain adequate quality, quantity, and concentration of DNA. In general, the lower limit of detection (LLoD, the lowest amount of DNA acceptable for a test) is defined as the lowest quantity of an analyte at which at least 95% of true positive variants are detected.46,66 To identify an initial LLoD as well as an upper LoD (ULoD) that yield data within the established quality metrics, serial dilutions of varying DNA input from multiple specimen types can be assessed.
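Reading an initial LLoD off a serial dilution series can be sketched as below, assuming results are summarized as the number of known true positive variants called at each DNA input. The rule that every higher input must also meet the 95% target is a conservative design choice made for this illustration, not a requirement stated in the text.

```python
def lowest_lod(dilution_results, min_sensitivity=0.95):
    """Return the lowest DNA input whose detection rate meets the target.

    dilution_results: {input_ng: (true_positives_called, true_positives_expected)}
    Walks from the highest input downward and stops at the first failure,
    so an isolated passing result at a very low input does not set the limit.
    Returns None if even the highest input fails.
    """
    lod = None
    for amount in sorted(dilution_results, reverse=True):
        called, expected = dilution_results[amount]
        if expected == 0:
            raise ValueError(f"no expected variants at input {amount}")
        if called / expected >= min_sensitivity:
            lod = amount
        else:
            break
    return lod
```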

In addition, the laboratory must determine the LLoD and ULoD for each anticipated variant type, including specimens with mixed content (e.g., mosaicism, chimerism, mitochondrial heteroplasmy). Cell line mixing studies of two well-characterized reference samples (i.e., mixing two pure DNA samples at varying percentages) can aid in these initial evaluations.66 At a minimum, samples at the final LLoD for input, in combination with mixed content, should be represented in the analytic validation to ensure that quality metrics and performance parameters are maintained across the final assay specifications.
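In a two-sample mixing study, the allele fraction expected at each site follows from the mixing proportion. The sketch below assumes equal copy number at the locus in both samples and ignores capture or amplification bias.

```python
def expected_vaf(mix_fraction_a: float, vaf_in_a: float,
                 vaf_in_b: float) -> float:
    """Expected alternate-allele fraction when DNA from A and B is mixed.

    mix_fraction_a: proportion of the mixture's DNA contributed by sample A.
    vaf_in_a / vaf_in_b: allele fraction in each pure sample (0.0 absent,
    0.5 heterozygous, 1.0 homozygous at a diploid locus).
    Assumes equal copy number at the locus in both samples.
    """
    if not 0.0 <= mix_fraction_a <= 1.0:
        raise ValueError("mix_fraction_a must be between 0 and 1")
    return mix_fraction_a * vaf_in_a + (1.0 - mix_fraction_a) * vaf_in_b
```

For example, a variant heterozygous in sample A and absent from sample B is expected at about 5% allele fraction in a 10% A / 90% B mixture, which is the kind of low-level signal used to probe mosaicism detection.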

D.2.3. Coverage

Generally, variant calls become more reliable as the depth of coverage from high-quality sequence reads increases at a given position. Low depth of coverage increases the risk of missing variants (false negatives) and of assigning incorrect allelic states (zygosity), especially in the presence of amplification bias. Low coverage also reduces the ability to distinguish sequencing artifacts from true variants, leading to false positives. Laboratories should establish the minimum depth of coverage necessary to call variants and report analytical performance relative to the minimum coverage threshold that is guaranteed across the defined targeted genes or regions.

To call germline heterozygous variants, a minimum base-calling quality of Q ≥ 20 and a minimum depth of 10× for all nucleotides in the targeted region have been suggested67 (see also https://www.wadsworth.org/regulatory/clep/clinical-labs/obtain-permit/test-approval); however, a 10× depth of coverage may not be sufficient for all variants in all regions. Data suggest that a test intended to detect genetic diseases characterized by a high rate of mosaicism requires 30–50× depth of coverage to detect mosaicism at a level of 10–15%.68 Greater depth further increases confidence in the call.69,70 Detection of mitochondrial heteroplasmy will also require significantly increased coverage depth to ensure detection of variants down to ~5% allele fraction.71
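Why depth requirements rise as the expected allele fraction falls can be illustrated with a simple binomial model. The sketch assumes that alternate-allele reads are sampled independently with probability equal to the allele fraction, and that a caller needs a fixed minimum number of supporting reads (`min_alt_reads`, a hypothetical parameter). It ignores sequencing error, allelic bias, and duplicates, so it gives optimistic lower bounds rather than validated thresholds.

```python
from math import comb


def detection_probability(depth: int, vaf: float, min_alt_reads: int) -> float:
    """P(at least min_alt_reads alternate reads) under Binomial(depth, vaf)."""
    miss = sum(comb(depth, k) * vaf**k * (1 - vaf) ** (depth - k)
               for k in range(min_alt_reads))
    return 1.0 - miss


def min_depth_for(vaf: float, min_alt_reads: int, target: float = 0.95,
                  max_depth: int = 10_000) -> int:
    """Smallest depth at which detection probability reaches the target."""
    for depth in range(min_alt_reads, max_depth + 1):
        if detection_probability(depth, vaf, min_alt_reads) >= target:
            return depth
    raise ValueError("target not reachable within max_depth")
```

Under these assumptions, a heterozygous variant (allele fraction 0.5) at 10× has about a 94.5% chance of being supported by at least 3 reads, and 11× is the smallest depth reaching 95%; repeating the calculation at lower allele fractions shows how quickly the required depth grows for mosaic or heteroplasmic variants.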

The average depth of coverage is a readily obtainable surrogate for overall assay performance. For example, to ensure that at least 95% of bases reach at least 10× coverage, an assay may require a minimum mean depth of coverage of 75–100× for ES or 30× for GS. Note that the desired coverage of the region to be sequenced will impact the number of samples that can be pooled in a sequencing run. It is also important to note that minimum coverage is highly dependent on many aspects of the platform and assay including base call error rates, quality parameters such as how many reads are independent versus duplicate, and other factors such as analytical pipeline performance.72 Therefore, it is not possible to recommend a specific minimum threshold for overall coverage. Laboratories will need to choose minimum coverage thresholds in accordance with quality metrics necessary for analytical validity. Estimating the required depth of coverage based on a laboratory’s specific performance parameters is discussed in Jennings et al.66

Other data quality metrics that are useful include the percentage of reads aligned to the genome, the percentage of reads that are unique (prior to removal of duplicates), the percentage of bases corresponding to targeted sequences, the uniformity of coverage, and the percentage of targeted bases with no coverage. Coverage limitations, such as regions of the genome that are difficult to sequence (section D.1.1.2), should be reported as a technical limitation of the test. Ancillary technologies may complement a final test offering to reduce the impact of these limitations on clinical sensitivity.
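Several of the coverage metrics above can be computed from per-base depths over the targeted bases, as in this sketch. The uniformity measure used here (percentage of bases with depth above 0.2× the mean) is one common definition chosen for illustration; laboratories may define uniformity differently, and the default thresholds are placeholders.

```python
from statistics import mean


def coverage_metrics(depths, thresholds=(10, 20, 30), uniformity_frac=0.2):
    """Summarize per-base read depth over the targeted bases.

    depths: one read depth per targeted base.
    Uniformity is reported as the percentage of bases whose depth exceeds
    uniformity_frac * mean depth (one of several possible definitions).
    """
    n = len(depths)
    if n == 0:
        raise ValueError("no targeted bases")
    avg = mean(depths)
    metrics = {
        "mean_depth": avg,
        "pct_zero": 100.0 * sum(d == 0 for d in depths) / n,
        f"pct_gt_{uniformity_frac}x_mean":
            100.0 * sum(d > uniformity_frac * avg for d in depths) / n,
    }
    for t in thresholds:
        metrics[f"pct_ge_{t}x"] = 100.0 * sum(d >= t for d in depths) / n
    return metrics
```

Reporting the percentage of bases at or above a threshold alongside the mean makes explicit the gap that the text describes between average depth and guaranteed minimum coverage.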

D.2.4. Allelic fraction and zygosity

Germline heterozygous variants are expected to be present in approximately 50% of the reads; however, capture, amplification, or sequencing bias as well as coverage can lead to variability. Laboratories must determine allelic fraction ranges to distinguish true calls from false positive calls, which typically have a low allelic fraction; to assign zygosity; and to detect mosaicism or heteroplasmy. The laboratory should understand how zygosity is defined and presented by the bioinformatics pipeline, and should establish the range of allele fractions needed to make a final zygosity call based on empirical data. Allele fractions should be investigated by orthogonal methods to clarify ambiguous zygosity or potential mosaicism (section D.1.1.3). Coverage of homozygous calls should be reviewed to distinguish true homozygosity from potential hemizygosity due to deletion of the second allele. The performance of different types of variants should be analyzed separately, as their performance may vary. For example, coverage and allelic fraction for indels can be lower when the alignment tool(s) discard indel-containing reads. Finally, laboratories should be aware of genes for which variation in blood may often be confined to blood-forming cells (e.g., TP53, ASXL1),73 even if appearing constitutional, and should consider reporting any detected variation in healthy individuals with a warning of possible mosaicism or clonal hematopoiesis of indeterminate potential (CHIP).
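A provisional zygosity assignment from allele fraction might look like the sketch below. All thresholds (`het_range`, `hom_min`, `min_depth`) are hypothetical placeholders that a laboratory would replace with ranges derived from its own empirical data. Calls outside the expected ranges are routed to review rather than forced into a category.

```python
def assign_zygosity(alt_reads: int, depth: int, ploidy: int = 2,
                    het_range=(0.30, 0.70), hom_min=0.85,
                    min_depth=10) -> str:
    """Assign a provisional zygosity from the alternate-allele fraction.

    Thresholds are placeholders; laboratories set their own from empirical
    data. ploidy=1 covers hemizygous regions (e.g., chrX outside the
    pseudoautosomal regions in XY individuals).
    """
    if depth < min_depth:
        return "insufficient_coverage"
    af = alt_reads / depth
    if ploidy == 1:
        return "hemizygous" if af >= hom_min else "review"
    if af >= hom_min:
        # Coverage should still be reviewed: an apparent homozygote may be
        # hemizygous because the other allele is deleted.
        return "homozygous"
    if het_range[0] <= af <= het_range[1]:
        return "heterozygous"
    # Possible mosaicism, artifact, or allelic bias.
    return "review"
```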

D.2.5. Other factors impacting data quality

The laboratory must identify factors in clinical samples that reduce the quality or quantity of the resulting sequence data. Different NGS library preparation approaches, sequencing chemistries, and platforms carry intrinsic error rates; however, systematic evaluations suggest these rates are extremely low for most platforms (section D.1).74,75 In addition, interfering contaminants can reduce the amount of genomic sequence available for analysis, for example, by reducing the number of reads that map to the human reference genome. Methods to detect, reduce, and monitor these interfering substances and improve the analytical specificity of an assay have been studied previously.76,77,78 If significant interoperator variability is observed during initial optimization, the assay may not be suitable for clinical production.

D.3. Establishing quality control metrics and performance parameters before validation

During preliminary assay development, the laboratory must develop quality control (QC) metrics, based on established performance parameters, to be applied during validation and in production. QC metrics should be chosen to monitor sample and data integrity. Selected QC metrics should demonstrate that each assay meets the required coverage depth and quality for the targeted regions (i.e., genome, exome, or panel) and the variant types identified in the test design.

Examples of metrics used to monitor sample and data integrity:

- Sample preparation failures
- Sequencing run failures
- Cross contamination
- Cluster cross-talk
- Sample identity including familial relationships, if applicable

Examples of metrics used to monitor sample and data integrity per sample and over time:

- Total data yield (gigabases)
- Raw cluster density
- Mean coverage
- Median coverage
- Percent (%) bases ≥Q30 (Phred-scale)
- Percent (%) aligned bases (reference/target dependent)
- Percent (%) duplicate paired reads
- Target coverage at varying depths (10×, 20×, etc.)
- SNV Het/Hom/Hemi/unknown zygosity ratio
- SNV transition/transversion ratio
- Deviations in expected allele fraction
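Two of the metrics listed above, the SNV transition/transversion ratio and a check of per-sample metrics against acceptance limits, can be sketched as follows. The metric names and limits are hypothetical; expected Ti/Tv values differ between genome and exome data, so each laboratory would set its own range.

```python
TRANSITIONS = {("A", "G"), ("G", "A"), ("C", "T"), ("T", "C")}


def ti_tv_ratio(snvs) -> float:
    """Transition/transversion ratio for (ref, alt) single-base SNV pairs."""
    snvs = list(snvs)
    ti = sum((ref, alt) in TRANSITIONS for ref, alt in snvs)
    tv = len(snvs) - ti
    return ti / tv if tv else float("inf")


def qc_failures(sample_metrics: dict, limits: dict) -> list:
    """Names of metrics that are missing or outside their (low, high) limits."""
    failures = []
    for name, (low, high) in limits.items():
        value = sample_metrics.get(name)
        if value is None or not low <= value <= high:
            failures.append(name)
    return failures
```

Treating a missing metric as a failure is a deliberate choice in this sketch, so that a silently skipped QC step cannot pass unnoticed.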

Laboratories should choose, develop, and validate metrics to monitor performance as appropriate for their assays. If necessary, metrics for each sample can also be individually assessed using case-level metrics (sections D.2.3–D.2.5) with respect to the specific test ordered by the provider.

Overall, the laboratory must document that the NGS assay can correctly identify known variants of multiple types. To extrapolate the ability of an assay to detect novel variants, it is necessary to compare internal data to known truth sets representing different variant types within the targeted regions. Increasing the number and diversity of variants tested within the targeted regions increases confidence in the reliability of the assay. It has been suggested that 59 variants of each type (e.g., substitutions, indels, CNVs) be evaluated to confirm that regions covered by the assay can be analyzed with 95% confidence and 95% reliability (section D.4.1).66,79 Detection of variants can be influenced by local sequence context, and therefore a uniformly high sensitivity will not be possible for every possible variant. Nevertheless, the greater the number of variants tested and the larger and more diverse the genomic loci included in this cumulative analysis, the higher the confidence that the assay is detecting variants accurately.80 Variants included in this type of analysis do not need to be pathogenic, as pathogenicity has no bearing on their detectability. However, it could be argued that the absence of large numbers of established pathogenic variants in test development and validation may result in an unanticipated shortcoming of the assay.
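The figure of 59 variants is consistent with the zero-failure binomial ("success-run") calculation: if all n variants are detected, reliability R is demonstrated at confidence C when R^n ≤ 1 − C, i.e., n = ln(1 − C) / ln(R). The sketch below reproduces that arithmetic. It assumes independent variants and no detection failures; it is an illustration of the calculation, not the cited authors' method.

```python
import math


def samples_needed(confidence: float = 0.95, reliability: float = 0.95) -> int:
    """Zero-failure sample size n such that reliability**n <= 1 - confidence."""
    return math.ceil(math.log(1 - confidence) / math.log(reliability))


def reliability_lower_bound(n: int, confidence: float = 0.95) -> float:
    """Lower confidence bound on reliability after n successes, no failures."""
    return (1 - confidence) ** (1 / n)
```

Under these assumptions, `samples_needed()` gives 59 (ln 0.05 / ln 0.95 ≈ 58.4), and the same formula gives 459 for 99% confidence and 99% reliability.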

There are limitations to using truth sets as they contain the most easily assessed variants and may contain false positive variants. The definition of a truth set may also be influenced by cross-platform ascertainment bias or other technical limitations in variant detection (e.g., some regions may have no or low sequence coverage in one NGS assay design versus another).81 It is important to track and investigate discrepancies where there is absent, conflicting, or ambiguous data between the assay under development and the truth set to accurately define the limitations of the assay.

The following performance parameters should be assessed and met during the optimization phase and formally documented during validation: (1) accuracy, (2) precision/reproducibility/robustness, (3) analytical specificity, (4) analytical sensitivity, (5) limit of detection, and (6) clinical sensitivity for each type of variant that the laboratory plans to report. These performance metrics should be determined for the assay as a whole, as well as for each reported variant type and, when appropriate, size (e.g., SNVs, CNVs, insertions and deletions). While parameters may be calculated at the NGS technology level initially, the final reported parameters should be relevant to the full test if multiple technologies are utilized. For example, tests may include both NGS and ancillary technologies, including steps to confirm variants and/or Sanger fill-in for missing data. Some regulatory agencies may require additional validation components.

D.3.1. Accuracy

The accuracy of detection for the different variant types, including different size events within a variant type, should be measured by calculating the positive and negative predictive values, with confidence intervals, based on a given truth set. For example, >99% should be expected for SNVs; however, a range of >95–98% has been used by some laboratories for all variant types. For variant types that lack truth set data (e.g., from Genome in a Bottle),48,81 FDA recommendations for accuracy should be followed.46
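A comparison against a truth set, with predictive values and confidence intervals, can be sketched as follows. The sketch assumes both call sets are already normalized and restricted to the truth set's high-confidence regions, and it approximates true negatives as confident positions with neither a call nor a truth variant. The Wilson score interval is one reasonable choice of interval, used here as an assumption rather than a prescribed method.

```python
import math


def compare_to_truth(calls: set, truth: set, confident_positions: int) -> dict:
    """Confusion counts for variant keys such as (chrom, pos, ref, alt).

    TN is approximated as confident positions carrying neither a call nor
    a truth variant, counting one position per variant key.
    """
    tp = len(calls & truth)
    fp = len(calls - truth)
    fn = len(truth - calls)
    tn = confident_positions - tp - fp - fn
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion (z = 1.96 gives ~95%)."""
    if n == 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def predictive_values(c: dict) -> dict:
    """PPV and NPV, each with a Wilson interval."""
    ppv_n, npv_n = c["TP"] + c["FP"], c["TN"] + c["FN"]
    return {
        "PPV": (c["TP"] / ppv_n, wilson_interval(c["TP"], ppv_n)),
        "NPV": (c["TN"] / npv_n, wilson_interval(c["TN"], npv_n)),
    }
```

Reporting the interval alongside the point estimate matters most for small variant classes: 59 of 59 correct calls give a PPV of 100% but a 95% lower bound of only about 94%.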

D.3.2. Precision/reproducibility/robustness

The laboratory should document the assay’s precision (repeatability and reproducibility) based upon truth sets. For example, the laboratory should run a single library or sample preparation on 2–3 lanes or wells within the same run (repeatability, within-run variability) as well as 2–3 different runs (reproducibility, between-run variability). Other meaningful measures of reproducibility are instrument-to-instrument variability (running samples on 2–3 different instruments if available) and interoperator variability. Complete concordance of results is unlikely for NGS technologies; however, the laboratory should establish parameters for sufficient repeatability and reproducibility. Depending on the complexity of the assay, some number of differences in calls is expected; however, all differences in calls between runs should be investigated and explained as part of the validation.
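Listing every discordant call between replicates, so that each difference can be investigated, can be sketched as below. Replicates are assumed to be sets of normalized variant keys, and concordance is defined here as shared calls divided by the union, one of several reasonable definitions.

```python
from itertools import combinations


def replicate_discordance(replicates: dict) -> dict:
    """Pairwise comparison of replicate call sets.

    replicates: {replicate_name: set of normalized variant keys}
    Returns {(name_a, name_b): (only_in_a, only_in_b, concordance)},
    where concordance = shared calls / union of calls.
    """
    out = {}
    for a, b in combinations(sorted(replicates), 2):
        ra, rb = replicates[a], replicates[b]
        union = ra | rb
        concordance = len(ra & rb) / len(union) if union else 1.0
        out[(a, b)] = (ra - rb, rb - ra, concordance)
    return out
```

The same function can compare within-run replicates (lanes or wells), between-run replicates, or runs on different instruments; only the labels change.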

An assay’s robustness (likelihood of assay success) should be monitored, with adequate QC measures in place to assess success at critical points such as library preparation and immediately following sequencing (section G).

D.3.3. Analytical specificity and sensitivity

In the genomic context, analytical specificity is the proportion of positions matching the reference sequence in a particular sample that are correctly identified as matching the reference (i.e., not called as variants). During the validation, truth sets should be analyzed to determine the analytical specificity of the assay. The false positive (FP) rate can be calculated as 1 – specificity. If variant calls are confirmed by an ancillary method, the technology-specific FP rate is less critical unless it generates an amount of confirmatory testing per sample that is not sustainable for the laboratory. In general, the specificity of the assay should be >98% at a minimum.

Analytical sensitivity is the proportion of variants correctly identified as different from the reference sequence in a particular sample. The false negative rate can be calculated as 1 – sensitivity. In general, the sensitivity of the assay should at a minimum be >98%.
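The definitions above translate directly into code. The sketch takes confusion counts (for example, from a truth-set comparison) and checks them against the >98% minimum quoted in the text. Note that when specificity is computed per genomic position, true negatives vastly outnumber false positives, so specificity is usually very close to 1; this is one reason predictive values are also reported.

```python
def sensitivity_specificity(tp: int, fp: int, fn: int, tn: int,
                            minimum: float = 0.98) -> dict:
    """Sensitivity, specificity, and the corresponding error rates."""
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one true variant and one true negative")
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    return {
        "sensitivity": sens,
        "false_negative_rate": 1 - sens,
        "specificity": spec,
        "false_positive_rate": 1 - spec,
        "meets_minimum": sens > minimum and spec > minimum,
    }
```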

D.3.4. Minimum input DNA requirement and limit of detection of the test

LoD and LLoD were discussed in section D.2.2. When possible, samples with known allelic imbalances confirmed by orthogonal methods should be included to validate the LoD. Note that some licensing entities, including CAP, require the inclusion of patient samples in LoD validation (CAP MOL.36118). The LLoD value is used to help set limits for the detection of mosaicism, chimerism, or mixed specimens, as well as thresholds for sample admixture or cross-talk associated with automated pipetting instruments and sample indexing.

D.3.5. Clinical sensitivity/specificity

For disease-specific targeted panels, the lab should establish the estimated clinical sensitivity of the test based upon a combination of analytical performance parameters and the known contribution of the targeted set of genes and types of variants detectable for that disease. Clinical specificity can be maximized by limiting or excluding genes with limited or disputed evidence related to the phenotype, thereby minimizing detection of VUS. For ES/GS of individuals with undiagnosed (and possibly nongenetic) disorders, it is not feasible to calculate a theoretical clinical sensitivity or specificity for the test given its dependency on the applications and indications for testing.19,20,82,83
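A deliberately simplified model of the estimated clinical sensitivity combines the fraction of affected individuals explained by each gene and variant type with the full test's analytical sensitivity for that variant type. The sketch assumes these contributions are mutually exclusive and additive. The input structure is hypothetical, and real estimates require curated, disease-specific data.

```python
def estimated_clinical_sensitivity(gene_contributions: dict,
                                   analytical_sensitivity: dict) -> float:
    """Sum of (fraction of cases explained) x (analytical sensitivity).

    gene_contributions: {gene: {variant_type: fraction of affected cases}}
    analytical_sensitivity: {variant_type: sensitivity of the full test};
    variant types the test cannot detect are treated as 0.
    Assumes contributions are mutually exclusive and additive.
    """
    total = 0.0
    for per_type in gene_contributions.values():
        for vtype, fraction in per_type.items():
            total += fraction * analytical_sensitivity.get(vtype, 0.0)
    return total
```

Setting a variant type's sensitivity to zero shows directly how much clinical sensitivity is lost when, for example, CNVs are not assessed by the test.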

D.4. Test validation

Once test content, assay conditions, and pipeline configurations have been established as outlined in sections D.2 and D.3, a validation plan should be prepared and executed from start to finish on all permissible sample types. Assay performance characteristics including analytical sensitivity and specificity, accuracy, and precision should meet thresholds predetermined in the validation plan (section D.3).66,80,84

D.4.1. Sample consideration for validation

Performance data across tests using the same platform can be combined to establish a cumulative “platform” performance. By maximizing the number and types of variants tested across a broad range of genomic regions and across all acceptable specimen types, confidence intervals can be established. Because the size of NGS tests makes validation of every base impossible, this approach enables extrapolation of performance parameters to novel variant discovery within the boundaries of the established regions.

For example, when using standard short-read technologies that produce ~150 bp reads, the common types of variants required to be evaluated can be grouped by the methodology used for variant calling. Although there will be overlap, an “indel” is typically an event that falls within the targeted capture probe and sequencing read length, whereas a “CNV” is an event that spans more than a single capture probe and exceeds the sequencing read length. Detection of larger indels or CNVs is highly dependent on sequence context, with deletions typically being easier to detect than duplications. Because performance for specific events cannot be predicted, testing a variety of events (e.g., type, size, position in captured region) across different genes or regions of interest is important. Alternatively, long-read technologies may allow larger indel events (>100 bp) to be detected by variant calling methods, and validation samples would need to reflect this.
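Binning validation variants by the definitions above can be sketched as follows. The 150 bp read length comes from the text, but the 120 bp probe length is a hypothetical default. Events that fall between the two definitions are flagged so they can be included in both the indel and CNV validation sets.

```python
def validation_bin(kind: str, size_bp: int, read_length: int = 150,
                   probe_length: int = 120) -> str:
    """Assign a variant to an illustrative validation bin.

    kind: 'substitution', 'deletion', 'insertion' or 'duplication'.
    size_bp: number of bases substituted, deleted, inserted, or duplicated.
    probe_length is a hypothetical default; use the assay's actual design.
    """
    if kind == "substitution":
        return "SNV" if size_bp == 1 else "MNV"
    if kind not in {"deletion", "insertion", "duplication"}:
        raise ValueError(f"unknown variant kind: {kind}")
    if size_bp <= min(read_length, probe_length):
        return "indel"
    if size_bp > max(read_length, probe_length):
        return "CNV"
    # Between the two definitions: include in both validation sets.
    return "indel/CNV boundary"
```

For long-read assays, the same function could be called with a much larger `read_length`, which moves more events into the indel bin, as the text anticipates.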

If the spectrum of genomic variation related to a specific disease includes types of variation outside those covered by NGS testing, then ancillary methods should be employed to capture these. Issues related to accurate sequencing of highly homologous regions should be addressed when one or more genes within the test have known pseudogenes or other homologous loci. If high clinical sensitivity is based on the ascertainment of particular common pathogenic variants, these should be included in the validation. Alternatively, the test report can note a limitation if ancillary methods are not used.

The first test developed by a laboratory generally requires a more comprehensive validation than subsequent tests developed on the same platform using the same basic bioinformatics pipeline design. In practice this may entail sequencing a larger number of samples in order to test a sufficient number of each variant type (section D.3). Note that current “truth” sets of a few samples (e.g., Genome in a Bottle samples)48,81 often encompass most of these variant types across the genome and are recommended to be included. Importantly, they are a renewable resource that can aid in monitoring test performance over time and after modifications (section D.1.2). Additionally, specific licensing entities may require a minimum number of samples for validation. For example, NYS guidelines require that initial NGS validations for a laboratory include at least 25 samples (NYS NGS20). In an effort to ensure regulatory compliance, CAP and AMP have created spreadsheet resources to guide laboratories through the test validation process3 (https://www.cap.org/member-resources/precision-medicine/next-generation-sequencing-ngs-worksheets).

D.4.2. Bioinformatics

The bioinformatic pipeline must produce the expected results, starting from the data produced by the sequencing equipment. The laboratory should establish the reproducibility of a chosen analytic pipeline, such that a given standard input should produce the same output each time it is run. Additional limitations of the pipeline, such as lack of precision in repetitive regions, may be identified during validation and should be discussed in the validation summary.
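One simple way to check that a pipeline produces the same output for the same input is to compare digests of the variant records from repeated runs. The sketch assumes uncompressed VCF output and skips `##` meta-information lines, because these often record run dates or command lines that legitimately differ between otherwise identical runs. The function names are hypothetical.

```python
import hashlib


def vcf_body_digest(path: str) -> str:
    """SHA-256 of a VCF's header row and records, ignoring '##' meta lines."""
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for line in fh:
            if not line.startswith(b"##"):
                h.update(line)
    return h.hexdigest()


def outputs_identical(paths) -> bool:
    """True if every VCF in `paths` has identical records."""
    return len({vcf_body_digest(p) for p in paths}) == 1
```

A mismatch only shows that outputs differ; locating the differing records (for example, with a set comparison of variant keys) is the next step in the investigation.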

D.4.3. Evaluation of quality metrics

QC metrics established in the prevalidation stage for each assay (see section D.3) should be monitored throughout the validation. Deviations from expected values should be investigated to determine whether procedural changes should be implemented during the test validation phase. These deviations should be discussed in the validation summary.

D.4.4. Transfer to production

Final assessment of all established performance parameters must be summarized to support the transfer of a clinical test into production. Any deviations or additional limitations to the test’s performance identified during the validation should be described in the validation summary and reflected in the final approved version of the test. If a test fails multiple performance parameters, reoptimization and subsequent validation studies may be necessary. Once a test is validated for production, it is recommended that 2–3 test samples be initiated through the production workflow to identify any unanticipated issues.

D.5. Validating modified components of a test or platform

D.5.1. Version control

Improvements and adjustments to a test are expected over time but must be validated and documented through version control. The version control system should record the dates and times at which changes are implemented in order to accurately track clinical test performance. Versions of the test and/or its components should be represented in the methods section of the clinical report (section E.4.2).
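Recording when each version entered production makes it possible to determine which version applied to any given run or report. The sketch below is a minimal, hypothetical version log; real laboratories would typically keep this in a laboratory information management system or other controlled records.

```python
import bisect
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TestVersion:
    version: str          # hypothetical label, e.g. panel and pipeline versions
    effective: datetime   # date and time the change entered production
    note: str = ""


class VersionLog:
    """Append-only record of test versions, queryable by date."""

    def __init__(self):
        self._versions = []

    def add(self, v: TestVersion) -> None:
        if self._versions and v.effective <= self._versions[-1].effective:
            raise ValueError("versions must be added in chronological order")
        self._versions.append(v)

    def active_at(self, when: datetime) -> TestVersion:
        """Version in production at `when` (a change takes effect at its timestamp)."""
        times = [v.effective for v in self._versions]
        i = bisect.bisect_right(times, when) - 1
        if i < 0:
            raise LookupError("no version was in production at that time")
        return self._versions[i]
```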

D.5.2. Modified assay conditions, reagents, instruments, and analytical pipelines

Changes in reagents, hardware, and software that can alter the accuracy of the final test result (e.g., new sequencing chemistry, new instruments, new lots of capture reagents, new software versions) must be validated before they are put into production. The process may require an end-to-end test validation with previously analyzed specimens or well-characterized controls. Ideally, the same renewable, well-characterized samples (e.g., a HapMap sample, Genome in a Bottle NIST sample) should be used. Determination of the analytical performance and other parameters, such as coverage, should be carried out as outlined in the prior sections. New reagent lots and shipments should be compared with previous reagent lots or with suitable reference material before, or concurrent with, their use in production. Software changes may be validated using the laboratory’s existing data, which can obviate the need for an end-to-end validation.

Although data storage is costly, additional or nonstandard analysis of historical data is recommended to identify the strengths and weaknesses of the analytical pipelines over time and can aid in future test development. Note that any alteration or improvement of the pipeline should prompt revalidation; however, the extent of the validation can be commensurate with the complexity or magnitude of the changes. For example, changes in alignment and base calling would warrant a more extensive validation than updates to versions of downloaded databases.

D.5.3. Added/modified test content

When modifying the content of a validated test, an abbreviated end-to-end or in silico validation (3–10 samples) may be sufficient. Such modifications include adding the analysis of genes in a gene panel test using a previously validated capture library, platform, and pipeline design; employing a new capture library using a validated platform and pipeline design; and updating the equipment or assay (e.g., reagents, bioinformatics, or software updates) in a previously validated panel. Separate validation documentation should be generated and the date of implementation into production must be documented. Gene content of panels should be examined every 6 months to determine whether new data suggest the addition of new genes or the removal of others4 (section A.2.1).

D.6. Considerations for a distributive testing model

Some components of a laboratory test may be performed by an outside entity (e.g., sequencing is performed by one laboratory, but the analysis and reporting are performed by a different laboratory). Laboratories should ensure that the outside entity has similar accreditation (CAP/CLIA) and licensure, uses aligned validation and quality metrics, and provides a mechanism through which to report procedural and quality deviations. Per CAP guidance, the validation of any test using this model should include an integrated validation including wet bench and bioinformatics. Any distributive testing should be addressed in the laboratory’s quality management (QM) plan, and should include the types of data to be transferred as well as a system for monitoring trends in the data.

E. REPORTING STANDARDS

E.1. Turnaround times

The laboratory should have written standards for NGS test prioritization and turnaround times (TATs) that are transparent and readily accessible. These TATs should be clinically appropriate and allow for rapid testing when warranted. Laboratories should also have a notification plan in place to alert the ordering providers when a result is expected to be significantly delayed.

E.2. Data filtering and interpretation

E.2.1. Variant filtering

In traditional disease-targeted testing, the number of identified variants is typically small enough to allow for the individual assessment of all variants in each sample, once common benign variants are curated. However, ES identifies tens of thousands of variants and GS identifies several million, making this approach to variant assessment impossible. A filtering approach must be applied for ES/GS studies. Laboratories may need to employ autoclassification strategies for very large disease-targeted panels. Laboratories must also balance overfiltering that could inadvertently exclude causative variants with underfiltering that presents too many variants for expert analysis. A stepwise approach is generally necessary. For example, an initial filter-step identifies benign variants and those that are obviously disease-causing followed by other filtering driven by phenotypic associations and inheritance patterns. Regardless of the approach, laboratories should describe their methods of variant filtering and assessment, including the limitations of each method.
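A later, inheritance-driven step of such a stepwise approach can be sketched as below. The sketch assumes that variants have already passed frequency and consequence filters and carry a zygosity label. The three simplified models are illustrative only. For the recessive model, two heterozygous variants in a gene are accepted without checking phase, which, as discussed in section D.1.3.4, must still be established.

```python
from collections import defaultdict


def candidate_genes(variants, model: str) -> set:
    """Genes consistent with a simplified inheritance model.

    variants: iterable of (gene, zygosity) for variants that passed earlier
    filters; zygosity is 'het', 'hom' or 'hemi'.
    model: 'AD' (any het), 'AR' (a hom, or two or more hets; phase not
    checked here), or 'XL' (hemizygous).
    """
    if model not in {"AD", "AR", "XL"}:
        raise ValueError(f"unsupported model: {model}")
    by_gene = defaultdict(list)
    for gene, zygosity in variants:
        by_gene[gene].append(zygosity)
    genes = set()
    for gene, zygs in by_gene.items():
        if model == "AD" and "het" in zygs:
            genes.add(gene)
        elif model == "AR" and ("hom" in zygs or zygs.count("het") >= 2):
            genes.add(gene)
        elif model == "XL" and "hemi" in zygs:
            genes.add(gene)
    return genes
```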

The filtering algorithm design may differ across case types, and requires a high level of expertise in genetics and disease diagnosis. This expertise should include a full understanding of the limitations of the databases against which the individuals’ results are being filtered as mentioned previously and the limitations of both the sequencing platform and multiple software applications being used to generate the variants analyzed. Individuals leading these analyses should have extensive experience in the evaluation of sequence variation and evidence for disease causation, as well as an understanding of the molecular and bioinformatics pitfalls that are encountered.

E.2.1.1. Known and benign variant filtering

Sources of broad population frequencies that can be used for autoclassification of benign variation include dbSNP (www.ncbi.nlm.nih.gov/projects/SNP), the National Heart, Lung, and Blood Institute (NHLBI) Exome Sequencing Project (ESP, evs.gs.washington.edu/EVS), the 1000 Genomes Project (1000G, www.1000genomes.org), and the Genome Aggregation Database (gnomAD, http://gnomad.broadinstitute.org/). To account for sampling variance arising from the finite number of individuals in each gnomAD population, precomputed frequency estimates designated as the filtering allele frequency (FAF) have been made available. The FAF is a conservative, lower-bound statistical estimate of a variant's true allele frequency in a given population at either 95% or 99% confidence.85 Laboratories can use these allele frequencies to determine whether a variant's presence in these populations exceeds the maximum credible disease allele frequency. Determining the maximum credible disease allele frequency for a given gene requires extensive knowledge of the prevalence of all related diseases, inheritance patterns, pathogenic variant (i.e., allelic) heterogeneity, and other factors. Moreover, frequency thresholds should be set conservatively to account for the possibility of undocumented reduced penetrance at the gene or variant level, and for the possible inclusion in population databases of individuals who have not been phenotyped, who have asymptomatic or undiagnosed disease, or who have a known disease. Examples of assessing these frequency cutoffs, which can enable rapid autoclassification of benign variants, have been published.86,87
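The comparison between a FAF and a maximum credible allele frequency can be illustrated with a short calculation. The sketch below assumes a one-sided Poisson model for the observed allele count (in the spirit of, but not guaranteed identical to, the gnomAD computation) and a simplified model for a dominant condition in which the maximum credible allele frequency equals prevalence × maximum allelic contribution × genetic contribution ÷ (2 × penetrance). All function names and example inputs are hypothetical.

```python
import math

def poisson_upper_tail(k, lam):
    """P(X >= k) for X ~ Poisson(lam), assuming k >= 1 and lam > 0."""
    # Sum the pmf for 0..k-1 in log space to avoid underflow, then subtract.
    log_terms = [-lam + i * math.log(lam) - math.lgamma(i + 1) for i in range(k)]
    m = max(log_terms)
    cdf = math.exp(m) * sum(math.exp(t - m) for t in log_terms)
    return max(0.0, 1.0 - cdf)

def filtering_allele_frequency(ac, an, confidence=0.95, iters=100):
    """One-sided lower confidence bound on allele frequency: the largest AF at
    which observing `ac` or more alleles among `an` would have probability
    <= 1 - confidence (illustrative sketch)."""
    if ac == 0:
        return 0.0
    alpha = 1.0 - confidence
    lo, hi = 0.0, float(ac)  # for confidence >= 0.5 the tail exceeds alpha at lam = ac
    for _ in range(iters):   # bisection on the expected allele count
        mid = (lo + hi) / 2
        if poisson_upper_tail(ac, mid) <= alpha:
            lo = mid
        else:
            hi = mid
    return lo / an

def max_credible_af_dominant(prevalence, max_allelic_contribution,
                             genetic_contribution, penetrance):
    """Simplified upper bound on a causal allele's frequency (dominant model)."""
    # Affected fraction attributable to one variant, scaled by 1/penetrance to
    # include unaffected carriers, then halved: carriers hold 1 of 2 alleles.
    return (prevalence * max_allelic_contribution * genetic_contribution
            / (2 * penetrance))

faf = filtering_allele_frequency(ac=25, an=100_000)
threshold = max_credible_af_dominant(1 / 500, 0.02, 1.0, 0.5)
exceeds_threshold = faf > threshold
```

In this example the FAF exceeds the illustrative threshold, so under these assumptions the variant would be a candidate for benign autoclassification; the threshold inputs themselves still require expert, gene-specific review.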

Variants previously associated with disease, whether in general variant databases such as ClinVar, in publications, or in locus-specific databases, are generally flagged for further assessment regardless of population frequency. The DECIPHER database (https://decipher.sanger.ac.uk/browser) can provide a general overview of a gene, with a graphical representation of protein domains and the distribution of reported population and disease-associated variants. While several expert-curated databases exist (e.g., ENIGMA, INSiGHT, CFTR2), laboratories should not assume that these resources have assessed pathogenicity correctly, as few are curated to a clinical grade with strict evidence-based consensus assessment of supporting data.88 The FDA has recognized the ClinGen human variant data set through its Human Variant Database program (https://www.fda.gov/news-events/press-announcements/fda-takes-new-action-advance-development-reliable-and-beneficial-genetic-tests-can-improve-patient). This recognition signifies that the ClinGen variant data set (inclusive of the curated evidence and assertions of pathogenicity) is a scientifically valid evidence source, so its data and assertions can be used by test developers to support the clinical validity of their tests. Note, however, that the intent of the FDA's human variant database program is to support test development; laboratories are still expected to independently assess the pathogenicity of every reported variant and not to rely solely on external databases and other resources, especially given the ongoing evolution of variant knowledge. Users can access the ClinGen variant data set via ClinVar (https://www.ncbi.nlm.nih.gov/clinvar/) and the evidence supporting those assertions via the ClinGen Evidence Repository (https://erepo.clinicalgenome.org/evrepo/).

E.2.1.2. Additional patient-centric filtering of ES/GS data

The ordering health-care provider must provide detailed phenotypic information to assist the laboratory in the analysis and interpretation of test results. This is most important for panels that include a large number of genes and for ES/GS. The laboratory's interpretation and prioritization of variants may be enhanced by an iterative process in which health-care providers reassess the individual for specific clinical features of potential diagnoses suggested by the sequence data. Just as laboratorians consider the phenotype when prioritizing variants, health-care providers should engage with the laboratory if ES/GS is nondiagnostic. A discussion of the individual's phenotype may guide the reporting of variants with questionable phenotypic fit.89 This is especially relevant for young children, who may not yet manifest all the diagnostic features of a syndrome. For a detailed discussion of this topic, please review the ACMG statement regarding the dissemination of phenotypes in the context of clinical genetic and genomic testing.16

To employ phenotype-centric filtering accurately, laboratories should maintain and regularly update lists of genes, with associated levels of evidence (https://www.clinicalgenome.org/), connecting them to discrete phenotypes and/or conditions using a structured ontology (e.g., Human Phenotype Ontology, OMIM disease ID, MedGen). These lists should be reviewed at least every 6 months.4 Storing patient phenotypes using a structured ontology is also recommended to enable more rapid analysis of data using phenotype-centric filtering. In addition, external collaborative resources such as Matchmaker Exchange (www.matchmakerexchange.org) can help identify and refine gene–disease relationships.90
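Phenotype-centric ranking against a curated gene list can be sketched as below. The evidence labels follow ClinGen gene–disease validity terms, but the accepted set, the roughly 6-month review check, the placeholder gene names, and the overlap-count scoring are illustrative assumptions rather than a recommended algorithm.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass(frozen=True)
class GeneEntry:
    """Hypothetical entry in a laboratory's curated gene list."""
    gene: str
    evidence: str         # ClinGen-style term, e.g., "Definitive", "Limited"
    hpo_terms: frozenset  # HPO IDs linked to the gene's condition(s)

ACCEPTED_EVIDENCE = {"Definitive", "Strong", "Moderate"}  # assumed lab policy
REVIEW_INTERVAL = timedelta(days=183)                     # roughly 6 months

def list_needs_review(last_reviewed: date, today: date) -> bool:
    """True if the gene list is older than the review interval."""
    return today - last_reviewed > REVIEW_INTERVAL

def rank_genes(entries, patient_hpo):
    """Rank well-supported genes by the number of shared patient HPO terms."""
    scored = []
    for e in entries:
        if e.evidence not in ACCEPTED_EVIDENCE:
            continue  # excluded from this ranking; needs a separate strategy
        overlap = len(e.hpo_terms & patient_hpo)
        if overlap:
            scored.append((-overlap, e.gene))  # negate so larger overlap sorts first
    return [gene for _, gene in sorted(scored)]

entries = [
    GeneEntry("GENE_A", "Definitive", frozenset({"HP:0001250", "HP:0001263"})),
    GeneEntry("GENE_B", "Limited", frozenset({"HP:0001250"})),
    GeneEntry("GENE_C", "Strong", frozenset({"HP:0000252"})),
]
# Seizure, global developmental delay, microcephaly
patient = frozenset({"HP:0001250", "HP:0001263", "HP:0000252"})
ranked = rank_genes(entries, patient)
```

Storing both gene annotations and patient phenotypes as ontology identifiers is what makes a simple set intersection like this possible; free-text phenotypes would first need to be mapped to terms.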

When reviewing genes with less well-defined gene–disease relationships, the laboratory must apply additional strategies for variant filtering. While causative variants for Mendelian disorders are generally assumed to be rare and highly penetrant,91 the recognized range of penetrance and expressivity continues to expand. Successfully identifying the molecular basis of a rare disorder may depend on the indication for testing and on alternative analysis strategies, such as choosing appropriate family members for comparison given a suspected mode of inheritance. The ultimate goal is to reduce the number of variants that a skilled analyst must examine for relevance to the clinical phenotype. Variants may be included or excluded based on factors including presence in a phenotype-associated gene list, presumed inheritance pattern in the family (e.g., biallelic if recessive or hemizygous if X-linked), variant type (e.g., truncating, copy number), presence or absence in control populations, observation of de novo occurrence (if the phenotype is sporadic in the context of a dominant disorder), rare homozygous variants, gene expression pattern, algorithmic scores for in silico assessment of protein function or splicing impact, and biological pathway analysis.
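Inheritance-based filtering with trio data can be sketched as follows. The `TrioCall` record, the alt-allele-count genotype encoding, and the mode labels are hypothetical; the rules are deliberately simplified (for example, they assume an unaffected father and ignore mosaicism, low coverage, and copy number).

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class TrioCall:
    """Hypothetical trio genotype; counts are alt alleles (0, 1, or 2)."""
    variant_id: str
    gene: str
    chrom: str
    proband: int
    mother: int
    father: int

def inheritance_modes(calls, proband_is_male):
    """Label each proband variant with the inheritance patterns it fits."""
    modes = defaultdict(list)
    hets_by_gene = defaultdict(list)
    for c in calls:
        if c.proband == 0:
            continue
        if c.mother == 0 and c.father == 0:
            modes[c.variant_id].append("de_novo")
        if proband_is_male and c.chrom == "X":
            # A son's X comes from his mother; assume an unaffected father
            # should not carry a causal X-linked variant.
            if c.father == 0:
                modes[c.variant_id].append("x_linked_hemizygous")
            continue  # biallelic patterns do not apply to a single X
        if c.proband == 2 and c.mother >= 1 and c.father >= 1:
            modes[c.variant_id].append("homozygous_recessive")
        elif c.proband == 1:
            hets_by_gene[c.gene].append(c)
    # Compound heterozygous candidates: at least one maternal-only and one
    # paternal-only heterozygous variant in the same gene (phased by parents).
    for hets in hets_by_gene.values():
        maternal = [c for c in hets if c.mother >= 1 and c.father == 0]
        paternal = [c for c in hets if c.father >= 1 and c.mother == 0]
        if maternal and paternal:
            for c in maternal + paternal:
                modes[c.variant_id].append("compound_het_candidate")
    return dict(modes)

calls = [
    TrioCall("v1", "GENE_A", "2", 1, 0, 0),  # absent in both parents
    TrioCall("v2", "GENE_B", "5", 1, 1, 0),  # maternal heterozygous
    TrioCall("v3", "GENE_B", "5", 1, 0, 1),  # paternal heterozygous
    TrioCall("v4", "GENE_C", "X", 1, 1, 0),  # maternal X variant in a son
    TrioCall("v5", "GENE_D", "7", 2, 1, 1),  # homozygous, carrier parents
    TrioCall("v6", "GENE_E", "3", 1, 1, 1),  # het in proband and both parents
]
modes = inheritance_modes(calls, proband_is_male=True)
```

Variants that fit none of the modeled patterns (such as `v6`) are simply absent from the result; in practice such variants might still be reviewed under other hypotheses, such as reduced penetrance.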

E.2.2. Variant classification

Classification of medically relevant sequence variants is not a fully automated process and requires specialized training in evaluating gene–disease association and variant evidence (section D.1.4). Potentially clinically relevant variants should be evaluated and classified according to best practices as outlined in the ACMG/AMP guidelines.92 Additional general guidance documents developed by ClinGen (https://clinicalgenome.org/working-groups/sequence-variant-interpretation/) and gene- and disease-specific specifications may be applied to refine variant classifications and reduce discrepant classifications among laboratories (https://clinicalgenome.org/docs/?doc-type=publications#list_documentation_table). Variant evaluation should include an evidence-based assessment of the pathogenicity of the variant as well as of its potential role in the individual's phenotype. The evidence for the clinical validity of the particular gene in the patient's disease, as documented by resources such as OMIM (https://www.omim.org/) and ClinGen (https://clinicalgenome.org/curation-activities/gene-disease-validity/), should also be considered when weighing potential clinical significance. For tests that cover a broad range of phenotypes (e.g., cardiomyopathy or intellectual disability), as well as for ES/GS, the correlation between the phenotypes known to be associated with the variant and the individual's phenotype should be assessed. If multiple variants of potential clinical significance are identified, the interpretation should discuss the likely relevance of each variant to the phenotype, including the possibility of concurrent diagnoses resulting in a blended phenotype, and prioritize the variants accordingly. Individualized clinical interpretation of laboratory findings goes beyond the standard technical analysis of results and classification of analytical findings; developing such an interpretation may require communication with the ordering health-care provider to obtain and review relevant medical and/or family history information.
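Once a trained analyst has assigned ACMG/AMP criteria and strengths, the bookkeeping of combining them can be illustrated with a naturally scaled point system that has been proposed for ACMG/AMP criteria (supporting = 1, moderate = 2, strong = 4, very strong = 8 points, with benign criteria subtracting). The point values and category thresholds below are assumptions taken from that proposal and should be checked against current ClinGen guidance; the sketch only tallies evidence codes and does not replace expert assignment of those codes.

```python
# Points per evidence strength in a proposed naturally scaled point system;
# benign criteria contribute negative points.
POINTS = {"very_strong": 8, "strong": 4, "moderate": 2, "supporting": 1}

def tally_classification(criteria):
    """criteria: (code, direction, strength) tuples assigned by an analyst,
    where direction is "pathogenic" or "benign". Returns (label, points)."""
    if any(code == "BA1" for code, _, _ in criteria):
        return "Benign", None  # BA1 is stand-alone evidence for benign
    total = sum(POINTS[s] if d == "pathogenic" else -POINTS[s]
                for _, d, s in criteria)
    if total >= 10:
        return "Pathogenic", total
    if total >= 6:
        return "Likely pathogenic", total
    if total >= 0:
        return "Uncertain significance", total
    if total >= -6:
        return "Likely benign", total
    return "Benign", total
```

For example, a very strong criterion plus one supporting criterion totals 9 points, which falls in the likely pathogenic range under these assumed thresholds.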

Laboratories should deposit interpreted variants, with supporting evidence and the criteria applied for assessment, into the ClinVar database (http://www.ncbi.nlm.nih.gov/clinvar). Public deposition of this information enables the identification of interpretation differences and allows knowledge to be rapidly shared and built upon to improve diagnosis and care.93,94,95,96,97 Laboratories should have policies, consistent with emerging professional guidance,4,93 for reporting variants in genes with limited or no known disease association (https://clinicalgenome.org/docs/?doc-type=curation-activity-procedures&curation-procedure=gene-disease-validity); these policies should align with the intended use of the test (e.g., a diagnostic gene panel versus ES/GS).

E.3. Reporting of secondary or unanticipated findings

ES/GS tests may generate sequence information that is not immediately associated with the individual's phenotype and family history as provided. The terms “incidental” and “secondary” findings are used to describe such clinically significant variants, depending on whether they were discovered unintentionally (incidental) or deliberately sought (secondary). Reporting recommendations have been published.98,99,100 While laboratories are not limited to the genes recommended by ACMG, any deviations from this gene list should be disclosed. Laboratories should carefully consider which variants to report as secondary findings.101,102 Guidance documents describing how laboratories should proceed after detecting unanticipated findings such as consanguinity and misattributed parentage have also been published.103,104
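Restricting secondary findings to a defined gene list and documenting deviations from the ACMG list can be sketched as below. The gene set shown is an illustrative subset only, not the ACMG list; the laboratory list, the placeholder gene `GENE_X`, and the function names are hypothetical. The sketch assumes only pathogenic and likely pathogenic variants are reported as secondary findings.

```python
# Illustrative subset only: the full, current ACMG SF gene list must be taken
# from the latest published ACMG secondary findings recommendation.
ACMG_SF_SUBSET = frozenset({"BRCA1", "BRCA2", "MLH1", "MSH2", "LDLR", "KCNQ1"})
REPORTABLE = {"Pathogenic", "Likely pathogenic"}

def secondary_findings(variants, lab_gene_list):
    """variants: iterable of (gene, classification) tuples."""
    return [(g, c) for g, c in variants if g in lab_gene_list and c in REPORTABLE]

def list_deviations(lab_gene_list, reference=ACMG_SF_SUBSET):
    """Genes the laboratory added to or omitted from the reference list."""
    return {"added": sorted(set(lab_gene_list) - reference),
            "omitted": sorted(reference - set(lab_gene_list))}

lab_list = (ACMG_SF_SUBSET - {"KCNQ1"}) | {"GENE_X"}
deviations = list_deviations(lab_list)
found = secondary_findings(
    [("BRCA2", "Pathogenic"), ("LDLR", "Uncertain significance"),
     ("KCNQ1", "Likely pathogenic")],
    lab_list,
)
```

Generating the deviation summary from the same list that drives filtering helps keep the disclosed gene list consistent with what was actually analyzed.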

E.4. Written report

Creating reports for audiences of different backgrounds is challenging; a report for a health practitioner may differ from a report for a lay audience.105 All laboratory reports should adhere to federal (42 CFR § 493.1291), state, and regulatory (CAP, CLIA) standards. Primary findings in a diagnostic test should appear as a succinct interpretive result at the beginning of the report indicating the presence or absence of variants consistent with the phenotype. Laboratories may use statements such as “positive,” “abnormal,” or “clinically important finding” to describe detection of a variant that explains the clinical findings (primary findings) or a medically actionable variant. “Negative” indicates that no variants relevant to the phenotype were identified. “Uncertain” or “see report” signals uncertainty regarding the connection between the phenotype and the variant(s) reported. Variants should be prioritized according to their relevance to the phenotype. When reporting a gene associated with a treatable genetic disorder, the laboratory should consider adding a reference to the treatment in the report;106 this is currently recommended for cancer testing reports.107 Any additional findings (e.g., secondary findings, carrier status, pharmacogenomic variants) may be included in separate sections as appropriate. If other family members are tested to assist with interpretation of the variants found in the proband (e.g., trio analysis), only the minimum information required to interpret the variants and comply with Health Insurance Portability and Accountability Act (HIPAA) regulations should be provided in the proband's report. Specific names and detailed phenotypic descriptions should be avoided.
As an example, the following statements would be appropriate: “Parental studies demonstrate that the variants are on separate copies of the gene, with one inherited from each parent” or “Segregation studies showed consistent inheritance of the variant with the disease in three additional affected family members.” Sample reports are included in the Supplemental Material as examples of some ways to provide the content recommended above. The details in these reports are provided as examples only. All report details are ultimately left to the discretion of the laboratory director.
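The ordering of report content described above (a headline result first, then primary findings, then separate sections for additional findings) can be sketched as follows. The `Finding` record, the section names, and the rule mapping findings to “Positive,” “Uncertain,” or “Negative” are hypothetical simplifications of a laboratory policy, not a prescribed format.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    """Hypothetical reported finding; `explains_phenotype` is analyst judgment."""
    gene: str
    classification: str
    explains_phenotype: bool
    section: str = "primary"  # "primary", "secondary", "carrier", or "pgx"

SECTION_ORDER = ["primary", "secondary", "carrier", "pgx"]

def summary_result(findings):
    """Headline result for the top of the report (illustrative policy)."""
    primary = [f for f in findings if f.section == "primary"]
    if any(f.explains_phenotype and
           f.classification in {"Pathogenic", "Likely pathogenic"}
           for f in primary):
        return "Positive"
    return "Uncertain" if primary else "Negative"

def report_sections(findings):
    """Group genes by report section, with primary findings first."""
    return {s: [f.gene for f in findings if f.section == s]
            for s in SECTION_ORDER if any(f.section == s for f in findings)}

findings = [
    Finding("GENE_A", "Likely pathogenic", True),
    Finding("BRCA2", "Pathogenic", False, "secondary"),
]
headline = summary_result(findings)
sections = report_sections(findings)
```

Under this policy a secondary finding alone does not change the headline result, which keeps the summary focused on whether the testing indication was addressed.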

E.4.1. Variant reporting