Abstract

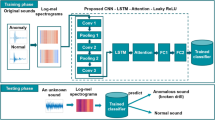

To establish a sound quality evaluation model for a roller chain transmission system, we collect its running noise under different working conditions. After the noise samples are preprocessed, a group of experienced testers evaluates them subjectively. The Mel frequency cepstral coefficients (MFCC) of each noise sample are calculated, and the MFCC feature map is used as the objective evaluation. Combining the subjective and objective evaluation results yields the original dataset for the sound quality study. However, the number of high-quality noise samples is relatively small. Building on sound quality research for several chain transmission systems, we propose a novel method called multi-source transfer learning convolutional neural network (MSTL-CNN). By transferring knowledge from multiple source tasks to the target task, it addresses the difficulty of small-sample sound quality prediction. Whereas single-source transfer learning produces large errors on some samples, MSTL-CNN combines the strengths of all the transfer learning models. The results also show that the proposed MSTL-CNN is significantly better than the traditional sound quality evaluation methods.

Introduction

Studies have shown that long-term exposure to noise can seriously affect people’s mental and physical health. Mechanical noise not only causes irritability but can also damage hearing and even raise the risk of heart disease [1,2]. As a basic mechanical component, the roller chain is widely used in automobiles and motorcycles and directly affects the noise quality of the whole machine. Users now expect greater acoustic comfort, so evaluating the noise of the roller chain transmission system is particularly important. However, previous studies on roller chain noise mainly focus on noise characteristics and the noise generation mechanism [3,4]. Because research on its sound quality is lacking, this paper carries out a series of noise tests to establish a sound quality evaluation model for the roller chain system.

Sound quality research generally comprises subjective and objective evaluation and explores the listener's subjective response to noise, mainly the degree of comfort. Most of this research concerns automobiles, where researchers generally use acoustic parameters (A-weighted sound pressure level, loudness, sharpness, roughness, fluctuation strength, articulation index and tonality) as the objective evaluation. Following a specific subjective evaluation method, testers are organized to carry out an auditory evaluation of the noise samples. Researchers then often use machine learning and neural network methods to build a sound quality evaluation model with the objective evaluation as input and the subjective evaluation as output [5,6,7]. Li and Huang used multiple linear regression to relate subjective discomfort to acoustic parameters; their results show that loudness and sharpness have the greatest influence on the comfort of micro commercial vehicles [8]. Wang et al. proposed an overall annoyance level modeling method for pure electric vehicles and established a nonlinear mapping between psychoacoustic indicators and sound quality based on the extreme gradient boosting algorithm [9]. When the input features are severely multicollinear, the accuracy and performance of the sound quality evaluation model are reduced. The convolutional neural network (CNN) has strong feature extraction ability and is widely used in image processing. To apply CNNs to sound quality research, various feature maps are constructed as the objective evaluation of noise [10,11]. The Mel frequency cepstral coefficient (MFCC) is a commonly used feature representation in sound signal processing and has been shown to distinguish sound quality [12].
With the MFCC feature map as model input, the CNN clearly outperforms the traditional sound quality modeling methods. The studies above trace the development of sound quality research and the improvement of modeling methods in different application scenarios. However, improving the accuracy of a sound quality prediction model remains difficult when the number of samples is insufficient. Moreover, the constant pursuit of prediction accuracy inevitably leads to increasingly complex models, so lightweight sound quality prediction models are also important.

To establish the sound quality evaluation model of the roller chain transmission system, we first collect the running noise of the system under different working conditions and preprocess the noise samples. Second, we organize a group of testers to evaluate the noise samples subjectively and construct feature maps as the objective evaluation. To address small-sample sound quality evaluation, we identify and exploit the fuzziness in subjective sound quality evaluation and propose a data augmentation method called fuzzy generation. However, fuzzy generation can cause a particular kind of data leakage, and we use transfer learning to eliminate this effect. The basic idea of transfer learning is to accelerate learning on the target task by using the knowledge learned in one or more source tasks. This is usually done by taking a model already trained on one task as a starting point and then fine-tuning it to fit the new task [13,14,15,16]. For single-source tasks, Jamil et al. proposed an instance-based deep transfer learning method for wind turbine gearbox fault detection that prevents negative transfer [17]. Maschler et al. proposed a modular deep learning algorithm for anomaly detection on time series datasets, which realizes deep industrial transfer learning [18]. For multi-source tasks, Rajput et al. used multi-source sensing data and a fuzzy convolutional neural network for fault classification and prediction, and the accuracy of their model is significantly superior to that of other machine learning and deep learning methods [19]. Sun et al. proposed a new parameter transfer method to improve training performance in multi-task reinforcement learning [20]. Based on the sound quality study of three chain transmissions (silent chain, Hy-Vo chain and dual-phase Hy-Vo chain), we propose a new method named multi-source transfer learning convolutional neural network (MSTL-CNN).
The results show that the proposed model is superior to the traditional sound quality prediction methods.

Data collection and pre-processing

Figure 1 shows the steps of the sound quality evaluation test, including noise acquisition and processing, subjective evaluation test and objective evaluation.

Noise test

For the chain transmission system, the roller chain type is 06B, the driving sprocket has 19 teeth, and the driven sprocket has 38 teeth. The noise sensor (MINIDSP UMIK-1) is placed at the same height as the center of the driving sprocket, and the noise test is carried out in a closed indoor reverberation field. The measurement distance between the noise sensor and the chain system is set to 0.5 m and 1 m. The speed range is 500–4000 rpm, and the three loads are 500 N, 600 N and 750 N. Starting from the lowest speed (500 rpm), noise is collected at every 500 rpm step, and each recording lasts longer than 30 s. We record the noise audio with Adobe Audition 2022 at a sampling rate of 48,000 Hz. In total we obtain 2 × 8 × 3 = 48 noise samples (two distances, eight speeds and three loads), and a 5 s fragment is randomly extracted from each sample for subsequent processing.
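The sample count follows directly from the test matrix. The short sketch below enumerates the conditions named above; the variable names are illustrative and not taken from the authors' tooling.

```python
from itertools import product

distances_m = [0.5, 1.0]                   # two measurement distances
speeds_rpm = list(range(500, 4001, 500))   # 500, 1000, ..., 4000 rpm
loads_n = [500, 600, 750]                  # three loads

# One recording per (distance, speed, load) combination.
conditions = list(product(distances_m, speeds_rpm, loads_n))

SAMPLE_RATE_HZ = 48_000
CLIP_SAMPLES = 5 * SAMPLE_RATE_HZ          # length of each 5 s fragment in samples

print(len(speeds_rpm), len(conditions), CLIP_SAMPLES)
```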

Figure 2 compares the noise of the roller chain transmission system with that of a silent chain transmission system. The green time-domain waveform on the left is the roller chain system, and the blue one on the right is the silent chain system. Under the same load and measurement distance, the noise of the roller chain system is stronger than that of the silent chain from low speed to high speed. At the low speed of 1000 rpm, the noise energy of the roller chain system is clearly stronger than that of the silent chain. At 2500 rpm, the noise energy of the roller chain system decreases, which shows that it works more smoothly at medium speed, although its noise is still stronger than that of the silent chain system. At the high speed of 4000 rpm, the noise energy of both systems increases significantly, and the noise of the roller chain system is slightly stronger than that of the silent chain system. Because the noise characteristics of the roller chain system differ from those of the silent chain system, a dedicated sound quality evaluation model for the roller chain transmission system is needed.

Subjective evaluation test

We use the equal-interval direct one-dimensional evaluation as the subjective evaluation method, and the noise discomfort level describes the sound quality [21]. As shown in Fig. 3, there are five discomfort levels on a scale from 0 to 10, where 0 means extreme discomfort and 10 means no discomfort. Each of the three intermediate levels spans three scores, which grade the strength of discomfort within that level from smallest to largest. Twelve healthy testers with driving experience take part in the auditory perception test, with a men-to-women ratio of 5:1. As shown in Fig. 1d, each tester wears a hi-fi headset, and the same noise audio is played five times with the Groove software. The sound pressure level of the test environment does not exceed 30 dB, and the tester records a score in the table after listening to each noise audio.

The subjective evaluation scores at each speed are shown in Fig. 4. Judging by the median line, the score shows an overall downward trend as the speed increases. In the speed range of 500–3000 rpm, the sound quality of the roller chain system decreases with increasing speed. At 3500 rpm, however, the score increases, indicating that the roller chain system runs more smoothly at this speed. At the limit speed of 4000 rpm, the score drops significantly. A longer box indicates that the scores at that speed vary more, and there is even an outlier at 2000 rpm. For the same sample, the twelve testers should have relatively consistent perceptions, so a normality test and a correlation test are carried out on the subjective evaluation results.

Because the sample size is small, the Shapiro–Wilk test is used to test the normality of the scores of each sample, as shown in Fig. 5. If the p-value is greater than 0.05, the scores are consistent with normality, which is represented by a blue circle; a red circle indicates that the sample does not conform to the normal distribution. Many of the evaluation results do not conform to the normal distribution, so the Spearman correlation test is needed to further analyze the subjective evaluation results. The formula for the Spearman correlation test is as follows:

$$r = \frac{\sum\nolimits_{i} (x_{i} - \overline{x})(y_{i} - \overline{y})}{\sqrt{\sum\nolimits_{i} (x_{i} - \overline{x})^{2} \sum\nolimits_{i} (y_{i} - \overline{y})^{2}}} \tag{1}$$

where \(x_{i}\) and \(y_{i}\) represent the corresponding elements of the two variables (their ranks, for the Spearman coefficient), and \(\overline{x}\) and \(\overline{y}\) represent the average values of the corresponding variables. The larger the r value, the stronger the correlation. The test results are shown in Fig. 6.

In Fig. 6, Ti (i = 1, 2,…,12) represents the number of the twelve testers. The correlation between many testers is less than 0.7, and the weakest correlation between T9 and T6 is only 0.40. To screen reasonable subjective evaluation results, we calculated the average correlation coefficient (ACC) of each tester based on Fig. 6, as shown in Table 1.

It can be seen from Table 1 that the ACC of T9 is less than 0.7, so if the results of T9 are excluded, the evaluation results of the remaining testers are all reasonable. The average evaluation scores of the remaining eleven testers are calculated as the final subjective evaluation results, as shown in Table 2.
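The screening step can be sketched in a few lines. The code below is a minimal illustration, not the authors' implementation: it computes the Spearman coefficient as the Pearson correlation of average ranks and assumes that a tester's ACC is the mean of that tester's coefficients with all other testers (whether the self-correlation is included is not stated in the text). The scores are made-up toy values.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den


def spearman(x, y):
    """Spearman coefficient: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))


def average_correlation(scores):
    """scores[t] holds the scores tester t gave to every sample (assumed layout)."""
    acc = {}
    for a in scores:
        others = [spearman(scores[a], scores[b]) for b in scores if b != a]
        acc[a] = sum(others) / len(others)
    return acc


# Toy scores for four hypothetical testers on six samples.
toy_scores = {
    "T1": [8, 7, 6, 5, 4, 3],
    "T2": [9, 7, 6, 4, 4, 2],
    "T3": [7, 8, 5, 5, 3, 2],
    "T4": [3, 6, 4, 7, 5, 8],   # deliberately inconsistent with the others
}
acc = average_correlation(toy_scores)
least_consistent = min(acc, key=acc.get)
print(least_consistent)
```

In the study itself, the same comparison against the 0.7 threshold is what singles out T9.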

Objective evaluation

The Mel frequency cepstral coefficient (MFCC) is based on the characteristics of the human auditory system. The sensitivity of the human ear to different frequencies is non-linear: it is more sensitive to low-frequency sounds and less sensitive to high-frequency sounds. The Mel scale is a frequency scale based on the human ear’s perception of pitch, and it converts the physical frequency into the Mel frequency. MFCC is a powerful feature representation because it captures the main characteristics of speech signals compactly and, to some extent, simulates the characteristics of human auditory perception [22,23,24]. The calculation steps of MFCC are as follows:

(1) The original signal is pre-emphasized: the high-frequency part is boosted to compensate for the loss it may suffer during transmission.

$$y(t) = x(t) - \alpha x(t - 1) \tag{2}$$

where x(t) is the original signal, y(t) is the pre-emphasized signal, and α usually takes 0.95 or 0.97.

(2) The signal is divided into frames of N milliseconds, and the data of each frame is windowed. The window function is usually a Hamming window:

$$\omega (n) = 0.54 - 0.46 \cdot \cos (2\pi n/N), \quad 1 < n < N \tag{3}$$

(3) The frequency spectrum is obtained by applying the fast Fourier transform to the data of each frame. A set of Mel filters (usually a triangular filter bank) is applied to the spectrum to simulate the perceptual properties of the human ear. Each filter \(H_{m}(k)\) is defined as:

$$H_{m} (k) = \begin{cases} 0, & k < f(m - 1) \\ \dfrac{k - f(m - 1)}{f(m) - f(m - 1)}, & f(m - 1) \le k \le f(m) \\ \dfrac{f(m + 1) - k}{f(m + 1) - f(m)}, & f(m) \le k \le f(m + 1) \\ 0, & k \ge f(m + 1) \end{cases} \tag{4}$$

where f(m) is the central frequency of the filter on the Mel scale. The Mel scale transformation is:

$$M(f) = 2595 \cdot \log_{10} \left( 1 + \frac{f}{700} \right) \tag{5}$$

where M(f) is the representation of the frequency f on the Mel scale.

(4) The logarithm of the filter bank output is taken.

$$S(m) = \log \left( \sum\nolimits_{k = 0}^{K - 1} \left| X(k) \right|^{2} \cdot H_{m} (k) \right) \tag{6}$$

where X(k) is the spectrum of the frame.

(5) After the logarithm of the filter bank output is taken, the discrete cosine transform is applied to obtain the final MFCC.

$$C(n) = \sum\nolimits_{m = 0}^{M - 1} S(m) \cdot \cos \left[ \frac{\pi n}{M}\left( m + \frac{1}{2} \right) \right], \quad n = 1,2,...,L \tag{7}$$

where C(n) is the n-th MFCC and L represents the order of the MFCC, generally 12–16.
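The five steps of Eqs. (2)–(7) can be traced in a compact, dependency-free sketch. This is an illustrative implementation under stated assumptions, not the authors' code: it uses a naive DFT in place of an FFT, non-overlapping frames that drop any incomplete final frame, 26 Mel filters, a window index running from 0 to N−1, and a small constant inside the logarithm. None of these choices is specified in the text, and the demo signal is a synthetic tone at 8 kHz rather than the 48 kHz recordings.

```python
import cmath
import math


def pre_emphasis(x, alpha=0.97):
    # Eq. (2): y(t) = x(t) - alpha * x(t - 1); the first sample is kept as is.
    return [x[0]] + [x[t] - alpha * x[t - 1] for t in range(1, len(x))]


def hamming(n_samples):
    # Eq. (3), evaluated at n = 0..N-1 (the index range is an assumption).
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / n_samples)
            for n in range(n_samples)]


def power_spectrum(frame):
    # Naive DFT standing in for the FFT; returns |X(k)|^2 for k = 0..N/2.
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))) ** 2
            for k in range(n // 2 + 1)]


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)        # Eq. (5)


def mel_to_hz(m):
    return 700.0 * (10 ** (m / 2595.0) - 1.0)          # inverse of Eq. (5)


def mel_filterbank(n_filters, n_fft, sample_rate):
    # Centre bins f(m) equally spaced on the Mel scale, then Eq. (4).
    top = hz_to_mel(sample_rate / 2)
    mels = [top * i / (n_filters + 1) for i in range(n_filters + 2)]
    bins = [int((n_fft + 1) * mel_to_hz(m) / sample_rate) for m in mels]
    bank = []
    for m in range(1, n_filters + 1):
        lo, mid, hi = bins[m - 1], bins[m], bins[m + 1]
        row = [0.0] * (n_fft // 2 + 1)
        for k in range(lo, mid):
            row[k] = (k - lo) / (mid - lo)              # rising edge
        for k in range(mid, hi):
            row[k] = (hi - k) / (hi - mid)              # falling edge
        bank.append(row)
    return bank


def mfcc_frame(frame, bank, order=12):
    windowed = [s * w for s, w in zip(frame, hamming(len(frame)))]
    spec = power_spectrum(windowed)
    # Eq. (6); the 1e-12 guard against log(0) is not part of the source formula.
    log_e = [math.log(sum(p * h for p, h in zip(spec, row)) + 1e-12)
             for row in bank]
    m_total = len(log_e)
    # Eq. (7) for n = 1..L
    return [sum(log_e[m] * math.cos(math.pi * n / m_total * (m + 0.5))
                for m in range(m_total))
            for n in range(1, order + 1)]


def mfcc(signal, sample_rate, frame_ms, n_filters=26, order=12):
    frame_len = int(sample_rate * frame_ms / 1000)
    emphasized = pre_emphasis(signal)
    bank = mel_filterbank(n_filters, frame_len, sample_rate)
    frames = [emphasized[i:i + frame_len]
              for i in range(0, len(emphasized) - frame_len + 1, frame_len)]
    return [mfcc_frame(f, bank, order) for f in frames]


# Demo: 0.1 s of a 440 Hz tone at 8 kHz, 20 ms frames.
demo = [math.sin(2 * math.pi * 440 * t / 8000) for t in range(800)]
features = mfcc(demo, sample_rate=8000, frame_ms=20)
print(len(features), len(features[0]))
```

Each row of `features` holds the L = 12 coefficients of one frame, so stacking the rows gives an F × 12 feature map of the kind used as CNN input.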

In this paper, the MFCC order L is 12, and the frame length N is taken as 20 ms, 25 ms and 30 ms in turn. The 5 s noise sample is accordingly divided into F = 250, 200 and 167 frames. Based on Eqs. (2)–(7), we calculate three MFCC feature maps for each noise sample. The objective evaluation results form the input feature space, and the subjective evaluation provides the labels of the noise samples. As shown in Fig. 7, the MFCC feature map is constructed as the input of the sound quality evaluation model. To compare with the traditional modeling method of sound quality evaluation, we also select six acoustic parameters as objective evaluation. As illustrated in Fig. 8, the Audio Toolbox in MATLAB is used to calculate these six parameters: A-weighted sound pressure level (A-SPL), loudness, sharpness, roughness, fluctuation strength and articulation index (AI).
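The three frame counts follow from dividing the 5 s clip by the frame length. The sketch below assumes non-overlapping frames and that a partial last frame is kept (for example by padding), which is what the value 167 for 30 ms frames implies; the text does not state the padding rule explicitly.

```python
CLIP_MS = 5000      # 5 s noise sample
MFCC_ORDER = 12     # L in Eq. (7)

# Ceiling division: a partial final frame still counts as one frame.
frame_counts = {ms: (CLIP_MS + ms - 1) // ms for ms in (20, 25, 30)}
feature_map_shapes = {ms: (f, MFCC_ORDER) for ms, f in frame_counts.items()}

print(frame_counts)         # {20: 250, 25: 200, 30: 167}
print(feature_map_shapes)
```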

Methodology

Transfer learning (TL) is especially useful when data is scarce, because it allows models to leverage existing knowledge and reduces the need for large amounts of labeled data. TL can be divided into four categories according to the technical approach: instance-based TL, feature-based TL, model-based TL and relation-based TL [25,26]. Instance-based TL directly uses the data instances of the source task to assist learning on the target task, which usually involves reweighting the source data to better fit the target task. Feature-based TL learns feature representations that can be transferred between source and target tasks. Model-based TL directly transfers the model parameters of the source task to the target task and adjusts them. Relation-based TL suits situations where both source and target tasks involve relational data, such as knowledge graphs or social networks. This classification helps to explain the wide applicability of TL and guides the selection of an appropriate transfer learning strategy for a specific problem.

In this paper, we take the sound quality evaluation of the roller chain transmission system as the target task and, as an example, the sound quality study of the silent chain transmission system as the source task. The source task and the target task are the same in feature space and data space, but their data distributions differ. We choose the model-based TL approach, in which the model parameters of the source task are used as the initialization parameters of the target task model [27]. The fine-tuning process can be represented by the following formula:

$$\theta_{target} = \theta_{source} + \Delta \theta \tag{8}$$

where θtarget is the model parameter of the target task, θsource is the model parameter of the source task, and Δθ is the parameter adjustment on the target task. As shown in Fig. 9, by stacking the prediction results of the three target models, the final sound quality evaluation model can be obtained.

As a basic model, the convolutional neural network (CNN) is generally used for classification tasks. To model sound quality evaluation, the number of nodes in the output layer is set to 1, and a continuous value is obtained without a nonlinear activation function. The convolutional layers at the front of the CNN can be regarded as a feature extractor, as shown in Fig. 10. The source model has three convolution layers (Conv), one max pooling layer, one flatten layer, and three fully connected layers (FC). The max pooling layer uses a stride of 2 with zero padding, and the convolution layers use a stride of 1 without zero padding. The three FC layers have 1024, 128 and 1 nodes, respectively. To avoid overfitting, dropout is applied in FC1 with a dropout rate of 0.5. The output layer is the last layer, and its output is the evaluation score. Except for the last layer, all layers use the ReLU activation function. Some studies have shown that the structures of the source model and the target model should be similar [28]. Therefore, in this paper, the structural parameters of the target model are the same as those of the source model. When the 167 × 12 MFCC feature map is used as input, the structural parameters of the source model are as shown in Table 3. The transfer learning process from the source model to the target model can be summarized as follows: first, the source model is trained on the source task; then the feature extractor of the source model is reused in the target model. Based on the samples of the target task, the parameters of the new fully connected layers are trained in the target model.
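The parameter transfer described above amounts to θtarget = θsource + Δθ with Δθ = 0 on the reused feature extractor. The toy sketch below illustrates only that bookkeeping: the layer names, weight values and the plain gradient step are hypothetical stand-ins (the paper trains real CNN layers with the Adam optimizer).

```python
# Layers of the feature extractor that are copied from the source model and frozen.
FROZEN_LAYERS = {"conv1", "conv2", "conv3"}


def init_target(source_params, new_fc_params):
    """Copy the source feature extractor and attach freshly initialised FC layers."""
    target = {name: list(w) for name, w in source_params.items()
              if name in FROZEN_LAYERS}
    target.update({name: list(w) for name, w in new_fc_params.items()})
    return target


def gradient_step(params, grads, lr):
    """theta <- theta - lr * grad on trainable layers; frozen layers keep delta = 0."""
    for name, w in params.items():
        if name in FROZEN_LAYERS:
            continue
        params[name] = [wi - lr * gi for wi, gi in zip(w, grads[name])]
    return params


# Hypothetical toy weights; a real model holds tensors, not short lists.
source = {"conv1": [0.2, -0.1], "conv2": [0.05], "conv3": [0.3],
          "fc1": [0.7, 0.1], "fc2": [0.4], "out": [0.9]}
new_fc = {"fc1": [0.0, 0.0], "fc2": [0.0], "out": [0.0]}

target = init_target(source, new_fc)
grads = {name: [1.0] * len(w) for name, w in target.items()}
gradient_step(target, grads, lr=0.003)

print(target["conv1"], target["fc1"])
```

After the step, the convolution weights still equal the source values while the new fully connected layers have moved, which is the behaviour the frozen feature extractor is meant to guarantee.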

We choose the sound quality studies of three chain transmission systems as the source tasks: silent chain, Hy-Vo chain, and dual-phase Hy-Vo chain. To train the source models better, we need to expand the datasets of these three source tasks. Fuzzy generation is a data augmentation method based on the fuzziness in the subjective evaluation of sound quality. After the correlation test, all the retained subjective evaluation scores are reasonable, yet researchers usually keep only their mean as the final score. If the mean score is taken as the most correct result, the accuracy of every other score can be defined relative to it. Based on this uncertainty in the subjective evaluation results, we introduce fuzzy mathematics to quantify and handle the fuzziness of the problem. Fuzzy mathematics is an effective mathematical tool for dealing with uncertainty and fuzziness. By introducing the concepts of fuzzy sets and fuzzy logic, it allows mathematical modeling of the inaccurate or incomplete information that is prevalent in the real world [29,30,31]. Drawing on these ideas, fuzzy generation is proposed to expand the datasets. First, we define a fuzzy map on the evaluation score interval as follows:

$$M:\; I \to [0,1], \quad s \mapsto M(s) \tag{9}$$

where I is the score range [0, 10], M is the fuzzy interval of I, and M(s) is the membership function.

We treat the average score as having a membership of 1, while the minimum score and the maximum score both have a membership of 0. After constructing different membership functions, the membership degrees of different sizes are selected to divide the fuzzy generation interval. In the fuzzy generation interval, a suitable perturbation method is selected to generate a sufficient number of new samples. In this paper, three membership functions (cusp, ridge and normal) are constructed, and the formula is as follows:

where c is the core point (the average score), m is the left boundary point (the minimum score), n is the right boundary point (the maximum score), r is a random generation point, and M(sr) is the membership of r. Equation (10) is the cusp membership function, Eq. (11) is the ridge membership function, and Eq. (12) is the normal membership function. We take three samples of the roller chain transmission system as an example, and the three membership functions are shown in Fig. 11. In this paper, we use random perturbation to triple the size of the original dataset with three membership degrees (0.9, 0.7 and 0.5).
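The mechanics of fuzzy generation can be illustrated with a deliberately simple stand-in. The sketch below uses a triangular membership function, which is NOT one of the paper's three functions (cusp, ridge, normal), because it makes the interval for a given membership level easy to write in closed form. It also assumes m < c < n and uniform random perturbation inside the interval; the score values are invented.

```python
import random


def triangular_membership(s, m, c, n):
    """Membership 1 at the core c, falling linearly to 0 at m and n (stand-in)."""
    if s <= m or s >= n:
        return 0.0
    if s <= c:
        return (s - m) / (c - m)
    return (n - s) / (n - c)


def generation_interval(m, c, n, level):
    """Scores whose triangular membership is at least `level`."""
    return m + level * (c - m), n - level * (n - c)


def fuzzy_generate(m, c, n, level, count, rng):
    """Draw `count` perturbed scores uniformly from the generation interval."""
    lo, hi = generation_interval(m, c, n, level)
    return [rng.uniform(lo, hi) for _ in range(count)]


# Hypothetical sample: minimum score 5.0, mean score 6.5, maximum score 8.0.
rng = random.Random(0)
new_scores = {level: fuzzy_generate(5.0, 6.5, 8.0, level, count=3, rng=rng)
              for level in (0.9, 0.7, 0.5)}

for level, scores in new_scores.items():
    print(level, [round(s, 3) for s in scores])
```

A higher membership level narrows the interval around the mean score, so the generated labels stay closer to the original one; a lower level allows larger perturbations.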

Results and analysis

Based on three source tasks (with 168 samples) and one target task, we build three transfer learning models: Source model 1 (silent chain)–Target model 1, Source model 2 (Hy-Vo chain)–Target model 2, and Source model 3 (dual-phase Hy-Vo chain)–Target model 3. For both the source model and the target model, the MFCC feature map is the input and the evaluation score is the output. Since a large dataset is obtained through fuzzy generation, a simple split is enough to obtain a reliable evaluation. Therefore, both the source model and the target model are tested with a training-test split: 83% of each dataset is randomly selected for training and 17% for testing. Both models use the Adam optimizer with the root mean squared error as the loss function. The initial learning rate of the source model is 0.005, and the number of epochs is set to 200. After each source model is trained on the source task, the part comprising the convolution layers, pooling layer and flatten layer is regarded as the feature extractor, and the parameters of these layers are frozen. By connecting the feature extractor with a new input layer, new fully connected layers, a new dropout layer and a new output layer, we finally obtain three target models. The target model is trained on the dataset of the roller chain system with an initial learning rate of 0.003 and 100 epochs. During training, only the parameters of the new fully connected layers are updated, and with the help of the feature extractor the model converges in fewer epochs. Each final result is the mean of five training runs, and since there are 3 kinds of feature maps, 3 membership functions and 3 membership values, each transfer learning model needs to be trained 5 × 3 × 3 × 3 = 135 times in total.
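The count of 135 training runs per transfer learning model can be checked by enumerating the experimental grid. The labels below are illustrative strings, not identifiers from the authors' code.

```python
from itertools import product

feature_maps = ["250x12", "200x12", "167x12"]       # 20, 25 and 30 ms frames
membership_functions = ["cusp", "ridge", "normal"]
membership_values = [0.9, 0.7, 0.5]
REPEATS = 5                                          # runs averaged per setting

settings = list(product(feature_maps, membership_functions, membership_values))
runs = [(fm, mf, mv, rep) for fm, mf, mv in settings for rep in range(REPEATS)]

print(len(settings), len(runs))
```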
Three indicators: correlation coefficient (R), root mean squared error (RMSE) and mean absolute error (MAE) are selected to evaluate the transfer learning model, and the formulas are as follows:

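$$R = \frac{\sum\nolimits_{i = 1}^{n} (x_{i} - \overline{x})(y_{i} - \overline{y})}{\sqrt{\sum\nolimits_{i = 1}^{n} (x_{i} - \overline{x})^{2} \sum\nolimits_{i = 1}^{n} (y_{i} - \overline{y})^{2}}} \tag{13}$$

$$RMSE = \sqrt{\frac{1}{n}\sum\nolimits_{i = 1}^{n} (x_{i} - y_{i})^{2}} \tag{14}$$

$$MAE = \frac{1}{n}\sum\nolimits_{i = 1}^{n} \left| x_{i} - y_{i} \right| \tag{15}$$

where n is the number of samples, \(x_{i}\) is the predicted value of the sample, \(y_{i}\) is the true value of the sample, and \(\overline{x}\) and \(\overline{y}\) are their means. R measures the degree of linear correlation between two variables. Its value lies between −1 and 1, where 1 means a completely positive correlation, −1 means a completely negative correlation, and 0 means no linear correlation. In the prediction of sound quality, R intuitively shows the linear correspondence between the predicted value of the model and the real value. To measure the final predictive effect of transfer learning, we also propose an evaluation formula to select the best situation.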
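The three indicators, in their standard form, can be computed as in the following sketch. The function names and the toy numbers are illustrative only.

```python
import math


def correlation(pred, true):
    """Pearson correlation coefficient R between predictions and true scores."""
    n = len(pred)
    mx, my = sum(pred) / n, sum(true) / n
    num = sum((x - mx) * (y - my) for x, y in zip(pred, true))
    den = math.sqrt(sum((x - mx) ** 2 for x in pred)
                    * sum((y - my) ** 2 for y in true))
    return num / den


def rmse(pred, true):
    """Root mean squared error."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(pred, true)) / len(pred))


def mae(pred, true):
    """Mean absolute error."""
    return sum(abs(x - y) for x, y in zip(pred, true)) / len(pred)


predicted = [1.0, 2.0, 3.0]
observed = [1.0, 2.0, 4.0]
print(correlation(predicted, observed), rmse(predicted, observed), mae(predicted, observed))
```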

The indicator E can represent the effectiveness of the transferred knowledge on the target model. To ensure that the performance difference between the source model and the target model is not too large, the value of E should be as small as possible. Based on the size of the E value, we can get the best set of source model-target model. Due to the small sample size of the target task, serious underfitting occurs in the model without the use of transfer learning, and the results are not presented because they are meaningless.

The training results of the Source model 1 are shown in Table 4, and the training results of the Target model 1 are shown in Table 5. The smaller the evaluation indicator E, the greater the contribution of the source model, and the smaller the prediction error of the target model. As Table 5 shows, the minimum value of evaluation indicator E is 1.745. Therefore, when the membership function is ridge, the membership value is 0.7 and the input size is 167 × 12, the transfer learning effect is the best. The training results of the Source model 2 and Target model 2 are presented in Tables 6 and 7 respectively.

For the Source model 2–Target model 2, as can be seen from the minimum value 1.813 of E in Table 7, transfer learning has the best effect when the membership function is ridge, the membership value is 0.7 and the input size is 200 × 12. For the last transfer learning model (Source model 3–Target model 3), the results are shown in Tables 8 and 9.

As can be seen from Table 9, the minimum value of E is 2.009, indicating that transfer learning has the best effect when the membership function is ridge, the membership value is 0.9 and the input size is 250 × 12. In all three transfer learning models, the error indicators (RMSE and MAE) of the target model are larger than those of the source model, and we find that the transfer learning effect is poor on specific samples, as shown in Fig. 12.

In Fig. 12, the performance of the three transfer learning models differs significantly across samples. To get the most out of each model, we use the stacking technique shown in Fig. 13. First, we train the three transfer learning models in their best situations; Fig. 14 shows their convergence curves. In all three transfer learning models, the initial RMSE of the target model is significantly smaller than that of the source model. The target model therefore iterates more smoothly, unlike the source model, which has an obvious period of rapid convergence. The three target models are taken as the base models, and their prediction results are taken as the training data of the meta model. After the meta model is trained, the predictions of the base models are effectively integrated. In this paper, the meta model is a linear regression, and the regression equation is as follows:

where Pfinal is the final prediction result of the meta model, p1 is the prediction result of Target model 1, p2 is the prediction result of Target model 2, and p3 is the prediction result of Target model 3. The prediction results of the meta model are shown in Table 10.
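A linear-regression meta model over three base predictions can be fitted by ordinary least squares. The sketch below is a generic illustration under assumptions: it includes an intercept term (the paper's fitted coefficients are not reproduced here), solves the normal equations directly, and uses synthetic base predictions with a known linear target so that the recovered weights can be checked.

```python
def solve(a, b):
    """Solve a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_meta(base_preds, targets):
    """Least-squares fit of P_final = w0 + w1*p1 + w2*p2 + w3*p3."""
    rows = [[1.0] + list(p) for p in base_preds]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve(xtx, xty)


def predict_meta(weights, p):
    return weights[0] + sum(w * v for w, v in zip(weights[1:], p))


# Synthetic predictions (p1, p2, p3) of three hypothetical target models.
base_preds = [(6.0, 6.5, 5.8), (4.0, 4.4, 4.9), (7.5, 7.0, 7.2),
              (3.0, 3.6, 2.9), (5.5, 5.0, 5.6), (8.0, 8.3, 7.7)]
true_w = [0.5, 0.2, 0.3, 0.4]                       # made-up coefficients
targets = [predict_meta(true_w, p) for p in base_preds]

weights = fit_meta(base_preds, targets)
print([round(w, 6) for w in weights])
```

In practice the meta model should be fitted on base-model predictions for samples the base models did not train on, otherwise the stacked result is optimistic.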

To compare with the traditional sound quality evaluation methods, we also use lasso regression and support vector regression (SVR) [32,33]. Lasso regression is a linear model that uses an L1 penalty term to control variable selection and complexity. This helps reduce the risk of overfitting and enhances the interpretability of the model on data containing multiple related predictors. Sound quality prediction involves a large number of potential explanatory variables, and lasso regression can help identify which features are most important, simplifying the model and improving prediction performance. Unlike lasso regression, SVR is a nonlinear model that deals with linearly inseparable data by using different kernel functions. SVR has two main advantages: it is robust to outliers and works effectively in high-dimensional space. For problems such as sound quality that involve complex nonlinear relationships, SVR provides powerful modeling capabilities. With the six acoustic parameters as inputs and the subjective evaluation scores as outputs, we train the lasso regression model and the SVR model. For the lasso regression model, five-fold cross-validation gives the optimal parameter λ = 68. For the SVR model with a radial basis function kernel, five-fold cross-validation is also used to search the candidate values [0.01, 0.1, 1, 10, 100], and the optimal parameters are penalty parameter c = 100 and kernel parameter g = 0.01. Because the original dataset is small, we choose cross-validation to obtain a robust evaluation of model performance, so five-fold cross-validation is also the test method for the lasso regression model and the SVR model. The prediction results of both models are also shown in Table 10.
Compared with the three target models, the meta model is significantly better on all three indicators, and its error indicators (RMSE and MAE) are particularly small. Compared with the two traditional methods, the meta model also has obvious advantages: it has the highest correlation coefficient R of 0.993, the lowest RMSE of 0.238, and the lowest MAE of only 0.181. Therefore, the results show that the multi-source transfer learning convolutional neural network (MSTL-CNN) proposed in this paper gives the most accurate results in the evaluation of sound quality.
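The five-fold cross-validation and parameter grid used for the two baseline models can be sketched without any machine learning library. The code below only builds the folds and the candidate list; it assumes that the same five candidate values are searched for both SVR parameters and that the 48 original samples are shuffled before splitting, neither of which is stated explicitly in the text.

```python
import random
from itertools import product


def k_fold_indices(n_samples, k, rng):
    """Yield (train, test) index lists for k-fold cross-validation."""
    idx = list(range(n_samples))
    rng.shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


GRID = [0.01, 0.1, 1, 10, 100]
svr_candidates = list(product(GRID, GRID))     # (c, g) pairs to evaluate

splits = list(k_fold_indices(48, 5, random.Random(0)))
fold_sizes = sorted(len(test) for _, test in splits)

print(len(svr_candidates), fold_sizes)
```

Each candidate pair would be scored by its mean validation error over the five folds, and the pair with the lowest error kept.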

Conclusion

In this paper, we carry out a series of noise tests to establish the sound quality evaluation model of the roller chain transmission system. First, 48 noise samples are obtained through the noise acquisition test, and all the noise samples are evaluated subjectively and objectively. For the subjective evaluation, the results with poor correlation are excluded. For the objective evaluation, we calculate the Mel frequency cepstral coefficients and six acoustic parameters.

To solve the problem of small-sample sound quality evaluation, we propose a multi-source transfer learning convolutional neural network (MSTL-CNN) based on three source tasks. For the transfer learning model, three membership functions (cusp, ridge and normal) and three membership values (0.9, 0.7 and 0.5) are selected for fuzzy generation. By comparing the transfer learning results in different situations, the optimal conditions of each transfer learning model are found. Since the three transfer learning models behave differently on different samples, we stack their predictions into one meta model. The results of the meta model are not only much better than those of each transfer learning model but also better than those of the traditional methods of sound quality research. In particular, the MSTL-CNN proposed in this paper has the smallest mean absolute error of only 0.181, indicating that the model is the most accurate in the evaluation of sound quality. In future work, removing the stacking step to simplify the model structure remains a research challenge. It will therefore be crucial to train the three transfer models simultaneously and to share knowledge between them in a timely manner for specific samples.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Basner, M. et al. Auditory and non-auditory effects of noise on health. The Lancet 383(9925), 1325–1332. https://doi.org/10.1016/s0140-6736(13)61613-x (2014).

Dratva, J. et al. Transportation noise and blood pressure in a population-based sample of adults. Environ. Health Perspect. 120(1), 50–55. https://doi.org/10.1289/ehp.1103448 (2012).

Liu, X. L. et al. Test and analysis of bush roller chains for noise reduction. Appl. Mech. Mater. 52–54, 430–435. https://doi.org/10.4028/www.scientific.net/amm.52-54.430 (2011).

Zheng, H. et al. Investigation of meshing noise of roller chain drives for motorcycles. Noise Control Eng. J. 50(1), 5. https://doi.org/10.3397/1.2839671 (2002).

Kim, S., Ryu, S., Jun, Y., Kim, Y. & Oh, J. Methodology for sound quality analysis of motors for automotive interior parts through subjective evaluation. Sensors 22(18), 6898. https://doi.org/10.3390/s22186898 (2022).

Shang, Z. et al. Research of transfer path analysis based on contribution factor of sound quality. Appl. Acoust. 173, 107693. https://doi.org/10.1016/j.apacoust.2020.107693 (2021).

Chen, P., Xu, L., Tang, Q., Shang, L. & Liu, W. Research on prediction model of tractor sound quality based on genetic algorithm. Appl. Acoust. 185, 108411. https://doi.org/10.1016/j.apacoust.2021.108411 (2022).

Li, D. & Huang, Y. The discomfort model of the micro commercial vehicles interior noise based on the sound quality analyses. Appl. Acoust. 132, 223–231. https://doi.org/10.1016/j.apacoust.2017.11.022 (2018).

Wang, Y., Zhang, S., Meng, D. & Zhang, L. Nonlinear overall annoyance level modeling and interior sound quality prediction for pure electric vehicle with extreme gradient boosting algorithm. Appl. Acoust. 195, 108857. https://doi.org/10.1016/j.apacoust.2022.108857 (2022).

Ruan, P., Zheng, X., Qiu, Y. & Zhou, H. A binaural MFCC-CNN sound quality model of high-speed train. Appl. Sci. 12(23), 12151. https://doi.org/10.3390/app122312151 (2022).

Huang, H., Wu, J. H., Lim, T. C., Yang, M. & Ding, W. Pure electric vehicle nonstationary interior sound quality prediction based on deep CNNs with an adaptable learning rate tree. Mech. Syst. Signal Process. 148, 107170. https://doi.org/10.1016/j.ymssp.2020.107170 (2021).

Jin, S., Wang, X., Du, L. & He, D. Evaluation and modeling of automotive transmission whine noise quality based on MFCC and CNN. Appl. Acoust. 172, 107562. https://doi.org/10.1016/j.apacoust.2020.107562 (2021).

Guo, W., Dong, Y. & Hao, G. Transfer learning empowers accurate pharmacokinetics prediction of small samples. Drug Discov. Today 29, 103946. https://doi.org/10.1016/j.drudis.2024.103946 (2024).

Chato, L. & Regentova, E. E. Survey of transfer learning approaches in the machine learning of digital health sensing data. J. Personal. Med. 13(12), 1703. https://doi.org/10.3390/jpm13121703 (2023).

Xu, H., Li, W. & Cai, Z. Analysis on methods to effectively improve transfer learning performance. Theor. Comput. Sci. 940, 90–107. https://doi.org/10.1016/j.tcs.2022.09.023 (2023).

Cody, T. & Beling, P. A. A systems theory of transfer learning. IEEE Syst. J. 17(1), 26–37. https://doi.org/10.1109/jsyst.2022.3224650 (2023).

Jamil, F., Verstraeten, T., Nowé, A., Peeters, C. & Helsen, J. A deep boosted transfer learning method for wind turbine gearbox fault detection. Renew. Energy 197, 331–341. https://doi.org/10.1016/j.renene.2022.07.117 (2022).

Maschler, B., Knodel, T. & Weyrich, M. Towards deep industrial transfer learning for anomaly detection on time series data. In 26th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA). https://doi.org/10.1109/etfa45728.2021.9613542 (2021).

Rajput, D. S., Meena, G., Acharya, M. & Mohbey, K. K. Fault prediction using fuzzy convolution neural network on IoT environment with heterogeneous sensing data fusion. Measur. Sens. 26, 100701. https://doi.org/10.1016/j.measen.2023.100701 (2023).

Sun, L., Zhang, H., Wang, X. & Tomizuka, M. Efficient multi-task and transfer reinforcement learning with parameter-compositional framework. IEEE Robot. Autom. Lett. 8(8), 4569–4576. https://doi.org/10.1109/lra.2023.3284660 (2023).

Guski, R. Psychological methods for evaluating sound quality and assessing acoustic information. Acustica 83, 765–774 (1997).

Abdul, Z. K. & Al-Talabani, A. K. Mel frequency cepstral coefficient and its applications: A review. IEEE Access 10, 122136–122158. https://doi.org/10.1109/access.2022.3223444 (2022).

Wang, F. & Shen, X. Research on speech emotion recognition based on teager energy operator coefficients and inverted MFCC feature fusion. Electronics 12(17), 3599. https://doi.org/10.3390/electronics12173599 (2023).

Moondra, A. & Nandal, P. Improved speaker recognition for degraded human voice using modified-MFCC and LPC with CNN. Int. J. Adv. Comput. Sci. Appl. https://doi.org/10.14569/ijacsa.2023.0140416 (2023).

Zhuang, F. et al. A comprehensive survey on transfer learning. Proc. IEEE 109(1), 43–76. https://doi.org/10.1109/jproc.2020.3004555 (2021).

Chen, X. et al. Deep transfer learning for bearing fault diagnosis: A systematic review since 2016. IEEE Trans. Instrum. Meas. 72, 1–21. https://doi.org/10.1109/tim.2023.3244237 (2023).

Solís, M. & Calvo-Valverde, L. Performance of deep learning models with transfer learning for multiple-step-ahead forecasts in monthly time series. Intel. Artif. 25(70), 110–125. https://doi.org/10.4114/intartif.vol25iss70pp110-125 (2022).

Rogers, A. W. et al. A transfer learning approach for predictive modeling of bioprocesses using small data. Biotechnol. Bioeng. 119(2), 411–422. https://doi.org/10.1002/bit.27980 (2021).

Ruan, K. & Li, Y. Fuzzy mathematics model of the industrial design of human adaptive sports equipment. J. Intell. Fuzzy Syst. 40(4), 6103–6112. https://doi.org/10.3233/jifs-189449 (2021).

Agayan, S. M., Kamaev, D. A., Bogoutdinov, Sh. R., Aleksanyan, A. O. & Dzeranov, B. Time series analysis by fuzzy logic methods. Algorithms 16(5), 238. https://doi.org/10.3390/a16050238 (2023).

Bustince, H. et al. A historical account of types of fuzzy sets and their relationships. IEEE Trans. Fuzzy Syst. 24(1), 179–194. https://doi.org/10.1109/tfuzz.2015.2451692 (2016).

Wu, R., Li, H., Peng, L., Wang, Z. & Wang, W. Research and application of Lasso regression model based on prior coefficient framework. Int. J. Comput. Sci. Math. 13(1), 42. https://doi.org/10.1504/ijcsm.2021.114190 (2021).

Zhan, A. et al. A traffic flow forecasting method based on the GA-SVR. J. High Speed Netw. 28(2), 97–106. https://doi.org/10.3233/jhs-220682 (2022).

Acknowledgements

This research is supported by the National Natural Science Foundation of China (No. 51775222) and the Science and Technology Development Project of Jilin Province, China (No. 20200401136GX).

Author information

Contributions

L. J. B. prepared all figures and wrote the main manuscript text, A. L. C. reviewed the manuscript, C. Y. B. provided the funds for the tests and W. H. X. helped with the tests and data processing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, J., An, L., Cheng, Y. et al. Research on sound quality of roller chain transmission system based on multi-source transfer learning. Sci Rep 14, 11226 (2024). https://doi.org/10.1038/s41598-024-62090-3

DOI: https://doi.org/10.1038/s41598-024-62090-3
