Abstract

Individuals with autism spectrum disorder (ASD) experience difficulties in perceiving speech in background noises with temporal dips; they also lack social orienting. We tested two hypotheses: (1) the higher the autistic traits, the lower the performance in the speech-in-noise test, and (2) individuals with high autistic traits experience greater difficulty in perceiving speech, especially in the non-vocal noise, because of their attentional bias toward non-vocal sounds. Thirty-eight female Japanese university students participated in an experiment measuring their ability to perceive speech in the presence of noise. Participants were asked to detect Japanese words embedded in vocal and non-vocal background noises with temporal dips. We found a marginally significant effect of autistic traits on speech perception performance, suggesting a trend that favors the first hypothesis. However, caution is needed in this interpretation because the null hypothesis was not rejected. No significant interaction was found between the types of background noise and autistic traits, indicating that the second hypothesis was not supported. This might be because individuals with high autistic traits in the general population have a weaker attentional bias toward non-vocal sounds than those with ASD, or because of the explicit instruction given to attend to the target speech.

Many individuals with autism spectrum disorder (ASD) have sensory symptoms in several modalities, such as auditory, visual, tactile, olfactory, and gustatory1,2,3. These symptoms constitute a diagnostic criterion of ASD in the Diagnostic and Statistical Manual of Mental Disorders, fifth edition4. In the general population, people who have not been diagnosed with ASD but have high levels of autistic traits also have sensory symptoms5,6,7,8.

Among sensory symptoms, various auditory symptoms of ASD have been reported in previous studies (see a review9). One of the auditory symptoms is that background noise is more likely to interfere with concentration and speech perception in individuals with ASD than in typically developed individuals. Auditory filtering difficulties measured via the Short Sensory Profile10 refer to difficulties in detecting, discriminating, and responding to auditory information in background noise. Children with ASD demonstrate more severe auditory filtering difficulties11,12. Ashburner et al.13 reported that auditory filtering difficulties are associated with academic underachievement. Moreover, most teachers of children with ASD consider noise control a crucial issue14. Thus, individuals with ASD experience difficulties in perceiving auditory information, such as speech in background noise, and these difficulties can lead to academic underachievement. These auditory difficulties may also increase internalizing problems in individuals with ASD and high autistic traits because overall sensory symptoms are related to internalizing problems in individuals both with15,16,17,18 and without ASD5,8.

Experimental studies have shown difficulties in speech perception in background noise among individuals with ASD. Studies using the speech-in-noise test, which measures speech perception ability in background noise, have demonstrated that individuals with ASD have a lower speech perception ability than typically developing individuals19,20,21,22,23. The presence of “dips” in the background noise affects speech perception ability in individuals with ASD. Many noises in daily life, such as speech sounds, include temporal and spectral dips. Temporal dips arise because there are moments, such as brief pauses, when the overall noise level is low. During these moments, the signal-to-noise ratio (SNR) is relatively high, allowing “glimpses” of the target speech to be perceived; this process is called “dip listening.” In contrast, spectral dips arise because the frequency spectrum of the target speech differs from that of the background noise. Therefore, certain frequencies in the target speech may not be masked by the noise, making speech easier to perceive at those frequencies19. Normally hearing individuals perform better in the speech-in-noise test with noise that contains dips (for example, a single-talker noise) than with a steady noise, such as a speech-shaped noise24. However, individuals with ASD perform worse than typically developed individuals in speech perception in noise with temporal dips, because the gain in speech perception obtained from noise with temporal dips relative to noise without temporal dips is smaller in individuals with ASD19,20. In contrast, spectral dips do not significantly impact the speech perception ability of individuals with ASD19,20. These results indicate that individuals with ASD experience difficulties in integrating auditory information fragments present in temporal dips. However, there are many kinds of noises, and it is unclear which of these noises with temporal dips are particularly adverse for speech perception in individuals with ASD.

The lack of social orienting associated with ASD may also affect speech perception in noise. Social orienting constitutes a set of psychological dispositions and biological mechanisms biasing the individual to preferentially orient to the social world25. Humans prioritize social signals, such as human faces, bodies, and voices. For example, attention is rapidly captured by human faces and bodies26, and the preference for face-like stimuli is shown early in life27,28. However, individuals with ASD have a weaker attentional bias toward social stimuli than typically developed individuals. Several eye-tracking studies have confirmed atypical visual exploration of social stimuli in individuals with ASD, as demonstrated by a reduced duration of looking at the mouth and eyes when exploring faces compared to typically developed individuals29,30. Regarding the auditory modality, Dawson et al.31 reported that, compared to children with Down syndrome or typical development, children with ASD fail more frequently to orient to auditory stimuli. This failure is more extreme in the case of social stimuli, such as calling a child’s name. A previous study using functional magnetic resonance imaging has shown that individuals with ASD fail to activate their superior temporal sulcus voice-selective regions in response to social stimuli, namely vocal sounds, but show a normal activation pattern in response to non-vocal sounds32. Instead of having an attentional bias toward social stimuli, individuals with ASD sometimes have an attentional bias toward non-social stimuli. An eye-tracking study showed that individuals with ASD prefer to look at object images, whereas typically developed individuals prefer to look at faces, which are social stimuli33. In a study where participants were asked to enumerate the sounds they had heard in the experiment, individuals with ASD recalled markedly more non-vocal sounds than vocal sounds, whereas control individuals reported a similar proportion of vocal and non-vocal sounds32. In summary, individuals with ASD pay greater attention to non-social stimuli than individuals without ASD.

In the speech-in-noise test, a target speech is a social stimulus; hence, we assumed that when noise comprises non-social stimuli, individuals with ASD may pay greater attention to non-social noise than to the social target stimulus (e.g., spoken words) and have difficulties perceiving social target stimuli in non-social noise. Contrary to our assumption, Alcántara et al.19 found that the difference in speech perception abilities under non-vocal and vocal noise conditions was comparable between individuals with and without ASD. However, the non-vocal noise they used was a steady speech-shaped noise with a long-term average spectrum similar to the target speech. Moreover, the amplitude of the speech-shaped noise was modulated with the temporal envelope of the single-talker noise. In short, the speech-shaped noise that Alcántara et al.19 used was an artificial non-vocal noise with a similar spectrum and temporal envelope to speech. It was possible that Alcántara et al.19 did not detect the difference between the vocal and non-vocal noises because individuals with ASD might not have an attentional bias toward the non-vocal noise with acoustic features similar to the vocal sound. To our knowledge, no study has as yet investigated whether individuals with ASD experience greater difficulty in perceiving speech in the non-vocal noise that has different spectral and temporal features from speech, than in the vocal noise.

Studies have shown that individuals with ASD have sensory symptoms1,2,3,4 and difficulties in speech perception in noise19,20,21,22,23. However, to our knowledge, no study has investigated the relationship between autistic traits and speech perception ability in noise in the general population. Individuals with high autistic traits in the general population may also experience difficulties in speech perception in noise, just as individuals with ASD do, because people who have not been diagnosed with the ASD but have high levels of autistic traits have sensory symptoms5,6,7,8. Additionally, it can be assumed that when a noise comprises non-social stimuli, individuals with high autistic traits may pay greater attention to the non-social noise than the social target stimulus.

This study investigated the relationship between autistic traits and the speech perception performance of university students in multitalker noise (that is, overlaid voices) as social noise and in two non-vocal noises with temporal dips as non-social noises. The non-vocal noise used in a previous study was an artificial noise with a spectrum and temporal envelope similar to those of speech19. However, individuals with ASD may focus more on non-vocal noise with spectral and temporal features different from those of speech. Therefore, we used non-vocal noises that are not artificial and have acoustic features different from those of speech to investigate the different influences of vocal and non-vocal noises in the speech-in-noise test. Exposure to mechanical sounds impairs cognitive functions. For example, train noise impairs reading comprehension34, and aircraft noise impairs memory, reading comprehension, and speech perception35,36. In contrast, exposure to natural sounds improves cognitive functions37. Therefore, we assumed that mechanical and natural sounds may also affect performance in the speech-in-noise test differently and thus used them as two types of non-vocal noise. If natural sounds improve the performance of speech-in-noise tests in individuals with high autistic traits, it may be difficult to detect the difference between the effects of vocal and non-vocal noises on speech-in-noise performance when using only natural noise. Conversely, if we use only mechanical noise, the effect of the types of noise on speech-in-noise performance cannot be explained solely by social orienting but also by impaired performance due to the acoustic properties of mechanical sounds. Therefore, we must use both mechanical and natural noise. If individuals with high autistic traits experience greater difficulty perceiving speech in the presence of both mechanical and natural noise, the difference between the effects of vocal and non-vocal noise on speech-in-noise performance can be explained solely by social orienting. In this study, we used train noise, which contains more temporal dips than aircraft noise, as the mechanical noise.

We tested the following hypotheses: (1) the higher the autistic traits, the lower the performance in the speech-in-noise test, and (2) individuals with high autistic traits experience greater difficulty perceiving speech, especially in the non-vocal noise, because they pay greater attention to non-vocal sounds than vocal sounds. Furthermore, we investigated the relationship between the ability to perceive speech in background noise and attention-deficit/hyperactivity disorder (ADHD) traits. We used the measure of ADHD traits to ensure that the speech perception performance in noise was not due to ADHD traits but to ASD traits, given that ADHD is the most common comorbidity in children with ASD, with comorbidity rates in the 40–70% range (see a review38). We presumed that ADHD traits would not be related to the ability to perceive speech in background noise, as there was no significant difference in this ability between children with and without ADHD39. Additionally, performance on the Integrated Visual and Auditory Quick Screen Continuous Performance Task, which assesses attention and impulse control and is used as an ADHD assessment tool, does not significantly correlate with performance in the speech-in-noise test for children with ASD22.

Methods

Participants

A total of 105 female Japanese university students (mean age = 20.7, SD = 4.3) participated in an online survey and responded to the Autism-Spectrum Quotient (AQ) Japanese version40. Prior to the survey, all participants were informed in writing that participation was voluntary, that there were no disadvantages of non-participation, and that the survey was anonymous. Their responses to the AQ were regarded as their consent to participate in the survey. After completing the AQ, the participants who wished to receive the recruitment information for the subsequent experiment provided their e-mail address.

Of those who participated in the survey, 40 normal-hearing Japanese native speakers (mean age = 20.1, SD = 2.6) participated in the experiment. Two were excluded from analyses because of technical problems. As one participant showed speech reception threshold (SRT) for multitalker noise (see Stimuli) less than the group mean minus 3SD, their SRT for multitalker noise was excluded from analyses.
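The exclusion rule for the multitalker SRT can be illustrated with a minimal sketch. It is not the authors' analysis script: the function name `flag_low_outliers` and the SRT values are hypothetical, and the use of the sample SD (n − 1 denominator) is an assumption, as the text does not state which SD was used.

```python
from statistics import mean, stdev


def flag_low_outliers(values, n_sd=3.0):
    """Return the indices of values below mean - n_sd * SD.

    The sample SD (n - 1 denominator) is assumed here.
    """
    cutoff = mean(values) - n_sd * stdev(values)
    return [i for i, v in enumerate(values) if v < cutoff]


# Hypothetical SRTs in dB: 20 typical values and one very low value.
srts = [-1.0, -0.5, 0.0, 0.5, 1.0] * 4 + [-15.0]
excluded = flag_low_outliers(srts)
```

Only the flagged SRT would be dropped; the participant's SRTs for the other noises remain in the analysis, as described above.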

The sample size was determined based on a priori power analysis with G*Power 3.1.9.641 for an analysis of variance of the interaction between a between-participant factor (i.e., median-split high and low AQ groups) and a within-participant factor with three levels. The power analysis indicated that 18 participants in each group were required for a statistical power of 0.90, assuming a moderate effect size f of 0.25 and alpha of 0.05. Note that, during the peer review process, it was determined that the AQ scores should be included in the generalized linear mixed model (GLMM) as a continuous variable instead of being classified as a categorical variable (i.e., median-split) in the analysis of variance.

All participants provided written informed consent prior to the experiment. This study was approved by the Humanities and Social Sciences Research Ethics Committee of Ochanomizu University (approval number: 2021-174) and conducted in accordance with the Declaration of Helsinki.

Measures

Autistic traits were measured using the AQ42 Japanese version40. The AQ is a self-administered questionnaire that measures the degree of traits associated with the autism spectrum in adults with normal intelligence42. It comprises 50 questions, with 10 questions assessing each of five areas: social skill, attention switching, attention to detail, communication, and imagination. The items are rated on a four-point Likert scale (definitely agree, slightly agree, slightly disagree, or definitely disagree). “Definitely agree” or “slightly agree” responses scored 1 point on the items referring to behaviors typically associated with ASD, and “slightly disagree” or “definitely disagree” responses scored 1 point on the reversed items. Higher scores indicated stronger autistic traits.
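The binary scoring rule described above can be sketched as follows. The function `score_aq`, the four example items, and the set of reversed items are hypothetical and serve only to illustrate the rule; the actual reversed items are defined in the AQ itself and are not reproduced here.

```python
AGREE = {"definitely agree", "slightly agree"}
DISAGREE = {"slightly disagree", "definitely disagree"}


def score_aq(responses, reversed_items):
    """Sum binary item scores.

    responses: dict mapping item number -> response label.
    reversed_items: set of item numbers on which disagreement scores 1 point
    (hypothetical in this example).
    """
    total = 0
    for item, response in responses.items():
        if response not in AGREE and response not in DISAGREE:
            raise ValueError(f"unknown response for item {item}: {response!r}")
        keyed = DISAGREE if item in reversed_items else AGREE
        if response in keyed:
            total += 1
    return total


# Hypothetical four-item illustration (the real questionnaire has 50 items).
example = {
    1: "definitely agree",     # regular item, agreement -> 1 point
    2: "slightly disagree",    # reversed item, disagreement -> 1 point
    3: "slightly agree",       # reversed item, agreement -> 0 points
    4: "definitely disagree",  # regular item, disagreement -> 0 points
}
example_score = score_aq(example, reversed_items={2, 3})
```

Because the strength of agreement is ignored, each item contributes either 0 or 1, and the total over 50 items ranges from 0 to 50.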

The ADHD traits were measured using the Adult ADHD Self-Report Scale (ASRS)43 Screener, Japanese version44. The ASRS is an 18-item self-administered questionnaire designed to screen for adult ADHD43. We used the ASRS short-form screener, which comprises six items of the scale and is often used to diagnose ADHD because these items are reported to be the most predictive of symptoms consistent with ADHD43. It is scored on a five-point Likert scale (0 = never, 1 = rarely, 2 = sometimes, 3 = often, 4 = very often); higher scores indicate stronger ADHD traits. Only the experiment participants completed the ASRS, and they did so after the experiment.

Stimuli

The target words were 972 independent Japanese words with high familiarity in the Familiarity-Controlled Word Lists 2003 (FW0345). The words in these lists have four morae, and their accent nuclei are either present at the fourth mora or absent. A mora is a temporal unit that divides words into almost isochronous segments46. The developer of the word lists removed words that could induce negative impressions and those related to diseases47. No other semantic criteria were used to remove words from the lists. In this study, words containing a type of geminate consonant called “soku-on” or a type of long vowel called “cho-on” were excluded. The duration of each target word was approximately one second. All the words were spoken by a female speaker.

We used three types of background noises with temporal dips. The multitalker noise, which comprised overlaid voices of two men and three women reading different Japanese sentences (General Incorporated Association Aozoraroudoku, Japan, https://aozoraroudoku.jp/index.html), was used as the vocal noise. The train noise was the noise of a moving train recorded outside. The stream noise was the sound of a water stream. These two were used as the non-vocal noises. We used mechanical and natural non-vocal noises because they may affect cognitive functions differently. The duration of each noise was 10 s. We compared the levels of the noises over the 10 s and adjusted the level of the non-vocal noise so that the loudest level in the non-vocal noise was within ±1 dB of the loudest signal in the vocal noise in each of the one-third octave bands; that is, we adjusted the frequency response of the non-vocal noise to be close to that of the vocal noise. In each trial, a randomly extracted 3 s segment of the 10 s noise was presented.
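The random extraction of a 3 s segment from a 10 s noise can be sketched as below. This is an illustration under assumptions rather than the experiment code: the function name `random_excerpt_bounds`, the sample rate of 44,100 Hz, and the uniform choice of start position are not stated in the text.

```python
import random


def random_excerpt_bounds(total_s=10.0, excerpt_s=3.0, sample_rate=44100,
                          rng=random):
    """Return (start, stop) sample indices of a random excerpt.

    The start index is drawn uniformly from all positions at which the
    excerpt still fits inside the recording (assumed behavior).
    """
    n_total = int(total_s * sample_rate)
    n_excerpt = int(excerpt_s * sample_rate)
    start = rng.randrange(0, n_total - n_excerpt + 1)
    return start, start + n_excerpt


# Seeded generator so that the illustration is reproducible.
rng = random.Random(2021)
bounds = [random_excerpt_bounds(rng=rng) for _ in range(100)]
```

Slicing the noise waveform with these indices (for example, `noise[start:stop]`) would yield the segment presented on a given trial.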

Apparatus

Each participant was tested individually in a soundproof room. PsychoPy 2021.2.348 running on macOS 12.4 controlled the experiment. The auditory stimuli were presented via headphones (HPH-MT8, YAMAHA) with an audio interface (UR28M, Steinberg). The instructions and typed responses were presented on a liquid-crystal display monitor. The participants responded using a standard QWERTY keyboard.

Procedures

The targets were superimposed on the noises and delivered via the headphones. The target speech commenced 0.3–1.5 s after the noise began. Immediately after stimulus presentation, the participants typed the word that they had heard using Romaji input. The typed letters were simultaneously presented on the monitor in katakana. If they could not hear a mora, they indicated it by typing the @ sign in the applicable place. A response was considered correct only if all four morae were typed correctly; all other responses were considered incorrect.

The sound-pressure level of the target was fixed at approximately 60 dB. The sound-pressure level of the background noise was initially set at an SNR of +5 dB, that is, approximately 55 dB. The SRTs for each noise were measured using an adaptive-tracking procedure with a three-up and one-down interleaved staircase method. The level of background noise was set from 0 (silence) to 1 in PsychoPy. The initial step size was 0.04, and after the first two reversals, the step size was reduced to 0.02. After two more reversals, the step size was reduced to 0.01 for 10 reversals before the measurement ended. The mean of the SNRs at the last 10 reversal points was used as the SRT for each noise. The larger the SRT, the lower the level of background noise at 75% of the correct response rate, implying that the ability to perceive speech in noise was low. Positive SRT values indicate by how many dB the background noise level at 75% of the correct response rate is lower than the level of the target speech, that is, 60 dB. Negative values indicate the opposite.
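A single adaptive track of this kind can be sketched as follows. The sketch rests on several assumptions that the text does not confirm: "three-up and one-down" is read as raising the noise level after three consecutive correct responses and lowering it after one incorrect response, a reversal is recorded at the level at which the direction changed, and the interleaving of the three noise types is omitted. The class `Staircase`, the helper `mean_of_last_reversals`, the start level of 0.5, and the scripted listener are hypothetical. The conversion from the PsychoPy level (0 to 1) to an SNR in dB depends on a calibration that is not described here, so the example averages levels rather than SNRs.

```python
class Staircase:
    """One adaptive track over the noise level (0 = silence, 1 = maximum)."""

    def __init__(self, start_level, steps=(0.04, 0.02, 0.01),
                 reversals_per_stage=(2, 2, 10)):
        self.level = start_level
        self.steps = steps
        # Cumulative reversal counts at which each stage ends: 2, 4, 14.
        self.stage_ends = [sum(reversals_per_stage[:i + 1])
                           for i in range(len(reversals_per_stage))]
        self.reversal_levels = []
        self.correct_run = 0
        self.last_direction = 0  # +1 = noise raised, -1 = noise lowered

    @property
    def finished(self):
        return len(self.reversal_levels) >= self.stage_ends[-1]

    def current_step(self):
        n_reversals = len(self.reversal_levels)
        for step, end in zip(self.steps, self.stage_ends):
            if n_reversals < end:
                return step
        return self.steps[-1]

    def update(self, correct):
        """Register one response and move the level if the rule fires."""
        if correct:
            self.correct_run += 1
            if self.correct_run < 3:
                return  # wait for three consecutive correct responses
            direction = +1  # more noise, harder
        else:
            direction = -1  # less noise, easier
        self.correct_run = 0
        if self.last_direction and direction != self.last_direction:
            self.reversal_levels.append(self.level)
        self.last_direction = direction
        new_level = self.level + direction * self.current_step()
        self.level = min(1.0, max(0.0, new_level))


def mean_of_last_reversals(track, n_last=10):
    """Average the levels at the last n_last reversal points."""
    levels = track.reversal_levels[-n_last:]
    return sum(levels) / len(levels)


# Scripted (hypothetical) listener: three correct responses, then one error.
track = Staircase(start_level=0.5)
scripted = [True, True, True, False]
trial = 0
while not track.finished:
    track.update(scripted[trial % len(scripted)])
    trial += 1
threshold_level = mean_of_last_reversals(track)
```

In the actual procedure, the levels at the last 10 reversals would first be converted to SNRs in dB and then averaged to give the SRT.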

Statistical analysis

We investigated the distribution of autistic traits by visual inspection and the Shapiro–Wilk test for testing normality. A GLMM with the AQ score and the type of background noise as fixed effects and participants as a random effect was performed for SRTs with a Gaussian family distribution and the identity link function to investigate the effects of autistic traits and types of background noise on the ability to perceive speech in noise. We tested the hypotheses that (1) the higher the autistic traits, the lower the performance in the speech-in-noise test by investigating the effect of the AQ score and (2) individuals with high autistic traits experience greater difficulty perceiving speech, especially in the non-vocal noise, by investigating the effect of the interaction between the AQ score and the type of background noise.
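Written out, a model of this form could look as follows. This is a sketch under assumptions: the noise type is dummy-coded with multitalker noise as the reference level, and the participant random effect is a random intercept; the text specifies neither the coding nor the random-effects structure, and all symbols are introduced here for illustration. Here i indexes participants, j indexes noise types, and T_j and S_j are indicators for train and stream noise.

```latex
\mathrm{SRT}_{ij} = \beta_0 + \beta_1\,\mathrm{AQ}_i + \beta_2\,T_j + \beta_3\,S_j
  + \beta_4\,\mathrm{AQ}_i\,T_j + \beta_5\,\mathrm{AQ}_i\,S_j + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

Under this parameterization, the first hypothesis corresponds to the test of β1 (one degree of freedom) and the second to the joint test of β4 and β5 (two degrees of freedom). With a Gaussian family and the identity link, such a GLMM is equivalent to a linear mixed model.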

Furthermore, we performed Bayesian correlational analyses between ASRS scores and SRTs to verify the null hypothesis that ADHD traits are not related to the ability to perceive speech in background noise.

Data analysis was performed using JASP version 0.17.149.

Results

Distribution of autistic traits

Descriptive statistics for each measure are presented in Table 1. The AQ score was widely distributed from low to high (range = 5–40). The cutoff score of 33 for the Japanese version of the AQ40 was included within this range. The Shapiro–Wilk test indicated that the AQ score was normally distributed (p = 0.811, Fig. 1). This is similar to the distribution pattern observed in the general population in a previous study that developed the AQ42.

Effects of autistic traits and types of background noise

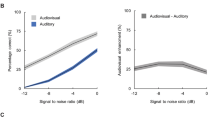

A GLMM with the AQ score and type of background noise as fixed effects and the participants as a random effect was performed for the SRTs (Fig. 2). The analysis of variance on the GLMM based on the Wald test showed a marginally significant main effect of the AQ score (χ2(1) = 3.06, p = 0.080). However, the main effect of the type of background noise (χ2(2) = 0.33, p = 0.850) and the interaction between them (χ2(2) = 0.27, p = 0.876) were not significant.
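As a consistency check, the reported p-values can be recomputed from the reported χ2 statistics using the closed-form upper-tail probabilities of the chi-square distribution for one and two degrees of freedom. The helper `chi2_upper_tail` is introduced here for illustration and is not part of the original analysis, which was run in JASP.

```python
import math


def chi2_upper_tail(x, df):
    """Upper-tail probability of a chi-square statistic (df = 1 or 2 only)."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if df == 2:
        return math.exp(-x / 2.0)
    raise ValueError("closed forms are implemented for df = 1 and df = 2 only")


p_aq = chi2_upper_tail(3.06, 1)           # main effect of the AQ score
p_noise = chi2_upper_tail(0.33, 2)        # main effect of noise type
p_interaction = chi2_upper_tail(0.27, 2)  # AQ score x noise type
```

The recomputed values agree with the reported ones to within about 0.003; the small differences in the third decimal place are what one would expect from recomputing with χ2 statistics rounded to two decimals.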

Correlations between the ADHD traits and SRTs

We performed a Bayesian correlation analysis between the ASRS scores and SRTs. The results showed moderate evidence50 that the ASRS scores were not related to the SRTs for multitalker (r = 0.15, BF01 = 3.33), train (r = −0.09, BF01 = 4.35), and stream noise (r = −0.04, BF01 = 4.81).
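The label "moderate" can be related to the Bayes factors with a short sketch. It assumes the commonly used verbal categories for Bayes factors (1 to 3 anecdotal, 3 to 10 moderate, 10 to 30 strong, 30 to 100 very strong, above 100 extreme); whether reference 50 uses exactly these cutoffs is an assumption, and the function `classify_bf01` is hypothetical.

```python
def classify_bf01(bf01):
    """Label the strength of evidence for the null hypothesis (H0).

    The cutoffs follow commonly used conventions and are an assumption here.
    """
    if bf01 <= 1:
        return "no evidence for H0"
    cutoffs = [(3, "anecdotal"), (10, "moderate"),
               (30, "strong"), (100, "very strong")]
    for upper, label in cutoffs:
        if bf01 <= upper:
            return label
    return "extreme"


# Bayes factors reported above for each type of noise.
reported = {"multitalker": 3.33, "train": 4.35, "stream": 4.81}
labels = {noise: classify_bf01(bf) for noise, bf in reported.items()}
```

A BF01 of 3.33, for example, means that the data are about 3.3 times more likely under the null hypothesis of no correlation than under the alternative.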

Discussion

This study tested the hypotheses that the higher the autistic traits, the lower the performance in the speech-in-noise test, and that individuals with high autistic traits experience greater difficulty in perceiving speech, especially in the non-vocal noise because they pay more attention to non-vocal sounds than vocal sounds. Additionally, we analyzed the relationship between speech perception ability in noise and ADHD traits.

We found a marginally significant effect of autistic traits. However, we did not find a significant effect of the type of background noise or interaction between the two factors. Previous studies showed that children21,22 and adults19,20,23 with ASD have a lower ability to perceive speech in noisy environments than typically developed individuals. A trend favoring our first hypothesis, that the higher the autistic traits, the lower the performance on the speech-in-noise test, is partially consistent with these studies. The results also showed no statistically significant effect of the types of background noise and interaction between autistic traits and the types of background noise. Therefore, the second hypothesis that individuals with high autistic traits experience greater difficulty in perceiving speech, especially in the non-vocal noise, was not supported. However, previous studies have shown that individuals with ASD lack social orienting31,32 and have an attentional bias toward non-vocal sounds32. Our result was not consistent with these previous studies.

To the best of our knowledge, no previous study has shown a relationship between autistic traits and speech perception performance in noise in the general population. Therefore, this is the first study to suggest a possible relationship between autistic traits and speech perception ability in noisy environments in the general population. However, because the null hypothesis was not rejected, this result should be interpreted with caution, and further studies are needed to confirm whether autistic traits have a significant effect.

The second hypothesis that individuals with high autistic traits experience greater difficulties in perceiving speech, especially in the non-vocal noise, was not supported. Although Gervais et al.32 showed that individuals with ASD have an attentional bias toward non-vocal stimuli, our results suggest that the difference between vocal and non-vocal noise was not associated with difficulties in speech perception in noise in individuals with high autistic traits. There are three possible explanations for the inconsistent results. First, attentional bias toward non-vocal sounds may be weaker in individuals who have not been diagnosed with ASD but have higher levels of autistic traits than in individuals who have been diagnosed with ASD. Second, attentional bias toward non-vocal sounds may not have negatively affected performance on speech-in-noise tests using non-vocal noise. Individuals with an attentional bias toward non-vocal sounds may have difficulty maintaining attention toward speech and noticing someone talking to them. These difficulties can lead to failure in capturing words. However, in the speech-in-noise test, there is an explicit instruction to attend to the target speech and not to the noise. Although some studies have shown that individuals with ASD show an attentional bias toward local information compared to global information in the Navon task51,52, the attentional bias in individuals with ASD was reduced when they were instructed to attend to either local or global information52. Similarly, individuals with ASD and high autistic traits with an attentional bias toward non-vocal sounds may not have difficulty directing their attention to vocal sounds when explicitly instructed to do so. Third, the difference in sociality between the noises used may have been insufficient. We used multitalker noise, which comprises the overlaid voices of five men and women, and it was difficult to perceive words in the noise. Therefore, although it was a vocal sound, the multitalker noise might have been perceived as almost meaningless rather than as meaningful human voices (i.e., social stimuli).

The correlational analysis results showed moderate evidence that ADHD traits were not related to the ability to perceive speech in multitalker, train, and stream noise. This result is consistent with our hypothesis that ADHD traits are not related to the ability to perceive speech in background noise and with the results of a previous study that did not show a significant difference in the ability to perceive speech in noise between boys with and without ADHD39. Another study also did not show a relationship between performance on the Integrated Visual and Auditory Quick Screen Continuous Performance Task, which assesses auditory and visual attention and impulse control, and performance on the speech-in-noise test in children with ASD22. The lack of correlation between ADHD traits and speech perception performance in noise could serve as discriminant evidence for the association between autistic traits and speech perception in noise.

This study has limitations in terms of generalizability. The participants of this study were only female Japanese students, and there is a possibility of sampling bias in autistic traits. The average AQ total score was 22.4 (SD = 7.55) across all participants of this experiment. However, in the study conducted by Wakabayashi et al.40, the average AQ total score was 19.9 (SD = 6.38) in a larger sample of Japanese female university students (n = 495). Autistic traits in the participants of this experiment might have been stronger than in the sample used by Wakabayashi et al.40, with a small-to-medium effect size (Cohen’s d = 0.36). This sampling bias may have influenced the observed effect of autistic traits on speech perception in noise. Further research is required to ascertain whether the results of this study can be replicated in individuals of different sexes, ages, and races without sampling bias. Further research using clinical samples is also required. The effect of attentional bias toward non-vocal noise on speech perception in noise may be easier to detect in clinical samples because these individuals have greater attentional bias and difficulties in speech perception than individuals with high autistic traits.
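The effect size reported above for the difference in mean AQ scores can be reproduced with a short calculation. The text does not state which variant of Cohen's d was used; the sketch assumes the root mean square of the two SDs as the standardizer, which ignores the sample sizes and reproduces the reported value. The function name `cohens_d_rms` is hypothetical.

```python
import math


def cohens_d_rms(mean_1, sd_1, mean_2, sd_2):
    """Cohen's d with the root mean square of the two SDs as standardizer.

    This variant ignores the sample sizes (assumed, not stated in the text).
    """
    pooled_sd = math.sqrt((sd_1 ** 2 + sd_2 ** 2) / 2.0)
    return (mean_1 - mean_2) / pooled_sd


# Present sample (22.4, SD 7.55) versus Wakabayashi et al. (19.9, SD 6.38).
d = cohens_d_rms(22.4, 7.55, 19.9, 6.38)
d_rounded = round(d, 2)
```

A standardizer weighted by sample size would be dominated by the larger comparison sample, which has the smaller SD, and would therefore give a slightly larger d.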

Conclusions

A trend favoring our first hypothesis that the higher the autistic traits, the lower the speech perception performance in noise was observed; however, the interpretation should be made cautiously, as the null hypothesis was not rejected. The results did not support our second hypothesis that individuals with high autistic traits have greater difficulty perceiving speech, especially in the non-vocal noise, because they pay more attention to non-vocal sounds than to vocal sounds. ADHD traits were not related to speech perception in noise. This result could provide discriminant evidence for the association between autistic traits and speech perception in noise.

Data availability

The raw data is publicly available at https://osf.io/92czn/.

References

Crane, L., Goddard, L. & Pring, L. Sensory processing in adults with autism spectrum disorders. Autism 13, 215–228 (2009).

Leekam, S. R., Nieto, C., Libby, S. J., Wing, L. & Gould, J. Describing the sensory abnormalities of children and adults with autism. J. Autism Dev. Disord. 37, 894–910 (2007).

Bromley, J., Hare, D. J., Davison, K. & Emerson, E. Mothers supporting children with autistic spectrum disorders: social support, mental health status and satisfaction with services. Autism 8, 409–423 (2004).

American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders 5th edn (American Psychiatric Publishing, 2013).

Horder, J., Wilson, C. E., Mendez, M. A. & Murphy, D. G. Autistic traits and abnormal sensory experiences in adults. J. Autism Dev. Disord. 44, 1461–1469 (2014).

Mayer, J. L. The relationship between autistic traits and atypical sensory functioning in neurotypical and ASD adults: a spectrum approach. J. Autism Dev. Disord. 47, 316–327 (2017).

Robertson, A. E. & Simmons, D. R. The relationship between sensory sensitivity and autistic traits in the general population. J. Autism Dev. Disord. 43, 775–784 (2013).

Tsuji, Y. et al. Mediating role of sensory differences in the relationship between autistic traits and internalizing problems. BMC Psychol. 10, 148–148 (2022).

O’Connor, K. Auditory processing in autism spectrum disorder: a review. Neurosci. Biobehav. Rev. 36, 836–854 (2012).

McIntosh, D. N., Miller, L. J. & Shyu, V. Development and validation of the Short Sensory Profile. in Sensory Profile Manual (ed. Dunn, W.) 59–73 (Psychological Corporation, 1999).

Tomchek, S. D. & Dunn, W. Sensory processing in children with and without autism: a comparative study using the Short Sensory Profile. Am. J. Occup. Ther. 61, 190–200 (2007).

McCormick, C., Hepburn, S., Young, G. S. & Rogers, S. J. Sensory symptoms in children with autism spectrum disorder, other developmental disorders and typical development: a longitudinal study. Autism 20, 572–579 (2016).

Ashburner, J., Ziviani, J. & Rodger, S. Sensory processing and classroom emotional, behavioral, and educational outcomes in children with autism spectrum disorder. Am. J. Occup. Ther. 62, 564–573 (2008).

Kanakri, S. M., Shepley, M., Varni, J. W. & Tassinary, L. G. Noise and autism spectrum disorder in children: an exploratory survey. Res. Dev. Disabil. 63, 85–94 (2017).

Kim, S. Y. et al. State and trait anxiety of adolescents with autism spectrum disorders. Psychiatry Investig. 18, 257–265 (2021).

Mazurek, M. O. et al. Anxiety, sensory over-responsivity, and gastrointestinal problems in children with autism spectrum disorders. J. Abnorm. Child Psychol. 41, 165–176 (2013).

Pfeiffer, B., Kinnealey, M., Reed, C. & Herzberg, G. Sensory modulation and affective disorders in children and adolescents with Asperger’s disorder. Am. J. Occup. Ther. 59, 335–345 (2005).

Jones, E. K., Hanley, M. & Riby, D. M. Distraction, distress and diversity: exploring the impact of sensory processing differences on learning and school life for pupils with autism spectrum disorders. Res. Autism Spectr. Disord. 72, 101515 (2020).

Alcántara, J. I., Weisblatt, E. J. L., Moore, B. C. J. & Bolton, P. F. Speech-in-noise perception in high-functioning individuals with autism or Asperger’s syndrome. J. Child Psychol. Psychiatry 45, 1107–1114 (2004).

Groen, W. B. et al. Intact spectral but abnormal temporal processing of auditory stimuli in autism. J. Autism Dev. Disord. 39, 742–750 (2009).

Apeksha, K., Hanasoge, S., Jain, P. & Babu, S. S. Speech perception in quiet and in the presence of noise in children with autism spectrum disorder: a behavioral study. Indian J. Otolaryngol. Head Neck Surg. 75, 1707–1711 (2023).

James, P. et al. Increased rate of listening difficulties in autistic children. J. Commun. Disord. 99, 106252 (2022).

Schelinski, S. & von Kriegstein, K. Brief report: speech-in-noise recognition and the relation to vocal pitch perception in adults with autism spectrum disorder and typical development. J. Autism Dev. Disord. 50, 356–363 (2020).

Peters, R. W., Moore, B. C. J. & Baer, T. Speech reception thresholds in noise with and without spectral and temporal dips for hearing-impaired and normally hearing people. J. Acoust. Soc. Am. 103, 577–587 (1998).

Chevallier, C., Kohls, G., Troiani, V., Brodkin, E. S. & Schultz, R. T. The social motivation theory of autism. Trends Cogn. Sci. 16, 231–239 (2012).

Fletcher-Watson, S., Findlay, J. M., Leekam, S. R. & Benson, V. Rapid detection of person information in a naturalistic scene. Perception 37, 571–583 (2008).

Gliga, T., Elsabbagh, M., Andravizou, A. & Johnson, M. Faces attract infants’ attention in complex displays. Infancy 14, 550–562 (2009).

Rosa Salva, O., Farroni, T., Regolin, L., Vallortigara, G. & Johnson, M. H. The evolution of social orienting: evidence from chicks (Gallus gallus) and human newborns. PLoS One 6, e18802 (2011).

Falck-Ytter, T. & von Hofsten, C. How special is social looking in ASD: a review. Prog. Brain Res. 189, 209–222 (2011).

Klin, A., Jones, W., Schultz, R., Volkmar, F. & Cohen, D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch. Gen. Psychiatry 59, 809–816 (2002).

Dawson, G., Meltzoff, A. N., Osterling, J., Rinaldi, J. & Brown, E. Children with autism fail to orient to naturally occurring social stimuli. J. Autism Dev. Disord. 28, 479–485 (1998).

Gervais, H. et al. Abnormal cortical voice processing in autism. Nat. Neurosci. 7, 801–802 (2004).

Unruh, K. E. et al. Social orienting and attention is influenced by the presence of competing nonsocial information in adolescents with autism. Front. Neurosci. 10, 586 (2016).

Bronzaft, A. L. & McCarthy, D. P. The effect of elevated train noise on reading ability. Environ. Behav. 7, 517–527 (1975).

Stansfeld, S. A. et al. Aircraft and road traffic noise and children’s cognition and health: a cross-national study. Lancet 365, 1942–1949 (2005).

Hygge, S., Evans, G. W. & Bullinger, M. A prospective study of some effects of aircraft noise on cognitive performance in schoolchildren. Psychol. Sci. 13, 469–474 (2002).

Van Hedger, S. C. et al. Of cricket chirps and car horns: the effect of nature sounds on cognitive performance. Psychon. Bull. Rev. 26, 522–530 (2019).

Antshel, K. M. & Russo, N. Autism spectrum disorders and ADHD: overlapping phenomenology, diagnostic issues, and treatment considerations. Curr. Psychiatry Rep. 21, 34 (2019).

Söderlund, G. B. W. & Jobs, E. N. Differences in speech recognition between children with attention deficits and typically developed children disappear when exposed to 65 dB of auditory noise. Front. Psychol. 7, 34 (2016).

Wakabayashi, A., Tojo, Y., Baron-Cohen, S. & Wheelwright, S. The Autism-Spectrum Quotient (AQ) Japanese version: evidence from high-functioning clinical group and normal adults. Jpn. J. Psychol. 75, 78–84 (2004).

Faul, F., Erdfelder, E., Lang, A.-G. & Buchner, A. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191 (2007).

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J. & Clubley, E. The Autism-Spectrum Quotient (AQ): evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 31, 5–17 (2001).

Kessler, R. C. et al. The World Health Organization adult ADHD self-report scale (ASRS): a short screening scale for use in the general population. Psychol. Med. 35, 245–256 (2005).

World Health Organization. Adult ADHD Self-Report Scale v1.1 Screener. https://www.hcp.med.harvard.edu/ncs/asrs.php (2011).

Amano, S., Kondo, T., Sakamoto, S. & Suzuki, Y. NTT-Tohoku University Familiarity-Controlled Word Lists (FW03). National Institute of Informatics. https://doi.org/10.32130/src.FW03 (2006).

Menning, H., Imaizumi, S., Zwitserlood, P. & Pantev, C. Plasticity of the human auditory cortex induced by discrimination learning of non-native, mora-timed contrasts of the Japanese language. Learn. Mem. 9, 253–267 (2002).

Word lists that are controlled for familiarity and phonological balance. http://www.ais.riec.tohoku.ac.jp/lab/wordlist/abstract.html (2001).

Peirce, J. et al. PsychoPy2: experiments in behavior made easy. Behav. Res. Methods 51, 195–203 (2019).

JASP Team. JASP (Version 0.17.1). https://jasp-stats.org/ (2023).

Lee, M. D. & Wagenmakers, E.-J. Bayesian Cognitive Modeling: A Practical Course (Cambridge University Press, 2014).

Behrmann, M. et al. Configural processing in autism and its relationship to face processing. Neuropsychologia 44, 110–129 (2006).

Plaisted, K., Swettenham, J. & Rees, L. Children with autism show local precedence in a divided attention task and global precedence in a selective attention task. J. Child Psychol. Psychiatry 40, 733–742 (1999).

Acknowledgements

Y.T. was supported by JSPS KAKENHI (22J11083). S.I. was supported by JSPS KAKENHI (20K20144, 20H04094, 23K11785).

Author information

Contributions

Y.T. conceived the study, collected and analyzed the data, and drafted the manuscript. S.I. supervised the study, and reviewed and edited the manuscript. All authors approved the final version of the manuscript for submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tsuji, Y., Imaizumi, S. Autistic traits and speech perception in social and non-social noises. Sci Rep 14, 1414 (2024). https://doi.org/10.1038/s41598-024-52050-2
