Abstract

The study presents a novel full model of an industrial camera suitable for robotic manipulator tool center point (TCP) calibration. The authors propose a new solution which employs a full camera model positioned on the effector of an industrial robotic arm. The proposed full camera model simulates the capture of a calibration pattern for use in automated TCP calibration. The study describes an experimental test robot stand for producing a reference data set, the full camera model, the parameters of the generally known camera obscura model, and a comparison of the proposed solution with the camera obscura model. The results are discussed in the context of an innovative approach in which a full camera model assists the TCP calibration process. The results showed that the full camera model achieved greater accuracy, a significant benefit not provided by other state-of-the-art methods. In several cases, the absolute error was up to seven times lower than with the state-of-the-art camera obscura model. The error for small rotations (max. 5\(^\circ \)) and small translations (max. 20 mm) was 3.65 pixels. The results also highlight the applicability of the proposed solution in real-life industrial processes.


Introduction

One of the pillars of Industry 4.0 is the widespread deployment of industrial robots in manufacturing. Mechanical assembly processes are key manufacturing stages, and the use of robots in these processes directly affects product quality by improving production efficiency and assembly performance1.

Industrial robotic manipulators have excellent repeatability and accuracy for precise positioning, but because they are used under intensive operating conditions, they must be repeatedly calibrated during the manufacturing process2. During calibration, the position and orientation of the tool center point (TCP) of a robot arm should be corrected with a highly accurate tracking device3. Numerous methods for calibration procedures have been published. Khaled et al.4 proposed an active disturbance rejection control scheme which calibrated path accuracy in real time. Fares et al.5 applied a sphere fitting algorithm to improve four-point calibration accuracy.

The primary objective of this article is to develop a camera model capable of simulating the positions of anchor points within a calibration pattern as captured from various camera positions and orientations. This model can subsequently be utilized to resolve the inverse problem: determining the camera’s position and orientation based on the locations of the anchor points in the captured image. The accurate determination of the camera’s position can then aid in calibrating the TCP of a robotic arm.

This article presents a full model of an optical camera used in combination with the calibration procedure (Fig. 1). The proposed camera model simulates the image of a calibration pattern captured from a given camera position. The calibration pattern has defined corners and a center point, positioned in the coordinate system of the working plane. The simulated image reproduces the positions of the calibration pattern points as closely as possible to an image captured by a real camera. The proposed camera model can easily be adapted to an effector for calibration of the robot TCP.

The study contributes the following:

1. Design of a full camera model.
2. Comparison with a previously published camera obscura model.
3. Application of a full camera model in calibrating a robotic manipulator’s TCP.

Calibrating robotic manipulators is an important aspect of any industrial robot deployment. The aim of TCP calibration is to determine the relationship between the TCP frame and the working plane6. Calibration is performed using a camera mounted on the robot’s end-effector: the camera captures an image, and the positions of the calibration points are then calculated. However, the calibration procedure requires an inverse problem solver, because the TCP must be calculated from the calibration points captured in the image. The inverse problem is solved iteratively, by searching for the combination of input values (camera position and orientation) for which the calibration point positions in the modelled image are as close as possible to those in the real camera image captured by the robotic tool. A gradient method or differential evolution, for example, can be used as the optimization solver7,8.
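The inverse step can be illustrated with a deliberately simplified forward model. The sketch below is not the full camera model of this article: it assumes a pinhole camera looking straight down at a 100 mm square (points A–E in the plane z = 0), a hypothetical focal length `K` of 2300 px, and only the camera translation (x, y, z) as unknowns. The solver is Gauss-Newton, a gradient-based least-squares method, with a finite-difference Jacobian; all names are illustrative.

```python
# Toy inverse-problem sketch (NOT the article's full camera model).
# Assumptions: pinhole camera looking straight down (-z) at the plane z = 0,
# hypothetical focal length K in pixels, only the translation (x, y, z) unknown.

PATTERN = [(-50.0, -50.0), (50.0, -50.0), (50.0, 50.0), (-50.0, 50.0), (0.0, 0.0)]  # A-E in mm
K = 2300.0  # hypothetical focal length in pixels


def forward(p):
    """Map camera position p = (x, y, z) to 10 image coordinates [u_A, v_A, ..., u_E, v_E]."""
    x, y, z = p
    image = []
    for px, py in PATTERN:
        image.append(K * (px - x) / z)
        image.append(K * (py - y) / z)
    return image


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a s = b with Cramer's rule."""
    d = det3(a)
    solution = []
    for k in range(3):
        mk = [row[:] for row in a]
        for i in range(3):
            mk[i][k] = b[i]  # replace column k with the right-hand side
        solution.append(det3(mk) / d)
    return solution


def inverse(observed, p0, iters=20, rel_step=1e-6):
    """Gauss-Newton: find p such that forward(p) is as close as possible to observed."""
    p = list(p0)
    for _ in range(iters):
        f0 = forward(p)
        r = [o - f for o, f in zip(observed, f0)]  # residuals
        cols = []  # Jacobian columns from forward differences
        for j in range(3):
            q = p[:]
            h = rel_step * max(1.0, abs(p[j]))
            q[j] += h
            cols.append([(fq - fp) / h for fq, fp in zip(forward(q), f0)])
        jtj = [[sum(a * b for a, b in zip(cols[i], cols[j])) for j in range(3)] for i in range(3)]
        jtr = [sum(a * b for a, b in zip(cols[i], r)) for i in range(3)]
        step = solve3(jtj, jtr)  # normal equations (J^T J) step = J^T r
        p = [pi + si for pi, si in zip(p, step)]
        if max(abs(s) for s in step) < 1e-10:
            break
    return p


# Usage: simulate an image at a known pose, then recover the pose from a rough initial guess.
true_pose = [5.0, -3.0, 310.0]
estimate = inverse(forward(true_pose), [0.0, 0.0, 280.0])
```

In the real procedure, `forward` would be the full camera model with six inputs (position and three rotation angles), and the observed coordinates would come from the captured image; Gauss-Newton could equally be replaced by differential evolution when a good initial guess is not available.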

The article is organized as follows: Section "Introduction" introduces the scientific challenge and novelty of the current study; Section "Related studies" summarizes the state-of-the-art; Section "Mathematical model" describes the camera and the full camera model; Section "Experiment" outlines the experiment, reference data set and evaluation criteria; Section "Results" reports the results of the experiment from absolute and relative error perspectives; Section "Discussion" discusses and evaluates the results in the context of the study’s novel contribution; Section "Conclusion" concludes the article and outlines potential future work.

Related studies

Precise robot guidance is a critical requirement for robotic manipulators. Robotic manipulators have inaccurate positioning yet excellent repeatability, which means that any error or inaccuracy in movement is also repeated9. The scientific literature contains many methods for improving precision during robot movement. Table 1 provides a summary of state-of-the-art studies which propose solutions for robot movement and detection of position, robot coordination sensors and camera obscura modelling methods.

Industrial robot manufacturers generally devise calibration procedures based on manual positioning of various types of spikes. To achieve greater precision than manual calibration, or to calibrate robotic arms automatically, additional sensors must be used37. One option is to use a camera to observe manufactured pieces, discover fitting patterns through image processing and then calibrate the robot’s coordinate frame according to these patterns10. A camera can also be used to observe the robotic arm itself and provide visual feedback on its position11.

Another option is to use a 3D sensor, which, in contrast to a 2D camera, provides additional depth information. RGBD cameras, which record depth information for each RGB pixel, are a suitable type12. An example of RGBD camera application is detecting the position of cardboard boxes without any markings13.

For large areas requiring precise positioning, the combination of a global laser and local scanning system can be used14. Chen et al.15 introduced visual reconstructions using a planar laser, two cameras and a 3D orientation board to verify the accuracy of an active vision system’s measurement fields and calibration. Other methods involve the use of visual-tactile sensors17 or optical fibers18.

Accuracy in robot guidance can be improved with various mathematical approaches. Lei et al. applied Levenberg-Marquardt optimization for 3D pose estimation19. The robotic arm itself can also be used for inspection: tabu search can find the optimal base position, and the Lin-Kernighan method can optimize the order of inspection points20. Machine learning methods also provide promising solutions for positioning robotic arms. Eldosoky et al. applied a machine learning algorithm in a detection system for decoration robots that regresses the endpoints of wall bulges and classifies them according to their orientation21. Choudhary et al. introduced self-supervised representation learning networks for accurate spatial guidance22. Gouveia et al. described a smart robotic system which processed spatial data obtained from a 3D scanner and was capable of autonomously picking and placing injection-moulded parts from one conveyor belt to another23. Image processing can also enable automated calibration of robotic arms24. Specialized RGBD cameras allow robots to “see” objects like a human25. Image processing also solves the challenge of grip control in handling moving objects26.

Image processing methods, which are widely used in industrial applications, often employ pinhole camera models27. Table 1 provides a summary of improvements, practical implementations, and calibration and positioning methods for camera obscura models. Camera obscura models assume that the camera’s parameters are correct and have a projection error of almost zero. Because all the camera parameters are known, any computed results are considered true. However, the camera obscura model does not accurately represent camera behavior if an autofocus device is used28. Lens distortion is not a problem with camera obscura models29. Image projection errors can be minimized with a backstepping-based approach that combines the Lipschitz condition with the natural saturation of the inverse tangent function30.

Camera obscura models have many practical applications, such as detecting and avoiding obstacles31, precise solar pointing32 or determining the distance and dimensions of a tracked object33. Camera obscura models can also be used in the calibration process for industrial robots34. Liu et al. devised a self-calibration camera algorithm for an active vision system which detects radial distortion35. Rendón et al. presented a method for positioning robots using camera-space manipulation with a linear camera model36.

Mathematical model

This section presents the optical equations for a thin lens camera and a camera obscura (pinhole camera), and two mathematical models based on these equations. A more complex model describes the thin lens camera, whereas a simplified, state-of-the-art model describes the pinhole camera.

Optical equations

To create the mathematical model of a camera with a thin lens, we used the optical equation

$$\begin{aligned} h'=\frac{f}{a-f}h, \end{aligned}$$(1)

where the focal length is f, the symbol a denotes the distance of an object from the lens, h is the object’s height, and \(h'\) is the image height.
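For readers who want the intermediate step, one standard way to relate h, \(h'\), a and f is to combine the Gaussian thin-lens equation with the lateral magnification. Here \(a'\) denotes the lens-to-image distance (magnitudes only; the sign convention for the inverted image is ignored):

$$\begin{aligned} \frac{1}{f}&=\frac{1}{a}+\frac{1}{a'} \;\Rightarrow\; a'=\frac{af}{a-f},\\ \frac{h'}{h}&=\frac{a'}{a}=\frac{f}{a-f}. \end{aligned}$$

For \(a \gg f\), the ratio \(f/(a-f)\) approaches \(f/a\), which is the pinhole relation with the image distance set to f.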

A pinhole camera (camera obscura) is a simple optical device and the predecessor of cameras with a thin lens. It is a box with a hole in one of its walls: light from outside passes through the hole and projects an image onto the opposite wall.

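The mathematical model of a camera obscura is described by the equation

$$\begin{aligned} h'=\frac{a'}{a}h, \end{aligned}$$(2)

where \(a'\) is the focal distance, i.e. the distance between the hole and the image plane. This simple camera model is popular in many applications because of its simplicity and ease of implementation.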

Model implementation

In this section, we derive a model with a thin lens. This type of model is more complex than a camera obscura, but it takes advantage of the properties of modern cameras. Equation (1) was used to create the mathematical model.

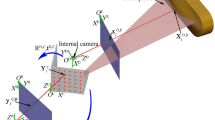

Manufacturers do not usually specify the precise position of the lens; therefore, a displacement L is included in the mathematical model of the full camera. The camera lens can thus be positioned at a point different from the TCP, with both points lying on the camera axis o (see Fig. 3). The distance between these points is denoted L. Replacing the object distance a in Eq. (1) with \(a+L\), we obtain

$$\begin{aligned} h'=\frac{f}{a+L-f}h. \end{aligned}$$(3)

This optical equation is applied to a calibration square of prescribed geometric shape (Fig. 2).

In the mathematical model, the projected points of the calibration square (located in Euclidean space \(\mathbb {R}^3\)) must correspond to points on the camera chip (in the plane \(\mathbb {R}^2\)). The mapping is denoted F and must satisfy (3).

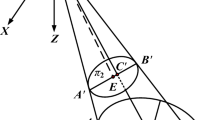

The calibration square contains five points described by

where A, B, C, D and E refer to the points indicated in the diagram (Fig. 2). Points \(S_i^P\) belong to the plane \(\rho _1\) (\(\rho _1: z=0\) in the case described in this paper). To apply the optical Eq. (3) correctly, the calibration square must be projected orthogonally onto the plane \(\rho _2\), which is perpendicular to the camera axis o (defined by the rotation of the camera) and passes through the intersection of o with \(\rho _1\). Figure 3 illustrates the orthogonal projection of a calibration square point onto the perpendicular plane.

The camera lens is positioned at a point shifted from the TCP by the distance L in the direction of the o axis and focused on the point \(Q \in \rho _1 \cap \rho _2\).

To define the plane \(\rho _2\), its normal vector, given by the camera axis o, is required. It is assumed that the initial rotation of the camera is given by the vector \(\vec {v}\). For example, if the camera in its initial position is rotated about the z-axis only, then

If the angles \(\alpha \), \(\beta \) and \(\gamma \), describing the rotations about the x, y and z axes, are known, then vector o can be computed using the rotation matrix

where

For the direction vector o, we have

where \(\vec {v}=[v_1,v_2,v_3]^T\). The general equation of the plane \(\rho _2\) can be derived from the conditions

Figure 4 illustrates the projection of a geometric pattern onto a plane perpendicular to the camera axis.
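The computation of the camera axis can be sketched as follows. Because the composition order of the rotation matrix is not reproduced here, the sketch assumes \(R = R_z(\gamma )R_y(\beta )R_x(\alpha )\) and an initial viewing direction \(\vec {v}=[0,0,-1]^T\) (camera looking down at \(\rho _1\)); both are assumptions for illustration. The plane \(\rho _2\) then follows from its normal o and any point lying on it.

```python
import math

# Sketch: camera axis o = R v from Euler angles, assuming R = Rz(gamma) Ry(beta) Rx(alpha).
# The composition order and the initial direction v are assumptions, not taken from the article.


def rot_x(alpha):
    c, s = math.cos(alpha), math.sin(alpha)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(beta):
    c, s = math.cos(beta), math.sin(beta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(gamma):
    c, s = math.cos(gamma), math.sin(gamma)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def camera_axis(alpha, beta, gamma, v=(0.0, 0.0, -1.0)):
    """Direction vector o of the camera axis; angles in radians, v = initial direction."""
    r = matmul(rot_z(gamma), matmul(rot_y(beta), rot_x(alpha)))
    return matvec(r, list(v))


def plane_rho2(o, q):
    """Coefficients (A, B, C, D) of the plane A x + B y + C z + D = 0 with normal o through q."""
    return (o[0], o[1], o[2], -sum(oi * qi for oi, qi in zip(o, q)))
```

Because rotations preserve length, o has the same norm as \(\vec {v}\); a rotation about the z axis alone leaves a downward-looking axis unchanged, which is why z-axis rotation only rotates the pattern image.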

Each point \(S_i^P \in \rho _1\) can be projected orthogonally onto a point \(S_i^{P'} \in \rho _2\). Using analytical geometry, the orthogonal projection \(S_i^{P'}\) can be written as

where

In optical Eq. (3), let \(a: \mathbb {R}^3 \rightarrow \mathbb {R}\) denote the distance between the TCP with coordinates [x, y, z] and the calibration square (Fig. 5).

To apply (3), a, h and \(h'\) must be calculated for each point of the calibration square. The value of a for the position [x, y, z] can be computed from

Again, we have the condition

to express the coordinates of Q; substituting into (12), we obtain an expression for a with respect to the camera’s position [x, y, z] and its rotation described by matrix R:

where \(R_i\), \(i \in \{1,2,3\}\) denotes the i-th row of matrix R.
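One way to express a in terms of the camera position and R, assuming that Q is the point where the camera axis through the TCP \([x,y,z]^T\) meets \(\rho _1: z=0\), is the following; the exact form of the expression used in the model may differ:

$$\begin{aligned} Q&=[x,y,z]^T+t\,\vec {o},\qquad \vec {o}=R\vec {v},\\ 0&=z+t\,R_3\vec {v}\;\Rightarrow\; t=-\frac{z}{R_3\vec {v}},\\ a&=\Vert Q-[x,y,z]^T\Vert =|t|\,\Vert R\vec {v}\Vert =\frac{|z|\,\Vert \vec {v}\Vert }{|R_3\vec {v}|}. \end{aligned}$$

Only the third row of R enters, and the expression requires \(R_3\vec {v}\ne 0\), i.e. the camera axis must not be parallel to the pattern plane.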

The value of the object’s height h for each point \(S_i^{P'}\) is computed from

$$\begin{aligned} h_i=\Vert S_i^{P'}-Q\Vert . \end{aligned}$$(15)

Substituting the value of (15) into (3), we have

$$\begin{aligned} h'_i=\frac{f}{a+L-f}\Vert S_i^{P'}-Q\Vert . \end{aligned}$$(16)

It is worth mentioning that \(h'_i\) is the height of the image on the camera chip and is therefore measured in the chip plane \(\mathbb {R}^2\); thus

$$\begin{aligned} h'_i=\Vert S_i^{P''}-Q''\Vert , \end{aligned}$$(17)

where \(Q'' \in \mathbb {R}^2\) is the center of the camera chip’s display plane (here, \(Q''=[0,0]\)) and \(S_i^{P''}\) is the image of \(S_i^{P'}\) on the camera chip.
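Putting the geometric steps together, the sketch below computes Q, a, the projected points \(S_i^{P'}\), the heights \(h_i=\Vert S_i^{P'}-Q\Vert \) and the image heights \(h'_i\) for a camera at a given TCP position with axis direction o. It assumes \(\rho _1: z=0\), a hypothetical 100 mm pattern centred at the origin, f = 8 mm and L = 9.5 mm as in the experiment, and the form \(h'=fh/(a+L-f)\) for the thin-lens relation with displacement L (the thin-lens counterpart of Eq. (21)). Mapping \(h'_i\) to chip coordinates with the in-plane direction vectors is omitted.

```python
import math

# Sketch of the per-point quantities of the full camera model, under stated assumptions:
# rho_1 is the plane z = 0, Q is where the camera axis meets rho_1, rho_2 passes through Q
# with normal o, and the thin-lens relation is taken as h' = f h / (a + L - f).
# Pattern coordinates (100 mm square, centre E at the origin) are hypothetical.

PATTERN = {"A": (-50.0, -50.0, 0.0), "B": (50.0, -50.0, 0.0),
           "C": (50.0, 50.0, 0.0), "D": (-50.0, 50.0, 0.0), "E": (0.0, 0.0, 0.0)}


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    return math.sqrt(dot(u, u))


def axis_point_q(tcp, o):
    """Intersection Q of the camera axis (through tcp, direction o) with z = 0."""
    if abs(o[2]) < 1e-12:
        raise ValueError("camera axis is parallel to the pattern plane")
    t = -tcp[2] / o[2]
    return [p + t * d for p, d in zip(tcp, o)]


def project_to_rho2(s, q, o):
    """Orthogonal projection of point s onto the plane through q with normal o."""
    k = dot(sub(s, q), o) / dot(o, o)
    return [si - k * oi for si, oi in zip(s, o)]


def image_heights(tcp, o, f=8.0, L=9.5):
    """Return the distance a and, per anchor point, the pair (h_i, h'_i) in mm."""
    q = axis_point_q(tcp, o)
    a = norm(sub(q, tcp))
    heights = {}
    for name, s in PATTERN.items():
        h = norm(sub(project_to_rho2(list(s), q, o), q))  # h_i = ||S_i' - Q||
        heights[name] = (h, f * h / (a + L - f))  # assumed thin-lens form with displacement L
    return a, heights
```

For a camera 300 mm above the pattern centre looking straight down, Q is the origin, a = 300 mm, and every corner has \(h_i = 50\sqrt{2}\) mm; tilting o makes the corner heights differ, which is the source of the perspective effects discussed in the Results.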

Finally, we obtain the function \(F:\mathbb {R}^6 \rightarrow \mathbb {R}^{10}\), where

The vectors

can be obtained using the orthogonal direction vectors of the camera chip’s display plane.

It is important to note that for a static camera, the relationship between the size of an object and the size of its image is linear. In contrast, in our proposed model with a non-stationary camera, the function F, which describes the image on the camera sensor as a function of the camera’s position and orientation, is nonlinear. This nonlinearity arises because the angles defining the camera’s orientation are not small enough to permit linearization of the model.

Implementation of reference model

The current study uses a camera obscura as the reference model. A camera obscura is typically defined as a linear system described by matrix multiplication38,39. However, this type of model is used only for static cameras and changing images. The camera investigated in the current study is not static; therefore, we derive the camera obscura model from the general optical equations.

As with the full camera model, the distance between the TCP and the lens is denoted L for the camera obscura, giving the equation

$$\begin{aligned} h'=\frac{a'}{a+L}h. \end{aligned}$$(20)

For the camera obscura model, we also need to derive a vector function \(F:\mathbb {R}^6 \rightarrow \mathbb {R}^{10}\) to describe the relationship between the calibration square’s image and the camera’s position and rotation. To formulate the mathematical model of the camera obscura, it is again necessary to describe the projection of the calibration square onto the camera chip. We obtain the camera obscura equations as follows:

1. Determine the plane \(\rho _2\) that is perpendicular to the camera axis and intersects the calibration pattern plane on the camera axis (Fig. 4 and Eq. (9)).
2. Obtain an orthogonal projection of the calibration square onto the plane \(\rho _2\) (Fig. 4 and Eq. (10)).
3. Calculate the object distance a (Eqs. (12)–(14)).
4. Calculate the image height \(h'\) from the camera obscura optical Eqs. (15) and (20). This results in:

$$\begin{aligned} h'_i=\frac{a'}{a+L}h_i=\frac{a'}{a+L}\Vert S_i^{P'}-Q\Vert , \end{aligned}$$(21)

where \(a'\) is constant.
5. Obtain the mathematical model of the camera obscura by substituting Eq. (21) into (18).

The camera obscura model thus uses the function F from Eq. (18), except that \(h'\) is obtained from Eq. (21). Because the derivation of F is standard, it is not presented here in detail.

Experiment

The proposed model was set up in Matlab, and its functionality and accuracy were tested with experimental measurements and a comparison to previously published camera obscura models.

Experimental setup

To evaluate the functionality of the proposed camera model, we designed the experimental setup illustrated in the scheme in Fig. 6.

A calibrated robotic arm and camera were first set to a known position, and an image of the calibration pattern on the work plane was captured. The image was processed in LabVIEW to determine the positions of the pattern’s anchor points. The image processing procedure comprised image calibration to eliminate radial and tangential distortion, as well as the detection of anchor points. Radial and tangential distortions were removed using a camera calibration determined before the reference data set was captured, in accordance with the National Instruments calibration procedure. The x and y coordinates of the anchor points (A–E), the calibrated robotic arm position (and orientation) and the camera image formed a reference data set.

The reference data set was then used as input for the camera models. In the experiment, we tested and evaluated a commonly used, state-of-the-art camera obscura model and a mathematical model of a full camera, and then compared the results to determine any difference in behaviour. The mathematical models used the robotic arm’s reference position to estimate the coordinates of anchor points A–E. These coordinates were then compared with the real measurements from the camera in the evaluation block.

Reference data

For evaluation purposes, we assembled a test robot stand and experimentally measured a reference data set.

Figure 7 shows a photo of the test robot stand. This experimental setup consisted of a Staubli TX2-60 industrial 6-axis robotic manipulator (controller CS9 s8.12.2-Cs9_BS2561)40 and a Basler acA2500-14gm camera41 with a Computar M0814-MP2 2/3” 8 mm f1.4 lens42, a work table and additional hardware to permit manual calibration.

The calibration procedure for the test robot stand consisted of two consecutive steps: rough calibration and fine calibration. Rough calibration involved a three-point TCP calibration method using spikes, designed in cooperation with engineers from Staubli. Fine calibration involved precisely positioning the pattern at the center of the camera image and setting the camera axis perpendicular to the calibration pattern plane. The pattern was then replaced with a mirror, and the camera was aligned so that its reflection appeared exactly in the center of the lens and in the middle of the image.

The accuracy of robot positioning was estimated at ±0.1 mm. An error of 1 pixel in detecting the anchor points, corresponding to approximately 0.1 mm given the camera’s resolution and the calibration pattern size, was also accounted for. The total error, estimated as the Euclidean norm of these contributions, was 0.14 mm.
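The stated total is consistent with combining two contributions of 0.1 mm each (robot positioning and anchor-point detection expressed in millimeters) as a Euclidean, root-sum-square norm, which is appropriate when the contributions are independent:

$$\begin{aligned} e_{\textrm{total}}=\sqrt{e_{\textrm{robot}}^2+e_{\textrm{det}}^2}=\sqrt{(0.1\,\textrm{mm})^2+(0.1\,\textrm{mm})^2}\approx 0.141\,\textrm{mm}. \end{aligned}$$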

The reference data set consisted of 100 positions and TCP orientations plus a base position. The camera’s initial placement was 300 mm above the center of the geometric figure. Figure 8 illustrates the TCP and camera coordinate systems. The robotic arm was progressively moved along the x, y and z axes: from −50 mm to 50 mm on the y and z axes and from −20 mm to 20 mm on the x axis. Movement along the x axis was tested over a limited range because the camera’s field of view in the horizontal direction was limited. The data set also contained various orientations at the base point, from −20\(^\circ \) to 20\(^\circ \). The range of rotation about the y axis was limited to −20\(^\circ \) to 10\(^\circ \) due to possible collision with the robot’s fourth axis. The reference data set also contained several additional measurements combining rotation and translation of the TCP.

Table 2 lists the parameter values used in the experiment. The parameters, including focal length (f) and camera resolution, were specified by the manufacturer of the camera. Additionally, the calibration square was precisely fabricated by an industrial partner. The sole parameter determined through experimental methods was the distance (L). A detailed sensitivity analysis of the parameter L is discussed in Section "Discussion".

Evaluation criteria

The study applied three evaluation criteria to compare the results from the mathematical models of the full camera and camera obscura. The first criterion is the absolute error in pixels, i.e. the distance between the anchor point positions obtained from a camera model and the reference positions; this figure is independent of the calibration pattern size and camera properties. It was calculated as the average Euclidean distance between the real anchor point positions and the anchor point positions given by the mathematical model:

$$\begin{aligned} \text{Abs. Err}=\frac{1}{5}\sum _{P\in \{A,B,C,D,E\}}\Vert P-P_{\textrm{ref}}\Vert , \end{aligned}$$(22)

where A–E are anchor points, \({\textrm{ref}}\) are reference anchor points, and Abs. Err is the absolute error in pixels.

The second criterion is the absolute error in millimeters produced by the test robot stand with the specific pattern size and camera model. The absolute error in millimeters relates directly to the calibration pattern size. The calibration pattern, with an edge length of 100 mm, was printed on paper with a laser printer. To obtain the absolute error in millimeters, a projective transformation was applied:

$$\begin{aligned} \text{Abs. Err}_{\textrm{mm}}=\frac{1}{5}\sum _{P\in \{A,B,C,D,E\}}\Vert \textbf{T}(P)-\textbf{T}(P_{\textrm{ref}})\Vert , \end{aligned}$$(23)

where \(\textbf{T}\) is a projective transformation which transfers the image plane in pixels to the calibration pattern plane in millimeters.
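The first two criteria can be sketched as follows. The projective transformation \(\textbf{T}\) is represented as a 3×3 homography matrix applied in homogeneous coordinates and is assumed to be known already (how it is estimated is not specified here); the anchor-point dictionaries and the numbers in the usage example are made up for illustration.

```python
import math

# Sketch of the first two evaluation criteria. Anchor points are dicts {"A": (u, v), ...};
# T is a 3x3 projective transformation (homography) from image pixels to pattern millimetres.
ANCHORS = ("A", "B", "C", "D", "E")


def mean_distance(points, reference):
    """Average Euclidean distance over the anchor points A-E."""
    return sum(math.dist(points[k], reference[k]) for k in ANCHORS) / len(ANCHORS)


def apply_projective(T, p):
    """Map an image point (u, v) through T using homogeneous coordinates."""
    u, v = p
    x = T[0][0] * u + T[0][1] * v + T[0][2]
    y = T[1][0] * u + T[1][1] * v + T[1][2]
    w = T[2][0] * u + T[2][1] * v + T[2][2]
    return (x / w, y / w)


def abs_err_px(model, reference):
    """Absolute error in pixels: mean distance between model and reference anchor points."""
    return mean_distance(model, reference)


def abs_err_mm(model, reference, T):
    """Absolute error in millimetres: both point sets are mapped through T first."""
    def to_mm(points):
        return {k: apply_projective(T, points[k]) for k in ANCHORS}
    return mean_distance(to_mm(model), to_mm(reference))


# Usage with made-up numbers: every model point is off by (3, 4) px, and T is a pure
# scaling of 0.1 mm per pixel, so the errors are 5 px and 0.5 mm.
reference = {"A": (0, 0), "B": (1000, 0), "C": (1000, 1000), "D": (0, 1000), "E": (500, 500)}
model = {k: (u + 3, v + 4) for k, (u, v) in reference.items()}
T = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 1.0]]
```

With a general homography the millimetre error is no longer a fixed multiple of the pixel error, because the scale varies across the image; this is why the two criteria are reported separately.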

The third and final evaluation criterion is the relative error, which relates the absolute error to the diagonal distance of the calibration pattern; this figure is independent of the pattern size and camera model. The relative error was calculated from

Results

This section discusses the results of the comparison between a commonly used camera obscura model and the proposed full camera model. Both models are compared to a reference real camera solution.

Figure 9 compares the overall accuracies of the models. The box plots indicate absolute errors in millimeters. Figure 9A compares the absolute errors in millimeters for the entire dataset, which includes the reference data and various translations, rotations and combinations of translation and rotation. Figure 9A shows a significantly lower absolute error for the full camera model than for the camera obscura model. For calibration of the robotic manipulator, we assume that the operator manually places the effector in the approximate desired position, deviating from it by no more than 20 mm in translation and 5\(^\circ \) in rotation on each axis. Figure 9B compares the camera obscura model and the full camera model for small translations and rotations. Notably, the median absolute error of the full camera model is approximately seven times lower than that of the camera obscura model.

The box plots in Fig. 10 indicate the absolute errors in millimeters for each subset. The first two box plots (Translation) indicate the absolute error in millimeters for robotic arm translation only. The difference between the absolute errors for translation was significant, with the full camera model producing an error six times lower than the camera obscura model. The two box plots in the middle indicate the absolute errors for TCP rotation. Here the improvement of the full camera model was less pronounced than for translation, but its error was still lower than that of the camera obscura model. Similar results were obtained for the combination of translation and rotation (box plots Translation & Rotation), where the absolute error of the full camera model was 1.82 times lower.

Table 3 summarizes the results for the camera obscura and full camera models, indicating averages for various input data sets. The first row states the results for the entire data set: the full camera model produced absolute and relative errors approximately 2.65 times lower than the camera obscura model. The second row indicates the results for small rotation and translation. As mentioned above, we assumed that an operator manually navigates the robotic arm near the desired TCP during the calibration process. For small rotation and translation, the full camera model produced errors 5 times lower than the camera obscura model.

The remaining rows in Table 3 state the results for the full camera and camera obscura models under specific conditions. The next rows indicate the errors for translation in specific directions; translation generally produced an error of approximately 4 pixels with the full camera model and approximately 19 pixels with the camera obscura model. Regarding rotation, the full camera model behaved differently for rotation about the z axis than for rotation about the other axes: z-axis rotation produced a very low error of 2.71 pixels, while rotation about the x and y axes produced an absolute error of approximately 25 pixels. The causes of this behaviour are discussed in Section "Discussion". The last row of results in the table shows the errors for translation, rotation and concurrent rotation and translation. These results are consistent with the properties mentioned above. The translation error produced by the full camera model (4.12 pixels) is lower than that of the camera obscura model. The errors for rotation and for concurrent translation and rotation are greater than those for translation; however, the full camera model still produced better results than the camera obscura model.

Figure 11 graphically compares the average absolute errors of the two camera models, clearly indicating a lower absolute error (in pixels) produced by the full camera model in every case.

Figure 12 graphs the absolute errors in pixels for translation, indicating that the absolute error was lower with the full camera model than with the camera obscura model. The error produced by the camera obscura model is largely independent of translation, while the full camera model produced greater absolute errors for larger translations and lower errors for smaller ones.

Figure 13 plots the absolute errors in pixels for translation in three dimensions. Figure 13a and b respectively indicate the absolute errors produced by the camera obscura model and the full camera model. The absolute errors on the x and y axes are roughly symmetrical, indicating that the errors produced do not depend on the direction of movement (left or right). The absolute error on the z axis is asymmetrical, becoming lower as the camera moved nearer to the pattern (the image of the calibration pattern is larger) and larger when the camera moved away (the image of the calibration pattern is smaller). With the full camera model, the points with the smallest absolute error are concentrated near the base point, i.e. at very small distances from the zero position.

Figure 14 plots the absolute errors in pixels for rotations in three dimensions. Figure 14a and b respectively indicate the absolute errors produced by the camera obscura model and the full camera model. Both camera models produced a similar distribution of absolute errors, but the full camera model’s absolute error was lower. Both algorithms produced the lowest absolute error for z-axis rotation. In this case, the calibration pattern image was affected only by a rotation, whereas rotation about the x and y axes subjected the calibration pattern image to a projective transformation, and the resulting absolute error was greater.

Discussion

The study raises several discussion questions. The first question concerns the accuracy of the presented results. The full camera model was compared with the widely recognized camera obscura model, and the input data set was obtained experimentally from a real robot and camera equipment. To obtain results that were as accurate as possible, the robotic arm was calibrated with an advanced procedure. The total error in the input data set was estimated at 0.14 mm. This estimated error was smaller than the errors produced by both the camera obscura and full camera models; the input data set was therefore suitable as a reference data set for comparing the camera models.

The next question concerns the applicability of the proposed full camera model. The study used a test robot stand and a 100 mm calibration pattern. Pilot testing indicated an average absolute error of 0.3 mm to 0.7 mm. The absolute error in pixels is generally independent of the camera type and calibration pattern size. If a calibration pattern ten times smaller were used in combination with a telephoto lens, the absolute error would therefore be expected to fall in the range of 0.03 mm to 0.07 mm, which is sufficient for current robotic manipulators.

The final question for discussion concerns the inaccuracy encountered in rotation about the x and y axes. The full camera model expects the TCP to be in the center of the lens. The position of the sensing element was also required, but unfortunately, camera manufacturers do not specify the parameter L, so it had to be determined experimentally. To conduct a sensitivity analysis of the parameter L, we estimated the derivative of the absolute error (in millimeters) with respect to L using the finite difference method, as detailed in Equation (23). Our numerical experiments revealed that at \(L=9.5\) mm, the computed average derivative is approximately 0.0024 mm/mm. This suggests that the selected value of L is near-optimal. Deviations from \(L=9.5\) mm resulted in a marked increase in the magnitude of the derivative, indicating heightened sensitivity to changes in L.
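A central difference is one simple way to carry out such a sensitivity analysis; the scheme and step size actually used are not stated, so both are assumptions here. In the sketch, `err` stands for a hypothetical callable that returns the average absolute error in millimeters over the data set for a given L; the toy function is a smooth stand-in, not data from the study.

```python
def central_difference(err, L, step=0.05):
    """Approximate d err / d L at L with a central difference (L and step in mm)."""
    return (err(L + step) - err(L - step)) / (2.0 * step)


def sensitivity_scan(err, candidates, step=0.05):
    """Return (L, derivative) pairs so that flat regions of err(L) can be located."""
    return [(L, central_difference(err, L, step)) for L in candidates]


def toy_mean_abs_err_mm(L):
    """Hypothetical stand-in for the mean absolute error (mm); NOT data from the study."""
    return 0.3 + 0.002 * (L - 9.5) ** 2


for L, d in sensitivity_scan(toy_mean_abs_err_mm, [8.5, 9.0, 9.5, 10.0, 10.5]):
    print(f"L = {L:4.1f} mm  d(err)/dL = {d:+.5f}")
```

With a real error function, each call to `err` would re-run the full camera model over the whole reference data set; a value of L where the derivative is close to zero, with larger magnitudes on either side, indicates a near-optimal choice.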

An important consideration of the proposed solution is computational cost. Both algorithms were executed in Matlab on a Dell Inspiron 7306 2-in-1 computer with a 2.4 GHz i5-1135G7 processor, whose computational power is similar to that of the target platform, an industrial computer. The entire calculation for the full camera model is a long mathematical expression, which could easily be optimized (and parallelized) and implemented in another programming language such as C++, C# or a specific robot programming language. Table 4 compares the computational costs of the camera obscura and full camera models. The computational cost of the full camera model is significantly higher than that of the camera obscura model. Although the calibration procedure is iterative, it requires only approximately 100–1000 iterations of the full camera model. The full camera model is therefore suitable for industrial processes, because an industrial computer is able to process millions of numerical operations in hundreds of milliseconds, and especially because a robot is expected to be calibrated at least once per day, usually after warming up.

Table 5 summarizes the overall characteristics of the camera models. The comparison clearly shows that the main advantage of the full camera model over the camera obscura model is its greater precision. Implementation of both models is simple. The full camera model requires the calculation of a single lengthy mathematical expression, but this could easily be optimized. The camera obscura model involves the calculation of a sequence of mathematical expressions. The computational cost of the full camera model is higher than that of the camera obscura model; however, it is acceptable for both. The full camera model offers two further advantages: variable image distance and variable focal length. The camera obscura has a fixed image distance, and its focal distance \(a'\) is considered constant.

Conclusion

This study proposed a full mathematical model of an industrial camera and experimentally measured a reference data set to compare it with the well-known camera obscura model.

The experimental results showed that the proposed full camera model produced a significantly lower absolute error than the camera obscura model: approximately 2.65 times lower overall, and up to 5.38 times lower for small translations and rotations. The novelty of the proposed solution was discussed in relation to other state-of-the-art methods.

Future studies will test the hypothesis that the proposed full camera model achieves a lower absolute error with smaller calibration patterns and a telephoto lens. Another potential direction is applying the full camera model to precise calibration in a real industrial application.

Data availability

The data supporting the findings of this study are available upon request for further analysis and validation. Please contact Jaromir Konecny at jaromir.konecny@vsb.cz to request access to the data.

References

Jiang, Y., Huang, Z., Yang, B. & Yang, W. A review of robotic assembly strategies for the full operation procedure: Planning, execution and evaluation. Robot. Comput.-Integr. Manuf. 78, 102366. https://doi.org/10.1016/j.rcim.2022.102366 (2022).

Bottin, M., Rosati, G. & Boschetti, G. Fixed point calibration of an industrial robot. 215–216 (2018).

Balanji, H., Turgut, A. & Tunc, L. A novel vision-based calibration framework for industrial robotic manipulators. Robot. Comput.-Integr. Manuf. 73, 102248 (2022).

Khaled, T., Akhrif, O. & Bonev, I. Dynamic path correction of an industrial robot using a distance sensor and an ADRC controller. IEEE/ASME Trans. Mechatron. 26, 1646–1656 (2021).

Fares, F., Souifi, H., Bouslimani, Y. & Ghribi, M. Tool center point calibration method for an industrial robots based on spheres fitting method (2021).

Liu, Z. et al. High precision calibration for 3D vision-guided robot system. IEEE Trans. Ind. Electron. (2022).

Nocedal, J. & Wright, S. J. Numerical Optimization 2nd edn. (Springer, 2006).

Price, K., Storn, R. M. & Lampinen, J. A. Differential Evolution: A Practical Approach to Global Optimization (Natural Computing Series) (Springer-Verlag, 2005).

Konecny, J., Bailova, M., Beremlijski, P., Prauzek, M. & Martinek, R. Adjusting products with compensatory elements using a digital twin: Model and methodology. PLoS ONE 18, e0279988 (2023).

Sun, P., Hu, Z. & Pan, J. A general robotic framework for automated cloth assembly. In 2019 IEEE 4th International Conference on Advanced Robotics and Mechatronics (ICARM 2019), 47–52 (2019).

Kim, H.-J., Kawamura, A., Nishioka, Y. & Kawamura, S. Mechanical design and control of inflatable robotic arms for high positioning accuracy. Adv. Robot. 32, 89–104. https://doi.org/10.1080/01691864.2017.1405845 (2017).

Tadic, V. et al. Perspectives of RealSense and ZED depth sensors for robotic vision applications. Machines https://doi.org/10.3390/machines10030183 (2022).

Medjram, S., Brethe, J.-F. & Benali, K. Markerless vision-based one cardboard box grasping using dual arm robot. Multimedia Tools Appl. 79, 22617–22633. https://doi.org/10.1007/s11042-020-08996-2 (2020).

Zhou, Z., Liu, W., Wang, Y., Yue, Y. & Zhang, J. An accurate calibration method of a combined measurement system for large-sized components. Meas. Sci. Technol. https://doi.org/10.1088/1361-6501/ac7778 (2022).

Chen, R. et al. Precision analysis model and experimentation of vision reconstruction with two cameras and 3D orientation reference. Sci. Rep. https://doi.org/10.1038/s41598-021-83390-y (2021).

Li, F., Jiang, Y. & Li, T. A laser-guided solution to manipulate mobile robot arm terminals within a large workspace. IEEE/ASME Trans. Mechatron. 26, 2676–2687. https://doi.org/10.1109/TMECH.2020.3044461 (2021).

Ji, J., Liu, Y. & Ma, H. Model-based 3D contact geometry perception for visual tactile sensor. Sensors https://doi.org/10.3390/s22176470 (2022).

Guolu, Y., Zhou, X., Rui, J., Ming, D. & Tao, Z. Optical fiber distributed three-dimensional shape sensing technology based on optical frequency-domain reflectometer. Acta Optica Sinica https://doi.org/10.3788/AOS202242.0106002 (2022).

Lei, H., Zhou, F. & Zhuang, C. Multi-stage 3D pose estimation method of robot arm based on RGB image. In 2021 7th International Conference on Control, Automation and Robotics (ICCAR) 84–88 (IEEE, 2021). https://doi.org/10.1109/ICCAR52225.2021.9463454.

Gueta, L. B., Chiba, R., Ota, J., Arai, T. & Ueyama, T. A practical and integrated method to optimize a manipulator-based inspection system. In 2007 IEEE International Conference on Robotics and Biomimetics (ROBIO) 1911–1918 (IEEE, 2007). https://doi.org/10.1109/ROBIO.2007.4522459.

Eldosoky, M. A., Zeng, F., Jiang, X. & Ge, S. S. Deep transfer learning for wall bulge endpoints regression for autonomous decoration robots. IEEE Access 10, 73945–73955. https://doi.org/10.1109/ACCESS.2022.3190404 (2022).

Choudhary, R., Walambe, R. & Kotecha, K. Spatial and temporal features unified self-supervised representation learning networks. Robot. Auton. Syst. https://doi.org/10.1016/j.robot.2022.104256 (2022).

Gouveia, E. et al. Smart autonomous part displacement system based on point cloud segmentation. In Proceedings of the 19th International Conference on Informatics in Control, Automation and Robotics 549–554 (SCITEPRESS - Science and Technology Publications, 2022). https://doi.org/10.5220/0011353100003271.

Theoharatos, C., Kastaniotis, D., Besiris, D. & Fragoulis, N. Vision-based guidance of a robotic arm for object handling operations - the white’r vision framework (2016).

Apriaskar, E., Fahmizal & Fauzi, M. R. Robotic technology towards industry 4.0. J. Phys. Conf. Ser. https://doi.org/10.1088/1742-6596/1444/1/012030 (2020).

Wen, B.-J., Syu, K.-C., Kao, C.-H. & Hsieh, W.-H. Dynamic proportional-fuzzy grip control for robot arm by two-dimensional vision sensing method. J. Intell. Fuzzy Syst. 36, 985–998. https://doi.org/10.3233/JIFS-169874 (2019).

Stodola, M. Monocular kinematics based on geometric algebras. In Modelling and Simulation for Autonomous Systems 121–129 (Springer International Publishing, 2019). https://doi.org/10.1007/978-3-030-14984-0_10.

Ricolfe-Viala, C. & Esparza, A. The influence of autofocus lenses in the camera calibration process. IEEE Trans. Instrum. Meas. 70, 1–15. https://doi.org/10.1109/TIM.2021.3055793 (2021).

Ricolfe-Viala, C. & Sánchez-Salmerón, A.-J. Using the camera pin-hole model restrictions to calibrate the lens distortion model. Opt. Laser Technol. 43, 996–1005. https://doi.org/10.1016/j.optlastec.2011.01.006 (2011).

Lyu, Y., Lai, G., Chen, C. & Zhang, Y. Vision-based adaptive neural positioning control of quadrotor aerial robot. IEEE Access 7, 75018–75031. https://doi.org/10.1109/ACCESS.2019.2920716 (2019).

Cheng, L. & Wu, G. Obstacles detection and depth estimation from monocular vision for inspection robot of high voltage transmission line. Clust. Comput. 22, 2611–2627. https://doi.org/10.1007/s10586-017-1356-8 (2019).

Fontani, D., Marotta, G., Francini, F., Jafrancesco, D. & Sansoni, P. Pinhole camera sun finders. In Proceedings of the ISES Solar World Congress 2019 1–9 (International Solar Energy Society, 2019). https://doi.org/10.18086/swc.2019.02.01.

Koirala, A., Wang, Z., Walsh, K. & McCarthy, C. Fruit sizing in-field using a mobile app. Acta Hortic. 12, 129–136. https://doi.org/10.17660/ActaHortic.2019.1244.20 (2019).

Novak, P., Stoszek, S. & Vyskocil, J. Calibrating industrial robots with absolute position tracking system. In 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA) 1187–1190 (IEEE, 2020). https://doi.org/10.1109/ETFA46521.2020.9212169.

Liu, D. & Liao, G. The camera calibration approach of pipeline detecting robot. In 2009 WASE International Conference on Information Engineering, vol. 2, 218–221, https://doi.org/10.1109/ICIE.2009.264 (2009).

Rendón-Mancha, J. M., Cárdenas, A., García, M. A., González-Galván, E. & Lara, B. Robot positioning using camera-space manipulation with a linear camera model. IEEE Trans. Rob. 26, 726–733. https://doi.org/10.1109/TRO.2010.2050518 (2010).

Jiang, J., Luo, X., Luo, Q., Qiao, L. & Li, M. An overview of hand-eye calibration. Int. J. Adv. Manuf. Technol. 119, 77–97 (2022).

Xu, G. & Zhang, Z. Epipolar Geometry in Stereo, Motion and Object Recognition (Springer, 1996).

Chen, Y., Chen, Y. & Wang, G. Bundle adjustment revisited. Preprint at https://doi.org/10.48550/arXiv.1912.03858 (2019).

Staubli. Staubli (2022).

Basler. Basler ace aca2500-14gm - area scan camera (2022).

Basler. Computar lens m0814-mp2 f1.4 f8mm 2/3” - lens (2022).

Acknowledgements

This work was supported by the project SP2023/009, “Development of algorithms and systems for control, measurement and safety applications IX”, of the Student Grant System, VSB-TU Ostrava. The work was partially supported by project SGS No. SP2023/011 and SGS No. SP2023/067, VŠB-Technical University of Ostrava, Czech Republic. This article has been produced with the financial support of the European Union under the REFRESH – Research Excellence For REgion Sustainability and High-tech Industries project number CZ.10.03.01/00/22_003/0000048 via the Operational Programme Just Transition.

Author information

Contributions

Ja.K.: conceptualization, writing–original draft preparation, supervision, visualization, experiments. P.B.: writing–original draft preparation, experiments. M.B.: writing–original draft preparation, experiments. Z.M.: writing–original draft preparation, resources. Ji.K.: supervision, funding acquisition. M.P.: writing–original draft preparation, supervision.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Konecny, J., Beremlijski, P., Bailova, M. et al. Industrial camera model positioned on an effector for automated tool center point calibration. Sci Rep 14, 323 (2024). https://doi.org/10.1038/s41598-023-51011-5

DOI: https://doi.org/10.1038/s41598-023-51011-5
