Abstract

It is essential to predict carbon prices precisely in order to reduce CO2 emissions and mitigate global warming. As a solution to the limitations of a single machine learning model that has insufficient forecasting capability in the carbon price prediction problem, a carbon price prediction model (GWO–XGBOOST–CEEMDAN) based on the combination of grey wolf optimizer (GWO), extreme gradient boosting (XGBOOST), and complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) is put forward in this paper. First, a random forest (RF) method is employed to screen the primary carbon price indicators and determine the main influencing factors. Second, the GWO–XGBOOST model is established, and the GWO algorithm is utilized to optimize the XGBOOST model parameters. Finally, the residual series of the GWO–XGBOOST model are decomposed and corrected using the CEEMDAN method to produce the GWO–XGBOOST–CEEMDAN model. Three carbon emission trading markets, Guangdong, Hubei, and Fujian, were experimentally predicted to verify the model’s validity. Based on the experimental results, it has been demonstrated that the proposed hybrid model has enhanced prediction precision compared to the comparison model, providing an effective experimental method for the prediction of future carbon prices.

Similar content being viewed by others

Introduction

Climate change has evolved into a formidable menace to the survival of humanity in the twenty-first century. Greenhouse gases are considered a major factor contributing to global warming1. To cope with the global warming crisis, the international community has actively reduced carbon emissions by formulating climate policies and other measures. Among them, the European Emissions Trading System (EU-ETS) was implemented in 2005, reducing carbon emissions and energy consumption2. Furthermore, China plays a significant role in international climate protection as one of the top carbon emitters worldwide. China has implemented eight carbon trading pilots in various regions, namely Beijing (2013), Shanghai (2013), Guangdong (2013), Tianjin (2013), Shenzhen (2013), Chongqing (2014), Hubei (2014), and Fujian (2016), in order to reduce global emissions3.

Carbon trading has emerged as an emerging financial industry. A carbon price reflects fluctuations in supply and demand for carbon energy within the carbon emissions market, where carbon energy can be traded as a commodity4. Because of the uncertainty of the internal mechanism and external factors, carbon prices demonstrate nonlinear and non-stationary features5,6. The risks associated with carbon trading are greater than those associated with traditional financial products. Accurate carbon price forecasting not only helps governments grasp the changes in market conditions and make reliable decisions, but also helps enterprises and investors grasp the characteristics of carbon prices. This will make sensible resource allocations and realize the value-added of carbon assets. As a result, it is crucial to establish a system that is stable and effective for the research of carbon prices.

In accordance with the previous literature review, carbon price research can be categorized into two classifications: models based on historical data7,8,9 and models based on influencing factors10,11,12,13.

Grounded on historical data, carbon price forecasting methods can be classified into three categories: statistical and econometric methods, artificial intelligence (AI), and integration methods.

In the past, statistical and econometric methods were extensively employed for forecasting carbon prices as classical time series forecasting methods. Main statistical methods are the autoregressive integrated moving average model (ARIMA)14, generalized autoregressive conditional heteroskedasticity model (GARCH)15, gray model (GM)16, etc. For instance, Carolina et al. (2013) employed an ARIMA model in order to forecast carbon prices, ultimately achieving more accurate predictive outcomes17. According to Dutta (2018), an exponential GARCH model was used for forecasting carbon price volatility, and outliers were processed to improve accuracy18. Under the assumption of linearity, the statistical and econometric methods perform well for short-term forecasting, but when forecasting nonlinear, non-stationary time series of carbon prices, the prediction accuracy is not satisfactory19.

As a result, AI that does not require linear assumptions is broadly utilized across different sectors. For example, credit risk prediction20, disease treatment21, and traffic congestion22,23. For carbon price prediction, least squares support vector machines (LSSVM) and artificial neural networks (ANN) are commonly used. Using 1074 daily carbon price results, Atsalakis (2016) developed a neural network (NN) model to predict time series. ANN was found to be the most effective method for predicting carbon prices based on the final results24. Zhu et al. (2016) introduced an adaptive multiscale integrated learning approach grounded in LSSVM to effectively capture the non-stationary and non-linear attributes of carbon prices. The findings demonstrated that their proposed model surpassed the performance of the ARIMA and GARCH models25. Despite the fact that AI exceeds traditional statistical models in forecasting non-linear and non-stationary data, a single AI model fails to possess sufficient forecasting stability and does not meet researchers’ expectations for accurate carbon price predictions across different markets26.

Given the constraints of conventional statistical approaches in handling non-stationary feature data and the shortcomings of a single AI model, experts have started to focus on researching integrated methods to boost data analysis and forecasting precision. A number of decomposition methods have been proposed based on different theoretical foundations, including the wavelet transform (WT)27, variational mode decomposition (VMD)28, and ensemble empirical mode decomposition (EEMD)29. E et al. (2019) realized that carbon valence has nonlinear and nonstationary properties. To address this issue, they combined VMD with a gated recurrent unit (GRU) to predict carbon prices’ future trends. Experimental results confirmed its validity and reliability30. Jinpei Liu et al. (2019) employed empirical mode decomposition (EMD) and a reconstruction algorithm to transform the original data into three subseries of varying frequencies. Subsequently, they individually analyzed these three types of data using ARIMA, partial least squares (PLS), and NN methods. The findings demonstrated the superior predictive performance of the model31. Using EEMD to preprocess the data, Zhou et al. (2018) constructed different combinations of models to identify different frequencies. The existing hybrid models, although they enhance carbon price prediction accuracy, have drawbacks32. For example, existing hybrid models usually have model subseries obtained from decomposition without considering noise. This can reduce prediction accuracy and efficiency29.

Carbon prices are impacted by a combination of historical data and external factors. The existing literature primarily utilizes carbon price time series data for the modeling process. However, the dynamics of carbon trading prices are influenced by various factors, including energy factors, macroeconomic factors, and industry structures11. In general, since external factors can be analyzed, carbon price forecasting built upon multiple influencing factors is important for carbon market research. Therefore, carbon price prediction models for influencing factors are favored by scholars. Using oil, coal, and natural gas prices as the basis, Tsai and Kuo (2013) devised an ant-based radial basis function network (ARBFN) model for carbon price prediction. The inclusion of multiple influencing factors in carbon price forecasting models can indeed pose challenges due to the potential for error accumulation. When considering multiple factors, the complexity of the model increases, and uncertainties associated with each factor can accumulate throughout the forecasting process33.

Reviewing previous studies, we identify potential research gaps in the prediction of carbon prices. One is that most carbon price forecasting models rely only on past carbon price data series. They ignore the impact of external factors on the carbon market. This limitation may result in models that do not adequately take into account the full range of market conditions when forecasting carbon prices. Second, most current carbon price forecasting models fail to fully explore and utilize other useful information. Useful information means that after the model prediction, there are still a large number of nonlinear residual sequences, which are not random walks34 and still contain carbon price information. Ignoring the residual series leads to the potential problem of incomplete information in predicting carbon prices. To this end, it is of particular importance that the above issues are addressed and a new perspective on carbon price forecasting is proposed.

In order to bridge these gaps, this study first established the index system of influencing factors of carbon price and selected indicators by the random forest method to find out the main factors affecting carbon price, so as to improve the prediction accuracy of the model. Secondly, XGBOOST is used to establish the carbon price prediction model. Meanwhile, with the objective of avoiding the prediction error caused by the parameter setting of the XGBOOST model, GWO is used to find the optimization of the model parameters. To increase the precision of model predictions, the residual series of XGBOOST predictions is corrected using the CEEMDAN method, and a combined GWO–XGBOOST–CEEMDAN model is derived. The contributions can be summarized as follows:

-

(1)

In the majority of prior studies, carbon price forecasts relied on historical time series data on carbon prices. This ignores the effects of multiple factors when predicting carbon prices, so there are limits to the information that can be provided and the extent to which carbon markets can be managed. In this study, multiple influencing factors are considered in conducting carbon price forecasts with the aim of addressing the problem of carbon price forecasting. In order to develop a richer indicator system that is more appropriate to China’s national conditions, the carbon price time series data as well as the various influencing factors are treated as candidate input features for carbon price modeling.

-

(2)

In this study, Partial Autocorrelation Function (PACF) and Random Forest (RF) are introduced as feature selection methods to build carbon price prediction models in accordance with numerous influencing factors and reduce the influence of redundant information between features. A significant improvement has been made in the model’s prediction performance.

-

(3)

Most previous hybrid models first decompose the data and then perform carbon price prediction studies. However, this study adopts a different approach by first predicting carbon prices and then decomposing the residual series. After the carbon price information is predicted by the strong master model, the useful information of the residual sequence is difficult to obtain, so the CEEMDAN algorithm is used to further process the residual information and decompose it into modal information that is easy to extract and a sequence that is more difficult to extract. This is to dig deeper into residual effective information. According to the experiment, carbon price prediction is more accurate and practical than most previous studies. The method of prediction and then decomposition offers innovative thought for carbon price prediction research, and it will serve as a strong reference in the future.

Algorithm introduction

Feature election

Random forest

After the initial selection of 11 metrics, feature screening is performed next. It can enhance the model’s ability to generalize, reduce the risk of overfitting, reduce the computational complexity of the model, etc. Common feature selection methods are Gray correlation, Pearson correlation coefficient, and random forests (RF). Gray correlation and Pearson correlation coefficient are both linear relationship-based methods, while RF can handle more complex nonlinear relationships. This means RF can select features in a wide range of situations. As a result, in this paper, the RF method is used for screening carbon price primary indicator systems.

Based on the results of the RF method, the primary features are ranked in terms of importance and then selected. Consider a sample size of \(A\) and a feature dimension of \(m\). Provide a set of training samples \(\left\{({x}_{1},{y}_{1}),\cdots ,({x}_{N},{y}_{N})\right\}\) and create a self-help sample set \({C}_{t}\) of size \(A\); \({K}_{t}\) is obtained by classification and regression tree (CART) on \({C}_{t}\) ; Taking a random sample of \({m}_{try}=\sqrt{m}\) features from each tree and selecting the most significant \({m}_{try}\) features for node splitting; Analyzing whether \(t\) satisfies \(t\le ntree\) until the loop is not exited, and then generating \(G=Uniform\left(\left\{{K}_{t}\right\}\right)\).

In the calculation of feature importance, the Gini Index is used as a segmentation function to calculate "Gini Importance" as the degree of importance of a feature. This can be expressed as follows:

\(C\) represents the sample set;\({F}_{i}\) represents the probability of belonging to the \(ith\) class in the sample set \(C\) ; There are a number of sample classes in \(E\) . The Gini index of the sample set \(C\) is defined when feature \(G\) is known.

\(H\) represents the number of features \(G\) values, i.e., \(C\) is divided into \(H\) subsets according to the feature \(G\) values \(\left\{{C}^{1},{C}^{2},...{C}^{H}\right\}\), and the samples within each subset are of the same feature \(G\) value. \(G\) feature that has the smallest Gini index after division is considered to be the optimal feature in the selection process.

Partial auto-correlation function

PACF is a statistical tool for time series analysis that helps determine the relationship between each observation in a time series and its lag values. Its function is to recognize the order of the AR (Autoregressive) model in a time series, i.e., how many lags need to be considered in that model. The PACF model actually adjusts the autocorrelation function (ACF) by eliminating the part already explained by the previous lags so that the remaining part more accurately reflects the relationship between the observations and the lags at the current moment. \(({X}_{t},{X}_{t+v}|{X}_{t+1}|,\cdot \cdot \cdot ,{X}_{\left(t+v-1\right)}\) represents the conditional correlation between \({X}_{t}\) and \({X}_{t+v}\) after removing the effects of the intervening variables \({X}_{t+1},\cdot \cdot \cdot ,{X}_{\left(t+v-1\right)}\), i.e., the partial autocorrelation between \({X}_{t}\) and \({X}_{t+v}\).

CEEMDAN model

Empirical mode decomposition (EMD) is to decompose the nonlinear and non-stationary raw data into inherent mode functions (IMFS) with various fluctuation scales. However, due to the intermission of the raw data, mode confusion is easy to occur. This will affect the decomposition effect. Wu35 proposed an ensemble empirical mode decomposition (EEMD) method by adding a certain degree of Gaussian white noise to the original data for repeated decomposition. Although the mode overlap phenomenon can be effectively solved, residual white noise still exists in the component of the eigenmode function derived by this method, resulting in low reconstruction accuracy. Building upon this, Torres36 moved to the complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) method, which addresses the issue of significant reconstruction errors in the EEMD method by introducing adaptive white noise at each stage. Therefore, in this essay, the CEEMDAN method is used to forecast each component of the eigenmode function and the trend term separately.

CEEMDAN can be broken down as follows:

Step 1 As a result of adding a Gaussian white noise sequence to the residual sequence, an updated sequence with noise is obtained:

where \(y\left(t\right)\) is the residual sequence, and \(\overline{{y}_{i}}\left(t\right)\) is the new sequence with the addition of Gaussian white noise; \({n}_{i}\left(t\right)\) denotes the white noise added to the residual data; σ is the adaptive coefficient.

Step 2 EMD decomposition is performed on the new sequence with white noise added to obtain N modal components, and the first modal component of CEEMDAN is obtained by the overall averaging of the N modal components as follows:

At this point, \({R}_{1}\left(t\right)\) is the residual component.

Step 3 The adaptive white noise sequence \(\sigma {n}_{i}\left(t\right)\) is added to \({R}_{1}\left(t\right)\) to form a new sequence \({R}_{1}\left(t\right)+\sigma {E}_{1}\left({n}_{i}\left(t\right)\right)\) with noise, where \({E}_{j}\left(\cdot \right)\) is the jth eigenmodal component obtained after EMD decomposition. At this point, the EMD decomposition is performed on the new sequence and averaged to obtain the second modal component and the residual component as follows:

Step 4 Repeat the above three steps to obtain the (j + 1)th modal component and the jth residual component:

Step 5 Repeat the above steps until the CEEMDAN can no longer be decomposed by EMD. Finally, the original sequence \(\mathrm{y}(\mathrm{t})\) is decomposed into multiple eigenmodal components and a trend component.

After CEEMDAN has decomposed the residual series, the GWO–XGBOOST model is applied to each eigenfunction component. The final residual forecast is derived by linearly combining the results of each component.

XGBOOST model

Extreme gradient boosting (XGBOOST) was developed by Chen et al.37 in 2016, which integrates a linear scale solver with a categorical regression tree learning algorithm. The model combines models with low prediction accuracy through certain strategies. The purpose of this is to construct an integrated model that is more accurate in terms of prediction. During the model training process, XGBOOST optimizes the boosting process. Each iteration generates an updated decision tree to fit the residuals generated in the previous iteration. XGBOOST can continuously improve its prediction accuracy and generalization capacity through iterative optimization. While traditional gradient boosting decision tree (GBDT) methods utilize only first-order derivatives, XGBOOST does a second-order Taylor expansion of the loss function, controls model complexity by introducing regularization terms to avoid overfitting problems, and employs a more refined evaluation approach when splitting nodes to better capture the nonlinear relationships between features. In recent years, the XGBOOST model has shown superior performance in financial risk control, medical health, natural language processing, and other fields. This model is based on the following mathematical principles:

An integration model for the definition tree can be described as follows:

where \({\widehat{y}}_{i}\) is the prediction value; \(M\) is the number of decision trees; \(F\) is the tree selection space; \({x}_{i}\) is the first \(i\) input feature.

XGBOOST’s loss function is as follows:

The first part of the function is the prediction error between the predicted value and the real training value of the XGBOOST model, and the second part represents the complexity of the tree, which is mainly used to control the regularization of the model complexity:

where \(\gamma \) and \(\tau \) are penalty factors.

By adding an incremental function \({f}_{t}\left({x}_{i}\right)\) to Eq. (13), the value of the loss function is minimized. Then the objective function of the \(t\) th time is

The second-order Taylor expansion of Eq. (15) is used to approximate the objective function, and the set of samples in each child of the \(j\) tree is defined as \({I}_{j}=\left\{i\left|q\left({x}_{i}=j\right)\right.\right\}\). At this point the \({Q}_{\left(t\right)}\) can be approximated as

where \({g}_{i}={\partial }_{{{\widehat{y}}_{i}}^{t-1}}l\left({y}_{i},{{\widehat{y}}_{i}}^{t-1}\right)\) is the first order derivative of the loss function; \({h}_{i}={{\partial }^{2}}_{{{\widehat{y}}_{i}}^{t-1}}l\left({y}_{i},{{\widehat{y}}_{i}}^{t-1}\right)\) is the second order derivative of the loss function. Defining \({G}_{i}=\sum i\in {I}_{j}{g}_{i}\), \({H}_{i}={\sum }_{i\in {I}_{j}}{h}_{i}\) then we have:

The partial derivative of \(\omega \) yields

By incorporating weights into the objective function, we get

A large portion of the model’s performance is determined by parameter selection during the training process of the XGBOOST model. There are 23 hyperparameters in the XGBOOST algorithm, mainly divided into general parameters for macroscopic function control, booster parameters for booster detail control, and learning target parameters for training target control. The GWO–XGBOOST combinatorial model combines the three hyperparameters that have a significant impact on the performance of XGBOOST (learning_rate, n_estimators, and max_depth) as the position vector of the head wolf \(\alpha \) in the GWO algorithm and continuously updates them through the iterations of the GWO algorithm to continuously find the optimal position until the global optimal position is output as the final parameter of the XGBOOST model.

GWO model

A pack intelligence optimization algorithm, the grey wolf optimizer (GWO), based on the predatory behavior of grey wolves, was proposed by Mirjalili et al.38 in 2014, inspired by the predatory behavior of grey wolves. The optimization process of the GWO algorithm can be analogized to the hunting behavior of the gray wolf pack. Among them, α, \(\beta \), and \(\delta \) wolves with the highest social level in each generation of the population act as the leaders of the gray wolf pack. A predator searches, encircles, and attacks prey to achieve its optimization goal. GWO has strong global convergence ability, robustness, and fewer parameters to adjust, and is now used in many fields for optimization problems.

Firstly, the mathematical definition of how a wolf pack searches for and surrounds its prey is as follows:

where \(F\left(t\right)\) is the position of the prey after the \(t\) th iteration; \({F}_{P}\left(t\right)\) is the position of the gray wolf at the \(t\) iteration; \(A\) is the distance between the gray wolf and the prey; \(F\left(t+1\right)\) is the update of the position of the gray wolf; \(C\) and \(B\) are the coefficient vectors;\(c\) is the convergence factor whose value decreases linearly from 2 to 0 with the number of iterations, \(D\) is the number of previous iterations, and \(E\) is the maximum number of iterations; \(r_{1}\) and \(r_{2}\) are the random numbers between [0,1].

Secondly, the prey is finally determined by constantly updating the positions of the three optimal wolves α, \(\beta \), and \(\delta \). The mathematical definition of the hunting process of the gray wolf pack is

where \({F}_{\alpha }\left(t\right)\), \({F}_{\beta }\left(t\right)\) and \({F}_{\delta }\left(t\right)\) are the positions of \(\alpha \), \(\beta \) and \(\delta \) wolves when the population is iterated to generation t; \(F\left(t\right)\) is the position of individual gray wolves in generation t; \({C}_{1}\) and \({B}_{1}\), \({C}_{2}\) and \({B}_{2}\), \({C}_{3}\) and \({B}_{3}\) are the coefficient vectors of \(\alpha \), \(\beta \) and \(\delta \) wolves, respectively; \({F}_{1}\left(t+1\right)\),\( {F}_{2}\left(t+1\right)\) and \({F}_{3}\left(t+1\right)\) are the positions of \(\alpha \), \(\beta \) and \(\delta \) wolves after \(\left(t+1\right)\) iterations, respectively; \(F\left(t+1\right)\) is the position of the next generation of gray wolves.

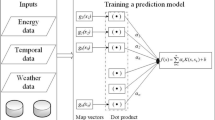

GWO–XGBOOST–CEEMDAN model

To improve carbon price prediction, we propose to combine the CEEMDAN, XGBOOST, and GWO models to build the GWO–XGBOOST–CEEMDAN model. The general idea is as follows: First, the GWO–XGBOOST model is established, and the GWO algorithm is used for optimizing the parameters of the XGBOOST model. Secondly, the CEEMDAN method is applied to decompose the residual series of the GWO–XGBOOST model to establish the GWO–XGBOOST–CEEMDAN hybrid model. Finally, the predicted values and the accumulated values of the residual predictions are summed up to get the final prediction results of the model. Figure 1 illustrates the specific process.

Data description

Data source

Accurate carbon price forecasts smooth investment decisions and maintain carbon market stability. There are big differences between China’s carbon trading pilots. The Hubei carbon trading market is the only carbon trading market in central China12. In addition, the Guangdong carbon market was officially launched in 2013, setting five first places in China’s carbon market trading39. Fujian is the first ecological civilization demonstration zone in China. The carbon market is aligned with the overall idea of the national carbon market, and it is the first pilot to adopt carbon verification standards and guidelines issued by the state. In particular, the data direct reporting system is completely consistent with the national system under construction standards, and the construction starting point is high40,41. To sum up, this paper chooses Guangdong, Hubei, and Fujian carbon trading markets as research objects. In this paper, we collect data on the three carbon markets from the Choice financial terminal and the Wind database. The selected carbon prices take into account public holidays, differences in trading hours, and missing values of variables at home and abroad. In the above data, the Bohai Sea Power Coal Price Index and Natural Gas Market Quotation are weekly and ten-day data, and Eviews software is used to convert them into daily data. A hybrid model is evaluated by using 80% of the data for training and 20% for testing. The carbon price information for the three trading markets is shown in Table 1, and Table 2 presents descriptive statistics for each indicator.

ADF inspection

The ADF (Augmented Dickey–Fuller) test was proposed by economists David Dickey and Wayne Fuller in 1979. The test is a statistical method used to determine whether the time series data has a unit root (or the root of the series), i.e., to verify whether the data has smoothness. The ADF test gives a Guangdong p-value of 0.912439, a Hubei p-value of 0.638039, and a Fujian p-value of 0.988874, which are greater than the usually chosen significance level (e.g., 0.05 or 0.01). Therefore, the original hypothesis cannot be rejected; that is, the historical carbon price data of the three carbon markets is not stationary. In short, it is not possible to use traditional econometric methods for experiments, and an integrated learning approach is used for the prediction study of non-stationary time series of carbon prices.

Data pre-processing

The factors often have different magnitudes and units of magnitude. It is crucial to pretreat the data to be limited to [0, 1] to remove the adverse effects caused by odd sample data and make the data comparable.

where \({Z}^{*}\) represents the normalized value of the data; \(Z\) is the input data, and \({Z}_{min}\) and \({Z}_{max}\) represent the minimum and maximum values of the input data, respectively.

Four aspects that affect the price of carbon

Carbon prices are impacted by a number of factors. This paper builds primary indicators of carbon price influencing factors from four aspects: macroeconomics, energy prices, international carbon markets, and weather conditions. The detailed classification and secondary quantification of each indicator level are shown in Table 3.

Macroeconomics

The macroeconomy directly determines the boom in the carbon market42. The macroeconomic situation, specifically the advancement of the industrial economy, is the most representative of CO2 emissions, which will affect the price of carbon trading43. Guo Fuchun says that when macroeconomic conditions are favorable, production and business activities become active, and the carbon trading price will enter a relatively stable operation. In contrast, when the economy slows down, the carbon trading price will fluctuate sharply44. Meanwhile, carbon prices also reflect a country’s economic growth to a certain extent45. In addition, China’s carbon trading market is developing and maturing. It covers a broad spectrum of industries, including chemicals, construction materials, steel, non-ferrous metals, and various other sectors. Therefore, the rapid economic development and extension of China’s carbon market highlight the significance of carbon pricing mechanisms46.

Energy prices

It is often considered that energy is the factor that has the greatest impact on carbon prices47. With this in mind, researchers have been empirically searching for the drivers of carbon prices and understanding their future value through adequate predictive analysis. Early studies have identified energy prices as one of the indicators of the main influencing factors of carbon prices26,48,49,50. For example, crude oil51 and natural gas prices52. The majority of these studies have found that energy prices have a significant impact on carbon prices. However, investment strategies need to focus not only on the impact of external factors on carbon prices but also on the predictability of future returns. Consequently, numerous recent studies have concentrated on the predictability of carbon prices’ future values53,54. In conclusion, it is valuable to study the influence of energy prices on carbon prices both now and in the future.

International carbon markets

Foreign carbon markets influence China’s carbon prices11. On the one hand, Chinese carbon markets are still in the development stage. In contrast, foreign carbon markets have been established for a longer period of time and are relatively well-established. China’s carbon market will, to a certain extent, refer to foreign carbon markets when setting carbon emission quotas. For instance, the EUA price acts as the primary reference point in the global carbon trading market, significantly shaping carbon emission allowances and, consequently, carbon prices55. Specifically, EUA, certified emission reductions (CER), and other similar products are used to reference the fulfillment of carbon reduction obligations29. On the other hand, the disparity in economic development between China and other nations can result in variations in carbon market pricing. If China’s carbon market is priced low, transnational companies will speculate heavily in the Chinese carbon market to buy a large number of carbon emission rights, thus adding to the demand for carbon emission rights in the Chinese market and driving up the Chinese carbon price until it reaches parity with the international carbon price. Furthermore, at the macro level, an increase in carbon emissions reduces foreign direct investment, which affects the trading of carbon allowances and indirectly causes price volatility10. As of now, China’s carbon market is not yet in line with international standards. Consequently, the investor base remains relatively modest in size. Nevertheless, once the two are connected, the issue of speculation is expected to escalate. Hence, foreign carbon prices will have a dual effect: they will inform the establishment of carbon prices in China and potentially drive up the carbon price in the country through speculative activities11.

Weather conditions

Global warming is becoming more severe and the primary cause of this issue can be attributed to greenhouse gas emissions, especially CO256. Climate change can affect carbon price volatility through multiple channels. Earlier studies have shown that climate change can alter fossil energy consumption and thus affect carbon price fluctuations57,58,59,60. In the past few years, researchers have mainly addressed the significance of climate change on carbon prices from different perspectives. From a production standpoint, when temperatures become excessively high or low, residents resort to cooling or heating equipment, resulting in a temporary upswing in energy consumption and subsequent CO2 emissions. In addition, from a business perspective, extreme weather and catastrophic events are exposing new energy companies to a significant physical risk, leading to changes in the energy mix and having a considerable impact on carbon prices61,62. To be more precise, the generation of environmentally friendly energy sources like wind, solar, and hydropower is strongly influenced by various weather factors, including temperature, precipitation, and humidity63. Therefore, it is very important to consider climate change when predicting carbon prices46.

Experimental results and discussion

Evaluation indicators

In this study, five common metrics are used, as shown in Table 4. The larger the R2 and the smaller the remaining indicators, the better the predictive performance of the model.

Experiment one: comparison of this paper’s model with different benchmark models

Algorithm table

To demonstrate the superiority of the prediction performance of the proposed GWO–XGBOOST–CEEMDAN model in practical applications, four other different benchmark models are first set up for comparison in this paper, namely GBDT, XGBOOST, GWO–XGBOOST, and GWO–XGBOOST–EEMD. As indicated in Table 5.

Model parameter setting

To validate the prediction accuracy of the GWO–XGBOOST–CEEMDAN model, various comparison algorithms are utilized to evaluate forecasting performance. For the prediction of the base model, the GBDT and XGBOOST models are selected to compare and analyze the prediction effect of GWO–XGBOOST. For the prediction of the combined residual correction model, the EEMD and CEEMDAN methods are used to compare and decompose the residual sequences generated by GWO–XGBOOST. The parameter settings of each model are shown in Table 6. According to the actual situation of the three carbon trading markets, the parameters of each model are adjusted as shown in Table 7, and the remaining parameters are set by default in Python.

Screening analysis of carbon price influencing factors

This study considers both the selection of historical carbon price variables and the identification of external influences in three carbon trading markets. More specifically, for the purpose of examining the correlation between historical carbon price variables and the carbon price data, we use PACF in order to identify the relevant input data characteristics for forecasting. Figure 2 shows the PACF results for Guangdong, Hubei, and Fujian. This analysis reveals a notable fourth-order autocorrelation in the carbon price data of Guangdong, whereas both Hubei and Fujian demonstrate a third-order autocorrelation. Xi is the output feature; {Xi-1, Xi-2, Xi-5, Xi-7} are the input historical variables for the Guangdong dataset; {Xi-1, Xi-3, Xi-5} are the input historical variables for the Hubei dataset; and {Xi-1, Xi-2, Xi-3} are the input history variables for the Fujian dataset.

Furthermore, with the intention of identifying the main external influences on carbon prices, this paper selects 11 primary carbon price indicators and uses random forest to rank the importance of the indicators for the purpose of indicator screening, as shown in Fig. 3. The screened carbon price indicators are shown in Table 8. The input variables for the Guangdong data set are {Xi-1, Xi-2, Xi-5, Xi-7, X1.1, X1.2, X1.3, X1.4, X2.2, X2.3, X3.1, X4.3}, and the input variables for the Hubei data set are {Xi-1, Xi-3, Xi-5, X1.2, X1.3, X1.4, X2.1, X2.2, X2.3, X3.1, X4.3}, and the input variables for the Fujian dataset are {Xi-1, Xi-2, Xi-3, X1.1, X1.2, X1.3, X2.1, X2.2, X2.3, X3.1, X4.3}.

Carbon price forecast results I

Table 9 shows the effect of the fitted curves on the carbon price predictions for each model in the three test sets. Furthermore, to facilitate a visual comparison of the prediction outcomes between the proposed model and other comparative models, Figs. 4, 5, and 6 depict the prediction results for the Guangdong, Hubei, and Fujian datasets, respectively.

Experiment two: comparison of this paper’s model with different input feature models

Algorithm table

To further confirm the effectiveness of the feature selection algorithm, GWO–XGBOOST–CEEMDAN*, All-VARIABLE-GWO–XGBOOST–CEEMDAN, and the proposed GWO–XGBOOST–CEEMDAN model are compared in this paper, and the algorithm experiment table is shown in Table 10.

Carbon price forecast results II

The predicted effect graphs are shown in Figs. 7, 8, and 9, and the algorithm experimental results table is shown in Table 11.

Analysis of experimental results

Figure 10 shows the predicted results of the evaluation indexes of the above two groups of experiments. By comparing and analyzing the above two sets of experiments, we can draw the following conclusions:

-

(1)

In single-model prediction, the GWO–XGBOOST model has the best prediction effect, which is mainly attributed to the following reasons: First, GBDT builds an integrated model by training a series of decision trees; each tree is trained on the residuals of the previous tree, so when the number of trees is large, the model may be over-fitted on the training set, resulting in large model prediction errors. Second, XGBOOST is an integrated learning method that enhances and optimizes on the basis of GBDT and improves generalization ability but has weaker performance in dealing with the category imbalance problem. Finally, GWO, as an optimization algorithm for searching for the global optimal solution, can not only tune the hyperparameters in XGBOOST, such as learning rate, tree depth, subsample ratio, etc., but also fuse multiple XGBOOST models, adjusting the weights and parameters of different models to achieve the combination and integration of models. This can, to some degree, enhance the model’s stability and generalization ability, thus increasing its overall capability.

-

(2)

For the combination algorithm, all combination models outperform comparison models in relation to predictive accuracy. The Guangdong dataset is used as an example, and the Hubei and Fujian datasets are consistent with this conclusion. First, to verify the effectiveness of the decomposition method proposed in this paper, GWO–XGBOOST–EEMD is contrasted with the model in this paper, and it is found that the prediction accuracy of the proposed model in this paper is improved by 34.888%, 18.562%, 19.286%, 18.540%, and 0.443% for MSE, MAE, RMSPE, MAPE, and R2, respectively. The results indicate that the CEEMDAN approach proposed in this study offers an additional enhancement to the prediction accuracy of the GWO–XGBOOST model when compared to EEMD. Secondly, to further demonstrate the superiority of the feature selection algorithm, the GWO–XGBOOST–CEEMDAN* model only considers carbon price historical data, and the All-VARIABLE-GWO–XGBOOST–CEEMDAN model takes carbon price historical data and all external influences as input variables. The results of these three models indicate that the feature selection algorithm is helpful in improving the prediction performance of the hybrid model.

Discussion

Validation on other data sets

In order to verify the generalization ability of the model as well as its strong robustness, we selected a Q1 partitioned article published in the journal ENVIRONMENTAL SCIENCE AND POLLUTION RESEARCH29. This paper selects the daily carbon prices in Beijing, Hubei, and Shanghai as sample data, all of which come from the China Carbon Trading Network, and the experiment uses 75% of the data as the training set and 25% as the test set as a way to verify the accuracy of the hybrid model. We use the raw data from this published paper to do a comparative analysis between our proposed model and the model prediction results from the published paper, which not only verifies again that our own model has strong stability and prediction accuracy but also makes the study richer and more convincing. The information on the three carbon trading markets is shown in Table 12.

The effect of the fitting curves of our carbon price prediction for three carbon markets using the proposed GWO–XGBOOST–CEEMDAN model is shown in Fig. 11. In addition, Table 13 is a table of the experimental results of the algorithms of the two models for the three carbon markets, which compares more intuitively the prediction accuracy capability of the proposed model with the models of the published papers.

Comparing the prediction accuracy of our own proposed model with the published paper model for the same dataset, we can draw the following conclusions:

-

(1)

Most of the previous research focused on the analysis of historical carbon price prediction, and the selected published paper is a typical representative of the previous research, which is different from one of the innovations of my own thesis: considering the historical carbon price and the influencing factors at the same time so as to create a rich indicator system. Therefore, this published paper also validates, to a certain extent, the prediction accuracy of the proposed model when only considering the historical carbon price.

-

(2)

The research objects of the published papers are selected as Beijing, Hubei, and Shanghai, which are different from the objects of our own paper. Firstly, it verifies that the proposed model is not limited to the carbon market in Guangdong, Hubei, and Fujian but can also be used in carbon price prediction studies in other regions, which means that the results can be extended to other regions, such as Beijing and Shanghai. Secondly, the research object in the published paper still includes the Hubei carbon market, which verifies that the proposed model still has high accuracy in the case of another dataset that only considers the historical carbon price. In conclusion, by comparing the carbon price prediction with the published paper for the same dataset, it shows that the proposed model has stronger generalization ability and robustness.

-

(3)

The GWO–XGBOOST–CEEMDAN model is more suitable than the VMD–SE–DRNN–GRU model used in the published paper to deal with the problem of forecasting time series data. Firstly, CEEMDAN automatically determines the number of modes to be generated based on the dataset and generates intrinsic modal functions (IMFs), while VMD, although it can also perform modal decomposition, needs to pre-specify the number of modes to be decomposed into, which requires some domain knowledge or experiments to determine. If the number of modes chosen is inappropriate, it may lead to inaccurate decomposition results, thus CEEMDAN has more adaptive and flexible compared to VMD. Secondly, the GWO–XGBOOST–CEEMDAN model has lower complexity compared to deep learning models, thus it is easier to train with limited data and does not require a large amount of computational resources, which can make it more practical in some applications, such as the field of carbon price prediction. Finally, XGBOOST models are usually very interpretable and can provide feature importance rankings, whereas deep learning models such as DRNN and GRU are usually more difficult to interpret, especially in highly complex network structures. In conclusion, both VMD–SE–DRNN–GRU and GWO–XGBOOST–CEEMDAN are sophisticated carbon price prediction methods, and GWO–XGBOOST–CEEMDAN may be a better choice in cases of complex or non-stationary data.

DM test

To further examine the prediction performance between the proposed GWO–XGBOOST–CEEMDAN integrated combination model and the comparison models, this section uses the DM test to analyze statistical errors from the perspective of statistical errors. The bold values in the table indicate that the p-value is below the significance threshold of 0.05. To visually assess the predictive performance of the GWO–XGBOOST–CEEMDAN model and other models, we analyze their predictive ability using the coverage ratio based on the DM results. The coverage ratio is expressed as the ratio of the number of DM results rejecting the original hypothesis to the total number of DM results. When the models exhibit comparable predictive capabilities, a lower number of DM test results have a p-value less than 0.05, resulting in a coverage rate below 50%. When the models demonstrate significantly superior predictive capabilities compared to the benchmark model, a higher number of DM test results exhibit a p-value below 0.05, leading to a coverage rate exceeding 50%.

This further analysis of the DM test results in Table 14 revealed that DM coverage for the GWO–XGBOOST–CEEMDAN model was 83.3% in all three datasets, demonstrating that the GWO–XGBOOST–CEEMDAN model outperformed the proposed benchmark model in the majority of instances, and thus the proposed hybrid model was statistically significant.

Limitations of the current study and future work

Although the constructed hybrid forecasting framework showcases superior performance in carbon trading price prediction and fills the current research gap in carbon price prediction, there are still a few shortcomings that need further improvement and development. Following are the main limitations of this study:

-

(1)

Due to data availability limitations, the hybrid prediction framework we developed only considers eight influencing factors.

-

(2)

This study provides information for related scholars. Firstly, this paper performs carbon price prediction first and then decomposes the residual series. As a result, data can be explored and utilized more effectively, and prediction accuracy and reliability can be improved. Compared with the traditional method of decomposition followed by prediction, this paper provides an alternative way of thinking and method for carbon price prediction, which scholars can refer to further to explore and analyze the intrinsic mechanism of carbon price, expand the research field, and deepen theoretical understanding. Second, in the modeling process, in addition to the factors already considered, other factors that may affect carbon prices can be further considered. This will reveal more factors affecting carbon price change and further improve the prediction effect. In addition to better adapting to changes in data characteristics, we need to improve the hybrid forecasting framework. Specifically, we can implement automatic key parameter settings and build a smart carbon price prediction framework. This framework automatically adapts to data changes, improving prediction accuracy and stability. Finally, future research can also extend carbon price prediction to other fields, for instance, energy market prediction and climate change risk management, which will further prove the value of our research results.

Impact on sustainability

This study examines the effects of macroeconomics, energy prices, international carbon markets, and weather conditions on improving carbon trading price forecasts, which have crucial ramifications for sustainable development. In particular, risk managers can incorporate multiple factors, such as energy factors and global carbon prices, into carbon market management. In addition, investors can grasp carbon market dynamics based on influencing factors and improve market participants’ flexibility and motivation. This paper conducts research related to carbon price forecasting, which is helpful for the government and enterprises to grasp the characteristics of carbon prices and helps carbon market management and investment decisions, especially the solution of the carbon price prediction problem, which is linked to whether the double carbon target can be achieved on time or in advance. Therefore, this study aims to provide a reasonable forecast of carbon prices in order to facilitate carbon market participants in achieving their goals and help real producers reduce emissions efficiently through market mechanisms. In conclusion, the forecasting framework and the associated research findings we have developed hold significant implications for the advancement of sustainable development.

Feature importance analysis

To identify the key determinants in carbon price prediction, this paper uses XGBOOST and GBDT models for feature importance analysis, respectively. The results and statistical plots of feature importance indices for each model are shown in Table 13 and Fig. 12. Through preliminary observation, it is evident that the feature ranking results in the two models for the three data sets vary to some extent. A more in-depth examination of the feature rankings in Table 15 uncovers that the historical carbon price, natural gas market offers, and Bohai Ring Power Coal Price Index in energy prices, the S&P 500 and Dow Jones Industrial Index in macroeconomics, and the EU carbon emission allowances in the international carbon market rank ahead of the two models for the three data sets XGBOOST and GBDT as the key factors for carbon price prediction.

For governments, our findings suggest that historical carbon prices, natural gas market quotes, the Bohai Ring Power Coal Index, the S&P 500 Index, the Dow Jones Industrial Average, and EU carbon emission allowances can be effective ways to improve the predictive power of carbon prices in regional carbon trading markets, and policymakers can refer to our findings to make decisions about carbon market policies. First, for historical carbon price data, when the carbon price rises, the government makes stricter carbon reduction policies. This is to stimulate emission reduction measures. And when carbon prices fall, the government may reduce subsidies and support for carbon abatement to prevent a burden on the Treasury. Second, changes in natural gas supply and the Bohai Ring Power Coal Index can affect energy security. When these prices rise, the government can increase domestic production and reserves to guarantee energy supply stability. When prices fall, the government should promote the energy market by increasing subsidies and controlling imports. Moreover, the S&P 500 and the Dow Jones Industrial Average reflect the macroeconomic environment. When prices fall, the government takes stimulus measures, such as cutting taxes or increasing spending, to promote economic growth. When prices rise, the government can take restraining measures, such as strengthening regulation or controlling capital inflows, to prevent overheating. Finally, EU carbon emission allowances reflect international carbon markets. When quotas rise, the government should increase carbon emission quotas to ease enterprises’ economic burden. This will avoid excessive carbon prices that lose them competitiveness. When the quota decreases, the government should reduce carbon emission quotas or support low-carbon technologies to reduce carbon emissions. In addition, the government should strengthen regulation and management to reduce fraud and misconduct on the carbon market, which will improve market transparency and stability.

In conclusion, the carbon price forecasting study in this paper can act as a point of reference for policymakers to consider various factors. This will ensure policy sustainability and effectiveness.

Conclusions

This paper uses sophisticated data decomposition methods and efficient feature subset selection algorithms to propose a comprehensive consideration of multiple influencing factors in a carbon price forecasting framework, thus achieving the expected forecasting results. To validate the effectiveness of the designed hybrid forecasting framework, we conducted an empirical study on three regional carbon emission trading markets in Guangdong Province, Hubei Province, and Fujian Province, China, and evaluated five performance evaluation indicators, six benchmark models, three case analyses, and four discussions to systematically and holistically examine the developed hybrid forecasting framework. The prediction results clearly demonstrate the superior performance of the developed prediction framework over all benchmark models. Therefore, we contend that the proposed carbon price forecasting framework offers a valuable and effective approach to predicting carbon prices. Specifically, this study’s findings can be briefly outlined as follows:

-

(1)

The XGBOOST model based on the boosting integrated learning framework has high prediction accuracy and strong generalization ability. However, the model’s predictive performance is sensitive to parameter settings, and the grey wolf optimization algorithm facilitates rapid determination of the optimal parameters for the XGBOOST model. Among the single-model carbon price prediction approaches, the proposed GWO–XGBOOST model exhibits the highest level of accuracy.

-

(2)

The nonlinear residual series generated by an individual machine learning model for carbon price prediction still contains valid information. In this paper, CEEMDAN is utilized to decompose the residual series generated by the GWO–XGBOOST model into sub-series with different frequencies, so that each sub-series is predicted separately, and finally, the prediction results of each sub-series are superimposed to achieve the overall prediction result. In comparison to the traditional single model, the combined GWO–XGBOOST–CEEMDAN model presented in this paper has the most accurate prediction effect.

-

(3)

Two machine learning models, XGBOOST and GBDT, are used to model carbon prices separately and perform feature importance analyses. It is found that the historical carbon price, the natural gas market price, and the Bohai Sea Power Coal Price Index in the energy price, the macroeconomic S&P 500 and Dow Jones Industrial Average in the macroeconomy, and EU carbon emission allowances in the international carbon market are the main influencing factors for carbon price prediction.

-

(4)

This study constructs a carbon price prediction model in accordance with multiple influencing factors and introduces a feature selection method. By selecting the features with the most predictive power from many possible input features and mitigating the adverse impacts of redundant information and noise among features, the model’s predictive performance and generalization capability are enhanced.

-

(5)

In this paper, external influences and historical carbon price data are jointly used as input features of the model, such as macroeconomics, energy prices, and international carbon markets. Compared with previous studies that only considered historical carbon price data and all external influences and historical carbon price data concurrently, the accuracy of carbon price prediction has significantly improved. For one thing, it shows the significance of feature selection, and for another, it indicates that the combination of external factors and historical carbon price data can forecast the carbon price trend more precisely.

Data availability

The data used to support the findings of this study are available from the corresponding author upon request.

References

Hashim, H. et al. An integrated carbon accounting and mitigation framework for greening the industry. Energy Procedia 75, 2993–2998 (2015).

An, K. et al. Socioeconomic impacts of household participation in emission trading scheme: A computable general equilibrium-based case study. Appl. Energy 288, 116647. https://doi.org/10.1016/j.apenergy.2021.116647 (2021).

Song, Y., Liang, D., Liu, T. & Song, X. How China’s current carbon trading policy affects carbon price? An investigation of the Shanghai Emission Trading Scheme pilot. J. Clean. Prod. 181, 374–384 (2018).

Sun, W. & Zhang, C. Analysis and forecasting of the carbon price using multi—Resolution singular value decomposition and extreme learning machine optimized by adaptive whale optimization algorithm. Appl. Energy 231, 1354–1371 (2018).

Zhu, B., Ye, S., He, K., Chevallier, J. & Xie, R. Measuring the risk of European carbon market: An empirical mode decomposition-based value at risk approach. Ann. Oper. Res. 281, 373–395 (2019).

Zhu, J., Wu, P., Chen, H., Liu, J. & Zhou, L. Carbon price forecasting with variational mode decomposition and optimal combined model. Phys. A 519, 140–158 (2019).

Yun, P., Zhang, C., Wu, Y. & Yang, Y. Forecasting carbon dioxide price using a time-varying high-order moment hybrid model of NAGARCHSK and gated recurrent unit network. Int. J. Environ. Res. Public Health 19, 899. https://doi.org/10.3390/ijerph19020899 (2022).

Niu, X., Wang, J. & Zhang, L. Carbon price forecasting system based on error correction and divide-conquer strategies. Appl. Soft Comput. 118, 107935. https://doi.org/10.1016/j.asoc.2021.107935 (2022).

Yun, P., Huang, X., Wu, Y. & Yang, X. Forecasting carbon dioxide emission price using a novel mode decomposition machine learning hybrid model of CEEMDAN-LSTM. Energy Sci. Eng. 11, 79–96 (2023).

Li, Y. & Song, J. Research on the application of GA-ELM model in carbon trading price–An example of Beijing. Pol. J. Environ. Stud. 31, 149–161 (2022).

Du, Y., Chen, K., Chen, S. & Yin, K. Prediction of carbon emissions trading price in Fujian province: Based on BP neural network model. Front. Energy Res. 10, 939602. https://doi.org/10.3389/fenrg.2022.939602 (2022).

Li, H. et al. Forecasting carbon price in China: A multimodel comparison. Int. J. Environ. Res. Public Health 19, 6217. https://doi.org/10.3390/ijerph19106217 (2022).

Hao, Y. & Tian, C. A hybrid framework for carbon trading price forecasting: The role of multiple influence factor. J. Clean. Prod. 262, 120378. https://doi.org/10.1016/j.jclepro.2020.120378 (2020).

Zhu, B. & Wei, Y. Carbon price forecasting with a novel hybrid ARIMA and least squares support vector machines methodology. Omega 41, 517–524 (2013).

Paolella, M. S. & Taschini, L. An econometric analysis of emission allowance prices. J. Bank. Financ. 32, 2022–2032 (2008).

Wang, Z. & Ye, D. Forecasting Chinese carbon emissions from fossil energy consumption using non-linear grey multivariable models. J. Clean. Prod. 142, 600–612 (2017).

García-Martos, C., Rodríguez, J. & Sánchez, M. J. Modelling and forecasting fossil fuels, CO2 and electricity prices and their volatilities. Appl. Energy 101, 363–375 (2013).

Dutta, A. Modeling and forecasting the volatility of carbon emission market: The role of outliers, time-varying jumps and oil price risk. J. Clean. Prod. 172, 2773–2781 (2018).

Sun, W. & Wang, Y. Factor analysis and carbon price prediction based on empirical mode decomposition and least squares support vector machine optimized by improved particle swarm optimization. Carbon Manage. 11, 315–329 (2020).

Chi, G., Uddin, M. S., Abedin, M. Z. & Yuan, K. Hybrid model for credit risk prediction: An application of neural network approaches. Int. J. Artif. Intell. Tools 28, 1950017. https://doi.org/10.1142/S0218213019500179 (2019).

Vo, T. H., Nguyen, N. T. K., Kha, Q. H. & Le, N. Q. K. On the road to explainable AI in drug-drug interactions prediction: A systematic review. Comput. Struct. Biotechnol. J. 20, 2112–2123 (2022).

Akhtar, M. & Moridpour, S. A review of traffic congestion prediction using artificial intelligence. J. Adv. Transp. 2021, 1–18 (2021).

Popoola, S. I., Adebisi, B., Ande, R., Hammoudeh, M. & Atayero, A. A. Memory-efficient deep learning for botnet attack detection in IoT networks. Electronics 10, 1104. https://doi.org/10.3390/electronics10091104 (2021).

Atsalakis, G. S. Using computational intelligence to forecast carbon prices. Appl. Soft Comput. 43, 107–116 (2016).

Zhu, B., Shi, X., Chevallier, J., Wang, P. & Wei, Y. An adaptive multiscale ensemble learning paradigm for nonstationary and nonlinear energy price time series forecasting. J. Forecast. 35, 633–651 (2016).

Xu, W., Wang, J., Zhang, Y., Li, J. & Wei, L. An optimized decomposition integration framework for carbon price prediction based on multi-factor two-stage feature dimension reduction. Ann. Oper. Res. 1–38 (2022).

Momeneh, S. & Nourani, V. Application of a novel technique of the multi-discrete wavelet transforms in hybrid with artificial neural network to forecast the daily and monthly streamflow. Model. Earth Syst. Environ. 8, 4629–4648 (2022).

Sun, G. et al. A carbon price forecasting model based on variational mode decomposition and spiking neural networks. Energies 9, 54. https://doi.org/10.3390/en9010054 (2016).

Wang, J., Cheng, Q. & Sun, X. Carbon price forecasting using multiscale nonlinear integration model coupled optimal feature reconstruction with biphasic deep learning. Environ. Sci. Pollut. Res. 29, 85988–86004 (2022).

E, J., Ye, J., He, L. & Jin, H. Energy price prediction based on independent component analysis and gated recurrent unit neural network. Energy 189, 116278. https://doi.org/10.1016/j.energy.2019.116278 (2019).

Liu, J., Guo, Y., Chen, H., Ren, H. & Tao, Z. Multi-scale combined prediction of carbon prices based on non-structural data and popular learning. Control Decis. 279–286 (2019).

Zhou, J., Yu, X. & Yuan, X. Predicting the carbon price sequence in the shenzhen emissions exchange using a multiscale ensemble forecasting model based on ensemble empirical mode decomposition. Energies 11, 1907. https://doi.org/10.3390/en11071907 (2018).

Tsai, M. T. & Kuo, Y. T. A forecasting system of carbon price in the carbon trading markets using artificial neural network. Int. J. Environ. Sci. Dev. 4, 163–167 (2013).

Wang, X. et al. A combined prediction model for hog futures prices based on woa-lightgbm-ceemdan. Complexity 2022, 3216036. https://doi.org/10.1155/2022/3216036 (2022).

Wu, Z. & Huang, N. E. Ensemble empirical mode decomposition: A noise-assisted data analysis method. Adv. Adapt. Data Anal. 1, 1–41 (2009).

Torres, M. E., Colominas, M. A., Schlotthauer, G. & Flandrin, P. A complete ensemble empirical mode decomposition with adaptive noise. ICASSP. 4144–4147 (2011).

Chen, T. & Guestrin, C. Xgboost: A scalable tree boosting system. KDD. 785–794 (2016).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61 (2014).

Sun, W. & Xu, C. Carbon price prediction based on modified wavelet least square support vector machine. Sci. Total Environ. 754, 142052. https://doi.org/10.1016/j.scitotenv.2020.142052 (2021).

Li, Y. Current situation, problems and suggestions of carbon emission trading pilot in Fujian province. Macroecon. Manag. 66–71 (2018).

Ding, G., Deng, Y. & Lin, S. A study on the classification of China’s provincial carbon emissions trading policy instruments: Taking Fujian province as an example. Energy Rep. 5, 1543–1550 (2019).

Peng, X. & Zhong, Y. Factors influencing carbon emission trading prices and strategies. Price Mon. 12, 25–31 (2021).

Sun, X., Hao, J. & Li, J. Multi-objective optimization of crude oil-supply portfolio based on interval prediction data. Ann. Oper. Res. 309, 611–639 (2022).

Guo, F. & Pan, X. Carbon market: Price fluctuations and risk measurement—An empirical analysis based on EU ETS futures contract prices. Fin. Trade Econ. 110–118 (2011).

Zhang, Y. & Wei, Y. An overview of current research on EU ETS: Evidence from its operating mechanism and economic effect. Appl. Energy 87, 1804–1814 (2010).

Qin, Q., He, H., Li, L. & He, L. Y. A novel Decomposition-Ensemble based carbon price forecasting model integrated with local polynomial prediction. Comput. Econ. 55, 1249–1273 (2020).

Xie, Q., Hao, J., Li, J. & Zheng, X. Carbon price prediction considering climate change: A text-based framework. Econ. Anal. Policy 74, 382–401 (2022).

Christiansen, A. C., Arvanitakis, A., Tangen, K. & Hasselknippe, H. Price determinants in the EU emissions trading scheme. Clim. Policy 5, 15–30 (2005).

Mansanet-Bataller, M., Pardo, A. & Valor, E. CO2 prices, energy and weather. Energy J. 28, 73–92 (2007).

Chevallier, J., Nguyen, D. K. & Reboredo, J. C. A conditional dependence approach to CO2-energy price relationships. Energy Econ. 81, 812–821 (2019).

Ji, Q., Zhang, D. & Geng, J. Information linkage, dynamic spillovers in prices and volatility between the carbon and energy markets. J. Clean. Prod. 198, 972–978 (2018).

Alberola, E., Chevallier, J. & Chèze, B. Price drivers and structural breaks in European carbon prices 2005–2007. Energy Policy 36, 787–797 (2008).

Tian, C. & Hao, Y. Point and interval forecasting for carbon price based on an improved analysis-forecast system. Appl. Math. Model. 79, 126–144 (2020).

Zhu, B. et al. A novel multiscale nonlinear ensemble leaning paradigm for carbon price forecasting. Energy Econ. 70, 143–157 (2018).

Huang, Y. & He, Z. Carbon price forecasting with optimization prediction method based on unstructured combination. Sci. Total Environ. 725, 138350. https://doi.org/10.1016/j.scitotenv.2020.138350 (2020).

Dimitriadis, D., Katrakilidis, C. & Karakotsios, A. Investigating the dynamic linkages among carbon dioxide emissions, economic growth, and renewable and non-renewable energy consumption: Evidence from developing countries. Environ. Sci. Pollut. Res. 28, 40917–40928 (2021).

Alberola, E., Chevallier, J. & Chèze, B. Emissions compliances and carbon prices under the EU ETS: A country specific analysis of industrial sectors. J. Policy Model. 31, 446–462 (2009).

Considine, T. J. The impacts of weather variations on energy demand and carbon emissions. Resour. Energy Econ. 22, 295–314 (2000).

Creti, A., Jouvet, P. A. & Mignon, V. Carbon price drivers: Phase I versus Phase II equilibrium?. Energy Econ. 34, 327–334 (2012).

Han, M., Ding, L., Zhao, X. & Kang, W. Forecasting carbon prices in the Shenzhen market, China: The role of mixed-frequency factors. Energy 171, 69–76 (2019).

Ji, C., Hu, Y., Tang, B. & Qu, S. Price drivers in the carbon emissions trading scheme: Evidence from Chinese emissions trading scheme pilots. J. Clean. Prod. 278, 123469. https://doi.org/10.1016/j.jclepro.2020.123469 (2021).

Zheng, H., Song, M. & Shen, Z. The evolution of renewable energy and its impact on carbon reduction in China. Energy 237, 121639. https://doi.org/10.1016/j.energy.2021.121639 (2021).

Ji, C., Hu, Y. & Tang, B. Research on carbon market price mechanism and influencing factors: A literature review. Nat. Hazards 92, 761–782 (2018).

Sun, W., Sun, C. & Li, Z. A hybrid carbon price forecasting model with external and internal influencing factors considered comprehensively: A case study from China. Pol. J. Environ. Stud. 29, 3305–3316 (2020).

Li, J. & Liu, D. Carbon price forecasting based on secondary decomposition and feature screening. Energy 278, 127783. https://doi.org/10.1016/j.energy.2023.127783 (2023).

Zhou, J. & Wang, S. A carbon price prediction model based on the secondary decomposition algorithm and influencing factors. Energies 14, 1328. https://doi.org/10.3390/en14051328 (2021).

Acknowledgements

This research was funded by the National Natural Science Foundation of China (Grant no. 81973791).

Author information

Authors and Affiliations

Contributions

All authors contributed to the study’s conception and design. M.F.: data analysis, visualization of experimental results, and writing the original draft. Y.D.: materials preparation and financial support. X.W.: data collection and methodological interpretation. J.Z.: manuscript check. L.M.: manuscript revision. All authors have read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Feng, M., Duan, Y., Wang, X. et al. Carbon price prediction based on decomposition technique and extreme gradient boosting optimized by the grey wolf optimizer algorithm. Sci Rep 13, 18447 (2023). https://doi.org/10.1038/s41598-023-45524-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-45524-2

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.