Abstract

Reservoir computers are powerful machine learning algorithms for predicting nonlinear systems. Unlike traditional feedforward neural networks, they work on small training data sets, operate with linear optimization, and therefore require minimal computational resources. However, the traditional reservoir computer uses random matrices to define the underlying recurrent neural network and has a large number of hyperparameters that need to be optimized. Recent approaches show that randomness can be taken out by running regressions on a large library of linear and nonlinear combinations constructed from the input data and their time lags and polynomials thereof. However, for high-dimensional and nonlinear data, the number of these combinations explodes. Here, we show that a few simple changes to the traditional reservoir computer architecture further minimizing computational resources lead to significant and robust improvements in short- and long-term predictive performances compared to similar models while requiring minimal sizes of training data sets.

Similar content being viewed by others

Introduction

The prediction of complex dynamic systems is a key challenge across various disciplines in science, engineering, and economics1. While machine learning approaches, like generative adversarial networks, can provide sensible predictions2, difficulties with vast data requirements, the large number of hyperparameters, and lack of interpretability limit their usefulness in some scientific applications3. However, it is required to fundamentally understand how, when, and why the models are working to prevent the risk of misinterpreting the results if deeper methodological knowledge is missing4.

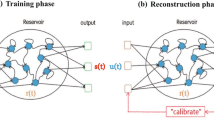

In the context of complex systems research, reservoir computers (RCs)5,6 have emerged for predicting the dynamics of chaotic systems. The core of the model is a fixed reservoir, which is usually constructed randomly7,8,9. The input data is fed into the nodes of the reservoir and solely the weights of the readout layer, which transform the reservoir response to output variables, are subject to optimization via linear regression. This makes the learning extremely fast and comparatively transparent. However, this approach can be hit-or-miss, and it is hardly possible to know a priori how the topology of the reservoir will affect the performance10,11,12.

Recent research has emerged on algorithms which do not require randomness. They are built around regressions13 on large libraries of linear and nonlinear combinations constructed from the data observations and their time lags, such as next generation reservoir computers (NG-RCs)14 or sparse identification of nonlinear dynamics (SINDy)15. These algorithms are built around nonlinear vector autogression (NVAR)16 and the mathematical fact that a powerful universal approximator can be constructed by using an RC with a linear activation function17,18.

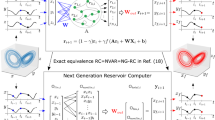

The model we present in this paper is based on the same mathematical principles — but instead of getting rid of the traditional reservoir architecture altogether, we take an intermediate step and make only a few simple changes: we restructure the input weights so that all coordinate combinations are fed separately into the reservoir. Additionally, we remove the randomness of the reservoir by replacing it with a block-diagonal matrix of blocks of ones. Instead of introducing the nonlinearity in the activation function, we add higher orders of the reservoir states in the readout.

Using the example of synthetic, chaotic systems, and in particular the Lorenz system, we show that these alterations lead to excellent short- and long-term predictions that significantly outperform traditional RC, NG-RC, and SINDy. While prediction performance is often evaluated visually, we use three quantitative measures: the largest Lyapunov exponent, the correlation dimension, and the forecast horizon. We also validate the robustness of our results by using multiple attractor starting points, different training data sizes and discretizations.

Results

Prediction on a minimal training data set of the Lorenz system. The first column shows the attractor (top) and the trajectories (bottom) of the 400 training data points (and the discarded transient). The second column shows the attractor and the trajectories of the test data. The third column shows the attractor (top) and the absolute prediction error (bottom) of the prediction. The dashed lines indicate the standard deviations of the three components of the test data.

In this work, we show how small changes to the traditional RC architecture can significantly improve its prediction capability of chaotic systems especially for low data requirements. Therefore, similar to Gauthier et al.,14 we use the minimal data setup for the Lorenz system with a discretization of \(dt{=}0.025\) and \(T_{train}{=}400\) training data points.

The minimal possible architecture would be a spectral radius \(\rho ^*{=}0\) and block-size \(b{=}1\), for which our RC reduces to the case described by Gonon and Ortega.17 Here, we do not have a reservoir and directly feed the input data to the readout and perform a Ridge regression. While we find this parametrization to be capable of reasonable predictions, a few minor alterations increase the performance significantly.

The standard RC architecture used in this work has block-size \(b{=}3\), spectral radius \(\rho ^*{=}0.1\), and a nonlinearity degree \(\eta {=}2\). This equals 36 variables per coordinate. The results of this setup are illustrated in Fig. 1.

In order to obtain robust results we repeat the analysis for \(1000\) different starting points on the attractor and compare the prediction performance to the other models. In Fig. 2 we see that the novel RC architecture significantly outperforms them with regards to short-term predictions with an average forecast horizon of \({\sim }7.0\) Lyapunov times — this is \({\sim }2.5\) times more than the averages of the other models. The long-term prediction is also slightly better as the average relative errors of the correlation dimension and the Lyapunov exponent are \({\sim }3.5 \cdot 10^{-4}\), respectively — this is \({\sim }9.0\) and \({\sim }39.7\) times smaller than the averages of other models. The traditional RC has generally more widely distributed errors due to its randomness.

We verify the robustness of our novel RC to variations in discretization and length of training data. In Fig. 3 we observe that it is quite robust and as expected, performs significantly better than comparable models especially with regards to short-term prediction. Here, we only see a decline in prediction performance for coarse discretizations \(dt{>}0.045\). The robustness of the long-term prediction is similar to traditional RC and SINDy. Interestingly, we see a decline in performance of NG-RC for larger training lengths \(T_{train}{>}700\) and finer discretizations \(dt{<}0.02\). Furthermore, we find out model to be reasonably robust to changes in hyperparameters and noise up to a signal-to-noise ratio of \({\sim }38\text {dB}\).

Furthermore, we analyze the prediction performance of our model on different chaotic systems, which have different nonlinear behavior. We choose the models so that we can understand the inner workings of our RC better. For example, the Halvorsen system has only quadratic nonlinearities with no interacting coordinates and hence the input matrix only needs the first three blocks (which represent the distinct coordinates). Another example to point out is the Rabinovich-Fabrikant system, which has cubic nonlinearities. Here, we see that a nonlinearity degree of \(\eta {\ge }3\) is necessary for a reasonable prediction. The model parameters and the prediction measures for the different systems are illustrated in Table 1.

Prediction measures (columns) of different models (rows) for 1000 different starting points on the Lorenz attractor (\(dt{=}0.025\), \(T_{train}{=}400\)). For the correlation dimension and the Lyapunov exponent we calculate the relative error to the respective test data. The mean and standard deviation of each distribution is denoted by a dashed and dotted black line, respectively.

Prediction measures (columns) of different models (rows) for different discretizations and lengths of training data of the Lorenz system. We vary the discretization (x-axis) and the length (y-axis) of the training data between (0.01, 0.05) and (300, 1000), respectively. For the correlation dimension and the Lyapunov exponent we calculate the relative error to the respective test data. Note that the forecast horizon has a logarithmic color scale. Each value in the heatmaps is the average over 100 variations of attractor starting points.

Discussion

In this work, we present a novel RC architecture that outperforms comparable methods in terms of short- and long-term predictions while requiring similarly minimal training datasets and computational power. The architecture is modified by restructuring the input weights and reservoir such that combinations of input data coordinates are fed separately into the reservoir. Therefore, we use a block-diagonal matrix of ones as the reservoir, which acts as an averaging operator for the reservoir states at each update step. Similar to average pooling layers in other machine learning methods, this can be interpreted as a way to primarily “extract” features that are more robust19. It also takes out the randomness of traditional RC. Instead of using a nonlinear activation function to create the reservoir states, we capture the nonlinearity of the data in the readout layer by appending higher orders of the reservoir states before the Ridge regression. We find that these changes lead to a significant improvement in the short- and long-term predictions of chaotic systems in comparison to models such as the traditional RC, NG-RC, and SINDy. In order to evaluate the prediction performance, we compute the largest Lyapunov exponent, the correlation dimension, and the prediction horizon.

This work can be extended in many directions. For example, the generation of the reservoir states can be explored to understand what the RC actually learns. In our modified architecture, the states are constructed by mixing the average of the past data with the new data with different “proportions”. Therefore, methods for constructing the reservoir states, such as the exponentially weighted moving average (EWMA) of the data20, should be explored. Related to this, the design of the readout is also an interesting topic to look into. Similarly to NG-RC and SINDy, nonlinear functions could be applied and appended to the reservoir states in order to capture more complex structures in the data.

Another study can be conducted on how the elimination of randomness from RC-like models affects their capabilities, e.g., information processing capacity21 or multifunctionality22.

Furthermore, the applicability to high-dimensional and highly nonlinear data can be analyzed and compared with models relying on large feature libraries, such as NG-RC and SINDy. Since the number of variables scales less rapidly in our architecture, it would be relevant to see how much computational power can be saved, especially for hardware RCs.

Moreover, the model can be tested on real-world examples from different disciplines to produce reliable short- and long-term predictions, especially in cases where training data is scarce and expensive.

Methods

Reservoir computers

A reservoir computer (RC)5,23,24 is an artificial recurrent neural network (RNN) that relies on a static network called reservoir. The term static means that, unlike other RNN approaches, the reservoir remains fixed once the network is constructed. The same is true for the input weights. Therefore, the RC is computationally very efficient since the training process only involves optimizing the output layer. As a result, fast training and high model dimensionality are computationally feasible, making RC well suited for complex real-world applications.

In the following we describe the individual components of the architecture and the modifications that we propose. To make the following section more understandable we introduce them in a high-level summary:

-

1.

Input weights: the input weights \({\textbf{W}}_{in}\) are designed so that each combination of the coordinates of the data is fed into the reservoir separately.

-

2.

Reservoir: the reservoir \({\textbf{A}}\) is chosen as a block-diagonal matrix consisting of matrices of ones with size b.

-

3.

Reservoir states: we do not use a nonlinear activation function in order to construct the reservoir states \({\textbf{r}}(t)\). Hence the iterative update equation reduces to:

$$\begin{aligned} {\textbf{r}}(t+1) = {\textbf{A}} \cdot {\textbf{r}}(t) + {\textbf{W}}_{in} \cdot {\textbf{u}}(t) \,, \end{aligned}$$(1)where \({\textbf{u}}(t)\) denotes the training data at time t.

-

4.

Readout: instead of only inserting the squared reservoir states25, our generalized states \(\tilde{{\textbf{r}}}\) contain all orders up to a nonlinearity degree \(\eta\):

$$\begin{aligned} \tilde{{\textbf{r}}} = \left\{ {\textbf{r}}, {\textbf{r}}^2, \dots , {\textbf{r}}^{\eta - 1}, {\textbf{r}}^\eta \right\} \,. \end{aligned}$$(2) -

5.

Training and Prediction: as in traditional RCs, we stack the training data \({\textbf{u}}\) and the corresponding reservoir states \(\tilde{{\textbf{r}}}\) to matrices \({\textbf{U}}\) and \(\tilde{{\textbf{R}}}\) respectively. We then solve the equation \({\textbf{W}}_{out}\cdot \tilde{{\textbf{R}}} = {\textbf{U}}\) by using Ridge regression26 resulting in:

$$\begin{aligned} {\textbf {W}}_{out} = {\textbf{U}}\cdot \tilde{{\textbf{R}}}^T \left( \tilde{{\textbf{R}}} \cdot \tilde{{\textbf{R}}}^T + \beta \,{\textbf{I}}\right) ^{-1} \,, \end{aligned}$$(3)where \(\beta {=}10^{-5}\) is the regularization constant that prevents overfitting and \({\textbf{I}}\) denotes the identity matrix. The prediction procedure of the reservoir states also stays the same (with the adjusted update equation):

$$\begin{aligned} {\textbf{r}}(t+1) = {\textbf{A}} \cdot {\textbf{r}}(t) + {\textbf{W}}_{in} \cdot {\textbf{W}}_{out} \cdot \tilde{{\textbf{r}}}(t) \,. \end{aligned}$$(4)Note that the reservoir \({\textbf{A}}\) only acts on the “simple” reservoir state \({\textbf{r}}\), while the second summand acting on \({\textbf{W}}_{out}\) is \(\tilde{{\textbf{r}}}\) containing all the nonlinear powers. The predicted time series \({\textbf{y}}(t)\) can then be obtained by the multiplication:

$$\begin{aligned} {\textbf{y}}(t) = {\textbf {W}}_{out} \cdot \tilde{{\textbf{r}}}(t) \,. \end{aligned}$$(5)

Input weights

In order to feed the input data \({\textbf{u}}(t)\) into the reservoir, an input weights matrix \({\textbf{W}}_{in}\) is defined, which determines how strongly each coordinate influences every single node of the reservoir network. In a traditional RC, the elements of \({\textbf{W}}_{in}\) are chosen to be uniformly distributed random numbers within the interval \([-1, 1]\).

In our novel framework we do not choose the elements randomly, but follow a structured approach. Firstly, in order to remove the randomness, for a block-size of b we take b equally spaced values between [1, 0].

To avoid non-invertible matrices for ridge regression, we take the square root values of all weights \({\textbf{w}}=\left( \sqrt{w_1}, \dots , \sqrt{w_b} \right) ^T\). Then we specifically structure the input matrix so that the different combinations of input data coordinates, also called features, are fed separately into the reservoir. In the case of a 3-dimensional system with coordinates \({\textbf{u}}(t)=(x, y, z)^T(t)\) and a nonlinearity order \(\eta {=}2\), the input matrix (multiplication) looks like:

where \(\otimes\) denotes the tensor product and hence each block represents one feature f. For n-dimensional data the feature space contains \(n_f=2^n{-}2\) elements. Thus, the dimension of the reservoir is \(d=n_f \cdot b\).

Reservoir

The core of an RC, the reservoir \({\textbf {A}}\), is usually constructed as a sparse Erdős-Rényi random network27 with number of nodes d. As for the choice of input weights, we choose the reservoir in such a way that each feature remains separate. Therefore, we use a block diagonal matrix J consisting of ones \({\textbf{J}}\) with block size b. Thus, each block \({\textbf{J}}_i\) can be directly mapped to a particular feature:

Similar to a traditional RC, we scale the spectral radius \(\rho ({\textbf{J}})\) of the reservoir to a target spectral radius \(\rho ^*\). While the computation of the spectral radius is usually a computationally expensive task28 that scales with \({\mathcal {O}}(d^3)\), the computation is no longer necessary for block diagonal matrices of ones \({\textbf{J}}\). This is because the eigenvalues of the matrix are equal to the block size b. Thus, the rescaled reservoir is given by:

Our default target spectral radius is \(\rho ^*{=}0.1\).

Reservoir states

As in traditional RC, we use a recurrent update equation to capture the dynamics of the system in the so-called reservoir states \({\textbf{r}}(t)\). This would normally require a bounded nonlinear activation function \(g(\cdot )\) that captures the nonlinear properties of the data. The activation function (usually the hyperbolic tangent) is applied on an element-by-element basis.

However, as mentioned earlier, we shift the nonlinearity entirely to the readout. Therefore, the time evolution of the states is iteratively determined via:

Due to our choice of architecture, the reservoir states for each feature can be obtained separately:

Hence, we can take all reservoir states belonging to one feature \({\textbf{r}}_f(t)\) — we call them feature states — and analyze them separately. This helps us to understand that the reservoir acts as an averaging operator on the feature states:

where \({\mathbf {I}}_b\) is a vector of ones of size b. Thus, in each iteration step, the feature states are “normalized” to the average of the past feature states \(\bar{{\textbf{r}}}_f(t)\) and a varying strength (determined by the input weight) is added to the new feature data f(t):

where f can be replaced by any other feature without loss of validity. The average, or “memory”, of each feature is tracked in the last row of the feature states since \(w_b{=}0\). Furthermore, this implies that target spectral radius \(\rho ^*\) determines how strongly the memory of the data is kept in each iteration step.

Readout

While a quadratic readout, i.e., the squared reservoir states \({\textbf{r}}^2\), is often added to a traditional RC to break the symmetry of the activation function25, we need the readout to capture the nonlinearity of the data. Therefore, we add even higher orders of nonlinearity to the so-called generalized states \(\tilde{{\textbf{r}}}\). For a given degree of nonlinearity \(\eta\) they look like the following:

Hence, for a degree of nonlinearity \(\eta\) and a block-size of b, the number of elements in the readout, which is also the number of variables to be optimized, is:

which we rewrite to binomial coefficients for better comparison. For high-dimensional data with high nonlinearity, the number of variables to be optimized is much smaller than for comparable predictive models such as NG-RC14 or SINDy15. This is because NG-RC and SINDy require combinations with recurrences. Therefore, the size of their feature space for a nonlinearity degree is \(\eta\) (at least):

which grows much faster for larger n and \(\eta\) than the expression for \(n_{out}\) in Eq. 16.

Prediction performance measures

When forecasting nonlinear dynamical systems, the goal is not only to exactly replicate the short-time trajectory, but also to reproduce the long-term statistical properties, or climate, of the system.

Correlation dimension

To assess the structural complexity of an attractor, we calculate its correlation dimension \(\nu\), which measures the fractal dimensionality of the space populated by its trajectory29,30. The correlation dimension is implicitly defined by the power-law relationship based on the correlation integral:

where n is the dimension of the data and \(c({\textbf {r}}^{\prime })\) is the standard correlation function. The integral represents the mean probability that two states in the phase space are close to each other at different time steps. This is the case if the distance between the two states is smaller than the threshold distance r. For self-similar, strange attractors, this power-law relationship holds for a certain range of r, which can be calibrated using the Grassberger-Procaccia algorithm31. The benefits of this measure are that it is purely data-based, it only needs a small number of data points, and it does not require any knowledge of the underlying governing equations of the system.

Lyapunov exponents

Besides its fractal dimensionality, the statistical climate of an attractor is also characterized by its temporal complexity represented by the Lyapunov exponents32. They describe the average rate of divergence of nearby points in the phase space, and thus measure sensitivity with respect to initial conditions33. There is one exponent for each dimension in the phase space. If the system has at least one positive Lyapunov exponent, it is classified as chaotic. Thus, it is sufficient for the purposes in this work to calculate only the largest Lyapunov exponent \(\lambda _{max}\):

where d(t) denotes the distance of two initially nearby states in phase space and the constant C is the normalization constant at the initial separation. Thus, instead of determining the full Lyapunov spectrum, we only need to find the largest one as it describes the overall system behavior to a large extent. Therefore we use the Rosenstein algorithm34.

Forecast horizon

To quantify the quality and duration of the short-term prediction of the trajectory we use the forecast horizon \(\tau\)12. It tracks the number of time steps for which the absolute error between each coordinate of the predicted \({\textbf{y}}_{pred}(t)\) and test \({\textbf{y}}_{test}(t)\) data does not exceed the standard deviation of the test data \(\varvec{\sigma }\left( {\textbf{y}}_{test}(t)\right)\):

We express the forecast horizon in units of the Lyapunov time by multiplying it with the discretization and maximum Lyapunov exponent of the test data \(\tau \cdot dt \cdot \lambda _{max}^{test}\). This measure is intended to evaluate how long a prediction can reproduce the actual trajectory before the chaotic nature of the system leads to an exponential divergence.

Dynamical systems

We apply our model to a number of synthetic chaotic systems. In our analyses, we focus on the following three due to their specific properties in terms of nonlinearity.

Lorenz

As it is common in RC research35,36 we use the Lorenz system which was initially proposed for modeling atmospheric convection37:

where the standard parametrization for chaotic behavior is \(\sigma {=}10\), \(\rho {=}28\), and \(\beta {=}8/3\).

Halvorsen

As in Hertreux and Räth25 we use the Halvorsen system38 for our analyses, which has a cyclic symmetry and, unlike to the Lorenz system, only has nonlinearities without interaction of coordinates:

where \(a{=}1.3\) and \(b{=}4\) are the standard parameters.

Rabinovich–Fabrikant

In order to test whether our model works also for systems entailing cubic nonlinearities, we analyze the Rabinovich-Fabrikant system39:

where \(\alpha {=}0.14\) and \(\gamma {=}0.1\) are the standard parameters.

Simulating and splitting data

Since we compare our model with NG-RC and SINDy, we use the same data setup as the original works14,15. Hence, we solve the differential equations of the systems using the Runge-Kutta method40 with a discretization of \(dt{=}0.025\) in order to ensure a sufficient manifestation of the attractor.

We discard the initial transient of \(T_{transient}{=}50000\), use the next \(T_{train}{=}400\) steps for training, then skip \(T_{skip}{=}10000\) steps, and use the remaining \(T_{test}{=}10000\) for testing the prediction. Hence in total we simulate \(T{=}70400\) steps.

To get robust results, we also vary the starting points on the attractor by using the rounded last point of one data sample as the starting point for the next. The initial starting points for the Lorenz, Halvorsen, and Rabinovich-Fabrikant systems are \((-14, -20, 25)\), \((-6.4, 0, 0)\), and \((-0.4, 0.1, 0.7)\), respectively.

Comparable prediction models

We compare our novel RC to other models designed for predicting dynamical systems. We briefly describe them in the following.

Traditional reservoir computer

For the traditional RC architecture we choose an Erdős-Rényi network of dimension \(d{=}600\) with a target spectral radius \(\rho ^*\) and a quadratic readout. This equals 1200 variables per coordinate to be optimized. In order to get robust results we repeat the prediction for \(1000\) realizations and take the average of the prediction measures.

Next generation reservoir computer

The next generation reservoir computer (NG-RC) developed by Gauthier et al.14 is a so-called nonlinear vector autoregression (NVAR) machine and thus, does not require a reservoir. It solely needs the feature vector, which consists of time-delay observations of the data and nonlinear functions of these observations. The resulting output weights can be used to construct the governing equations of the data. We use the standard setting with time delays \(k{=}2\) and skips \(s{=}1\).

Sparse identification of nonlinear dynamics

Sparse identification of nonlinear dynamics (SINDy)15 discovers the underlying dynamical system of data by learning its governing equations through sparse regression. It is similar to NG-RC, but uses an iterative approach to filter only relevant features. We use the standard parametrization and the official Python package PySINDy41,42.

Data availability

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

References

S. L. Brunton and J. N. Kutz, Data-driven Science and Engineering: Machine Learning, Dynamical Systems, and Control ( Cambridge University Press, 2022)

Creswell, A. et al. Generative adversarial networks: An overview. IEEE Signal Process.Mag. 35, 53 (2018).

Zhang, J., Wang, Y., Molino, P., Li, L. & Ebert, D. S. Manifold: A model-agnostic framework for interpretation and diagnosis of machine learning models. IEEE Trans. Vis. Comput. Graph. 25, 364 (2018).

Roscher, R., Bohn, B., Duarte, M. F. & Garcke, J. Explainable machine learning for scientific insights and discoveries. IEEE Access 8, 42200 (2020).

Jaeger, H. The “echo state’’ approach to analysing and training recurrent neural networks-with an erratum note. Bonn Ger. Ger. Natl. Res. Center Inf. Technol. GMD Tech. Rep. 148, 13 (2001).

D. Prokhorov, Echo state networks: appeal and challenges, in Proc. 2005 IEEE International Joint Conf. on Neural Networks, 2005., Vol. 3 (IEEE, 2005) pp. 1463–1466

Broido, A. D. & Clauset, A. Scale-free networks are rare. Nat. Commun. 10, 1017 (2019).

Gerlach, M. & Altmann, E. G. Testing statistical laws in complex systems. Phys. Rev. Lett. 122, 168301 (2019).

G. Holzmann, Reservoir computing: a powerful black-box framework for nonlinear audio processing, in International Conf. on Digital Audio Effects (DAFx) (Citeseer, 2009)

J. Platt, H. Abarbanel, S. Penny, A. Wong, and R. Clark, Robust forecasting through generalized synchronization in reservoir computing, in AGU Fall Meeting Abstracts, Vol. 2021 ( 2021) pp. NG25B–0522

Tanaka, G. et al. Recent advances in physical reservoir computing: A review. Neural Netw. 115, 100 (2019).

Haluszczynski, A. & Räth, C. Good and bad predictions: Assessing and improving the replication of chaotic attractors by means of reservoir computing. Chaos Interdisc. J. Nonlinear Sci. 29, 103143 (2019).

Bollt, E. On explaining the surprising success of reservoir computing forecaster of chaos? the universal machine learning dynamical system with contrast to var and dmd a3b2 show [feature]. Chaos Interdisc. J. Nonlinear Sci. 31, 013108 (2021).

Gauthier, D. J., Bollt, E., Griffith, A. & Barbosa, W. A. Next generation reservoir computing. Nat. Commun. 12, 5564 (2021).

Brunton, S. L., Proctor, J. L. & Kutz, J. N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. 113, 3932 (2016).

Weise, C. L. The asymmetric effects of monetary policy: A nonlinear vector autoregression approach. J. Money Credit Bank. 85, 25468 (1999).

Gonon, L. & Ortega, J.-P. Reservoir computing universality with stochastic inputs. IEEE Trans. Neural Netw. Learn. Syst. 31, 100 (2019).

Hart, A. G., Hook, J. L. & Dawes, J. H. Echo state networks trained by tikhonov least squares are l2 (\(\mu\)) approximators of ergodic dynamical systems. Phys. D Nonlinear Phenom. 421, 132882 (2021).

D. Yu, H. Wang, P. Chen, and Z. Wei, Mixed pooling for convolutional neural networks, in Rough Sets and Knowledge Technology: 9th International Conf., RSKT 2014, Shanghai, China, October 24-26, 2014, Proc. 9 (Springer, 2014) pp. 364–375

Hunter, J. S. The exponentially weighted moving average. J. Qual. Technol. 18, 203 (1986).

Dambre, J., Verstraeten, D., Schrauwen, B. & Massar, S. Information processing capacity of dynamical systems. Sci. Rep. 2, 1 (2012).

Flynn, A., Tsachouridis, V. A. & Amann, A. Multifunctionality in a reservoir computer. Chaos Interdisc. J. Nonlinear Sci. 31, 013125 (2021).

Maass, W., Natschläger, T. & Markram, H. Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 14, 2531 (2002).

Jaeger, H. & Haas, H. Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication. Science 304, 78 (2004).

J. Herteux and C. Räth, Breaking symmetries of the reservoir equations in echo state networks. Chaos Interdisc. J. Nonlinear Sci. 30, 123142 ( 2020)

Hoerl, A. E. & Kennard, R. W. Ridge regression: Applications to nonorthogonal problems. Technometrics 12, 69 (1970).

Erdos, P. et al. On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci 5, 17 (1960).

Paige, C. C. Bidiagonalization of matrices and solution of linear equations. SIAM J. Numer. Anal. 11, 197 (1974).

P. Grassberger and I. Procaccia, Measuring the strangeness of strange attractors, in The Theory of Chaotic Attractors (Springer, 2004) pp. 170–189

Mandelbrot, B. How long is the coast of britain? Statistical self-similarity and fractional dimension. Science 156, 636 (1967).

Grassberger, P. Generalized dimensions of strange attractors. Phys. Lett. A 97, 227 (1983).

Wolf, A., Swift, J. B., Swinney, H. L. & Vastano, J. A. Determining lyapunov exponents from a time series. Phys. D Nonlinear Phenom. 16, 285 (1985).

Shaw, R. Strange attractors, chaotic behavior, and information flow. Z. Naturforschung A 36, 80 (1981).

Rosenstein, M. T., Collins, J. J. & De Luca, C. J. A practical method for calculating largest lyapunov exponents from small data sets. Phys. D Nonlinear Phenom. 65, 117 (1993).

Pathak, J., Lu, Z., Hunt, B. R., Girvan, M. & Ott, E. Using machine learning to replicate chaotic attractors and calculate lyapunov exponents from data. Chaos Interdisc. J. Nonlinear Sci. 27, 121102 (2017).

Lu, Z., Hunt, B. R. & Ott, E. Attractor reconstruction by machine learning. Chaos Interdisc. J. Nonlinear Sci. 28, 061104 (2018).

Lorenz, E. N. Deterministic nonperiodic flow. J. Atmos. Sci. 20, 130 (1963).

S. Vaidyanathan and A. T. Azar, Adaptive control and synchronization of halvorsen circulant chaotic systems, In Advances in chaos theory and intelligent control ( Springer, 2016) pp. 225–247

Rabinovich, M. I., Fabrikant, A. L. & Tsimring, L. S. Finite-dimensional spatial disorder. Soviet Phys. Usp. 35, 629 (1992).

E. Hairer, S. P. Nørsett, and G. Wanner, Solving Ordinary Differential Equations I ( Springer, 1993)https://doi.org/10.1007/978-3-540-78862-1

B. de Silva, K. Champion, M. Quade, J.-C. Loiseau, J. Kutz, and S. Brunton, Pysindy: A python package for the sparse identification of nonlinear dynamical systems from data. J. Open Source Softw. 5, 2104 (2020)https://doi.org/10.21105/joss.02104

A. A. Kaptanoglu, B. M. de Silva, U. Fasel, K. Kaheman, A. J. Goldschmidt, J. Callaham, C. B. Delahunt, Z. G. Nicolaou, K. Champion, J.-C. Loiseau, J. N. Kutz, and S. L. Brunton, Pysindy: A comprehensive python package for robust sparse system identification. J. Open Source Softw. 7, 3994 (2022) https://doi.org/10.21105/joss.03994

Aizawa, Y. et al. Stagnant motions in hamiltonian systems. Progr. Theor. Phys. Suppl. 98, 36 (1989).

Dadras, S. & Momeni, H. R. A novel three-dimensional autonomous chaotic system generating two, three and four-scroll attractors. Phys. Lett. A 373, 3637 (2009).

Rossler, O. An equation for hyperchaos. Phys. Lett. A 71, 155 (1979).

Qi, G., Chen, G., van Wyk, M. A., van Wyk, B. J. & Zhang, Y. A four-wing chaotic attractor generated from a new 3-d quadratic autonomous system. Chaos Solitons Fractals 38, 705 (2008).

Chen, G. & Ueta, T. Yet another chaotic attractor. Int. J. Bifurc. Chaos 9, 1465 (1999).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Contributions

H.M conducted the research and wrote the main manuscript text. C.R. and D.P. assisted in the research. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ma, H., Prosperino, D. & Räth, C. A novel approach to minimal reservoir computing. Sci Rep 13, 12970 (2023). https://doi.org/10.1038/s41598-023-39886-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-39886-w

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.