Abstract

Data modeling requires a sufficient sample size for reproducibility. A small sample size can inhibit model evaluation. A synthetic data generation technique addressing this small sample size problem is evaluated: from the space of arbitrarily distributed samples, a subgroup (class) has a latent multivariate normal characteristic; synthetic data can be generated from this class with univariate kernel density estimation (KDE); and synthetic samples are statistically like their respective samples. Three samples (n = 667) were investigated with 10 input variables (X). KDE was used to augment the sample size in X. Maps produced univariate normal variables in Y. Principal component analysis in Y produced uncorrelated variables in T, where the probability density functions were approximated as normal and characterized; synthetic data was generated with normally distributed univariate random variables in T. Reversing each step produced synthetic data in Y and X. All samples were approximately multivariate normal in Y, permitting the generation of synthetic data. Probability density function and covariance comparisons showed similarity between samples and synthetic samples. A class of samples has a latent normal characteristic. For such samples, this approach offers a solution to the small sample size problem. Further studies are required to understand this latent class.

Similar content being viewed by others

Introduction

Data modeling requires sufficient data for exploration and reproducibility purposes. This is especially relevant to biomedical-healthcare research, where data can be limited; although this field is broad, a few examples include risk prediction1, response to therapy2 and benign malignant classification3,4,5. Unfortunately, data can be limited for variety of reasons: the study of low-incidence diseases or underserved/underrepresented subpopulations6; clinic visitation hesitancy7,8; the inability to share data across facilities9; cost of molecular tests; and study timeframes. We, the authors, have worked in biomedical-healthcare research for many years and have experienced this persistent problem over decades.

Multivariate modeling is often exploratory that can decrease model stability in various ways. Here we explain frequent approaches that we have experienced or witnessed. The process starts by analyzing data from the target population for a variety of goals such as: open-ended analyses by studying many different data characteristics searching for correlations and patterns; subgrouping the dataset; testing hypothesis feasibilities with varying endpoints; exploring multiple hypotheses simultaneously; feature selection; selecting the most suitable model; or estimating model parameters with an optimization procedure. In practice, there are virtually unlimited ways to search through a given data sample. Data mining of this sort may not always be viewed in the most positive light10, but on the other hand it is also the nature of discovery, noting there is often a compromise between these positions. Extensive subgroup analyses can effectively deplete the sample. When this applies, we term it the small sample problem. In the final stage, the fully specified model (i.e., the model with its parameters fixed) is validated with new data to prove its generalizability. Both the exploration and final stages depend critically on having an adequate sample size.

Determining the adequate sample size in the multivariate setting is a difficult task11 and has relevance to the small sample problem. Adequate multivariate sample size is a function of both the analysis technique and covariance structure. For example, a multivariate two-sample test with normally distributed data and common covariance, Hotelling’s T2, is appropriate when comparing mean vectors12. In a broad sense, when the variables under consideration tend to be more highly correlated, the adequate sample size decreases and vice versa. Adequate sample size is a function of the number of free model parameters, which does not necessarily correspond with the number of variables13. In ordinary linear regression modeling with d noninteracting variables, there are about d parameters that must be determined. In contrast when taken to the limit, partial least squares regression14 has roughly d2 parameters, and deep neural network architectures have even greater number of parameters, requiring large sample sizes for a given design15 (see related table in15 for examples). These modeling techniques illustrate that an adequate sample size under one condition may not be optimal for another. It is our premise that the adequate sample size for a given multivariate prediction problem that allows independent validation deserves more attention beyond larger sample sizes are better, especially when normality assumptions do not hold. By hypothesis, a technique that can generate realistic synthetic data will provide benefits in modeling endeavors. Such an approach could be used to augment an inadequate sample size for modeling and validation purposes or to study sample size requirements for a given multivariate covariance structure.

Synthetic data applications in health-related research use a variety of techniques. Some methods are used for generating samples from large populations16,17,18,19. These approaches include hidden Markov models18, techniques that reconstruct time series data coupled with sampling the empirical probability density function of the relevant variables16, and methods that estimate probability density functions (pdfs) from the data, not accounting for variable correlation19. Other work used moment matching to generate synthetic data but does not consider relative frequencies in the comparison analysis20. Discussions on synthetic data generation techniques indicate that the small sample size condition has received little analytical attention20,21.

Our synthetic data technique estimates a multivariate pdf for arbitrarily distributed data, including when normal approximations fail to hold22. This initial work, based on multivariate kernel density estimation (mKDE) with unconstrained bandwidths, was illustrated with d = 5 data from mammographic case–control data22. Synthetic populations (SPs) of arbitrarily large size were generated from samples of limited size. However, mKDE has noted efficiency problems for high dimensionality23,24. As the dimensionality increases, the sample size requirement apparently becomes exceeding large, noting this area is under investigation. Although categorizing a problem as high or low dimensionality may be dependent on many factors, reasonable arguments suggest that high dimensionality may be defined as 3 < d ≤ 50, where d is the number of variables considered25; in that, density estimators should be able to address this range25. Here we let d = 10 so that many of the findings can be presented graphically or reasonably tabulated, and modeling problems in healthcare research can be within this range.

In this report, we present modifications to our method to mitigate the mKDE efficiency problem under specific conditions (latent normality) and address synthetic data generation in relatively higher dimensionality (d = 10). This modified approach decomposes an arbitrarily distributed multivariate problem into multiple univariate KDE (uKDE) problems while characterizing the covariance structure independently26. We are evaluating whether this approach can transform an arbitrarily distributed multivariate sample into an approximate multivariate normal form, which we define as a sample with a latent normal characteristic. In the universe of arbitrarily distributed samples, there is a multivariate normal subgroup. The technique for generating synthetic data for this normal subgroup is relatively straightforward and well-practiced. By hypothesis, our approach seeks to extend these straightforward techniques to the latent normal class by determining when (or if) it exists. Developing the analytics to detect this condition and then leveraging it to generate synthetic data are essential elements of our work26.

Methods

Overview

Our modified synthetic data generation and analytic techniques have sequential components and many related analyses. Therefore, clear definitions, preliminaries, and a brief outline are given before the details are provided. Justifications are also discussed here when warranted.

Definitions

Population is used to define a hypothetical collection of virtually unlimited number of either real or synthetic entities from which samples comprised of observations or realizations may exist or can be drawn. The exception for the use of population is when explaining differential evolution (DE) optimization27 used for uKDE bandwidth determination. The DE-population is limited and defined specifically. Sample defines a collection of n real observations with d attributes (variables) from the space of possible samples, represented mathematically as a n × d matrix (rows = observations, columns = attributes). Column vectors are designated with lower-case bold letters. For example, individual attributes are referred to as x, a column vector. Vector components are designated with lower-case subscripted letters. The components of x are referenced as xj for j = 1, 2, …, d and assumed continuous. The multivariate pdf for x is p(x). X refers to the input variable space, that is, x exists in the X representation. We assume p(x) exists at the population level, but not accessible. In practice, we evaluated normalized histograms throughout this work for all variables considered both univariate and multivariate (i.e., empirical pdfs), also referred to as pdfs for brevity; we use this term to refer to attributes at the population level as well as at the sample level. One-dimensional (1D) marginal pdfs for p(x) are expressed as pj(xj). Matrices are designated with upper case bold lettering. For example, X is the n × d matrix with n observations of x in its rows (i.e., the ith row contains the d attributes for the ith observation and the jth column of x has n realizations of xj). Double subscripts are used for both specific realizations and matrix element indices. That is, xij is the jth component for the ith realization in X (also is the indexing for X). Variables in X are mapped to the Y representation. This creates the corresponding entities in Y: (1) the vector y with d components; (2) the multivariate pdf, g(y), and its marginal pdfs, gj(yj); and (3) the matrix Y defined analogously as X. We also work in the T representation (uncorrelated variables) as explained below, where t, tj, and T are defined similarly. Likewise, r(t) is the multivariate pdf in T with marginals, rj(tj). We define the cumulative probability functions (i.e., the indefinite integral approximation of a given univariate pdf) for pj(xj) and gj(yj) as Pj(xj) and Gj(yj), respectively. Covariance quantities are calculated with the normal multivariate form: E(w − mw)(v − mv), where E is the expectation operator, w and v are arbitrary random variables with means mw and mv. The corresponding covariance matrices are expressed as Cx, Cy, and Ct, respectively (or Ck generically). When an entity is given the subscript, s, it then defines the corresponding synthetic entity. Standardized normal defines a zero mean–unit variance normal pdf, used in both the univariate and multivariate scenarios. Parametric for this report refers to functions that can be expressed in closed form.

Preliminaries

General biomedical healthcare data characteristics are discussed to overview some of their characteristics. Measurements such as body mass index (BMI) and age, or measurements taken from image data can have right-skewed pdfs because they are often positive-valued and not inclusive of zero (see Figs. 1, 2 and 3 for examples). Such measures can bear varying levels of correlation (see lower parts of Tables 1, 2 and 3). Thus, an arbitrary p(x) may not lend itself to parametric modeling in X (i.e., normality and non-correlation assumptions do not apply in many instances). To render such data into a more tractable form, a series of steps (see Fig. 4) were taken to condition X; by premise, these steps will permit characterizing the sample with parametric means and then generating similar multivariate synthetic data without mKDE.

Outline of the processing steps

When describing these steps, we also briefly discuss the analysis at a given step (also provided in detail in the methods). The process starts with a given sample in X (Fig. 4, top left). The processing flow for the sample (X–Y–T) is illustrated in the top row of Fig. 4, and the reversed SP generation flow (Ts–Ys–Xs) in the bottom row.

Step 1 Univariate maps were constructed (Fig. 4, top-left) to transform a given X measurement to a standardized normal, producing the respective marginal pdf set in Y (Fig. 4, top-middle). Maps were constructed with an augmented sample size using optimized uKDE, addressing the small sample size problem. uKDE was used to generate synthetic xj. Here, we augmented the sample size with the goal of filling gaps in the input marginal pdfs of xj (sample) to complement the map constructions. By hypothesis, this step addresses the small sample size problem by guaranteeing continuous smooth maps that will produce standardized normal pdfs from the sample. Synthetic xj generated in this fashion do not maintain the covariance relationships in X and were not used further; only xj from the sample were mapped to Y, and KDE was not used beyond this point. There is no guarantee that a set of normal marginals in Y will produce a multivariate normal pdf. Although the reverse is always true because a multivariate normal has univariate normal marginals. In practice, g(y) from the sample could be assessed at this point to estimate how well it approximates normality. If the latent normal approximation is poor, another synthetic approach could be pursued, or the process could be discontinued. Here we forgo such testing at this step (normality was tested for in steps 3 and 4 instead) and move through all steps to illustrate the techniques. We will also discuss a possible modification that could be investigated when the sample has a poor latent normal characteristic approximation, later in the discussion.

Step 2 Principal component analysis (PCA) was used to decouple the variables in Y producing uncorrelated variables in T (Fig. 4, top-right).

Step 3 Synthetic data was generated in T (Fig. 4, bottom-left) as uncorrelated random variables. Here we assumed that each marginal in T from the sample could be approximated as normal with variance given by the jth eigenvalue of Cy. To generate synthetic data, the columns of Ts were populated as normally distributed random variables with these specified variances (noting, the columns lack correlation). We refer to the realizations in T as the SP (i.e., Ts), noting the column length (number of synthetic entities) can be arbitrarily large. To address the normal characteristic, univariate marginal and multivariate pdfs from the sample were tested for normality at this point.

Step 4 The inverse PCA transform (Fig. 4, bottom-middle) of Ts produced the SP in Y (Ys), thereby restoring the covariance relationships that were removed in Step 2. We note, this technique (steps 3 and 4) of producing multivariate normal data is a practiced approach when the sample is multivariate normal or well approximated as such. For reference, the multivariate standardized normal in Y is expressed as

where Cy can be approximated as the covariance matrix from a given sample. If synthetic samples are poor replicas of their sample, it follows the sample’s g(y) will be a poor approximation of multivariate normality (i.e., the sample is not in the latent normal class).

We evaluated how well the inverse PCA transformation preserved the covariance (Cy) and the method’s capability of restoring the univariate/multivariate pdfs in Y (i.e., normality comparisons) rather than in Step 2.

Step 5 Each synthetic variable in Y is inverse mapped to X (Fig. 4, bottom left). This reversed Step 1, thereby producing the SP in X (Xs), and restoring the covariance relationships by hypothesis. The respective pdfs and covariance matrices in X were compared with those from synthetic samples; pdfs were also tested for normality.

It is important to clarify a few aspects of this work. The univariate mapping from X to Y creates a set of univariate marginals normally distributed that can produce a multivariate normal, but not guaranteed. The comparison of univariate marginal pdfs, however, is no guarantee that the respective multivariate pdfs are reasonable facsimiles because the covariance structure has been removed. Many univariate pdf comparisons are provided between samples and synthetic samples in addition to multivariate comparisons, because these allow visualizing similarities with the above stipulations. When a given sample has the latent normal characteristic, the SP generation is greatly simplified, and then it is defined by Eq. (1). The work below shows how to generate synthetic data when this characteristic holds. We use three datasets that were selected pseudo-randomly. In the space of samples (virtually unlimited), we do not know the probability that a sample selected at random will have this latent normal characteristic. The main objectives are to present the analysis components with the methods for testing for the latent characteristic, give a thorough investigation, demonstrate that the synthesis produces realistic data when this latent condition exists, and then discuss further analyses.

Study data

Samples were derived from two sources of measurements: (1) mammograms and related clinical data (n = 667), and (2) dried beans (n = 13,611)28. Most technical aspects of these data are not relevant for this report. Mammography data included all observations with mammograms from a specific imaging technology, thereby defining n = 667. We used the dried bean data to add variation to the analyses as the variable nomenclatures are very different from the mammogram data, noting at this point the source of data is not germane. From mammograms, we considered two sets of measurements each with d = 10 variables referred to as Sample 1 (DS1) and Sample 2 (DS2). DS1 has 8 double precision measurements from the Fourier power spectrum in addition to age and BMI, both captured as integer variables. The Fourier attributes are from a set of measurements described previously29; the first 8 measurements from this set are labeled as Pi for i = 1–8. These Fourier measures are consecutive and follow an approximate functional form30, and thus represent variables that are very different than those in DS2 (or Sample 3 below). To cite the covariance quantities and correlation coefficients, we used a modified covariance (covariance for short) table format for efficiency because Ck is symmetric. In these tables, entries below the diagonal are the respective correlation coefficients, whereas the elements along the diagonal (variance quantities) and above are the covariance quantities. The covariance table for DS1 is shown in Table 1a. DS2 contains 8 double precision summary measurements derived from the image domain: mean, standard deviation (SD); SD of a high-pass (HP) filter output, SD of a low-pass (LP) filter output; local SD summarized; P20 Fourier measure (from the set described for DS1 measurements); local spatial correlation summarized31; and breast (Br) area measured in cm2. Age and BMI (from DS1) were also included in this dataset. These variables were selected virtually at random to give d = 10 and possibly provide a different covariance structure than DS1. The covariance table is shown in Table 2a. Neither DS1 nor DS2 were used in our related-prior mKDE synthetic data work. Selected measures and realizations from the dried bean dataset28 are referred to as Sample 3 (DS3). The bean data has 17 measurements (floating point) from 7 bean types. We selected 10 measures at random to make the dimensionality of DS3 compatible with the other two datasets giving this set of variables: area (1); minor axis (5); eccentricity (6); convex area (7); equivalent diameter (8); extent (9); solidarity (10); roundness (11); shape factor 3 (15), and shape factor 4 (16). Here, parenthetical references give the variable number listed in the respective resource (see28). Both bean type (bean type = Sira, with n = 2636) and n = 667 observations were selected at random to create DS3. Keeping n = 667 constant across datasets permits consistent statistical comparisons. For example, confidence intervals (CIs) and other comparison metrics are dependent upon the number of observations. The covariance table is shown in Table 3a. The analysis of three samples supports the evaluation of the processing scheme under generalized scenarios. The means and standard deviations for xj in each dataset are provided in Table 4. Note, the dynamic range of the means and standard deviations within a given sample vary widely in some instances.

KDE, optimization, and mapping

The mapping (Step 1) relies on generating synthetic xj with uKDE. Each bandwidth parameter was determined with an optimization process wherein synthetic data from uKDE was compared with the sample. There is a continued feedback loop between the sample, synthetic data generation, and comparison during the optimization process. When the optimization was completed, a given map was constructed.

uKDE

For the map construction in Step 1 and as a modification to our previous work22, uKDE was used to generate realizations from each pj(xj) given by

where xj is the synthetic variable for this discussion, xij are observations from a given sample, kj is a normalization factor, and hj is the univariate bandwidth parameter.

Optimization

Differential evolution optimization27 was used to determine each hj in Eq. (2). The population of candidate hj evolves over generations to a solution, as described in detail previously22. To form generation zero for a given xj, the DE-population (n = 1000) was initialized randomly (uniformly distributed) within these bounds: (0.0, 4 × the variance of xj) because a given range should span the solution for hj. The DE-population size stays constant across all generations. By expectation, the populations become more fit according to the fitness function over generations. A given generation is found by comparing two-DE populations (1000 pair-wise competitions) of candidate hj solutions derived from the previous generation. For a given pairwise competition, two respective SPs were generated for each candidate hj. Synthetic samples (n = 667) were drawn from each SP and Pj(xj) from the sample was compared with each Pj(xj) derived from its synthetic sample using Eq. (2). The hj candidate [used in Eq. (2)] that produced a smaller Dj was used to populate the current generation, where Dj is the difference metric derived from the fitness function. This process was repeated for 30 generations. In summary, 30 × 2 × 1000 synthetic samples were compared with the sample via Pj(xj) comparisons for a given xj to derive its respective hj used in Eq. (2). A given X to Y map was then constructed with xj sampled from Eq. (2) with hj = E[terminal population of candidate hj].

As a modification, the Kolmogorov Smirnov (KS) test32 was used as the fitness function in the DE optimization. This is a nonparametric test that can be used to compare two numerically derived cumulative probability functions or compare a numerically derived curve to a reference32. Here we compare the respective numerical univariate cumulative probability functions derived from synthetic samples with those derived from the sample. The difference metric, Dj, for the KS test is the absolute maximum difference between the two cumulative probability functions under comparison.

Mapping

For each map in Step 1, we solve Pj(xj) = Gj(yj) numerically with interpolation methods described previously33,34, where Pj(xj) and Gj(yj) are assumed to be monotonically increasing. This solves the random variable transformation for each yj analogous to histogram matching with double precision accuracy. Synthetic yj (n = 106) were generated as standardized normal random variables using the Box-Muller (BM) method. Maps from X to Y are expressed as yj = mj(xj), where mj is the jth map. The corresponding inverse maps, \({\mathrm{x}}_{\mathrm{j}}={\mathrm{m}}_{\mathrm{j}}^{-1}({\mathrm{y}}_{\mathrm{j}})\), were derived numerically by inverting a given map and solving for xj. The map construction was complemented by generating synthetic xj with Eq. (2) using hj derived from DE optimization. Synthetic xj generated here were not used further.

Synthetic population generation

Synthetic populations (SPs) were generated in the uncorrelated T representation and converted back to X via Y. In Step 2, the PCA transform for the sample is given by

where P is a d × d matrix with uncorrelated normalized columns. These are the normalized eigenvectors of Cy that capture the sample’s covariance structure. Ct is diagonal with: cjj =\({\upsigma }_{\mathrm{j}}^{2}(\mathrm{t})\) corresponding to the ordered eigenvalues of Cy. We make the approximation that r(t) from the sample has the multivariate normal form expressed as

When the multivariate normality approximation holds in Y, it should hold in T. In Step 3, synthetic tj were populated as zero mean independent normally distributed random variables with variances = \({\upsigma }_{\mathrm{j}}^{2}(\mathrm{t})\) using the BM method, producing the SP in T (Ts). The row length of Ts defines the number of realizations in each SP and is arbitrary. Here, we let n = 106 for all SPs. In Step 4 to construct the SP in Y (Ys), the inverse PCA transform was used by substituting Ts for T in Eq. (3) giving

With this process, g(y) = gn(y) for synthetic data. By premise, the covariance of Ys should be like that of Y. In Step 5 to produce the SP in X (Xs), synthetic yj were inverse mapped. Similarly, the covariance of X should be like that of Xs. An example is also provided to illustrate that Xs is densely populated in contrast with the sparse sample in X.

Statistical methods

The goals are to evaluate the latent normal characteristic and to produce synthetic data that is statistically like its sample. This analysis is based on both multiple univariate/multivariate pdf and covariance comparisons. A given synthetic sample (n = 667) was drawn at random from its SP. The same realizations from a given synthetic sample were used for comparisons in X, Y, and T, when applicable.

Probability density function comparisons

Univariate pdf comparisons

The KS test (described in Step 1) was used for all univariate pdf comparisons. For such comparisons, we selected the test threshold at the 5% significance level as the critical value. In X, pj(xj) from the sample were tested for normality and compared with their respective pdfs from synthetic samples created in Step 5. In Y, gj(yj) from the sample were compared with the respective pdfs from their synthetic samples produced by Step 4; this implicitly evaluated univariate normality in Y because synthetic yj were derived from a multivariate normal process in Ts. In T, we compared rj(tj) from the sample with their respective pdfs derived from zero mean normally distributed random variables (i.e., synthetic tj) with variances = \({\upsigma }_{\mathrm{j}}^{2}(\mathrm{t})\) [from Step 3]. For each tj, the sample was compared with 1000 synthetic samples, and the percentage of times that measured Dj was less than the critical test value was tabulated.

Distribution free multivariate pdf comparisons

To evaluate whether the sample and synthetic samples were drawn from the same distribution without assumptions, we used the maximum mean discrepancy (MMD)35 test. This is a kernel-based (normal kernel) analysis that computes the difference between every possible vector combination between and within two samples (excluding same vector comparisons). To determine the kernel parameter for these tests, we used the median heuristic35,36. This analysis is based on the critical value (MMDc) at the 5% significance level and the test statistic \({(\mathrm{MMD}}_{\mathrm{u}}^{2})\). Both quantities are calculated from the two samples under comparison. This test has an acceptance region given the two distributions are the same: \({\mathrm{MMD}}_{\mathrm{u }}^{2}< {\mathrm{MMD}}_{\mathrm{c}}\) (see Theorem 10 and Corollary in35). This test was applied in X, Y, and T. Note, when applying this test in either T or Y, it is implicitly testing the sample’s likeness with multivariate normality. In X, Y, and T, 1000 synthetic samples were compared with the sample. The test acceptance percentage was tabulated. \({\mathrm{MMD}}_{\mathrm{u}}^{2}\) and \({\mathrm{MMD}}_{\mathrm{c}}\) values are provided as averages over all trials because they change per comparison.

Random projection multivariate normality evaluation

Random projections were used to develop a test for normality. The vector w with d components is multivariate normal if the scalar random variable, z = uTw, is univariate normal, where u is a d component vector with unit norm that is defined as a projection vector in this report37,38. As mentioned by Zhou and Saho38, we developed this formulism into a specific random projection test. To actualize such a test to probe the samples and synthetic samples similarity with normality, the projection vector u was generated randomly 1000 times, referenced as us. Here, s is the projection index ranging from [1,1000]. The projection equation is then expressed as

where z|s defines the scalar z conditioned upon s. In Eq. (6), x, y, or t was substituted for w, and given projection was taken over all realizations (i.e., n = 667) of given sample. These realizations of z|s were used to form the normalized histogram that approximates the conditional pdf for the left side of Eq. (6) defined as f(z|s). A different series of us was produced for each representation; once a given series was produced, us remains fixed. The components of us were generated as standardized normal random variables, where us was normalized to unit norm. For a given sample, f(z|s) was tested for normality using the KS test. This procedure was repeated for all random projections (all s), resulting in 1000 KS test comparisons for normality. The percentage of the times that the null hypothesis was not rejected was tabulated as the normality similarity gauge. We refer to this procedure as the random projection test. It is the percentage of times that w was not rejected when probed in 1000 random directions. This test was performed once for each sample and with 100 synthetic samples and averaged. Here, the same us series used to probe a given sample was also used to probe 100 of its respective synthetic samples. We note, synthetic samples are multivariate normal in Y and T by their construction. Tests were performed on synthetic samples (Y and T) to give control standards as normal comparators. Tests were also performed in X: (1) as control comparator for the test itself; and (2) to determine if a given sample was multivariate normal before undergoing the mapping.

To gain both insight into Eq. (6) and the test, expressions for both z|s and f(z|s) are developed. First, z|s results from a linear random variable transform given by

where uk are the components of us, and hk(wk) are the univariate pdfs for scaled wk. To check one endpoint, we assume wk are independent as a coarse approximation to our samples. Then, f(z|s) results from repeated convolutions given by

where c−1 = u1 × u2 × u3 × u4 × … × ud, and hk(wk) ~ hk. If some hk(wk) have relatively much larger variances (widths) than others, their functional forms can tend to predominate Eq. (8).

Mardia multivariate normality test

This is a two component test that uses multivariate skewness and kurtosis for evaluating deviations from normality37, applied in X, Y and T. It produces a deviation measure for each component as well as a combined measure; we cite the component-findings. This test was applied in X as a control. Outlier elimination techniques were not applied.

Covariance comparisons

Two methods were used to evaluate the covariance similarity between samples and synthetic samples: with (CIs) and eigenvalue comparisons.

Comparisons with confidence intervals

Each covariance matrix element between the sample and its respective synthetic samples was compared with CIs. We assumed the sample and synthetic samples were drawn from the same distributions. We used the elements from each Cx and Cy as point estimates from a given sample in both X and Y. One thousand synthetic samples (n = 667) were used to calculate 1000 covariance matrices (in X and Y). For each matrix element, the respective univariate pdf was formed, and 95% CIs were calculated. This procedure was repeated 1000 times. The percentage of times the sample’s point estimate (for each element in Cy and Cx) was within the synthetic element’s CIs was tabulated.

Comparisons with PCA

The eigenvalues from Cy were used as the reference comparators under two conditions. For condition 1, the PCA transform determined with the sample was applied to a synthetic sample (sample/syn test). Synthetic eigenvalues were estimated by calculating the variances of synthetic tj. For condition 2, the PCA transform determined with a synthetic sample selected at random was applied to the sample (syn/sample test). Eigenvalues were estimated by calculating the variances of tj from the sample. For both conditions, each eigenvalue (or equivalently, variance) was compared to its respective reference (sample) using the F-test.

Ethics and consent to participate

All methods were carried out in accordance with relevant guidelines and regulations. All experimental procedures were approved by the Institutional Review Board (IRB) of the University of South Florida, Tampa, FL under protocol #Ame13_104715. Mammography data was collected retrospectively on a waiver for informed consent approved by the IRB of the University of South Florida, Tampa, FL under protocol #Ame13_104715.

Results

Univariate normality analysis in the X representation

Figures 1, 2 and 3 show the univariate pdfs (solid) for each sample in X. Of note, many pdfs are observably non-normal, usually right skewed. Each xj in DS1 showed significant deviation from normality (p < 0.0001) except for x9. In DS2, neither x1 or x9 (x9 is the same in DS1) showed significant deviation from normality, while the remaining xj exhibited significant deviations (p < 0.0003) except for x3 (p = 0.0144). In DS3, x1 through x5 and x9 did not show significant deviations from normality, the remaining xj deviated significantly (p < 0.002).

Mapping and KDE optimization

Mapping

Figure 5 shows an example of the X to Y map for y9 in the left-pane and the inverse Y to X map in the right-pane. Red-dashed lines show the map and its inverse constructed without synthetic xj. Staircasing effects are observable particularly in the tail regions, where sample densities are sparse. Black lines show the map and its inverse constructed with n = 667 (the sample) plus n = 106 synthetic realizations produced with optimized uKDE. Staircasing effects were removed when incorporating synthetic xj, which was common with all maps and inverses (not shown).

uKDE optimization

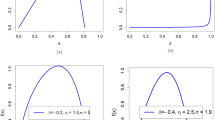

The optimization produced bandwidth parameter solutions (hj) used in Eq. (2). Here, we illustrate the evolution of the solution with h9 from DS1 and DS2 as an example. Figure 6 shows the scatter plot between the candidate h9 population and the respective D9 (KS test difference metric) for DE generation = 1 in the left-pane and for the terminal generation = 30 in the middle-pane. The solution space (middle-pane) is tightly clustered indicating DE convergence. A closer view of this cluster is shown in the right-pane of Fig. 6. This relatively tight-cluster characteristic was common among all variables and datasets (not shown).

Kernel Density Estimation Optimization Illustration: this shows the differential evolution optimization to determine h9 (for x9, age from DS1 and DS2). These show the scatter plots of the Kolmogorov Smirnov test metric (measured D9 quantities for entire generation) versus the h9 quantities for two generations: generation = 1 (left); terminal generation = 30 (middle); and closeup view of the terminal generation (right). The terminal generation shows the candidate solutions for h9 are tightly clustered (compare left pane with middle and right panes).

Univariate comparisons between samples and synthetic samples

Comparisons in T

These findings are summarized first because they start the flow back to X and can show departures from normality. Figures 7, 8 and 9 show the pdfs for the samples (solid) compared with their corresponding synthetic pdfs (dashed). Table 5 shows the variances in T for each sample (i.e., eigenvalues for each sample). Due to (1) the normalization in Y, and (2) that d = 10, multiplying a given, \({\upsigma }_{\mathrm{j}}^{2}(\mathrm{t})\) by 10% gives the percentage of the total variance explained by its tj. Table 6 shows the KS test findings for the univariate normality comparisons. Here we use a cutoff of < 65% to indicate deviation as most trends were well above this boundary. As shown in Table 6 (left column for each dataset): (1) the normal model did not deviate for any tj in DS1 (7 tj were < 94%); (2) the normal model deviated for t10 in DS2 (5 tj were < 94%); and (3) the normal model deviated for t7, t8, t9, and t10 in DS3 (3 tj were < 94%). In DS2, t10 explains about 0.2% of the total variance. Similarly, in DS3, the sum of the variances of the four variables (t7, t8, t9, and t10) constituted about 0.14% of the total variance.

Comparisons in Y

Figures 10, 11 and 12 show the pdfs in Y resulting from the mapped samples (i.e., mapped xj) for each dataset (solid) compared with their respective synthetic pdfs (dashed), which are normal by construct. Comparisons in Y showed little departure from normality in any sample, as the tests were not rejected (about 99%) in most instances (Table 6, middle column for each dataset). Findings from DS2 and DS3 indicate that substituting normal pdfs in T whenever sample tj deviated from normality had little influence on this analysis. This may be because these respective variables in total or isolation explained a minute portion of the variance in the respective PCA models.

Comparisons in X

Figures 1, 2 and 3 show the pdfs in X for the samples (solid) compared with their corresponding synthetic pdfs (dashed). The pdfs from the sample did not deviate from their corresponding synthetic pdfs in any dataset, as the tests were not rejected (< 99%) in most instances (Table 6, right columns). The parenthetical entries in Table 6 show the test findings without using synthetic data for the map/inverse constructions (using the samples only). These show that complementing the map constructions with uKDE is a necessary component of this methodology, although the degree of deviation from the KS tests varied across datasets. Note, the improvement held in D3 as well, which was normal in X (shown below).

Multivariate comparisons and normality comparisons

MMD tests

Testing was performed in X, Y, and T, and the test metrics are provided in Table 7. These show the samples and respective synthetic samples were drawn from the same distributions. In 100% the tests, measured \({\mathrm{MMD}}_{\mathrm{u }}^{2}\) values were less than the critical MMDc quantities. The MMD tests in Y and T were also proxy tests for sample-normality due to the SP constructs.

Random projection normality tests

Testing was performed in X, Y, and T, and the findings are shown in Table 8. Test findings were mixed for the samples in X and were not rejected for approximately these instances: 40% in DS1; 74% in DS2; and 99% in DS3. Thus, DS3 is better approximated as normal in X compared to the other samples. The tests for synthetic samples in X tracked the findings for their respective samples: 44%, 74% and 99% respectively. In Y, the tests for the samples were not rejected for about these instances: 99% in DS1, 97% in DS2, and 95% in DS3, whereas the test for the synthetic samples should no deviation from normality. Similarly in T, the tests for the samples were not rejected for about these instances: 99% in DS1, 94% in DS2, and 95% in DS3. There is a difference in the X and Y analyses because the mapping normalizes the variables in Y. In DS3, the standard deviations vary over many orders of magnitude (see Table 4). As shown by Eq. (8), variables in DS3 with the larger standard deviations may wash out the other variables; the variables in X that had normal marginals compared with those that were not, indicates that a portion of the normal marginals had much larger standard deviations (in Table 4, see x1–x4). As another control experiment, we standardized all variables in X to zero mean and unit variance and performed the tests again. The tests for the samples gave: 32.9%, 76.4%, and 75.2% for DS1, DS2, and DS3, respectively. For synthetic samples, these tests gave: 33.6%, 80.1% and 89.1% for DS1, DS2, and DS3, respectively. Note, centering the means alone had no influence on the findings as expected (data not shown). Thus, normalizing the univariate measures can influence the likeliness with normality by virtue of Eq. (8). In sum, these tests show all samples resemble multivariate normality in both Y and T and that the sample for DS3 resembles normality in X without mapping (without first normalizing the variances).

Mardia normality tests

Testing was performed in X, Y, and T. The findings are shown in Table 8. In X, the samples and synthetic samples all deviated from normality (both skewness and kurtosis). In both Y and T, the samples showed significant deviations from normally in all tests. In contrast, synthetic samples did not deviate significantly from normality in any test in Y or T, as expected.

Covariance comparisons

Covariance matrix comparisons with confidence intervals

Test findings are provided in Tables 1, 2 and 3 for the respective datasets. Part-a of each table shows the X quantities, and part-b shows the corresponding Y quantities. For DS1 (Table 1), covariance references (sample) were within the CIs of the synthetic data for 100% of the trials in both X and Y. For DS2 (Table 2), most references agreed with the synthetic elements except for two entries in X (Table 2a). From the 1000 trials, the x2x3 covariance was out of tolerance for 0.1% of the instances, and the x5x10 covariance was out for 18.7% of instances. For DS3, all covariance references were within tolerance except the x7x10 covariance, which was out of tolerance for 100% of the instances. In tests that showed more deviation (percentage > 0.1%), the reference covariances were approximately zero.

Eigenvalue comparison tests

Eigenvalues are provided in Table 5. This table is separated into three sections vertically. Reference eigenvalues are provided in the top row of each section. Eigenvalues calculated from the sample/syn (condition 1) and syn/sample (condition 2) are provided in the middle and bottom rows of each section, respectively. F-tests were not significant (p > 0.05) in any comparison with the references indicating similarity.

Sample sparsity and synthetic population space filling

This illustration demonstrates that the approach fills in the multidimensional space with synthetic realizations derived from a relatively sparse sample. We selected a synthetic entity at random from DS1 giving this vector: xT = [4.20, 2.08, 1.61, 1.15, 0.85, 0.67, 0.54, 0.44, 52.0, 23.6]. We selected x1 and x8 as the scatter plot variables. For the other 8 components, all synthetic realizations within xij ± ½ σj (the standard deviation for xj) were selected and viewed in the x1x8 plane as a scatter plot. The same vector and limits were used to select realizations from the sample. The plots are provided in Fig. 13 for comparison. The sample (left-pane) produced n = 24 realizations, whereas the SP (right-pane) produced n = 36,398 realizations. These plots illustrate the sample’s relative sparsity and that the synthetic approach produces a dense population with observations that did not exist in the sample.

Sample and Synthetic Population Scatter Plot Illustration: a synthetic realization was selected randomly from DS1 giving this measurement vector: xT = [4.20, 2.08, 1.61, 1.15, 0.85, 0.67, 0.54, 0.44, 52.0, 23.6]. x1 and x8 were selected for the scatter plot variables. For the other 7 components, all synthetic realizations within ± ½ (the respective standard deviation) were selected and viewed as a scatter plot in the x1x8 plane. Using the same vector and limits, individuals were selected from the sample in the same manner. The scatter plot for the sample (n = 24) is shown in the left-pane and from the synthetic population (n = 36,398) in the right-pane. Variables are cited with the names used in their respective resource and with the names used in this report parenthetically.

Discussion

The work involved several steps to generate synthetic data from arbitrarily distributed samples. To the best of our knowledge, new aspects and findings from this work include: (1) demonstrating a class of arbitrarily distributed samples has a latent normal characteristic, as exhibited by two of the samples; (2) conditioning the input variables with sample size augmentation and then constructing univariate transforms so that known techniques could be applied to generate synthetic data; (3) deploying multiple statistical tests for assessing both normality and general similarity in both the univariate and multivariate pdf settings; (4) developing methods for comparing covariance matrices; and (5) incorporating differential evolution (DE) optimization for uKDE bandwidth determination based on the KS fitness function. The related findings are discussed below in detail.

A method was presented that converts a given multivariate sample into multiple 1D marginal pdfs by constructing maps. These X–Y maps were constructed by augmenting the sample size with optimized uKDE. Performing the analysis with and without data augmentation improved the marginal pdf comparisons between the samples and synthetic samples; this also held in DS3 (four xj), which was approximately normal in X. PCA applied to standardized normal variables in Y produced uncorrelated variables in T, where synthetic data was generated. This approach essentially decouples the problem into the covariance relationships (in P and its inverse) and 1D marginal pdfs (i.e., approximate parametric models in Y and T). This decoupling is similar to the objective of Copula modeling that follows from Sklar’s work39,40. Copula modeling allows specification of marginal pdfs and the correlation structure independently41; in this approach, the marginals must be specified accurately and finding analytical solutions for d > 4 is difficult42. In contrast with Copula modeling, which is flexible, the covariance (or correlation) structure in our approach is fixed by the normal form and empirically derived; the marginals were forced to normality rather than specified. As a benefit, the CIs for Ck with our approach were estimated from the pdfs for each matrix element without assumption other than the normal calculation form. Additionally, the eigenvalue comparison technique results reinforced the CI comparison findings. Outside of the multivariate normal situation it is not clear when (1) comparing the marginals with one set of tests, and (2) comparing the covariance relationships separately with another set of tests results in a good overall empirical comparison-approximation between two multivariate samples. Such situations will require further analyses.

There are several other points worth noting about this work. An empirically driven stochastic optimization technique was used to estimate the uKDE bandwidth parameters for the map/inverse constructions. The relative efficiency of the approach is an important attribute in that it only requires multiple uKDE applications rather than mKDE. The number of generations in the optimization was fixed. This can be changed easily to a variable termination based on achieving a critical threshold or applying other appropriate fitness functions; for example, the stopping criteria could be based on the critical distance in the KS test or the change in this distance from one generation to the next. Likewise, there are plug-in kernel bandwidth parameters that can be used statically. These are derived by considering closed form expressions containing the constituent pdfs and minimizing the asymptotic behavior of either the mean integrated square error or mean squared error43. We explored such parameters44, but they did not perform as well as the KS test with DE, notwithstanding the number of computations used here to determine a given bandwidth parameter. Of note, the KS test has limitations, as it is more sensitive to the median of the distribution rather than the tails. As an alternative, the Anderson Darling test is a variant of the KS procedure that is sensitive to the distribution tails32. The mapping from X to Y standardized the problem at the univariate level, but in general there is no guarantee that collectively it produced a multivariate normal in Y. Testing performed in Y (Step 2) could be used to discriminate input samples that have the latent normal characteristic from those that do not. The random project test could be developed into a gauge at this step for assessing the deviation from normality. Moreover, comparing r(tj) from the sample against normality (following Step 3) also provides a basis for testing sample’s likeness to a multivariate normal (discussed below). When p(x) is approximately multivariate normal, as in DS3, the mapping is not required and generating synthetic data based on PCA (without the mapping step) is a practiced technique; our approach addresses the case when this approximation fails to hold. The random projection tests changed the similarity with normality when standardizing the samples. Thus, the purpose for generating synthetic data should be considered before adjusting the input sample.

There are several other limitations and qualifications worth noting. Several multivariate pdf tests were examined with mixed findings. MMD tests in X, Y, and T showed each sample was statistically similar with its respective synthetic sample(s). These tests also indicated normality in Y and T (by default). This MMD test is sensitive to changes in the mean. In our processing, all means were forced either to identically zero or to statistical similarity via mapping. Likewise, the heuristic used for the kernel bandwidth determination can be less than optimal under certain conditions, decreasing the MMD test performance45. Random projection tests in Y and T indicated that the samples did not deviate from normality in most instances, whereas synthetic samples showed essentially no deviation. Understanding the acceptable departure from normality for this test in the modeling context will require more work. This test also showed DS3 was approximately normal in X. In contrast, Mardia tests showed all samples deviated significantly from normality in X, Y and T. With the Mardia test, synthetic samples showed: (1) essentially no deviation in Y or T as expected; (2) and significant deviation in X. Here, we made no attempt to mitigate possible outlier interference when analyzing the samples46. Note, testing for multivariate normality is not a trivial task; many of the complexities are covered by Farrell et al.47

The conclusions we make from these tests indicated each sample was approximately multivariate normal in Y and T, noting the approach may not be dependent upon this characteristic as elaborated below. In planned research, these approximations will be tested in the modeling context to evaluate whether sample and synthetic data are interchangeable. When this normality approximation holds, it implies that the original multivariate pdf estimation problem in X was converted to a parametric normal model described by Eq. (1), which simplifies the synthetic data generation. If this conversion generalizes to other datasets (at least in part), it implies that some class (subset) of the multivariate sample space can be studied with simulations by altering, n, d, and the covariance matrix to that of an arbitrary sample. Future work involves investigating arbitrary selected samples to understand how often this latent normal characteristic is present.

Alternatively, analyzing the samples in T may provide another method for comparing datasets, evaluating similarity, evaluating normality in Y, or generalizing our approach. The marginals from each sample were approximated as univariate normal in T, although there were noted variations. For example, and as noted, about 99% of the total variance came from the first four variables in DS1 (see Table 5). DS2 and DS3 were found to be similar with the first four variables accounting for 90–92% of the total variance. Thus, DS1 is more compressible than the other two datasets as expected due to the high correlation from its approximate functional Fourier form. Although not the purpose of this report, the amount of compression is a likely metric for estimating the effective dimensionality (de) when de ≤ d, which could be useful for estimating sample size. When viewing the PCA transform through the NIPALS algorithm14, it is clear when the total variance is explained by a number of components de << d, the remaining components are residue (noise, chatter, rounding errors). This effect could explain why deviations from normality in DS2 and DS3 in T did not influence the multivariate normal approximation in Y. Here, we did not encounter non-normal variables (from the samples) in T that explained a significant portion of the total variance. When a given sample is well approximated as multivariate normal in Y, the PCA transformation will produce univariate normal marginal pdfs in T. This step could be developed into the definitive test for multivariate normality in Y by understanding the residual error of the non-normal marginal pdfs in T. In this work, the analysis in T supports the normality findings for each sample because the residual non-normal errors were parasitic. Future work will investigate: (1) the impact of the residual error in T on normality in Y, and (2) causes for normality in T, i.e., possibly due to forced normality in Y, some characteristic of the X representation data, or the PCA transform. If required, the technique could be generalized to accommodate non-normal marginals in T. As a generalization, uKDE will be investigated for generating univariate non-normal distributions in T when called for with the same method used to augment X for the map/inverse constructions. In this sample scenario, the Y description will deviate from Eq. (1). Although, this premise will have to be investigated because the lack of correlation in T only guarantees tj independence when r(t) is multivariate normal. We speculate when the sample has low correlation between most of the bivariate set in X, this approximation may hold.

Choosing the most appropriate space to perform modeling or to analyze the samples deserves consideration. We have used the covariance form suitable for normally distributed variables. In Y, this form is likely appropriate. We used the same form in X as well; this form may not be optimal here because covariance relationships are not preserved over non-linear transformations. It is our contention that Y is best suited for modeling because the marginals are normal. It is common practice in univariate/multivariate modeling to adjust variables (univariately) to a standardized normal form or apply transforms to remove skewness. The X to Y map converted each xj to unit variance. When the natural variation for xj is important, the mapping can be modified easily to preserve the variance. If the variable interpretation is not important, modeling can also be performed in T.

The method in this report addresses the small sample problem given the sample has the latent normal characteristic or is normal. The approach will require further evaluation on different datasets to understand its general applicability and when the univariate mapping from X to Y approximately produces a multivariate normal. Multiple methods were explored to evaluate multivariate normality. These tests indicated that the samples approximated normality in both the Y and T but also showed some deviation from normality. The interpretation of these findings in the context of data modeling may aid in understanding the limits of both the multivariate SPs and normality approximations in this report’s data and beyond. For example, determining the limiting percentage of the random projection tests may be informative in the modeling context. In summary, we offer a definition for an insufficient sample size in the context of synthetic data. When considering a given sample with d attributes and specified covariance structure, a sample size that does not allow reconstructing its population can be considered as insufficient. In future work, we will apply the methods in this report to understand the minimum sample size, relative to d and a given covariance structure, that permits recovering the population.

Data availability

The link to publicly available data is provided in text. Mammography summary data can be obtained upon request to the corresponding author: John Heine (john.heine@moffitt.org). Kernel parameters are also available upon request.

References

Gail, M. H. & Pfeiffer, R. M. Breast cancer risk model requirements for counseling, prevention, and screening. J. Natl. Cancer Inst. 110, 994–1002 (2018).

Garrido-Castro, A. C. & Winer, E. P. Predicting breast cancer therapeutic response. Nat. Med. 24, 535–537 (2018).

Huo, Z. et al. Automated computerized classification of malignant and benign masses on digitized mammograms. Acad. Radiol. 5, 155–168 (1998).

Lei, C. et al. Mammography-based radiomic analysis for predicting benign BI-RADS category 4 calcifications. Eur. J. Radiol. 121, 108711. https://doi.org/10.1016/j.ejrad.2019.108711 (2019).

Nguyen, D. V. & Rocke, D. M. Tumor classification by partial least squares using microarray gene expression data. Bioinformatics 18, 39–50 (2002).

Erves, J. C. et al. Needs, priorities, and recommendations for engaging underrepresented populations in clinical research: A community perspective. J. Community Health 42, 472–480. https://doi.org/10.1007/s10900-016-0279-2 (2017).

Dickson, J. L. et al. Hesitancy around low-dose CT screening for lung cancer. Ann. Oncol. 33, 34–41. https://doi.org/10.1016/j.annonc.2021.09.008 (2022).

Wang, G. X. et al. Barriers to lung cancer screening engagement from the patient and provider perspective. Radiology 290, 278–287. https://doi.org/10.1148/radiol.2018180212 (2019).

Foraker, R., Mann, D. L. & Payne, P. R. O. Are synthetic data derivatives the future of translational medicine?. JACC Basic Transl. Sci. 3, 716–718 (2018).

Elston, D. M. Data dredging and false discovery. J. Am. Acad. Dermatol. 82, 1301–1302. https://doi.org/10.1016/j.jaad.2019.07.061 (2020).

Siddiqui, K. Heuristics for sample size determination in multivariate statistical techniques. World Appl. Sci. J. 27, 285–287 (2013).

Wu, Y., Genton, M. G. & Stefanski, L. A. A multivariate two-sample mean test for small sample size and missing data. Biometrics 62, 877–885 (2006).

Riley, R. D. et al. Calculating the sample size required for developing a clinical prediction model. BMJ 368, m441. https://doi.org/10.1136/bmj.m441 (2020).

Geladi, P. & Kowalski, B. R. Partial least-squares regression: A tutorial. Anal. Chim. 185, 1–17 (1986).

Chartrand, G. et al. Deep learning: A primer for radiologists. Radiographics 37, 2113–2131 (2017).

Buczak, A. L., Babin, S. & Moniz, L. Data-driven approach for creating synthetic electronic medical records. BMC Med. Inform. Decis. 10, 1–28 (2010).

Chen, J. Q., Chun, D., Patel, M., Chiang, E. & James, J. The validity of synthetic clinical data: A validation study of a leading synthetic data generator (Synthea) using clinical quality measures. BMC Med. Inform. Decis. Mak. https://doi.org/10.1186/s12911-019-0793-0 (2019).

Dahmen, J. & Cook, D. A synthetic data generation system for healthcare applications. Sensors (Basel) 19, 1181. https://doi.org/10.3390/s19051181 (2019).

Goncalves, A. R., Sales, A. P., Ray, P. & Soper, B. NCI pilot 3-synthetic data generation report report no. Lawrence Livermore National Lab. (LLNL): LLNL-TR-747902 (2018).

Bogle, B. M. & Mehrotra, S. A moment matching approach for generating synthetic data. Big Data 4, 160–178 (2016).

Quintana, D. S. A synthetic dataset primer for the biobehavioural sciences to promote reproducibility and hypothesis generation. Elife 9, e53275 (2020).

Fowler, E. E., Berglund, A., Sellers, T. A., Eschrich, S. & Heine, J. Empirically-derived synthetic populations to mitigate small sample sizes. J. Biomed. Inform. 105, 103408 (2020).

Scott, D. W. Feasibility of multivariate density estimates. Biometrika 78, 197–205 (1991).

Hwang, J.-N., Lay, S.-R. & Lippman, A. Nonparametric multivariate density estimation: A comparative study. IEEE Trans. Signal Process. 42, 2795–2810 (1994).

Wang, Z. & Scott, D. W. Nonparametric density estimation for high-dimensional data—Algorithms and applications. Wiley Interdiscip. Rev. Comput. Stat. 11, e1461 (2019).

Heine, J., Fowler, E. E. E., Berglund, A., Schell, M. J. & Eschrich, S. A. Techniques to produce and evaluate realistic multivariate synthetic data. bioRxiv. https://doi.org/10.1101/2021.10.26.465952 (2021).

Price, K. V., Storn, R. M. & Lampinen, J. A. Differential Evolution: A Practical Approach to Global Optimization (Springer, 2005).

Koklu, M. & Ozkan, I. A. Multiclass classification of dry beans using computer vision and machine learning techniques. Comput. Electron. Agric. 174, 105507 (2020).

Fowler, E. E. E. et al. Generalized breast density metrics. Phys. Med. Biol. 64, 015006. https://doi.org/10.1088/1361-6560/aaf307 (2019).

Heine, J. J. & Velthuizen, R. P. Spectral analysis of full field digital mammography data. Med. Phys. 29, 647–661 (2002).

Fowler, E. E. E. et al. Spatial correlation and breast cancer risk. Biomed. Phys. Eng. Express 5, 045007. https://doi.org/10.1088/2057-1976/ab1dad (2019).

Press, W. H., Numerical Recipes Software (Firm). Numerical Recipes in C 2nd edn. (Cambridge University Press, 1992).

Oh, H. et al. Early-Life and adult anthropometrics in relation to mammographic image intensity variation in the nurses’ health studies. Cancer Epidemiol. Biomark. Prev. 29, 343–351. https://doi.org/10.1158/1055-9965.EPI-19-0832 (2020).

Velthuzen, R. P. & Clarke, L. P. In SPIE proceedings series. 179–187 (Society of Photo-Optical Instrumentation Engineers).

Gretton, A., Borgwardt, K. M., Rasch, M. J., Schölkopf, B. & Smola, A. A kernel two-sample test. J. Mach. Learn. Res. 13, 723–773. https://doi.org/10.5555/2188385.2188410 (2012).

Garreau, D., Jitkrittum, W. & Kanagawa, M. Large sample analysis of the median heuristic. arXiv preprint https://arxiv.org/abs/1707.07269 (2017).

Zhou, M. & Shao, Y. A powerful test for multivariate normality. J. Appl. Stat. 41, 351–363. https://doi.org/10.1080/02664763.2013.839637 (2014).

Shao, Y. & Zhou, M. A characterization of multivariate normality through univariate projections. J. Multivar. Anal. 101, 2637–2640. https://doi.org/10.1016/j.jmva.2010.04.015 (2010).

Haugh, M. An introduction to copulas. In IEOR E4602: Quantitative Risk Management. Lecture Notes (Columbia University, 2016).

Durante, F., Fernández-Sánchez, J. & Sempi, C. Aggregation Functions in Theory and in Practise 85–90 (Springer, 2013).

Schirmacher, D. & Schirmacher, E. Multivariate Dependence Modeling Using Pair-Copulas (The Society of Actuaries, 2008).

Chandrasekara, N. & Tilakaratne, C. D. Determining and comparing multivariate distributions: An application to AORD and GSPC with their related financial markets. GSTF J. Math. Stat. Oper. Res. JMSOR 4, 1–8 (2016).

Jones, M. C., Marron, J. S. & Sheather, S. J. A brief survey of bandwidth selection for density estimation. J. Am. Stat. Assoc. 91, 401–407 (1996).

Gramacki, A. Nonparametric Kernel Density Estimation and Its Computational Aspects (Springer, Berlin, 2018).

Schrab, A. et al. MMD aggregated two-sample test. arXiv preprint https://arxiv.org/abs/2110.15073 (2021).

Korkmaz, S., Göksülük, D. & Zararsiz, G. MVN: An R package for assessing multivariate normality. R J. 6, 151 (2014).

Farrell, P. J., Salibian-Barrera, M. & Naczk, K. On tests for multivariate normality and associated simulation studies. J. Stat. Comput. Simul. 77, 1065–1080 (2007).

Funding

The work was in part supported by Moffitt Cancer Center grant #17032001 (Miles for Moffitt) and National Institutes of Health Grants R01CA166269 and U01CA200464.

Author information

Authors and Affiliations

Contributions

J.H. is the corresponding author, conceived the plan and methods; E.F. is a coauthor, developed the computer code, assisted in the plan and methods development, and prepared figures; A.B. is a coauthor and provided statistical and principal component analysis expertise; M.S. is a coauthor and provided statistical expertise; S.E. is a coauthor and assisted in the plan and methods developments. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heine, J., Fowler, E.E.E., Berglund, A. et al. Techniques to produce and evaluate realistic multivariate synthetic data. Sci Rep 13, 12266 (2023). https://doi.org/10.1038/s41598-023-38832-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-38832-0

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.