Abstract

Chronic kidney disease (CKD) is a frequent complication after liver transplantation (LT) and is associated with a poor prognosis. In this study, we retrospectively analyzed 515 adult patients who underwent LT in our center. They were randomly divided into a training set (n = 360) and an internal test set (n = 155). Another 118 recipients from other centers served as an external validation set. Univariable and multivariable Cox regression analyses were used to identify risk factors, and a nomogram model was developed to predict post-LT CKD. The incidence of post-LT CKD in our center was 16.9% (87/515) during a median follow-up of 22.73 months. The overall survival of recipients with severe CKD (stage IV and V) was significantly lower than that of recipients with no or mild CKD (stage III) (p = 0.0015). The nomogram was based on recipient age, duration of the anhepatic phase, and estimated glomerular filtration rate and triglyceride levels at 30 days after LT. Its calibration curves for post-LT CKD showed good agreement between predicted and observed outcomes in both the internal and external validation sets. In conclusion, severe post-LT CKD significantly reduced survival in liver recipients, and the newly established nomogram showed good predictive ability for post-LT CKD.


Introduction

Liver transplantation (LT) is a life-saving treatment for patients with end-stage liver disease. With advances in surgical technique and postoperative management, the 10-year survival rate after LT has reached nearly 89%1. However, postoperative complications can severely impair graft function and reduce graft survival. Chronic kidney disease (CKD) is one of the most frequent long-term complications in liver recipients. According to previous reports, the incidence of post-LT CKD ranges from 11.7% to 54%2,3,4,5,6,7,8,9,10. Based on data from 1771 liver recipients in the Taiwan National Health Insurance Research Database, Wang et al.7 reported that 323 (18.2%) patients required renal replacement therapy after LT and that these patients had higher mortality.

The etiology of post-LT CKD is multifactorial (e.g., perioperative kidney injury, recipient factors and calcineurin inhibitor use), and the reported risk factors vary between studies4, making it difficult to accurately identify recipients at high risk of developing post-LT CKD. Over the past decade, several models have been proposed to predict post-LT CKD. Giusto et al.11 analyzed data from 179 patients and found that arterial hypertension, severe infection and estimated glomerular filtration rate (eGFR) after LT were risk factors for post-LT CKD. A predictive model based on these factors showed a C-index of 0.91, indicating good predictive ability. Levitsky et al.12 proposed another model integrating one clinical parameter (hepatitis C virus as the major indication for LT) and two renal injury proteins (serum CD40 antigen and β2-microglobulin before LT), with an area under the curve (AUC) of 0.814 in the training cohort (n = 60) and 0.801 in the validation cohort (n = 50). Nevertheless, measuring these biomarkers may be difficult and adds time and cost, limiting their clinical application. Furthermore, both models were developed in relatively small samples, so their performance should be validated in larger cohorts. In a large cohort of 43,514 adult liver recipients, Sharma et al.13 established a renal risk index comprising 14 recipient factors to predict end-stage renal disease (ESRD) after LT. However, this model cannot identify patients with CKD stage III or IV who may progress to stage V without appropriate management, and its large number of variables may hinder clinical application. Therefore, a simple and effective model is needed to identify patients at high risk of post-LT CKD.

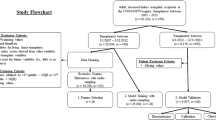

A nomogram is a simple graphical representation of a statistical prediction model with an easy-to-use interface. Importantly, it generates individualized predictions and is therefore widely used for risk stratification and personalized disease management. In this study, we aimed to develop a nomogram to predict CKD after donation after circulatory death (DCD) LT.

Methods

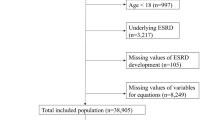

Patient characteristics

All adult patients (> 18 years old) who underwent DCD LT between January 1, 2015 and December 31, 2018 at The First Affiliated Hospital, Zhejiang University School of Medicine were enrolled in this retrospective study. Of the 619 LT recipients initially screened, 10 aged < 18 years and 94 who died within 3 months after LT were excluded. The remaining 515 patients were randomly divided at a 7:3 ratio into a training set (n = 360) and an internal test set (n = 155)14,15. An external validation set (n = 118) was obtained from the China Liver Transplant Registry (CLTR) database and included adult patients with sufficient data who underwent primary LT at other centers between July 1, 2017 and December 31, 2020. The study protocol was approved by the clinical ethics review board of the hospital and by CLTR. All LTs were performed with organs voluntarily donated by deceased donors; no organs from executed prisoners were used. Each organ donation and transplantation followed the guidelines of the Organ Transplant Committee of China. Informed consent was obtained from each participant.
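A 7:3 random split of the 515 recipients can be sketched as follows. This is a minimal Python illustration (the study itself used R), assuming patients are identified by integer IDs; the function name `split_cohort` and the seed value are hypothetical, so the resulting partition will not match the authors' actual training and test sets.

```python
import random


def split_cohort(patient_ids, train_fraction=0.7, seed=2023):
    """Randomly partition patient IDs into a training set and an internal test set.

    The seed is an arbitrary illustrative value, not the one used in the study.
    """
    ids = list(patient_ids)
    rng = random.Random(seed)  # local generator so the split is reproducible
    rng.shuffle(ids)
    n_train = int(len(ids) * train_fraction)  # 515 * 0.7 = 360.5, truncated to 360
    return ids[:n_train], ids[n_train:]


train_ids, test_ids = split_cohort(range(1, 516))
print(len(train_ids), len(test_ids))  # 360 155
```

Truncating 360.5 to 360 reproduces the reported set sizes. Stratifying the split by outcome would be an alternative design choice, but the text does not state whether stratification was used.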

Data collection

Demographic and perioperative data were collected as described in previous studies16. The following variables were recorded: patient demographics, medical history, medication history, baseline laboratory findings, donor and graft characteristics, operation time, intraoperative fluid and colloid administration, and intraoperative transfusion volume. Pre-transplant data were collected within 24 h before LT, and post-LT data were recorded during follow-up.

Definition

The primary outcome was post-LT CKD, defined according to the Kidney Disease: Improving Global Outcomes (KDIGO) 2012 CKD guideline as eGFR < 60 mL/min/1.73 m2 for ≥ 3 months, with or without markers of kidney damage17,18,19. eGFR was calculated from serum creatinine using the Modification of Diet in Renal Disease (MDRD) equation20.
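The outcome definition can be expressed as a small Python sketch. It assumes the IDMS-traceable four-variable MDRD equation described in reference 20 (the text does not say whether a race coefficient or a Chinese-modified MDRD variant was applied), approximates "≥ 3 months" as 90 days, and uses a simplified persistence rule in which any eGFR ≥ 60 resets the count. The function names are hypothetical. Note that KDIGO categories G3a and G3b together correspond to the "stage III" used in this study.

```python
def egfr_mdrd(scr_mg_dl, age_years, female, black=False):
    """eGFR (mL/min/1.73 m2) from the IDMS-traceable 4-variable MDRD equation.

    scr_mg_dl: serum creatinine in mg/dL. Coefficients follow Levey et al. (2006).
    """
    egfr = 175.0 * scr_mg_dl ** -1.154 * age_years ** -0.203
    if female:
        egfr *= 0.742
    if black:
        egfr *= 1.212
    return egfr


def kdigo_gfr_category(egfr):
    """Map eGFR to a KDIGO 2012 GFR category (G3a/G3b ~ stage III, G4 ~ IV, G5 ~ V)."""
    if egfr >= 90:
        return "G1"
    if egfr >= 60:
        return "G2"
    if egfr >= 45:
        return "G3a"
    if egfr >= 30:
        return "G3b"
    if egfr >= 15:
        return "G4"
    return "G5"


def has_persistent_low_egfr(measurements, threshold=60.0, min_days=90):
    """True if eGFR stays below `threshold` for at least `min_days`.

    measurements: iterable of (days_after_LT, egfr). Any value >= threshold
    resets the run; 90 days is an assumed approximation of "3 months".
    """
    run_start = None
    for day, egfr in sorted(measurements):
        if egfr < threshold:
            if run_start is None:
                run_start = day
            if day - run_start >= min_days:
                return True
        else:
            run_start = None
    return False


egfr_male = egfr_mdrd(1.0, 50, female=False)
egfr_female = egfr_mdrd(1.0, 50, female=True)
print(round(egfr_male, 1), kdigo_gfr_category(egfr_male))      # 79.1 G2
print(round(egfr_female, 1), kdigo_gfr_category(egfr_female))  # 58.7 G3a
```

The same creatinine value falls on different sides of the 60 mL/min/1.73 m2 cut-off depending on sex, which is why the equation, not creatinine alone, is used for the definition.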

Statistical analysis

Statistical analyses were performed with R software version 4.1.0 (http://www.r-project.org/). Quantitative variables were expressed as mean ± SD or median (interquartile range), and categorical variables such as sex as counts and percentages. Student's t test or the Wilcoxon rank-sum test was used to compare quantitative variables, and the chi-square test was used to compare categorical variables. Survival was analyzed with the Kaplan–Meier method and the log-rank test. Multivariable Cox regression with forward stepwise selection based on the likelihood ratio (forward LR) was used to identify independent risk factors for post-LT CKD. The AUCs of the models were calculated and compared using DeLong’s method21. The nomogram was built in the training set and validated in the internal test set and the external validation set.
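DeLong’s method compares two AUCs estimated on the same patients while accounting for the correlation between them. The sketch below implements the nonparametric DeLong estimator in plain Python for a binary outcome. It is an illustration rather than the authors' code (the study used R packages): it treats post-LT CKD as a binary label at a fixed horizon and ignores censoring, and the scores and labels in the example are made up.

```python
import math


def _psi(x, y):
    """Kernel comparing a case score x with a control score y (ties count 0.5)."""
    if x > y:
        return 1.0
    if x == y:
        return 0.5
    return 0.0


def _placements(scores, labels):
    """Structural components V10 (one per case) and V01 (one per control)."""
    cases = [s for s, y in zip(scores, labels) if y == 1]
    controls = [s for s, y in zip(scores, labels) if y == 0]
    v10 = [sum(_psi(x, c) for c in controls) / len(controls) for x in cases]
    v01 = [sum(_psi(x, c) for x in cases) / len(cases) for c in controls]
    return v10, v01


def _cov(a, b):
    """Sample covariance with an n - 1 denominator."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (len(a) - 1)


def delong_test(scores1, scores2, labels):
    """Two-sided DeLong test for two correlated AUCs. Returns (auc1, auc2, z, p)."""
    v10_1, v01_1 = _placements(scores1, labels)
    v10_2, v01_2 = _placements(scores2, labels)
    auc1 = sum(v10_1) / len(v10_1)
    auc2 = sum(v10_2) / len(v10_2)
    m, n = len(v10_1), len(v01_1)
    var = (
        (_cov(v10_1, v10_1) + _cov(v10_2, v10_2) - 2 * _cov(v10_1, v10_2)) / m
        + (_cov(v01_1, v01_1) + _cov(v01_2, v01_2) - 2 * _cov(v01_1, v01_2)) / n
    )
    if var <= 0:
        raise ValueError("variance of the AUC difference is zero")
    z = (auc1 - auc2) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p value from the normal distribution
    return auc1, auc2, z, p


labels = [1, 1, 1, 1, 0, 0, 0, 0]
model_a = [0.9, 0.8, 0.7, 0.4, 0.5, 0.3, 0.2, 0.1]
model_b = [0.6, 0.4, 0.5, 0.3, 0.7, 0.2, 0.35, 0.1]
auc_a, auc_b, z, p = delong_test(model_a, model_b, labels)
print(auc_a, auc_b, round(p, 3))  # 0.9375 0.6875 0.157
```

Because both models are scored on the same patients, the covariance terms shrink the variance of the difference; treating the two AUCs as independent would give a conservative test.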

The ‘survminer’ and ‘survival’ R packages were used for Cox regression analysis and to build the nomogram for post-LT CKD. The ‘tidyverse’, ‘survivalROC’, ‘pROC’ and ‘ROCR’ packages were used for AUC analysis, and the ‘stdca.R’ source code (http://ame.pub/AME20210218) was used for decision curve analysis (DCA) of the models. A p value < 0.05 was considered statistically significant.
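Decision curve analysis plots the net benefit of a model across threshold probabilities and compares it with intervening in all patients or in none. The sketch below uses the standard net-benefit formula, NB = TP/n - FP/n × pt/(1 - pt), for a binary outcome. The stdca.R script used in the study handles censored survival outcomes, so this is a simplified illustration; the predictions, labels and function names are hypothetical.

```python
def net_benefit(probs, labels, threshold):
    """Net benefit of intervening in patients whose predicted risk is >= threshold."""
    n = len(labels)
    tp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    odds = threshold / (1 - threshold)  # harm-to-benefit weight implied by the threshold
    return tp / n - fp / n * odds


def treat_all_net_benefit(labels, threshold):
    """Reference strategy: intervene in every patient (treat-none has net benefit 0)."""
    prevalence = sum(labels) / len(labels)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)


probs = [0.8, 0.6, 0.3, 0.2, 0.1]
labels = [1, 0, 1, 0, 0]
for pt in (0.1, 0.25, 0.5):
    model_nb = net_benefit(probs, labels, pt)
    all_nb = treat_all_net_benefit(labels, pt)
    print(pt, round(model_nb, 3), round(all_nb, 3))
```

A model is clinically useful at a given threshold when its curve lies above both the treat-all line and zero.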

Ethical approval

This study was approved by the clinical ethics review board of The First Affiliated Hospital, Zhejiang University School of Medicine and CLTR.

Informed consent

Informed consent was obtained from all patients to allow the use of clinical data for investigation.

Results

Baseline characteristics

A total of 633 adult DCD LT recipients were enrolled in this study (523 males and 110 females). The median age at LT was 51.0 years (interquartile range: 42.0–57.0 years), and the median follow-up was 22.83 months (interquartile range: 13.62–39.67 months). The median time from LT to CKD diagnosis was 19.61 months (interquartile range: 11.25–37.34 months). Demographic and clinical characteristics are shown in Table 1. Other complications, such as biliary complications, acute rejection and early allograft dysfunction, did not differ significantly between our center and the external validation set.

Incidence and prognosis of post-LT CKD in our center

Of the 633 recipients, 99 developed CKD; 18 of these progressed to CKD stage IV or V, and only 9 eventually developed ESRD. In our center, the incidence of post-LT CKD was 16.9% (87/515; stage III 14.4%, n = 74; stage IV 1.4%, n = 7; stage V 1.2%, n = 6). The 1-, 2- and 3-year cumulative survival rates were 91.7%, 81.8% and 73.5% in the CKD group and 88.4%, 81.7% and 79.2% in the non-CKD group, respectively, with no statistically significant difference between the groups (p = 0.53) (Fig. 1A). However, survival was significantly lower in recipients with severe CKD (stage IV and V): their 1-, 2- and 3-year cumulative survival rates were 76.9%, 56.1% and 37.4%, compared with 89.4%, 82.5% and 79.3% in recipients with no CKD or CKD stage III (p = 0.0015) (Fig. 1B).
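The survival comparisons above rely on the Kaplan–Meier estimator and the log-rank test. A minimal pure-Python sketch of both is shown below to make the mechanics concrete; it is not the authors' R code, the function names are hypothetical, and the example follow-up times are made up.

```python
import math
from collections import Counter


def kaplan_meier(times, events):
    """Kaplan-Meier curve as [(t, S(t))] at each distinct event time.

    events: 1 = death observed, 0 = censored. Subjects censored at an event
    time are still counted as at risk at that time (the usual convention).
    """
    deaths = Counter(t for t, e in zip(times, events) if e == 1)
    exits = Counter(times)  # deaths plus censorings at each time
    at_risk = len(times)
    surv = 1.0
    curve = []
    for t in sorted(exits):
        d = deaths.get(t, 0)
        if d:
            surv *= 1 - d / at_risk
            curve.append((t, surv))
        at_risk -= exits[t]
    return curve


def survival_at(curve, t):
    """Step-function lookup of S(t) from a Kaplan-Meier curve."""
    s = 1.0
    for time, value in curve:
        if time > t:
            break
        s = value
    return s


def logrank_test(times1, events1, times2, events2):
    """Two-group log-rank test; returns (chi-square statistic, p value with 1 df)."""
    data = [(t, e, 1) for t, e in zip(times1, events1)]
    data += [(t, e, 2) for t, e in zip(times2, events2)]
    observed_minus_expected = 0.0
    variance = 0.0
    for t in sorted({t for t, e, _ in data if e == 1}):
        n = sum(1 for ti, _, _ in data if ti >= t)
        n1 = sum(1 for ti, _, g in data if ti >= t and g == 1)
        d = sum(1 for ti, e, _ in data if ti == t and e == 1)
        d1 = sum(1 for ti, e, g in data if ti == t and e == 1 and g == 1)
        observed_minus_expected += d1 - d * n1 / n
        if n > 1:
            variance += d * (n1 / n) * (1 - n1 / n) * (n - d) / (n - 1)
    if variance == 0:
        raise ValueError("log-rank variance is zero")
    chi2 = observed_minus_expected ** 2 / variance
    return chi2, math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df


curve = kaplan_meier([1, 2, 2, 3, 4], [1, 1, 0, 1, 0])
print([(t, round(s, 3)) for t, s in curve])  # [(1, 0.8), (2, 0.6), (3, 0.3)]
chi2, p = logrank_test([1, 2, 3, 4, 5], [1] * 5, [6, 7, 8, 9, 10], [1] * 5)
print(round(chi2, 2), round(p, 4))
```

The 1-, 2- and 3-year cumulative survival rates quoted above correspond to reading such a curve with a lookup like `survival_at` at 12, 24 and 36 months.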

Cumulative patient survival after liver transplantation. (A) Comparison of cumulative patient survival between the CKD group and the non-CKD group; log-rank test, p = 0.5300. (B) Comparison of cumulative patient survival between the severe CKD stage group (CKD stage IV and CKD stage V) and the mild CKD stage (CKD stage III) or non-CKD group; log-rank test, p = 0.0015.

Risk factors of post-LT CKD

Because there were few cases of CKD stage IV, stage V and ESRD, it was difficult to construct a predictive model for these outcomes; we therefore focused on post-LT CKD of all stages. In the training set, univariable Cox regression showed that 23 variables were associated with post-LT CKD (p < 0.05) (Table 2). To predict post-LT CKD before LT, we first identified independent preoperative variables using multivariable Cox regression with the forward LR method. Recipient age (HR 1.04, 95%CI 1.01–1.06), body mass index (BMI) (HR 1.10, 95%CI 1.01–1.18), history of hepatorenal syndrome (HR 3.91, 95%CI 1.94–7.90) and anhepatic phase (HR 1.02, 95%CI 1.01–1.03) were independent risk factors for post-LT CKD in the training set.

Because CKD, by definition, cannot be diagnosed until at least 3 months after LT, we hypothesized that early postoperative variables might predict it better. We therefore entered parameters measured at 30 days after LT into the multivariable Cox regression model. Recipient age (HR 1.03, 95%CI 1.00–1.05), anhepatic phase (HR 1.02, 95%CI 1.01–1.03), eGFR at 30 days after LT (HR 0.98, 95%CI 0.97–0.99) and triglyceride (TG) levels at 30 days after LT (HR 1.51, 95%CI 1.23–1.83) were independently associated with post-LT CKD in the training set (Table 2); lower eGFR and higher TG levels conferred higher risk.

Predictive models of post-LT CKD

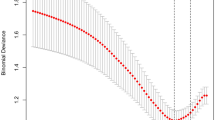

We established a preoperative prediction model (model 1) based on recipient age, BMI, history of hepatorenal syndrome and anhepatic phase (Fig. 2A). The AUCs of model 1 in the training set, internal test set and external validation set were 0.6988 (95%CI 0.6233–0.7743), 0.6126 (95%CI 0.5040–0.7291) and 0.6187 (95%CI 0.5101–0.7475), respectively.

Multivariable Cox regression analysis in the training set. (A) The four risk factors (Age, BMI, hepatorenal syndrome and anhepatic phase) included in model 1. (B) The four risk factors (Age, anhepatic phase, eGFR at 30 days after LT and TG levels at 30 days after LT) included in model 2. Abbreviations: BMI Body mass index; eGFR30d Estimated glomerular filtration rate at 30 days after LT (mL/min/1.73 m2); TG30d Triglyceride levels at 30 days after LT (mmol/L).

We then established a second model (model 2) using recipient age, anhepatic phase, and eGFR and TG levels at 30 days after LT (Fig. 2B). The AUCs of model 2 in the training set, internal test set and external validation set were 0.8314 (95%CI 0.7700–0.8927), 0.7465 (95%CI 0.6387–0.8543) and 0.8349 (95%CI 0.7381–0.9317), respectively, significantly higher than those of model 1 in all three sets (DeLong’s test, p < 0.05; Fig. 3). Decision curve analysis in the training and test sets also favored model 2 over model 1 (Fig. 4).

AUCs and time-dependent C-index plots of the two models in the training set, internal test set and external validation set. (A) The AUCs of model 1 and model 2 in the training set were 0.6988 (95%CI 0.6233–0.7743) and 0.8314 (95%CI 0.7700–0.8927), respectively; comparison of AUCs, model 1 versus model 2: p < 0.0001. (B) The AUCs of model 1 and model 2 in the internal test set were 0.6126 (95%CI 0.5040–0.7291) and 0.7465 (95%CI 0.6387–0.8543), respectively; comparison of AUCs, model 1 versus model 2: p = 0.0270. (C) The AUCs of model 1 and model 2 in the external validation set were 0.6187 (95%CI 0.5101–0.7475) and 0.8349 (95%CI 0.7381–0.9317), respectively; comparison of AUCs, model 1 versus model 2: p = 0.0240. (D) C-index of the two models over time in the training set. (E) C-index of the two models over time in the internal test set. (F) C-index of the two models over time in the external validation set.
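Panels D–F of Fig. 3 summarize discrimination with the C-index. As an illustration of the underlying idea, the sketch below computes Harrell's overall C-index for right-censored data in plain Python. The figure reports C-index values across time, which may have been computed with a time-dependent variant, so this is a simplified stand-in. The risk score would typically be the Cox linear predictor; the data and function name are hypothetical.

```python
def harrell_c_index(times, events, risk_scores):
    """Harrell's concordance index for right-censored data.

    A pair (i, j) is usable when subject i has an observed event and
    times[i] < times[j]; it is concordant when i also has the higher risk score.
    Tied risk scores count as half-concordant; pairs with tied times are skipped.
    """
    concordant = 0.0
    usable = 0
    for i in range(len(times)):
        if events[i] != 1:
            continue  # a censored subject cannot anchor a usable pair
        for j in range(len(times)):
            if times[i] < times[j]:
                usable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5
    if usable == 0:
        raise ValueError("no usable pairs")
    return concordant / usable


times = [3, 8, 12, 20, 25]           # months to CKD or censoring
events = [1, 1, 0, 1, 0]             # 1 = CKD observed
risk = [2.1, 1.7, 1.9, 0.8, 0.5]     # e.g. a linear predictor
c_index = harrell_c_index(times, events, risk)
print(c_index)  # 0.875
```

A C-index of 0.5 corresponds to random ranking and 1.0 to perfect ranking of who develops CKD first.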

Development and validation of a nomogram model

The four risk factors in model 2 were used to develop a nomogram that estimates each patient's probability of post-LT CKD at different time points from the total score (Fig. 5). For example, consider a 45-year-old liver recipient with an anhepatic phase of 80 min, and an eGFR of 80 mL/min/1.73 m2 and a TG level of 2.0 mmol/L at 30 days after LT. The total score was 114, corresponding to probabilities of developing CKD at 1, 2 and 3 years after LT of 18%, 20% and 25%, respectively.
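The points-to-probability logic behind a Cox nomogram can be sketched as follows. Only the four hazard ratios of model 2 come from the text, and they are rounded. The axis ranges, the baseline survival values and all names are hypothetical assumptions, so the sketch will not reproduce the 114 points or the 18%, 20% and 25% probabilities of the worked example.

```python
import math

# Per-unit hazard ratios for model 2 as reported in the Results (rounded).
HAZARD_RATIOS = {"age": 1.03, "anhepatic_min": 1.02, "egfr30d": 0.98, "tg30d": 1.51}
# HYPOTHETICAL axis ranges; the study's nomogram axes are not given in the text.
AXIS_RANGES = {
    "age": (18, 75),
    "anhepatic_min": (20, 150),
    "egfr30d": (15, 150),
    "tg30d": (0.5, 6.0),
}
# HYPOTHETICAL baseline survival S0(t) at the lowest-risk end of every axis (months -> S0).
BASELINE_SURVIVAL = {12: 0.997, 24: 0.996, 36: 0.995}

COEFS = {name: math.log(hr) for name, hr in HAZARD_RATIOS.items()}


def log_hazard_above_reference(name, value):
    """beta * distance from the lowest-risk end of the axis (never negative in range)."""
    low, high = AXIS_RANGES[name]
    beta = COEFS[name]
    reference = low if beta > 0 else high  # low eGFR is the high-risk end
    return beta * (value - reference)


# The variable with the widest log-hazard span gets a 0-100 point axis.
MAX_SPAN = max(abs(COEFS[n]) * (hi - lo) for n, (lo, hi) in AXIS_RANGES.items())
POINTS_PER_LOG_HAZARD = 100.0 / MAX_SPAN


def nomogram_points(patient):
    """Points contributed by each predictor for one patient."""
    return {n: log_hazard_above_reference(n, patient[n]) * POINTS_PER_LOG_HAZARD for n in COEFS}


def ckd_probability(total_points, months):
    """Cox model: P(CKD by t) = 1 - S0(t) ** exp(linear predictor)."""
    linear_predictor = total_points / POINTS_PER_LOG_HAZARD
    return 1.0 - BASELINE_SURVIVAL[months] ** math.exp(linear_predictor)


patient = {"age": 45, "anhepatic_min": 80, "egfr30d": 80, "tg30d": 2.0}
points = nomogram_points(patient)
total = sum(points.values())
for months in (12, 24, 36):
    print(months, round(ckd_probability(total, months), 3))
```

Scaling points in proportion to the log-hazard keeps the mapping linear, so adding points is equivalent to adding terms of the Cox linear predictor. This follows a common nomogram convention, but the study's exact scaling is not stated.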

CKD prediction nomogram. Each of the four variables is assigned a number of points on its own axis; the points are summed into a total score that corresponds to the predicted incidence of CKD at each time point. Abbreviations: AgeR The recipient’s age (year); Anhepatic Phase Anhepatic phase (min); eGFR30d Estimated glomerular filtration rate at 30 days after LT (mL/min/1.73 m2); TG30d Triglyceride levels at 30 days after LT (mmol/L).

The calibration curves of the nomogram are shown in Fig. 6. The post-LT CKD probabilities predicted by the nomogram agreed well with the observed outcomes in both the internal test set and the external validation set, indicating that the nomogram is well calibrated.
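A calibration curve compares predicted risk with observed outcome frequency across groups of patients. The sketch below shows the grouping step for a simplified binary outcome at a fixed horizon, assuming every patient's CKD status at that horizon is known. With censored follow-up, as in this study, the observed rate in each group would typically be estimated with the Kaplan–Meier method instead. The function name and data are hypothetical.

```python
def calibration_table(predicted, observed, n_groups=5):
    """Group patients by predicted risk and compare mean prediction with observed rate.

    predicted: predicted probabilities; observed: 1 if CKD occurred by the horizon, else 0.
    Returns a list of (mean predicted, observed proportion, group size).
    """
    pairs = sorted(zip(predicted, observed))  # order patients by predicted risk
    size = len(pairs)
    table = []
    for g in range(n_groups):
        group = pairs[g * size // n_groups:(g + 1) * size // n_groups]
        if not group:
            continue
        mean_pred = sum(p for p, _ in group) / len(group)
        obs_rate = sum(y for _, y in group) / len(group)
        table.append((mean_pred, obs_rate, len(group)))
    return table


predicted = [i / 10 for i in range(10)]
observed = [0, 0, 0, 0, 0, 1, 0, 1, 1, 1]
table = calibration_table(predicted, observed)
for mean_pred, obs_rate, n in table:
    print(f"predicted {mean_pred:.2f}  observed {obs_rate:.2f}  n={n}")
```

Plotting the mean predicted values against the observed rates gives the calibration curve; points close to the 45-degree line indicate good calibration.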

Discussion

CKD is a common long-term complication after LT. The reported incidence of post-LT CKD varies widely2,3,4,5,6,7,8,9,10, mainly because of differences in the definition of post-LT CKD and in the duration of follow-up. In a single-center retrospective study, Schmitz et al.3 reported that 11.7% of liver recipients developed CKD, defined as serum creatinine ≥ 1.8 mg/dL for ≥ 2 weeks, within 12 months. With a follow-up of 53.2 months, Fabrizi et al.8 found that 28% of liver recipients developed CKD, defined as eGFR < 60 mL/min/1.73 m2 for ≥ 3 months. Using the strict KDIGO 2012 criteria, we found an incidence of post-LT CKD of 16.9% during a median follow-up of 22.73 months, consistent with previous studies.

The relationship between post-LT CKD and adverse prognosis is still a matter of substantial debate. Some studies have reported that CKD is not associated with worse survival11,22, and in this study we also found that post-LT CKD did not reduce patient survival. However, both our study and previous studies showed that severe CKD (GFR < 30 mL/min/1.73 m2 or need for renal replacement therapy) was significantly associated with higher mortality after LT2,23. LaMattina et al.10 retrospectively analyzed 1151 adult deceased-donor LTs and found that 3%, 7% and 18% of recipients developed ESRD at 5, 10 and 20 years, respectively, suggesting that the incidence of severe CKD increases with longer follow-up. Thus, it is important to identify CKD early and to work with nephrologists on early intervention.

In this study, we established a novel nomogram to predict post-LT CKD. Predicting post-LT CKD before LT proved difficult, as model 1, based on preoperative parameters, had relatively low AUCs. In contrast, adding early postoperative parameters substantially improved predictive ability. The independent risk factors for post-LT CKD were older recipient age, prolonged anhepatic phase, and low eGFR and high TG levels at 30 days after LT.

Advanced age is a well-recognized risk factor for progressive kidney dysfunction after LT2,9. Here, each additional year of age was associated with a 3% increase in the hazard of CKD (HR 1.03), confirming this association. Older liver recipients are at higher risk of preexisting kidney dysfunction, metabolic disorders, hypertension, diabetes and cardiovascular disease, which makes them susceptible to CKD after LT2,24,25. Because the clearance of immunosuppressive agents declines with age, older recipients are also more likely to experience immunosuppressant nephrotoxicity, which may accelerate the development of post-LT CKD24,26.
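The per-year effect of age is a per-unit hazard ratio. Assuming proportional hazards and a log-linear effect of each continuous variable, as a Cox model does, the effect of a larger change compounds multiplicatively. The sketch below applies this to the rounded model 2 hazard ratios for age (1.03 per year) and eGFR at 30 days (0.98 per mL/min/1.73 m2); because the inputs are rounded the results are approximate, and the function name is hypothetical.

```python
def hazard_ratio_for_change(hr_per_unit, units):
    """In a Cox model the log-hazard is linear, so k units multiply the hazard by HR**k."""
    return hr_per_unit ** units


age_10_years = hazard_ratio_for_change(1.03, 10)    # recipient 10 years older
egfr_10_lower = hazard_ratio_for_change(0.98, -10)  # eGFR 10 mL/min/1.73 m2 lower
print(f"{age_10_years:.2f} {egfr_10_lower:.2f}")  # 1.34 1.22
```

These are relative hazards, not absolute risks: a 10-year age difference raises the hazard of CKD by roughly a third, whatever the patient's baseline risk.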

During LT, the inferior vena cava is partially occluded while the portal vein and hepatic artery are completely occluded, causing marked hemodynamic changes in the recipient. Decreased GFR and increased markers of renal injury (e.g., β2-microglobulin, N-acetyl-β-D-glucosaminidase, syndecan-1) have been observed during the anhepatic phase, potentially contributing to post-LT CKD27,28. Furthermore, a prolonged anhepatic phase may increase the risk of graft dysfunction and metabolic disorders, mainly through the accumulation of cytokines (e.g., interleukin 6), metabolites and other toxic substances, which may ultimately impair renal function and reduce patient survival29.

A previous study showed that high TG levels were significantly associated with low eGFR in liver recipients30. In the present study, TG level at 30 days after LT was an independent risk factor for post-LT CKD, suggesting a potential role of hyperlipidemia in CKD development. In patients with CKD, the altered lipid profile includes elevated low-density and very-low-density lipoprotein cholesterol and reduced high-density lipoprotein cholesterol31,32,33. These alterations may increase lipid content (e.g., total cholesterol, TG), which can accumulate in the kidney and cause lipid nephrotoxicity34. Given the close correlation between hyperlipidemia and CKD, lipid metabolism should be regulated in recipients at higher risk of post-LT CKD35. A series of clinical trials have evaluated lipid-lowering therapy, including statins with or without ezetimibe, in patients with impaired kidney function36,37,38,39,40,41. Moreover, our previous studies also showed that well-controlled glucose and lipid levels during the perioperative period of LT could reduce the incidence of post-LT chronic diseases such as metabolic disorders and CKD42,43,44,45.

eGFR is commonly used as an indicator of CKD because it is accurate and convenient to obtain. In this study, low eGFR at 30 days after LT was an independent risk factor for post-LT CKD, suggesting that renal dysfunction in the early post-LT period may contribute to the development of CKD. In a retrospective cohort study, Sato et al.46 reported that eGFR < 60 mL/min/1.73 m2 at 1 month after LT predicted CKD at 2 years after LT, which supports our finding. Blood loss during organ procurement and implantation may cause intraoperative or post-LT renal injury, thereby lowering eGFR46. These results highlight the importance of preserving renal function in the early post-transplant period. In addition, efforts should be made to avoid massive intraoperative blood loss, nephrotoxic medications and acute kidney injury.

There are several limitations in our study. First, this was an observational study with a relatively small sample and short follow-up; because CKD progresses slowly, its incidence increases with longer follow-up. Second, the nomogram needs to be validated in well-designed prospective studies. Third, given the small number of ESRD cases in our study, larger, adequately powered studies are needed to determine independent risk factors for ESRD and to build reliable models that identify which patients will progress to ESRD. Finally, although CLTR included more than twenty thousand LTs during 2017–2020, only 118 had sufficient follow-up data to define post-LT CKD; follow-up data entry and management in the national database need to be improved.

Conclusion

As a long-term complication after LT, severe post-LT CKD can significantly reduce survival in liver recipients. Prompt management of dyslipidemia and renal dysfunction during the early post-LT period may help prevent the development of post-LT CKD. Furthermore, we established a novel nomogram to predict post-LT CKD, which showed good predictive performance.

Data availability

The data that support the findings of this study are available from China Liver Transplant Registry (CLTR) but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are however available from the corresponding author upon reasonable request and with permission of CLTR.

Abbreviations

- AUC: Area under the curve
- BMI: Body mass index
- CLTR: China Liver Transplant Registry
- CKD: Chronic kidney disease
- DCD: Donation after circulatory death
- eGFR: Estimated glomerular filtration rate
- LT: Liver transplantation
- MDRD: Modification of Diet in Renal Disease
- TG: Triglyceride

References

van Rijn, R., Hoogland, P. E. R., Lehner, F., van Heurn, E. L. W. & Porte, R. J. Long-term results after transplantation of pediatric liver grafts from donation after circulatory death donors. PLoS ONE 12, e0175097. https://doi.org/10.1371/journal.pone.0175097 (2017).

Ojo, A. O. et al. Chronic renal failure after transplantation of a nonrenal organ. N. Engl. J. Med. 349, 931–940. https://doi.org/10.1056/NEJMoa021744 (2003).

Schmitz, V. et al. Chronic renal dysfunction following liver transplantation. Clin. Transplant. 22, 333–340. https://doi.org/10.1111/j.1399-0012.2008.00806.x (2008).

Sharma, P. & Bari, K. Chronic kidney disease and related long-term complications after liver transplantation. Adv. Chronic Kidney Dis. 22, 404–411. https://doi.org/10.1053/j.ackd.2015.06.001 (2015).

Cohen, A. J. et al. Chronic renal dysfunction late after liver transplantation. Liver Transpl. 8, 916–921. https://doi.org/10.1053/jlts.2002.35668 (2002).

Huard, G. et al. The high incidence of severe chronic kidney disease after intestinal transplantation and its impact on patient and graft survival. Clin. Transpl. https://doi.org/10.1111/ctr.12942 (2017).

Wang, T. J. et al. Long-term outcome of liver transplant recipients after the development of renal failure requiring dialysis: A study using the national health insurance database in Taiwan. Transpl. Proc. 48, 1194–1197. https://doi.org/10.1016/j.transproceed.2015.12.130 (2016).

Fabrizi, F. et al. Acute kidney injury and chronic kidney disease after liver transplant: A retrospective observational study. Nefrologia (Engl Ed) https://doi.org/10.1016/j.nefro.2021.01.009 (2021).

Ip, S. et al. Interaction of gender and hepatitis C in risk of chronic renal failure after liver transplantation. Ann. Hepatol. 16, 230–235. https://doi.org/10.5604/16652681.1231581 (2017).

LaMattina, J. C. et al. Native kidney function following liver transplantation using calcineurin inhibitors: Single-center analysis with 20 years of follow-up. Clin. Transpl. 27, 193–202. https://doi.org/10.1111/ctr.12063 (2013).

Giusto, M. et al. Chronic kidney disease after liver transplantation: pretransplantation risk factors and predictors during follow-up. Transplantation 95, 1148–1153. https://doi.org/10.1097/TP.0b013e3182884890 (2013).

Levitsky, J. et al. Discovery and validation of a biomarker model (PRESERVE) predictive of renal outcomes after liver transplantation. Hepatology 71, 1775–1786. https://doi.org/10.1002/hep.30939 (2020).

Sharma, P., Goodrich, N. P., Schaubel, D. E., Guidinger, M. K. & Merion, R. M. Patient-specific prediction of ESRD after liver transplantation. J. Am. Soc. Nephrol. 24, 2045–2052. https://doi.org/10.1681/ASN.2013040436 (2013).

Zou, Y. et al. Development of a nomogram to predict disease-specific survival for patients after resection of a non-metastatic adenocarcinoma of the pancreatic body and tail. Front Oncol 10, 526602. https://doi.org/10.3389/fonc.2020.526602 (2020).

Zou, W., Wang, Z., Wang, F., Zhang, G. & Liu, R. A nomogram predicting overall survival in patients with non-metastatic pancreatic head adenocarcinoma after surgery: A population-based study. BMC Cancer 21, 524. https://doi.org/10.1186/s12885-021-08250-4 (2021).

Palmer, S. C. et al. HMG CoA reductase inhibitors (statins) for kidney transplant recipients. Cochrane Database Syst. Rev. https://doi.org/10.1002/14651858.CD005019.pub4 (2014).

Levin, A. & Stevens, P. E. Summary of KDIGO 2012 CKD guideline: Behind the scenes, need for guidance, and a framework for moving forward. Kidney Int. 85, 49–61. https://doi.org/10.1038/ki.2013.444 (2014).

Stevens, P. E., Levin, A. & Kidney Disease: Improving Global Outcomes Chronic Kidney Disease Guideline Development Work Group Members. Evaluation and management of chronic kidney disease: synopsis of the kidney disease: Improving global outcomes 2012 clinical practice guideline. Ann. Intern. Med. 158, 825–830. https://doi.org/10.7326/0003-4819-158-11-201306040-00007 (2013).

Andrassy, K. M. Comments on “KDIGO 2012 clinical practice guideline for the evaluation and management of chronic kidney disease”. Kidney Int. 84, 622–623. https://doi.org/10.1038/ki.2013.243 (2013).

Levey, A. S. et al. Using standardized serum creatinine values in the modification of diet in renal disease study equation for estimating glomerular filtration rate. Ann. Intern. Med. 145, 247–254. https://doi.org/10.7326/0003-4819-145-4-200608150-00004 (2006).

DeLong, E. R., DeLong, D. M. & Clarke-Pearson, D. L. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics 44, 837–845 (1988).

Gonwa, T. A. et al. End-stage renal disease (ESRD) after orthotopic liver transplantation (OLTX) using calcineurin-based immunotherapy: risk of development and treatment. Transplantation 72, 1934–1939. https://doi.org/10.1097/00007890-200112270-00012 (2001).

Sharma, P. et al. Renal outcomes after liver transplantation in the model for end-stage liver disease era. Liver Transpl. 15, 1142–1148. https://doi.org/10.1002/lt.21821 (2009).

Durand, F. et al. Age and liver transplantation. J. Hepatol. 70, 745–758. https://doi.org/10.1016/j.jhep.2018.12.009 (2019).

Watt, K. D., Pedersen, R. A., Kremers, W. K., Heimbach, J. K. & Charlton, M. R. Evolution of causes and risk factors for mortality post-liver transplant: results of the NIDDK long-term follow-up study. Am. J. Transpl. 10, 1420–1427. https://doi.org/10.1111/j.1600-6143.2010.03126.x (2010).

Staatz, C. E. & Tett, S. E. Pharmacokinetic considerations relating to tacrolimus dosing in the elderly. Drugs Aging 22, 541–557. https://doi.org/10.2165/00002512-200522070-00001 (2005).

Grande, L. et al. Effect of venovenous bypass on perioperative renal function in liver transplantation: Results of a randomized, controlled trial. Hepatology 23, 1418–1428. https://doi.org/10.1002/hep.510230618 (1996).

Cezar, L. C. et al. Intraoperative systemic biomarkers predict post-liver transplantation acute kidney injury. Eur. J. Gastroenterol. Hepatol. 33, 1556–1563. https://doi.org/10.1097/MEG.0000000000001892 (2021).

Ijtsma, A. J. et al. The clinical relevance of the anhepatic phase during liver transplantation. Liver Transpl. 15, 1050–1055. https://doi.org/10.1002/lt.21791 (2009).

Kosmacheva, E. D. & Babich, A. E. Lipid spectrum and function of kidneys before and after liver transplantation. Kardiologiia 59, 17–23. https://doi.org/10.18087/cardio.2611 (2019).

Shah, A. & Olivero, J. J. Lipids and renal disease. Methodist Debakey Cardiovasc J. 15, 88–89. https://doi.org/10.14797/mdcj-15-1-88 (2019).

Trevisan, R., Dodesini, A. R. & Lepore, G. Lipids and renal disease. J. Am. Soc. Nephrol. 17, S145-147. https://doi.org/10.1681/ASN.2005121320 (2006).

Vaziri, N. D. Molecular mechanisms of lipid disorders in nephrotic syndrome. Kidney Int. 63, 1964–1976. https://doi.org/10.1046/j.1523-1755.2003.00941.x (2003).

Mathew, R. O. et al. Concepts and controversies: Lipid management in patients with chronic kidney disease. Cardiovasc Drugs Ther. 35, 479–489. https://doi.org/10.1007/s10557-020-07020-x (2021).

Harper, C. R. & Jacobson, T. A. Managing dyslipidemia in chronic kidney disease. J. Am. Coll. Cardiol. 51, 2375–2384. https://doi.org/10.1016/j.jacc.2008.03.025 (2008).

Jardine, A. G. et al. Fluvastatin prevents cardiac death and myocardial infarction in renal transplant recipients: post-hoc subgroup analyses of the ALERT study. Am. J. Transpl. 4, 988–995. https://doi.org/10.1111/j.1600-6143.2004.00445.x (2004).

Wanner, C. et al. Atorvastatin in patients with type 2 diabetes mellitus undergoing hemodialysis. N. Engl. J. Med. 353, 238–248. https://doi.org/10.1056/NEJMoa043545 (2005).

Fellstrom, B. C. et al. Rosuvastatin and cardiovascular events in patients undergoing hemodialysis. N. Engl. J. Med. 360, 1395–1407. https://doi.org/10.1056/NEJMoa0810177 (2009).

Toth, P. P. et al. Efficacy and safety of lipid lowering by alirocumab in chronic kidney disease. Kidney Int. 93, 1397–1408. https://doi.org/10.1016/j.kint.2017.12.011 (2018).

Vallejo-Vaz, A. J. et al. Associations between lower levels of low-density lipoprotein cholesterol and cardiovascular events in very high-risk patients: Pooled analysis of nine ODYSSEY trials of alirocumab versus control. Atherosclerosis 288, 85–93. https://doi.org/10.1016/j.atherosclerosis.2019.07.008 (2019).

Stanifer, J. W. et al. Benefit of Ezetimibe added to simvastatin in reduced kidney function. J. Am. Soc. Nephrol. 28, 3034–3043. https://doi.org/10.1681/ASN.2016090957 (2017).

Ling, Q. et al. Donor PPARalpha gene polymorphisms influence the susceptibility to glucose and lipid disorders in liver transplant recipients: A strobe-compliant observational study. Medicine (Baltimore) 94, e1421. https://doi.org/10.1097/MD.0000000000001421 (2015).

Huang, H. T., Zhang, X. Y., Zhang, C., Ling, Q. & Zheng, S. S. Predicting dyslipidemia after liver transplantation: A significant role of recipient metabolic inflammation profile. World J. Gastroenterol. 26, 2374–2387. https://doi.org/10.3748/wjg.v26.i19.2374 (2020).

Ling, Q. et al. Association between donor and recipient TCF7L2 gene polymorphisms and the risk of new-onset diabetes mellitus after liver transplantation in a Han Chinese population. J. Hepatol. 58, 271–277. https://doi.org/10.1016/j.jhep.2012.09.025 (2013).

Ke, Q. H. et al. New-onset hyperglycemia immediately after liver transplantation: A national survey from China Liver Transplant Registry. Hepatobiliary Pancreat Dis. Int. 17, 310–315. https://doi.org/10.1016/j.hbpd.2018.08.005 (2018).

Sato, K., Kawagishi, N., Fujimori, K., Ohuchi, N. & Satomi, S. Renal function status in liver transplant patients in the first month post-transplant is associated with progressive chronic kidney disease. Hepatol. Res. 45, 220–227. https://doi.org/10.1111/hepr.12339 (2015).

Acknowledgements

This research was supported by the National Natural Science Foundation of China (No. 82171757) and the Zhejiang Province Natural Science Foundation of China (LZ22H030004).

Author information

Contributions

Conceptualization, L.Q.; methodology, H.Z.; formal analysis, H.Z. and D.S.; investigation, L.Y., K.Q.; data curation, H.Z., L.Y., D.S., and K.Q.; writing-original draft preparation, H.Z. and L.Y.; writing-review and editing, Z.S. and L.Q.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

He, Z., Lin, Y., Dong, S. et al. Development and validation of a nomogram model for predicting chronic kidney disease after liver transplantation: a multi-center retrospective study. Sci Rep 13, 11380 (2023). https://doi.org/10.1038/s41598-023-38626-4

DOI: https://doi.org/10.1038/s41598-023-38626-4
