Abstract

Image-based characterization offers a powerful approach to studying geological porous media at the nanoscale and images are critical to understanding reactive transport mechanisms in reservoirs relevant to energy and sustainability technologies such as carbon sequestration, subsurface hydrogen storage, and natural gas recovery. Nanoimaging presents a trade off, however, between higher-contrast sample-destructive and lower-contrast sample-preserving imaging modalities. Furthermore, high-contrast imaging modalities often acquire only 2D images, while 3D volumes are needed to characterize fully a source rock sample. In this work, we present deep learning image translation models to predict high-contrast focused ion beam-scanning electron microscopy (FIB-SEM) image volumes from transmission X-ray microscopy (TXM) images when only 2D paired training data is available. We introduce a regularization method for improving 3D volume generation from 2D-to-2D deep learning image models and apply this approach to translate 3D TXM volumes to FIB-SEM fidelity. We then segment a predicted FIB-SEM volume into a flow simulation domain and calculate the sample apparent permeability using a lattice Boltzmann method (LBM) technique. Results show that our image translation approach produces simulation domains suitable for flow visualization and allows for accurate characterization of petrophysical properties from non-destructive imaging data.

Similar content being viewed by others

Introduction

The transition to a sustainable energy future requires a combination of greenhouse gas sequestration, long-term adoption of renewable sources of energy, and near-term fuel switching to cleaner available energy resources1. Approaches that could contribute to these goals include CO2 sequestration, subsurface H2 or compressed air storage, and natural gas recovery2,3,4,5,6. One important requirement for scalable and sustainable implementation of these technologies is more rapid and reliable characterization of porous media transport properties in order to identify viable candidate formations.

Image-based characterization—and nanoimaging in particular—combined with digital rock physics are critical to understanding geological porous media at the pore scale7,8,9,10. Non-destructive imaging modalities such as transmission X-ray microscopy (TXM), scanning transmission electron microscopy (STEM), and X-ray spectroscopy (XRS) allow for characterizing the petrophysical properties of a sample while preserving it for future experimentation11,12,13,14,15,16. On the other hand, destructive imaging modalities such as focused-ion beam scanning electron microscopy (FIB-SEM) obtain high-contrast/high-resolution images at the expense of destroying the sample17.

An emerging area of image-based porous media characterization is isoscale multimodal imaging, where two or more imaging modalities at the same resolution are acquired to characterize a single sample11,18. Multimodal imaging and image data translation is a promising approach to characterization that enables the advantages of two or more imaging modalities, namely sample-preservation and high-resolution19. In a multimodal imaging workflow such as that shown in Fig. 1, a sample is imaged using two or more imaging modalities at the same resolution, a model is trained to translate between modalities, and the synthesized images used to estimate petrophysical properties of the sample20,21,22. Multimodal image prediction or enhancement is common in medical imaging23,24,25, but this methodology has yet to gain prominence in source rock characterization. Indeed, little work exists on applying deep learning models to porous media images, with most work focusing on synthesizing26,27,28 or segmenting images29 rather than translating images across modalities.

Image prediction using multimodal imaging involves elements of single image super-resolution (SISR) and image-to-image translation. SISR seeks to predict a high resolution image from a low resolution input. Many models have been proposed for this problem, with the most successful being dictionary methods30,31,32 and deep learning-based methods33,34. Image-to-image translation meanwhile seeks to translate images between domains35. Deep learning models for image translation include neural style transfer algorithms36,37, paired image translation38, and unpaired image translation39. The most common deep learning models for these tasks are based on feed-forward convolutional neural networks (CNNs) and conditional generative adversarial networks (CGANs)38,40,41.

3D-to-3D image volume translation typically requires paired 3D training data, and consequently is only applicable in a limited number of contexts such as medical imaging42,43 where sufficient amounts of aligned 3D multimodal data is available. Multimodal imaging for source rock samples often contains a mixture of 2D surface imaging modalities, such as electron microscopy, and 3D volumetric imaging, such as CT-based modalities44. Consequently, a persistent challenge for multimodal image-based characterization of geologic samples is predicting 3D volumes from non-destructive image data when only 2D training data is available. This problem remains relatively unexplored, and no work exists addressing volume reconstruction from microscopy data in the context of geological porous media characterization.

This work presents a method for predicting high-contrast geological porous media image volumes from low-contrast, sample-preserving input data using deep learning models trained on only 2D paired multimodal image data. We introduce a new method for regularizing training of deep learning image reconstruction models to improve 3D volume prediction from 2D training data, further develop quantitative metrics for evaluating 2D and 3D multimodal image assimilation models, and construct simulation domains from the translated volumes to evaluate flow properties of geologic samples from reconstructed image volumes. While presented in the context of geological media, the method is applicable to other materials with fine microstructure.

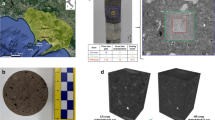

Multimodal image characterization workflow. (a) Multimodal image acquisition for a Vaca Muerta shale sample. After initial \(\mu \)-CT imaging (left), a region with minimal pyrite deposits is selected and milled to a 30 \(\mu \)m diameter cylindrical plug. This is imaged to produce a TXM image volume (middle). The plug is then successively focused ion beam milled and SEM imaged to create FIB-SEM image slices (right). (b) FIB-SEM and TXM image slices are aligned and normalized to create a paired 2D image dataset. (c) Image translation models are trained to predict FIB-SEM images from TXM images. These models include a continuity loss term to improve 3D volume translation when only 2D images are available. (d) Image volumes are predicted using the trained model. The volumes are synthesized by passing x–y image slices through the network independently to create the sequence of predicted FIB-SEM images. (e) Predicted FIB-SEM volumes are segmented into a simulation domain and petrophysical properties evaluated using digital rock physics techniques. Software: Powerpoint www.microsoft.com, Avizo www.thermofishwer.com.

Results

Two-dimensional image reconstruction

We first examine predicting 2D FIB-SEM image patches from TXM image patches. Results for the 2D-to-2D image prediction models are summarized in Table 1 and example images shown in Fig. 2b. For unregularized models, we test several different configurations of network architectures, training objectives, and upsampling factors (for the SISR models). We also train the pix2pix with Wasserstein GAN (WGAN) loss and SRGAN 4x upsampling with vanilla GAN loss with the proposed Jacobian regularization term to improve volume generation from 2D-to-2D models (Fig. 2a). These two models were chosen because they showed the strongest performance on peak signal-to-noise ratio (PSNR), structural similarity index metric (SSIM), and structural texture similarity index metric (STSIM).

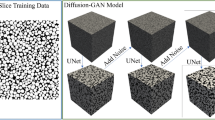

Image translation model. (a) Visualization of the Jacobian regularization approach proposed to improve volume prediction with 2D-to-2D models. z-direction gradients are assumed to be sparse and we encourage continuity in the input TXM pixels \(T_{ij}\) by penalizing the training by the Jacobian of the output image with respect to the input. (b) SRGAN image-to-image deep learning model. The model is trained with a vanilla or Wasserstein GAN loss, \(L_1\) image similarity loss, and optionally the proposed Jacobian loss to encourage continuity between input TXM slices. Software: Powerpoint www.microsoft.com.

Across all metrics, both the image translation and super-resolution models offer improvement over the unprocessed TXM images. STSIM and low density region segmentation show the largest improvement, while the high density segmentation showing minimal improvement over the raw TXM images. The WGAN models tend to outperform their vanilla GAN counterparts and GAN-based models perform a bit more strongly than the feed forward models in terms of PSNR and SSIM. The strongest performing GAN models are the WGAN models, particularly the pix2pix WGAN and SRGAN 2x WGAN models. The z-regularization results show that a Jacobian regularization term does not significantly impact the 2D-to-2D image translation results except for the low-density region segmentation. A comparison of image patches for original and regularized models (Fig. 3) shows that the regularization results in predicted FIB-SEM images with sparser x and y gradients and less noise. Furthermore, the z-regularization term causes the models to synthesize fewer low-density regions, resulting in reduced performance for low-density region segmentation.

2D-to-2D image translation results. Each row contains the input TXM image patch and ground truth FIB-SEM image patch, and translated image patches for the pix2pix (WGAN) and SRGAN 4x (Vanilla) models both with and without the continuity loss term. The Jacobian loss term causes the translation models to synthesize fewer details in the images. Specifically, we observe that fewer low-density regions are synthesized by the regularized models. Software: Powerpoint www.microsoft.com.

Three-dimensional volume prediction

We next synthesize image volumes using the trained models. The volumes are generated by passing each x–y slice independently through the 2D translation network and stacking along the z-axis to form a synthesized volume. Figure 4 shows image volumes generated with the pix2pix (WGAN) and SRGAN 4x (Vanilla) models for the original models, regularized models, and regularized models post-processed with median filtering. As shown in the image volumes, the z-regularization improves z-direction continuity, and the median filtering reduces “jittering” between image slices in the z-direction. We also see that the regularized models contain fewer low density regions, but as shown in Fig. 5, the low density regions are more continuous between slices than for the original models.

Test set volume renderings. Image volume is a \(128^3\) voxel volume from a part of the imaged TXM volume held out as a test set. Volumes are evaluated for the pix2pix (WGAN) and SRGAN 4x (Vanilla) models for the original model, model trained with z-regularization, and regularized model with median filter post-processing using a \(3 \times 1 \times 1\) structuring element. The x–z and y–z cross sections show improved z-direction continuity for the regularized and post-processed images. Software: Powerpoint www.microsoft.com, MATLAB www.mathworks.com.

Flow simulation domain generation. A TXM image volume is translated to a FIB-SEM volume using the original (top) and regularized (bottom) SRGAN 4X (Vanilla) model. The image cross-sections show the improved continuity in the generated FIB-SEM volume produced by the regularized model. The image volumes are then segmented into a lower density region using thresholding-based segmentation. This produces a simulation domain that includes kerogen and lower-density minerals where most flow in the volume is assumed to take place. A threshold value is chosen such that the produced lower-density regions form a connected set of voxels. Disconnected voxels are then discarded to create the final flow simulation domain. Software: Powerpoint www.microsoft.com, MATLAB www.mathworks.com, Avizo www.thermofisher.com.

We evaluate the quantitative similarity metrics for 3D image volume generation by synthesizing 5 \(128^3\) volumes from the test set TXM volume slices and computing the metrics for the x–y direction (the image generation plane) and the x–z and y–z directions (orthogonal to the image generation plane). We compute four image descriptors for each image in the synthesized volumes and test set: the area A(X), perimeter P(X), and mean curvature \(\chi (X)\) for the low-density regions, and the joint pixel value distribution \(p(T_{ij}, S_{ij})\). We then calculate the Kullback-Leibler divergence (KL divergence) between the synthesized and test set image descriptor distributions. The distribution of these descriptors should be similar between the test set images and the synthesized images along all image planes. Therefore, a smaller value of KL divergence indicates better model performance.

Table 2 summarizes the results for the unpaired image prediction metrics. The results for the gray level co-occurrence probability distribution show similar results regardless of the model used. The models with z-regularization show a significant improvement in the perimeter and Euler characteristic metrics. However, the area metric showed worse performance for the regularized models, likely because regularized models produce fewer low-density regions. Results also show that post-processing with a median filter produces worse results for the pix2pix (WGAN) model but improves the perimeter and Euler characteristic results for the SRGAN 4x (Vanilla) model.

Flow in reconstructed volumes

To demonstrate the application of the presented volume generation approach for evaluating petrophysical properties from nondestructive image data, we simulate flow of methane through volumes generated using the SRGAN 4x (Vanilla) model with and without regularization. This workflow is shown in Fig. 5. Using a three-dimensional, nineteen-velocity (D3Q19) lattice Boltzmann method (LBM), described in the Supplementary Information (SI), we simulate flow of methane in the z-direction at a temperature of 370 K, inlet pressure of 0.8 MPa inlet pressure, a pressure drop of \(0.8\times 10^{-7}\) MPa, and a kinematic viscosity of \(10^{-4}\; \text {cm}^2/\text {s}\). The flow simulations use a second-order slip boundary condition45,46. This boundary treatment captures slip velocities in complex pore geometries accurately via the inclusion of local Knudsen numbers47. Results from the pressure computations are shown in Table 3 and visualizations of the pressure field and streamlines are shown in Fig. 6. We observe that the choice of model may substantially affect the morphology of the low density regions and therefore the Knudsen number and apparent permeability. The apparent permeability values for both models are reasonable for a shale sample at the rock fabric scale, showing that the proposed image processing method enables both flow visualization and accurate computation of flow properties from non-destructive image data.

Comparison of pressure fields and flow streamlines in the original volume and in post-processed volumes created with a regularized model. The different models produce substantially different results in flow paths and pressure fields. The original model has more active cells in the simulation domain and therefore produces more flow streamlines. The streamlines for the regularized model, however, have greater continuity in the z direction. Software: Powerpoint www.microsoft.com, MATLAB www.mathworks.com.

Discussion

Both super-resolution and image translation models are shown to predict FIB-SEM image patches effectively from TXM input images. We also observed that for the GAN models, using the WGAN loss function tended to improve performance in terms of PSNR, SSIM, and STSIM, at the expense of synthesizing fewer low-density regions and reduced performance for low-density region segmentation. This presents a significant challenge when analyzing or visualizing flow through shale volumes, because most of the flow is assumed to take place in the kerogen and low-density mineral regions.

The z-regularization approach introduced is promising for improving 3D reconstruction. The image prediction results show that the z-regularization term has a minimal effect on the 2D quantitative error metrics while improving performance for some 3D image similarity metrics. In particular, z-regularization improves the Euler characteristic results that correlate with flow properties in the porous medium. Therefore this regularization is promising for replicating 3D structures in flow simulation domains. While z-regularization is shown to carry many advantages for 3D volume reconstruction, there remains room for improving particularly the quantitative performance of these models and applying this approach in related domains such as medical imaging. An additional significant drawback is that these models take much longer to train due to the extra backpropagation steps required to compute the Jacobian-vector products used in the regularization term.

The simulation results demonstrate the practical application of our models to visualize and characterize flow in a shale rock volume using only non-destructive imaging data. The choice of model, however, may impact the computed apparent permeability and flow paths in a given volume. LBM simulations predict slightly smaller apparent permeability and Knudsen numbers for the regularized model, likely due to the complex pore space connectivity in the computational domain. Removal of active cells and creating disconnected flow paths reduces the ease of flow through the domain, which manifests in simulations as a reduced overall velocity and a less permeable medium.

We assume here that the active cells do not contain organic matter, e.g. kerogen, and their entire volume is available to flow. Although the computed apparent permeabilities are reasonable for a shale sample at the rock fabric-scale, the results may represent an upper bound as a result of this assumption. In future work, we will incorporate the effects of organic content and low permeability regions in the simulation models. The approach presented here could be used to reconstruct low-density regions at the \({\mathscr {O}}(10^1 \text { nm})\) scale, then a generated pore structure obtained from high-resolution images (e.g. transmission electron microscopy images15) super-imposed on the low-density regions to obtain a simulation domain at the \({\mathscr {O}}(10^{0}\text { nm})\) scale. Similar approaches have been applied to other multiscale source rocks48, and presents a promising future direction for nondestructive image-based characterization of geological porous media.

Methods

Multimodal shale image dataset

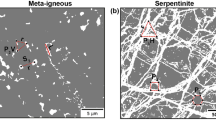

The dataset consists of paired TXM and FIB-SEM images and is described in detail elsewhere19. The TXM images were acquired at beamline 6-2c of the SLAC National Accelerator Laboratory (SLAC) using X-rays at 8 keV. The TXM projections were reconstructed with TXM-Wizard49 to produce an image volume of isotropic resolution of 31.2 nm/px. The FIB-SEM images were acquired on a FEI XL 835 DualBeam FIB/SEM instrument that milled and collected cross-sectional images perpendicular to the main axis of the cylinder. Instrument energy was 4kV, and the resolution was 33.6 nm/px in the x–y plane and variable z direction from 30 up to 50 nm. The TXM images were rescaled to be identical to FIB-SEM pixel resolution and aligned to achieve a paired image dataset. The final dataset contains 149 aligned 2D TXM and FIB-SEM grayscale image slices and a 3D TXM image volume consisting of 419 image slices. In both modalities, darker areas denote low density material or features that include: fractures, pores, organic matter and kerogen. Lighter areas denote high density minerals such as carbonates, silicates, barite, and pyrite.

Image prediction models

We use image translation37,38,39 and SISR33,34 models to predict FIB-SEM images from TXM images. These models are divided into two main families referred to as feedforward CNNs and CGANs. Feedforward CNNs map the input image \(I^{in}\) to the output image \(I^{out}\) using a CNN \(G(I^{in}) = {\hat{I}}^{out}\). These models are trained according to the objective:

where \(T_i\) is the input TXM image and \(S_i\) is the ground truth FIB-SEM image. We train using \(L_1\) loss because this has been shown to produce sharper images than \(L_2\) loss38. This model is effectively a nonlinear regression model where the CNN models the mapping between the input data and response values. The architecture for the neural network \(G(\cdot )\) can take many forms. In the feedforward CNN model, we use a 9-block ResNet architecture37. In the SRCNN model, the \(T_i\) image is downsampled by 2x or 4x and \(G(\cdot )\) is the SR-ResNet architecture34.

We also use the pix2pix38,39 and SRGAN34 CGAN models. These models consist of a generator network \(G(\cdot )\) that predicts the FIB-SEM image and a discriminator network \(D(\cdot )\) that evaluates the quality of an output image or pair of input/output images as being real or synthetic. These models are trained according to the objective as

We experiment with the vanilla GAN loss40 as

and the Wasserstein GAN (WGAN) loss with gradient penalty50,51 as

All models are implemented in Pytorch52, trained using a framework based on code for the pix2pix and CycleGAN38,39 models, optimized using the Adam optimizer53, and trained for 75 epochs total with a batch size of 10,000 randomly sampled \(128 \times 128\) image patches. We split the dataset of 149 paired image slices as 101 training, 12 validation, and 36 test slices. The large test set was chosen to ensure that the 36 test set 2D slices spanned 128\(^3\) voxel subvolumes of the 3D TXM volume. Image patches for training and testing are chosen to contain at least 75% non-zero pixels and 95% non-artifact pixels. We use a learning rate of \(2 \times 10^{-4}\) for the first 50 epochs and anneal the learning rate by factor of 10 for the remaining epochs, as this was found to produce stable training and to train all models to convergence. \(\lambda _{GP} = 10\) is used for all WGAN models per results from previous work on WGANs51.

z-regularization approach

We propose to reconstruct 3D image volumes with 2D-to-2D image models by enforcing continuity between input image slices, and thereby improve continuity in the z-direction of the predicted image volume. Our approach draws inspiration from existing work on robust learning54. Robust learning seeks to reduce the sensitivity of the class logits to the input data of the network; in our approach, we seek to reduce the sensitivity of the generator network \(G(\cdot )\) to perturbations in the input image.

Our approach assumes that \(\nabla _{z} S\) is sparse for any SEM image S. Therefore, we enforce continuity in the predicted image volumes by regularizing with \(||\nabla _{z} S||_1\). This term, however, is not easily computed. For \({\hat{S}} = G(T)\), where \({\hat{S}}\) is the predicted SEM image and T is the input nano-CT image, we observe that

The squared Frobenius norm of the Jacobian can be computed efficiently. During training, we add this term to the original objective function to train a regularized model as

Paired image similarity metrics

We evaluate paired image similarity using four similarity metrics: peak signal to noise ratio (PSNR), structural similarity index metric (SSIM), structural texture similarity index metric (STSIM) and a segmentation-based similarity metric.

PSNR: measures the similarity between images in decibels (dB) and is proportional to the inverse of mean-squared error (MSE). For images with pixel values normalized to have \(I_{ij} \in [0,1]\), PSNR is computed as

SSIM: measures the structural similarity between images. SSIM is based on the similarity of spatial statistics of the image and is calculated as

\(C_1\), \(C_2\), and \(C_3\) are small smoothing factors, \(\mu _X\) and \(\sigma _X\) are respectively the mean and standard deviation of the pixel values in image patch X, and usually \(\alpha =\beta =\gamma =1\).

STSIM: measures textural similarity between images and is based in part on SSIM55. STSIM is designed to measure perceptual similarity between images and is computed as

where \(\rho _X\) computes the correlation coefficient of the image pixels in image X with offset k and \(\ell \) in the x and y directions, respectively. The additional terms have been shown55 to improve image retrieval by measuring perceptual similarities rather than structural.

Segmentation-based Image Similarity: similar inputs should produce FIB-SEM images that are segmentable into the same rock phases19. Hence, we evaluate the outputs of the GAN model by segmenting the outputs and comparing this to the segmentation of the ground truth image38. Let \(Seg_k(\cdot )\) be the segmentation classifier for class k that maps input image I to a binary mask of the pixels selected for class k. We compute the image similarity metric as the Dice score of the segmentation as

where \(\varepsilon \) is a small smoothing factor to account for empty classes. Here we segment the images into five regions: background, low density, medium density, high density, and surface charging artifacts. The classifier is implemented in Ilastik56 and uses a random forest classifier to segment images based on pre-computed image features.

Unpaired image similarity metrics

The lack of ground truth volumetric data precludes evaluation synthetized image volumes using paired image metrics. We propose to evaluate the 3D reconstruction by measuring the similarity between the distribution of structural features for generated and ground truth images. We compute this metric by using a function that maps an image I (either single modality or paired multimodal image) to a scalar value, then compute the Kullback-Leibler Divergence (KL divergence) between the distribution for synthetic images \(q(f({\hat{I}}))\) and ground truth images \(p(f(I^{\text {gt}}))\). KL divergence is computed as

KL divergence takes values on the range \([0, +\infty )\), so a smaller value indicates a closer match between the probability distributions q(x) and p(x).

We compute this metric for features in the x–y plane (image generation plan) and the x–z and y–z planes (orthogonal to the image generation plane). This method assumes that structural features in porous media at the nanoscale are approximately isotropic and therefore the distribution of structural features in all image planes should match the distribution from ground truth test set images. We compute this metric for Minkowski functionals and the pixel value joint distribution.

Minkowski functionals distributions: Minkowski functionals measure topological features of images and provide structural descriptors of a solid volume and are a common metric for characterizing porous materials57. In two dimensions, there are three Minkowski functionals

where \(\kappa (x) = \frac{1}{R(x)}\) is the inverse of the principal radius. We measure structural similarity of the predicted FIB-SEM images by computing the Minkowski functionals58 for the low-density regions segmented with the Ilastik classifier used for the paired similarity metrics.

Pixel value joint distribution: we form the joint histogram between TXM and FIB-SEM images and normalize to create a joint probability distribution of pixel values \(p(T_{ij}, S_{ij})\), then compute the KL divergence between the pixel value distributions for real and synthetic images.

Computation of petrophysical properties

We estimate petrophysical properties of source rocks by processing predicted FIB-SEM image volumes into simulation domains then applying digital rock physics techniques to simulate flow through the rock volume. The active cells for the simulation domain are the segmented lower density voxels. We identify and remove disconnected active cells to improve the stability and convergence behavior of the numerical simulations. Using results from LBM flow simulations, we calculate apparent gas permeability as47

where \(\mu \) is the gas dynamic viscosity, L is the domain length along the main direction of flow, \(\bar{q_o}\) is the average fluid velocity at the outlet, and \(p_i\) and \(p_o\) are the inlet and outlet pressures, respectively. A shortcoming of this model, with respect to the permeability values, is the underlying assumption that the simulation domain is open pore space. In reality, the low-density regions are mixes of kerogen and pore space. Therefore, this approach provides an upper bound on the permeability rather than an exact number. Nevertheless, the models allow us to visualize possible flow paths through the sample volume and evaluate the impact of different volume reconstruction methods on the computed petrophysical properties.

References

EIA/ARI. EIA/ARI world shale gas and shale oil resource assessment. Tech. Rep., Energy Information Administration (2013).

Benson, S. M. & Cole, D. R. CO\(_2\) sequestration in deep sedimentary formations. Elements 4, 325–331. https://doi.org/10.2113/gselements.4.5.325 (2008).

Hassanpouryouzband, A., Joonaki, E., Edlmann, K. & Haszeldine, R. S. (2021) Offshore geological storage of hydrogen: Is this our best option to achieve net-zero?. ACS Energy Lett. 66, 2181–2186. 10.1021/acsenergylett.1c00845.

Schoenung, S. Economic analysis of large-scale hydrogen storage for renewable utility applications. In International Colloquium on Environmentally Preferred Advanced Power Generation, 8e10 (Citeseer, 2011).

Oldenburg, C. M. & Pan, L. Porous media compressed-air energy storage (PM-CAES): Theory and simulation of the coupled wellbore–reservoir system. Transp. Porous Media 97, 201–221. https://doi.org/10.1007/s11242-012-0118-6 (2013).

Zoback, M. D. & Kohli, A. H. UnconvEntional Reservoir Geomechanics (Cambridge University Press, 2019).

Blunt, M. J. Multiphase Flow in Permeable Media: A Pore-Scale Perspective (Cambridge University Press, 2017).

Semnani, S. J. & Borja, R. I. Quantifying the heterogeneity of shale through statistical combination of imaging across scales. Acta Geotech. 12, 1193–1205 (2017).

Kiss, A. M. et al. Synchrotron-based transmission x-ray microscopy for improved extraction in shale during hydraulic fracturing. In X-ray Nanoimaging: Instruments and Methods II Vol. 9592, 95920O (International Society for Optics and Photonics, 2015).

De Andrade, V. et al. Nanoscale 3d imaging at the advanced photon source. SPIE Newsroom 10, 006461 (2016).

Aljamaan, H., Ross, C. M. & Kovscek, A. R. Multiscale imaging of gas storage in shales. SPE J. 22, 1–760 (2017).

Panahi, H. et al. A 4d synchrotron x-ray tomography study of the formation of hydrocarbon migration pathways in heated organic-rich shale. arXiv preprint arXiv:1401.2448 (2014).

Vega, B., Ross, C. M. & Kovscek, A. R. Imaging-based characterization of calcite-filled fractures and porosity in shales. SPE J. 20, 810–823 (2015).

Zhang, Y. et al. Determination of local diffusion coefficients and directional anisotropy in shale from dynamic micro-ct imaging. In Unconventional Resources Technology Conference, Austin, Texas, 24–26 July 2017, 3083–3095 (Society of Exploration Geophysicists, American Association of Petroleum, 2017).

Froute, L. & Kovscek, A. R. Nano-imaging of shale using electron microscopy techniques. In Proceedings of the Unconventional Resources Technology Conference (URTEC) (2020).

Frouté, L., Wang, Y., McKinzie, J., Aryana, S. A. & Kovscek, A. R. Transport simulations on scanning transmission electron microscope images of nanoporous shale. Energies 13, 6665 (2020).

Sondergeld, C. H., Ambrose, R. J., Rai, C. S. & Moncrieff, J. Micro-structural studies of gas shales. In SPE Unconventional Gas Conference (Society of Petroleum Engineers, 2010).

Guan, K. M., Ross, C. M. & Kovscek, A. R. Multimodal visualization of vaca muerta shale fabric before and after maturation. Energy Fuels 6, 66. https://doi.org/10.1021/acs.energyfuels.1c00037 (2021).

Anderson, T. I., Vega, B. & Kovscek, A. R. Multimodal imaging and machine learning to enhance microscope images of shale. Comput. Geosci. 145, 104593. https://doi.org/10.1016/j.cageo.2020.104593 (2020).

Okabe, H. & Blunt, M. J. Pore space reconstruction of vuggy carbonates using microtomography and multiple-point statistics. Water Resour. Res. 43, 3–7. https://doi.org/10.1029/2006WR005680 (2007).

Guan, K., Anderson, T., Cruex, P. & Kovscek, A. Reconstructing porous media using generative flow networks. Comput. Geosci. 6, 66 (2020).

Anderson, T. I., Guan, K. M., Vega, B., Aryana, S. & Kovscek, A. R. RockFlow: Fast generation of synthetic source rock images using generative flow models. Energies 6, 66 (2020).

Torrado-Carvajal, A. et al. Fast Patch-Based Pseudo-CT Synthesis from T1-Weighted MR Images for PET/MR Attenuation Correction in Brain Studies. J. Nucl. Med. 57, 136–143 (2016). https://doi.org/10.2967/jnumed.115.156299.

Cao, X. et al. Deep learning based inter-modality image registration supervised by intra-modality similarity. In International Workshop on Machine Learning in Medical Imaging 55–63 (Springer, 2018).

Zaharchuk, G., Gong, E., Wintermark, M., Rubin, D. & Langlotz, C. P. Deep learning in neuroradiology. Am. J. Neuroradiol. 39, 1776–1784. https://doi.org/10.3174/ajnr.A5543 (2018).

Okabe, H. & Blunt, M. J. Prediction of permeability for porous media reconstructed using multiple-point statistics. Phys. Rev. E Stat. Phys. Plasmas Fluids Relat. Interdiscip. Top. 70, 10. https://doi.org/10.1103/PhysRevE.70.066135 (2004).

Mosser, L., Dubrule, O. & Blunt, M. J. Reconstruction of three-dimensional porous media using generative adversarial neural networks. Phys. Rev. E 96, https://doi.org/10.1103/PhysRevE.96.043309 (2017). arXiv:1704.03225.

Kamrava, S., Tahmasebi, P. & Sahimi, M. Enhancing images of shale formations by a hybrid stochastic and deep learning algorithm. Neural Netw. 118, 310–320. https://doi.org/10.1016/j.neunet.2019.07.009 (2019).

Yun, W., Liu, Y. & Kovscek, A. R. Deep learning for automated characterization of pore-scale wettability. Adv. Water Resour. 144, 103708 (2020).

Zhang, K., Gao, X., Tao, D. & Li, X. Multi-scale dictionary for single image super-resolution. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 6, 66. https://doi.org/10.1109/CVPR.2012.6247791 (2012).

Yang, J., Wang, Z., Lin, Z., Cohen, S. & Huang, T. Coupled dictionary training for image super-resolution. IEEE Trans. Image Process. 21, 3467–3478 (2012).

Wang, S., Zhang, L., Liang, Y. & Pan, Q. Semi-coupled dictionary learning with applications to image super-resolution and photo-sketch synthesis. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 66, 2216–2223. https://doi.org/10.1109/CVPR.2012.6247930 (2012).

Dong, C., Loy, C. C., He, K. & Tang, X. Image super-resolution using deep convolutional networks. IEEE Transactions on Pattern Analysis Mach. Intell. 38, 295–307. https://doi.org/10.1109/TPAMI.2015.2439281 (2016). arXiv:1501.00092.

Ledig, C. et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. https://doi.org/10.1109/CVPR.2017.19 (2016). arXiv:1609.04802.

Efros, A. A. & Freeman, W. T. Image quilting for texture synthesis and transfer. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, 341–346 (ACM, 2001).

Gatys, L. A., Ecker, A. S. & Bethge, M. Texture synthesis using convolutional neural networks. Neural Image Process. Syst. 1–10, https://doi.org/10.1109/CVPR.2016.265 (2015). arXiv:1505.07376.

Johnson, J., Alahi, A. & Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. Lect. Notes Comput. Sci. (including subseries Lect. Notes Artif. Intell. Lect. Notes Bioinform.) 9906 LNCS, 694–711, https://doi.org/10.1007/978-3-319-46475-6_43 (2016). arXiv:1603.08155.

Isola, P., Zhu, J.-Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 1125–1134 (2017).

Zhu, J. Y., Park, T., Isola, P. & Efros, A. A. Unpaired image-to-image translation using cycle-consistent adversarial networks. Proc. IEEE Int. Conf. Comput. Vis.https://doi.org/10.1109/ICCV.2017.244 (2017). arXiv:1703.10593.

Goodfellow, I. J. et al. Generative adversarial networks (2014). arXiv:1406.2661.

Mirza, M. & Osindero, S. Conditional generative adversarial nets (2014). arXiv:1411.1784.

Nie, D., Cao, X., Gao, Y., Wang, L. & Shen, D. Estimating CT image from MRI data using 3d fully convolutional networks. In Deep Learning and Data Labeling for Medical Applications 170–178 (Springer, 2016). https://doi.org/10.1007/978-3-319-46976-8_18.

Bradshaw, T., Zhao, G., Jang, H., Liu, F. & McMillan, A. 2018 Feasibility of deep learning-based PET/MR attenuation correction in the pelvis using only diagnostic MR images. Tomography 4, 138–147.

Vega, B., Andrews, J. C., Liu, Y., Gelb, J. & Kovscek, A. Nanoscale visualization of gas shale pore and textural features. In Unconventional Resources Technology Conference, 1603–1613 (Society of Exploration Geophysicists, American Association of Petroleum, 2013).

Zhang, R., Shan, X. & Chen, H. Efficient kinetic method for fluid simulation beyond the navier-stokes equation. Phys. Rev. E 74, 046703. https://doi.org/10.1103/PhysRevE.74.046703 (2006).

Wang, Y. & Aryana, S. A. Coupled confined phase behavior and transport of methane in slit nanopores. Chem. Eng. J. 404, 126502. https://doi.org/10.1016/j.cej.2020.126502 (2021).

Wang, Y. & Aryana, S. A. Pore-scale simulation of gas flow in microscopic permeable media with complex geometries. J. Nat. Gas Sci. Eng. 81, 103441. https://doi.org/10.1016/j.jngse.2020.103441 (2020).

Shams, R., Masihi, M., Boozarjomehry, R. B. & Blunt, M. J. Coupled generative adversarial and auto-encoder neural networks to reconstruct three-dimensional multi-scale porous media. J. Petroleum Sci. Eng. 186, 106794. https://doi.org/10.1016/j.petrol.2019.106794 (2020).

Liu, Y. et al. Txm-wizard: a program for advanced data collection and evaluation in full-field transmission x-ray microscopy. J. Synchrotron Radiat. 19, 281–287 (2012).

Arjovsky, M., Chintala, S. & Bottou, L. Wasserstein gan (2017). arXiv:1701.07875.

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V. & Courville, A. Improved training of wasserstein gans (2017). arXiv:1704.00028.

Paszke, A. et al. Pytorch: An imperative style, high-performance deep learning library. In Wallach, H. et al. (eds.) Advances in Neural Information Processing Systems 32, 8024–8035 (Curran Associates, Inc., 2019).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization (2017). arXiv:1412.6980.

Hoffman, J., Roberts, D. A. & Yaida, S. Robust learning with Jacobian regularization (2019). arXiv:1908.02729.

Xiaonan Zhao, Reyes, M. G., Pappas, T. N. & Neuhoff, D. L. Structural texture similarity metrics for retrieval applications. In 2008 15th IEEE International Conference on Image Processing, 1196–1199, https://doi.org/10.1109/ICIP.2008.4711975 (2008).

Berg, S. et al. Ilastik: Interactive machine learning for (bio) image analysis. Nat. Methods 16, 1226–1232 (2019).

Armstrong, R. T. et al. Porous media characterization using minkowski functionals: Theories, applications and future directions. Transp. Porous Media 130, 305–335 (2019).

Ohser, J. & Mücklich, F. Statistical Analysis of Microstructures in Materials Science (Wiley, 2000).

Acknowledgements

This work was supported as part of the Center for Mechanistic Control of Unconventional Formations (CMC-UF), an Energy Frontier Research Center funded by the U.S. Department of Energy (DOE), Office of Science, Basic Energy Sciences (BES), under Award # DE-SC0019165. Use of the Stanford Synchrotron Radiation Lightsource, SLAC National Accelerator Laboratory, is supported by the U.S. Department of Energy, Office of Science, Office of Basic Energy Sciences under Contract No. DE-AC02-76SF00515. Part of this work was performed at the Stanford Nano Shared Facilities (SNSF), supported by the National Science Foundation under award ECCS-1542152. TA is also supported by the Siebel Scholars Foundation. Thank you to Laura Frouté, Cynthia Ross, Kelly Guan, Yijin Liu (SLAC), and Kevin Filter (Semion, LLC) for their help in developing this work. Code for this project was based on that provided for the pix2pix/CycleGAN papers38,39 and previous work on robust learning54, and is available at https://github.com/supri-a/TXM2SEM.

Author information

Authors and Affiliations

Contributions

T.A. developed and implemented the image reconstruction approach and created the simulation domains, B.V. acquired and curated the image dataset, J.M. performed the permeability calculations and flow visualization, S.A.A. advised on this project, and A.R.K. was the principal investigator. S.A.A. and A.R.K. supervised. All authors wrote and reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Anderson, T.I., Vega, B., McKinzie, J. et al. 2D-to-3D image translation of complex nanoporous volumes using generative networks. Sci Rep 11, 20768 (2021). https://doi.org/10.1038/s41598-021-00080-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-00080-5

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.