Abstract

Parkinson’s disease (PD) is a neurodegenerative disorder characterized by motor impairments such as tremor, bradykinesia, dyskinesia, and gait abnormalities. Current protocols assess PD symptoms during clinic visits and can be subjective. Patient diaries can help clinicians evaluate at-home symptoms, but they can be incomplete or inaccurate. Therefore, researchers have developed automated in-home methods to monitor PD symptoms and enable data-driven PD diagnosis and management. We queried the US National Library of Medicine PubMed database to analyze the progression of the technologies and computational/machine learning methods used to monitor common motor PD symptoms. We reviewed a subset of roughly 12,000 papers that best characterized the machine learning and technology timelines that emerged from the literature. The technology used to monitor PD motor symptoms has advanced significantly over the past five decades. Early monitoring began with in-lab devices such as needle-based EMG, transitioned to in-lab accelerometers/gyroscopes, then to wearable accelerometers/gyroscopes, and finally to in-home monitoring based on phones and on mobile and web applications. Significant progress has also been made in the use of machine learning algorithms to classify PD patients. Using data from different devices (e.g., video cameras, phone-based accelerometers), researchers have designed neural network and non-neural network machine learning algorithms to categorize PD patients across tremor, gait, bradykinesia, and dyskinesia. The five-decade co-evolution of the technologies and computational techniques used to monitor PD motor symptoms has driven significant progress that is enabling the shift from in-lab/clinic to in-home monitoring of PD symptoms.


Introduction

Parkinson’s disease (PD) is a complex neurodegenerative disorder commonly characterized by motor impairments such as tremor, bradykinesia, dyskinesia, and gait abnormalities1. Proper assessment of PD motor impairments is vital for clinical management of the disease2,3. Appropriate timing of dopaminergic medications4 to avoid sudden increases in symptom severity5 and selection of patients for interventions such as deep brain stimulation6 both require a precise understanding of symptom fluctuations in patients with PD. In addition, objective characterization of non-motor manifestations of PD, such as sleep disorders, gastrointestinal symptoms, and psychiatric symptoms, is needed to understand long-term disease progression3.

Characterization of motor and non-motor PD symptoms has traditionally relied on the Unified Parkinson’s Disease Rating Scale (UPDRS), a PD severity rating system with four parts: (I) Mentation, Behavior and Mood, (II) Activities of Daily Living, (III) Motor, and (IV) Complications7. The Movement Disorder Society (MDS) later revised the UPDRS, creating the MDS-UPDRS, in an attempt to reduce subjectivity in the scale8. Clinicians also use other rating systems, such as the WHIGET Tremor Rating Scale for action tremor9 and the Modified Bradykinesia Rating Scale (MBRS) for bradykinesia10. However, these rating systems suffer from two main flaws. First, they lack granularity across disease or medication cycles, as they only provide a snapshot of a patient’s symptoms as seen during in-clinic visits. When assessing PD symptoms outside of the clinic, physicians must instead rely on patient diaries or recall, which can be inaccurate2. Second, these rating systems are inherently subjective, leading to high inter- and intra-rater variability3.

Addressing these flaws is vital to ensure proper diagnosis and management of patients with PD. To that end, considerable efforts have been made to develop objective, at-home, and automated methods to monitor the main motor symptoms characteristic of PD. Leveraging motion sensor and, in some instances, video-based technologies can first enable physicians to take data-driven approaches to PD diagnoses. Adding at-home patient monitoring through smart devices (e.g., smartphones, watches) could then enable physicians to adjust treatment plans based on patient activity data. The end goal of these technologies is to achieve continuous, at-home monitoring, which will require continued research using data from at-home, continuous studies, rather than applying laboratory data to develop at-home solutions. This review aims to summarize the co-evolution of the technologies and computational methods used to assess and monitor common motor symptoms of PD such as tremor, gait abnormalities, bradykinesia, and dyskinesia.

Review of literature

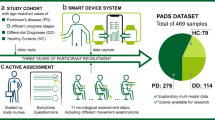

Technology

The technology used to diagnose and monitor PD has evolved significantly over time (Fig. 1A). Most notably, this technology has progressed from laboratory to at-home/everyday settings, enabling more robust data collection related to PD symptoms. This section describes this progression, along with the purpose and advantages of different technologies.

Fig. 1: (A) In the 1970s, the main technologies used were lab-based, such as EMG and potentiometer measurements. Adoption of lab-based accelerometers began in the late 1980s and continued until the early 2000s, when smaller devices such as tablets and wearable accelerometers started being leveraged. Since the late 2010s, smart devices and the apps running on them have been the primary technologies used for symptom monitoring. Over time, the evolution of technology has enabled greater and more continuous data collection. (B) Since the 1970s, computational and statistical techniques such as frequency domain analyses of accelerometer data have enabled researchers and clinicians to quantify symptom severity in patients with PD. Improvements in the technologies used to monitor symptoms have enabled increased data collection, allowing for growth in the adoption of machine learning techniques. Supervised techniques were applied first to analyze symptom data, followed by unsupervised techniques.

Laboratory-based technologies for assessing PD symptoms had two main purposes: (1) to develop methodologies to diagnose PD and categorize its severity (e.g., to distinguish PD from similar neurological conditions) and (2) to set the foundation for smaller, more portable, and more user-friendly technologies that could assist in PD diagnosis and monitoring in the future (Table 1).

Laboratory-based electromyography (EMG) techniques were among the first technologies used to assess PD. More specifically, the data collected using these techniques were primarily meant to help distinguish PD from similar conditions or to quantify disease progression. In 1984, Bathien et al. quantified tremor of the head, hands, and lower extremities with EMG11. The group found that analyzing phase shifts between bursts of EMG activity in agonist-antagonist muscles made it possible to distinguish the tremor seen in PD from that of tardive dyskinesia, creating one of the first quantitative methodologies for distinguishing PD from other conditions. In-lab EMG was also leveraged to quantify and monitor gait abnormalities in patients with PD: EMG data helped distinguish between normal and “Parkinsonian” gait and quantify response to therapy over time12. Similar studies were conducted to assess other symptoms of PD. In 1979, Milner-Brown et al. reported that needle-based hand EMG detected abnormal motor unit properties during muscle contraction that could be used to track the progression of bradykinesia13. Of note, these EMG-based techniques were not meant for making initial PD diagnoses; rather, they were used to track the progression of already established disease.
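To make the idea of an agonist-antagonist phase shift concrete, the following is a minimal Python sketch, not Bathien et al.'s procedure. It assumes two synthetic EMG channels sampled at 1 kHz, a known tremor frequency of 5 Hz, and a 50 ms moving-average envelope; the helper names `emg_envelope` and `burst_phase_deg` are hypothetical. Bursts that alternate between the two muscles come out near 180°, whereas co-contracting bursts come out near 0°.

```python
import numpy as np

def emg_envelope(emg, fs, win_s=0.05):
    """Full-wave rectify the EMG and smooth it with a moving average (window in seconds)."""
    rectified = np.abs(emg - np.mean(emg))
    n = max(1, int(win_s * fs))
    return np.convolve(rectified, np.ones(n) / n, mode="same")

def burst_phase_deg(env_a, env_b, fs, tremor_hz):
    """Phase (0-360 degrees) by which env_b lags env_a, taken from the
    cross-correlation peak within one tremor period."""
    a = env_a - env_a.mean()
    b = env_b - env_b.mean()
    xcorr = np.correlate(b, a, mode="full")       # xcorr at lag k = sum_n b[n + k] * a[n]
    lags = np.arange(-len(a) + 1, len(b))
    period = int(round(fs / tremor_hz))
    in_period = (lags >= 0) & (lags < period)      # search one period to avoid ambiguity
    best_lag = lags[in_period][np.argmax(xcorr[in_period])]
    return 360.0 * best_lag / period

# Synthetic example (assumed values): 5 Hz tremor, 4 s sampled at 1 kHz
fs, tremor_hz = 1000, 5.0
t = np.arange(0, 4, 1 / fs)
rng = np.random.default_rng(0)
drive = np.sin(2 * np.pi * tremor_hz * t)
agonist = (1 + drive) * rng.standard_normal(t.size)
antagonist_alt = (1 - drive) * rng.standard_normal(t.size)   # alternating bursts
antagonist_sync = (1 + drive) * rng.standard_normal(t.size)  # co-contracting bursts

env_ag = emg_envelope(agonist, fs)
phase_alt = burst_phase_deg(env_ag, emg_envelope(antagonist_alt, fs), fs, tremor_hz)
phase_sync = burst_phase_deg(env_ag, emg_envelope(antagonist_sync, fs), fs, tremor_hz)
print(f"alternating: {phase_alt:.0f} deg, synchronous: {phase_sync:.0f} deg")
```

Real recordings would also need band-pass filtering and an estimate of the tremor frequency from the data rather than a fixed value.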

Starting in the latter half of the 1980s, researchers began moving past EMG toward less invasive methods. The technologies developed during this era also attempted to diagnose PD in addition to monitoring/quantifying symptoms. The initial techniques varied widely. Some groups tested potentiometer-based systems that could monitor multiple symptoms at once14, allowing for “one stop” assessments of, for example, how patients were responding to pharmacologic therapy. Laser-based technologies were also popular. Beuter et al. developed a laser system that could measure hand movements to distinguish between healthy controls and patients with PD15, while Weller et al. developed a system to track how gait abnormalities changed in response to various medications16. Though these technologies were less invasive and more portable than EMG, their use was often limited to special laboratory environments (e.g., areas with laser-safety equipment) and required significant expertise to operate17.

Accelerometers and gyroscopes addressed both of these concerns, establishing them as two of the main technologies that defined the next era of PD monitoring. Their use enabled increased data collection, thereby improving the granularity with which researchers were able to monitor and assess patients with PD. Early studies with accelerometers and gyroscopes collected single-axis, in-lab data and aimed to differentiate between PD and other conditions. Deuschl et al. used a monoaxial accelerometer to demonstrate that time series analysis alone was sufficient to differentiate between PD and essential tremor18. Tri-axial accelerometers and gyroscopes improved classification accuracy and allowed for more robust in-lab measurements. The tri-axial accelerometers employed by Spyers-Ashby et al. in 1999 led to greater than 60% classification accuracy among control, essential tremor, multiple sclerosis, and PD groups19. Additionally, Rajaraman et al. demonstrated that placing more tri-axial accelerometers on various parts of the hand, forearm, and arm allowed tremor to be quantified despite altered hand positions and orientations20. Seminal studies by the van Hilten group also demonstrated that tri-axial accelerometry was beneficial in identifying and characterizing tremor, bradykinesia, and dyskinesia21,22,23,24.

The use of wearable accelerometers and gyroscopes extended to quantifying other PD symptoms. Data from tri-axial accelerometers and gyroscopes on various parts of the body (e.g., wrists, index finger, back) allowed models to estimate UPDRS scores and determine bradykinesia severity under both scripted and unscripted conditions25,26,27,28. Salarian et al. investigated using tri-axial accelerometers and gyroscopes along with inertial sensors to track postural instability and gait difficulty (the PIGD subscore of UPDRS III) during gait-assessment turning trials, reporting that patients with PD had significantly longer turning durations and longer delays before initiating a turn29. Similar findings were reported by Moore et al., who showed that freezing-of-gait (FoG) identification based on the frequency characteristics of lower-extremity motion correlated strongly (intraclass correlation >0.7) with clinical assessments by specialists30. Many other groups have also reported using accelerometers to identify FoG31,32,33,34. Multiple studies of dyskinesia severity used tri-axial accelerometers, gyroscopes, and/or magnetometers on various body parts (e.g., shoulder, wrist, ankle, waist) and found strong correlations between the magnitude of dyskinesia measured by the devices and that observed by clinicians35,36,37.
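Moore et al.'s frequency-based FoG detection is commonly summarized as a “freeze index”: the ratio of lower-limb acceleration power in a freeze band (about 3–8 Hz) to power in a locomotor band (about 0.5–3 Hz). The sketch below computes that ratio over sliding windows. The 4 s window, 0.5 s step, band edges, and synthetic signal are assumptions for illustration; a real detector would also need a threshold calibrated against clinician-labeled episodes.

```python
import numpy as np

def band_power(x, fs, lo, hi):
    """Power of x in [lo, hi) Hz from a Hann-windowed FFT periodogram."""
    x = x - np.mean(x)
    spectrum = np.abs(np.fft.rfft(x * np.hanning(x.size))) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1 / fs)
    return spectrum[(freqs >= lo) & (freqs < hi)].sum()

def freeze_index(acc_vertical, fs, win_s=4.0, step_s=0.5,
                 freeze_band=(3.0, 8.0), locomotor_band=(0.5, 3.0)):
    """Sliding-window ratio of freeze-band power to locomotor-band power."""
    win, step = int(win_s * fs), int(step_s * fs)
    values = []
    for start in range(0, len(acc_vertical) - win + 1, step):
        seg = acc_vertical[start:start + win]
        values.append(band_power(seg, fs, *freeze_band) / band_power(seg, fs, *locomotor_band))
    return np.array(values)

# Synthetic shank acceleration (assumed): 20 s of walking, then 20 s of trembling in place
fs = 100
t = np.arange(0, 20, 1 / fs)
rng = np.random.default_rng(0)
walking = np.sin(2 * np.pi * 1.8 * t)                        # ~1.8 Hz stepping rhythm
freezing = 0.8 * np.sin(2 * np.pi * 6.0 * t) + 0.1 * np.sin(2 * np.pi * 1.0 * t)
signal = np.concatenate([walking, freezing]) + 0.05 * rng.standard_normal(2 * t.size)

fi = freeze_index(signal, fs)
print(f"first window FI = {fi[0]:.3f}, last window FI = {fi[-1]:.1f}")
```

Windows during normal stepping give a freeze index well below 1, while windows dominated by high-frequency trembling give values well above 1.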

At the same time, in-lab methodologies were being developed specifically to quantify and better understand certain manifestations of PD. Unique to bradykinesia was the use of computer game-based technologies. In 1999, Giovannoni et al. introduced the BRAIN TEST as a computer-based way to monitor the progression of bradykinesia in PD. By requiring participants to use their index fingers to alternately strike the “S” and “;” keys on a standard computer keyboard, the BRAIN TEST provided a rapid and objective measurement of upper-limb motor function38. Allen et al. built upon Giovannoni’s work and developed a computer test based on a joystick and a toy steering wheel that was able to discriminate pathologic bradykinesia of varying severity39. Espay et al. studied the effect of virtual reality (VR) and audio-based gait feedback in identifying and correcting gait abnormalities in PD patients as they walked on an in-lab four-meter GAITRite electronic walkway. Overall, nearly 70% of patients improved by at least 20% in walking velocity, stride length, or both40. Bachlin et al. developed a similar correction-focused platform that detected FoG in patients with PD and provided audio cues to resume walking. The system detected FoG events in real time with a sensitivity of 73% and a specificity of 82%41. Visually cued FoG correction platforms have also been developed using technologies such as Google Glass42. Finally, Rao et al. reported a video-based facial tracking algorithm that assessed the severity of face and neck dyskinesia during a speech task. The calculated severity scores correlated highly with dyskinesia ratings by neurologists43.

Leveraging the data and analyses from in-lab studies, researchers began to develop methodologies not just for monitoring but also for diagnosing PD outside of the lab. Initial studies in this area included work by van Hilten et al., in which patients wore small accelerometers over the course of six days and completed quality of life surveys, enabling the first objective measures of dyskinesia44. Tremor analyses increasingly incorporated wearable accelerometers, enabling accurate classification of patients with PD, patients with essential tremor, and controls while beginning to step outside the boundaries of the lab45,46. Tsipouras et al. demonstrated that multiple wearable accelerometers and gyroscopes allowed for effective monitoring of patients performing activities of daily living under realistic but simulated conditions47. Finally, using accelerometers embedded in a pen along with other sensors (e.g., a touch-recording plate), Papapetropoulos et al. showed that multiple small sensors could discriminate between types of pathological tremor48.

Over the past decade, monitoring of PD symptoms has undergone two thematic changes. First, monitoring has become more remote and accessible owing to the ease of use and widespread availability of wearable accelerometers/gyroscopes and of smartphones with these sensors built in. Second, monitoring has become more continuous through the use of web and mobile applications. Together, these changes are making way for more smart technology-mediated assessment of PD, with platforms for diagnosis currently in development (Table 2).

Wearable sensors are making way for more remote assessment of PD symptoms. Yang et al. found that a single small tri-axial accelerometer attached to the belt buckle enabled real-time estimation of multiple gait parameters, such as cadence, step regularity, stride regularity, and step symmetry, allowing for immediate quantification of gait49. Klucken et al. also reported the use of a small, heel-clipped device that achieved a classification accuracy of 81% in differentiating between PD patients and healthy controls50. More recently, insole sensors enabled detection of PD-related FoG episodes with 90% accuracy51, and wrist-worn accelerometers achieved “good to strong” agreement with clinical ratings of resting tremor and bradykinesia, in addition to discriminating between treatment-induced changes in motor symptoms52. Though some of these studies were conducted in laboratory settings, the collective results indicate that patients could wear similar devices at home, enabling remote mobility assessment. Studies specifically assessing the ability of wearable technologies to track motor symptoms in at-home settings have reported high compliance and clinical utility26,53,54,55,56,57.
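Cadence, step regularity, stride regularity, and step symmetry are often derived from the autocorrelation of trunk acceleration: the first dominant peak (one step later) gives step regularity, the second (one stride later) gives stride regularity, and their ratio is one common definition of step symmetry. The sketch below follows that general autocorrelation approach; it is not necessarily Yang et al.'s exact pipeline, and the search windows, sampling rate, and synthetic signal are assumptions.

```python
import numpy as np

def unbiased_autocorr(x):
    """Autocorrelation normalized to 1 at lag 0, corrected for the shrinking overlap at longer lags."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    n = x.size
    ac = np.correlate(x, x, mode="full")[n - 1:]   # lags 0 .. n-1
    ac = ac / (n - np.arange(n))                   # divide by number of overlapping samples
    return ac / ac[0]

def gait_regularity(acc_vertical, fs, step_range_s=(0.3, 0.8)):
    ac = unbiased_autocorr(acc_vertical)
    lo, hi = int(step_range_s[0] * fs), int(step_range_s[1] * fs)
    step_lag = lo + int(np.argmax(ac[lo:hi + 1]))           # first dominant peak: one step
    s_lo, s_hi = int(1.5 * step_lag), int(2.5 * step_lag)
    stride_lag = s_lo + int(np.argmax(ac[s_lo:s_hi + 1]))   # second dominant peak: one stride
    step_reg, stride_reg = ac[step_lag], ac[stride_lag]
    return {
        "cadence_steps_per_min": 60.0 * fs / step_lag,
        "step_regularity": step_reg,
        "stride_regularity": stride_reg,
        "step_symmetry": step_reg / stride_reg,
    }

# Synthetic belt-level vertical acceleration (assumed): 2 steps/s with a slight left/right difference
fs = 100
t = np.arange(0, 20, 1 / fs)
rng = np.random.default_rng(0)
acc = np.sin(2 * np.pi * 2.0 * t) + 0.3 * np.sin(2 * np.pi * 1.0 * t) + 0.1 * rng.standard_normal(t.size)
res = gait_regularity(acc, fs)
print(res)
```

The 1 Hz component makes alternate steps differ, so step regularity falls below stride regularity and the symmetry ratio drops below 1.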

In 2011, Chen et al. introduced MercuryLive, a web-based system that integrated data from wearable sensors and qualitative patient surveys for real-time, in-home monitoring of symptoms. Specifically, the system was used to guide potential changes in medications for patients with later-stage disease58. The advantage of such systems over sensor-only platforms is the ability to more seamlessly collect qualitative patient data, allowing clinicians and researchers to better contextualize quantitative sensor data. Other web application-based systems, like the PERFORM system presented by Cancela et al. in 2013, continued deploying wearable accelerometers and gyroscopes, but expanded the functionalities of the associated web application to include medication adherence questionnaires, food diaries, and the PDQ-39 questionnaire59, further expanding the qualitative information that supplements the objective data collected by wearable devices.

In-home monitoring became even more practical following the adoption of smartphones and other smart devices60,61. In 2011, Kostikis et al. showed the feasibility of remote tremor monitoring using an Apple iPhone 3G’s built-in accelerometer and gyroscope62. In 2020, van Brummelen et al. tested seven consumer-product accelerometers in smartphones (e.g., iPhone 7) and other consumer smart devices (e.g., Huawei watch) and found that these products performed comparably to laboratory-grade accelerometers when assessing the severity of certain PD symptoms63. Smart tablets have also proven helpful through spiral drawing tests whose results correlated significantly with UPDRS scores and with the results of other tests, including the BRAIN TEST64.

Smart devices spread further with the advent of user-friendly mobile applications such as the Fox Wearable Companion app developed by the Michael J. Fox Foundation. Silva de Lima et al. showed that using the app along with an Android smartphone and a Pebble smartwatch resulted in high patient engagement and robust quantitative and qualitative data collection for clinicians to monitor PD progression and medication adherence65. Prince et al. reported success using an independently designed iOS application66. Using smartwatches in conjunction with such mobile applications also allows for cloud-based data storage, enabling research and clinical teams to monitor symptom progression and severity more effectively in real time67. In 2021, Powers et al. developed the “Motor fluctuations Monitor for Parkinson’s Disease” (MM4PD) system, which used continuous monitoring from an Apple Watch to quantify resting tremor and dyskinesia. MM4PD correlated strongly with evaluations of tremor severity, aligned with expert ratings of dyskinesia, and matched clinician expectations of patients 94% of the time2. Multiple other groups, including Keijsers et al., have presented solutions that can assess motor fluctuations in real or simulated home settings using either wearable sensors68,69,70,71 or smart devices72,73. These types of solutions are particularly important for PD monitoring, since assessing symptom fluctuations can give clinicians insight into medication dosing, disease severity, and even symptom triggers (e.g., a patient has worse tremor when driving than when washing dishes). Monitoring fluctuations with smart devices can be particularly useful, as the device can document what a patient was doing when symptoms worsened and at what time of day, among other important environmental factors, giving clinicians a more holistic picture of a patient’s disease. Data collected from fluctuation monitoring could also inform whether certain patients might be candidates for procedures such as deep brain stimulation.

Finally, multiple studies have proposed using technologies other than accelerometers and gyroscopes (either stand-alone or in smartphones). For example, some studies used computer vision-based algorithms to assess data from video cameras, time-of-flight sensors, and other motion devices74,75,76. In the future, similar video analysis technologies could be combined with existing video platforms (e.g., Zoom, FaceTime) to regularly and reliably monitor motor impairments outside of the clinic. Significant work has also assessed the feasibility of using voice recordings to monitor and even diagnose PD. Arora et al. analyzed at-home voice recordings to estimate patients’ UPDRS scores and to differentiate between patients with PD and healthy controls with a sensitivity of 96% and a specificity of 97%77. Similar work on voice data from smartphones has been reported by many others78,79,80, indicating that voice analysis might be beneficial when developing technologies for monitoring and diagnosing PD.

Computational approaches

Non-ML techniques to evaluate PD symptoms have evolved considerably over the last 30 years (Fig. 1B). Before the adoption of machine learning algorithms, researchers used more traditional statistical and frequency domain analysis techniques. This likely occurred for two main reasons: (1) the computing power required for ML was not widely available, and (2) the datasets collected in early studies were relatively small and less noisy. Additionally, certain key machine learning techniques (e.g., backpropagation applied to neural networks) were not popularized until the late 1980s and early 1990s, with more widespread adoption occurring many years later with the advent of machine learning software libraries81,82. One of the first studies was conducted in 1973, when Albers et al. showed that the power spectra of Parkinsonian hand tremor were easily distinguished from those of control subjects83 (Table 3). Statistical testing of frequency power spectra also showed a significant correlation between selected features, such as the total power of the spectrum, and clinical ratings of dyskinesia severity84. Edwards et al. showed that combining multiple tremor characteristics (e.g., amplitude, dominant frequency) into a single index could also differentiate PD from non-PD movement85. Further development of computational techniques included applying more advanced regression models to data collected through different modalities (e.g., accelerometers, mechanical devices)86,87.
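Frequency domain analyses like those of Albers et al. and Edwards et al. reduce a movement recording to a few spectral features. The sketch below estimates a power spectral density with Welch's method (`scipy.signal.welch`) and extracts the dominant frequency, total power, and the fraction of power in a tremor band. The 3–7 Hz band, 4 s segments, and synthetic signals are assumptions; it does not reproduce any specific study's index.

```python
import numpy as np
from scipy.signal import welch

def tremor_spectral_features(acc, fs, band=(3.0, 7.0)):
    """Dominant frequency, total power, and tremor-band power fraction from a Welch PSD."""
    acc = np.asarray(acc, dtype=float)
    freqs, psd = welch(acc - acc.mean(), fs=fs, nperseg=min(acc.size, int(4 * fs)))
    df = freqs[1] - freqs[0]
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    total_power = psd.sum() * df
    return {
        "dominant_freq_hz": float(freqs[np.argmax(psd)]),
        "total_power": float(total_power),
        "band_power_fraction": float(psd[in_band].sum() * df / total_power),
    }

# Synthetic 30 s recordings at 100 Hz (assumed)
fs = 100
t = np.arange(0, 30, 1 / fs)
rng = np.random.default_rng(0)
tremor = 0.5 * np.sin(2 * np.pi * 5.0 * t) + 0.05 * rng.standard_normal(t.size)  # 5 Hz rest-tremor-like
control = 0.05 * rng.standard_normal(t.size)                                        # broadband noise only

f_tr = tremor_spectral_features(tremor, fs)
f_ct = tremor_spectral_features(control, fs)
print(f_tr)
print(f_ct)
```

Features such as these can then be tested statistically or combined into a single index, in the spirit of the studies above.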

Many studies also found success through standard statistical hypothesis testing, such as t-tests and ANOVAs. Blin et al. used an in-lab potentiometer-linked string-and-pulley system to collect data on stride length. Using a Mann-Whitney U test and linear regression, they found that stride length variability was significantly more marked in PD patients and increased with Hoehn and Yahr clinical stage88. ANOVA conducted on finger tapping data (e.g., RMS angular velocity, RMS angular displacement) showed significant differences between PD and control subjects89.
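The kind of group comparison Blin et al. reported can be sketched with a stride-length coefficient of variation and a two-sided Mann-Whitney U test (`scipy.stats.mannwhitneyu`). The subject counts, stride distributions, and the helper `stride_cv` are hypothetical; the point is the workflow of summarizing each subject's variability and then comparing groups with a rank-based test that does not assume normality.

```python
import numpy as np
from scipy.stats import mannwhitneyu

def stride_cv(stride_lengths_m):
    """Coefficient of variation of one subject's stride lengths (SD / mean)."""
    s = np.asarray(stride_lengths_m, dtype=float)
    return s.std(ddof=1) / s.mean()

rng = np.random.default_rng(0)
# Hypothetical data: 50 strides per subject, 6 subjects per group
pd_cv = [stride_cv(rng.normal(1.00, 0.08, 50)) for _ in range(6)]
control_cv = [stride_cv(rng.normal(1.30, 0.03, 50)) for _ in range(6)]

result = mannwhitneyu(pd_cv, control_cv, alternative="two-sided")
print(f"median CV: PD {np.median(pd_cv):.3f}, control {np.median(control_cv):.3f}; p = {result.pvalue:.4f}")
```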

To gain insight into gait abnormalities, researchers incorporated kinematic analyses into their studies. Using ANOVA on kinematic measurements of gait, Lewis et al. found that patients with PD displayed lower gait velocity and stride length but comparable cadence relative to healthy controls, along with reduced peak joint angles in the sagittal plane and reduced ankle plantarflexion at toe-off90. These gait and kinematic characteristics were corroborated by a spatiotemporal analysis conducted by Sofuwa et al., who showed that patients with PD had significantly reduced step length and walking velocity compared to controls, with the major feature defining the PD group being a reduction in ankle plantarflexion91. More recently, Nair et al. used standard logistic regression on centroids from k-means clustering of data from tri-axial accelerometers to classify PD and control subjects with an accuracy of ~95%, specificity of ~96%, and sensitivity of ~89%92.
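The source does not detail how Nair et al. turned k-means centroids into regression inputs, so the sketch below makes one assumed choice: cluster all accelerometer windows with k-means, describe each subject by the fraction of their windows falling in each cluster, and fit a logistic regression on those fractions. The window features, cluster count, helper names, and simulated subjects are hypothetical.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

rng = np.random.default_rng(0)

def simulate_subject(is_pd, n_windows=40):
    """Hypothetical per-window features (e.g. RMS acceleration, dominant frequency)."""
    center = np.array([0.8, 5.0]) if is_pd else np.array([1.5, 2.0])
    return center + rng.normal(scale=0.5, size=(n_windows, 2))

labels = np.array([1] * 20 + [0] * 20)          # 1 = PD, 0 = control
subjects = [simulate_subject(y) for y in labels]

# 1) Cluster all windows from all subjects (no labels used here)
kmeans = KMeans(n_clusters=4, n_init=10, random_state=0).fit(np.vstack(subjects))

# 2) Describe each subject by the fraction of their windows in each cluster
def occupancy(windows):
    counts = np.bincount(kmeans.predict(windows), minlength=kmeans.n_clusters)
    return counts / counts.sum()

X = np.array([occupancy(w) for w in subjects])

# 3) Logistic regression on the cluster-derived features
scores = cross_val_score(LogisticRegression(), X, labels, cv=5)
print(f"mean CV accuracy: {scores.mean():.2f}")
```

Because k-means is fit before cross-validation, its label-free clusters see every subject; a stricter evaluation would refit the clustering inside each training fold.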

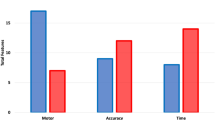

In more recent literature, machine learning techniques have proven highly effective in identifying PD symptom characteristics, especially when applied to varied datasets obtained using smart devices (Fig. 1B). The literature demonstrates strong performance across multiple machine learning techniques: both neural network and non-neural network algorithms achieved high sensitivities and specificities in classifying PD symptoms using both raw and processed data (Table 4).
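Since Table 4 and the studies below report accuracy, sensitivity, specificity, precision, and F1-score, a small helper makes the definitions explicit. It also illustrates a useful check when reading results: accuracy is the prevalence-weighted average of sensitivity and specificity, so it must fall between them. The function name and toy labels are illustrative.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Confusion-matrix metrics for a binary classifier (e.g. PD = 1, control = 0)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)

    def ratio(num, den):
        return num / den if den else float("nan")

    sensitivity = ratio(tp, tp + fn)        # recall / true positive rate
    specificity = ratio(tn, tn + fp)        # true negative rate
    precision = ratio(tp, tp + fp)          # positive predictive value
    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": ratio(2 * precision * sensitivity, precision + sensitivity),
    }

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
m = binary_metrics(y_true, y_pred)
print(m)
```

With 4 positives and 6 negatives, the accuracy of 0.8 equals (4 × 0.75 + 6 × 5/6) / 10.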

Significant research is still being conducted on optimizing and refining most of the ML algorithms discussed here, as many aspects of ML design still rely on trial and error. This applies both to determining model parameters (e.g., learning rates for gradient descent, impurity levels in decision trees) and to selecting the algorithms themselves (e.g., neural network versus decision tree)93,94,95,96. In practice, multiple different models could be effective in performing the same task on a given set of data97,98. Here, we present objective measures of ML model performance while also attempting to explain the design criteria that may have led researchers to choose one algorithm over another.
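Because several models may fit the same data comparably well, a common way to choose among them is to score each candidate on identical cross-validation folds. The sketch uses scikit-learn, with a synthetic dataset from `make_classification` standing in for extracted sensor features; the candidate list and settings are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a feature table (rows = recordings, columns = extracted features)
X, y = make_classification(n_samples=200, n_features=12, n_informative=5, random_state=0)

candidates = {
    "logistic regression": make_pipeline(StandardScaler(), LogisticRegression()),
    "SVM (RBF)": make_pipeline(StandardScaler(), SVC()),
    "KNN (k=5)": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "decision tree": DecisionTreeClassifier(max_depth=4, random_state=0),
    "naive Bayes": GaussianNB(),
}

# Same shuffled folds for every model, so the comparison is paired
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
results = {name: cross_val_score(model, X, y, cv=cv).mean() for name, model in candidates.items()}

for name, acc in sorted(results.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:>20s}: {acc:.3f}")
```

On small datasets, differences of a few points between fold-averaged scores are often within noise, which is part of why model choice remains partly trial and error.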

Non-neural network machine learning algorithms have proven effective in Parkinson’s disease classification, as they often provide more mechanistic insight/interpretability and generally require less training data compared to neural networks. Multiple studies have found that decision trees are highly effective in classifying Parkinson’s versus control patients based on accelerometer and gyroscope data. Using data from a Microsoft Band smartwatch, Rigas et al. used decision trees to achieve a tremor detection accuracy of 94% with a 0.01% false positive rate99. Aich et al. showed that a decision tree trained on gait characteristics such as step time and length, stride time and length, and walking speed distinguished Parkinson’s patients from healthy controls with an accuracy of ~88%, sensitivity of ~93%, and specificity of ~91%, outperforming k nearest neighbor (KNN), support vector machine (SVM), and Naïve-Bayes100. The design choices in these studies were conducive to using decision trees, as there were multiple quantitative variables (e.g., stride length) with specific cut-offs (e.g., stride length <1.2 m) that informed certain diagnoses. Decision trees also enabled researchers to quantitatively determine which feature(s) (e.g., tremor frequency) from the data were most important in determining final classifications, thus improving the link between data analysis and understanding of disease.
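The interpretability argument can be illustrated with a shallow decision tree whose learned thresholds and feature importances can be read directly. The gait features and group distributions below are hypothetical, with stride length constructed to separate the groups near the 1.2 m example cutoff mentioned above.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, export_text

rng = np.random.default_rng(1)
n = 100  # hypothetical subjects per group
y = np.r_[np.ones(n, dtype=int), np.zeros(n, dtype=int)]       # 1 = PD, 0 = control
stride_length_m = np.where(y == 1, rng.normal(1.05, 0.10, 2 * n), rng.normal(1.35, 0.08, 2 * n))
step_time_s = rng.normal(0.55, 0.05, 2 * n)                     # uninformative in this toy data
cadence_steps_min = rng.normal(110, 8, 2 * n)                    # uninformative in this toy data
X = np.column_stack([stride_length_m, step_time_s, cadence_steps_min])
names = ["stride_length_m", "step_time_s", "cadence_steps_min"]

tree = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X, y)
print(export_text(tree, feature_names=names))
for name, importance in zip(names, tree.feature_importances_):
    print(f"{name:>18s}: {importance:.2f}")
```

The printed rules expose the learned stride-length threshold at the root, and the importances show which feature drove the classification.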

While decision trees can be effective, they can also overfit training data, limiting their generalizability. Many groups have therefore found success using bagged decision trees, a technique that trains multiple trees on bootstrap samples of the training data and then aggregates their predictions. Bagged decision trees can be particularly useful for mitigating the overfitting that can result from analyzing relatively small datasets. Kostikis et al. used data from 25 patients with PD and 20 healthy controls and found that bagged decision trees on tremor features resulted in an AUC of 0.94, higher than any other algorithm they tested (e.g., logistic regression, SVM, AdaBoost)101. In a study with 20 patients with PD, bagged trees achieved 95% to 98% accuracy in classifying patients according to MDS-UPDRS scores of 0, 1, and 2 when using tremor data from motion sensors rather than accelerometers or gyroscopes102.
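The sketch below contrasts a single fully grown decision tree with bagged trees on a small, hypothetical cohort sized like the 25-patient/20-control study above. It uses scikit-learn's `BaggingClassifier` with 100 bootstrap-trained trees and reports cross-validated AUC; the features and effect sizes are simulated assumptions. A single unpruned tree outputs essentially 0/1 probabilities, while averaging many trees yields graded scores, which is one reason bagging tends to help AUC.

```python
import numpy as np
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
n_pd, n_control = 25, 20
y = np.r_[np.ones(n_pd, dtype=int), np.zeros(n_control, dtype=int)]
X = rng.normal(size=(y.size, 6))      # six hypothetical tremor features
X[y == 1, :2] += 1.5                  # only the first two differ between groups

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
single_tree = DecisionTreeClassifier(random_state=0)
# Passed positionally: the keyword is `estimator` in recent scikit-learn, `base_estimator` in older releases
bagged = BaggingClassifier(DecisionTreeClassifier(random_state=0), n_estimators=100, random_state=0)

auc_tree = cross_val_score(single_tree, X, y, cv=cv, scoring="roc_auc").mean()
auc_bagged = cross_val_score(bagged, X, y, cv=cv, scoring="roc_auc").mean()
print(f"single tree AUC: {auc_tree:.2f}, bagged trees AUC: {auc_bagged:.2f}")
```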

Results continued to be strong with random forests (RF), a variant of bagged decision trees that can improve accuracy and further reduce overfitting, at the cost of longer training times than a single decision tree. RF performed better than logistic regression on features from gait analysis, sway tests, and timed up-and-go tasks when classifying between progressive supranuclear palsy and PD, and was also useful in estimating clinical scores of dyskinesia103. At the same time, researchers have found success with another variation of decision trees known as boosted trees, with gradient tree boosting outperforming a long short-term memory neural network when estimating UPDRS-III scores based on motion sensor data from the wrist and ankle104.
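Estimating a continuous clinical score, as in the UPDRS-III work above, is a regression problem. The sketch compares gradient tree boosting and a random forest regressor against a predict-the-mean baseline using cross-validated mean absolute error. The wrist/ankle feature descriptions and the score-generating formula are invented for illustration and do not reflect real UPDRS-III relationships.

```python
import numpy as np
from sklearn.dummy import DummyRegressor
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.model_selection import KFold, cross_val_score

rng = np.random.default_rng(0)
n = 300
# Hypothetical per-recording features: wrist tremor power, ankle step variability, wrist tap speed
X = rng.normal(size=(n, 3))
# Hypothetical UPDRS-III-like target with a non-linear dependence plus noise
score = 25 + 8 * X[:, 0] + 4 * X[:, 1] ** 2 - 3 * X[:, 2] + rng.normal(scale=2.0, size=n)

cv = KFold(n_splits=5, shuffle=True, random_state=0)
models = {
    "mean baseline": DummyRegressor(strategy="mean"),
    "random forest": RandomForestRegressor(n_estimators=200, random_state=0),
    "gradient boosting": GradientBoostingRegressor(random_state=0),
}
# scikit-learn maximizes scores, so MAE is returned negated; flip the sign back
mae = {name: -cross_val_score(m, X, score, cv=cv, scoring="neg_mean_absolute_error").mean()
       for name, m in models.items()}
for name, value in mae.items():
    print(f"{name:>17s}: MAE = {value:.2f} points")
```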

To further improve algorithm efficiency and reduce computational cost, researchers have combined feature selection techniques with established machine learning algorithms. Feature selection is particularly important in studies that evaluate multiple ML algorithms to identify the top performers or that train algorithms on different datasets105,106. Feature selection is also commonly used to improve algorithm performance. When used in conjunction with feature selection techniques such as recursive feature elimination, RF achieved a classification accuracy of 96% when grading the gait abnormalities of PD patients on and off medication71. Another, SVM-based feature selection technique helped RF achieve high performance when classifying PD vs non-PD patients, with an accuracy of 97%, sensitivity of 100%, and specificity of 94%. In general, many different feature selection techniques have been shown to be useful with multiple ML algorithms32,106,107,108. SVMs have been shown to perform well both with and without feature selection before model training32,106.
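Recursive feature elimination repeatedly fits a model, drops the least important features, and refits. The sketch below wraps scikit-learn's `RFE` around a random forest inside a `Pipeline`, so selection is redone within each training fold rather than on the full dataset, which would leak information into the accuracy estimate. The synthetic data (4 informative features out of 20, placed first because `shuffle=False`) and all settings are assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import Pipeline

# 20 synthetic features; with shuffle=False the 4 informative ones are columns 0-3
X, y = make_classification(n_samples=300, n_features=20, n_informative=4, n_redundant=0,
                           shuffle=False, random_state=0)

pipe = Pipeline([
    ("select", RFE(RandomForestClassifier(n_estimators=50, random_state=0),
                   n_features_to_select=4, step=2)),
    ("model", RandomForestClassifier(n_estimators=100, random_state=0)),
])

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
acc = cross_val_score(pipe, X, y, cv=cv).mean()

pipe.fit(X, y)  # refit on all data only to inspect which features survive
selected = np.flatnonzero(pipe.named_steps["select"].support_)
print(f"CV accuracy with RFE: {acc:.2f}; selected feature indices: {selected.tolist()}")
```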

Feature analysis, however, does not stop with feature selection. Post-hoc feature importance calculations can help explain why specific models work the way they do, providing more insight into the clinical applications of the model. Rehman et al. built multiple partial least squares discriminant analysis models using subsets of gait features measured in patients with PD and healthy controls, and used feature importance metrics to identify step velocity, step length, and gait regularity as among the most influential features in the model. This type of analysis is particularly beneficial because it can improve clinical decision-making independently of the machine learning models themselves, by providing clinicians with more nuanced signs and symptoms of early disease manifestation or disease progression109. Mirelman et al. conducted similar analyses of gait abnormalities, stratifying patients by PD progression and finding that different features were more important in differentiating between various stages of PD110. For example, as PD progressed, features related to more challenging activities such as turning became more important for patient classification, and this increase in importance occurred at earlier stages of disease than one would normally expect. Similar analyses were reported by additional groups investigating gait and other symptoms of PD104,111,112.
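Rehman et al. used feature importance metrics from PLS-DA models; the sketch below substitutes a different, model-agnostic technique, permutation importance (`sklearn.inspection.permutation_importance`), applied to a logistic regression. Each feature's importance is the drop in held-out accuracy when that feature's values are shuffled. The gait feature names and effect sizes are hypothetical.

```python
import numpy as np
from sklearn.inspection import permutation_importance
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
names = ["step_velocity", "step_length", "gait_regularity", "unrelated_feature"]
shifts = np.array([1.5, 1.2, 0.6, 0.0])            # hypothetical group differences, in SD units
n = 400
y = rng.integers(0, 2, n)                          # 1 = PD, 0 = control
X = rng.normal(size=(n, 4)) - np.outer(y, shifts)  # PD group shifted lower on informative features

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0, stratify=y)
model = make_pipeline(StandardScaler(), LogisticRegression()).fit(X_train, y_train)

# Importance = mean drop in held-out accuracy when a feature column is shuffled
result = permutation_importance(model, X_test, y_test, n_repeats=20, random_state=0)
for i in np.argsort(result.importances_mean)[::-1]:
    print(f"{names[i]:>18s}: {result.importances_mean[i]:.3f} +/- {result.importances_std[i]:.3f}")
```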

While the choice of ML algorithm can be partially informed by the type of data, the size of the study, and similar factors, some papers have shown that the accuracy of a machine learning model depends on the type of tremor being evaluated, further highlighting the trial-and-error nature of ML study design. Jeon et al. found that decision trees were most accurate when classifying patients based on resting tremor with mental stress and on intention tremor, whereas resting tremor classification alone was most accurate with a polynomial SVM and postural tremor classification was most accurate with KNN113. In the same vein, multiple groups have found that KNNs using time and frequency domain tremor data are highly effective in Parkinson’s versus control classification80,112,114. Finally, Butt et al. and Bazgir et al. both found in 2018 that Naïve Bayes outperformed other tested algorithms when classifying Parkinson’s tremor using motion and accelerometer/gyroscope data, respectively115,116.
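KNN classifies a recording by the labels of its nearest neighbors in feature space, so features on very different numeric scales can dominate the distance. The sketch below, with hypothetical tremor features, compares KNN with and without standardization: an informative but small-valued RMS amplitude versus a large-valued feature that carries no group information in this toy data.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 200
y = rng.integers(0, 2, n)                                                  # 1 = PD, 0 = control
rms_amplitude_g = np.where(y == 1, 0.09, 0.03) + rng.normal(0, 0.012, n)   # informative, small scale
total_power = rng.normal(500, 100, n)                                      # uninformative, large scale
X = np.column_stack([rms_amplitude_g, total_power])

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
raw_knn = KNeighborsClassifier(n_neighbors=5)
scaled_knn = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))
acc_raw = cross_val_score(raw_knn, X, y, cv=cv).mean()
acc_scaled = cross_val_score(scaled_knn, X, y, cv=cv).mean()
print(f"KNN accuracy, raw features: {acc_raw:.2f}; standardized: {acc_scaled:.2f}")
```

Without scaling, the large-valued feature decides who the neighbors are and accuracy falls toward chance.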

A few unsupervised learning algorithms have been developed for PD classification. Unsupervised learning can be useful for studies with large datasets that might be too cumbersome to label manually, since labels are a prerequisite for training supervised ML models. Unsupervised learning is also beneficial in exploratory analyses to provide structure and novel insights from large and diverse datasets. Zhan et al. developed a novel “Disease Severity Score Learning” algorithm that calculated a “mobile Parkinson disease score” (mPDS) based on 435 features from gait, finger tapping, and voice tests conducted using smartphones. mPDS scores correlated strongly with MDS-UPDRS part III, MDS-UPDRS total, and Hoehn and Yahr stages. This work represents ongoing efforts to create more objective measurements of Parkinson’s disease progression that are not affected by inter-rater variability72.
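The Disease Severity Score Learning algorithm is not reproduced here. As a simpler, swapped-in illustration of deriving a severity score without labels, the sketch below standardizes a feature table and takes its first principal component (scikit-learn `PCA`) as a composite score, orienting the sign so that higher feature values map to higher scores. The five features, the latent severity used only to check the result, and the assumption that every feature worsens with severity are hypothetical.

```python
import numpy as np
from scipy.stats import rankdata
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

def composite_score(features):
    """First principal component of standardized features, used as an unsupervised score."""
    z = StandardScaler().fit_transform(features)
    score = PCA(n_components=1).fit_transform(z)[:, 0]
    # PCA's sign is arbitrary; orient it so a higher score means higher (worse) feature values
    if np.corrcoef(score, z.mean(axis=1))[0, 1] < 0:
        score = -score
    return score

rng = np.random.default_rng(0)
latent = rng.uniform(0, 1, 200)                  # hypothetical true severity, never shown to the method
loadings = np.array([1.0, 0.8, 1.2, 0.5, 0.9])  # each hypothetical feature rises with severity
features = latent[:, None] * loadings + rng.normal(scale=0.1, size=(200, 5))

score = composite_score(features)
rho = np.corrcoef(rankdata(score), rankdata(latent))[0, 1]   # Spearman correlation via ranks
print(f"Spearman rho vs. latent severity: {rho:.2f}")
```

In practice, such a score would be validated against clinical scales such as MDS-UPDRS rather than a known latent variable.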

Artificial neural networks, which are well suited to large datasets, have recently been applied to PD symptom classification. Neural networks have multiple use cases but are most often applied to large datasets whose features must be combined through complex, non-linear relationships for classification or regression tasks. Consequently, neural networks typically require more training data than other ML algorithms and are more computationally expensive. Although neural networks can be powerful tools, they tend to be more of a “black box”, offering less interpretability than other ML algorithms52,117,118. Even so, neural networks are among the most popular ML algorithms used today and have achieved strong performance in diagnosing and monitoring PD.
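The sketch below shows what "combining features through non-linear relationships" means in practice. A network with one hidden layer is trained by backpropagation on an XOR-style toy problem, where the label depends on an interaction between two inputs that no single linear boundary can capture. It is written in pure Python for transparency. The layer size, learning rate, and epoch count are arbitrary illustrative choices, and the data are not PD measurements.

```python
import math
import random


def train_mlp(X, y, n_hidden=8, lr=0.5, epochs=2000, seed=0):
    """One hidden layer of tanh units and a sigmoid output, trained by full-batch
    gradient descent (backpropagation) on binary cross-entropy."""
    rng = random.Random(seed)
    n_in = len(X[0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j]) for j in range(n_hidden)]
        z = sum(w * hj for w, hj in zip(W2, h)) + b2
        z = max(-50.0, min(50.0, z))  # guard math.exp against overflow
        return h, 1.0 / (1.0 + math.exp(-z))

    def mean_loss():
        eps = 1e-12
        total = 0.0
        for x, t in zip(X, y):
            p = forward(x)[1]
            total -= t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
        return total / len(X)

    history = [mean_loss()]
    m = len(X)
    for _ in range(epochs):
        gW1 = [[0.0] * n_in for _ in range(n_hidden)]
        gb1, gW2, gb2 = [0.0] * n_hidden, [0.0] * n_hidden, 0.0
        for x, t in zip(X, y):
            h, p = forward(x)
            d_out = p - t  # gradient of cross-entropy w.r.t. the pre-sigmoid output
            gb2 += d_out
            for j in range(n_hidden):
                gW2[j] += d_out * h[j]
                d_h = d_out * W2[j] * (1 - h[j] ** 2)  # chain rule through tanh
                gb1[j] += d_h
                for i in range(n_in):
                    gW1[j][i] += d_h * x[i]
        for j in range(n_hidden):
            W2[j] -= lr * gW2[j] / m
            b1[j] -= lr * gb1[j] / m
            for i in range(n_in):
                W1[j][i] -= lr * gW1[j][i] / m
        b2 -= lr * gb2 / m
        history.append(mean_loss())
    return (lambda x: forward(x)[1]), history


# XOR-style toy data: the label depends on a non-linear combination of two inputs.
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
y = [0, 1, 1, 0]
predict, history = train_mlp(X, y)
print(round(history[0], 3), round(history[-1], 3))
print([round(predict(x), 2) for x in X])
```

Even this tiny network has 33 trainable parameters for four data points. This hints at why realistic networks need far more data and computation than simpler models, and why their learned weights are hard to interpret directly.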

Moon et al. used 48 gait and postural sway features collected from six inertial measurement units (IMUs) placed on patients’ backs, upper extremities, and lower extremities to differentiate between PD and essential tremor. After testing multiple machine learning algorithms (e.g., SVM, KNN, neural network, logistic regression), the authors found that a neural network with a learning rate of 0.001 had the highest accuracy (0.89), precision (0.61), and F1-score (0.61)119. Moon et al.’s paper is a good example of the design process often used in machine learning. Multiple algorithms are tested before one is selected, and its hyperparameters (e.g., learning rate, number of hidden layers) are typically also chosen by trial and error120,121. Veeraragavan et al. also used neural networks, but for two different tasks: distinguishing PD patients from healthy controls based on gait, and classifying PD patients into Hoehn and Yahr stages. PD-versus-control classification reached an accuracy of 97% using a network with a single hidden layer of 25 nodes, while Hoehn and Yahr staging reached an accuracy of 87% using a single hidden layer of 13 nodes122. These results suggest that neural networks are promising candidates for disease classification and staging.
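Accuracy, precision, recall, and F1 can diverge sharply, as in Moon et al.'s accuracy of 0.89 alongside a precision of 0.61. The sketch below computes all four from a confusion matrix. The class balance of that study is not given here, so the labels below are hypothetical. They show one common way such a gap arises: the positive class is rare, so correct negatives inflate accuracy.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for one designated positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical imbalanced test set: 10 positives, 90 negatives.
y_true = [1] * 10 + [0] * 90
# 6 true positives, 4 false negatives, 4 false positives, 86 true negatives.
y_pred = [1] * 6 + [0] * 4 + [1] * 4 + [0] * 86
print(classification_metrics(y_true, y_pred))
```

Here accuracy is 0.92, while precision, recall, and F1 are all 0.6. Reporting several metrics, as Moon et al. did, guards against over-reading accuracy alone.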

Early efforts to apply machine learning to PD tremor data used single-hidden-layer perceptron classifiers, with 30 higher-order statistical characteristics of tremor accelerometer data as inputs, to differentiate between Parkinsonian, essential, and physiological tremor123. Such efforts combined sophisticated feature extraction with a relatively simple classifier architecture. Other approaches, such as the dynamic neural network used by Roy et al., aimed to classify tremor as “mild”, “moderate”, or “severe” (based on UPDRS) using spectral data from EMG and accelerometer measurements. Using input features that required minimal pre-processing, such as accelerometer signal energy after low-pass filtering, Roy et al. achieved global classification error rates below 10%124. Others have reported success with neural networks trained on similar features that require little pre-processing125,126. Variations on classical neural networks have also performed well. Oung et al. showed that extreme learning machines achieved 91% classification accuracy when tremor and voice data were used as inputs127. In these networks, the hidden-layer weights are fixed at random and the output weights are computed in closed form rather than learned by backpropagation.
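The defining trick of an extreme learning machine is that only the output layer is fitted, and it is fitted by solving a linear least-squares problem rather than by gradient descent. The sketch below shows this in pure Python. The hidden-layer size, the ridge term, the tanh activation, and the two-cluster data are illustrative assumptions, not details of Oung et al.'s model.

```python
import math
import random


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def elm_fit(X, y, n_hidden=20, ridge=1e-3, seed=0):
    """Extreme learning machine: a random, never-trained hidden layer, with output
    weights found in closed form by ridge-regularized least squares."""
    rng = random.Random(seed)
    n_in = len(X[0])
    W = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b = [rng.gauss(0, 1) for _ in range(n_hidden)]

    def hidden(x):
        return [math.tanh(sum(w * xi for w, xi in zip(W[j], x)) + b[j]) for j in range(n_hidden)]

    H = [hidden(x) for x in X]
    # Normal equations: (H^T H + ridge * I) beta = H^T y
    HtH = [[sum(h[i] * h[j] for h in H) + (ridge if i == j else 0.0) for j in range(n_hidden)]
           for i in range(n_hidden)]
    Hty = [sum(h[i] * t for h, t in zip(H, y)) for i in range(n_hidden)]
    beta = solve_linear(HtH, Hty)
    return lambda x: sum(bj * hj for bj, hj in zip(beta, hidden(x)))


# Hypothetical two-feature data with labels -1 / +1 (e.g., two tremor types).
rng = random.Random(3)
X, y = [], []
for label, (cx, cy) in ((1.0, (1.0, 1.0)), (-1.0, (-1.0, -1.0))):
    for _ in range(50):
        X.append([rng.gauss(cx, 0.3), rng.gauss(cy, 0.3)])
        y.append(label)

model = elm_fit(X, y)
acc = sum((model(x) > 0) == (t > 0) for x, t in zip(X, y)) / len(y)
print(acc)
```

Because training reduces to one linear solve, extreme learning machines are fast to fit. The trade-off is that the random hidden features are never adapted to the data.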

Convolutional neural networks (CNNs) have recently played a large role in Parkinson’s disease classification because they can analyze image data directly. In many cases, this reduces the amount of feature extraction needed. For example, when using a CNN to analyze tremor data collected by accelerometers, researchers do not need to extract features such as frequency and amplitude, because the input to the CNN can simply be a processed version of the accelerometry signal itself. In 2020, Shi et al. used images of wavelet-transformed data from tri-axial accelerometers, gyroscopes, and magnetometers as inputs to a CNN to classify FoG and non-FoG episodes. (A wavelet transform decomposes the signal into scaled and shifted, time-localized oscillations called wavelets.) Overall, the CNN achieved a classification accuracy of ~89%, sensitivity of ~82%, and specificity of ~96%. In the same study, CNNs using raw time series or Fourier-transformed data as inputs did not perform as well128. This suggests that researchers must carefully select pre-processing techniques when using CNNs, as this choice can significantly alter an algorithm’s performance. Fourier-transformed inputs have nonetheless worked well for CNN-based tremor classification. Kim et al. (2018) reported ~85% accuracy when estimating UPDRS scores using a 3-layer CNN with a softmax classification layer. Rather than extracting specific features from accelerometer data, Kim et al. used a stacked 2D FFT image of tri-axial accelerometer and gyroscope data as the CNN input129.
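One plausible way to turn six IMU channels into a 2D "image" for a CNN is to compute each channel's magnitude spectrum and stack the spectra as rows, as sketched below. The exact layout, window length, and sampling rate Kim et al. used are not described here, so FS, N, and the synthetic 5 Hz channels are assumptions. The CNN that would consume the image is not shown.

```python
import cmath
import math


def dft_magnitude(signal):
    """One-sided DFT magnitude spectrum, normalized by length
    (naive O(n^2); use an FFT library in practice)."""
    n = len(signal)
    return [abs(sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(signal))) / n
            for k in range(n // 2 + 1)]


def stacked_spectrum_image(channels):
    """Rows = sensor axes, columns = frequency bins: a 2D array a CNN can take as input."""
    return [dft_magnitude(ch) for ch in channels]


FS = 64  # assumed sampling rate (Hz)
N = 128  # assumed window length: 2 s, giving 0.5 Hz frequency bins
# Hypothetical channels (accelerometer x/y/z, gyroscope x/y/z): 5 Hz sinusoids
# with different amplitudes, standing in for real IMU recordings.
channels = [[a * math.sin(2 * math.pi * 5 * t / FS) for t in range(N)]
            for a in (1.0, 0.5, 0.2, 0.8, 0.4, 0.1)]
image = stacked_spectrum_image(channels)
peak_bin = max(range(len(image[0])), key=lambda k: image[0][k])
print(len(image), len(image[0]), peak_bin * FS / N)
```

In this representation, a tremor shows up as a bright column at its frequency across the axes that carry it. Convolutional filters can learn such patterns without hand-crafted frequency or amplitude features.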

Researchers have experimented with various CNN architectures as well. Pereira et al. compared CNNs with ImageNet or CIFAR-10 architectures against an optimum-path forest, a support vector machine with a radial basis function kernel, and naïve Bayes. They used data from four drawing tasks (e.g., spiral drawing) and two wrist movement tasks to distinguish patients with Parkinson’s from controls based on tremor. Overall, the CNNs achieved higher classification accuracy than the other machine learning techniques, both when data from each task were used separately (single-assessment case) and when data from all tasks were combined (combined-assessment case)130. To model the time dependencies of FoG, Sigcha et al. (2020) combined a classical CNN with a long short-term memory (LSTM) recurrent neural network to classify FoG and non-FoG episodes. Using Fourier-transformed data from an IMU worn at the waist as input, the CNN-LSTM combination achieved an area under the ROC curve (AUC) of 0.939131.
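AUC, the metric Sigcha et al. report, has a simple interpretation. It is the probability that a randomly chosen positive example (here, a FoG window) receives a higher model score than a randomly chosen negative one, with ties counting one half. The sketch below computes it directly from that definition. The scores are hypothetical and unrelated to any cited study.

```python
def roc_auc(scores_pos, scores_neg):
    """AUC as the fraction of (positive, negative) pairs ranked correctly, ties counting
    one half; equivalent to the normalized Mann-Whitney U statistic."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical model outputs for FoG (positive) and non-FoG (negative) windows.
fog = [0.9, 0.8, 0.4]
non_fog = [0.5, 0.3]
print(roc_auc(fog, non_fog))  # 5 of the 6 pairs are ranked correctly
```

This pairwise version is O(n·m). Rank-based formulas give the same value more efficiently on large datasets. Unlike accuracy, AUC does not depend on choosing a decision threshold.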

CNNs have also been useful beyond classification tasks. In 2020, Ibrahim et al. used a CNN combined with a perceptron to predict future tremor amplitude 10, 20, 50, and 100 ms ahead, with prediction accuracy ranging from 90% to 97%132. Both traditional and convolutional neural networks will likely remain useful for machine learning-based analysis of PD symptoms.
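Multi-horizon tremor forecasting can be illustrated with a much simpler model than the one Ibrahim et al. used. The sketch below fits a linear autoregressive model, AR(2), by least squares and iterates it forward to each horizon. This is a swapped-in baseline, not their CNN. The 1 kHz sampling rate (so 1 sample = 1 ms) and the idealized noise-free 5 Hz sinusoid are assumptions. Real tremor is far less regular, so real forecasts degrade much faster with horizon.

```python
import math


def fit_ar2(x):
    """Least-squares fit of x[n] ≈ a1*x[n-1] + a2*x[n-2] via the 2x2 normal equations."""
    idx = range(2, len(x))
    s11 = sum(x[n - 1] * x[n - 1] for n in idx)
    s22 = sum(x[n - 2] * x[n - 2] for n in idx)
    s12 = sum(x[n - 1] * x[n - 2] for n in idx)
    r1 = sum(x[n] * x[n - 1] for n in idx)
    r2 = sum(x[n] * x[n - 2] for n in idx)
    det = s11 * s22 - s12 * s12
    a1 = (r1 * s22 - r2 * s12) / det  # Cramer's rule
    a2 = (s11 * r2 - s12 * r1) / det
    return a1, a2


def forecast(history, coeffs, steps):
    """Iterate the AR(2) recursion `steps` samples past the end of `history`."""
    a1, a2 = coeffs
    buf = list(history[-2:])
    for _ in range(steps):
        buf.append(a1 * buf[-1] + a2 * buf[-2])
    return buf[-1]


FS = 1000  # assumed sampling rate (Hz): 1 sample = 1 ms
tremor = [math.sin(2 * math.pi * 5 * n / FS) for n in range(1500)]  # idealized 5 Hz tremor
train, future = tremor[:1000], tremor[1000:]
coeffs = fit_ar2(train)
for horizon_ms in (10, 20, 50, 100):
    pred = forecast(train, coeffs, horizon_ms)
    print(horizon_ms, round(pred, 4), round(future[horizon_ms - 1], 4))
```

A pure sinusoid satisfies x[n] = 2cos(ω)·x[n-1] - x[n-2] exactly, so this toy case is predicted almost perfectly. Learned non-linear models such as CNNs become worthwhile once the signal departs from that ideal.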

Interplay between technology and computational techniques

The technology and computational techniques used to monitor PD motor symptoms have evolved concurrently. As technology improves, new computational techniques must be developed and optimized to handle the growing amounts of data collected by new devices. The reverse also holds: as advances in computation allow researchers to ask and answer new questions, new technologies must be developed to support these analyses.

The overarching, major change seen in the technology used to diagnose and monitor PD over the last ~50 years has been the transition from laboratory to home monitoring. This technological evolution has undoubtedly been accompanied by a shift in computational approaches. Fundamentally, the techniques used to analyze data collected in well-controlled laboratory settings must be different from those required to analyze data collected in real-world conditions. As such, the evolution of technology necessitated computational methods that could: (1) better denoise signals, (2) make predictions given large sets of structured data, and (3) make predictions given large sets of unstructured data.

In-lab diagnosis and monitoring of PD generates less noisy data than real-world monitoring. This manifests in two ways. First, the signal itself contains less ambient noise. For example, by using high-quality microphones or working in sound-treated rooms, researchers can control for room noise when recording voice samples from patients with PD133,134. Second, data from most in-lab studies are effectively “de-noised” or simplified by the structure built into these studies. Assessing gait abnormalities via the timed up-and-go test or quantifying tremor via circle drawing tests produces highly consistent, uniform data, because participants execute the same task(s) in the same way. This is not the case in real-world settings. As technology enabled real-world data collection, de-noising became one of the first priorities, both through simple filtering77 and through data labeling (e.g., a smartwatch labeling whether a participant was running, swimming, or sleeping)135. Apart from these additions for handling noisy data, however, foundational computational techniques such as frequency analyses and statistical testing remained adequate.
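As an example of "simple filtering", the sketch below implements a first-order IIR low-pass filter (exponential smoothing). It applies the filter to a 5 Hz tremor-band sinusoid and to 40 Hz interference and compares how much of each passes through. The specific filter used in the cited work is not stated here, so the filter type, the 10 Hz cutoff, and the 200 Hz sampling rate are assumptions. Published pipelines often use higher-order filters such as Butterworth designs instead.

```python
import math


def lowpass(signal, cutoff_hz, fs):
    """First-order IIR (exponential smoothing) low-pass filter:
    y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    dt = 1.0 / fs
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out, y = [], signal[0]
    for x in signal:
        y = y + alpha * (x - y)
        out.append(y)
    return out


def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))


FS = 200  # assumed sampling rate (Hz)
for freq in (5, 40):  # tremor-band component vs. higher-frequency interference
    sig = [math.sin(2 * math.pi * freq * n / FS) for n in range(2 * FS)]  # 2 s of data
    filt = lowpass(sig, cutoff_hz=10, fs=FS)
    # Compare the second second only, after the filter's start-up transient has decayed.
    print(freq, round(rms(filt[FS:]) / rms(sig[FS:]), 2))
```

With these settings, about 87% of the 5 Hz amplitude passes through, compared with only about 23% at 40 Hz. A first-order filter rolls off gently, which is why steeper designs are common when the noise lies close to the band of interest.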

The adoption of machine learning generally tracked the ability to collect increasing amounts of data, which enabled researchers to ask new questions. The prime example is the adoption of smart devices. Previously, researchers had to ask participants to wear separate accelerometers, gyroscopes, heart rate monitors, etc. to collect varied types of data. Smart devices consolidated these sensors into a single device, improving ease of use for patients and thereby increasing the amount of data that could be collected. Smart devices also made qualitative data easier to collect. Instead of relying on patient diaries or recall from memory, app-based monitoring on phones or tablets allowed patients to more seamlessly provide qualitative data on medication adherence, exercise levels, mood, etc.

With access to larger volumes and more types of data, researchers and clinicians started asking questions better suited to ML than to non-ML techniques. These questions fall broadly into two categories: (1) predictions and (2) classifications. In PD, researchers were interested in predicting symptom severity and disease progression, and in classifying patients for diagnostic and therapeutic purposes. ML algorithms were well suited to these tasks given their ability to model non-linear relationships and to handle large datasets efficiently. For example, neural networks enabled researchers to uncover complex, non-linear relationships between quantitative (e.g., tremor frequency) and qualitative (e.g., medication adherence) data to predict UPDRS scores. SVMs, in turn, enabled classification in high-dimensional feature spaces with many independent variables. With smart devices providing access to vast amounts of data, researchers also used algorithms such as random forests. Because a random forest's decision trees are independent, they can be trained and evaluated in parallel, making data analyses more efficient and insightful.
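The parallelism in random forests comes from each tree depending only on its own bootstrap sample and random feature subset. The sketch below shows this with a deliberately tiny "forest" of depth-1 trees (decision stumps) fitted concurrently. It is a simplified, hypothetical stand-in for a full random forest, and the two-feature data are synthetic. Python threads share the global interpreter lock, so this demonstrates the independence of the trees rather than a real speedup. Process pools or library implementations (e.g., the `n_jobs` parameter of scikit-learn's `RandomForestClassifier`) are the usual route to actual parallel training.

```python
import random
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def fit_stump(X, y, feature_ids):
    """Depth-1 decision tree: the single threshold split (over the allowed features)
    that classifies the most training samples correctly."""
    default = Counter(y).most_common(1)[0][0]
    best = None
    for j in feature_ids:
        for thr in sorted({x[j] for x in X}):
            left = [lab for x, lab in zip(X, y) if x[j] <= thr]
            right = [lab for x, lab in zip(X, y) if x[j] > thr]
            left_lab = Counter(left).most_common(1)[0][0] if left else default
            right_lab = Counter(right).most_common(1)[0][0] if right else default
            correct = left.count(left_lab) + right.count(right_lab)
            if best is None or correct > best[0]:
                best = (correct, j, thr, left_lab, right_lab)
    _, j, thr, left_lab, right_lab = best
    return lambda x: left_lab if x[j] <= thr else right_lab


def fit_tree(args):
    X, y, seed, n_features = args
    rng = random.Random(seed)
    rows = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample (with replacement)
    feats = rng.sample(range(len(X[0])), n_features)         # random feature subset
    return fit_stump([X[i] for i in rows], [y[i] for i in rows], feats)


def fit_forest(X, y, n_trees=25, n_features=1, workers=4):
    # Each tree depends only on its own sample and features, so trees can be fit concurrently.
    jobs = [(X, y, seed, n_features) for seed in range(n_trees)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fit_tree, jobs))


def predict_forest(trees, x):
    # Majority vote across trees.
    return Counter(tree(x) for tree in trees).most_common(1)[0][0]


# Hypothetical standardized features (e.g., tremor amplitude, step-time variability).
rng = random.Random(0)
X = [[rng.gauss(0, 0.5), rng.gauss(0, 0.5)] for _ in range(50)]
X += [[rng.gauss(2, 0.5), rng.gauss(2, 0.5)] for _ in range(50)]
y = ["control"] * 50 + ["PD"] * 50
forest = fit_forest(X, y)
print(predict_forest(forest, [2.1, 1.9]), predict_forest(forest, [-0.1, 0.2]))
```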

It is clear that the computational techniques and technology used to monitor PD have co-evolved over the years. As technology advances, new computational techniques will be required to take advantage of the technologies’ improved functionalities and vice versa.

Discussion

The technology used to monitor and quantify Parkinson’s motor symptoms has undergone a rapid transformation in the past few decades. Early monitoring began with in-lab devices such as needle-based EMG, transitioned to using in-lab accelerometers/gyroscopes, then to more wearable accelerometers/gyroscopes, and finally to phone and mobile & web application-based monitoring in patients’ homes. The shift from in-lab to in-home monitoring will enable physicians to make more data-driven decisions regarding patient management. Along the same lines, significant progress has been made with respect to the use of machine learning to classify and monitor Parkinson’s patients. Using data from multiple different sources (e.g., wearable motion sensors, phone-based accelerometers, video cameras), researchers have designed both neural network and non-neural network-based machine learning algorithms to classify/categorize Parkinson’s patients across tremor, gait, bradykinesia, and dyskinesia. Further advancements in these algorithms will create more objective and quantitative ways for physicians to diagnose and manage patients with Parkinson’s.

As machine learning becomes more prevalent in medicine, regulators such as the Food and Drug Administration (FDA) are developing new protocols to assess the safety and efficacy of ML-based health technologies. The FDA’s plan to improve evaluation of these technologies includes: (1) outlining “good machine learning practices”, (2) setting guidelines for algorithm transparency, (3) supporting research on algorithm evaluation and improvement, and (4) establishing guidelines on real-world data collection for initial approval and post-approval monitoring136. As this plan is implemented over the next few years, trial endpoints will likely remain established clinical metrics (e.g., UPDRS) rather than novel metrics generated by new ML-powered devices137,138. However, a future seems likely in which device-generated metrics replace, or are used in conjunction with, traditional clinical metrics. In the case of PD monitoring, the FDA’s approval of Great Lakes NeuroTechnologies’ KinesiaU device and provider portal to monitor motor symptoms of PD is a first step in that direction139. ML will undoubtedly play an increasingly large role in medicine, and researchers in this field should closely follow the FDA’s actions to navigate this new healthcare environment.

Digital PD monitoring has enabled an understanding of patients’ symptoms to a level of detail not seen before. Prior to the adoption of wearable and smart devices in this field, clinicians were blind to the manifestation of PD motor symptoms outside of the clinic (e.g., brushing teeth, exercising, driving). Device-based monitoring has also helped fill in gaps left by sometimes inaccurate or incomplete patient diaries. However, many barriers exist to full clinical adoption of digital monitoring, including the cost of digital devices, lack of secure and reliable pipelines to transfer data to physicians, and perhaps the technological capabilities of patients with PD140. These barriers can start to be overcome through: (1) public-private partnerships that help lower the cost of digital devices for hospital systems to provide to their patients, (2) increased focus on data storage and retrieval infrastructure, and (3) patient education.

In the future, the greatest potential lies in truly continuous PD symptom monitoring through easy-to-use mobile applications on smart devices (e.g., smartphones, smartwatches). Such applications can integrate quantitative and qualitative (e.g., quality of life survey) data to help physicians better understand a patient’s experience with Parkinson’s. Further development of these applications, along with live data transmission to and storage in the cloud, will enhance the usability and utility of these technologies. Incorporating machine learning into these functionalities can then enable more objective disease staging and diagnosis by physicians and better prediction of disease progression. However, much work remains in developing better disease biomarkers on which to train these machine learning algorithms. Reliable biomarkers must accurately identify symptoms of PD across patient populations and disease stages. These biomarkers might also need to differ across contexts (e.g., tremor while driving differs from tremor while brushing teeth). Identifying the nuances of digital biomarkers will be essential to realizing the full potential of machine learning and advanced technology in monitoring Parkinson’s symptoms.

Methods

We queried the US National Library of Medicine PubMed database (PubMed). Five compound search terms were used to query PubMed for machine learning and computational publications and clinical trials: “Parkinson’s” + SYMPTOM + (1) machine learning, (2) neural network, (3) quantification, (4) analysis, or (5) monitoring, where SYMPTOM was “tremor”, “gait”, “bradykinesia”, or “dyskinesia”. These queries returned 10,200 papers. Manuscripts about technology for monitoring PD symptoms were identified in PubMed with the advanced search terms ((automatic detection) OR (classification) OR (wearables) OR (digital health) OR (sensors)) AND “Parkinson’s” + SYMPTOM. These queries returned 2,600 papers. Studies were first de-duplicated and then excluded if they lacked full-text availability, did not use human data, or did not specifically evaluate PD. Book chapters, review articles, and “short communications” were also excluded. Titles and abstracts were reviewed before a representative sub-set of English-language papers was assessed further. These papers were selected because they best characterized the machine learning and technology timelines that emerged from the literature review.
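The search design above combines 4 symptoms with 5 method terms (20 queries) plus one technology query per symptom (4 queries). The sketch below simply enumerates those combinations as query strings. The rendering of "+" as AND between quoted phrases is an assumption, because the exact PubMed syntax and any field tags used by the authors are not given. The code builds strings only and does not contact PubMed.

```python
SYMPTOMS = ["tremor", "gait", "bradykinesia", "dyskinesia"]
METHOD_TERMS = ["machine learning", "neural network", "quantification", "analysis", "monitoring"]
TECH_TERMS = ["automatic detection", "classification", "wearables", "digital health", "sensors"]
DISEASE = '"Parkinson\'s"'


def method_queries():
    # Assumption: "Parkinson's" + SYMPTOM + TERM is rendered as an AND of quoted phrases.
    return [f'{DISEASE} AND "{s}" AND "{m}"' for s in SYMPTOMS for m in METHOD_TERMS]


def technology_queries():
    # The OR-group of technology terms as written in the Methods, ANDed with disease and symptom.
    tech = " OR ".join(f"({t})" for t in TECH_TERMS)
    return [f'({tech}) AND {DISEASE} AND "{s}"' for s in SYMPTOMS]


for query in method_queries()[:2] + technology_queries()[:1]:
    print(query)
print(len(method_queries()), len(technology_queries()))
```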

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The data used to generate the figures and tables are publicly available to researchers through the National Library of Medicine. Additional inquiries may be directed to the corresponding author.

References

Liang, T.-W. & Tarsy, D. In UpToDate (ed. Post, T. W.) (UpToDate, 2021).

Powers, R. et al. Smartwatch inertial sensors continuously monitor real-world motor fluctuations in Parkinson’s disease. Sci. Transl. Med. 13, eabd7865 (2021).

Rovini, E., Maremmani, C. & Cavallo, F. How wearable sensors can support Parkinson’s disease diagnosis and treatment: a systematic review. Front. Neurosci. 11, 555 (2017).

Kovosi, S. & Freeman, M. Administering medications for Parkinson’s disease on time. Nursing 41, 66 (2011).

Grissinger, M. Delayed administration and contraindicated drugs place hospitalized Parkinson’s disease patients at risk. P T 43, 10–39 (2018).

Groiss, S. J., Wojtecki, L., Südmeyer, M. & Schnitzler, A. Deep brain stimulation in Parkinson’s disease. Ther. Adv. Neurol. Disord. 2, 20–28 (2009).

Movement Disorder Society Task Force on Rating Scales for Parkinson’s Disease. The Unified Parkinson’s Disease Rating Scale (UPDRS): status and recommendations. Mov. Disord. 18, 738–750 (2003).

Goetz, C. G. et al. Movement Disorder Society-sponsored revision of the Unified Parkinson’s Disease Rating Scale (MDS-UPDRS): Process, format, and clinimetric testing plan. Mov. Disord. 22, 41–47 (2007).

Louis, E. D. et al. Clinical correlates of action tremor in Parkinson disease. Arch. Neurol. 58, 1630 (2001).

Heldman, D. A. et al. The Modified Bradykinesia Rating Scale for Parkinson’s disease: reliability and comparison with kinematic measures. Mov. Disord. 26, 1859–1863 (2011).

Bathien, N., Koutlidis, R. M. & Rondot, P. EMG patterns in abnormal involuntary movements induced by neuroleptics. J. Neurol. Neurosurg. Psychiatry 47, 1002–1008 (1984).

Andrews, C. J. Influence of dystonia on the response to long-term L-dopa therapy in Parkinson’s disease. J. Neurol. Neurosurg. Psychiatry 36, 630–636 (1973).

Milner-Brown, H. S., Fisher, M. A. & Weiner, W. J. Electrical properties of motor units in Parkinsonism and a possible relationship with bradykinesia. J. Neurol. Neurosurg. Psychiatry 42, 35–41 (1979).

Hacisalihzade, S. S., Albani, C. & Mansour, M. Measuring parkinsonian symptoms with a tracking device. Comput. Methods Prog. Biomed. 27, 257–268 (1988).

Beuter, A., de Geoffroy, A. & Cordo, P. The measurement of tremor using simple laser systems. J. Neurosci. Methods 53, 47–54 (1994).

Weller, C. et al. Defining small differences in efficacy between anti-parkinsonian agents using gait analysis: a comparison of two controlled release formulations of levodopa/decarboxylase inhibitor. Br. J. Clin. Pharm. 35, 379–385 (1993).

O’Suilleabhain, P. E. & Dewey, R. B. Validation for tremor quantification of an electromagnetic tracking device. Mov. Disord. 16, 265–271 (2001).

Deuschl, G., Lauk, M. & Timmer, J. Tremor classification and tremor time series analysis. Chaos: Interdiscip. J. Nonlinear Sci. 5, 48 (1998).

Spyers-Ashby, J. M., Stokes, M. J., Bain, P. G. & Roberts, S. J. Classification of normal and pathological tremors using a multidimensional electromagnetic system. Med. Eng. Phys. 21, 713–723 (1999).

Rajaraman, V. et al. A novel quantitative method for 3D measurement of Parkinsonian tremor. Clin. Neurophysiol. 111, 338–343 (2000).

Hoff, J. I., van der Meer, V. & van Hilten, J. J. Accuracy of objective ambulatory accelerometry in detecting motor complications in patients with Parkinson’s disease. Clin. Neuropharmacol. 27, 53–57 (2004).

Dunnewold, R. J. W. et al. Ambulatory quantitative assessment of body position, bradykinesia, and hypokinesia in Parkinson’s disease. J. Clin. Neurophysiol. 15, 235–242 (1998).

Hoff, J. I., van den Plas, A. A., Wagemans, E. A. & van Hilten, J. J. Accelerometric assessment of levodopa-induced dyskinesias in Parkinson’s disease. Mov. Disord. 16, 58–61 (2001).

Dunnewold, R. J. W., Jacobi, C. E. & van Hilten, J. J. Quantitative assessment of bradykinesia in patients with Parkinson’s disease. J. Neurosci. Methods 74, 107–112 (1997).

Salarian, A. et al. Quantification of tremor and bradykinesia in Parkinson’s disease using a novel ambulatory monitoring system. IEEE Trans. Biomed. Eng. 54, 313–322 (2007).

Mera, T. O., Heldman, D. A., Espay, A. J., Payne, M. & Giuffrida, J. P. Feasibility of home-based automated Parkinson’s disease motor assessment. J. Neurosci. Methods 203, 152–156 (2012).

Heldman, D. A. et al. Automated motion sensor quantification of gait and lower extremity bradykinesia. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2012, 1956–1959 (2012).

Phan, D., Horne, M., Pathirana, P. N. & Farzanehfar, P. Measurement of axial rigidity and postural instability using wearable sensors. Sensors (Basel) 18, 495 (2018).

Salarian, A. et al. Analyzing 180° turns using an inertial system reveals early signs of progress in Parkinson’s disease. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2009, 224–227 (2009).

Moore, S. T. et al. Autonomous identification of freezing of gait in Parkinson’s disease from lower-body segmental accelerometry. J. Neuroeng. Rehabil. 10, 19 (2013).

Mancini, M. et al. Measuring freezing of gait during daily-life: an open-source, wearable sensors approach. J. Neuroeng. Rehabil. 18, 1 (2021).

Reches, T. et al. Using wearable sensors and machine learning to automatically detect freezing of gait during a FOG-Provoking test. Sensors (Basel) 20, 4474 (2020).

Tripoliti, E. E. et al. Automatic detection of freezing of gait events in patients with Parkinson’s disease. Comput. Methods Prog. Biomed. 110, 12–26 (2013).

Zach, H. et al. Identifying freezing of gait in Parkinson’s disease during freezing provoking tasks using waist-mounted accelerometry. Parkinsonism. Relat. Disord. 21, 1362–1366 (2015).

Manson, A. et al. An ambulatory dyskinesia monitor. J. Neurol. Neurosurg. Psychiatry 68, 196–201 (2000).

Pulliam, C. L. et al. Continuous assessment of levodopa response in Parkinson’s disease using wearable motion sensors. IEEE Trans. Biomed. Eng. 65, 159–164 (2018).

Rodríguez-Molinero, A. et al. Estimating dyskinesia severity in Parkinson’s disease by using a waist-worn sensor: concurrent validity study. Sci. Rep. 9, 13434 (2019).

Giovannoni, G., van Schalkwyk, J., Fritz, V. & Lees, A. Bradykinesia akinesia inco-ordination test (BRAIN TEST): an objective computerised assessment of upper limb motor function. J. Neurol. Neurosurg. Psychiatry 67, 624–629 (1999).

Allen, D. P. et al. On the use of low-cost computer peripherals for the assessment of motor dysfunction in Parkinson’s disease—quantification of bradykinesia using target tracking tasks. IEEE Trans. Neural Syst. Rehabil. Eng. 15, 286–294 (2007).

Espay, A. J. et al. At-home training with closed-loop augmented-reality cueing device for improving gait in patients with Parkinson’s disease. J. Rehabil. Res. Dev. 47, 573 (2010).

Bachlin, M. et al. Wearable assistant for Parkinson’s disease patients with the freezing of gait symptom. IEEE Trans. Inf. Technol. Biomed. 14, 436–446 (2010).

Lee, A. et al. Can Google Glass™ technology improve freezing of gait in parkinsonism? A pilot study. Disabil. Rehabil. Assist. Technol. 1–11. https://doi.org/10.1080/17483107.2020.1849433 (2020).

Rao, A. S. et al. Quantifying drug induced dyskinesia in Parkinson’s disease patients using standardized videos. In: 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society 1769–1772. https://doi.org/10.1109/IEMBS.2008.4649520 (2008).

van Hilten, J. J., Middelkoop, H. A., Kerkhof, G. A. & Roos, R. A. A new approach in the assessment of motor activity in Parkinson’s disease. J. Neurol. Neurosurg. Psychiatry 54, 976–979 (1991).

Burne, J. A., Hayes, M. W., Fung, V. S. C., Yiannikas, C. & Boljevac, D. The contribution of tremor studies to diagnosis of Parkinsonian and essential tremor: a statistical evaluation. J. Clin. Neurosci. 9, 237–242 (2002).

Cole, B. T., Roy, S. H., De Luca, C. J. & Nawab, S. H. Dynamic neural network detection of tremor and dyskinesia from wearable sensor data. In: 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology 6062–6065. https://doi.org/10.1109/IEMBS.2010.5627618 (2010).

Tsipouras, M. G. et al. An automated methodology for levodopa-induced dyskinesia: assessment based on gyroscope and accelerometer signals. Artif. Intell. Med. 55, 127–135 (2012).

Papapetropoulos, S. et al. Objective quantification of neuromotor symptoms in Parkinson’s disease: implementation of a portable, computerized measurement tool. Parkinsons Dis. 2010, (2010).

Yang, C.-C., Hsu, Y.-L., Shih, K.-S. & Lu, J.-M. Real-time gait cycle parameter recognition using a wearable accelerometry system. Sensors (Basel) 11, 7314–7326 (2011).

Klucken, J. et al. Unbiased and mobile gait analysis detects motor impairment in Parkinson’s disease. PLoS ONE 8, e56956 (2013).

Marcante, A. et al. Foot pressure wearable sensors for freezing of gait detection in Parkinson’s disease. Sensors (Basel) 21, 128 (2020).

Mahadevan, N. et al. Development of digital biomarkers for resting tremor and bradykinesia using a wrist-worn wearable device. npj Digital Med. 3, 1–12 (2020).

Heldman, D. A. et al. Telehealth management of Parkinson’s disease using wearable sensors: exploratory study. Digit. Biomark. 1, 43–51 (2017).

Ferreira, J. J. et al. Quantitative home-based assessment of Parkinson’s symptoms: the SENSE-PARK feasibility and usability study. BMC Neurol. 15, 89 (2015).

Fisher, J. M., Hammerla, N. Y., Rochester, L., Andras, P. & Walker, R. W. Body-worn sensors in Parkinson’s disease: evaluating their acceptability to patients. Telemed. J. E Health 22, 63–69 (2016).

Evers, L. J. et al. Real-life gait performance as a digital biomarker for motor fluctuations: the Parkinson@Home validation study. J. Med. Internet Res. 22, e19068 (2020).

Erb, M. K. et al. mHealth and wearable technology should replace motor diaries to track motor fluctuations in Parkinson’s disease. npj Digital Med. 3, 1–10 (2020).

Chen, B. et al. A web-based system for home monitoring of patients with Parkinson’s disease using wearable sensors. IEEE Trans. Biomed. Eng. 58, 831–836 (2011).

Cancela, J., Pastorino, M., Arredondo, M. T. & Hurtado, O. A telehealth system for Parkinson’s disease remote monitoring. The PERFORM approach. In: 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 7492–7495. https://doi.org/10.1109/EMBC.2013.6611291 (2013).

Daneault, J.-F., Carignan, B., Codère, C. É., Sadikot, A. F. & Duval, C. Using a smart phone as a standalone platform for detection and monitoring of pathological tremors. Front. Hum. Neurosci. 6, 357 (2013).

Lo, C. et al. Predicting motor, cognitive & functional impairment in Parkinson’s. Ann. Clin. Transl. Neurol. 6, 1498–1509 (2019).

Kostikis, N., Hristu-Varsakelis, D., Arnaoutoglou, M., Kotsavasiloglou, C. & Baloyiannis, S. Towards remote evaluation of movement disorders via smartphones. In: 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society 5240–5243. https://doi.org/10.1109/IEMBS.2011.6091296 (2011).

van Brummelen, E. M. J. et al. Quantification of tremor using consumer product accelerometry is feasible in patients with essential tremor and Parkinson’s disease: a comparative study. J. Clin. Mov. Disord. 7, 4 (2020).

Banaszkiewicz, K., Rudzińska, M., Bukowczan, S., Izworski, A. & Szczudlik, A. Spiral drawing time as a measure of bradykinesia. Neurol. Neurochir. Pol. 43, 16–21 (2009).

Silva de Lima, A. L. et al. Feasibility of large-scale deployment of multiple wearable sensors in Parkinson’s disease. PLoS ONE 12, e0189161 (2017).

Prince, J., Andreotti, F. & De Vos, M. Multi-source ensemble learning for the remote prediction of Parkinson’s disease in the presence of source-wise missing data. IEEE Trans. Biomed. Eng. 66, 1402–1411 (2018).

Daneault, J.-F. et al. Accelerometer data collected with a minimum set of wearable sensors from subjects with Parkinson’s disease. Scientific Data 8, 48 (2021).

Keijsers, N. L. W., Horstink, M. W. I. M. & Gielen, S. C. A. M. Automatic assessment of levodopa-induced dyskinesias in daily life by neural networks. Mov. Disord. 18, 70–80 (2003).

Patel, S. et al. Monitoring motor fluctuations in patients with Parkinson’s disease using wearable sensors. IEEE Trans. Inf. Technol. Biomed. 13, 864–873 (2009).

Ghoraani, B., Hssayeni, M. D., Bruack, M. M. & Jimenez-Shahed, J. Multilevel features for sensor-based assessment of motor fluctuation in Parkinson’s disease subjects. IEEE J. Biomed. Health Inf. 24, 1284–1295 (2020).

Aich, S. et al. A supervised machine learning approach to detect the on/off state in Parkinson’s disease using wearable based gait signals. Diagnostics (Basel) 10, 421 (2020).

Zhan, A. et al. Using smartphones and machine learning to quantify Parkinson disease severity. JAMA Neurol. 75, 876–880 (2018).

Pfister, F. M. J. et al. High-resolution motor state detection in Parkinson’s disease using convolutional neural networks. Sci. Rep. 10, 5860 (2020).

Lu, M. et al. Vision-based estimation of MDS-UPDRS gait scores for assessing Parkinson’s disease motor severity. Med. Image Comput. Comput. Assist. Interv. 12263, 637–647 (2020).

Chen, S.-W. et al. Quantification and recognition of parkinsonian gait from monocular video imaging using kernel-based principal component analysis. Biomed. Eng. Online 10, 99 (2011).

Bank, P. J. M., Marinus, J., Meskers, C. G. M., de Groot, J. H. & van Hilten, J. J. Optical hand tracking: a novel technique for the assessment of bradykinesia in Parkinson’s disease. Mov. Disord. Clin. Pract. 4, 875–883 (2017).

Arora, S. et al. Detecting and monitoring the symptoms of Parkinson’s disease using smartphones: a pilot study. Parkinsonism Relat. Disord. 21, 650–653 (2015).

Singh, S. & Xu, W. Robust detection of Parkinson’s disease using harvested smartphone voice data: a telemedicine approach. Telemed. J. E Health 26, 327–334 (2020).

Rusz, J. et al. Smartphone allows capture of speech abnormalities associated with high risk of developing Parkinson’s disease. IEEE Trans. Neural Syst. Rehabil. Eng. 26, 1495–1507 (2018).

Sajal, M. S. R. et al. Telemonitoring Parkinson’s disease using machine learning by combining tremor and voice analysis. Brain Inform. 7, 12 (2020).

Collobert, R., Bengio, S. & Mariéthoz, J. Torch: A Modular Machine Learning Software Library (CiteSeerX, 2002).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

Albers, J. W., Potvin, A. R., Tourtellotte, W. W., Pew, R. W. & Stribley, R. F. Quantification of hand tremor in the clinical neurological examination. IEEE Trans. Biomed. Eng. 20, 27–37 (1973).

Burkhard, P. R., Shale, H., Langston, J. W. & Tetrud, J. W. Quantification of dyskinesia in Parkinson’s disease: validation of a novel instrumental method. Mov. Disord. 14, 754–763 (1999).

Edwards, R. & Beuter, A. Indexes for identification of abnormal tremor using computer tremor evaluation systems. IEEE Trans. Biomed. Eng. 46, 895–898 (1999).

Matsumoto, Y., Fukumoto, I., Okada, K., Hando, S. & Teranishi, M. Analysis of pathological tremors using the autoregression model. Front. Med. Biol. Eng. 11, 221–235 (2001).

Elble, R. J. et al. Tremor amplitude is logarithmically related to 4- and 5-point tremor rating scales. Brain 129, 2660–2666 (2006).

Blin, O., Ferrandez, A. M. & Serratrice, G. Quantitative analysis of gait in Parkinson patients: increased variability of stride length. J. Neurol. Sci. 98, 91–97 (1990).

Kim, J.-W. et al. Quantification of bradykinesia during clinical finger taps using a gyrosensor in patients with Parkinson’s disease. Med. Biol. Eng. Comput. 49, 365–371 (2011).

Lewis, G. N., Byblow, W. D. & Walt, S. E. Stride length regulation in Parkinson’s disease: the use of extrinsic, visual cues. Brain 123, 2077–2090 (2000).

Sofuwa, O. et al. Quantitative gait analysis in Parkinson’s disease: comparison with a healthy control group. Arch. Phys. Med. Rehabil. 86, 1007–1013 (2005).

Nair, P., Trisno, R., Baghini, M. S., Pendharkar, G. & Chung, H. Predicting early stage drug induced parkinsonism using unsupervised and supervised machine learning. In: 2020 42nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 776–779. https://doi.org/10.1109/EMBC44109.2020.9175343 (2020).

Chicco, D. Ten quick tips for machine learning in computational biology. BioData Min. 10, 35 (2017).

Raschka, S. Model evaluation, model selection, and algorithm selection in machine learning. Preprint at https://arxiv.org/abs/1811.12808 (2020).

Ali, S. & Smith, K. A. On learning algorithm selection for classification. Appl. Soft Comput. 6, 119–138 (2006).

Kotthoff, L., Gent, I. P. & Miguel, I. An evaluation of machine learning in algorithm selection for search problems. AI Commun. 25, 257–270 (2012).

Lee, I. & Shin, Y. J. Machine learning for enterprises: applications, algorithm selection, and challenges. Bus. Horiz. 63, 157–170 (2020).

Awan, S. E., Bennamoun, M., Sohel, F., Sanfilippo, F. M. & Dwivedi, G. Machine learning‐based prediction of heart failure readmission or death: implications of choosing the right model and the right metrics. ESC Heart Fail 6, 428–435 (2019).

Rigas, G. et al. Tremor UPDRS estimation in home environment. In: 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 3642–3645. https://doi.org/10.1109/EMBC.2016.7591517 (2016).

Aich, S. et al. Design of a machine learning-assisted wearable accelerometer-based automated system for studying the effect of dopaminergic medicine on gait characteristics of Parkinson’s patients. J. Healthc. Eng. 2020, 1823268 (2020).

Kostikis, N., Hristu-Varsakelis, D., Arnaoutoglou, M. & Kotsavasiloglou, C. A smartphone-based tool for assessing parkinsonian hand tremor. IEEE J. Biomed. Health Inform. 19, 1835–1842 (2015).

Vivar, G. et al. Contrast and homogeneity feature analysis for classifying tremor levels in Parkinson’s disease patients. Sensors (Basel) 19, 2072 (2019).

Lee, S. I. et al. A novel method for assessing the severity of levodopa-induced dyskinesia using wearable sensors. In: 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 8087–8090. https://doi.org/10.1109/EMBC.2015.7320270 (2015).

Hssayeni, M. D., Jimenez-Shahed, J., Burack, M. A. & Ghoraani, B. Wearable sensors for estimation of parkinsonian tremor severity during free body movements. Sensors (Basel) 19, 4215 (2019).

Gao, C. et al. Model-based and model-free machine learning techniques for diagnostic prediction and classification of clinical outcomes in Parkinson’s disease. Sci. Rep. 8, 7129 (2018).

Rehman, R. Z. U. et al. Selecting clinically relevant gait characteristics for classification of early Parkinson’s disease: a comprehensive machine learning approach. Sci. Rep. 9, 17269 (2019).

Martinez-Manzanera, O. et al. A method for automatic and objective scoring of bradykinesia using orientation sensors and classification algorithms. IEEE Trans. Biomed. Eng. 63, 1016–1024 (2016).

Channa, A., Ifrim, R.-C., Popescu, D. & Popescu, N. A-WEAR bracelet for detection of hand tremor and bradykinesia in Parkinson’s patients. Sensors (Basel) 21, 981 (2021).

Rehman, R. Z. U. et al. Accelerometry-based digital gait characteristics for classification of Parkinson’s disease: what counts? IEEE Open J. Eng. Med. Biol. 1, 65–73 (2020).

Mirelman, A. et al. Detecting sensitive mobility features for Parkinson’s disease stages via machine learning. Mov. Disord. 36, 2144–2155 (2021).

Rupprechter, S. et al. A clinically interpretable computer-vision based method for quantifying gait in Parkinson’s disease. Sensors (Basel) 21, 5437 (2021).

de Araújo, A. C. A. et al. Hand resting tremor assessment of healthy and patients with Parkinson’s disease: an exploratory machine learning study. Front. Bioeng. Biotechnol. 8, 778 (2020).

Jeon, H. et al. High-accuracy automatic classification of Parkinsonian tremor severity using machine learning method. Physiol. Meas. 38, 1980–1999 (2017).

Rios-Urrego, C. D. et al. Analysis and evaluation of handwriting in patients with Parkinson’s disease using kinematic, geometrical, and non-linear features. Comput. Methods Prog. Biomed. 173, 43–52 (2019).

Bazgir, O., Habibi, S. A. H., Palma, L., Pierleoni, P. & Nafees, S. A classification system for assessment and home monitoring of tremor in patients with Parkinson’s disease. J. Med. Signals Sens. 8, 65–72 (2018).

Butt, A. H. et al. Objective and automatic classification of Parkinson disease with Leap Motion controller. Biomed. Eng. Online 17, 168 (2018).

Heaton, J., McElwee, S., Fraley, J. & Cannady, J. Early stabilizing feature importance for TensorFlow deep neural networks. In: 2017 International Joint Conference on Neural Networks (IJCNN) 4618–4624. https://doi.org/10.1109/IJCNN.2017.7966442 (2017).

Ghorbani, A., Abid, A. & Zou, J. Interpretation of neural networks is fragile. Proc. AAAI Conf. Artif. Intell. 33, 3681–3688 (2019).

Moon, S. et al. Classification of Parkinson’s disease and essential tremor based on balance and gait characteristics from wearable motion sensors via machine learning techniques: a data-driven approach. J. Neuroeng. Rehabil. 17, 125 (2020).

Balaprakash, P., Salim, M., Uram, T. D., Vishwanath, V. & Wild, S. M. DeepHyper: asynchronous hyperparameter search for deep neural networks. In: 2018 IEEE 25th International Conference on High Performance Computing (HiPC) 42–51. https://doi.org/10.1109/HiPC.2018.00014 (2018).

Thomas, A. J., Petridis, M., Walters, S. D., Gheytassi, S. M. & Morgan, R. E. Two hidden layers are usually better than one. In Engineering Applications of Neural Networks (eds. Boracchi, G., Iliadis, L., Jayne, C. & Likas, A.) 279–290 (Springer International Publishing, 2017). https://doi.org/10.1007/978-3-319-65172-9_24.

Veeraragavan, S., Gopalai, A. A., Gouwanda, D. & Ahmad, S. A. Parkinson’s disease diagnosis and severity assessment using ground reaction forces and neural networks. Front. Physiol. 11, 587057 (2020).

Jakubowski, J., Kwiatos, K., Chwaleba, A. & Osowski, S. Higher order statistics and neural network for tremor recognition. IEEE Trans. Biomed. Eng. 49, 152–159 (2002).

Roy, S. H., Cole, B. T., Gilmore, L. D., De Luca, C. J. & Nawab, S. H. Resolving signal complexities for ambulatory monitoring of motor function in Parkinson’s disease. In: 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society 4832–4835. https://doi.org/10.1109/IEMBS.2011.6091197 (2011).

Memedi, M. et al. Automatic spiral analysis for objective assessment of motor symptoms in Parkinson’s disease. Sensors (Basel) 15, 23727–23744 (2015).

Butt, A. H. et al. Biomechanical parameter assessment for classification of Parkinson’s disease on clinical scale. Int. J. Distrib. Sens. Netw. 13, 1550147717707417 (2017).

Oung, Q. W., Muthusamy, H., Basah, S. N., Lee, H. & Vijean, V. Empirical wavelet transform based features for classification of Parkinson’s disease severity. J. Med. Syst. 42, 29 (2017).

Shi, B. et al. Convolutional neural network for freezing of gait detection leveraging the continuous wavelet transform on lower extremities wearable sensors data. In: 2020 42nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 5410–5415. https://doi.org/10.1109/EMBC44109.2020.9175687 (2020).

Kim, H. B. et al. Wrist sensor-based tremor severity quantification in Parkinson’s disease using convolutional neural network. Comput. Biol. Med. 95, 140–146 (2018).

Pereira, C. R. et al. Handwritten dynamics assessment through convolutional neural networks: an application to Parkinson’s disease identification. Artif. Intell. Med. 87, 67–77 (2018).

Sigcha, L. et al. Deep learning approaches for detecting freezing of gait in Parkinson’s disease patients through on-body acceleration sensors. Sensors (Basel) 20, 1895 (2020).

Ibrahim, A., Zhou, Y., Jenkins, M. E., Trejos, A. L. & Naish, M. D. The design of a Parkinson’s tremor predictor and estimator using a hybrid convolutional-multilayer perceptron neural network. In: 2020 42nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 5996–6000. https://doi.org/10.1109/EMBC44109.2020.9176132 (2020).

Wang, E. Q. et al. Hemisphere-specific effects of subthalamic nucleus deep brain stimulation on speaking rate and articulatory accuracy of syllable repetitions in Parkinson’s disease. J. Med. Speech Lang. Pathol. 14, 323–334 (2006).

Holmes, R. J., Oates, J. M., Phyland, D. J. & Hughes, A. J. Voice characteristics in the progression of Parkinson’s disease. Int. J. Lang. Commun. Disord. 35, 407–418 (2000).

Gatsios, D. et al. Feasibility and utility of mhealth for the remote monitoring of Parkinson disease: ancillary study of the Pd_Manager randomized controlled trial. JMIR Mhealth Uhealth 8, e16414 (2020).

U.S. Food and Drug Administration. Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan (FDA, 2021).

Taylor, N. P. FDA rejects Verily filing for wrist-worn Parkinson’s clinical trial device. MedTech Dive https://www.medtechdive.com/news/fda-rejects-verily-filing-for-wrist-worn-parkinsons-clinical-trial-device-google/601724/ (2021).

Heldman, D. A. Movement Disorder Quantification Algorithm Development – Clinical Trial (2017).

Great Lakes NeuroTechnologies. Great Lakes NeuroTechnologies releases KinesiaU provider portal enabling real-time remote monitoring of patients with Parkinson’s disease. Great Lakes NeuroTechnologies https://www.glneurotech.com/blog/2021/01/08/great-lakes-neurotechnologies-releases-kinesiau-provider-portal-enabling-real-time-remote-monitoring-of-patients-with-parkinsons-disease/ (2021).

Espay, A. J. et al. A roadmap for implementation of patient-centered digital outcome measures in Parkinson’s disease obtained using mobile health technologies. Mov. Disord. 34, 657–663 (2019).

Deuschl, G., Lauk, M. & Timmer, J. Tremor classification and tremor time series analysis. Chaos 5, 48–51 (1995).

Van Someren, E. J. W. Actigraphic monitoring of movement and rest-activity rhythms in aging, Alzheimer’s disease, and Parkinson’s disease. IEEE Trans. Rehabil. Eng. 5, 394–398 (1997).

Sekine, M., Akay, M., Tamura, T., Higashi, Y. & Fujimoto, T. Fractal dynamics of body motion in patients with Parkinson’s disease. J. Neural Eng. 1, 8–15 (2004).

Giansanti, D., Macellari, V. & Maccioni, G. Telemonitoring and telerehabilitation of patients with Parkinson’s disease: health technology assessment of a novel wearable step counter. Telemed. J. E Health 14, 76–83 (2008).

Mancini, M., Zampieri, C., Carlson-Kuhta, P., Chiari, L. & Horak, F. B. Anticipatory postural adjustments prior to step initiation are hypometric in untreated Parkinson’s disease: an accelerometer-based approach. Eur. J. Neurol. 16, 1028–1034 (2009).

Mancini, M. et al. Trunk accelerometry reveals postural instability in untreated Parkinson’s disease. Parkinsonism Relat. Disord. 17, 557–562 (2011).

Morris, T. R. et al. Clinical assessment of freezing of gait in Parkinson’s disease from computer-generated animation. Gait Posture 38, 326–329 (2013).

Ginis, P. et al. Feasibility and effects of home-based smartphone-delivered automated feedback training for gait in people with Parkinson’s disease: a pilot randomized controlled trial. Parkinsonism Relat. Disord. 22, 28–34 (2016).

Cavanaugh, J. T. et al. Capturing ambulatory activity decline in Parkinson disease. J. Neurol. Phys. Ther. 36, 51–57 (2012).

Lakshminarayana, R. et al. Using a smartphone-based self-management platform to support medication adherence and clinical consultation in Parkinson’s disease. NPJ Parkinsons Dis. 3, 2 (2017).

Isaacson, S. H. et al. Effect of using a wearable device on clinical decision-making and motor symptoms in patients with Parkinson’s disease starting transdermal rotigotine patch: a pilot study. Parkinsonism Relat. Disord. 64, 132–137 (2019).

Dominey, T. et al. Introducing the Parkinson’s KinetiGraph into routine Parkinson’s disease care: a 3-year single centre experience. J. Parkinsons Dis. 10, 1827–1832 (2020).

Hadley, A. J., Riley, D. E. & Heldman, D. A. Real-world evidence for a smartwatch-based Parkinson’s motor assessment app for patients undergoing therapy changes. Digit. Biomark. 5, 206–215 (2021).

Sundgren, M., Andréasson, M., Svenningsson, P., Noori, R.-M. & Johansson, A. Does information from the Parkinson KinetiGraph™ (PKG) influence the neurologist’s treatment decisions? An observational study in routine clinical care of people with Parkinson’s disease. J. Pers. Med. 11, 519 (2021).

Salarian, A. et al. Gait assessment in Parkinson’s disease: toward an ambulatory system for long-term monitoring. IEEE Trans. Biomed. Eng. 51, 1434–1443 (2004).

Chien, S.-L. et al. The efficacy of quantitative gait analysis by the GAITRite system in evaluation of parkinsonian bradykinesia. Parkinsonism Relat. Disord. 12, 438–442 (2006).