Abstract

Live streaming has become indispensable, and live-streaming e-business requires accurate and timely sales-volume prediction to maintain a healthy supply–demand balance for companies. In practice, because various factors can significantly affect sales results, a powerful, interpretable model is crucial for accurate sales prediction. In this study, we propose SaleNet, a deep-learning model designed for sales-volume prediction. Our model achieved accurate prediction results on our private, real operating data. The mean absolute percentage error (MAPE) of our model was as low as 11.47% for a +1.5-day forecast. Even for a 1-week forecast (+6 days), the MAPE was only 19.79%, meeting actual business needs. Notably, our model demonstrated robust interpretability, as evidenced by feature-contribution results that are consistent with prevailing research findings and industry expertise. Our findings provide a theoretical foundation for predicting shopping behavior in live-broadcast e-commerce and offer valuable insights for designing live-broadcast content and optimizing the user experience.

Introduction

The rapid development of social media and the widespread adoption of smartphones have spurred a significant shift from traditional marketing to social media marketing1,2. Live e-commerce, compared with traditional business, offers advantages in terms of authenticity, visualization, entertainment, and interactivity, facilitated by the growing popularity of online platforms3. The ongoing impact of the COVID-19 pandemic has further popularized online shopping, as individuals seek to minimize outdoor activities and protect themselves4,5. Online shopping provides a valuable platform for consumers, enabling real-time interactions, reducing psychological distance (the perception of a separation between the consumer and a brand), and addressing uncertainties through live videos and immediate responses6,7,8. Advertisers can effectively showcase, test, and demonstrate products through live broadcasts, allowing customers to receive immediate feedback, observe interactions between anchors and other customers, and ultimately enhance their purchasing efficiency. The increasing prevalence of online shopping is evidenced by approximately 30% of Chinese individuals engaging in it in their spare time, spending an average of 0.5 h a day browsing products, according to recent data9,10. Various live-broadcasting platforms have emerged as bridges from customers to merchants, with TikTok being one of the leading platforms, capturing around 52.2% of customers6. TikTok leverages short videos, live broadcasts, and targeted advertising campaigns, featuring vivid content and utilizing tracking algorithms to recommend products11. As a result, video content is essential for improving sales.

As sales increase, inventory management becomes more critical12,13. Specifically, excessive inventory can lead to overstock, where goods cannot be sold in time, resulting in waste and expired goods. In contrast, insufficient inventory can affect delivery times and may require additional time to schedule goods transport from other warehouses, leading to adverse impacts such as a negative consumer experience, weakened consumption enthusiasm, and even refunds followed by purchases of competing products. Additionally, sales volumes of goods are uncertain and strongly influenced by factors such as public holidays, merchant activities, and celebrity endorsements. For instance, major shopping extravaganzas in China, such as the Double 11 Festival and the June 18 Festival, as well as celebrity promotions, can trigger temporary surges in sales volume, which may be followed by rapid demand decreases. Moreover, the post-epidemic era has seen soaring sales of drugs and medical equipment. These factors add to the challenge of accurately predicting sales volumes and preparing appropriate inventory. Therefore, fluctuations over time, human-induced factors, and external forces beyond control are important sources of variation in sales volume. However, they are often difficult to analyze directly or evaluate mathematically, which makes prediction more challenging.

Recent years have witnessed the emergence of machine learning, including deep learning14,15, which has demonstrated remarkable capabilities in tackling complex problems, such as traffic-flow prediction16, air-quality prediction17, and generative molecular design18. Deep-learning methodologies offer promising solutions for addressing non-empirical issues when high-fidelity data are available. Several pioneering models, such as the ARIMA model19 and structural-equation-model (SEM) analysis20,21, achieve accurate sales predictions based on historical sales data, indicating that data models or artificial intelligence methods can predict future sales accurately.

However, with the advancement of technology and the evolution of the market, businesses often employ more effective strategies to boost product sales, such as advertising and celebrity endorsements through live broadcasts, aiming for higher profit returns. In such circumstances, traditional methods often appear limited, as they struggle to handle and disentangle multi-dimensional time-series data simultaneously, constrained by single-channel input modes and the lack of robust methods for feature fusion. Additionally, the inherent black-box nature of deep neural networks has impeded their widespread adoption and credibility22. Despite the development and deployment of some explainable models and techniques, such as SHAP23,24, the choice of interpretability method still needs to consider the practical situation in the field, requiring a trade-off between predictive accuracy and interpretability (more details can be found in "Related work").

The dataset utilized in this study originates from our TikTok live-streaming projects and encompasses live-broadcast data, advertising data, and interactive data, totaling 35 features. To better capture the information contained in these different data sources, we designed three distinct modules for data processing and feature extraction: the fusion module (FM), interaction module I (IM-I), and interaction module II (TIM-II). Each module focuses on different information, and the modules complement one another. To enhance the interpretability of the model, we incorporate layer-wise relevance propagation (LRP)25. LRP is a widely used method for explaining predictions in a broad range of machine-learning models and has been extensively validated across various domains, including machine translation, emotion analysis, and text classification26,27. By attributing the contributions of neurons in the preceding layer to the succeeding layer, the method allocates and propagates relevance values back to the input layer while adhering to the conservation principle28. Additionally, attention mechanisms have been introduced to improve our understanding of the network and visualize the prediction results. These mechanisms provide intuitive visualizations and user-friendly explanations that enhance the interpretability of the model29. By incorporating LRP and attention mechanisms, our approach significantly improves the interpretability of the deep-learning model.

This paper presents an approach for predicting the sales volume of live broadcasting using proprietary operational data. We propose an innovative deep-learning network called SaleNet, which integrates real-time data from multiple sources, including live-broadcast data, advertising data, and interactive data, to achieve accurate prediction performance. Additionally, we enhance the interpretability of the model by incorporating the LRP algorithm, enabling a clear and separate quantification of the contribution of each feature to the final prediction result.

The rest of this paper is organized as follows. "Related work" provides a review of existing literature, presenting the theoretical foundation for the study. Based on this foundation, we put forward the theoretical support, theoretical framework, and hypotheses. Subsequently, the research methods and statistical analysis are detailed in "Material and methods". "Results and discussion" presents the results and elaborates on the managerial and theoretical implications of the findings. "Limitation and outlook" presents the conclusions with a summary of the key findings, study limitations, and future research directions.

Related work

Sales forecasting is an important business activity that can help enterprises achieve better production plans, inventory control, and marketing strategies. Over the past few decades, many scholars and business practitioners have explored various methods for predicting sales30. These forecasting approaches can be broadly categorized into statistical- and neural-network-based models. However, traditional statistical methods have limitations owing to their linearity and inability to account for uncertain factors that influence sales prediction, such as weather, promotion, and competitive markets. These methods are primarily based on historical data for forecasting. For example, the ARIMA model is a widely used time-series-analysis method that can model seasonality, trends, and cyclicality19. Currently, most research on sales volume has used structural-equation-model (SEM) analysis20,21. In addition, exponential-smoothing methods are also simple, effective time-series-analysis methods that can model trends. Single-stage SEM analysis can only capture linear relationships between constructs in the study model, which may not be sufficient to predict complex decision-making processes in the real world. Linear models only capture non-compensatory decision rules, which can oversimplify the complexity involved in consumer decision-making31, leading to unreliable conclusions32.

Machine learning (ML) has emerged as a valuable tool for enhancing the efficacy of statistical forecasting methods in the domain of continuous and regularly sampled time-series forecasting33. While numerous studies have demonstrated the effectiveness of machine learning in this context, a noticeable research gap exists in the specific domain of demand forecasting33,34. The existing body of literature lacks conclusive evidence regarding the superiority of machine-learning approaches over traditional statistical methods in the context of demand forecasting35. Some studies have indicated that using either statistical or machine-learning methods alone results in lower accuracy. In contrast, a hybrid approach, which incorporates both statistical and machine-learning features, exhibited superior accuracy and precision in forecasting, as evidenced by its performance in the M3 and M4 competitions33,34,36. Thus, the combination of methods and the exploration of hybrid approaches have emerged as promising directions for advancing forecasting accuracy and enhancing the overall value of predictions36.

In contrast, artificial neural networks, as a basic tool in deep learning, are a powerful modern technique for nonlinear modeling and have gained popularity in time-series forecasting37. Artificial neural networks can handle multivariate, nonlinear, and uncertain data and can adaptively learn patterns in the data. Most studies have indicated that deep learning has better performance than the conventional methodology38, and they have attempted to apply artificial neural networks to sales forecasting. For instance, Weigend et al.39 used the backpropagation learning algorithm to apply deep learning to sunspots and exchange-rate time-series forecasting. Ansuj et al.40 used artificial neural networks to analyze the sales behavior of a medium-sized enterprise and found it to be more accurate. These methods typically require a large amount of data and more computational resources to train and optimize the model, but they offer better forecasting accuracy in certain cases.

Deep learning, in addition to having excellent predictive ability37, has a “black-box” operation mechanism and does not require theoretical support, thus being unsuitable for hypothesis testing41. To improve the interpretability of deep-learning models, researchers have used various methods. One example is the hybrid sales-forecasting system based on clustering and decision trees, which is a typical interpretable network structure42. Another method for improving deep-learning model interpretability is the Shapley additive explanations (SHAP) package, which has been shown to be effective23,24. Common interpretability methods in deep learning include Gradients, Integrated Gradients, Guided Back Propagation, and the Contrastive Explanations Method (CEM), among others43,44. Gradient-based techniques quantify the extent to which changes in each input dimension affect predictions in the vicinity of the input. For instance, in Deep Gaze I45, the renowned Krizhevsky network46 was utilized to significantly enhance interpretability results. Integrated Gradients47 is a gradient-based attribution method that aims to explain deep-neural-network predictions by attributing them to the input features. It essentially computes a variation of the gradient of the prediction output with respect to the input features, as implemented by simpler gradient methods48,49,50. Guided Back Propagation is a variant of the deconvolution method51, used for visualizing features learned by convolutional neural networks (CNNs), and is widely applied in the image domain, such as in DeconvNet52 and ImageNet models51. The Contrastive Explanations Method (CEM) not only determines which features should minimally and sufficiently be present to yield a particular prediction but also identifies which features should minimally and necessarily be absent. This method has been applied extensively in the image domain by Luss et al.53.

However, an ideal explanation incorporates expertise from multiple domains of knowledge54. Constructing a deep-learning model suitable for a particular application is a complex task that requires consideration of many design parameters2,55,56. The corresponding interpretation algorithm also needs to consider the model's specific characteristics.

Material and methods

Scope of study and datasets preparation

The dataset utilized in this study originates from our TikTok live-streaming projects. Specifically, we employed backend recording tools to capture video and audio data from the live-streaming rooms, enabling us to derive various statistical metrics across different data types for subsequent analysis. Our primary objective is to evaluate the total transaction volume of products within specific time periods. The core of the sales data is based on transactional records within the live-streaming rooms, excluding other sources of traffic-driven transactions. During the live-streaming sessions, we recorded video data from the rooms and automatically collected multidimensional and heterogeneous time-series data, including traffic metrics, user interactions, and product purchase details. Advertising placement data primarily refers to the paid promotional activities conducted during the live broadcasts, aimed at guiding and aggregating audience attention. Here, audience engagement lasting over one minute is considered for statistical recording. Interactive data encompasses user engagement behaviors within the live-streaming rooms, providing valuable insights into the atmosphere and popularity of the broadcasts. The target audience for the live-streaming rooms comprises individuals from mainland China, spanning all provinces and cities. All recorded data covers a span of over 2 years, effectively encompassing various Chinese holidays and e-commerce event milestones, thus reflecting the characteristics of a complete sales cycle. This comprehensive temporal coverage aids in achieving improved predictive performance at corresponding time points.

The dataset used in this research consists of three parts: live-broadcast data, advertising data, and interactive data. The detailed composition of the dataset and related statistical results are presented in Table 1.

Live-broadcast data: these data contain various factors related to live broadcasting, including the user ID of the live broadcast, start and end times, cumulative number of viewers, number of comments, shares, order rate, and purchase rate. They were collected from TikTok and reflect the real-time live-broadcasting status at 1.5-day intervals.

Advertising data: these data contain averaged monitored advertising metrics, such as AD from, number of AD clicks, order rate of AD, and AD conversion number. All AD-related data were recorded at 1.5-day intervals.

Interactive data: in practice, there are often interrelationships between advertisements and indicators. For instance, merchants often allocate more resources toward advertising to attract a larger audience to participate in live broadcasts and engage in consumption behaviours. Therefore, we selected GPM, ROI, and other indicators as interaction features.

The data spans from October 2, 2021, to October 24, 2023, comprising a total of 502 records, all of which share a uniform time interval of 1.5 days. The training set, generated using the scikit-learn package57, consists of the first 80% of the chronological sequence, while the remaining 20% naturally form the test set.
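As an illustration of the chronological split described above, the following minimal Python sketch builds 502 placeholder timestamps at 1.5-day spacing and keeps the first 80% for training. The helper name `chronological_split` and the synthetic timestamps are assumptions made for illustration and are not the authors' code; with scikit-learn, calling `train_test_split` with `shuffle=False` would be one way to obtain a comparable ordered split.

```python
from datetime import datetime, timedelta


def chronological_split(records, train_fraction=0.8):
    """Split time-ordered records into a leading train part and a trailing test part."""
    cut = int(len(records) * train_fraction)
    return records[:cut], records[cut:]


# Placeholder timestamps (assumption): 502 records spaced 1.5 days apart,
# starting on the first date reported in the text.
start = datetime(2021, 10, 2)
timestamps = [start + timedelta(days=1.5 * i) for i in range(502)]

train, test = chronological_split(timestamps)
print(len(train), len(test))
```

Keeping the order intact means every test record is later than every training record, which avoids leaking future information into training.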

Model details

Fusion module (FM)

We employ one-dimensional convolutional layers to capture the dependency between the features \({\text{G}}^{\text{t}}\) (where \(\text{t}\) denotes time) in advertising data and the sales volume. The convolutional layer consists of multiple kernels of size \(\text{W}\) (here, 4). The hidden vector \({\text{h}}_{\text{k}}^{\text{t}}\) (representing the advertising data) is defined as follows:

\[{\text{h}}_{\text{k}}^{\text{t}}=\text{LeakyReLU}\left({\text{W}}_{\text{k}}\otimes {\text{G}}^{\text{t}}+{\text{b}}_{\text{k}}\right)\]

The symbol \(\otimes\) denotes the convolution operation; \({\text{W}}_{\text{k}}\) and \({\text{b}}_{\text{k}}\) are the learnable kernel weights and bias term, respectively, and \(\text{k}\) indexes the filters in the convolutional network. The \(\text{LeakyReLU}\) function is defined as

\[\text{LeakyReLU}\left(\text{x}\right)=\left\{\begin{array}{ll}\text{x},& \text{x}\ge 0\\ {\alpha }\text{x},& \text{x}<0\end{array}\right.\]

where \({\alpha }\) is a hyperparameter ranging from 0 to 1. After passing through the max-pooling layer, \({\text{h}}_{\text{k}}^{\text{t}}\) is updated to \({\text{h}}^{\text{t}}\), which, when flattened and passed through a fully connected layer, forms the updated advertising feature \({\text{g}}^{\text{t}}\) at time \(\text{t}\):

\[{\text{g}}^{\text{t}}=\text{FC}\left(\text{Flatten}\left({\text{h}}^{\text{t}}\right)\right)\]
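The convolution, LeakyReLU, and max-pooling steps of the fusion module can be sketched in plain Python as follows. This is a minimal single-filter illustration under stated assumptions: the input values, the kernel weights, \({\alpha }=0.1\), and the pooling size of 2 are hypothetical, and, as in most deep-learning toolkits, the "convolution" is computed as a sliding dot product without flipping the kernel.

```python
def leaky_relu(x, alpha=0.1):
    """LeakyReLU: identity for x >= 0, slope alpha otherwise."""
    return x if x >= 0 else alpha * x


def conv1d(signal, kernel, bias=0.0, alpha=0.1):
    """Valid 1-D convolution (sliding dot product) followed by LeakyReLU."""
    width = len(kernel)
    return [
        leaky_relu(sum(kernel[j] * signal[i + j] for j in range(width)) + bias, alpha)
        for i in range(len(signal) - width + 1)
    ]


def max_pool(values, size=2):
    """Non-overlapping max pooling; a trailing incomplete window is dropped."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


# Hypothetical advertising feature over 8 time steps and a kernel of size 4.
ad_feature = [1.0, 2.0, 0.0, -1.0, 3.0, 1.0, 0.0, 2.0]
kernel = [0.5, -0.5, 0.5, -0.5]

hidden = conv1d(ad_feature, kernel)  # one value per valid kernel position
pooled = max_pool(hidden)            # downsampled hidden vector
print(hidden, pooled)
```

In the full module, several such filters would run in parallel, and the pooled outputs would be flattened and passed through a fully connected layer.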

Interaction module (IM-I)

First, we concatenate the updated advertisement input and the live-broadcast feature input to obtain the original input features:

\[{\text{x}}^{\text{t}}=\left[{\text{g}}^{\text{t}},{\text{L}}^{\text{t}}\right]\]

We feed \({\text{x}}^{\text{t}}\) into the network to learn the interaction between different features at time \(\text{t}\) and to identify potential characteristics. Here, \({\text{L}}^{\text{t}}\) is the live-broadcast feature data. Self-attention is applied to learn the interactions between features and recognize underlying connections:

where \({\text{A}}_{\text{i}}^{\text{t}}\in {\text{R}}^{\text{m}\times \text{d}}\) is the vector encoded after self-attention; \(\text{m}\) is the current number of features, \(\text{d}\) is the dimension set after encoding, which is set to 16 here;

\(\text{W}\) is a set of learnable parameters; \(\sqrt{{\text{d}}_{\text{k}}}\) is a constant used to normalize \({\text{QK}}^{\text{T}}\), set to \({\text{d}}_{\text{k}}= \sqrt{\text{d}}\). To better capture interactions between features, we apply the Multi-head attention mechanism, which is:

where \(\text{h}\) represents the number of attention heads, set to 16 here. In addition, we utilize a feature-attention mechanism to extract feature-importance information and combine it as input to the temporal module, specifically:

This value represents the importance of each input feature to the prediction, where \({\text{b}}_{\text{w}}\) is a bias. After obtaining the feature attention weight values, we calculate the interaction vector of processed advertisement data and live broadcast room data as follows:
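The self-attention step of this module can be illustrated with a single-head, scaled dot-product sketch in plain Python. It is an assumption-laden illustration rather than the SaleNet implementation: the projection matrices are set to the identity, the three two-dimensional feature embeddings are made up, and the multi-head variant would simply repeat the same computation with separate projections per head before concatenating the results.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Hypothetical embeddings: m = 3 features, each encoded in d = 2 dimensions.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # placeholder for learned projections

encoded, weights = self_attention(x, identity, identity, identity)
print(weights[0])
```

Each row of `weights` sums to one and indicates how strongly one feature attends to the others, which is the quantity that makes the interaction pattern inspectable.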

Interaction module II (TIM-II)

We applied TCN (Temporal Convolutional Network) to extract relationships between different time dimensions. It consists of three structures: Causal Convolutions layer, Dilated Convolutions layer, and Residual Convolutions layer.

Causal convolutions layer

Given an input sequence \(\text{X}=(\widetilde{{\text{x}}^{1} },\widetilde{{\text{x}}^{2} },\cdots ,\widetilde{{\text{x}}^{\text{T}} })\) of length \(\text{T}\), where \(\widetilde{{\text{x}}^{\text{t}}}\) is the output vector of interaction module I at time \(\text{t}\), the predicted value \({\text{y}}^{\text{t}}\) for time \(\text{t}\) can only be influenced by the observations up to that time, \(\{\widetilde{{\text{x}}^{\text{i}} }\left|1\le \text{i}\le \text{t}\right.\}\).

Dilated convolutions layer

To learn the formation and evolution of sales process in a long-term temporal perspective, we employ TCN with dilated convolutions, instead of traditional RNN, which enables an exponentially large receptive field. Regarding a real-number input sequence \(\text{X}=(\widetilde{{\text{x}}^{1} },\widetilde{{\text{x}}^{2} },\cdots ,\widetilde{{\text{x}}^{\text{T}} })\in {\mathbb{R}}^{\text{L}}\) and a filter \(\text{f}:(0,\cdots ,\text{k}-1)\to {\mathbb{R}}\) with a specific kernel size \(\text{k}\), the dilated convolution operation \(\text{F}\) on element \(\text{s}\) of the input sequence can be formulated by

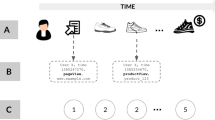

where \(\text{d}\) is the dilation factor, \(\text{k}\) is the size of the filter, and \(\otimes\) indicates the convolution operator. When \(\text{d}=1\), a dilated convolution reduces to a standard convolution. Figure 1 illustrates a TCN with dilation factor \(\text{d}=2\) and kernel size \(\text{k}=2\).
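A causal dilated convolution of this kind is commonly written as \(\text{F}(\text{s})=\sum_{\text{i}=0}^{\text{k}-1}\text{f}(\text{i})\cdot {\text{x}}_{\text{s}-\text{d}\cdot \text{i}}\), and the sketch below implements that standard form in plain Python. The scalar inputs, the filter values, and the left zero-padding are illustrative assumptions; the receptive-field helper assumes one convolution per layer.

```python
def causal_dilated_conv(x, f, d=1):
    """Compute F(s) = sum_i f[i] * x[s - d*i], treating indices before 0 as zero."""
    out = []
    for s in range(len(x)):
        total = 0.0
        for i in range(len(f)):
            idx = s - d * i
            if idx >= 0:  # left zero-padding keeps the operation causal
                total += f[i] * x[idx]
        out.append(total)
    return out


def receptive_field(k, dilations):
    """Receptive field of stacked layers with kernel size k, one convolution per layer."""
    return 1 + (k - 1) * sum(dilations)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
f = [1.0, 1.0]  # kernel size k = 2

standard = causal_dilated_conv(x, f, d=1)  # d = 1 reduces to a standard convolution
dilated = causal_dilated_conv(x, f, d=2)   # each output skips one input step
print(standard, dilated, receptive_field(2, [1, 2, 4]))
```

Doubling the dilation at each layer lets the receptive field grow exponentially with depth while the output at step \(\text{s}\) still depends only on inputs at or before \(\text{s}\).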

Residual convolutions layer

Residual convolutions have been proven effective for very deep networks. A residual block58 contains a branch, which refers to a series of transformations \(\text{F}\), and the output of this branch is added to the input \(\text{x}\) of the block:

\[\text{o}=\text{Activation}\left(\text{x}+\text{F}\left(\text{x}\right)\right)\]

We fuse the time series \(\text{o}\) output by TCN with other features. Firstly, we input them into an MLP layer to transform them into a low-dimensional vector. Then, utilizing the Flatten layer, we concatenate the features. Based on the residual network concept, we integrate the output of IM-I, represented as \(\widetilde{\text{x}}\), the batch normalization result \({\text{e}}^{\text{t}}\), and the TCN output \(\text{o}\) into \({\text{s}}_{\text{t}}=[\text{o},{\text{e}}^{\text{t}},\widetilde{{\text{x}}^{\text{t}} }]\). This effectively merges different features. We obtain the time series \(\text{s}=({\text{s}}_{1},{\text{s}}_{2},{\dots ,\text{s}}_{\text{T}})\) based on the time encoder. Subsequently, we employ an attention mechanism to capture dynamic temporal correlations between features at different time intervals:

This value represents the importance of features at different time dimensions for predicting the sales volume. After obtaining the feature attention weight values, the output temporal features are:

Finally, we use a fully connected layer to output the sales volume at future time \(\Gamma \), \(\widehat{{\text{y}}_{\text{n}+\Gamma }}\).
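To make the forecast horizon \(\Gamma \) concrete, the sketch below shows one possible way to pair an input window with a target that lies a fixed number of steps ahead. With records every 1.5 days, horizons of +1.5, +3, and +6 days correspond to 1, 2, and 4 steps. The window length of 3 and the helper name `make_samples` are assumptions for illustration; the text does not state how the training samples were constructed.

```python
def make_samples(series, window, horizon_steps):
    """Pair each input window with the value horizon_steps after the window ends."""
    samples = []
    for end in range(window, len(series) - horizon_steps + 1):
        inputs = series[end - window:end]
        target = series[end + horizon_steps - 1]
        samples.append((inputs, target))
    return samples


STEP_DAYS = 1.5
horizon_in_steps = {days: int(days / STEP_DAYS) for days in (1.5, 3.0, 6.0)}

series = list(range(10))  # placeholder sales series
one_step = make_samples(series, window=3, horizon_steps=horizon_in_steps[1.5])
four_step = make_samples(series, window=3, horizon_steps=horizon_in_steps[6.0])
print(one_step[0], four_step[0])
```

Longer horizons leave fewer usable samples and place the target further from the last observed input, which is one reason errors tend to grow with the horizon.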

Loss function

The SaleNet network was implemented and trained using Keras (version 2.2.4) and Tensorflow (version 1.15) deep-learning toolkits in Python (version 2.7.18). The training process was conducted on a CentOS Linux 7 server equipped with a Tesla V100-PCIE GPU. During the training phase, a batch size of 16 and a learning rate of 0.001 were used. The Adam optimizer was employed, and the objective loss function was formulated as follows:

where \(\widehat{y}\) and \(y\) are the predicted value and the ground-truth value, respectively.

Evaluation methods and baseline

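The performance of our model was evaluated using three common metrics: the mean absolute error (MAE), root mean squared error (RMSE), and mean absolute percentage error (MAPE). The MAE, RMSE, and MAPE are defined as follows:

\[\text{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|\widehat{{y}_{i}}-{y}_{i}\right|\]

\[\text{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}{\left(\widehat{{y}_{i}}-{y}_{i}\right)}^{2}}\]

\[\text{MAPE}=\frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{\widehat{{y}_{i}}-{y}_{i}}{{y}_{i}}\right|\]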

where \(\widehat{Y}=\left\{\widehat{{y}_{1}},\widehat{{y}_{2}},\cdots ,\widehat{{y}_{n}}\right\}\) is the set of the predicted value, and \(Y=\left\{{y}_{1},{y}_{2},\cdots ,{y}_{n}\right\}\) is the test set value.
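For reference, the three metrics can be computed with a few lines of plain Python. The sketch uses the standard textbook definitions and made-up sales values; the function names are illustrative, and MAPE assumes that no ground-truth value is zero.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (requires non-zero y_true)."""
    return 100.0 * sum(abs((p - t) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up example values, not taken from the study.
y_true = [100.0, 200.0, 400.0]
y_pred = [110.0, 180.0, 400.0]

print(mae(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
```

MAE and RMSE are expressed in sales units, whereas MAPE is scale-free, which makes it the easier metric to compare across products with different sales levels.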

To better assess model performance, we compared our model with several baseline methods for sales-volume forecasting, including the autoregressive integrated moving average (ARIMA)59, gradient-boosting regression tree (GBDT)60, and emerging deep-learning-based methods, including the long short-term memory network (LSTM)61, gated recurrent unit (GRU)62, sequence to sequence (Seq2seq)63, dual-stage attention-based recurrent neural network (DA-RNN)64, and multilevel-attention-based RNN model for time-series prediction (Geo-MAN)65. These methods use the same dataset, but the input data can be adjusted for different models.

Layer-wise relevance propagation

For a deep-learning neural network, the input vector can be represented as \(V=\left\{{v}_{1},{v}_{2},\cdots ,{v}_{n}\right\}\), and the predicted output of the network is denoted as \(f\left(V\right)\). Layer-wise relevance propagation (LRP) produces a decomposition \(R=\left\{{r}_{1},{r}_{2},\cdots ,{r}_{n}\right\}\) of the prediction on the input variables, which satisfies the conservation condition:

\[f\left(V\right)=\sum_{i=1}^{n}{r}_{i}\]

The LRP method applies a backward-propagation mechanism uniformly to all neurons in a neural network. By applying the LRP method to our SaleNet network, we obtained the relevance values between the input factors and the output, denoted as \(\left\{{r}_{0},{r}_{1},{r}_{2},{r}_{3},{\dots ,r}_{n}\right\}\).
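The backward redistribution can be illustrated on a single bias-free linear layer. The sketch below uses the epsilon rule, which is one common LRP variant; the text does not state which propagation rule was applied to SaleNet, so the rule, the weights, and the input values here are assumptions for illustration only.

```python
def lrp_epsilon(activations, weights, relevance_out, eps=1e-9):
    """Redistribute output relevance to the inputs of a bias-free linear layer.

    weights[i][j] connects input i to output j. Each input receives a share of
    R_j proportional to its contribution a_i * w_ij to the pre-activation z_j.
    """
    n_in, n_out = len(activations), len(relevance_out)
    z = [sum(activations[i] * weights[i][j] for i in range(n_in)) for j in range(n_out)]
    relevance_in = [0.0] * n_in
    for j in range(n_out):
        # The small stabilizer keeps the denominator away from zero.
        denom = z[j] + (eps if z[j] >= 0 else -eps)
        for i in range(n_in):
            relevance_in[i] += activations[i] * weights[i][j] / denom * relevance_out[j]
    return relevance_in


# Hypothetical layer: three input features feeding one output neuron.
activations = [1.0, 2.0, 0.5]
weights = [[0.4], [0.3], [-0.2]]
prediction = sum(a * w[0] for a, w in zip(activations, weights))

relevance = lrp_epsilon(activations, weights, [prediction])
print(relevance, sum(relevance), prediction)
```

The input relevances sum (up to the stabilizer) to the prediction, which is the conservation property, and the sign of each value indicates whether a feature pushed the prediction up or down.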

Results and discussion

Data collection

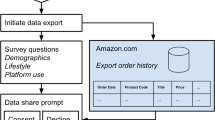

In this section, we present the live-broadcast process within our research scope, which is divided into three parts: advertising, live-broadcast, and interactive section. These three categories play distinct roles during the live streaming process, each serving different purposes. Advertising aims to showcase the entrance to the live room, attract viewers and traffic, and allow consumers to find the desired products. Specifically, they can enter the live room in two ways: by clicking on the profile picture during the live broadcast or by clicking on the blogger’s image. Customers can also directly search for the desired products by finding the target store in the online shopping mall for consumption.

Within the live room, the anchor continuously interacts with potential consumers. Through the anchor’s script, consumers understand the selling points of the products presented through graphics and text, thereby stimulating consumer spending and guiding them to complete the ordering process. The interactive process is crucial, as anchors must attract as many viewers as possible and maintain their engagement for an adequate duration, facilitating comprehension of the products' functionality and purpose, thus establishing the foundation for subsequent consumption. Moreover, the live room can directly facilitate the sale of products, providing richer feature information.

Model architecture

The SaleNet model utilizes an encoder-decoder architecture made up of three distinct modules: fusion module (FM), interaction module I (IM-I), and interaction module II (TIM-II) (Fig. 1). (a) Fusion module: We employ an independent FM to exploit the spatial dependence between the advertising data and sales volume, utilizing its multiple embedded convolutional layers. Its output, the processed advertising feature, serves as input to the next module. (b) Interaction module I: In this module, we aim to unscramble the complex relationship between advertising data and the live broadcast by employing the multi-head attention structure66 to model the interactions between different features. Considering that not all of these features contribute to the prediction of sales volume, we then introduce a traditional attention mechanism into this module to highlight key features. Combining the self-attention and attention mechanisms not only enables interactions between different features but also addresses sequential forecasting problems with long-term dependency. This combination can also improve forecasting accuracy. (c) Interaction module II: The temporal convolutional network (TCN) is used to capture the long-term and deep interactions between interactive data in the temporal dimension and the processed feature embedding. The TCN contains three substructures: causal convolution, dilated convolution, and residual connection (more detail can be found in "Material and methods").

Evaluations of SaleNet on sales prediction

This subsection presents the performance of SaleNet in predicting sales volume, as depicted in Fig. 2. The performance results of SaleNet and various baselines for predicting sales volumes at different time horizons (+1.5 days, +3 days, and +6 days) are summarized in Table 2, with the best results highlighted in bold. The findings illustrate that SaleNet outperformed alternative solutions for both short-term and long-term prediction tasks. In short-term predictions, SaleNet exhibited superior performance with the lowest MAPE and MAE, indicating more accurate predictions with minimal errors compared to actual sales volumes. Although all models showed increased error in long-term predictions (+6 days), SaleNet still demonstrated better prediction accuracy than the other baseline methods.

Specifically, compared to the traditional baseline model ARIMA, SaleNet exhibited a significant reduction in MAE, decreasing from 72.172 to 29.562 in the + 1.5 days prediction results, as shown in Table 2. Despite an increase in prediction errors for both models in long-term forecasts (+ 6 days), SaleNet showed relatively minor fluctuations in the R2 metric. This suggests that while the performance of the model decreased in long-term time series prediction tasks, SaleNet's predictions still effectively reflected changes in sales trends, demonstrating superior robustness. Moreover, SaleNet's MAPE results were consistently below 20% for both short-term and long-term predictions, indicating significant practical implications. For businesses, a lower proportion of unsold or oversold products translates to reduced supply pressure, facilitating inventory optimization and product scheduling.

Additionally, our investigation highlighted the notable superiority of hierarchical structured networks such as seq2seq, DA-RNN, ADAIN, and Geo-MAN over other nonhierarchical structured networks. This result suggests that hierarchical structures, which process each feature individually and incorporate feature interactions, may be more conducive to producing satisfactory results in time series prediction tasks.

Furthermore, to validate the generalizability of our model, we tested it on another publicly available TikTok dataset, which collected 7599 active users and 122,670 records from 20,445 shopping guide micro-videos67. To ensure a fair comparison, we removed the Visual and Acoustic information from the dataset, retaining only the textual and user information. As shown in the results, SaleNet significantly outperforms other baseline models, achieving an approximate improvement of 8.2% over the state-of-the-art MTAF (without Visual and Acoustic modalities)67. This demonstrates the superiority of our model framework. On the other hand, we also observed that incorporating more modalities appears to effectively enhance model performance, as it provides more available information to assist the model in understanding the data (Fig. 3).

Identification of important features

Beyond predicting sales volume, we aimed to perform a feature-contribution analysis. An important output of SaleNet is a ranking of the contributions of input features obtained by the LRP method. Here, the 6-day prediction result was used as the case study because it has more practical significance (Fig. 4).

The contribution assessment of variables by LRP (abbreviation definitions are presented in Table 1).
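To illustrate how an LRP-based feature ranking can be obtained, the sketch below applies the epsilon rule of layer-wise relevance propagation to a toy fully connected ReLU network with zero biases. It is not the SaleNet implementation: the weights, input values, and feature names are all hypothetical, and the real model's layers would need their own propagation rules.

```python
def lrp_epsilon_dense(x, weights, biases, eps=1e-9):
    """Epsilon-rule LRP for a dense network (ReLU hidden layers, linear output).

    weights[l][i][j] connects unit i of layer l to unit j of layer l + 1.
    Returns (output, relevance of each input feature).
    """
    # Forward pass: keep every layer's activations and pre-activations.
    activations = [list(x)]
    pre_acts = []
    for layer, (W, b) in enumerate(zip(weights, biases)):
        a = activations[-1]
        z = [sum(a[i] * W[i][j] for i in range(len(a))) + b[j] for j in range(len(b))]
        pre_acts.append(z)
        is_last = layer == len(weights) - 1
        activations.append(z if is_last else [max(0.0, v) for v in z])

    # Backward pass: start from the output and redistribute relevance in
    # proportion to each input's contribution a_i * w_ij to z_j.
    relevance = list(activations[-1])
    for W, a, z in zip(reversed(weights), reversed(activations[:-1]), reversed(pre_acts)):
        stabilised = [v + (eps if v >= 0 else -eps) for v in z]
        relevance = [
            sum(a[i] * W[i][j] / stabilised[j] * relevance[j] for j in range(len(z)))
            for i in range(len(a))
        ]
    return activations[-1], relevance


# Hypothetical toy network: 3 input features, 2 hidden units, 1 output.
names = ["feature_a", "feature_b", "feature_c"]
x = [1.0, 2.0, 0.5]
weights = [
    [[0.5, -0.2], [0.3, 0.4], [-0.6, 0.1]],  # input -> hidden
    [[1.0], [2.0]],                          # hidden -> output
]
biases = [[0.0, 0.0], [0.0]]

output, relevance = lrp_epsilon_dense(x, weights, biases)
ranking = sorted(zip(names, relevance), key=lambda pair: abs(pair[1]), reverse=True)
for name, score in ranking:
    print(f"{name}: {score:+.3f}")
```

With zero biases and a small epsilon, the input relevances sum to the network output (the conservation property). The sign of each score indicates whether the feature pushed the prediction up or down, which is how positive and negative contributions of the kind reported below can be read from such a ranking.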

Regarding the characteristics of live streaming, we observed significant effects on sales outcomes from several factors, including the user ID, the start and end times of the live stream, and the overall order information. An earlier start had a positive impact on sales; that is, the start time was negatively correlated with sales. Conversely, a later end time was advantageous, as longer live-streaming durations give consumers more opportunities and time to engage with the products, thereby increasing sales. Moreover, the overall order-fulfillment number, fulfillment rate, order rate, and order number were directly related to the final sales; these features serve as direct indicators of sales volume. Therefore, key features identified by the model, such as the user ID and the start and end times of the live stream, can help businesses devise more rational and flexible strategies, ultimately reducing costs and achieving higher profits. For instance, selecting more appropriate start and end times for live streams throughout the day could optimize product sales.

Turning to advertising data, most features did not show a clear correlation with the predictions. However, metrics such as the ad capture rate, average cost, and ad cost per click exhibited a significant positive correlation. This finding aligns with expectations, as these metrics directly influence sales. Within the interaction data, Advertising Global Product Marketing (GPM), which represents the average total amount of orders placed per 1000 viewers, also showed a strong positive correlation with the results. In summary, the observed feature rankings were consistent with existing knowledge and research, supporting the interpretability of the SaleNet framework. Moreover, given that the purpose of advertising is to attract attention and gather focus, businesses can reduce advertising costs by decreasing ad placements after the start of the live stream.
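As a small worked example of the GPM definition given above (order amount per 1000 viewers), the sketch below computes the metric from two raw counts. The function name, parameter names, and numbers are hypothetical and only illustrate the arithmetic.

```python
def gpm(order_amount: float, viewers: int) -> float:
    """Order amount generated per 1000 viewers."""
    if viewers <= 0:
        raise ValueError("viewers must be positive")
    return order_amount / viewers * 1000.0


# Invented example: orders worth 5000 currency units from 20,000 viewers.
example_gpm = gpm(order_amount=5000.0, viewers=20000)
print(example_gpm)
```

Normalizing by audience size makes sessions with very different viewer counts comparable, which is one reason such a ratio can be more informative than raw order totals.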

The number of viewers and comments constituted essential content within the interactive features. We also found clear positive correlations between sales and cumulative number of viewers, number of live comments, and number of clicks on goods. These relationships are intuitive, as a higher number of viewers signifies a larger potential consumer base, while increased comments and clicks reflect heightened consumer interest and purchasing intent. However, the number of new fans showed a negative correlation with sales, possibly owing to the tendency of individuals to become familiar with a product or seller before making a purchase. This feature reflects consumers' cautious approach. Another possible explanation is that the negative correlation arises from the promotional phase, where the advertising and promotional efforts may outpace the actual growth in sales. Hence, to increase sales revenue, businesses need to consider how to engage the audience and enhance their interest in the products.

Ablation experiment

To validate the effectiveness of each module and assess the model’s robustness, we conducted a comprehensive series of ablation studies within the integrated SaleNet framework. Through systematic removal and replacement of individual modules, we rigorously evaluated their impact on the model’s performance. Remarkably, our findings consistently demonstrate the substantial contributions of each module in enhancing the integrated model’s overall predictive ability, as shown in Fig. 5.

These results validate the predictive capability and robustness of our algorithm across varying time intervals and underscore its practicality and stability in real-world scenarios. The performance gains associated with specific modules confirm the essential role these modules play within the integrated SaleNet framework, and examining the components individually revealed synergistic effects from their integration. Overall, the ablation study supports the efficacy and reliability of SaleNet and its suitability for practical applications and real-world deployment.
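The ablation procedure, removing one component at a time and re-measuring the error, can be sketched as follows. This is an illustrative toy only: the additive "components", their coefficients, and the data are invented stand-ins for SaleNet's actual modules, which are not reproduced here.

```python
def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical components: each maps a feature row to an additive contribution.
COMPONENTS = {
    "viewers": lambda row: 0.02 * row["viewers"],
    "ad_spend": lambda row: 0.5 * row["ad_spend"],
    "duration": lambda row: 10.0 * row["hours"],
}


def predict(row, enabled):
    """Sum the contributions of the enabled components only."""
    return sum(fn(row) for name, fn in COMPONENTS.items() if name in enabled)


def ablation(rows, targets):
    """Return the MAPE of the full model and of each one-component-removed variant."""
    all_names = set(COMPONENTS)
    results = {"full": mape(targets, [predict(r, all_names) for r in rows])}
    for name in COMPONENTS:
        kept = all_names - {name}
        results["without " + name] = mape(targets, [predict(r, kept) for r in rows])
    return results


# Invented data (not from the paper).
rows = [
    {"viewers": 1000, "ad_spend": 40.0, "hours": 2.0},
    {"viewers": 5000, "ad_spend": 100.0, "hours": 4.0},
]
targets = [62.0, 185.0]

results = ablation(rows, targets)
for variant, error in results.items():
    print(f"{variant}: MAPE={error:.2f}%")
```

A component is judged useful when its removal raises the error relative to the full model; in a real study each variant would be retrained and evaluated on held-out data rather than scored with fixed coefficients.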

Limitation and outlook

Several limitations remain in this study. First, the reliance on limited data for training and testing may not fully capture the complexity and variability of real-world scenarios; in particular, we did not differentiate between products or regions. Acquiring high-quality, comprehensive real-life datasets is challenging, as it requires extensive fieldwork, prolonged data collection periods, and consideration of various factors. Second, further and broader validation of SaleNet's practical application is still needed, and the interpretability of the model is a crucial first step toward establishing trust and confidence. Consequently, the recommendations of this study are:

1. Increase the richness and diversity of available training data sources to facilitate the creation of comprehensive datasets for model development, enhancing the model's generalization ability to address various contexts.

2. Conduct further research to enhance the predictive and generalization capabilities of the model under different categories of products and regional conditions.

3. Expect the current interpretability results to serve as important foundational evidence for future AI-assisted customization of broader sales strategies and scheme planning.

Conclusions

In this paper, we introduced a novel method for accurately predicting sales volumes. Through experimental analysis, we demonstrated that our proposed model outperformed baseline models such as LSTM, GBRT, Seq2Seq, and DA-RNN in terms of both fitting and prediction performance. Our approach could therefore significantly enhance forecasting accuracy, enabling managers to make informed decisions regarding product ordering. Maintaining a balance between supply and demand is crucial for retailers, and accurate sales-volume prediction is indispensable for achieving commercial success. We also employed the LRP method to evaluate the contributions of input parameters to the prediction results. The interpretability of our model is noteworthy because the feature-importance results align closely with current research and common sense. The findings presented in this study contribute a robust and accurate solution to the field of sales-volume prediction. The demonstrated performance of our proposed model, coupled with its interpretability, enhances its practical applicability and facilitates decision-making in various commercial contexts. We also anticipate that interpretable deep-learning algorithms will play a pivotal role in the field of sales forecasting, contributing to the sustainable development of both humanity and society.

Data availability

All datasets supporting this study are available at: https://github.com/lore-ys/livegmv.

References

Chen, R., Cao, L. & Jan, N. Risk prediction algorithm of green agriculture industry direct marketing based on improved membership function. Math. Probl. Eng. 1–9, 2022. https://doi.org/10.1155/2022/7418089 (2022).

Chen, T. et al. Online sales prediction via trend alignment-based multitask recurrent neural networks. Knowl. Inf. Syst. 62, 2139–2167. https://doi.org/10.1007/s10115-019-01404-8 (2020).

Zhang, X. M., Chen, H. R. & Liu, Z. Operation strategy in an E-commerce platform supply chain: Whether and how to introduce live streaming services?. Int. Trans. Oper. Res. https://doi.org/10.1111/itor.13186 (2022).

Xu, Y. J., Jiang, W. Q., Li, Y. & Guo, J. The influences of live streaming affordance in cross-border E-commerce platforms: An information transparency perspective. J. Glob. Inf. Manag. https://doi.org/10.4018/JGIM.20220301.oa3 (2022).

Lixin, X., Hongzhen, L., Na, H. & Jing, L. Risk analysis of College Students e-commerce live broadcast based on data in survey. J. Phys. Conf. Ser. UK 1774, 012010. https://doi.org/10.1088/1742-6596/1774/1/012010 (2021).

Wang, L., Li, X., Zhu, H. Y. & Zhao, Y. Influencing factors of livestream selling of fresh food based on a push-pull model: A two-stage approach combining structural equation modeling (SEM) and artificial neural network (ANN). Expert Syst. Appl. https://doi.org/10.1016/j.eswa.2022.118799 (2023).

Liu, X. L., Zhang, L. & Chen, Q. The effects of tourism e-commerce live streaming features on consumer purchase intention: The mediating roles of flow experience and trust. Front. Psychol. https://doi.org/10.3389/fpsyg.2022.995129 (2022).

Zhou, M. et al. Characterizing Chinese consumers’ intention to use live e-commerce shopping. Technol. Soc. https://doi.org/10.1016/j.techsoc.2021.101767 (2021).

Zheng, H. Y. & Ma, W. L. Click it and buy happiness: Does online shopping improve subjective well-being of rural residents in China?. Appl. Econ. 53, 4192–4206. https://doi.org/10.1080/00036846.2021.1897513 (2021).

Wang, F., Wang, M. F. & Yuan, S. C. Spatial diffusion of E-commerce in China’s counties: Based on the perspective of regional inequality. Land. https://doi.org/10.3390/land10111141 (2021).

Yu, X. Y., Li, Y. J., Zhu, K. X., Wang, W. H. & Wen, W. Strong displayed passion and preparedness of broadcaster in live streaming e-commerce increases consumers’ neural engagement. Front. Psychol. https://doi.org/10.3389/fpsyg.2022.674011 (2022).

Tsoumakas, G. A survey of machine learning techniques for food sales prediction. Artif. Intell. Rev. 52, 441–447. https://doi.org/10.1007/s10462-018-9637-z (2019).

Fries, M. & Ludwig, T. 'Why are the sales forecasts so low?' Socio-technical challenges of using machine learning for forecasting sales in a bakery. In Computer Supported Cooperative Work-the Journal of Collaborative Computing and Work Practices. https://doi.org/10.1007/s10606-022-09458-z.

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444. https://doi.org/10.1038/nature14539 (2015).

Jiménez-Luna, J., Grisoni, F. & Schneider, G. Drug discovery with explainable artificial intelligence. Nat. Mach. Intell. 2, 573–584. https://doi.org/10.1038/s42256-020-00236-4 (2020).

Wang, H. Q., Zhang, R. Q., Cheng, X. & Yang, L. Q. Hierarchical traffic flow prediction based on spatial-temporal graph convolutional network. IEEE Trans. Intell. Transport. Syst. 23, 16137–16147. https://doi.org/10.1109/tits.2022.3148105 (2022).

Du, W. J. et al. Deciphering urban traffic impacts on air quality by deep learning and emission inventory. J. Environ. Sci. 124, 745–757. https://doi.org/10.1016/j.jes.2021.12.035 (2023).

Merz, K. M., De Fabritiis, G. & Wei, G. W. Generative models for molecular design. J. Chem. Inf. Model. 60, 5635–5636. https://doi.org/10.1021/acs.jcim.0c01388 (2020).

Chu, C.-W. & Zhang, G. P. A comparative study of linear and nonlinear models for aggregate retail sales forecasting. Int. J. Prod. Econ. 86, 217–231 (2003).

Wang, L., Li, X., Zhu, H. & Zhao, Y. Influencing factors of livestream selling of fresh food based on a push-pull model: A two-stage approach combining structural equation modeling (SEM) and artificial neural network (ANN). Expert Syst. Appl. 212, 118799 (2023).

Wang, Z., Li, J. & Chen, P. Factors influencing Chinese flower and seedling family farms’ intention to use live streaming as a sustainable marketing method: An application of extended theory of planned behavior. Environ. Dev. Sustain. 1–24 (2022).

Arora, S. Opening the black box of deep learning: Some lessons and take-aways. ACM SIGMETRICS Perform. Eval. Rev. (USA) 49, 1–1. https://doi.org/10.1145/3543516.3453910 (2022).

Bhattacharya, A. Applied Machine Learning Explainability Techniques: Make ML Models Explainable and Trustworthy for Practical Applications using LIME, SHAP, and More (Packt Publishing Ltd, 2022).

Chen, J., Koju, W., Xu, S. & Liu, Z. 135–138 (IEEE).

Bach, S. et al. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS One. https://doi.org/10.1371/journal.pone.0130140 (2015).

Arras, L., Horn, F., Montavon, G., Muller, K. R. & Samek, W. “What is relevant in a text document?”: An interpretable machine learning approach. PLoS One. https://doi.org/10.1371/journal.pone.0181142 (2017).

Lin, F. Z., Gao, C. Y. & Yamada, K. D. An effective convolutional neural network for visualized understanding transboundary air pollution based on Himawari-8 Satellite Images. IEEE Geosci. Remote Sens. Lett. https://doi.org/10.1109/lgrs.2021.3102939 (2022).

Lapuschkin, S. et al. Unmasking Clever Hans predictors and assessing what machines really learn. Nat. Commun. https://doi.org/10.1038/s41467-019-08987-4 (2019).

Ross, J. et al. Large-scale chemical language representations capture molecular structure and properties. Nat. Mach. Intell. 4, 1256–1264. https://doi.org/10.1038/s42256-022-00580-7 (2022).

Cheriyan, S., Ibrahim, S., Mohanan, S. & Treesa, S. 53–58 (IEEE).

Calzetta, E. Chaos, decoherence and quantum cosmology. Class. Quantum Gravity. 29, 143001 (2012).

Hew, T.-S., Leong, L.-Y., Ooi, K.-B. & Chong, A.Y.-L. Predicting drivers of mobile entertainment adoption: A two-stage SEM-artificial-neural-network analysis. J. Comput. Inf. Syst. 56, 352–370 (2016).

Makridakis, S., Spiliotis, E. & Assimakopoulos, V. The M4 competition: Results, findings, conclusion and way forward. Int. J. Forecast. 34, 802–808. https://doi.org/10.1016/j.ijforecast.2018.06.001 (2018).

Makridakis, S., Spiliotis, E. & Assimakopoulos, V. The M4 competition: 100,000 time series and 61 forecasting methods. Int. J. Forecast. 36, 54–74. https://doi.org/10.1016/j.ijforecast.2019.04.014 (2020).

Spiliotis, E., Makridakis, S., Semenoglou, A.-A. & Assimakopoulos, V. Comparison of statistical and machine learning methods for daily SKU demand forecasting. Oper. Res. 22, 3037–3061. https://doi.org/10.1007/s12351-020-00605-2 (2020).

Hernandez Montoya, A. R., Makridakis, S., Spiliotis, E. & Assimakopoulos, V. Statistical and machine learning forecasting methods: Concerns and ways forward. PLoS One https://doi.org/10.1371/journal.pone.0194889 (2018).

Kurani, A., Doshi, P., Vakharia, A. & Shah, M. A comprehensive comparative study of artificial neural network (ANN) and support vector machines (SVM) on stock forecasting. Ann. Data Sci. 10, 183–208. https://doi.org/10.1007/s40745-021-00344-x (2021).

Lachtermacher, G. & Fuller, J. D. Back propagation in time-series forecasting. J. Forecast. 14, 381–393 (1995).

Weigend, A., Rumelhart, D. & Huberman, B. Generalization by weight-elimination with application to forecasting. Adv. Neural Inf. Process. Syst. 3 (1990).

Ansuj, A. P., Camargo, M. E., Radharamanan, R. & Petry, D. G. Sales forecasting using time series and neural networks. Comput. Ind. Eng. 31, 421–424 (1996).

Olden, J. D. & Jackson, D. A. Illuminating the “black box”: A randomization approach for understanding variable contributions in artificial neural networks. Ecol. Model. 154, 135–150 (2002).

Thomassey, S. & Fiordaliso, A. A hybrid sales forecasting system based on clustering and decision trees. Decis. Support Syst. 42, 408–421 (2006).

Linardatos, P., Papastefanopoulos, V. & Kotsiantis, S. Explainable ai: A review of machine learning interpretability methods. Entropy 23, 18 (2020).

Hassija, V. et al. Interpreting black-box models: A review on explainable artificial intelligence. Cogn. Comput. 16, 45–74 (2024).

Kümmerer, M., Theis, L. & Bethge, M. Deep gaze i: Boosting saliency prediction with feature maps trained on imagenet. arXiv preprint arXiv:1411.1045 (2014).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25 (2012).

Sundararajan, M., Taly, A. & Yan, Q. 3319–3328 (PMLR).

Roth, A. E. The Shapley Value: Essays in Honor of Lloyd S. Shapley (Cambridge University Press, 1988).

Shrikumar, A., Greenside, P. & Kundaje, A. 3145–3153 (PMLR).

Mudrakarta, P. K., Taly, A., Sundararajan, M. & Dhamdhere, K. Did the model understand the question? arXiv preprint arXiv:1805.05492 (2018).

Zeiler, M. D. & Fergus, R. 818–833 (Springer).

Zeiler, M. D., Taylor, G. W. & Fergus, R. 2018–2025 (IEEE).

Luss, R., Chen, P.-Y., Dhurandhar, A., Sattigeri, P. & Shanmugam, K. (Google Patents, 2022).

Frasca, M., La Torre, D., Pravettoni, G. & Cutica, I. Explainable and interpretable artificial intelligence in medicine: A systematic bibliometric review. Discover Artif. Intell. 4, 15 (2024).

Chen, F. L. & Ou, T. Y. Sales forecasting system based on Gray extreme learning machine with Taguchi method in retail industry. Expert Syst. Appl. 38, 1336–1345. https://doi.org/10.1016/j.eswa.2010.07.014 (2011).

Cantón Croda, R. M., Gibaja Romero, D. N. E. & Caballero Morales, S. O. Sales prediction through neural networks for a small dataset (2019).

Hao, J. & Ho, T. K. Machine learning made easy: A review of scikit-learn package in python programming language. J. Educ. Behav. Stat. 44, 348–361 (2019).

He, K., Zhang, X., Ren, S. & Sun, J. 770–778.

Box, G. E. P. & Pierce, D. A. Distribution of residual autocorrelations in autoregressive-integrated moving average time series models. J. Am. Stat. Assoc. 65, 1509–1526 (1970).

Ayyadevara, V. K. & Ayyadevara, V. K. Gradient boosting machine. In Pro machine Learning Algorithms: A Hands-on Approach to Implementing Algorithms in Python and R, 117–134 (2018).

Graves, A. & Graves, A. Long short-term memory. In Supervised Sequence Labelling with Recurrent Neural Networks, 37–45 (2012).

Cho, K. et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078 (2014).

Sutskever, I., Vinyals, O. & Le, Q. V. Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 27 (2014).

Qin, Y. et al. A dual-stage attention-based recurrent neural network for time series prediction. arXiv preprint arXiv:1704.02971 (2017).

Liang, Y., Ke, S., Zhang, J., Yi, X. & Zheng, Y. 3428–3434.

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017).

Ou, N. et al. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 4543–4547 (IEEE).

Acknowledgements

We thank LetPub (www.letpub.com) for its linguistic assistance during the preparation of this manuscript.

Contributions

L.J.W. wrote the main manuscript text. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, L., Zhang, X. Livestream sales prediction based on an interpretable deep-learning model. Sci Rep 14, 20594 (2024). https://doi.org/10.1038/s41598-024-71379-2

DOI: https://doi.org/10.1038/s41598-024-71379-2
