Abstract

To market successfully to automotive parts customers in the Industrial Internet era, parts agents need to perform effective customer analysis and management. Dynamic customer segmentation is an effective analytical tool that helps parts agents identify different customer groups. The RFM model and time series clustering algorithms are commonly used analytical methods in dynamic customer segmentation. The original RFM model suffers from randomness in the R index and ignores customers’ perceived value. In most existing studies on dynamic customer segmentation, time series clustering techniques focus largely on univariate clustering, with less research on multivariate clustering. To solve these problems, this paper proposes a dynamic customer segmentation approach that combines LRFMS and multivariate time series clustering. First, the method represents each customer’s behavior as a time series of the Length, Recency, Frequency, Monetary and Satisfaction variables. Then, we apply a multi-dimensional time series clustering algorithm based on three distance measures, DTW-D, SBD, and CID, to carry out customer segmentation. Finally, an empirical study and comparative analyses are conducted using customer transaction data of parts agents to verify the effectiveness of the approach. Additionally, a detailed analysis of different customer groups is made, and corresponding marketing suggestions are provided.

Introduction

Customer segmentation has become a research hotspot in customer relationship management across industries. It helps companies manage customer relationships and improve their services, and it draws on techniques such as statistical analysis, machine learning, pattern mining, and deep learning1,2,3. Many scholars have noted that segmentation results are not static, which is attributed to changes in time and space factors4. As customer information accumulates and data analysis technology develops, research on customer segmentation is no longer satisfied with validity at a single point in time5 and focuses more on the behavioral trends of customers6,7. Research on dynamic customer segmentation is therefore significant.

The Recency, Frequency, and Monetary (RFM) model and time series clustering are commonly used analytical methods in dynamic customer segmentation. Their combination can provide accurate customer value analysis and better meet customer needs. However, both methods have problems that need to be solved. The traditional RFM model only considers the company’s perceived value, neglecting the perceived value from the customer’s perspective. In addition, the R attribute in the RFM model has significant randomness. For dynamic customer segmentation, most existing research applies univariate time series clustering, although there is increasing interest in multivariate time series (MTS) clustering.

To solve these problems, this study proposes a dynamic customer segmentation approach that combines the Length, Recency, Frequency, Monetary and Satisfaction (LRFMS) model with MTS clustering. The method is called DCSLM (Dynamic Customer Segmentation by combining LRFMS and MTS). In the DCSLM, LRFMS attributes are extracted from customers’ transaction data. Then, we use an MTS clustering approach that incorporates dynamic time warping based on multiple dimensions (DTW-D)8,9, shape-based distance (SBD), and complexity-invariant dissimilarity (CID) to carry out customer segmentation. When only the overall matching of MTS is considered, all variables are processed using DTW-D to generate a fuzzy membership matrix. When both the overall matching of MTS and the contribution of each independent dimension are considered, two composite fuzzy membership matrices are generated, one based on DTW-D and SBD and one based on DTW-D and CID. For each of the three fuzzy membership matrices, clustering results are obtained by applying spectral clustering (SP), affinity propagation (AP), and k-medoids clustering algorithms. The best-quality clustering results are selected using cluster validity indices.

The rest of the paper is organized as follows. In "Related work" section, we provide a thorough description of related work. In "The proposed method" section, we describe the DCSLM. In "Empirical study" section, we verify the validity of the DCSLM using customer transaction data from parts agents. The results are discussed in "Analysis and comparisons of the DCSLM" section, and "Conclusions" section concludes the paper and discusses future research directions.

Related work

Dynamic customer segmentation is a customer behavior analysis method, which combines customer behavioral characteristics and attributes to divide customers into different groups, helping enterprises better understand customer needs10,11,12. Table 1 summarizes the research on customer segmentation in recent years.

Most scholars apply RFM to capture the dynamic behavior of customers to improve the effectiveness of dynamic customer segmentation. Syaputra et al.4, Abbasimehr et al.5,6,10,13,14, Sivaguru et al.15, Liu et al.16, and Yoseph et al.17 have used RFM attributes and time series clustering to achieve dynamic customer segmentation. In addition to the original RFM, various modifications of the RFM model are used as segmentation variables18. The RFM model is modified by redefining one or more of its variables, by adding new variables, and sometimes by excluding variables18. In the traditional retail industry, Huang et al.19 modified the RFM model by adding a social relations parameter C, providing more precise customer segmentation. Smaili et al.20 proposed an RFM-D model, which adds the diversity D of products purchased by a given customer, improving customer analysis and response prediction. Kao et al.21, Wei et al.22 and Husnah et al.23 added a time-length dimension to the RFM model to improve the accuracy of customer segmentation. Peker et al.24 proposed an LRFMP model that adds length (L) and periodicity (P) to the RFM model. The model performs well in classifying customers for the grocery retail industry and significantly improves the accuracy of customer segmentation. Although the above studies improve the effectiveness of customer segmentation, the R index still exhibits large randomness. In addition, previous research on RFM considers only the firm’s perceived value and ignores the customer’s perceived value.

Clustering is most commonly used in RFM-based dynamic customer segmentation25. The clustering algorithms act as tools to deal with RFM attributes to achieve the customer segmentation objective26. Most of the studies in the literature have focused on two major properties of cluster analysis especially when dealing with time series data, including clustering algorithms and the similarity measure which is used to calculate the distance between the time series27. Some common clustering algorithms are k-means7,10,19,20,21,24,28,29, k-medoids10, fuzzy c-means (FCM)10,15,17,23, DBSCAN16,29, Spectral clustering (SP)5, AP29, and hierarchical clustering (HC)5,6,7,13. The second important property of the clustering methods is the similarity measure. Some common measures are SBD5, CID5,6,14, dynamic time warping (DTW)4,5,6,13,14, temporal correlation coefficient (CORT)5,6,13,14, correlation-based measure (COR)13, discrete wavelet transform (DWT)14, and Euclidean distance (ED)6,13,14,28. In the segmentation of pharmaceutical users in Indonesia, Syaputra et al.4 modeled customer behavior as a time series of RFM and used DTW (CBRD) to achieve effective customer segmentation for the return product customers. Abbasimehr et al.13 proposed a methodology for customer behavior analysis using time series clustering (CATC). The methodology modeled customer behavior as a time series of RFM, and used time series clustering including the similarity measure (i.e., ED, COR, DTW, and CORT) and HC, which validated its effectiveness and practicality by analyzing customer data from a bank. Zhou et al.28 proposed a time series clustering algorithm based on smooth sequence ED measurement and k-means (TCBS), which analyzed user behavior patterns in online games, and effectively improved the accuracy and practicality of dynamic customer segmentation. 
Considering the time dimension of individual customer behavior, Abbasimehr et al.5 proposed an analytical framework based on RFM model and time series clustering techniques (AFRC) for dynamic segmentation. This framework represented each customer behavior as a time series of RFM and used time series clustering algorithms for customer segmentation, which combined state-of-the-art similarity measures and some powerful time-series clustering algorithms to obtain the best quality clustering results, improving the refinement management and decision-making efficiency of enterprises. All the above methods effectively improve dynamic customer segmentation. However, most research has been developed primarily for univariate time series, and there has been a lack of effective solutions for dealing with MTS data.

In summary, two challenges remain: overcoming the R index randomness and the neglect of customer perceived value in the traditional RFM model, and applying MTS clustering to dynamic customer segmentation. In this paper, we introduce the DCSLM method and validate its effectiveness using customer transaction data from parts agents.

The proposed method

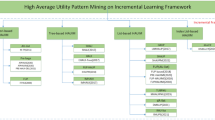

In this section, we introduce the DCSLM, shown in Fig. 1. It consists of four main components: (1) representing customer behavior as a time series of LRFMS; (2) distance measures; (3) feature weighting and fuzzy membership matrix; and (4) clustering algorithms.

Representing customer behavior as time series of LRFMS

The RFM model is a crucial tool for measuring customer value and has found wide application in customer segmentation across various fields, including sales, marketing, and operations management15. However, the R index of the RFM model has large randomness. For example, the R index of a new customer may equal that of a loyal customer, resulting in an incorrect assessment of the customer’s value. Additionally, the three indexes of the original RFM model are selected from the firm’s perspective of perceived value. With the rapid development of the Internet, customers have become the main source of a company’s profit and growth. Therefore, customer satisfaction has become a critical factor for companies in winning market competition.

To solve the above problem, we propose the LRFMS model. This model includes five indicators: Length (\(L_{i}\)), Recency (\(R_i^{\prime }\)), Frequency (\(F_{i}\)), Monetary (\(M_{i}\)) and Satisfaction (\(S_{i}\)). \(L_{i}\) is the time elapsed between the first and last transaction in the ith interval, reflecting the customer’s loyalty; \(R_i^{\prime }\) denotes the average time elapsed between the end of the ith interval and the time of this customer’s last p transactions in that interval; \(F_{i}\) represents the number of transactions during the interval; \(M_{i}\) represents the accumulated amount of customer transactions during the interval; and \(S_{i}\) represents customer satisfaction in that interval. Each indicator of the LRFMS is defined as follows:

\[L_i = last_{i1} - first_i \tag{1}\]

where \(last_{i1}\) represents the time of the customer’s last transaction in the ith interval and \(first_i\) represents the time of the customer’s first transaction in the ith interval.

\[R_i^{\prime } = \frac{1}{p} \sum _{l=1}^{p} \left( end(i) - last_{il} \right) \tag{2}\]

where end(i) represents the end time of the ith interval, and \(last_{il}\) denotes the time of the customer’s lth most recent transaction during the ith interval. p represents the number of transactions closest to the end of the ith interval.

\[F_i = count(data) \tag{3}\]

where count(data) represents the number of transactions during the ith interval.

\[M_i = sum(price) \tag{4}\]

where sum(price) is the accumulated amount of customer transactions during the interval.

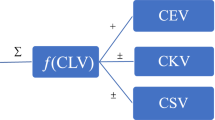

\[S_i = \alpha \, CQ_i + \beta \, CD_i + \gamma \, CS_i \tag{5}\]

where \(CQ_{i}\) represents the customer’s satisfaction rating of product quality during the ith interval; \(CD_{i}\) represents the customer’s satisfaction rating of the timeliness of product delivery during the ith interval; \(CS_{i}\) represents the customer’s satisfaction rating of after-sales service during the ith interval; \(\alpha\), \(\beta\), and \(\gamma\) are the rating weights of customer satisfaction.
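The five indicators can be computed directly from one customer's transactions in one interval. The following is a minimal sketch under stated assumptions, not the authors' implementation: the record keys (`time`, `price`, `cq`, `cd`, `cs`), the function name, the use of days as the time unit, and the averaging of ratings over the interval are all hypothetical choices made for illustration.

```python
from datetime import datetime

SECONDS_PER_DAY = 86400.0


def lrfms_for_interval(transactions, interval_end, p=3, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Return (L, R', F, M, S) for one customer in one interval, times in days.

    `transactions` is a non-empty list of dicts with hypothetical keys:
    'time' (datetime), 'price' (float), and ratings 'cq', 'cd', 'cs'.
    """
    times = sorted(t["time"] for t in transactions)
    # L: time elapsed between the first and last transaction in the interval
    length = (times[-1] - times[0]).total_seconds() / SECONDS_PER_DAY
    # R': mean gap between the interval end and the last p transactions
    # (fewer than p transactions: use all that are available)
    recent = times[-p:]
    recency = sum((interval_end - t).total_seconds() for t in recent) / (
        SECONDS_PER_DAY * len(recent)
    )
    # F and M: transaction count and accumulated amount
    frequency = len(transactions)
    monetary = sum(t["price"] for t in transactions)
    # S: weighted sum of the three ratings; averaging each rating over the
    # interval is an assumption of this sketch
    n = len(transactions)
    alpha, beta, gamma = weights
    satisfaction = (
        alpha * sum(t["cq"] for t in transactions) / n
        + beta * sum(t["cd"] for t in transactions) / n
        + gamma * sum(t["cs"] for t in transactions) / n
    )
    return length, recency, frequency, monetary, satisfaction
```

Averaging R over the last p transactions, rather than using only the single most recent one, is what dampens the randomness of the original R index: one late purchase no longer determines the value on its own.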

Distance measures

In this section, we introduce three state-of-the-art similarity measures, including DTW-D, CID, and SBD. They are used to calculate the distance between the time series.

Dynamic time warping

DTW, as a similarity measure, can find the optimal matching path between two time series of arbitrary length by adjusting the correspondence between time points, which is robust to noise and can measure the similarity of time series more effectively30. Suppose there are two univariate time series \(\tilde{X}=\left\{ \tilde{x}_1,\ldots ,\tilde{x}_i,\ldots ,\tilde{x}_t \right\}\) and \(\tilde{Y}=\left\{ \tilde{y}_1,\ldots ,\tilde{y}_j,\ldots ,\tilde{y}_t \right\}\), where \(\tilde{x}_i\) is a real number at the ith time point of \(\tilde{X}\) and \(\tilde{y}_j\) is a real number at the jth time point of \(\tilde{Y}\). t is the length of a univariate time series. An alignment matrix of size \(t\times t\) is constructed from \(\tilde{X}\) and \(\tilde{Y}\). Element (i,j) in the alignment matrix represents the alignment of the ith element of \(\tilde{X}\) with the jth element of \(\tilde{Y}\)31. The optimal alignment can be regarded as a warping path \(M^{'}\) in the alignment matrix31. DTW can be defined as follows:

\[DTW(\tilde{X}, \tilde{Y}) = \min _{M^{\prime } \in M^{\prime \prime }} \sum _{\grave{s}=1}^{\grave{n}} D S\left( M_{\grave{s}}^{\prime }\right) \tag{6}\]

where \(M^{\prime }=\left\{ M_1^{\prime }, \ldots , M_{\grave{s}}^{\prime }, \ldots , M_{\grave{n}}^{\prime }\right\}\) represents a warping path and \(\grave{n}\) is the total number of elements in the path. \(M_{\grave{s}}^{\prime }=(i, j) \in M^{\prime }\) indicates the coordinate of the \(\grave{s}\)th point on the path, and \(D S\left( M_{\grave{s}}^{\prime }\right) =D S(i, j)\) represents the distance between \(\tilde{x}_i\) and \(\tilde{y}_j\). There are many paths like \(M^{\prime }\), and together they form the path space \(M^{\prime \prime }\). The goal is to obtain the optimal warping path, that is, the path that minimizes the overall distance between \(\tilde{X}\) and \(\tilde{Y}\)10.

DTW uses dynamic programming to find the minimal warping path on an element-wise cost matrix given a cost function \(\left\| \tilde{x}_i-\tilde{y}_j\right\|\)32. To limit the warping path and prevent overfitting, we employ constraints. Specifically, we utilize the asymmetric slope constraint defined by the recurrence:

where D(i, j) is the cumulative minimum cost at the ith and jth element, and DM(i, j) is the minimum of the three elements’ cumulative distance values. The last element D(t, t) of D is DTW distance between the time series \(\tilde{X}\) and \(\tilde{Y}\).
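The dynamic-programming idea behind DTW can be illustrated with a short sketch. Note that this example uses the classic symmetric step pattern (each cell extends the cheapest of its left, lower, and diagonal neighbors), which is a swapped-in simplification and not the asymmetric slope constraint used in this study. The function name `dtw_distance` is hypothetical.

```python
def dtw_distance(x, y):
    """DTW distance between two univariate series with cost |x_i - y_j|.

    Uses the classic symmetric step pattern, not the asymmetric slope
    constraint described in the text.
    """
    n, m = len(x), len(y)
    inf = float("inf")
    # D[i][j] holds the cumulative minimum cost of aligning x[:i] with y[:j]
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(x[i - 1] - y[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    # The last element of D is the DTW distance
    return D[n][m]
```

For instance, `dtw_distance([1, 2, 3], [1, 2, 2, 3])` is zero because the repeated value can be absorbed by warping, whereas a point-by-point comparison of the two series is not even defined.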

DTW is commonly used to measure the similarity between two univariate time series objects. However, it is not suitable for calculating the similarity between two MTS objects. In this study, we use DTW-D, which takes into account the overall matching of MTS33,34. A z-dimensional MTS with a length of t is represented as follows.

where \(\textrm{x}_i\) represents a set of elements at the ith time point31. Suppose there are two MTS X and Y. DTW-D returns an optimal alignment utilizing Eq. (11).

where \(\textrm{dt}\left( \textrm{x}_i, \textrm{y}_j\right)\) represents the multivariate distance between \(\textrm{x}_i\) and \(\textrm{y}_j\), \(\textrm{x}_i\) is the ith time point of X, and \(\textrm{y}_j\) is the jth time point of Y.
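The difference between univariate DTW and DTW-D is only the point-wise cost: all dimensions are warped together along a single path. The sketch below assumes that the multivariate distance dt is the squared Euclidean distance between two time points, which is a common choice for dependent multivariate DTW but is an assumption here, as is the symmetric step pattern. The name `dtw_d` is hypothetical.

```python
def dtw_d(X, Y):
    """Dependent multivariate DTW: one warping path shared by all dimensions.

    X and Y are lists of time points; each time point is a sequence of z
    values. The point distance (squared Euclidean) is an assumption.
    """
    def dt(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))

    n, m = len(X), len(Y)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
            D[i][j] = dt(X[i - 1], Y[j - 1]) + step
    return D[n][m]
```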

CID

CID, proposed by Batista et al.30, uses the difference in complexity between two time series as a correction factor for an existing distance measure5,30. The general CID measure is defined as:

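\[CID(\tilde{X}, \tilde{Y}) = O D(\tilde{X}, \tilde{Y}) \times F(\tilde{X}, \tilde{Y}) \tag{12}\]

where \(O D(\tilde{X}, \tilde{Y})\) represents the underlying distance metric (e.g., Euclidean distance), and \(F(\tilde{X}, \tilde{Y})\) is the complexity correction factor given by Eq. (13):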

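\[F(\tilde{X}, \tilde{Y}) = \frac{\max \left\{ C E(\tilde{X}), C E(\tilde{Y})\right\} }{\min \left\{ C E(\tilde{X}), C E(\tilde{Y})\right\} } \tag{13}\]

where \(C E(\tilde{X})\) and \(C E(\tilde{Y})\) are the complexity estimators. For time series \(\tilde{Y}\), \(C E(\tilde{Y})\) is defined as:

\[C E(\tilde{Y}) = \sqrt{\sum _{j=1}^{t-1} \left( \tilde{y}_j - \tilde{y}_{j+1}\right) ^2} \tag{14}\]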
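A minimal sketch of CID follows, assuming Euclidean distance as the underlying metric and the complexity estimator of Batista et al. (the square root of the summed squared successive differences). The handling of perfectly flat series, whose complexity is zero, is a guard chosen for this sketch and is not specified in the text. Function names are hypothetical.

```python
import math


def complexity_estimate(x):
    """Complexity of a series: length of the line obtained by 'stretching' it."""
    return math.sqrt(sum((x[i] - x[i + 1]) ** 2 for i in range(len(x) - 1)))


def cid(x, y):
    """Complexity-invariant distance with Euclidean distance as the base metric."""
    ed = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))
    cx, cy = complexity_estimate(x), complexity_estimate(y)
    lo, hi = min(cx, cy), max(cx, cy)
    if hi == 0:
        return ed  # both series are flat: no correction (sketch-specific guard)
    if lo == 0:
        return float("inf")  # one flat series: sketch-specific guard
    # The correction factor is >= 1, so series of different complexity are pushed apart
    return ed * hi / lo
```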

Shape-based distance measurement

SBD is a shape-based distance measure that handles distortions in amplitude and phase by using a normalized version of the cross-correlation between two time series34. It is defined as:

where NCC represents the normalized cross-correlation measure, which is calculated using the fast Fourier transform, and \(\rho\) is the position at which \(N C C_w(\tilde{X}, \tilde{Y})\) is maximized.
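The following sketch illustrates the idea of SBD for two equal-length series. It assumes the coefficient normalization (dividing the cross-correlation by the geometric mean of the two autocorrelations at lag zero) and, for readability, evaluates the cross-correlation directly at every shift instead of using the fast Fourier transform mentioned above. The direct loop is quadratic in the series length and is a swapped-in simplification; the function name is hypothetical.

```python
import math


def sbd(x, y):
    """Shape-based distance for equal-length series, in the range [0, 2]."""
    n = len(x)
    norm = math.sqrt(sum(a * a for a in x) * sum(b * b for b in y))
    if norm == 0:
        return 1.0  # an all-zero series has no shape (sketch-specific guard)
    # Cross-correlation at shift s: sum of x[i] * y[i - s] over valid indices
    best = max(
        sum(x[i] * y[i - s] for i in range(max(0, s), min(n, n + s)))
        for s in range(-(n - 1), n)
    )
    return 1.0 - best / norm
```

Because the maximum is taken over all shifts and the result is normalized, a series compared with a shifted or rescaled copy of itself yields a distance of zero.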

Feature weight and fuzzy membership matrix

Feature weight

In most datasets, each feature has a different value, and some features contain more information than others. Therefore, when clustering, it is essential to compute a feature weight vector31. Li et al.31 used range to compute the feature weights. The range is affected by the extremum and does not truly reflect the dispersion of the data. Therefore, this study uses the variance to calculate the feature weights.

The dataset to be clustered, containing n MTS objects, is denoted \(M=\left\{ X_1, X_2, \ldots , X_n\right\}\). \(W=\left\{ w_1, w_2, \ldots , w_z\right\}\) represents the feature weight vector of the MTS dataset, and \(w_l\) is computed from M as follows:

where \(X_{\check{i}}^l\) denotes the lth dimension of the \(\check{i}\)th MTS object in M. \(X_{\check{i}}^a\) represents the ath dimension of the \(\check{i}\)th MTS object in M. \(\overline{X^l}\) represents the average value among all univariate time series in the lth dimension of M. \(\overline{X^a}\) represents the average value among all univariate time series in the ath dimension of M.
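One plausible reading of variance-based weighting is that each dimension receives the share of the total squared deviation that it contributes. The sketch below implements that reading; the exact normalization used in this study is not recoverable from the text, so the formula, the data layout, and the function name are all assumptions.

```python
def variance_weights(M):
    """Weight each dimension by its share of the total squared deviation.

    M[i][l] is the univariate series (list of floats) for dimension l of
    MTS object i. Assumes at least one dimension has non-zero spread.
    """
    z = len(M[0])
    spreads = []
    for l in range(z):
        # Pool every value of dimension l across all objects
        values = [v for obj in M for v in obj[l]]
        mean = sum(values) / len(values)
        spreads.append(sum((v - mean) ** 2 for v in values))
    total = sum(spreads)
    return [s / total for s in spreads]
```

Unlike the range, the squared deviation uses every observation, so a single extreme value has less influence on the resulting weight.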

Center choosing by fast searching and finding density peaks

Rodriguez and Laio35 proposed clustering by fast search and find of density peaks (DPC), which automatically finds the cluster centers and is suitable for arbitrary-shaped datasets. The main idea of the DPC algorithm is that a cluster center has a higher local density than the points around it and lies at a relatively large distance from other cluster centers31. Compared to traditional clustering algorithms, it is simpler and more efficient35. The DPC algorithm uses two quantities for each data point \(\check{i}\): its local density \(\rho _{\check{i}}\), and the distance to the nearest high-density point \(\delta _{\check{i}}\). For each point \(\check{i}\), its local density \(\rho _{\check{i}}\) is defined as follows:

\[\rho _{\check{i}} = \sum _{\check{j}} \chi \left( d_{\check{i} \check{j}}-d_c\right) \tag{17}\]

where, with \(\check{x}=d_{\check{i} \check{j}}-d_c\), \(\chi (\check{x})= {\left\{ \begin{array}{ll}1 &{} \check{x}<0 \\ 0 &{} \check{x} \ge 0\end{array}\right. }\). \(d_{\check{i} \check{j}}\) denotes the Euclidean distance between \(\check{i}\) and \(\check{j}\), and \(d_c\) denotes the cutoff distance. \(d_c\) is chosen so that the average number of neighbors of a point is about \(1 \%\) to \(2 \%\) of the total number of points in the dataset. The distance to the nearest high-density point \(\delta _{\check{i}}\) is computed as follows:

\[\delta _{\check{i}} = \min _{\check{j}: \rho _{\check{j}}>\rho _{\check{i}}} d_{\check{i} \check{j}} \tag{18}\]

In this study, we choose the cluster centers according to a decision graph, selecting points with large \(\rho _{\check{i}}\) and \(\delta _{\check{i}}\) values as cluster centers. The following are the detailed steps of the DPC algorithm31.
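The two DPC quantities can be computed from a precomputed distance matrix, as in the minimal sketch below. It follows the cutoff-kernel density described above; for the point of highest density, which has no denser neighbor, it takes the maximum distance to any other point, which is the convention of Rodriguez and Laio. The function name and the matrix layout are hypothetical.

```python
def dpc_rho_delta(dist, d_c):
    """Return (rho, delta) for every point given a full distance matrix."""
    n = len(dist)
    # rho: number of other points closer than the cutoff distance d_c
    rho = [
        sum(1 for j in range(n) if j != i and dist[i][j] < d_c)
        for i in range(n)
    ]
    delta = []
    for i in range(n):
        # delta: distance to the nearest point with strictly higher density
        higher = [dist[i][j] for j in range(n) if rho[j] > rho[i]]
        delta.append(min(higher) if higher else max(dist[i]))
    return rho, delta
```

On the decision graph, cluster centers are the points for which both values are large. A point with large delta but small rho is an isolated outlier rather than a center.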

Fuzzy membership matrix

The fuzzy membership matrix measures the relevance between objects and cluster centers, helping to optimize clustering algorithms and produce more accurate results. It consists of elements called fuzzy memberships. Each element represents the degree to which an object belongs to a given cluster and takes a value between zero and one. The original MTS objects are converted into a fuzzy membership matrix F as follows:

where distance represents the distance measurement. Cluster centers are \(\check{C}=\left\{ \check{c}_1, \check{c}_2, \ldots , \check{c}_k\right\}\), where k is the number of clusters. \(\check{C}_s\) represents the index of the sth cluster center. \(\check{C}_{\check{j}}\) represents the index of the \(\check{j}\)th cluster center.
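To make the idea concrete, the sketch below turns distances to the k cluster centers into memberships by inverse-distance normalization. This is one common way to obtain values in [0, 1] that sum to one per object; it is a swapped-in illustration and may differ from the formula used in this study. The function name and input layout are hypothetical.

```python
def fuzzy_membership(dist_to_centers):
    """Convert distances to cluster centers into fuzzy memberships.

    dist_to_centers[i][s] is the distance from object i to center s.
    Uses inverse-distance normalization (an assumption of this sketch).
    """
    F = []
    for row in dist_to_centers:
        zeros = [d == 0 for d in row]
        if any(zeros):
            # The object coincides with one or more centers:
            # share full membership among them
            share = 1.0 / sum(zeros)
            F.append([share if z else 0.0 for z in zeros])
            continue
        inv = [1.0 / d for d in row]
        total = sum(inv)
        F.append([v / total for v in inv])
    return F
```

Each row of the result is a k-dimensional description of one MTS object, which is what allows ordinary clustering algorithms to operate on the matrix afterwards.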

Composite fuzzy membership matrix

In this section, we use the methodology proposed by Li and Wei31 to generate the composite fuzzy membership matrices \(O_{D T W-D+C I D}\) and \(O_{D T W-D+S B D}\). The complete process of calculating the fuzzy membership matrix is shown in Algorithm 2.

Time series clustering

AP36, SP37 and k-medoids10 are three of the most popular clustering algorithms5. AP and SP have many advantages, such as being suitable for high-dimensional data and automatically determining the number of clusters. These advantages make the two methods widely applicable to multi-dimensional time series data. The k-medoids algorithm is suitable for time series clustering, has good robustness to noise and outliers, and can handle large datasets. In practical applications, the clustering algorithm can be selected according to the needs of the task to achieve better clustering results5. Since AP and k-medoids are widely used, we only introduce spectral clustering.

Spectral clustering is a graph-theoretic algorithm used for data classification. It treats each data point as a node in a graph and connects them based on their similarity. The graph is then partitioned to minimize the sum of weights between different subgraphs while maximizing the sum of weights within each subgraph. The following is a description of spectral clustering.

Input: Fuzzy membership matrix \(O=\left\{ O_1, O_2, \ldots , O_n\right\}\), number k of clusters

Output: Clustering results \(C=\left\{ C_1, C_2, \ldots , C_k\right\}\)

Step 1: Transform the fuzzy membership matrix O into a similarity matrix S as follows.

where \(\sigma\) is the bandwidth value.

Step 2: Transform the similarity matrix S into the Laplacian matrix L as follows.

where D is the degree matrix, a diagonal matrix whose ith diagonal element is the sum of the ith row of S.

Step 3: Perform eigenvalue decomposition on the Laplacian matrix L to obtain the first k eigenvectors \(v_1, v_2, \ldots , v_k\) and corresponding eigenvalues, and create the eigenvector matrix \(V=\left( v_{\check{i} \check{j}}\right) _{n \times k}\).

Step 4: Obtain matrix U by normalizing V using Eq. (22):

Step 5: Each row of the matrix U is regarded as a data point, and these data points are clustered using the k-means clustering algorithm to obtain the clustering result C.
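Steps 1, 2, and 4 can be sketched in plain Python as shown below. Two assumptions are made, since the corresponding formulas are not reproduced here: the similarity is a Gaussian kernel on the squared Euclidean distance between rows of O, and the Laplacian is the unnormalized form L = D - S. The eigendecomposition of Step 3 and the k-means call of Step 5 are omitted and would normally be delegated to a numerical library. All function names are hypothetical.

```python
import math


def gaussian_similarity(O, sigma=1.0):
    """Step 1 (assumed form): S_ij = exp(-||O_i - O_j||^2 / (2 * sigma^2))."""
    n = len(O)
    S = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            sq = sum((a - b) ** 2 for a, b in zip(O[i], O[j]))
            S[i][j] = math.exp(-sq / (2.0 * sigma ** 2))
    return S


def unnormalized_laplacian(S):
    """Step 2 (assumed form): L = D - S, with D the diagonal row-sum matrix."""
    n = len(S)
    return [
        [(sum(S[i]) if i == j else 0.0) - S[i][j] for j in range(n)]
        for i in range(n)
    ]


def normalize_rows(V):
    """Step 4: scale every row of the eigenvector matrix to unit length."""
    U = []
    for row in V:
        norm = math.sqrt(sum(v * v for v in row))
        U.append([v / norm if norm > 0 else 0.0 for v in row])
    return U
```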

The time complexity of traditional spectral clustering is \(O\left( n^3\right)\). Therefore, this study adopts the optimised spectral clustering method proposed in literature38, which can effectively reduce the time complexity. For more information about this method, please refer to the relevant literature38.

Each step of the DCSLM

The DCSLM takes the time-stamped customer transaction information as input and transforms the customer behavior into multi-dimensional time series data M for LRFMS. Then, some powerful clustering algorithms and state-of-the-art distance measures are combined to obtain well-separated and dense clusters5. Finally, based on the clustering results, marketing strategies are designed to meet customer needs and improve customer satisfaction. The main steps of the DCSLM method (MTS clustering) are as follows in Algorithm 3.

Empirical study

To implement the proposed DCSLM method, we use the Python 3.9 programming language in Anaconda software version 2021. We obtain 300,000 customer transaction records of parts agents from the ASP/SaaS-based manufacturing industry value chain collaboration platform for 2019–2021 (refs. 39, 40). We extract features such as customer ID, part name, part brand, quantity, and time of purchase from the customer transaction data to form three customer transaction datasets39. The first dataset, consisting of “Engine Parts”, “Clutch and Transmission Parts”, and “Hydraulic Lift Parts” purchased by the customer, is called PD1. The second dataset, consisting of “Body and Interior/Exterior Parts” purchased by the customer, is called PD2. The third dataset, consisting of “Electric Parts” purchased by the customer, is called PD3.

Representing customer behavior as time series of LRFMS

In this step, LRFMS analysis generates time series data that capture changes in customer behavior over time. We first use min-max normalization to normalize the customer transaction data41. Next, the transaction data is aggregated into bins based on a two-week time interval. Finally, by considering the definition of LRFMS variables, time series of L, R\(^{\prime }\), F, M, and S are extracted every two weeks. Since we consider \(C Q_i\), \(C D_i\) and \(C S_i\) to be equally important in customer satisfaction S, \(\alpha , \beta\), and \(\gamma\) are set to 1/3.
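The two preprocessing operations in this step, min-max normalization and assignment of transactions to two-week bins, can be sketched as follows. The function names and the choice of counting bins from an explicit start date are hypothetical.

```python
from datetime import date


def min_max(values):
    """Rescale values linearly to [0, 1]; a constant series maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def two_week_bin(day, start):
    """Index of the 14-day interval that `day` falls into, counting from `start`."""
    return (day - start).days // 14
```

Grouping each customer's transactions by `two_week_bin` and computing the LRFMS indicators per group yields one five-dimensional observation per interval, that is, one MTS per customer.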

Multivariate time series clustering

In this step, the DPC algorithm is first applied to directly generate k cluster centers. Then, three distance measures, namely DTW-D, (DTW-D + CID), and (DTW-D + SBD), are used to generate three fuzzy membership matrices according to Algorithm 2. For each of the three fuzzy membership matrices, clustering results are obtained by applying three clustering algorithms (i.e., AP, SP, and k-medoids). In this study, the number of clusters (k) is set to 3, 4, and 5.

Choosing the best clustering results by computing cluster validity indices

In this step, we use the silhouette index as the validity index for selecting the optimal clustering model.

The silhouette index (SI), proposed by Peter J. Rousseeuw in 1987, is an evaluation metric for clustering algorithms. It combines the separation and cohesion of a clustering42. It is defined as follows:

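\[S I\left( X_{\check{i}}\right) = \frac{b_{-} {\text {avg}}\left( X_{\check{i}}\right) - a_{-} {\text {avg}}\left( X_{\check{i}}\right) }{\max \left\{ a_{-} {\text {avg}}\left( X_{\check{i}}\right) , b_{-} {\text {avg}}\left( X_{\check{i}}\right) \right\} } \tag{23}\]

The value range of \(S I\left( X_{\check{i}}\right)\) is \([-1,1]\). \(b_{-} {\text {avg}}\left( X_{\check{i}}\right)\) represents the average distance between data point \(\check{i}\) and the points of its nearest neighboring cluster, and \(a_{-} {\text {avg}}\left( X_{\check{i}}\right)\) represents the average distance between data point \(\check{i}\) and the other data points in the same cluster.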
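A minimal sketch of the silhouette index over a precomputed distance matrix is given below. The overall index is taken as the mean of the per-point scores, and a point that is alone in its cluster receives a score of zero; both are common conventions and are stated here as assumptions. The function name is hypothetical.

```python
def silhouette_index(dist, labels):
    """Mean silhouette score; `labels` is a list with one cluster id per point."""
    n = len(labels)
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("the silhouette index needs at least two clusters")
    scores = []
    for i in range(n):
        own = [dist[i][j] for j in range(n) if j != i and labels[j] == labels[i]]
        if not own:
            scores.append(0.0)  # singleton cluster: score 0 by convention
            continue
        # a: cohesion, the mean distance to the other points of the same cluster
        a = sum(own) / len(own)
        # b: separation, the smallest mean distance to the points of another cluster
        b = min(
            sum(dist[i][j] for j in range(n) if labels[j] == c) / labels.count(c)
            for c in clusters
            if c != labels[i]
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / n
```

Values close to 1 indicate compact, well-separated clusters, which is why the configuration with the highest SI is selected.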

Table 2 summarizes the SI values of the clustering approaches on PD1, PD2 and PD3. For PD1, the highest SI value is obtained by applying spectral clustering with the (DTW-D + SBD) metric and setting \(k=4\). For PD2, the best SI value is obtained by using hierarchical clustering with the (DTW-D + CID) metric and setting \(k=4\). For PD3, the best SI value is obtained by using hierarchical clustering with the (DTW-D + SBD) metric and setting \(k=5\).

Analysis and comparisons of the DCSLM

In this section, we validate the performance of the LRFMS segmentation model and discuss the effectiveness of DCSLM. We also discuss the limitations of the study.

Analysis of the obtained clusters

According to the clustering results and the different characteristics of customers, we divide the customers in PD1 and PD2 into important retention customers, development customers, loyal customers, and general customers (ref. 43). In PD3, we divide customers into important retention customers, secondary important retention customers, development customers, loyal customers, and general customers.

To analyze the resulting customer segments, each customer group needs to be labeled and its behavior analyzed. We chose the monetary variable as the representation of customer behavior, since the ultimate goal of any firm is to gain the desired profitability5. Figures 2, 3 and 4 illustrate each cluster’s representative series and the trend lines obtained using the moving average. The representative series for each cluster is the centroid of that cluster. By analyzing the monetary value of each cluster and the corresponding trend lines, we can provide marketing suggestions for each group.
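A trend line of this kind can be obtained with a simple moving average over the representative series. The sketch below is illustrative only; the window length of 3 is a hypothetical default, as the window used for the figures is not stated.

```python
def moving_average(series, window=3):
    """Simple moving average; returns len(series) - window + 1 smoothed values."""
    if window < 1 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]
```

Comparing the first and last smoothed values gives a quick indication of whether a cluster's monetary value is rising, falling, or stable.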

The clustering results on the PD1 are shown in Fig. 2. Cluster C1 exhibits a slight downward trend and is considered a loyal customer group based on its average value. Marketing programs should be designed to prevent the value of customers within C1 from declining. Cluster C2 shows an increasing trend and is considered a development customer group considering the average of the time series values. Effective marketing programs are needed to turn this group into an important customer retention group. Cluster C3 has a stable trend; however, regarding the average value of the series, it is a general customer group. Effective marketing programs to increase the value of customers within C3 should be devised. Cluster C4 shows a downward trend at the beginning of the period, then switches to an upward trend. The cluster is considered an important customer retention group considering the average of the series values. The parts agent should devise effective marketing programs to maintain the profitability of this group.

The clustering results on the PD2 are shown in Fig. 3. Cluster C1 shows a downward trend first and then an upward trend. It is considered a general customer group with a low mean series value. The parts agent should devise effective marketing programs to improve the value of this group of customers. The trend of cluster C2 is growing at the beginning of the period, then declines and becomes steady. C2 is a loyal customer group based on the average value of the series. Specific marketing programs should be designed and executed to prevent customers of the group from becoming general customers. Cluster C3 is a developmental customer group with a downward trend. Marketing programs should be designed to prevent customers of C3 from becoming loyal customers or general customers. In addition, Cluster C4, which first has a downward trend and then an upward trend, is an important customer group with a high mean series value. The parts agent should devise marketing programs to maintain the profitability of this group.

In PD3, as shown in Fig. 4, cluster C1 grows at the beginning of the period and then declines. In terms of its average value, however, it is a general customer group. Cluster C2 has a downward trend and is the secondary important retention customer group based on the average value of the series. Cluster C3 is a loyal customer group with a downward trend. Cluster C4, which has also undergone a downward trend, is an important customer group with a high mean series value. In addition, cluster C5 is a development customer group whose overall trend is downward. Because all five clusters show a downward trend, a thorough investigation is needed to identify the reasons.

Analysis of the LRFMS model

To evaluate the validity of the LRFMS model, we present the segmentation results using the original RFM model and LRFMS model in PD2 to illustrate the credibility of the LRFMS model. The partial results are shown in Tables 3 and 4.

From Tables 3 and 4, we can see that the customers with IDs ’1330808365760253952’ and ’1384039727912718388’ have the same classification results under the two models. According to Table 3, both are important retention customers. According to Table 4, both show a considerable total amount and purchase frequency within 10 days, and customer ’1330808365760253952’ has a better S-value than customer ’1384039727912718388’. This indicates that the customer with ID ’1330808365760253952’ is more satisfied with the parts agent, or in other words, has a better user experience. The customers with IDs ’1330808365760253967’ and ’1343940521835801811’ are considered to be an important retention customer and a development customer, respectively, in the RFM model. In the LRFMS model, it can be observed that these two customers have a high purchase frequency within four days. The customer with ID ’1330808365760253967’ has a larger total purchase amount, while the customer with ID ’1343940521835801811’ has a smaller total purchase amount than the other important retention customers listed in Table 4. The customer with ID ’1330808365760253967’ is considered a development customer because of their lower satisfaction with the parts agent, while the customer with ID ’1343940521835801811’ is rated as an important retention customer because of their higher satisfaction. Therefore, the LRFMS model can provide a more detailed description of user data from the customer’s perspective, enhancing the ability to analyze customer value and making the assessment of user value more accurate.

The customer with ID ‘1364039727912718336’ purchased parts worth 17.0 RMB in total, with a frequency of 3 purchases. Because the time of their latest purchase is close to the end of the purchase period, this customer is classified as a loyal customer in the original RFM model. The customer with ID ‘1364039727912718826’ purchased parts worth 39.11 RMB in total, with a purchase frequency of 4. However, because the time of their last purchase is closest to the end of the purchase period, this customer is classified as a development customer in the original RFM model. According to the analysis of the LRFMS model, customer ‘1364039727912718336’ made 3 purchases of low-priced parts within 3 days and gave the parts agent a satisfaction rating of 3. Customer ‘1364039727912718826’ made 4 purchases of low-priced parts within 5 days, also with a satisfaction rating of 3. Both customers have potential commercial value that needs to be further developed, so they are appropriately classified as general customers under the LRFMS model. Therefore, the LRFMS model overcomes the randomness of the R indicator in the original RFM model and positions the customer more accurately.
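To make the comparison above more concrete, the following is a minimal sketch of how LRFMS values might be derived from one customer's transactions in a single period. The definitions are assumptions made for illustration only (L as the number of days between the first and last purchase, R as the number of days from the last purchase to the end of the period, F as the purchase count, M as the total amount, and S as the mean satisfaction rating); the exact formulas used in this paper may differ. The `Purchase` record, the `lrfms` function, and all dates and amounts are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Purchase:
    day: date          # purchase date
    amount: float      # purchase amount in RMB
    satisfaction: int  # rating the customer gave to the parts agent


def lrfms(purchases, period_end):
    """Return (L, R, F, M, S) for one customer in one period.

    The definitions below are illustrative assumptions, not the paper's
    exact formulas.
    """
    if not purchases:
        raise ValueError("customer has no purchases in this period")
    days = [p.day for p in purchases]
    first, last = min(days), max(days)
    length = (last - first).days                  # L: span of activity in days
    recency = (period_end - last).days            # R: days since last purchase
    frequency = len(purchases)                    # F: number of purchases
    monetary = sum(p.amount for p in purchases)   # M: total amount spent
    satisfaction = sum(p.satisfaction for p in purchases) / frequency  # S: mean rating
    return length, recency, frequency, monetary, satisfaction


# Hypothetical customer: three low-priced purchases on consecutive days.
example = [
    Purchase(date(2022, 3, 1), 5.0, 3),
    Purchase(date(2022, 3, 2), 6.0, 3),
    Purchase(date(2022, 3, 3), 6.0, 3),
]
features = lrfms(example, period_end=date(2022, 3, 10))
print(features)
```

Under these assumed definitions, two customers with similar R values can still be separated by L and S, which is the effect discussed above.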

The experimental results show that the LRFMS model provides a more detailed description of the data and overcomes the randomness of the R indicator in the RFM model. The improved LRFMS model can therefore better support one-to-one precision marketing to the customers of the parts agent.

To further evaluate the effectiveness of the LRFMS model, we replaced the LRFMS model in DCSLM with RFM, resulting in DCSRM. We compared the results of DCSLM and DCSRM in PD1, PD2, and PD3, as shown in Table 5. Their performance is evaluated based on three comparison indices: SI, Dunn Index (DI), and Davies-Bouldin Index (DB). The detailed description of DB and DI (including the definition and computational method) has been reported by Saini et al.44. Compared to DCSRM, DCSLM showed increases in SI values of \(18.7283 \%, 14.6780 \%\) and \(21.6190 \%\) respectively, and increases in DI values of \(35.66 \%, 28.56 \%\) and \(33.22 \%\) across the three datasets. Furthermore, DCSLM significantly outperformed DCSRM on these datasets in terms of the DB index. These findings indicate that the LRFMS model significantly outperforms the traditional RFM model.
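As an illustration of the kind of cluster validity index compared in Table 5, the sketch below computes a Dunn index from a precomputed pairwise distance matrix. It assumes one common formulation (the minimum distance between objects in different clusters divided by the maximum distance between objects in the same cluster); the variant actually used here follows Saini et al.44 and may differ. The function name `dunn_index` and the toy distance matrix are hypothetical.

```python
def dunn_index(dist, labels):
    """Dunn index: smallest between-cluster distance / largest cluster diameter.

    dist   -- symmetric matrix (list of lists) of pairwise distances
    labels -- cluster label of each object, in the same order as dist
    Higher values indicate more compact, better separated clusters.
    """
    n = len(labels)
    min_between = float("inf")
    max_within = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            if labels[i] == labels[j]:
                max_within = max(max_within, dist[i][j])
            else:
                min_between = min(min_between, dist[i][j])
    if max_within == 0.0 or min_between == float("inf"):
        raise ValueError("need at least two clusters and a positive cluster diameter")
    return min_between / max_within


# Toy distance matrix for four objects in two clusters (illustrative values only).
toy_dist = [
    [0.0, 1.0, 4.0, 5.0],
    [1.0, 0.0, 3.0, 4.0],
    [4.0, 3.0, 0.0, 2.0],
    [5.0, 4.0, 2.0, 0.0],
]
toy_labels = [0, 0, 1, 1]
print(dunn_index(toy_dist, toy_labels))
```

Because the function only needs pairwise distances, the same sketch applies whether the matrix comes from a univariate or a multivariate time series distance.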

Comparative analysis of different methods

To evaluate the effectiveness of DCSLM, we compare it with four clustering methods on the multivariate time series data from LRFMS: the clustering method based on DTW and Partitioning Around Medoids (DTW-PAM)31, Fuzzy Clustering based on Feature Weights (FCFW)31, the clustering method based on Global Alignment Kernel and DTW Barycenter Averaging (GAK-DBA)45, and Hybrid Clustering for Multivariate Time Series (HCMTS)46. The comparison results are shown in Table 6. To understand the performance of DCSLM more clearly, we also compare it with several customer segmentation methods, including CATC13, CBRD4, TCBS28, and AFRC5, as shown in Table 7. To adapt these customer segmentation methods to the MTS dataset, we made some modifications based on the literature31. The mean index values (SI, DB, DI) of the different clustering methods are calculated from Table 6 and shown in Fig. 5. The mean index values (SI, DB, DI) of the different segmentation methods are computed from Table 7 and shown in Fig. 6.

From Table 6 and Fig. 5, we can see that DCSLM obtains an advantage on all three datasets. Apart from DCSLM, the clustering effect of FCFW is also striking: it obtains superior results on the SI and DI indices. In Table 7, DCSLM performs better overall than the other customer segmentation methods on the three datasets. It performs significantly better than the others on the DI index, but only slightly better on the other indices. In Fig. 6, the mean values of DCSLM are overall higher than those of the comparison customer segmentation methods. This indicates that DCSLM performs better than the other methods on all indices. Therefore, DCSLM should be considered when performing dynamic customer segmentation.

Time complexity

In this section, we analyze the time complexity and time consumption of the comparison algorithms31. The time complexity of DCSLM mainly consists of four parts: weight calculation, distance calculation, fuzzy membership matrix construction, and result calculation. The time complexity of computing the weights of all the objects is approximately \(O(tn)\). The time complexity of the distance calculation for all objects is approximately \(O\left( n^2 t^2+n^2 t \log (t)\right)\). Obtaining each of the fuzzy membership matrices \(\textrm{F}_{d t w}\), \(\textrm{F}_{s b d}\), and \(\textrm{F}_{c i d}\) requires a time complexity of \(O\left( n k^2\right)\). The complexity of generating the final result is approximately \(O\left( n^2I+nkI\right)\), where \(I\) represents the number of iterations. The total time complexity is approximately \(O\left( \left( I+t^2+t \log (t)\right) n^2+\left( k^2+k I\right) n\right)\). The time complexities of FCFW, GAK-DBA, DTW-PAM, and HCMTS are \(O\left( \left( t^2+ t \log (t)\right) n^2+\left( k^2+t\right) n\right)\), \(O\left( I\left( \left( n t^2\right) +n t\right) \right)\), \(O\left( n^2 t^2+k n^2\right)\), and \(O\left( {n}^2 k I\right)\), respectively. Similarly, the time complexities of CATC, CBRD, TCBS, and AFRC are \(O\left( n^2 t^2+n^2 t+n^2 I\right)\), \(O\left( n^2 t^2\right)\), \(O\left( n^2 t^2+n k I\right)\), and \(O\left( n^3+n^2 t+n^2 I+n^2 t \log (t)\right)\), respectively. These time complexities are summarised in Table 8.
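The total for DCSLM can be checked by summing the four parts stated above. The grouping below is a sketch of that arithmetic; it assumes the three membership matrices are computed one after another, and reads \(n\), \(t\), and \(k\) as the number of objects, the series length, and the number of clusters, which is an interpretation rather than a definition given in this section.

\[
\begin{aligned}
T_{\mathrm{DCSLM}} &= \underbrace{O(tn)}_{\text{weights}} + \underbrace{O\left( n^2 t^2+n^2 t \log (t)\right)}_{\text{distances}} + \underbrace{3\, O\left( n k^2\right)}_{\text{membership matrices}} + \underbrace{O\left( n^2 I+n k I\right)}_{\text{final result}} \\
&= O\left( \left( I+t^2+t \log (t)\right) n^2+\left( k^2+k I\right) n + t n\right) \\
&= O\left( \left( I+t^2+t \log (t)\right) n^2+\left( k^2+k I\right) n\right).
\end{aligned}
\]

The last step holds because \(t n \le n^2 t^2\) for \(n, t \ge 1\), so the weight term is dominated by the distance term, and the constant factor 3 is absorbed by the \(O\) notation.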

It is difficult to judge the time efficiency of an algorithm from the time complexity analysis alone, because the cost depends on the number of objects and iterations. However, since the highest-order term in the time complexity of DCSLM is quadratic in the number of objects, DCSLM requires longer computation time when handling large-scale datasets. We recorded the runtimes of the comparison algorithms on the three datasets, as shown in Fig. 7. GAK-DBA has the highest time consumption of all the algorithms. This may be because the algorithm requires a large number of iterations to reach convergence. DCSLM also requires a longer calculation time, but its time consumption is within an acceptable range.

Conclusions

In the highly competitive auto parts market, precision marketing and improving customer satisfaction are particularly important. To cope with fierce market competition, parts agents need to divide their customers into different groups and gain an in-depth understanding of the characteristics of these groups. To achieve this goal, parts agents use customer segmentation methods to identify distinct groups of customers. RFM models and time series clustering algorithms are essential techniques for dynamic customer segmentation. The original RFM model suffers from the problems of R index randomness and ignoring customers’ perceived value. Moreover, previous research on clustering techniques for dynamic customer segmentation focuses primarily on univariate time series clustering, with less research on MTS clustering. To address these problems, this paper proposes DCSLM.

DCSLM improves on existing work in two respects: the RFM model and time series clustering. DCSLM represents customer behavior as time series of LRFMS. The LRFMS model is developed by adding the satisfaction (S) variable to the LRFM model, which takes into account the perceived value of the customer and is quite different from previous studies that conducted customer segmentation based on traditional RFM or LRFM models. By using the LRFMS model, DCSLM can capture the dynamic behavior of customers more fully. Based on the customer time series, MTS clustering is performed to identify groups of customers with similar behavior. The MTS clustering in DCSLM combines state-of-the-art distance measures and some powerful time series clustering algorithms to obtain the best quality clustering results. The quality of the resulting clusters in the MTS clustering task depends on choosing a suitable distance measure and an effective clustering algorithm, which is done by computing cluster validity indices10. Whereas previous research on clustering techniques for dynamic customer segmentation focuses primarily on univariate time series clustering, DCSLM provides a feasible solution for dynamic customer segmentation by using MTS clustering. The effectiveness of the proposed method is verified on real data of auto parts sales.

For the case of this study, real-life data (PD1, PD2 and PD3) from the ASP/SaaS-based manufacturing industry value chain collaboration platform in China is used. The best clustering result for PD1 is obtained by using spectral clustering with the (DTW-D+SBD) metric. The best segmentation for PD2 is obtained by applying AP clustering with the (DTW-D+CID) metric. For PD3, the best segmentation is obtained by applying AP clustering with the (DTW-D+SBD) metric. The customers of parts agents are divided into different customer groups. The segments obtained are analysed and behavioral patterns are found. For example, customers who buy electrical parts (PD3) are divided into five different segments: important retention customers, secondary important retention customers, development customers, loyal customers, and general customers. As all of the segments obtained show a downward behavior trend, the parts agent associated with PD3 should conduct a thorough investigation to identify the reasons. On this basis, the management of parts agents can flexibly adjust customer relationship management strategies and marketing methods, and effectively allocate and utilize resources. This can not only improve customer satisfaction and loyalty, but also maximise the parts agent’s profit and maintain a competitive advantage. Furthermore, we conducted extensive experiments on PD1, PD2 and PD3. The experimental results show that the algorithm outperforms other algorithms, further validating the effectiveness of the proposed method.

This study contributes to prior literature by proposing a dynamic customer segmentation approach based on LRFMS and MTS clustering for the auto parts sales industry. In addition, this study proposes important practical directions and a wide range of managerial implications, including capturing the dynamic behavior of customers and assisting parts agents in developing marketing strategies. Moreover, we have successfully applied DCSLM in the context of the auto parts sales industry, and verified its effectiveness on the third-party platform. However, with the continuous development of the platform and the increasing amount of customer data, DCSLM will gradually expose the problem of insufficient response speed. With a huge amount of customer data, DCSLM’s segmentation process will become slow and unable to reflect the latest changes and needs of customer groups in time. This is the first limitation. Another limitation of this study is that we only use the transaction data of parts agents’ customers; other customer information, such as geographic location, reputation or browsing history, is not available. In view of this, we plan to introduce an adaptive selection technique into the proposed method in the future. This technique can be used to select the best clustering algorithm for a large dataset, optimising the customer segmentation process and improving time efficiency. In addition, we plan to apply the proposed method to car sales or other fields where more comprehensive customer information can be obtained. Based on this customer information, we will further enhance DCSLM by adding new attributes related to the customer’s behavior, and validate the applicability of the method in different areas.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Pakzad, S. S., Roshan, N. & Ghalehnovi, M. Comparison of various machine learning algorithms used for compressive strength prediction of steel fiber-reinforced concrete. Sci. Rep. 13, 3646. https://doi.org/10.1038/s41598-023-30606-y (2023).

Barough, S. S. et al. Generalizable machine learning approach for covid-19 mortality risk prediction using on-admission clinical and laboratory features. Sci. Rep. 13, 2399. https://doi.org/10.1038/s41598-023-28943-z (2023).

Ramezani, F. et al. Automatic detection of multilayer hexagonal boron nitride in optical images using deep learning-based computer vision. Sci. Rep. 13, 1595. https://doi.org/10.1038/s41598-023-28664-3 (2023).

Syaputra, A. & Laoh, E. Customer segmentation on returned product customers using time series clustering analysis. In 2020 International Conference on ICT for Smart Society (ICISS) (ed. Syaputra, A.) 1–5 (IEEE, 2020).

Abbasimehr, H. & Bahrini, A. An analytical framework based on the recency, frequency, and monetary model and time series clustering techniques for dynamic segmentation. Expert Syst. Appl. 192, 116373. https://doi.org/10.1016/j.eswa.2021.116373 (2022).

Abbasimehr, H. & Shabani, M. A new framework for predicting customer behavior in terms of rfm by considering the temporal aspect based on time series techniques. J. Ambient. Intell. Humaniz. Comput. 12, 515–531. https://doi.org/10.1007/s12652-020-02015-w (2021).

Mosaddegh, A., Albadvi, A., Sepehri, M. M. & Teimourpour, B. Dynamics of customer segments: A predictor of customer lifetime value. Expert Syst. Appl. 172, 114606. https://doi.org/10.1016/j.eswa.2021.114606 (2021).

Shokoohi-Yekta, M., Wang, J. & Keogh, E. On the non-trivial generalization of dynamic time warping to the multi-dimensional case. In Proceedings of the 2015 SIAM International Conference on Data Mining (ed. Shokoohi-Yekta, M.) 289–297 (SIAM, 2015).

Yu, C., Luo, L., Chan, L. L. H., Rakthanmanon, T. & Nutanong, S. A fast lsh-based similarity search method for multivariate time series. Inf. Sci. 476, 337–356. https://doi.org/10.1016/j.ins.2018.10.026 (2019).

Abbasimehr, H. & Baghery, F. S. A novel time series clustering method with fine-tuned support vector regression for customer behavior analysis. Expert Syst. Appl. 204, 117584 (2022).

Akhondzadeh-Noughabi, E. & Albadvi, A. Mining the dominant patterns of customer shifts between segments by using top-k and distinguishing sequential rules. Manag. Decis. 53, 1976–2003 (2015).

Mosaddegh, A., Albadvi, A., Sepehri, M. M. & Teimourpour, B. Mining patterns of customer dynamics in banking industry. New Market. Res. J. 9, 1–30 (2019).

Abbasimehr, H. & Shabani, M. A new methodology for customer behavior analysis using time series clustering: A case study on a bank’s customers. Kybernetes 50, 221–242. https://doi.org/10.1108/K-09-2018-0506 (2021).

Abbasimehr, H. & Shabani, M. Forecasting of customer behavior using time series analysis. In The 7th International Conference on Contemporary Issues in Data Science (ed. Abbasimehr, H.) 188–201 (Springer International Publishing, 2019).

Sivaguru, M. Dynamic customer segmentation: A case study using the modified dynamic fuzzy c-means clustering algorithm. Granul. Comput. 8, 345–360. https://doi.org/10.1007/s41066-022-00335-0 (2023).

Liu, Y. & Chen, C. Improved rfm model for customer segmentation using hybrid meta-heuristic algorithm in medical iot applications. Int. J. Artif. Intell. Tools 31, 1–16. https://doi.org/10.1142/S0218213022500099 (2022).

Yoseph, F., Malim, N. H. & AlMalaily, M. New behavioral segmentation methods to understand consumers in retail industry. Int. J. Comput. Sci. Inf. Technol. 11, 43–61 (2019).

Ernawati, E., Baharin, S. S. K. & Kasmin, F. A review of data mining methods in rfm-based customer segmentation. In Journal of Physics: Conference Series (ed. Ernawati, E.) 1–8 (IOP Publishing, 2021).

Huang, Y., Zhang, M. & He, Y. Research on improved rfm customer segmentation model based on k-means algorithm. In 2020 5th International Conference on Computational Intelligence and Applications (ICCIA) (ed. Huang, Y.) 24–27 (IEEE, 2020).

Smaili, M. Y. & Hachimi, H. New rfm-d classification model for improving customer analysis and response prediction. Ain Shams Eng. J. 14, 102254 (2023).

Kao, Y. T., Wu, H. H., Chen, H. K. & Chang, E. C. A case study of applying lrfm model and clustering techniques to evaluate customer values. J. Stat. Manag. Syst. 14, 267–276. https://doi.org/10.1080/09720510.2011.10701555 (2011).

Wei, J. T., Lin, S. Y., Weng, C. C. & Wu, H. H. A case study of applying lrfm model in market segmentation of a children’s dental clinic. Expert Syst. Appl. 39, 5529–5533 (2012).

Husnah, M. & Vinarti, R. A. Customer segmentation analysis using lrfm based product and brand dimensions. In 2023 2nd International Conference for Innovation in Technology (INOCON) (ed. Husnah, M.) 1–6 (IEEE, 2023).

Peker, S., Kocyigit, A. & Eren, P. E. Lrfmp model for customer segmentation in the grocery retail industry: A case study. Market. Intell. Plan. 35, 544–559. https://doi.org/10.1108/MIP-11-2016-0210 (2017).

Aggelis, V. & Christodoulakis, D. Customer clustering using rfm analysis. In Proceedings of the 9th WSEAS International Conference on Computers (ed. Aggelis, V.) 1–5 (IEEE, 2005).

Cheng, C. H. & Chen, Y. S. Classifying the segmentation of customer value via rfm model and rs theory. Expert Syst. Appl. 36, 4176–4184. https://doi.org/10.1016/j.eswa.2008.04.003 (2009).

Kamalzadeh, H., Ahmadi, A. & Mansour, S. Clustering time-series by a novel slope-based similarity measure considering particle swarm optimization. Appl. Soft Comput. 96, 106701. https://doi.org/10.1016/j.asoc.2020.106701 (2020).

Zhou, Y., Hu, Z. & Liu, Y. Analyzing user behavior patterns in casual games using time series clustering. In 2021 2nd International Conference on Computing and Data Science (CDS) (ed. Zhou, Y.) 372–382 (IEEE, 2021).

Pavithra, M. & Prashar, A. Maximizing strategy in customer segmentation using different clustering techniques. In 2022 IEEE International Conference on Signal Processing, Informatics, Communication and Energy Systems (ed. Pavithra, M.) 481–485 (IEEE, 2022).

Batista, G. E., Keogh, E. J., Tataw, O. M. & De Souza, V. M. CID: An efficient complexity-invariant distance for time series. Data Min. Knowl. Discov. 28, 634–69 (2014).

Li, H. & Wei, M. Fuzzy clustering based on feature weights for multivariate time series. Knowl.-Based Syst. 197, 105907. https://doi.org/10.1016/j.knosys.2020.105907 (2020).

Iwana, B. K., Frinken, V. & Uchida, S. Dtw-nn: A novel neural network for time series recognition using dynamic alignment between inputs and weights. Knowl.-Based Syst. 188, 104971. https://doi.org/10.1016/j.knosys.2019.104971 (2020).

Cen, Z. & Wang, J. Forecasting neural network model with novel cid learning rate and eemd algorithms on energy market. Neurocomputing 317, 168–178. https://doi.org/10.1016/j.neucom.2018.08.021 (2018).

Fahiman, F., Bezdek, J. C., Erfani, S. M., Palaniswami, M. & Leckie, C. Fuzzy c-shape: A new algorithm for clustering finite time series waveforms. In 2017 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE) (ed. Fahiman, F.) 1–8 (IEEE, 2017).

Rodriguez, A. & Laio, A. Clustering by fast search and find of density peaks. Science 344, 1492–1496 (2014).

Montero, P. & Vilar, J. A. Tsclust: An r package for time series clustering. J. Stat. Softw. 62, 1–43 (2015).

Ng, A., Jordan, M. & Weiss, Y. On spectral clustering: Analysis and an algorithm. Adv. Neural Inf. Process. Syst. 14 (2001).

Cai, D. & Chen, X. Large scale spectral clustering via landmark-based sparse representation. IEEE Trans. Cybern. 45, 1669–1680 (2014).

University, S. J. Asp/saas-based manufacturing industry value chain collaboration platform (2022). Data provided by Manufacturing Industry Chain Collaboration and Information Support Technology Key Laboratory of Sichuan Province http://www.autosaas.cn/.

Yu, Y., Sun, L. F. & Ma, Y. H. Multi-tenant form customization technology for collaborative cloud service platform in industrial chain. Comput. Integr. Manuf. Syst. 22, 2235–2244 (2016).

Han, J., Pei, J. & Tong, H. Data Mining: Concepts and Techniques (Morgan Kaufmann, 2022).

Li, H. Accurate and efficient classification based on common principal components analysis for multivariate time series. Neurocomputing 171, 744–753. https://doi.org/10.1016/j.neucom.2015.07.010 (2016).

Wu, J. et al. User value identification based on improved rfm model and k-means++ algorithm for complex data analysis. Wirel. Commun. Mob. Comput. 2021, 1–8 (2021).

Saini, N., Saha, S. & Bhattacharyya, P. Automatic scientific document clustering using self-organized multi-objective differential evolution. Cogn. Comput. 11, 271–293 (2019).

Petitjean, F., Ketterlin, A. & Gançarski, P. A global averaging method for dynamic time warping, with applications to clustering. Pattern Recogn. 44, 678–693. https://doi.org/10.1016/j.patcog.2010.09.013 (2011).

Fontes, C. H. & Budman, H. A hybrid clustering approach for multivariate time series-a case study applied to failure analysis in a gas turbine. ISA Trans. 71, 513–529. https://doi.org/10.1016/j.isatra.2017.09.004 (2017).

Acknowledgements

Thanks to the editorial team and all the anonymous reviewers who helped us improve the quality of this paper.

Author information

Contributions

All authors contributed to the study conception and design. Data curation was performed by Y.Y. Formal analysis was performed by L.S. Validation was performed by S.W. The first draft of the manuscript was written by S.W., and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, S., Sun, L. & Yu, Y. A dynamic customer segmentation approach by combining LRFMS and multivariate time series clustering. Sci Rep 14, 17491 (2024). https://doi.org/10.1038/s41598-024-68621-2

DOI: https://doi.org/10.1038/s41598-024-68621-2
