Abstract

Entanglement is a key feature of quantum mechanics1,2,3, with applications in fields such as metrology, cryptography, quantum information and quantum computation4,5,6,7,8. It has been observed across a wide variety of systems and length scales, ranging from the microscopic9,10,11,12,13 to the macroscopic14,15,16. However, entanglement remains largely unexplored at the highest accessible energy scales. Here we report the highest-energy observation of entanglement, in top–antitop quark events produced at the Large Hadron Collider, using a proton–proton collision dataset with a centre-of-mass energy of √s = 13 TeV and an integrated luminosity of 140 inverse femtobarns (fb−1) recorded with the ATLAS experiment. Spin entanglement is detected from the measurement of a single observable D, inferred from the angle between the charged leptons in their parent top- and antitop-quark rest frames. The observable is measured in a narrow interval around the top–antitop quark production threshold, where the entanglement detection is expected to be significant. It is reported in a fiducial phase space defined with stable particles to minimize the uncertainties that stem from the limitations of the Monte Carlo event generators and the parton shower model in modelling top-quark pair production. The entanglement marker is measured to be D = −0.537 ± 0.002 (stat.) ± 0.019 (syst.) for \(340\,{\rm{GeV}} < {m}_{t\bar{t}} < 380\,{\rm{GeV}}\). The observed result is more than five standard deviations from a scenario without entanglement and hence constitutes the first observation of entanglement in a pair of quarks and the highest-energy observation of entanglement so far.


Main

Particle colliders, such as the Large Hadron Collider (LHC) at CERN, probe fundamental particles and their interactions at the highest energies accessible in a laboratory, exceeded only by astrophysical sources. Beyond the fundamental interest of exploring quantum entanglement in a new setting, this observation demonstrates the potential of using high-energy colliders, such as the LHC, as tools for testing our fundamental understanding of quantum mechanics. Hadron colliders offer a truly relativistic environment and provide a rich variety of fundamental interactions, rarely considered for experiments in quantum information. Relativistic effects are expected to play a critical part in quantum information17 and the measurement described here illustrates the potential for new approaches to explore these effects and other foundational problems in quantum mechanics using colliders.

Recently, the heaviest fundamental particle known to exist, the top quark, was proposed as a new laboratory to study quantum entanglement and quantum information18,19. In this Article, the spin correlation between the top quark and antitop quark is used to probe the effects of quantum entanglement, in proton–proton (pp) collision events recorded with the ATLAS detector with a centre-of-mass energy of 13 TeV. Entanglement is observed in pairs of quarks for the first time, with a significance of more than five standard deviations.

If two particles are entangled, the quantum state of one particle cannot be described independently of the other. The simplest example of an entangled system involves a pair of quantum bits (qubits), two-level quantum systems that can exist in a superposition of their two basis states. The spin of a fundamental fermion, whose projection along any axis can take the values ±1/2, is one of the simplest and most fundamental examples of a qubit. Among the fundamental fermions of the standard model of particle physics, the top quark is especially suited for high-energy spin measurements: its immense mass gives it a lifetime (about 10−25 s) notably shorter than the timescales on which the quantum numbers of a quark are shrouded by hadronization (around 10−24 s) and spin decorrelation (approximately 10−21 s) effects20. As a result, its spin information is transferred to its decay products. This feature provides an opportunity to study a pseudo-bare quark, free of the colour-confinement properties of the strong force that shroud other quarks.

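Quarks are most commonly produced in hadron collider experiments as matter–antimatter pairs. A pair of top–antitop quarks (\(t\bar{t}\)) is a two-qubit system in which the spin quantum state is described by the spin density matrix ρ:

\[\rho =\frac{1}{4}\left({I}_{4}+\sum _{i}\left({B}_{i}^{+}{\sigma }^{i}\otimes {I}_{2}+{B}_{i}^{-}{I}_{2}\otimes {\sigma }^{i}\right)+\sum _{i,j}{C}_{ij}{\sigma }^{i}\otimes {\sigma }^{j}\right).\]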

The first term in the sum fixes the normalization, where \({I}_{n}\) is the n × n identity matrix. The second term describes the intrinsic polarization of the top and antitop quarks, where \({\sigma }^{i}\) are the Pauli matrices and the real numbers \({B}_{i}^{\pm }\) characterize the spin polarization of each particle. The third term describes the spin correlation between the particles, encoded in the spin correlation matrix \({C}_{ij}\). In all expressions, the indices i, j = 1, 2, 3 label the axes of an orthogonal coordinate system.
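As a numerical illustration of this decomposition, the following minimal Python sketch (assuming NumPy; the function names are hypothetical) builds the 4 × 4 matrix from given \({B}^{\pm }\) and C with Kronecker products and recovers \({C}_{ij}={\rm{tr}}[\rho \,({\sigma }^{i}\otimes {\sigma }^{j})]\), which holds because \({\rm{tr}}[({\sigma }^{i}\otimes {\sigma }^{j})({\sigma }^{k}\otimes {\sigma }^{l})]=4{\delta }_{ik}{\delta }_{jl}\). The spin singlet, with \({B}^{\pm }=0\) and \(C=-{I}_{3}\), serves as a check.

```python
import numpy as np

# Pauli matrices sigma^1, sigma^2, sigma^3 and the 2x2 identity
PAULI = [
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
]
I2 = np.eye(2, dtype=complex)


def spin_density_matrix(b_plus, b_minus, c):
    """rho = (I4 + sum_i (B+_i s^i x I2 + B-_i I2 x s^i) + sum_ij C_ij s^i x s^j) / 4."""
    c = np.asarray(c, dtype=float)
    rho = np.eye(4, dtype=complex)  # normalization term I_4
    for i in range(3):
        rho += b_plus[i] * np.kron(PAULI[i], I2)   # top-quark polarization
        rho += b_minus[i] * np.kron(I2, PAULI[i])  # antitop-quark polarization
        for j in range(3):
            rho += c[i, j] * np.kron(PAULI[i], PAULI[j])  # spin correlations
    return rho / 4


def correlation_matrix(rho):
    """Recover C_ij = tr[rho (sigma^i x sigma^j)]."""
    return np.array([[np.trace(rho @ np.kron(PAULI[i], PAULI[j])).real
                      for j in range(3)] for i in range(3)])


# Spin singlet: no polarization and C = -I (a maximally entangled state)
rho_singlet = spin_density_matrix([0, 0, 0], [0, 0, 0], -np.eye(3))
print(np.trace(rho_singlet).real)
print(correlation_matrix(rho_singlet))
```

For the singlet, the trace is 1 and the recovered correlation matrix is \(-{I}_{3}\).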

At hadron colliders, \(t\bar{t}\) pairs are produced mainly by the strong interaction and thus have no intrinsic polarization (that is, \({B}_{i}^{\pm }\simeq 0\)) because of parity conservation and time-reversal invariance in quantum chromodynamics (QCD)21. However, the spins of these pairs are expected to be correlated, and this correlation has already been observed by both the ATLAS and CMS experiments at the LHC22,23,24,25,26. Entanglement in top-quark pairs can be detected through an increase in the strength of their spin correlations.

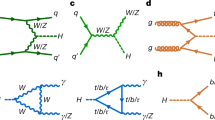

Owing to their short lifetime, top quarks cannot be detected directly in experiments. In the standard model, top quarks decay almost exclusively into a bottom quark and a W boson, and the W boson subsequently decays into either a pair of lighter quarks or a charged lepton and a neutrino. In this measurement, only W bosons decaying into leptons are considered because charged leptons, especially electrons and muons, are readily detected with high precision at collider experiments. To a good approximation, the leptons carry 100% of the spin information of their parent top quarks because of the maximally parity-violating nature of the electroweak charged current. The angular direction of each lepton is correlated with the spin direction of its parent top quark or antitop quark in such a way that the normalized differential cross-section (σ) of the process may be written as27

\[\frac{1}{\sigma }\frac{{{\rm{d}}}^{2}\sigma }{{\rm{d}}{\Omega }_{+}\,{\rm{d}}{\Omega }_{-}}=\frac{1}{{(4\pi )}^{2}}\left(1+{{\bf{B}}}^{+}\cdot {\widehat{{\bf{q}}}}_{+}-{{\bf{B}}}^{-}\cdot {\widehat{{\bf{q}}}}_{-}-{\widehat{{\bf{q}}}}_{+}\cdot {\bf{C}}\cdot {\widehat{{\bf{q}}}}_{-}\right),\]

where \({\widehat{{\bf{q}}}}_{+}\) is the antilepton direction in the rest frame of its parent top quark and \({\widehat{{\bf{q}}}}_{-}\) is the lepton direction in the rest frame of its parent antitop quark; and Ω+ is the solid angle associated with the antilepton and Ω− is the solid angle associated with the lepton. The vectors B± determine the top-quark and antitop-quark polarizations, whereas the matrix C contains their spin correlations. These terms are the same as those that appear in the general form for ρ. As the information about the polarizations and spin correlations of the short-lived top quarks is transferred to the decay leptons, their values can be extracted from a measurement of angular observables associated with these leptons, allowing us to reconstruct the \(t\bar{t}\) spin quantum state.

The experiments at the LHC ring, such as ATLAS, are the only ones currently taking data that are able to produce and study the properties of the top quark. At the LHC, \(t\bar{t}\) pairs are produced mainly by gluon–gluon fusion. When they are produced close to their production threshold, that is, when their invariant mass \({m}_{t\bar{t}}\) is close to twice the mass of the top quark (\({m}_{t\bar{t}} \sim 2\cdot {m}_{t} \sim 350\) GeV), approximately 80% of the production cross-section of \(t\bar{t}\) pairs arises from a spin-singlet state28,29,30, which is maximally entangled. After averaging over all possible top-quark directions, entanglement only survives close to the threshold because of the rotational invariance of the spin-singlet. This invariance implies that the trace (the sum of all of the diagonal elements) of the correlation matrix C, in which each diagonal element corresponds to the spin correlation in a particular direction, is a good entanglement witness. It is an observable that can signal the presence of entanglement, with tr(C) + 1 < 0 as a sufficient condition for entanglement18. It can be understood as a violation of a Cauchy–Schwarz inequality, a notable entanglement criterion in fields such as quantum optics, condensed matter or analogue gravity31,32,33,34.
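The relation between the witness tr(C) + 1 < 0 and the Peres–Horodecki (positive partial transpose) criterion can be checked numerically on a simple one-parameter family of states, assumed here purely for illustration: \({B}^{\pm }=0\) and \(C=-p\,{I}_{3}\), which interpolates between an unpolarized, uncorrelated state (p = 0) and the spin singlet (p = 1). For this family the smallest eigenvalue of the partially transposed ρ is (1 − 3p)/4, so the witness and the Peres–Horodecki test agree; for general states the witness is only a sufficient condition. The sketch assumes NumPy, and the function names are hypothetical.

```python
import numpy as np

PAULI = [
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
]


def singlet_like_state(p):
    """rho = (I_4 - p * sum_i sigma^i x sigma^i) / 4, i.e. B+- = 0 and C = -p * I."""
    rho = np.eye(4, dtype=complex)
    for s in PAULI:
        rho -= p * np.kron(s, s)
    return rho / 4


def partial_transpose(rho):
    """Transpose the second (antitop) qubit of a 4x4 two-qubit density matrix."""
    r = rho.reshape(2, 2, 2, 2)  # indices (a, b, a', b')
    return r.transpose(0, 3, 2, 1).reshape(4, 4)  # swap b <-> b'


for p in (0.2, 0.3, 0.4, 1.0):
    trace_c = -3 * p
    witness_fires = trace_c + 1 < 0
    min_eig = np.linalg.eigvalsh(partial_transpose(singlet_like_state(p))).min()
    print(f"p={p:.1f}  tr(C)+1={trace_c + 1:+.2f}  witness={witness_fires}  "
          f"min PT eigenvalue={min_eig:+.3f}")
```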

It is more convenient to define an entanglement marker D = tr[C]/3 (ref. 18), which can be measured experimentally as

\[D=-3\langle \cos \varphi \rangle ,\]

where ⟨cos φ⟩ is the average, over an ensemble of events, of the cosine of the angle between the charged-lepton directions (that is, the dot product of their unit direction vectors) after the leptons have been Lorentz boosted first into the \(t\bar{t}\) rest frame and then into the rest frames of their parent top quark and antitop quark. The existence of an entangled state is demonstrated if the measurement satisfies D < −1/3, a condition derived from the Peres–Horodecki criterion35,36 that is independent of the perturbative order of the calculation. The CMS collaboration has already measured D = −0.237 ± 0.011 (ref. 26) inclusively, that is, without restricting \({m}_{t\bar{t}}\), showing no signal of entanglement.
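Integrating the double-differential cross-section over the lepton directions at fixed opening angle gives the single-differential form \((1/\sigma )\,{\rm{d}}\sigma /{\rm{d}}\cos \varphi =(1-D\cos \varphi )/2\), whose mean is \(\langle \cos \varphi \rangle =-D/3\). The sketch below, assuming NumPy and a hypothetical toy value D = −0.5, samples that distribution by accept–reject and estimates D from the sample mean with a simple statistical uncertainty. It ignores acceptance, resolution and backgrounds, which the analysis handles through calibration.

```python
import numpy as np


def sample_cos_phi(d_true, n, rng):
    """Accept-reject sampling of cos(phi) from (1 - D cos(phi)) / 2 on [-1, 1]."""
    f_max = 1 + abs(d_true)  # maximum of (1 - D x) on [-1, 1]
    samples, total = [], 0
    while total < n:
        x = rng.uniform(-1, 1, size=n)
        keep = rng.uniform(0, f_max, size=n) < 1 - d_true * x
        samples.append(x[keep])
        total += keep.sum()
    return np.concatenate(samples)[:n]


def entanglement_marker(cos_phi):
    """D = -3 <cos(phi)> and its statistical uncertainty from the sample spread."""
    d = -3 * np.mean(cos_phi)
    err = 3 * np.std(cos_phi, ddof=1) / np.sqrt(len(cos_phi))
    return d, err


rng = np.random.default_rng(seed=1)
cos_phi = sample_cos_phi(-0.5, 200_000, rng)  # hypothetical toy value of D
d, err = entanglement_marker(cos_phi)
print(f"D = {d:.3f} +- {err:.3f}, entangled (D < -1/3): {d < -1 / 3}")
```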

The standard model is a quantum theory, and entanglement is implicitly present in its predictions. Nevertheless, a demonstration of spin entanglement in \(t\bar{t}\) pairs is challenging because of the inability to control the internal degrees of freedom in the initial state19. Currently, entanglement can be detected only with the help of a dedicated analysis in a restricted phase space such as the one presented here.

The ATLAS detector and event samples

The ATLAS experiment37,38,39 at the LHC is a multipurpose particle detector with a forward–backward symmetric cylindrical geometry and a solid-angle coverage of almost 4π. It records particles produced in LHC collisions through a combination of particle position and energy measurements. The detector consists of an inner-tracking detector surrounded by a thin superconducting solenoid providing a 2 T axial magnetic field, electromagnetic and hadronic calorimeters, and a muon spectrometer. The muon spectrometer surrounds the calorimeters and is based on three large superconducting air-core toroidal magnets with eight coils each, providing a field integral of between 2.0 T m and 6.0 T m across the detector. The coordinate system is defined in the section ‘Object identification in the ATLAS detector’. An extensive software suite40 is used in data simulation, the reconstruction and analysis of real and simulated data, detector operations, and the trigger and data acquisition systems of the experiment. The complete dataset of pp collision events with a centre-of-mass energy of √s = 13 TeV collected with the ATLAS experiment during 2015–2018 is used, corresponding to an integrated luminosity of 140 fb−1. This analysis focuses on the data sample recorded using single-electron or single-muon triggers41.

A unique feature of particle physics is that very precise simulations of the standard model can be realized through the use of Monte Carlo event generators. These simulations replicate real collisions and their resultant particles on an event-by-event basis, and these events can be passed through sophisticated simulations of the ATLAS detector to produce simulated data. Comparing these simulated events with those recorded by the detector is one way to test the predictions of the standard model. Another is to use the simulated data to model how the ATLAS detector responds to a particular physics process, such as the pair production of top quarks, and to use these data to create corrections to undo the effect of the detector response on real data and then to compare these corrected data with theoretical predictions. This measurement uses the latter strategy.

Three distinct types of real and simulated data are used, each with associated physics objects. Detector level refers to real data before they have been corrected for detector effects and simulated data after they have been passed through simulation of the ATLAS detector. Parton level refers to simulated Monte Carlo events in which the particles arise from the fundamental interaction being simulated, such as quarks and bosons, or to real collision data that have been corrected to this level. Particle level refers to simulated data with physics objects that are built only from the stable particles that remain after the decay of the particles that exist at parton level, that is, particles that live long enough to interact with the detector, or to real data that have been corrected to this level. This measurement relies on the selection and reconstruction of muons, electrons, quarks and gluons as hadronic jets, neutrinos as missing transverse momentum (\({{\bf{p}}}_{{\rm{T}}}^{{\rm{miss}}}\)), W bosons and top quarks. These objects are each reconstructed at the detector level, particle level and parton level. Details of how these objects are reconstructed in ATLAS and Monte Carlo simulations are provided in the section ‘Object identification in the ATLAS detector’.

Monte Carlo event simulations are used to model the \(t\bar{t}\) signal and the expected standard model background processes. The production of \(t\bar{t}\) events was modelled using the POWHEG BOX v.2 heavy-quark (hvq) (refs. 42,43,44,45) generator at next-to-leading order (NLO) precision in QCD and the events were interfaced to either PYTHIA 8.230 (ref. 46) or HERWIG 7.2.1 (refs. 47,48) to model the parton shower and hadronization. The decays of the top quarks, including their spin correlations, were modelled at leading-order (LO) precision in QCD. An additional sample that generates \(t\bar{t}\) events at full NLO accuracy in production and decay was generated using the POWHEG BOX RES (bb4ℓ) (refs. 49,50) generator, interfaced to PYTHIA. Further details of the setup and tuning of these generators are provided in the section ‘Monte Carlo simulation’. An important difference between PYTHIA and HERWIG is that the former uses a pT-ordered shower, whereas the latter uses an angular-ordered shower (see section ‘Parton shower and hadronization effects’). Another important consideration is that full information on the spin density matrix is not passed to the parton shower programs and, therefore, is not fully preserved during the shower.

The standard model background processes that contribute to the analysis are the production of a single top quark with a W boson (tW), pair production of top quarks with an additional boson \(t\bar{t}+X\) (X = H, W, Z) and the production of dileptonic events from either one or two massive gauge bosons (W and Z bosons). The generators for the hard-scatter processes and the showering are listed in the section ‘Monte Carlo simulation’. The procedure for identifying and reconstructing detector-level objects is the same for data and Monte Carlo events.

Analysis procedure

Only events taken during stable-beam conditions, and for which all relevant components of the detector were operational, are considered. To be selected, events must have exactly one electron and one muon with opposite-sign electric charges. A minimum of two jets is required, and at least one of them must be identified to originate from a b-hadron (b-tagged).

The background contribution from events with reconstructed objects that are misidentified as leptons, referred to as the ‘fake-lepton’ background, is estimated using a combination of Monte Carlo prediction and a correction based on data. This data-driven correction is obtained from a control region dominated by fake leptons, defined by the same selection criteria as above except that the two leptons must have electric charges of the same sign. The discrepancy between the numbers of observed and predicted events in this region is used to derive a scale factor, which is applied to the predicted fake-lepton events in the signal region.
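The text does not spell out how the observed–predicted discrepancy in the same-sign region is turned into a scale factor. One common choice, assumed here and not confirmed by the source, is the ratio of the fake-lepton yield implied by data (observed events minus the predicted prompt-lepton contribution) to the predicted fake-lepton yield. The function name and all yields below are hypothetical.

```python
def fake_scale_factor(n_data_ss, n_prompt_mc_ss, n_fake_mc_ss):
    """Assumed definition: (data - prompt MC) / fake MC, all in the same-sign region."""
    if n_fake_mc_ss <= 0:
        raise ValueError("the MC fake-lepton prediction must be positive")
    return (n_data_ss - n_prompt_mc_ss) / n_fake_mc_ss


# Hypothetical control-region yields, chosen only for illustration
sf = fake_scale_factor(n_data_ss=1200.0, n_prompt_mc_ss=400.0, n_fake_mc_ss=640.0)
# Scale a hypothetical MC fake-lepton prediction in the signal region
n_fake_sr = sf * 310.0
print(sf, n_fake_sr)
```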

Events that pass the event selection are separated into three analysis regions based on the detector-level, particle-level or parton-level \({m}_{t\bar{t}}\), depending on the level at which the analysis is performed. The signal region is constructed to be dominated by events that are as close to the production threshold as the resolution of the reconstruction method allows, as this is the region in which the entanglement of the top quarks is expected to be maximized.

The optimal mass window for the signal region was determined to be \(340 < {m}_{t\bar{t}} < 380\,{\rm{GeV}}\). Two additional regions are defined to validate the method used for the measurement. First, a region close to the limit in which entanglement is not expected to be observable, and with sizeable dilution from mis-reconstructed events from non-entangled regions, is defined by requiring \(380 < {m}_{t\bar{t}} < 500\,{\rm{GeV}}\). Second, a region in which no signal of entanglement is expected is defined with \({m}_{t\bar{t}} > 500\,{\rm{GeV}}\). Each of the regions has a \(t\bar{t}\)-event purity of more than 90%. The dominant background sources are tW production and fake leptons, accounting for 56% and 27% of the background in the signal region, respectively. The remaining 17% of background events arise from \(t\bar{t}+X\) and the production of dileptonic events from either one or two massive gauge bosons. The distribution of cos φ in the signal region and the detector-level Ddetector value, built from the cos φ at the reconstructed detector level after background subtraction, are shown in Fig. 1a,b.
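The region definitions amount to a small lookup on the invariant mass. In the sketch below, the function name and region labels are hypothetical; the boundaries follow the strict inequalities quoted in the text, and returning `None` for events below 340 GeV (or exactly on a boundary) is an assumption, because the text does not state how such events are treated.

```python
def analysis_region(m_tt_gev):
    """Map an m_tt value in GeV to the region definitions quoted in the text."""
    if 340.0 < m_tt_gev < 380.0:
        return "signal"
    if 380.0 < m_tt_gev < 500.0:
        return "validation_380_500"
    if m_tt_gev > 500.0:
        return "validation_above_500"
    return None  # assumption: outside all three regions


for m in (330.0, 355.0, 420.0, 750.0):
    print(m, analysis_region(m))
```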

Fig. 1. a, The cos φ observable in the signal region at the detector level. b, The entanglement marker D calculated from the detector-level distributions after background processes are subtracted, compared with three Monte Carlo models: the POWHEG + PYTHIA and POWHEG + HERWIG heavy-quark models, labelled Pow+Py (hvq) and Pow+H7 (hvq), respectively, and the POWHEG + PYTHIA bb4ℓ model, labelled Pow + Py (bb4ℓ). The uncertainty band shows the uncertainties from all sources added in quadrature. The ratios of the predictions to the data are shown at the bottom of a and b. The quoted value of D for the bb4ℓ model also includes subtraction of the single-top-quark background.

To compare the data with calculations and correct for detector effects, we must also define an event selection using the ‘truth’ information in the Monte Carlo event record. This selection uses particle-level objects to match as closely as possible the selection at the detector level and is called a fiducial particle-level selection. Particle-level events are required to contain exactly one electron and one muon with opposite-sign electric charges and at least two particle-level jets, one of which must contain a b-hadron. The cos φ distribution is then constructed from the particle-level top quarks and charged leptons in the same manner as at the detector level.

The response of the detector, the event selections and the top-quark reconstruction distort the shape of the cos φ distribution. The observed distribution is corrected for these effects with a simple method: a simulation-based calibration curve that connects any value at the detector level to the corresponding value at the particle level. After subtracting the expected contribution from background processes, we correct the data for detector effects using a separate calibration curve for the signal region and for each validation region, each built from the expected signal model. Owing to the limited resolution of the reconstructed mass of the \(t\bar{t}\) system, some events that truly belong to the validation regions can enter the signal region at the detector level. These events are treated as detector effects.

To build these curves, Monte Carlo event samples with alternative values of D are created by reweighting the events, following the procedure described in the section ‘Reweighting the cos φ distribution’. The calibration curve corrects the value Ddetector measured at the detector level to the corresponding value Dparticle at the particle level. To construct it, several hypotheses with different values of D are created, each corresponding to a change in the expected amount of entanglement; each hypothesis yields a particle-level value \({D}_{{\rm{particle}}}^{{\prime} }\) and a corresponding detector-level value \({D}_{{\rm{detector}}}^{{\prime} }\).
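The reweighting procedure itself is described in a section that is not part of this excerpt. As a minimal sketch, assume each simulated event carries a truth-level cos φ and that the target shape is \((1-{D}^{{\prime} }\cos \varphi )/2\); the per-event weight \(w=(1-{D}^{{\prime} }\cos \varphi )/(1-{D}_{0}\cos \varphi )\) then maps a nominal sample with marker \({D}_{0}\) onto one with marker \({D}^{{\prime} }\). The function names and the uniform toy sample (\({D}_{0}=0\)) are hypothetical.

```python
import numpy as np


def reweight_to(cos_phi_truth, d_nominal, d_target):
    """Per-event weights turning a (1 - D0 x)/2 shape into (1 - D' x)/2 (assumed form)."""
    return (1 - d_target * cos_phi_truth) / (1 - d_nominal * cos_phi_truth)


def weighted_marker(cos_phi, weights):
    """D = -3 <cos(phi)> computed with event weights."""
    return -3 * np.average(cos_phi, weights=weights)


rng = np.random.default_rng(seed=7)
# Toy nominal sample: uniform in cos(phi), i.e. D0 = 0 (hypothetical)
cos_phi = rng.uniform(-1, 1, size=500_000)
for d_target in (-0.6, -0.4, -0.2):
    w = reweight_to(cos_phi, 0.0, d_target)
    print(d_target, round(weighted_marker(cos_phi, w), 3))
```

Carrying the same per-event weights to the detector-level and particle-level versions of each event would give one \(({D}_{{\rm{detector}}}^{{\prime} },{D}_{{\rm{particle}}}^{{\prime} })\) pair per hypothesis.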

The pairs of \({D}_{{\rm{detector}}}^{{\prime} }\) and \({D}_{{\rm{particle}}}^{{\prime} }\) are plotted in Fig. 2a. A straight line interpolates between the points. With this calibration curve, any value for Ddetector can be calibrated to the particle level.
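The straight-line calibration can be sketched with NumPy's `polyfit`. The \(({D}_{{\rm{detector}}}^{{\prime} },{D}_{{\rm{particle}}}^{{\prime} })\) points below are invented for illustration and are not the values in Fig. 2a; the fitted line is then used to map a hypothetical detector-level value to the particle level.

```python
import numpy as np

# Hypothetical (D'_detector, D'_particle) pairs from reweighted samples
d_detector_points = np.array([-0.40, -0.35, -0.30, -0.25, -0.20])
d_particle_points = np.array([-0.62, -0.53, -0.44, -0.35, -0.26])

# Straight line D_particle = slope * D_detector + intercept
# (np.polyfit returns the highest-degree coefficient first)
slope, intercept = np.polyfit(d_detector_points, d_particle_points, deg=1)


def calibrate(d_detector):
    """Map a detector-level D to the particle level with the fitted line."""
    return slope * d_detector + intercept


print(round(calibrate(-0.33), 3))
```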

Fig. 2. a, Calibration curve relating the particle-level value of D to the detector-level value of D in the signal region. The yellow band represents the statistical uncertainty, and the grey band represents the total uncertainty obtained by adding the statistical and systematic uncertainties in quadrature. The measured values and expected values from POWHEG + PYTHIA 8 (hvq) are marked with black and red circles, respectively, and the entanglement limit is shown as a dashed line. b, The particle-level D results in the signal and validation regions compared with various Monte Carlo models. The entanglement limit shown is a conversion from its parton-level value of D = −1/3 to the corresponding value at the particle level, and the uncertainties that are considered for the band are described in the text.

Three categories of uncertainties are included in the calibration curves: uncertainties in modelling \(t\bar{t}\) production and decay, uncertainties in modelling the backgrounds and detector-related uncertainties for both the \(t\bar{t}\) signal and the standard model background processes. Each source of systematic uncertainty can result in a different calibration curve because it changes the shape of the cos φ distribution at the particle level and/or detector level. For each source of systematic uncertainty, the data are corrected using this new calibration curve, and the resultant deviation from the data corrected by the nominal curve is taken as the systematic uncertainty of the data due to that source. Systematic uncertainties from all sources are summed in quadrature to determine the final uncertainty in the result.
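The per-source propagation through a varied calibration curve and the quadrature sum can be sketched as follows. Curves are represented as hypothetical (slope, intercept) pairs, and the source names and numbers are invented; only the logic (correct with each varied curve, take the shift from the nominal result, sum shifts in quadrature) follows the text.

```python
import math


def calibrate(d_detector, curve):
    """Apply a straight-line calibration curve given as (slope, intercept)."""
    slope, intercept = curve
    return slope * d_detector + intercept


def propagate_systematics(d_detector_obs, nominal_curve, varied_curves):
    """Return nominal corrected D, the shift per source, and the quadrature total."""
    d_nominal = calibrate(d_detector_obs, nominal_curve)
    shifts = {name: calibrate(d_detector_obs, curve) - d_nominal
              for name, curve in varied_curves.items()}
    total = math.sqrt(sum(shift ** 2 for shift in shifts.values()))
    return d_nominal, shifts, total


# Hypothetical curves and detector-level value, for illustration only
nominal = (1.8, 0.10)
varied = {"hypothetical_source_a": (1.8, 0.13), "hypothetical_source_b": (1.8, 0.06)}
d_nominal, shifts, total = propagate_systematics(-0.33, nominal, varied)
print(round(d_nominal, 3), {k: round(v, 3) for k, v in shifts.items()}, round(total, 3))
```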

For all of the detector-related uncertainties, the particle-level quantity is not affected and only detector-level values change. For signal modelling uncertainties, the effects at the particle level propagate to the detector level, resulting in shifts in both. Uncertainties in modelling the background processes affect how much background is subtracted from the expected or observed data and can, therefore, cause changes in the calibration curve. These uncertainties are treated as fully correlated between the signal and background (that is, if a source of systematic uncertainty is expected to affect both the signal and background processes, this is estimated simultaneously and not separately).

A summary of the different sources of systematic uncertainty and their impact on the result is given in Table 1. The size of each systematic uncertainty depends on the value of D and is given in Table 1 for the standard model prediction, calculated with POWHEG + PYTHIA. The systematic uncertainties considered in the analysis are described in detail in the section ‘Systematic uncertainties’.

To compare the particle-level result with the parton-level entanglement limit D < −1/3, the limit must be folded to the particle level. A second calibration curve is constructed to relate the value of Dparton to the corresponding Dparticle. The definitions of parton-level top quarks and leptons in the Monte Carlo generator follow ref. 24 and correspond approximately to those of stable top quarks and leptons in a fixed-order calculation. Only systematic uncertainties related to the modelling of the \(t\bar{t}\) production and decay process are considered while building this calibration curve. The migration of the parton-level events from the signal region into the validation regions at the particle level and vice versa is very small.

The calibration procedure is performed in the signal region and the two validation regions to correct the data to a fiducial phase space at the particle level, as described in the previous section. All systematic uncertainties are included in the three regions. The observed (expected) results are

in the signal region of \(340 < {m}_{t\bar{t}} < 380\,{\rm{GeV}}\) and

in the validation regions of \(380 < {m}_{t\bar{t}} < 500\,{\rm{GeV}}\) and \({m}_{t\bar{t}} > 500\,{\rm{GeV}}\), respectively. The expected values are those predicted by POWHEG + PYTHIA. The calibration curve for the signal region and a summary of the results in all regions are presented in Fig. 2.

The observed values of the entanglement marker D are compared with the entanglement limit in Fig. 2b. The parton-level bound D = −1/3 is converted to a particle-level bound by folding the limit to particle level to better highlight the differences between the predictions using different parton shower orderings. For POWHEG + PYTHIA, this yields −0.322 ± 0.009, in which the uncertainty includes all uncertainties in the POWHEG + PYTHIA model except the parton shower uncertainty (for more details of these uncertainties, see section ‘Systematic uncertainties’). Similarly, for POWHEG + HERWIG, with an angular-ordered parton shower, a value of −0.27 is obtained. No uncertainties are assigned in this case because it is merely used as an alternative model.

Discussion

In both of the validation regions, with no entanglement signal, the measurements are found to agree with the predictions from different Monte Carlo setups within the uncertainties. This serves as a consistency check to validate the method used for the measurement.

Although the different models yield different predictions, the current precision of the measurements in the validation regions does not allow us to rule out any of the Monte Carlo setups that were used. Close to the threshold, non-relativistic QCD processes, such as Coulomb bound-state effects, affect the production of \(t\bar{t}\) events28 and are not accounted for in the Monte Carlo generators. The main impact of these effects is to change the line shape of the \({m}_{t\bar{t}}\) spectrum. The impact of these missing effects was tested by introducing them through an ad hoc reweighting of the Monte Carlo based on theoretical predictions, and the effect was found to be 0.5%. Other systematic uncertainties, related to the top-quark decay (1.6%) and the top-quark mass (0.7%), similarly change the line shape within our experimental resolution and have a larger impact. Therefore, the ad hoc reweighting is not included by default in the measurement, because including it would not change the sensitivity of the result within the quoted precision.

In the signal region, the POWHEG + PYTHIA and POWHEG + HERWIG generators yield different predictions. The size of the observed difference is consistent with changing the method of shower ordering and is discussed in detail in the section ‘Parton shower and hadronization effects’.

In the signal region, the observed and expected significances with respect to the entanglement limit are well beyond five standard deviations, independently of the Monte Carlo model used to correct the entanglement limit to the fiducial phase space of the measurement. Fig. 2b shows this comparison together with the no-entanglement hypothesis. The observed result in the region with \(340\,{\rm{GeV}} < {m}_{t\bar{t}} < 380\,{\rm{GeV}}\) establishes the formation of entangled \(t\bar{t}\) states. This constitutes the first observation of entanglement in a quark–antiquark pair.
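As a rough cross-check of the quoted significance, one can compare the observed particle-level value with the folded POWHEG + PYTHIA limit, assuming Gaussian uncertainties and no correlation between the measurement and the limit. This back-of-the-envelope estimate is not the statistical procedure used in the analysis; it only reuses numbers quoted in the text.

```python
import math

# Values quoted in the text (particle level, signal region)
d_obs, stat_obs, syst_obs = -0.537, 0.002, 0.019
d_limit, unc_limit = -0.322, 0.009  # POWHEG + PYTHIA limit folded to particle level

sigma_obs = math.hypot(stat_obs, syst_obs)
# Assumption: measurement and limit uncertainties are independent and Gaussian
sigma_tot = math.hypot(sigma_obs, unc_limit)
z = (d_limit - d_obs) / sigma_tot
print(f"naive separation: {z:.1f} standard deviations")
```

With these inputs the naive separation is about ten standard deviations, in line with the statement that it is well beyond five.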

Apart from the fundamental interest in testing quantum entanglement in a new environment, this measurement in top quarks paves the way to use high-energy colliders, such as the LHC, as a laboratory to study quantum information and foundational problems in quantum mechanics. From a quantum information perspective, high-energy colliders are particularly interesting because of their relativistic nature and the richness of the interactions and symmetries that can be probed there. Furthermore, highly demanding measurements, such as measuring quantum discord and reconstructing the steering ellipsoid, can be naturally implemented at the LHC because of the vast number of available \(t\bar{t}\) events51. From a high-energy physics perspective, borrowing concepts from quantum information theory inspires new approaches and observables that can be used to search for physics beyond the standard model52,53,54,55.

Methods

Object identification in the ATLAS detector

ATLAS uses a right-handed coordinate system with its origin at the nominal interaction point in the centre of the detector and the z-axis along the beam pipe. The x-axis points from the interaction point to the centre of the LHC ring, and the y-axis points upwards. Cylindrical coordinates (r, ϕ) are used in the transverse plane, where ϕ is the azimuthal angle around the z-axis. The pseudorapidity is defined in terms of the polar angle θ as η = −ln tan(θ/2). Angular distance is measured as \(\Delta R\equiv \sqrt{{(\Delta \eta )}^{2}+{(\Delta \phi )}^{2}}\).
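The pseudorapidity and angular-distance definitions translate directly into code. The sketch below assumes angles in radians and wraps Δϕ into [−π, π), a detail implied by ϕ being an azimuthal angle but not written out in the text; the function names are hypothetical.

```python
import math


def pseudorapidity(theta):
    """eta = -ln tan(theta / 2) for a polar angle theta in (0, pi), in radians."""
    return -math.log(math.tan(theta / 2))


def delta_phi(phi1, phi2):
    """Azimuthal difference wrapped into [-pi, pi)."""
    return (phi1 - phi2 + math.pi) % (2 * math.pi) - math.pi


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance Delta R = sqrt(Delta eta^2 + Delta phi^2)."""
    return math.hypot(eta1 - eta2, delta_phi(phi1, phi2))


# Two objects on either side of phi = +-pi are close in angle, not 6.2 rad apart
print(delta_r(0.0, 3.1, 0.0, -3.1))
```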

Reconstructed (detector-level) objects are defined as follows. Electron candidates are required to satisfy the ‘tight’ likelihood-based identification requirement as well as calorimeter- and track-based isolation criteria56 and to have pseudorapidity ∣η∣ < 1.37 or 1.52 < ∣η∣ < 2.47. Muon candidates are required to satisfy the ‘medium’ identification requirement as well as track-based isolation criteria57,58,59 and to have ∣η∣ < 2.5. Electrons and muons must have a minimum transverse momentum (pT) of 25–28 GeV, depending on the data-taking period. Showers of particles (jets) that arise from the hadronization of quarks and gluons60 are reconstructed from particle-flow objects61 using the anti-kt algorithm62,63 with a radius parameter R = 0.4, a pT threshold of 25 GeV and a ∣η∣ < 2.5 requirement. Because an object can satisfy both the jet and the lepton selection criteria, an overlap-removal procedure is applied so that each object is assigned to a single hypothesis. First, any electron candidate that shares a track with a muon candidate is removed. Next, jets within ΔR = 0.2 of an electron are removed, and electrons within 0.2 < ΔR < 0.4 of any remaining jet are then rejected. Jets that have fewer than three tracks and are within ΔR = 0.2 of a muon candidate are removed, and muons within ΔR = 0.4 of any remaining jet are discarded. A Jet-Vertex-Tagger (JVT) requirement is applied to jets with pT < 60 GeV and ∣η∣ < 2.4 to suppress jets originating from additional interactions in the same or neighbouring bunch crossings (pile-up)64. Jets are tagged as containing b-hadrons using the DL1r tagger65 with a b-tagging efficiency of 85%. Missing transverse momentum (\({{\bf{p}}}_{{\rm{T}}}^{{\rm{miss}}}\)) (refs. 66,67) is determined from the imbalance in the transverse momenta of all reconstructed objects.
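Because the overlap-removal sequence is order-dependent, writing it out step by step may help. This is a simplified sketch: the `PhysicsObject` type and its `track_id` and `n_tracks` fields are hypothetical, objects are described only by (η, ϕ), and only the ΔR and track-count criteria quoted above are modelled.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class PhysicsObject:
    eta: float
    phi: float
    track_id: Optional[int] = None  # hypothetical ID of the lepton's inner-detector track
    n_tracks: int = 0               # number of associated tracks (used for jets)


def delta_r(a, b):
    dphi = (a.phi - b.phi + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)


def overlap_removal(electrons, muons, jets):
    # 1. Remove electrons that share a track with a muon.
    muon_tracks = {m.track_id for m in muons if m.track_id is not None}
    electrons = [e for e in electrons if e.track_id not in muon_tracks]
    # 2. Remove jets within dR < 0.2 of an electron.
    jets = [j for j in jets if all(delta_r(j, e) >= 0.2 for e in electrons)]
    # 3. Reject electrons within dR < 0.4 of a remaining jet. Surviving jets are
    #    already at dR >= 0.2 from every electron, so this is the 0.2-0.4 annulus.
    electrons = [e for e in electrons if all(delta_r(e, j) >= 0.4 for j in jets)]
    # 4. Remove jets with fewer than three tracks within dR < 0.2 of a muon.
    jets = [j for j in jets
            if j.n_tracks >= 3 or all(delta_r(j, m) >= 0.2 for m in muons)]
    # 5. Discard muons within dR < 0.4 of any remaining jet.
    muons = [m for m in muons if all(delta_r(m, j) >= 0.4 for j in jets)]
    return electrons, muons, jets


# Toy usage with hypothetical objects
e1 = PhysicsObject(0.0, 0.0, track_id=1)
e2 = PhysicsObject(1.0, 1.0, track_id=2)
m1 = PhysicsObject(1.0, 1.0, track_id=2)
m2 = PhysicsObject(2.05, 2.0, track_id=3)
j1 = PhysicsObject(0.1, 0.0, n_tracks=10)
j2 = PhysicsObject(0.0, 0.3, n_tracks=10)
j3 = PhysicsObject(2.0, 2.0, n_tracks=2)
els, mus, jts = overlap_removal([e1, e2], [m1, m2], [j1, j2, j3])
print(len(els), len(mus), len(jts))
```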

To measure D, the top quarks must be reconstructed from their measured decay products. In the \(t\bar{t}\) dileptonic decay, apart from charged leptons and jets, there are two neutrinos that are not measured by the detector. Several methods are available to reconstruct the top quarks from the detector-level charged leptons, jets and \({{\bf{p}}}_{{\rm{T}}}^{{\rm{miss}}}\). The main method used in this work is the Ellipse method68, a geometric approach that calculates the neutrino momenta analytically. This method yields at least one real solution in 85% of events. The solution with the lowest top-quark pair invariant mass is always chosen, to populate the region close to the threshold. If this method fails (for example, if all the resulting solutions are complex), the Neutrino Weighting method69 is used. This method scans possible values of the neutrino pseudorapidities and assigns a weight to each resulting solution according to the compatibility of the neutrino momenta with the \({{\bf{p}}}_{{\rm{T}}}^{{\rm{miss}}}\) in the event. In this analysis, the Neutrino Weighting method is used only in a small fraction of events (about 5%). Furthermore, in ref. 24 it was used in all events, and its performance was found to be the same in samples that include and exclude spin correlation. If both methods fail, each lepton is paired with its closest b-tagged jet and these pairs are used as proxies for the top and antitop quarks, with no attempt to reconstruct the neutrinos. If a second b-tagged jet is not present in the event, the highest-pT untagged jet is used instead. In all cases, a W boson mass of 80.4 GeV and a top-quark mass of 172.5 GeV are used as input parameters.
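The order in which the reconstruction methods are tried can be sketched as a fallback chain. The three solver callables are hypothetical stand-ins (the Ellipse and Neutrino Weighting algorithms themselves are not implemented here), and four-vectors are assumed to be (E, px, py, pz) tuples in GeV.

```python
import math


def mass(v):
    """Invariant mass of an (E, px, py, pz) four-vector, clipped at zero."""
    e, px, py, pz = v
    return math.sqrt(max(e * e - px * px - py * py - pz * pz, 0.0))


def m_ttbar(solution):
    """Invariant mass of a (top, antitop) pair of four-vectors."""
    top, antitop = solution
    return mass(tuple(a + b for a, b in zip(top, antitop)))


def reconstruct_ttbar(event, ellipse_solver, nu_weighting_solver, simple_pairing):
    """Try the methods in the order given in the text; the solvers are hypothetical."""
    solutions = ellipse_solver(event)  # assumed: list of real (top, antitop) solutions
    if solutions:
        # Keep the lowest-m_tt solution to populate the region near threshold.
        return min(solutions, key=m_ttbar), "ellipse"
    best = nu_weighting_solver(event)  # assumed: highest-weight solution, or None
    if best is not None:
        return best, "neutrino_weighting"
    # Last resort: lepton + closest b-tagged jet as top proxies (no neutrinos).
    return simple_pairing(event), "lepton_plus_closest_b_jet"


# Toy usage with stub solvers and hypothetical four-vectors in GeV
s_high = ((200.0, 0.0, 0.0, 50.0), (200.0, 0.0, 0.0, -50.0))  # m_tt = 400
s_low = ((180.0, 0.0, 0.0, 10.0), (180.0, 0.0, 0.0, -10.0))   # m_tt = 360
solution, method = reconstruct_ttbar(
    {}, lambda ev: [s_high, s_low], lambda ev: None, lambda ev: None)
print(method, m_ttbar(solution))
```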

In simulated events, parton-level objects are taken directly from the Monte Carlo history information and are required to have a status code of 1, indicating that they are the fundamental particles (partons) of the interaction. Top quarks are required to be partons that decay to a W boson and a b quark, whereas charged leptons are required to be the immediate decay products of the W boson from the top-quark decay. Particle-level objects are built from the simulated stable particles after hadronization but before the simulation of the detector response. A particle within the pseudorapidity acceptance of the detector is defined as stable if its mean lifetime is greater than 30 ps. The selection criteria for the particle-level objects are chosen to correspond as closely as possible to those applied to the detector-level objects. Electrons, muons and neutrinos are required to come from the electroweak decay of a top quark and are discarded if they arise from the decay of a hadron or a τ-lepton. Electrons and muons are then ‘dressed’ by adding to their four-momenta those of any prompt photons within ΔR = 0.1. Electrons and muons must also be well separated from jet activity: if they lie within ΔR < 0.4 of a jet, they are removed from the event. Leptons are also required to have pT > 10 GeV and ∣η∣ < 2.5, and at least one lepton must have pT > 25 GeV. Jets are built by clustering all stable particles using the anti-kt algorithm with a radius parameter of R = 0.4, and are tagged as containing b-hadrons if they have at least one ghost-matched b-hadron70,71 with pT > 5 GeV. Jets are also required to have pT > 25 GeV and ∣η∣ < 2.5. Each W boson is reconstructed by combining an electron with an electron neutrino, or a muon with a muon neutrino.
The top quark and antitop quark are reconstructed by pairing the reconstructed W bosons with the two leading b-tagged jets or, in events with only one b-tagged jet, with the b-tagged jet and the highest-pT untagged jet. Both possible jet–W-boson combinations are formed, and the one that minimizes \(|{m}_{t}-m({W}_{1}+{b}_{1/2})|+|{m}_{t}-m({W}_{2}+{b}_{2/1})|\) is taken as the correct pairing, where mt denotes the top-quark mass, b1 and b2 denote the two jets selected for the reconstruction, W1 and W2 are the reconstructed W bosons and m is the invariant mass of the objects in brackets.
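The pairing criterion amounts to comparing the two possible jet assignments and keeping the one with the smaller summed mass deviation. A minimal sketch, assuming four-vectors stored as (E, px, py, pz) tuples in GeV; the function names are illustrative, and mt = 172.5 GeV is the value used in the analysis.

```python
import math
from typing import Tuple

Vec4 = Tuple[float, float, float, float]  # (E, px, py, pz) in GeV


def invariant_mass(a: Vec4, b: Vec4) -> float:
    """Invariant mass of the two-body system a + b."""
    e, px, py, pz = (x + y for x, y in zip(a, b))
    return math.sqrt(max(e * e - px * px - py * py - pz * pz, 0.0))


def pair_w_with_b(w1: Vec4, w2: Vec4, b1: Vec4, b2: Vec4, m_top: float = 172.5):
    """Return the (W, b) assignment minimising |mt - m(W1+b)| + |mt - m(W2+b')|."""
    def cost(jet_for_w1: Vec4, jet_for_w2: Vec4) -> float:
        return (abs(m_top - invariant_mass(w1, jet_for_w1))
                + abs(m_top - invariant_mass(w2, jet_for_w2)))

    # Compare the direct assignment (b1, b2) with the swapped one (b2, b1).
    if cost(b1, b2) <= cost(b2, b1):
        return (w1, b1), (w2, b2)
    return (w1, b2), (w2, b1)
```

For example, with a W boson at rest, `w1 = (80.4, 0, 0, 0)`, and a massless b-jet `b2 = (144.85, 0, 0, 144.85)`, the pair W1 + b2 has an invariant mass of about 172.5 GeV, so that assignment is preferred whenever the cross-pairing deviates more from mt.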

Monte Carlo simulation

The production of \(t\bar{t}\) events was modelled using the POWHEG BOX v.2 heavy-quark (hvq) (refs. 42,43,44,45) event generator. This generator uses matrix elements calculated at next-to-leading-order (NLO) accuracy in QCD with the NNPDF3.0NLO (ref. 72) parton distribution function (PDF) set and the hdamp parameter set to 1.5mt (ref. 73). The hdamp parameter is a resummation damping factor and one of the parameters that control the matching of POWHEG matrix elements to the parton shower; it thus effectively regulates the high-pT radiation against which the \(t\bar{t}\) system recoils. The decays of the top quarks, including their spin correlations, were modelled at leading-order (LO) precision in QCD. As an alternative, the POWHEG BOX RES (refs. 49,50) event generator, which was developed to treat decaying resonances within the POWHEG BOX framework and includes off-shell and non-resonant effects in the matrix element calculation, was used to produce an additional event sample, labelled bb4ℓ in the following. Although bb4ℓ is the higher-precision Monte Carlo sample, it cannot be compared directly with the data after they are corrected for detector effects, because its off-shell component cannot be removed in a formally correct way. However, the effect of using this model was tested approximately and was found not to change the conclusions of the measurement significantly.

In the bb4ℓ event sample, spin correlations are calculated at NLO, and full NLO accuracy in \(t\bar{t}\) production and decay is attained. To model the parton shower, hadronization and underlying event, the events from both POWHEG BOX v.2 and POWHEG BOX RES were interfaced to PYTHIA 8.230 (ref. 46), with parameters set according to the A14 set of tuned parameters74 and using the NNPDF2.3LO set of PDFs75. The events from POWHEG BOX v.2 (hvq) were also interfaced to HERWIG 7.2.1 (refs. 47,48), using the HERWIG 7.2.1 default set of tuned parameters. The decays of bottom and charm hadrons were performed by EVTGEN 1.6.0 (ref. 76). The spin information from the matrix element calculation is not passed to the parton shower programs and is therefore not fully preserved during the shower.

All simulated event samples include pile-up interactions, and the events are reweighted to reproduce the observed distribution of the average number of collisions per bunch crossing.

Reweighting the cos φ distribution

To construct the calibration curve, templates must be extracted for alternative scenarios with different degrees of entanglement, and therefore with different values of D. The degree of entanglement is intrinsic to the calculations of the Monte Carlo event generators. However, the effects of entanglement can be accessed directly through D, which is measured from the mean of the cos φ distribution. Therefore, an event-by-event reweighting based on D is used to vary the degree of entanglement. Although the measurement uses detector-level and particle-level objects, the observable D is changed at the parton level, where it is directly related to the entanglement between the top and antitop spins. Each event is therefore reweighted according to its parton-level values of \({m}_{t\bar{t}}\) and cos φ, as described below.

The entanglement marker D is extracted at the parton level from the cos φ distribution using either the mean of the distribution, \(D=-3\langle \cos \varphi \rangle\), or the slope of the normalized differential cross-section, \((1/\sigma ){\rm{d}}\sigma /{\rm{d}}\,\cos \,\varphi =(1/2)(1-D\,\cos \,\varphi )\).
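Integrating \(\cos\varphi\) against \((1/2)(1-D\cos\varphi)\) over [−1, 1] gives \(\langle\cos\varphi\rangle=-D/3\), which is why the two extractions agree. The toy below checks this numerically: it assumes an ideal parton-level distribution with a hypothetical input value D = −0.5, draws cos φ by inverse-transform sampling, and recovers D both from the mean and from a straight-line fit to the normalized histogram. It involves no detector effects, backgrounds or calibration.

```python
import numpy as np


def sample_cos_phi(d: float, n: int, rng: np.random.Generator) -> np.ndarray:
    """Draw cos(phi) from (1/2)(1 - D cos(phi)) on [-1, 1] by inverting the CDF.

    Solving F(x) = u gives x = -(2 + D - 4u) / (1 + sqrt((1 + D)^2 - 4 D u)),
    a form that stays finite at D = 0 (valid for |D| <= 1).
    """
    u = rng.random(n)
    return -(2.0 + d - 4.0 * u) / (1.0 + np.sqrt((1.0 + d) ** 2 - 4.0 * d * u))


def d_from_mean(cos_phi: np.ndarray) -> float:
    """D = -3 <cos(phi)>."""
    return -3.0 * float(np.mean(cos_phi))


def d_from_slope(cos_phi: np.ndarray, n_bins: int = 20) -> float:
    """Fit the normalized histogram with a + b cos(phi); since b = -D/2, D = -2b."""
    density, edges = np.histogram(cos_phi, bins=n_bins, range=(-1.0, 1.0), density=True)
    centres = 0.5 * (edges[:-1] + edges[1:])
    slope, _intercept = np.polyfit(centres, density, 1)
    return -2.0 * float(slope)


rng = np.random.default_rng(seed=1)
toy = sample_cos_phi(-0.5, 1_000_000, rng)
print(d_from_mean(toy), d_from_slope(toy))  # both close to -0.5
```

With a million toy events, both estimates agree with the input to within a few per mille; the mean is the simpler of the two because it needs no binning or fit.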

For simplicity, the analysis always uses the mean of the distribution; the two methods are equivalent. For the purpose of reweighting, however, the slope of the cos φ distribution must be changed at the parton level. Each event is reweighted according to this slope, which in turn changes the distributions at the particle and detector levels.

The observable D depends on the invariant mass of the \(t\bar{t}\) system, \({m}_{t\bar{t}}\). To perform the reweighting, the differential value of D as a function of \({m}_{t\bar{t}}\), \({D}_{\varOmega }({m}_{t\bar{t}})\), has to be calculated. This is achieved by fitting a third-order polynomial of the form

\[{D}_{\varOmega }({m}_{t\bar{t}})={x}_{0}+{x}_{1}\,{m}_{t\bar{t}}+{x}_{2}\,{m}_{t\bar{t}}^{2}+{x}_{3}\,{m}_{t\bar{t}}^{3},\]

where x0, x1, x2 and x3 are constants. This parametrization was found to describe the Monte Carlo prediction for \({D}_{\varOmega }({m}_{t\bar{t}})\) well. The values of the parameters depend on the Monte Carlo event generator and have to be determined for the nominal sample and for each of the \(t\bar{t}\) theory systematic uncertainties, because these change the parton-level cos φ values and thus \({D}_{\varOmega }({m}_{t\bar{t}})\).

The reweighting method is a simple scaling of the cos φ distribution according to the desired new value of D. This is done by assigning a weight w to each event at parton level as

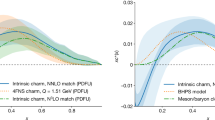

where \({\mathcal{X}}\) is the scaling hypothesis for D. For example, \({\mathcal{X}}=1.2\) means that D is scaled up by 20% relative to its nominal value. To build the calibration curve, four alternative values of D are considered, with \({\mathcal{X}}=0.4,0.6,0.8\) and 1.2, in addition to the nominal value without reweighting (\({\mathcal{X}}=1.0\)). Note that these \({\mathcal{X}}\) values change D across the entire \({m}_{t\bar{t}}\) spectrum. Extended Data Fig. 1 shows the parton-level distribution of D in the signal region before and after reweighting.
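The event weight is the ratio of the target to the nominal parton-level shape, \((1-{\mathcal{X}}D_{\varOmega}\cos\varphi)/(1-D_{\varOmega}\cos\varphi)\), so its average over the nominal sample is 1 and the weighted mean of cos φ becomes \(-{\mathcal{X}}D_{\varOmega}/3\). A minimal sketch of this per-event weight, assuming the cubic parametrization of \(D_{\varOmega}(m_{t\bar{t}})\); the function names are illustrative and the coefficients passed in are hypothetical placeholders, not fitted values.

```python
import numpy as np


def d_omega(m_tt: np.ndarray, coeffs) -> np.ndarray:
    """Cubic parametrization D_Omega(m_tt) = x0 + x1*m + x2*m^2 + x3*m^3."""
    x0, x1, x2, x3 = coeffs
    return x0 + x1 * m_tt + x2 * m_tt**2 + x3 * m_tt**3


def entanglement_weight(cos_phi, m_tt, scale, coeffs):
    """Per-event weight turning (1 - D cos phi) into (1 - scale * D cos phi).

    Weights stay positive only while |scale * D_Omega(m_tt)| < 1.
    """
    d = d_omega(m_tt, coeffs)
    return (1.0 - scale * d * cos_phi) / (1.0 - d * cos_phi)
```

In practice the coefficients would come from a fit to binned parton-level D values, for example `np.polyfit(m_centres, d_values, 3)[::-1]`, since `np.polyfit` returns the highest power first. The weighted estimate \(-3\sum w\cos\varphi/\sum w\) then recovers \({\mathcal{X}}\) times the nominal D.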

Background modelling

Simulated data in the form of Monte Carlo samples were produced using either the full ATLAS detector simulation77 based on the GEANT4 framework78 or, for the estimation of some of the systematic uncertainties, a faster simulation with parameterized showers in the calorimeters79. The effect of pile-up was modelled by overlaying each hard-scattering event with inelastic pp collisions generated with PYTHIA 8.186 (ref. 80) using the NNPDF2.3LO set of PDFs75 and the A3 set of tuned parameters81. Except for the events simulated with SHERPA, the EVTGEN program was used to simulate bottom and charm hadron decays. If not mentioned otherwise, the top-quark mass was set to mt = 172.5 GeV. All event samples that were interfaced with PYTHIA used the A14 set of tuned parameters74 and the NNPDF2.3LO PDF set.

Single-top quark tW associated production was modelled using the POWHEG BOX v.2 (refs. 43,44,45,82) event generator, which provides matrix elements at NLO in the strong coupling constant αs in the five-flavour scheme with the NNPDF3.0NLO (ref. 72) PDF set. The renormalization and factorization scales were set to the default scale, which is equal to the top-quark mass. The diagram-removal scheme83 was used to handle the interference with \(t\bar{t}\) production73. The inclusive cross-section was corrected to the theoretical prediction calculated at NLO in QCD with next-to-next-to-leading-logarithm (NNLL) soft-gluon corrections84,85. For pp collisions at a centre-of-mass energy of √s = 13 TeV, this cross-section corresponds to σ(tW)NLO+NNLL = 71.7 ± 3.8 pb. The uncertainty in the cross-section due to the PDF was estimated using the MSTW2008NNLO 90% CL (refs. 86,87) PDF set and was added in quadrature to the effect of the scale uncertainty.

Samples of diboson final states (VV), where V denotes a W or Z boson, were simulated with the SHERPA 2.2.2 (ref. 88) event generator, including off-shell effects and Higgs boson contributions, where appropriate. Fully leptonic final states and semileptonic final states, in which one boson decays leptonically and the other hadronically, were generated using matrix elements at NLO accuracy in QCD for up to one additional parton and at LO accuracy for up to three additional parton emissions. Samples for the loop-induced processes gg → VV were generated using LO-accurate matrix elements for up to one additional parton emission for both the cases of fully leptonic and semileptonic final states. The matrix element calculations were matched and merged with the SHERPA parton shower based on Catani–Seymour dipole factorization89,90 using the MEPS@NLO prescription91,92,93,94. The virtual QCD corrections were provided by the OPENLOOPS library95,96,97. The NNPDF3.0NNLO set of PDFs was used72, along with the dedicated set of tuned parton-shower parameters developed by the SHERPA authors.

The production of V + jets events was simulated with the SHERPA 2.2.11 (ref. 88) event generator using NLO matrix elements for up to two partons, and LO matrix elements for up to five partons, calculated with the Comix (ref. 89) and OPENLOOPS 2 (refs. 95,96,97,98) libraries. They were matched with the SHERPA parton shower90 using the MEPS@NLO prescription91,92,93,94. The set of tuned parameters developed by the SHERPA authors was used, along with the NNPDF3.0NNLO set of PDFs72.

The production of \(t\bar{t}V\) events was modelled using the MADGRAPH5_AMC@NLO 2.3.3 (ref. 99) event generator, which provides matrix elements at NLO in the strong coupling constant αs with the NNPDF3.0NLO (ref. 72) PDFs. The functional form of the renormalization and factorization scales was set to \(0.5\times {\sum }_{i}\sqrt{{m}_{i}^{2}+{p}_{{\rm{T}},i}^{2}}\), where the sum runs over all the particles generated from the matrix element calculation. Top quarks were decayed at LO using MADSPIN (refs. 100,101) to preserve spin correlations. The events were interfaced with PYTHIA 8.210 (ref. 46) for the simulation of parton showering and hadronization. The cross-sections were calculated at NLO QCD and NLO EW accuracy using MADGRAPH5_AMC@NLO as reported in ref. 102. For \(t\bar{t}{\ell }{\ell }\) events, the cross-section was scaled by an off-shell correction estimated at one-loop level in αs.

The production of \(t\bar{t}H\) events was modelled using the POWHEG BOX v.2 (refs. 42,43,44,45,103) event generator, which provides matrix elements at NLO in the strong coupling constant αs in the five-flavour scheme with the NNPDF3.0NLO (ref. 72) PDF set. The functional form of the renormalization and factorization scales was set to \(\sqrt[3]{{m}_{{\rm{T}}}(t)\cdot {m}_{{\rm{T}}}(\bar{t})\cdot {m}_{{\rm{T}}}(H)}\). The events were interfaced with PYTHIA 8.230. The cross-section was calculated at NLO QCD and NLO EW accuracy using MADGRAPH5_AMC@NLO as reported in ref. 102. The predicted value at √s = 13 TeV is \({507}_{-50}^{+35}\,{\rm{fb}}\), for which the uncertainties were estimated from variations of both αs and the renormalization and factorization scales.

The shapes of the kinematic distributions for the background from non-prompt or fake leptons were modelled using simulated events, with Monte Carlo event generator information used to distinguish events with prompt leptons from events with non-prompt or fake leptons. The normalization of this background was obtained from data in a dedicated control region, which uses the same basic event selection as the signal and validation regions except that the electron and muon must have electric charges of the same sign. In this control region, the number of simulated prompt-lepton events is subtracted from the observed number of data events, and the remainder is divided by the number of simulated fake-lepton events, giving a normalization factor of 1.4. This scale factor is then applied to the simulated fake-lepton events in the signal and validation regions.
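The normalization described above is a single ratio of event yields in the same-sign control region. A minimal sketch with a hypothetical function name; the yields in the usage note are invented placeholders chosen only to reproduce a factor of 1.4, not the analysis numbers.

```python
def fake_lepton_scale_factor(n_data: float, n_prompt_mc: float, n_fake_mc: float) -> float:
    """Same-sign control-region normalization for simulated fake/non-prompt leptons.

    (observed data - simulated prompt-lepton events) / simulated fake-lepton events
    """
    if n_fake_mc <= 0:
        raise ValueError("the simulated fake-lepton yield must be positive")
    return (n_data - n_prompt_mc) / n_fake_mc
```

With hypothetical yields of 1000 data events, 300 simulated prompt-lepton events and 500 simulated fake-lepton events, `fake_lepton_scale_factor(1000, 300, 500)` gives 1.4; the simulated fake-lepton yields in the signal and validation regions would then be multiplied by this factor.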

Systematic uncertainties

The systematic uncertainties can be divided into three separate categories: signal modelling uncertainties, which stem from the theory prediction of \(t\bar{t}\) production; object systematic uncertainties, which arise from the uncertainty in the detector response to objects used in the analysis; and background modelling systematic uncertainties, which are related to the theory prediction of the standard model backgrounds. All systematic uncertainties, grouped according to their sources, are described in the following sections. The signal modelling uncertainties were found to dominate the overall uncertainty of this measurement.

For each source of systematic uncertainty, a new calibration curve is created and the simulated (or observed) data are corrected with it, yielding a shifted corrected result. In most cases, the systematic uncertainty is taken as the difference between the nominal expected (or observed) result and the systematically shifted result. When a systematic shift affects only the background model (for example, background cross-section uncertainties), the systematically shifted background sample is instead subtracted from the data before the calibration is performed. One-sided uncertainties are symmetrized; for asymmetric uncertainties, the larger of the two variations is symmetrized. The estimated sizes of the dominant signal modelling uncertainties are presented in Extended Data Table 1.
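The symmetrization rules above can be written as a short helper. This is a sketch with a hypothetical function name: a one-sided variation contributes its full shift as a symmetric ± uncertainty, and for a two-sided, possibly asymmetric, variation the larger shift is used.

```python
from typing import Optional


def symmetrized_uncertainty(nominal: float, up: float, down: Optional[float] = None) -> float:
    """Symmetric systematic uncertainty on a calibrated result.

    One-sided variation: its full shift is used as a symmetric +/- uncertainty.
    Two-sided (possibly asymmetric) variation: the larger shift is symmetrized.
    """
    shifts = [abs(up - nominal)]
    if down is not None:
        shifts.append(abs(down - nominal))
    return max(shifts)
```

For example, a hypothetical shift from −0.537 to −0.530 gives ±0.007, and adding a down variation at −0.547 raises it to ±0.010.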

Signal modelling uncertainties

Signal modelling uncertainties are those related to the choice of POWHEG BOX + PYTHIA as the nominal Monte Carlo setup, as well as those affecting the theoretical calculation itself. These systematic uncertainties are evaluated in two forms: alternative event generators and weights. For the alternative-generator uncertainties, the difference between the calibrated values of D obtained with the nominal and the alternative generator is taken as the systematic uncertainty. For the uncertainties involving weights, the difference between the calibrated D values for the nominal sample and the weight-shifted sample is taken as the uncertainty. These uncertainties follow the description in ref. 104 and are enumerated as follows:

- pThard setting: the region of phase space that is vetoed in the shower when matching to the parton shower is varied by changing the internal pThard parameter of POWHEG BOX from 0 to 1, as described in ref. 105.

- Top-quark decay: the uncertainty in the modelling of the decay of the top quarks and of the \({m}_{t\bar{t}}\) line shape is estimated by comparing the nominal decay in POWHEG BOX with the decays modelled with MADSPIN (refs. 100,101). This uncertainty shifts the \({m}_{t\bar{t}}\) line shape to lower or higher values, which alters the degree of entanglement of the events entering the signal region. It is therefore one of the most impactful sources of systematic uncertainty.

- NNLO QCD + NLO EW reweighting: the uncertainty due to missing higher-order corrections is estimated by reweighting the top-quark pT, the pT of the \(t\bar{t}\) system and the \({m}_{t\bar{t}}\) spectra at parton level to match the predicted NNLO QCD and NLO EW differential cross-sections106,107.

- Parton shower and hadronization: this uncertainty is estimated by comparing two different parton-shower and hadronization algorithms, PYTHIA and HERWIG, interfaced with the same matrix element event generator (POWHEG BOX).

- Recoil scheme: the nominal sample uses a recoil scheme in which the partons recoil against b-quarks. This recoil scheme changes the modelling of the second and subsequent gluon emissions from quarks produced in coloured resonance decays, such as the b-quark in a top-quark decay, and therefore affects how the momentum is rearranged between the W boson and the b-quark. An alternative sample is produced in which the recoil is set to be against the top quark itself for the second and subsequent emissions108.

- Scale uncertainties: the renormalization and factorization scales in the nominal POWHEG setup are raised and lowered by a factor of 2, including simultaneous variations in the same direction. The envelope of the results from all of these variations is taken as the final uncertainty.

- Initial-state radiation: the uncertainty due to initial-state radiation is estimated using the Var3c up and down variations of the A14 tune, as described in ref. 109.

- Final-state radiation: the impact of final-state radiation is evaluated by doubling or halving the renormalization scale for emissions from the parton shower.

- PDF: the systematic uncertainty due to the choice of PDF is assessed using the PDF4LHC15 eigenvector decomposition110. For each of the 30 eigenvectors, the full difference between the results obtained with the nominal and the varied PDF is taken and symmetrized. The quadrature sum of all variations is given in Extended Data Table 1.

- hdamp setting: the hdamp parameter is the resummation damping factor that regulates the high-pT radiation against which the \(t\bar{t}\) system recoils. The systematic uncertainty due to its chosen value is assessed by comparing the nominal POWHEG + PYTHIA result with one in which the hdamp parameter is increased by a factor of two.

- Top-quark mass: the effect of the top-quark mass uncertainty is examined by comparing the nominal sample with alternative samples that use mt = 172 GeV or 173 GeV in the simulation.

Object systematic uncertainties

The following systematic uncertainties originate from the uncertainty in the detector response to the objects used in the analysis.

- Electrons: the systematic uncertainties considered for electrons arise mainly from uncertainties in their trigger, reconstruction, identification and isolation efficiencies, and are estimated using tag-and-probe measurements in Z and J/ψ decays56,111. Electron-related systematic uncertainties have a negligible impact on the final measurement, with a total contribution of about 0.2%.

- Muons: the systematic uncertainties considered for muons arise from uncertainties in their trigger, identification and isolation efficiencies, and in their energy scale and resolution, and are estimated using tag-and-probe measurements in Z and J/ψ decays57,58,59. Muon-related systematic uncertainties have a negligible impact on the final measurement, with a total contribution of about 0.3%.

- Jets: the systematic uncertainties associated with jets are separated into those related to the jet-energy scale and resolution (JES and JER)60 and those related to the JVT algorithm64. The JES and JER uncertainties consist of 31 and 13 individual components, respectively, which are added in quadrature with the JVT uncertainty to obtain the total jet uncertainty. The largest contribution from a single source is 0.2%.

- b-Tagging: the estimation of these uncertainties is described in ref. 112. A total of 17 independent systematic variations are considered: 9 related to b-hadrons, 4 related to c-hadrons and 4 related to light-jet misidentification. In addition, two high-pT extrapolation uncertainties are taken into account. The largest contribution from a single systematic variation is 0.4%.

- \({E}_{{\rm{T}}}^{{\rm{miss}}}\): all object-based uncertainties are propagated, fully correlated, to the reconstruction of \({E}_{{\rm{T}}}^{{\rm{miss}}}\), the magnitude of the \({{\bf{p}}}_{{\rm{T}}}^{{\rm{miss}}}\) vector of the event. In addition, some uncertainties are specific to the reconstruction of \({E}_{{\rm{T}}}^{{\rm{miss}}}\) and concern soft tracks not matched to leptons or jets. These are divided into parallel and perpendicular response components and a scale uncertainty66, and have a negligible effect on the measurement.

- Pile-up: the effect of pile-up was modelled by overlaying the simulated hard-scattering events with inelastic pp events. To assess the systematic uncertainty due to pile-up, the reweighting performed to match the simulation to data is varied within its uncertainty64. The resulting uncertainty has an effect of less than 0.1%.

- Luminosity: the luminosity uncertainty changes only the normalization of the signal and background samples. Because D is calculated from the normalized cos φ distribution, it is not affected by varying the sample normalization. However, the total expected statistical uncertainty can be affected by the luminosity uncertainty. This analysis uses the latest integrated luminosity estimate of 140.1 ± 1.2 fb−1 (ref. 113). Its uncertainty affects the measurement by less than 0.1%.

Background modelling systematic uncertainties

Background events are a relatively small source of uncertainty in this measurement because the event selection and top-quark reconstruction, especially the \({m}_{t\bar{t}}\) constraint, tend to suppress them. The uncertainties and their sources are listed in the following:

- Single top quark: two uncertainties are considered for the single-top-quark background: a cross-section uncertainty of 5.3% based on the NNLO cross-section uncertainty85, and an uncertainty in the choice of scheme used to remove higher-order diagrams that overlap with the \(t\bar{t}\) process. For the latter, the nominal POWHEG + PYTHIA sample, generated with the diagram-removal scheme83, was compared with an alternative sample generated using the diagram-subtraction scheme73,83. The cross-section uncertainty has a 0.4% effect on the measurement, whereas the choice of diagram scheme has less than 0.1% effect.

- \(t\bar{t}+X\): a normalization uncertainty is considered for each of the \(t\bar{t}+X\) backgrounds: a cross-section uncertainty of \({}_{-12 \% }^{+10 \% }\) for \(t\bar{t}+Z\) and \({}_{-12 \% }^{+13 \% }\) for \(t\bar{t}+W\). Both are based on the NLO cross-section uncertainty derived from the renormalization and factorization scale variations and PDF uncertainties in the matrix element calculation. These uncertainties have a negligible effect on the measurement because the \(t\bar{t}+X\) processes make a very small contribution to the signal region.

- Diboson: a normalization uncertainty of ±10% is considered for the diboson process to account for the difference between the NLO precision of the SHERPA event generator and the precision of the theoretical cross-sections calculated to NNLO in QCD with NLO EW corrections. This simple K-factor approach is taken, rather than a more elaborate prescription, because the diboson background is small and the phase space selected by the analysis (\({m}_{t\bar{t}} < 380\) GeV) is unlikely to be sensitive to the shape effects of the EW corrections, which are typically observed in high-pT tails. This uncertainty has less than 0.1% effect on the measurement.

- Z → ττ: a conservative cross-section uncertainty of ±20% is applied to the Z → ττ background. It covers the uncertainty in the cross-section prediction (which is much smaller than this variation) as well as some mismodelling of the rate of associated heavy-flavour production, which is typically seen in the ee and μμ channels of dileptonic \(t\bar{t}\) analyses and was estimated to be a 5% (3%) effect in previous iterations of this analysis that included the ee (μμ) channel. The assumption is conservative: because no pure Z → ττ control region can be isolated in which to estimate this effect, additional lepton-flavour-related effects present in the ee and μμ channels are also included. This is the largest background-related uncertainty, with an impact of 0.8% on the measurement. It is large despite the small size of this background because the reconstruction-level cos φ distribution of Z → ττ events is quite flat; subtracting even a relatively small amount of this background can therefore noticeably shift the mean of the overall cos φ distribution, and hence the D observable.

- Fake and non-prompt leptons: a normalization uncertainty of ±50% is assigned to cover the uncertainty in the total yield of fake or non-prompt leptons in the signal region relative to the same-sign control region, ensuring adequate coverage of our understanding of the rates of these types of events. This conservative value is based on the observed level of agreement between data and Monte Carlo simulation in the same-sign region. The uncertainty has only a 0.1% effect on the final measurement.

Most of the systematic uncertainties considered have a negligible effect on the measurement, and the dominant ones arise mostly from the signal modelling. The same holds for the validation regions.

Parton shower and hadronization effects

The studies described in the following were performed to understand in more detail why the different parton-shower and hadronization algorithms yield different values for the entanglement- and spin-correlation-related observables. The nominal Monte Carlo sample was produced with the NLO matrix element implemented in POWHEG BOX (hvq). The four-momenta produced with POWHEG BOX were interfaced with either PYTHIA or HERWIG for the parton shower, hadronization and underlying-event model.

At the parton level, the two predictions are nearly identical, whereas at the stable-particle and detector levels they show larger differences in the shape of the cos φ distribution. A parton-level measurement would therefore suffer from this ambiguity in the modelled cos φ distribution, whereas the particle-level measurement presented in this paper does not. An extensive suite of studies was performed to understand the origin of this difference.

Apart from using different parameter-tuning strategies, there are two main differences between the two parton-shower algorithms: their hadronization model and the shower ordering. Whereas PYTHIA is based on the Lund string model and uses a pT-ordered dipole shower114,115,116, the HERWIG samples used in this study are based on a cluster model and use an angular-ordered shower as the default117.

A comparison between Monte Carlo simulations with different hadronization models was performed. In one study, SHERPA was used with either a string or a cluster model for hadronization; in the other, HERWIG 7 was used, again comparing a string and a cluster model. In both cases, changing the hadronization model was shown to have a negligible effect on the cos φ distribution, both without a requirement on \({m}_{t\bar{t}}\) and in a smaller part of phase space close to the signal region of the analysis, with \({m}_{t\bar{t}} < 380\,{\rm{GeV}}\). Instead, most of the differences seem to originate from the different orderings in the parton shower. To illustrate this, different event generator setups were used for simulation and the corresponding cos φ distributions were compared at particle level. The cos φ distributions for the POWHEG + PYTHIA and POWHEG + HERWIG samples used in the analysis are shown in Extended Data Fig. 2a, together with distributions for two different setups of HERWIG 7 in Extended Data Fig. 2b. In these setups, HERWIG 7 was used both for the production of the \(t\bar{t}\) events and for the parton shower, hadronization and underlying event. The samples were produced at LO, using either a dipole shower or an angular-ordered shower. All distributions are normalized to unity. A difference of up to 6% is observed in the ratio of the POWHEG + HERWIG to the POWHEG + PYTHIA distribution. The same behaviour is observed when comparing the two different shower orderings in HERWIG.

The similarities between the samples used in this analysis and the HERWIG samples with different showering orders imply that the ordering of the shower is the main cause of the observed differences. It has to be noted, however, that POWHEG does not pass the spin correlation information to the parton shower algorithms, whereas this is done in the LO HERWIG setup used to study these hadronization effects.

These findings lead to the conclusion that performing the measurement at the particle level is more attractive, because the difference between the predictions that arises when extrapolating from the parton to the particle level is then isolated rather than taken as a full systematic uncertainty. In the validation regions, the level of agreement with the data is similar for POWHEG + PYTHIA and POWHEG + HERWIG. As the measurement is performed at the stable-particle level, the parton-level prediction for the entanglement limit was also folded to the particle level, using a dedicated calibration curve for this step. The prediction for the entanglement limit with POWHEG + HERWIG is further from the measured value than that with POWHEG + PYTHIA. This difference is not symmetrized. All uncertainties in the POWHEG + PYTHIA prediction itself are also folded to the particle level and are included in the grey uncertainty band in Fig. 2b.

The procedure used in Monte Carlo event generators to combine the matrix element with a parton-shower algorithm requires special attention in future higher-precision quantum information studies at the LHC.

Data availability

Raw data were generated by the ATLAS experiment. Derived data supporting the findings of this study are available from the ATLAS Collaboration upon request.

Code availability

The ATLAS data reduction software is available at Zenodo (https://doi.org/10.5281/zenodo.4772550) (ref. 118). Statistical modelling and analysis are based on the ROOT software and its embedded RooFit and RooStats modules, available at Zenodo (https://doi.org/10.5281/zenodo.3895852) (ref. 119). Code to configure these statistical tools and to process their output is available upon request.

References

Einstein, A., Podolsky, B. & Rosen, N. Can quantum-mechanical description of physical reality be considered complete? Phys. Rev. 47, 777–780 (1935).

Schrödinger, E. Discussion of probability relations between separated systems. Math. Proc. Camb. Philos. Soc. 31, 555–563 (1935).

Bell, J. S. On the Einstein Podolsky Rosen paradox. Phys. Phys. Fizika 1, 195–200 (1964).

Bennett, C. H. & DiVincenzo, D. P. Quantum information and computation. Nature 404, 247–255 (2000).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information (Cambridge Univ. Press, 2000).

Marciniak, C. D. et al. Optimal metrology with programmable quantum sensors. Nature 603, 604–609 (2022).

Horodecki, R., Horodecki, P., Horodecki, M. & Horodecki, K. Quantum entanglement. Rev. Mod. Phys. 81, 865–942 (2009).

Casini, H. & Huerta, M. Lectures on entanglement in quantum field theory. Proc. Sci. 403, 002 (2022).

Aspect, A., Grangier, P. & Roger, G. Experimental realization of Einstein-Podolsky-Rosen-Bohm gedankenexperiment: a new violation of Bell’s inequalities. Phys. Rev. Lett. 49, 91–94 (1982).

Hagley, E. et al. Generation of Einstein-Podolsky-Rosen pairs of atoms. Phys. Rev. Lett. 79, 1–5 (1997).

Steffen, M. et al. Measurement of the entanglement of two superconducting qubits via state tomography. Science 313, 1423–1425 (2006).

Pfaff, W. et al. Demonstration of entanglement-by-measurement of solid-state qubits. Nat. Phys. 9, 29–33 (2013).

Belle Collaboration. Measurement of Einstein-Podolsky-Rosen-type flavor entanglement in Υ(4S) → \(B^{0}\bar{B}^{0}\) decays. Phys. Rev. Lett. 99, 131802 (2007).

Julsgaard, B., Kozhekin, A. & Polzik, E. S. Experimental long-lived entanglement of two macroscopic objects. Nature 413, 400–403 (2001).

Lee, K. C. et al. Entangling macroscopic diamonds at room temperature. Science 334, 1253–1256 (2011).

Ockeloen-Korppi, C. F. et al. Stabilized entanglement of massive mechanical oscillators. Nature 556, 478–482 (2018).

Peres, A. & Terno, D. R. Quantum information and relativity theory. Rev. Mod. Phys. 76, 93–123 (2004).

Afik, Y. & de Nova, J. R. M. Entanglement and quantum tomography with top quarks at the LHC. Eur. Phys. J. Plus 136, 907 (2021).

Afik, Y. & de Nova, J. R. M. Quantum information with top quarks in QCD. Quantum 6, 820 (2022).

Particle Data Group et al. Review of particle physics. Prog. Theor. Exp. Phys. 2022, 083C01 (2022).

Mahlon, G. & Parke, S. J. Angular correlations in top quark pair production and decay at hadron colliders. Phys. Rev. D 53, 4886–4896 (1996).

ATLAS Collaboration. Observation of spin correlation in \(t\bar{t}\) events from pp collisions at √s = 7 TeV using the ATLAS detector. Phys. Rev. Lett. 108, 212001 (2012).

ATLAS Collaboration. Measurement of spin correlation in top–antitop quark events and search for top squark pair production in pp collisions at √s = 8 TeV using the ATLAS detector. Phys. Rev. Lett. 114, 142001 (2015).

ATLAS Collaboration. Measurements of top-quark pair spin correlations in the eμ channel at √s = 13 TeV using pp collisions in the ATLAS detector. Eur. Phys. J. C 80, 754 (2020).

CMS Collaboration. Measurements of \(t\bar{t}\) spin correlations and top-quark polarization using dilepton final states in pp collisions at √s = 7 TeV. Phys. Rev. Lett. 112, 182001 (2014).

CMS Collaboration. Measurement of the top quark polarization and \(t\bar{t}\) spin correlations using dilepton final states in proton-proton collisions at √s = 13 TeV. Phys. Rev. D 100, 072002 (2019).

Bernreuther, W., Flesch, M. & Haberl, P. Signatures of Higgs bosons in the top quark decay channel at hadron colliders. Phys. Rev. D 58, 114031 (1998).

Kiyo, Y., Kühn, J. H., Moch, S., Steinhauser, M. & Uwer, P. Top-quark pair production near threshold at LHC. Eur. Phys. J. C 60, 375–386 (2009).

Kühn, J. H. & Mirkes, E. QCD corrections to toponium production at hadron colliders. Phys. Rev. D 48, 179–189 (1993).

Petrelli, A., Cacciari, M., Greco, M., Maltoni, F. & Mangano, M. L. NLO production and decay of quarkonium. Nucl. Phys. B 514, 245–309 (1998).

Clauser, J. F. Experimental distinction between the quantum and classical field-theoretic predictions for the photoelectric effect. Phys. Rev. D 9, 853–860 (1974).

Brukner, Č., Vedral, V. & Zeilinger, A. Crucial role of quantum entanglement in bulk properties of solids. Phys. Rev. A 73, 012110 (2006).

Wölk, S., Huber, M. & Gühne, O. Unified approach to entanglement criteria using the Cauchy-Schwarz and Hölder inequalities. Phys. Rev. A 90, 022315 (2014).

de Nova, J. R. M., Sols, F. & Zapata, I. Violation of Cauchy-Schwarz inequalities by spontaneous Hawking radiation in resonant boson structures. Phys. Rev. A 89, 043808 (2014).

Peres, A. Separability criterion for density matrices. Phys. Rev. Lett. 77, 1413–1415 (1996).

Horodecki, P. Separability criterion and inseparable mixed states with positive partial transposition. Phys. Lett. A 232, 333–339 (1997).

ATLAS Collaboration. The ATLAS experiment at the CERN Large Hadron Collider. J. Instrum. 3, S08003 (2008).

ATLAS Collaboration. ATLAS Insertable B-Layer: Technical Design Report. Report No. CERN-LHCC-2010-013, ATLAS-TDR-19 (CERN, 2010).

Abbott, B. et al. Production and integration of the ATLAS Insertable B-Layer. J. Instrum. 13, T05008 (2018).

ATLAS Collaboration. The ATLAS Collaboration Software and Firmware. Report No. ATL-SOFT-PUB-2021-001 https://cds.cern.ch/record/2767187 (CERN, 2021).

ATLAS Collaboration. Performance of the ATLAS trigger system in 2015. Eur. Phys. J. C 77, 317 (2017).

Frixione, S., Nason, P. & Ridolfi, G. A positive-weight next-to-leading-order Monte Carlo for heavy flavour hadroproduction. J. High Energy Phys. 09, 126 (2007).

Nason, P. A new method for combining NLO QCD with shower Monte Carlo algorithms. J. High Energy Phys. 11, 040 (2004).

Frixione, S., Nason, P. & Oleari, C. Matching NLO QCD computations with parton shower simulations: the Powheg method. J. High Energy Phys. 11, 070 (2007).

Alioli, S., Nason, P., Oleari, C. & Re, E. A general framework for implementing NLO calculations in shower Monte Carlo programs: the Powheg Box. J. High Energy Phys. 06, 043 (2010).

Sjöstrand, T. et al. An introduction to Pythia 8.2. Comput. Phys. Commun. 191, 159–177 (2015).

Bähr, M. et al. Herwig++ physics and manual. Eur. Phys. J. C 58, 639–707 (2008).

Bellm, J. et al. Herwig 7.2 release note. Eur. Phys. J. C 80, 452 (2020).

Ježo, T. & Nason, P. On the treatment of resonances in next-to-leading order calculations matched to a parton shower. J. High Energy Phys. 12, 065 (2015).

Ježo, T., Lindert, J. M., Nason, P., Oleari, C. & Pozzorini, S. An NLO+PS generator for \(t\bar{t}\) and Wt production and decay including non-resonant and interference effects. Eur. Phys. J. C 76, 691 (2016).

Afik, Y. & de Nova, J. R. M. Quantum discord and steering in top quarks at the LHC. Phys. Rev. Lett. 130, 221801 (2023).

Aoude, R., Madge, E., Maltoni, F. & Mantani, L. Quantum SMEFT tomography: top quark pair production at the LHC. Phys. Rev. D 106, 055007 (2022).

Fabbrichesi, M., Floreanini, R. & Gabrielli, E. Constraining new physics in entangled two-qubit systems: top-quark, tau-lepton and photon pairs. Eur. Phys. J. C 83, 162 (2023).

Severi, C. & Vryonidou, E. Quantum entanglement and top spin correlations in SMEFT at higher orders. J. High Energy Phys. 01, 148 (2023).

Aoude, R., Madge, E., Maltoni, F. & Mantani, L. Probing new physics through entanglement in diboson production. J. High Energy Phys. 12, 017 (2023).

ATLAS Collaboration. Electron and photon performance measurements with the ATLAS detector using the 2015–2017 LHC proton–proton collision data. J. Instrum. 14, P12006 (2019).

ATLAS Collaboration. Muon reconstruction and identification efficiency in ATLAS using the full Run 2 pp collision data set at √s = 13 TeV. Eur. Phys. J. C 81, 578 (2021).

ATLAS Collaboration. Muon reconstruction performance of the ATLAS detector in proton–proton collision data at √s = 13 TeV. Eur. Phys. J. C 76, 292 (2016).

ATLAS Collaboration. Studies of the muon momentum calibration and performance of the ATLAS detector with pp collisions at √s = 13 TeV. Eur. Phys. J. C 83, 686 (2023).

ATLAS Collaboration. Jet energy scale and resolution measured in proton–proton collisions at √s = 13 TeV with the ATLAS detector. Eur. Phys. J. C 81, 689 (2021).

ATLAS Collaboration. Jet reconstruction and performance using particle flow with the ATLAS detector. Eur. Phys. J. C 77, 466 (2017).

Cacciari, M., Salam, G. P. & Soyez, G. The anti-\(k_{t}\) jet clustering algorithm. J. High Energy Phys. 04, 063 (2008).

Cacciari, M., Salam, G. P. & Soyez, G. FastJet user manual. Eur. Phys. J. C 72, 1896 (2012).

ATLAS Collaboration. Performance of pile-up mitigation techniques for jets in pp collisions at √s = 8 TeV using the ATLAS detector. Eur. Phys. J. C 76, 581 (2016).

ATLAS Collaboration. ATLAS flavour-tagging algorithms for the LHC Run 2 pp collision dataset. Eur. Phys. J. C 83, 681 (2023).

ATLAS Collaboration. Performance of missing transverse momentum reconstruction with the ATLAS detector using proton–proton collisions at √s = 13 TeV. Eur. Phys. J. C 78, 903 (2018).

ATLAS Collaboration. \({E}_{{\rm{T}}}^{{\rm{miss}}}\) performance in the ATLAS detector using 2015–2016 LHC pp collisions. Report No. ATLAS-CONF-2018-023 (CERN, 2018).

Betchart, B. A., Demina, R. & Harel, A. Analytic solutions for neutrino momenta in decay of top quarks. Nucl. Instrum. Meth. A 736, 169–178 (2014).

D0 Collaboration. Measurement of the top quark mass using dilepton events. Phys. Rev. Lett. 80, 2063–2068 (1998).

Cacciari, M., Salam, G. P. & Soyez, G. The catchment area of jets. J. High Energy Phys. 04, 005 (2008).

Cacciari, M. & Salam, G. P. Pileup subtraction using jet areas. Phys. Lett. B 659, 119–126 (2008).

NNPDF Collaboration. Parton distributions for the LHC run II. J. High Energy Phys. 04, 040 (2015).

ATLAS Collaboration. Studies on Top-Quark Monte Carlo Modelling for Top2016. Report No. ATL-PHYS-PUB-2016-020 (CERN, 2016).

ATLAS Collaboration. ATLAS Pythia 8 Tunes to 7 TeV Data. Report No. ATL-PHYS-PUB-2014-021 (CERN, 2014).

NNPDF Collaboration. Parton distributions with LHC data. Nucl. Phys. B 867, 244–289 (2013).

Lange, D. J. The EvtGen particle decay simulation package. Nucl. Instrum. Meth. A 462, 152–155 (2001).

ATLAS Collaboration. The ATLAS Simulation Infrastructure. Eur. Phys. J. C 70, 823–874 (2010).

Agostinelli, S. et al. Geant4—a simulation toolkit. Nucl. Instrum. Meth. A 506, 250–303 (2003).

ATLAS Collaboration. The Simulation Principle and Performance of the ATLAS Fast Calorimeter Simulation FastCaloSim. Report No. ATL-PHYS-PUB-2010-013 (CERN, 2010).

Sjöstrand, T., Mrenna, S. & Skands, P. A brief introduction to Pythia 8.1. Comput. Phys. Commun. 178, 852–867 (2008).

ATLAS Collaboration. The Pythia 8 A3 Tune Description of ATLAS Minimum Bias and Inelastic Measurements Incorporating the Donnachie–Landshoff Diffractive Model. Report No. ATL-PHYS-PUB-2016-017 (CERN, 2016).

Re, E. Single-top Wt-channel production matched with parton showers using the Powheg method. Eur. Phys. J. C 71, 1547 (2011).

Frixione, S., Laenen, E., Motylinski, P., White, C. & Webber, B. R. Single-top hadroproduction in association with a W boson. J. High Energy Phys. 07, 029 (2008).

Kidonakis, N. Two-loop soft anomalous dimensions for single top quark associated production with a W− or H−. Phys. Rev. D 82, 054018 (2010).

Kidonakis, N. Top quark production. In Proc. Helmholtz International Summer School on Physics of Heavy Quarks and Hadrons (HQ 2013), 139–168 (INSPIRE, 2014).

Martin, A. D., Stirling, W. J., Thorne, R. S. & Watt, G. Parton distributions for the LHC. Eur. Phys. J. C 63, 189–285 (2009).

Martin, A. D., Stirling, W. J., Thorne, R. S. & Watt, G. Uncertainties on αS in global PDF analyses and implications for predicted hadronic cross sections. Eur. Phys. J. C 64, 653–680 (2009).

Bothmann, E. et al. Event generation with Sherpa 2.2. SciPost Phys. 7, 034 (2019).

Gleisberg, T. & Höche, S. Comix, a new matrix element generator. J. High Energy Phys. 12, 039 (2008).

Schumann, S. & Krauss, F. A parton shower algorithm based on Catani–Seymour dipole factorisation. J. High Energy Phys. 03, 038 (2008).

Höche, S., Krauss, F., Schönherr, M. & Siegert, F. A critical appraisal of NLO+PS matching methods. J. High Energy Phys. 09, 049 (2012).

Höche, S., Krauss, F., Schönherr, M. & Siegert, F. QCD matrix elements + parton showers. The NLO case. J. High Energy Phys. 04, 027 (2013).

Catani, S., Krauss, F., Kuhn, R. & Webber, B. R. QCD matrix elements + parton showers. J. High Energy Phys. 11, 063 (2001).

Höche, S., Krauss, F., Schumann, S. & Siegert, F. QCD matrix elements and truncated showers. J. High Energy Phys. 05, 053 (2009).

Buccioni, F. et al. OpenLoops 2. Eur. Phys. J. C 79, 866 (2019).

Cascioli, F., Maierhöfer, P. & Pozzorini, S. Scattering amplitudes with open loops. Phys. Rev. Lett. 108, 111601 (2012).

Denner, A., Dittmaier, S. & Hofer, L. Collier: a Fortran-based complex one-loop library in extended regularizations. Comput. Phys. Commun. 212, 220–238 (2017).

Buccioni, F., Pozzorini, S. & Zoller, M. On-the-fly reduction of open loops. Eur. Phys. J. C 78, 70 (2018).

Alwall, J. et al. The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. J. High Energy Phys. 07, 079 (2014).