Abstract

Encouraging routine COVID-19 vaccinations is likely to be a crucial policy challenge for decades to come. To avert hundreds of thousands of unnecessary hospitalizations and deaths, adoption will need to be higher than it was in the autumn of 2022 or 2023, when less than one-fifth of Americans received booster vaccines1,2. One approach to encouraging vaccination is to eliminate the friction of transportation hurdles. Previous research has shown that friction can hinder follow-through3 and that individuals who live farther from COVID-19 vaccination sites are less likely to get vaccinated4. However, the value of providing free round-trip transportation to vaccination sites is unknown. Here we show that offering people free round-trip Lyft rides to pharmacies has no benefit over and above sending them behaviourally informed text messages reminding them to get vaccinated. We determined this by running a megastudy with millions of CVS Pharmacy patients in the United States testing the effects of (1) free round-trip Lyft rides to CVS Pharmacies for vaccination appointments and (2) seven different sets of behaviourally informed vaccine reminder messages. Our results suggest that offering previously vaccinated individuals free rides to vaccination sites is not a good investment in the United States, contrary to the high expectations of both expert and lay forecasters. Instead, people in the United States should be sent behaviourally informed COVID-19 vaccination reminders, which increased the 30-day COVID-19 booster uptake by 21% (1.05 percentage points) and spilled over to increase 30-day influenza vaccinations by 8% (0.34 percentage points) in our megastudy. More rigorous testing of interventions to promote vaccination is needed to ensure that evidence-based solutions are deployed widely and that ineffective but intuitively appealing tools are discontinued.

Similar content being viewed by others

Main

In the first 10 months after COVID-19 vaccines became available, they prevented an estimated 235,000 deaths and averted 1.6 million hospitalizations in the United States alone5. However, as of April 2023, at least 19% of Americans had still not received their first, free COVID-19 vaccine dose6, and 65% had not received all recommended, free COVID-19 booster immunizations to avert the waning efficacy of vaccines after 6–8 months1,7,8. This lack of uptake helps explain why nearly 500 Americans were still dying every day from COVID-19 in early 2023 (ref. 9). The US Food and Drug Administration has announced that reformulated COVID-19 vaccines may be recommended annually for all Americans10. To avert hundreds of thousands of unnecessary hospitalizations and deaths in the decades to come, booster vaccine adoption will need to be higher than it was in the autumn of 2022 or 2023, when less than 20% of Americans received bivalent boosters1,2.

This raises the question of how COVID-19 booster vaccination can be increased. Although vaccine mandates are effective11,12,13, they are not always popular or feasible12,13,14. Moreover, in the United States, cash incentives for vaccination have proven surprisingly ineffective15,16,17,18. By contrast, nudges sent by text message from a healthcare provider reminding Americans to get vaccinated have yielded measurable benefits, and the reminders that work are remarkably cost-effective19,20,21. A promising untested approach to encouraging vaccination is to eliminate transportation hurdles. This could add value, given that small amounts of friction can hinder follow-through3. Indeed, people who live farther from COVID-19 vaccination sites have proven less likely to get vaccinated4. Alternatively, it could be as unproductive as offering other vaccination rewards or inducements.

Here we evaluate whether providing free transportation to vaccination sites can increase vaccination. A cardinal finding from the choice architecture literature is that small transaction costs have an outsized impact on behaviour3. For example, changing the default choice on a form so that it is frictionless to enrol in a savings plan or to become an organ donor substantially increases the number of savers and organ donors, respectively22,23. Meanwhile, reducing friction in the college financial aid application process by helping senior high school students to complete paperwork markedly increases college enrolment 2 years later24. In the context of COVID-19, it has been shown that individuals who happen to live farther from vaccination sites are less likely to get vaccinated4, whereas employees who walked by work-site flu vaccination clinics for other reasons were more likely to get their flu vaccination25. Vaccine accessibility is a challenge for many populations, and it has received widespread media attention26,27,28. Thus, making vaccines more accessible through free round-trip rides to appointments would seem to be a good investment.

However, if lack of transportation were a key barrier to vaccination, then cash rewards could be used to fund rides to vaccination sites, and cash rewards have had no measurable impact on the Americans’ decisions to receive COVID-19 vaccines15,16,17,18. Furthermore, cash rewards offer a more flexible solution to accessibility hurdles than a free ride, which cannot facilitate childcare, eldercare or time off from work. The ineffectiveness of cash rewards as a means of encouraging COVID-19 vaccinations in the United States therefore suggests that free rides might not boost vaccination rates, particularly among those who have previously successfully obtained their primary COVID-19 immunization series. Notably, large investments were made in free rides to and from vaccination sites in mid-to-late 2021 (refs. 29,30,31,32) on the assumption that complementary transportation could help people to overcome a key logistical hurdle to vaccination. In fact, the free-ride intervention we test here is modelled on a similar program that was deployed by the White House from May to July of 2021 in partnership with Uber and Lyft (the effectiveness of this programme was not evaluated)33.

Because we know reminders direct attention to goals that may otherwise be forgotten34,35 and strongly increase immunization rates19,20,21,36, as well as many other policy-relevant outcomes36,37,38,39,40, we embedded our free-ride offering in a reminder message. Past research suggests that incorporating behavioural insights into reminders can increase their impact19,41,42,43. For example, conveying to people that a vaccine belongs to them (that is, they can ‘claim’ it or it is ‘reserved’ or ‘waiting’ for them) can increase immunization rates under certain conditions19,20,21,44. Offering people default appointments through reminders boosts vaccination uptake41. Moreover, prompting people to plan the date and time when they will get vaccinated increases the effectiveness of vaccine reminders42. Therefore, alongside our test of the value of free rides (shared through a behaviourally informed reminder message), we assess the impact of various other behaviourally informed reminders on encouraging booster vaccination uptake.

Here we present a megastudy—a field experiment testing many interventions at once45,46—in which we compare seven different behaviourally informed text reminders encouraging the receipt of a bivalent COVID-19 booster vaccine against text reminders offering individuals a free round-trip Lyft ride to their vaccination appointment. Our megastudy was conducted with more than 3.66 million patients of CVS Pharmacy in the United States who had previously received their primary COVID-19 vaccination series. We compare the actual impact of the interventions tested in our megastudy on vaccination uptake with predictions by both laypeople and PhD behavioural scientists. We find that offering patients free round-trip Lyft rides to and from their pharmacy has no measurable benefit over and above sending them two text reminders that follow best practices from previous research and encourage the receipt of a bivalent COVID-19 booster that is ‘recommended’ and ‘waiting’ for them19,20,21,44,47. We find slight variation in the performance of different reminder texts. The three text reminders that emerged as top performers (1) encouraged patients to make a vaccination plan and suggested a specific day of the week, time of day and CVS Pharmacy location for an appointment that matched when and where a patient had received their last vaccination at a CVS Pharmacy; (2) communicated that there were high current infection rates in a patient’s county; or (3) appeared to be sent directly by the pharmacy team at the patient’s most frequently visited CVS Pharmacy location. Notably, all of the bivalent COVID-19 booster reminders tested had a spillover benefit, whereby flu vaccination rates were increased.

Both laypeople and behavioural science experts proved to be poorly calibrated forecasters of what works to promote bivalent COVID-19 booster adoption. Both groups incorrectly predicted that offering individuals a free, round-trip ride to vaccination appointments would produce larger benefits than sending other types of reminder. These findings highlight the need for more experiments like the one we conducted to inform optimal policy decisions.

Megastudy to promote COVID-19 vaccination

In our megastudy, we focused on encouraging adoption by adults of the bivalent COVID-19 booster vaccine in the autumn of 2022. This vaccine was recommended by the Centers for Disease Control (CDC) for all adults who had completed any primary COVID-19 vaccination series or received a monovalent booster48. As of mid-November 2022 (shortly after the launch of our study), only 11% of Americans had received this recommended bivalent booster vaccine1. We partnered with CVS Pharmacy—a large US pharmacy chain with nearly 10,000 locations across the United States—to test eight interventions among their patients.

Our megastudy included 3,662,548 CVS Pharmacy patients deemed eligible for participation in our study by CVS Pharmacy on 18 October 2022 (see Methods for more details). For all analyses of the effectiveness of our interventions, we relied on records from CVS Pharmacy to assess which patients received a bivalent booster at any CVS Pharmacy within 30 days of the start of their intervention (or control) period.

We worked with CVS Pharmacy and a team of nine behavioural science experts to develop eight different intervention messages that were sent to patients by text message in early November 2022 to encourage adoption of the bivalent COVID-19 booster (see Table 1 for a summary of the interventions). All eight interventions consisted of an initial set of reminder texts with a follow-up set of reminder texts sent 7 days later, and all the tested text message reminders conveyed to patients that a vaccine was ‘recommended’ and ‘waiting for you’, language that was built on past research19,20,21. The intervention of focal interest was designed to test the value of free round-trip rides to vaccination sites and it included the aforementioned standard reminder language but also provided people with one free round-trip ride by Lyft (a popular ride-sharing app) to and from a CVS Pharmacy in the month ahead.

Our seven other interventions did not offer free round-trip Lyft rides to a CVS Pharmacy. These interventions instead layered a range of different strategies for encouraging immunization on top of the standard reminder, from conveying current (high) rates of infection in a patient’s county to providing resources to combat misinformation (Table 1).

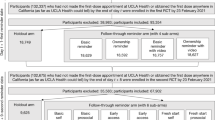

As shown in the CONSORT diagram in Fig. 1, and following our pre-registration (see Methods for more details), eligible patients were randomly assigned to one of eight different intervention conditions or a holdout control condition in which they did not receive any reminder messages to get vaccinated during the duration (30 days) of the study.

Note that after randomization, two planned interventions failed to deploy, which led 985,145 patients to be excluded from the originally planned sample. The two interventions that failed to deploy are not discussed as part of this megastudy. In addition, interventions 7 and 8 failed to deploy on launch day 1; therefore, only patients randomized to interventions 7 and 8 on launch days 2 and 3 were included in the megastudy.

Extended Data Table 1 and Supplementary Table 1 demonstrate that our nine study conditions were balanced on age, sex and vaccinations for flu in the previous flu season (P values from all three undirected F-tests > 0.064; all three Bayes factor values ⪞ 1.119 × 1023 in support of no difference). Patients were randomly assigned to receive their first reminder message on one out of three dates in November (day 1, day 2 or day 3; see Methods for details). However, conditions 7 and 8 were not administered on day 1 (as described in Methods, on day 1, conditions 7 and 8 failed to launch and these conditions therefore have no day 1 patients). This left 25 condition–day combinations (see Methods for details). Age, sex and vaccinations for flu the previous flu season were all balanced across these 25 condition–day combinations (three undirected F-tests all have P > 0.290; Bayes factor values ⪞ 6.869 × 1072 in support of no difference; Extended Data Table 1 and Supplementary Table 2). The nine study conditions were also balanced on a measure of total previous COVID-19 booster vaccinations provided by CVS Pharmacy in October 2022 (undirected F-test, P = 0.082; Bayes factor ≈ 1.646 × 1023 in support of no difference). However, a measure of the total COVID-19 vaccinations obtained by individuals prior to the launch of our first wave of text message reminders on day 1 that was extracted in December 2022 showed some imbalance (undirected F-test, P < 0.001; Extended Data Table 1 and Supplementary Table 1). This unexpected imbalance helped us to determine and confirm with CVS Pharmacy that the historical vaccination records of patients were sometimes updated after the fact (that is, when our interventions brought patients into the pharmacy, see Supplementary Information, section 3). Thus, several control variables we had pre-registered, including in our primary analyses—variables extracted in December 2022—were likely to have been influenced by the condition assignment of the patient (that is, individuals in megastudy conditions that produced more CVS Pharmacy visits for bivalent booster vaccinations had more previous vaccines ‘updated’, so they appeared to receive more vaccinations pre-treatment than other individuals). To address this, we adjusted our primary analysis strategy to an intent-to-treat strategy including all patients deemed eligible for inclusion in our study as of 18 October 2022 (except those assigned to intervention conditions in which reminders failed to send; Fig. 1), and we relied on a pre-registered robustness check that excluded control variables as our primary regression specification (Methods and Table 2, model 1). Notably, our results were robust to our original, problematic analysis strategy (in which a key control variable was likely to have been influenced by the condition assignment of the individual; Table 2, model 2).

In our holdout control condition, 5.09% of CVS Pharmacy patients received a bivalent COVID-19 booster within 30 days of their megastudy launch date. The results of our primary regression analysis (described in Methods) estimating the impact of each intervention condition are presented in Table 2, model 1. Figure 2 presents estimated vaccination rates across conditions. As Fig. 2 shows, when a vaccination reminder message indicated that a vaccine was ‘recommended’ and ‘waiting for you’ and offered patients a free round-trip Lyft ride to a local CVS Pharmacy, it produced no more vaccination uptake than our baseline text reminder without a free-ride offer, which simply indicated that a vaccine was recommended and waiting for you (two-sided Wald test Benjamini–Hochberg (BH)-adjusted P = 0.739; Bayes factor ≈ 1806.514 in support of no difference; Supplementary Table 3).

These estimates are derived from a variant of our main regression model (Table 2, model 1) in which we include an additional binary indicator for assignment to the holdout control condition and exclude the intercept. The number of patients in each megastudy condition depicted here are as follows: holdout control (492,573), baseline message (492,572), free ride (50,000), default plan (492,573), infection rates (492,573), pharmacy team message (492,573), CDC recommended (492,573), holiday protection (328,285), misinformation resources (328,826). Whiskers depict 95% CI.

On average, the eight interventions we tested increased bivalent booster vaccination rates by 20.63% (1.05 percentage points) within 30 days of deployment, and each intervention significantly increased bivalent COVID-19 booster vaccination rates of patients during this time period (all BH-adjusted P values < 0.001). We reject the null hypothesis that all eight interventions had the same effect (undirected F-test, P < 0.001; Table 2, model 1). Also, the difference in regression-estimated vaccination rates between the best-performing and the worst-performing intervention was only 0.26 percentage points (a 4.13% difference; two-sided Wald test, P < 0.001; Supplementary Table 3). Our results are robust to including pre-registered controls in our regression (Table 2, model 2), even though some of these controls were likely to be differentially influenced by a patient’s condition assignment. The results were also generally robust to excluding data from launch day 1, although doing so slightly decreased the estimated benefits of most interventions (average estimated decrease in intervention effectiveness when data from day 1 is excluded = 3.93%; Table 2, model 1, and Extended Data Table 2, model 1).

We analysed which intervention was the true top performer and how the free-ride intervention ranked against others tested. Confidence sets for ranks49 (Extended Data Table 3, model 1) showed that three reminder interventions cannot be ruled out (95% confidence) from being the true top performer, and the free-ride intervention was not one of them. The first encouraged a patient to ‘make a plan’ for getting a vaccine and specifically suggested scheduling an appointment at the same time and location when and where the patient received their last vaccination at a CVS Pharmacy (boosting vaccination rates by 23.65%, or 1.20 percentage points, BH-adjusted P < 0.001). The second conveyed that there was significant COVID-19 transmission in the patient’s county, and if infection rates in the patient’s county were in the top 50% of US counties, these reminders also conveyed the exact infection decile (for example, ‘infection rates are in the top 20% in the United States across all counties’; these messages boosted vaccination rates by 21.71%, or 1.11 percentage points, BH-adjusted P < 0.001). The final top-performing message was ostensibly sent by the pharmacy team at the patient’s most frequently visited CVS Pharmacy location (for example, ‘This message is from your Pharmacy Team at 1 Main Street’; these messages boosted vaccination rates by 21.57%, or 1.10 percentage points, BH-adjusted P < 0.001; see Supplementary Information, section 7, for more details on the test of ranks). Two-sided Wald tests confirmed that our ‘make a plan’ message significantly outperformed our baseline message (BH-adjusted P < 0.001; Supplementary Table 3), whereas our ‘infection rates’ reminder only significantly outperformed our baseline message without adjusting for multiple comparisons (unadjusted P = 0.039, BH-adjusted P = 0.126; Supplementary Table 3). The ‘pharmacy team message’ reminder only marginally outperformed our baseline message without adjusting for multiple comparisons (unadjusted P = 0.054, BH-adjusted P = 0.126; Supplementary Table 3).

After applying a James–Stein shrinkage procedure, our best-performing reminder intervention boosted vaccination uptake by an estimated 1.18 percentage points, whereas our worst-performing reminder intervention boosted vaccinations by an estimated 0.96 percentage points50. This result means that even our worst-performing message, which was designed following best practices, achieved roughly 80% of the benefits of our top-performing message (0.96/1.18 = 0.81).

We also examined two pre-registered, secondary outcomes: (1) receipt of bivalent boosters within 90 days of a patient’s launch date (instead of 30 days) and (2) flu vaccinations within 30 days of launch. Bivalent booster uptake within 90 days after launch exhibited similar, if diluted, treatment responsiveness to what is documented in the main analysis (Table 2, models 3–4). This result suggests that the interventions did not merely accelerate vaccination uptake but also increased the total number of individuals vaccinated. On average, the eight interventions increased bivalent booster vaccination rates by 9.36% (0.90 percentage points) within 90 days of deployment (from 9.65% in the holdout control to 10.55%, on average, in the intervention conditions; Table 2, model 3). This is a similar point estimate to the 1.05 percentage point increase in vaccination rates produced by the interventions during our 30-day follow-up period, but it is a smaller percent change because the 30-day baseline vaccination rate during our study was 5.09%, whereas the 90-day vaccination rate was 9.65%. All eight interventions significantly increased bivalent COVID-19 booster vaccination rates during the 90-day follow-up period (all BH-adjusted P values <0.001). Furthermore, we again reject the null hypothesis that all eight interventions had the same effect (undirected F-test P < 0.001; Table 2, model 3).

Examining the adoption of flu vaccinations showed that on average, our eight interventions increased flu vaccination rates by 7.62% within 30 days of deployment (up 0.34 percentage points from 4.52% in the holdout control group to 4.86%, on average, in the intervention groups; Table 2, model 5). Furthermore, all eight interventions significantly increased patients’ flu vaccination rates during this time period (all BH-adjusted P values < 0.001). In our primary model (Table 2, model 5), all eight interventions had the same effect (undirected F-test, P = 0.107; Bayes factor ≈ 1.860 × 1020 in support of no difference).

The efficacy of reminder text messages and the failure of free round-trip Lyft rides to boost vaccination rates over and above sending multiple vaccination reminders suggest that attentional hurdles are likely to play a greater role than transportation accessibility hurdles in preventing previously vaccinated individuals from pursuing COVID-19 booster vaccines.

Forecasts of the effects of interventions

The large outlays of resources to support free rides to vaccination sites (including the high-profile 2021 White House partnership with Lyft and Uber29,51,52) suggest that policy makers were bullish on the benefits of this approach, but the lack of benefit produced by free rides in the population we studied does not support this optimism. To determine whether this mismatch could be driven in part by inaccurate beliefs about the benefits of free rides, we conducted two follow-up forecasting surveys to measure the accuracy of people’s expectations about which interventions most effectively promote vaccination.

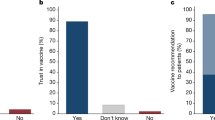

In our first forecasting study, lay participants (n = 199, Prolific sample) were presented with information about (1) our megastudy, (2) the patients included in it, and (3) the bivalent COVID-19 booster vaccination rate among patients in our holdout control condition during the 30-day megastudy period. Lay participants were then shown the exact messages sent to individuals in each of our megastudy’s intervention conditions (one at a time, in random order) and asked to forecast the bivalent COVID-19 booster vaccination rate in the intervention group in the 30 days after receiving their first message (see Methods for more details).

Lay forecasters predicted that the offer of free round-trip Lyft rides to the pharmacy would spur the most vaccinations of all interventions tested, proffering a median forecasted 30-day vaccination rate of 25.42% in the population assigned to this condition (or a 378.72% increase from baseline). At the time of these forecasting studies, we had not yet determined that data on vaccination rates of patients before 3 November 2022 were unreliable (because they were likely to be differentially influenced by individuals’ condition assignments). Therefore, when calculating summary statistics to share with forecasters, we excluded data from participants who appeared to have received a vaccine before the 3 November 2022 launch of our experiment. We told survey forecasters that 5.31% of patients in our control condition had been vaccinated, but our current analysis reports that 5.09% of patients in our control condition were vaccinated (because we did not exclude any patients from our analysis who were randomly assigned to conditions). To analyse the accuracy of the forecasters’ estimates in light of this issue, we calculated the absolute change in vaccination rates they forecasted (for example, if they predicted a treatment would produce a 6.31% vaccination rate, we would call that a predicted 1.00 percentage point boost from baseline). When describing the percentage changes in vaccination rates that were forecasted, we use a similar approach (for example, if they predicted a treatment would produce a 6.31% vaccination rate, we would call that a predicted 18.83% boost from baseline). All results described here are robust to analysing the percentage change in vaccination rates that were forecasted (see Supplementary Information, section 11). The lay forecasters predicted that this intervention would significantly outperform all other interventions tested (two-sided Wilcoxon signed-rank tests, all BH-adjusted P values < 0.010). On average, lay forecasters expected our seven reminder-only interventions to produce a median increase in vaccinations of 229.51% over baseline (a 17.50% 30-day vaccination rate). Notably, lay forecasters were substantially overoptimistic about the effects of both free rides and reminders (their estimates were 6–21 times too high for every single intervention). Lay forecasters were also poorly calibrated regarding the relative performance of the interventions: the Pearson correlation between their eight (median) forecasts and the actual regression-estimated performance of each intervention was −0.01 (Fig. 3).

Scatterplots showing median forecasted intervention effects on the x-axis and actual, regression-estimated intervention effects on the y-axis with forecasts from laypeople and experts. I1, baseline message; I2, free ride; I3, default plan; I4: infection rates; I5: pharmacy team message; I6, CDC recommended; I7, holiday protection; I8, misinformation resources.

To assess whether experts were better calibrated than lay forecasters, we conducted the same forecasting study with PhD behavioural scientists (n = 163; see Methods for more details). Similar to the lay forecasters, experts predicted that the offer of free round-trip Lyft rides to the pharmacy would outperform all other interventions tested (forecasting a median 30-day vaccination rate of 11.89% in the population assigned to this condition or a 123.92% increase from baseline). Experts predicted that this intervention would significantly outperform all other reminder-only interventions tested (two-sided Wilcoxon signed-rank tests, all BH-adjusted P values < 0.05). It is worth noting that this forecasted absolute change in vaccinations of 6.58 percentage points is not outside the realm of possibility when considering boosts in vaccination uptake that have been reported in recent articles, such as a 2010 study that found defaulting individuals into vaccination appointments increased vaccination rates by 11.72 percentage points41.

On average, expert forecasters expected our seven reminder interventions to produce a median increase in vaccinations of 63.97% over baseline (an 8.71% 30-day vaccination rate). Similar to the lay forecasters, experts were also overly optimistic about the effects of specific reminders (their estimates were 2–7 times too high for every single intervention), but they were significantly less optimistic about the performance of every intervention than lay forecasters (all BH-adjusted P values from two-sided Wilcoxon rank sum tests <0.001). Moreover, their forecast of a roughly 3 percentage point boost in vaccinations from reminder messages was in line with several recent studies that have shown that such messages can produce up to 4 percentage point increases in vaccinations when baseline vaccination rates are in the double digits19,20. Expert forecasters were also directionally better calibrated regarding the relative performance of all interventions tested than lay forecasters (consistent with considerable past research53,54), although they were still poorly calibrated overall: the correlation between their eight (median) forecasts and actual intervention effects was 0.24 (Fig. 3).

In summary, both experts and lay people incorrectly anticipated that providing patients with free rides to and from pharmacies would generate substantial unrealized value relative to sending reminders, which may help explain the large past investments made in free rides to and from vaccination sites. Forecasters were also an order of magnitude too bullish about the benefits of all of our interventions on vaccination rates, although experts were substantially less bullish than lay people. Notably, estimates of absolute changes in vaccination in response to our interventions by both groups were in line with changes that have been measured in the recent literature when baseline vaccination rates were higher19,20,41. Therefore, the overall effect size miscalibration detected may primarily reflect a failure to recognize that low vaccination base rates shift the absolute effect sizes that interventions are likely to achieve in this context.

Heterogeneity in the effects of the interventions

COVID-19 is particularly dangerous for elderly populations, making it an urgent policy priority to ensure that older Americans receive recommended booster vaccinations55,56,57. The virus has also taken a relatively greater toll on Americans with fewer resources58, and vaccine take-up has lagged in some political subcultures more than others14. In pre-registered exploratory analyses, we investigated the relative effects of our interventions on different subpopulations.

First, we assessed whether the effects of our interventions varied depending on specific characteristics of the individual, including their age, sex, past adoption of the booster vaccine (or vaccines) as measured in October 2022, and insurance coverage as measured in December 2022 (Supplementary Tables 4–24). Notably, the performance of our eight different interventions correlated highly across different subpopulations. Specifically, the average correlation between our eight treatment effect estimates across all 16 subpopulations examined was 0.72 (see Extended Data Table 4 for the correlations between our eight intervention effect estimates by subgroup). Because subgroup analyses looked so similar across interventions, Fig. 4 shows the effects of our free-ride intervention (βfree ride) by subgroup (Fig. 4a) but pools the effects of our seven reminder-only interventions (βreminder-only) when showing their performance by subgroup (Fig. 4b). As illustrated in Fig. 4 and Extended Data Fig. 1, our free-ride and reminder-only interventions were generally more effective for the following individuals: (1) older recipients (Supplementary Table 7, model 2; βfree ride = 1.23, 95% confidence intervals (CI) = 0.88–1.57, BH-adjusted P < 0.001; βreminder-only = 1.47, 95% CI = 1.37–1.58, BH-adjusted P < 0.001); (2) recipients with Medicare coverage (Supplementary Table 11, model 2; βfree ride = 0.88, 95% CI = 0.80–2.19, BH-adjusted P < 0.001; βreminder-only = 0.94, 95% CI = 1.62–2.05, BH-adjusted P < 0.001); (3) men (Supplementary Table 5, model 2; βfree ride = 1.03, 95% CI = 0.68–1.37, BH-adjusted P < 0.001; βreminder-only = 1.24, 95% CI = 1.34–1.13, BH-adjusted P < 0.001); and (4) individuals who had received at least one previous booster according to CVS Pharmacy records (Supplementary Table 9, model 2; βfree ride = 1.36, 95% CI = 1.02–1.69, BH-adjusted P < 0.001; βreminder-only = 1.48, 95% CI = 1.38–1.58, BH-adjusted P < 0.001).

a,b, Regression-estimated effects of assignment to the free-ride intervention for different subpopulations (a) and for all other reminder-only interventions pooled again by subpopulation (b) on COVID-19 bivalent booster vaccination rates at a CVS Pharmacy within 30 days (the reference group is the holdout control group). Note that these estimates are drawn from 14 new regressions (one for each subpopulation shown in the two graphs; Supplementary Tables 4–17, model 2). Each new regression uses the same specification as our main regression model (Table 2, model 1) but includes only patients from the relevant subpopulation (for example, female patients only) and includes different primary predictors (in place of eight indicators for experimental condition, the models all include one indicator for whether a patient received our free-ride intervention (to inform a), and one indicator for whether a patient received any reminder-only intervention (to inform b). For a, the number of patients in subpopulations is as follows: female (29,684), male (20,316), <median age, (24,734) ≥median age, (25,266), no previous booster(s) (20,249), 1+ previous booster(s) (29,751), non-Medicare (42,659), Medicare (7,341), non-Medicaid (42,598), Medicaid (7,402), non-commercial insurance (21,031), commercial insurance (28,969), known insurance (43,712), unknown insurance (6,288). For b, the number of patients in each subpopulation is as follows: female (1,858,359), male (1,261,616), <median age (1,536,715), ≥median age (1,583,260), no previous booster(s) (1,261,166), 1+ previous booster(s) (1,858,809), non-Medicare (2,661,549), Medicare (458,426), non-Medicaid (2,651,800), Medicaid (468,175), non-commercial insurance (1,319,882), commercial insurance (1,800,093), known insurance (2,726,694), unknown insurance (393,281). Whiskers depict 95% CI.

Next, we explored whether the impact of our interventions varied on the basis of patients’ neighbourhood characteristics (based on the zip code of the nearest CVS Pharmacy of the patient). Specifically, we explored whether there are differences in the impact of our interventions by the wealth, education, density, racial composition and 2020 Republican presidential vote share of patients’ neighbourhoods (Supplementary Tables 25–57). The interventions were generally more effective for the following populations: (1) individuals from lower-income neighbourhoods (Supplementary Table 35, model 3; βbelow median income × any intervention = 1.17, 95% CI = 1.08–1.25, BH-adjusted P < 0.001); (2) patients in neighbourhoods where fewer residents had earned Bachelor’s degrees (Supplementary Table 37, model 3; βbelow median Bachelor’s × any intervention = 1.10, 95% CI = 1.01–1.18, BH-adjusted P < 0.001); and (3) individuals from neighbourhoods where a lower proportion of residents are white (Supplementary Table 25, model 3; βbelow median white × any intervention = 1.10, 95% CI = 1.00–1.19, BH-adjusted P < 0.001). We did not observe substantial or systematic heterogeneity in treatment effects on the basis of population density, CVS Pharmacy density, 2020 Republican presidential vote share, previous COVID-19 vaccination rates or the proportion of Black, Hispanic or Asian residents in the neighbourhoods of the patients in our megastudy (Supplementary Tables 48–50 and 53–57).

Older and lower-income Americans are at particularly high risk for complications from COVID-19, and both older individuals and those from lower-income neighbourhoods responded particularly well to vaccination reminder interventions. Therefore, the benefits of implementing reminder interventions such as those studied here may be particularly substantial in these populations.

Discussion

Our megastudy demonstrated that offering previously vaccinated individuals free Lyft rides to and from a pharmacy for booster vaccines does not produce measurable benefits over and above reminding people to get vaccinated. This finding was contrary to the high expectations of both expert and lay forecasters. On a more positive note, our megastudy suggested that pharmacies and other vaccination providers in the United States have a cost-effective opportunity to increase vaccination rates by sending people a series of behaviourally informed text reminders to receive their COVID-19 booster each autumn. We identified three types of personalized text reminder that produced slightly increased expected benefits over and above other text reminders, which would be good candidates for widespread deployment. Notably, however, we estimated that a behaviourally informed text message reminder (designed to follow best practices in the academic literature by describing a vaccine as ‘recommended’ and ‘waiting for you’) achieved approximately 80% of the benefits obtained by our best-performing intervention. Moreover, using new behavioural insights to reword these text reminders can be credited with only approximately 20% of the measured benefit of our best-performing intervention.

We estimated that altogether, the text interventions we tested produced an additional 33,864 COVID-19 booster vaccinations (95% CI = 30,838–36,889) and 10,756 flu vaccinations (95% C = 7,985–13,527) in the autumn of 2022. Because COVID-19 booster vaccinations reduce infections by at least 43%59, compared with individuals who received their last monovalent COVID-19 dose at least 8 months before, these extra vaccinations probably prevented an estimated 1,857 infections (see Supplementary Information, section 6, for detailed calculations). It is likely that the benefits we see in our over 3.66-million-patient megastudy would generalize to other populations, which suggests that the scale of these benefits could potentially be increased by nearly two orders of magnitude with a national rollout of reminder messaging.

Even before the COVID-19 pandemic, the World Health Organization identified vaccine hesitancy as one of the top ten global public health threats60. The importance of finding effective ways to encourage vaccinations among populations that might otherwise neglect them has only grown. That is because vaccinations against COVID-19, flu, pneumonia, shingles, polio and other debilitating diseases avert millions of unnecessary deaths each year61,62, and vaccinations prevent even more unnecessary hospitalizations63,64, as well as staving off chronic health problems such as long COVID65,66. Our results point to the need for more rigorous testing of interventions to promote vaccination, which will help ensure that evidence-based solutions are deployed widely and that ineffective tools are discontinued. This is particularly important in light of our finding that scientific experts cannot accurately forecast what actually works to encourage vaccination nor are they well-calibrated when predicting how well interventions work.

Overall, our findings indicated that despite the optimism of lay and expert forecasters about free rides and simple reminder messages, most interventions tested produced relatively small absolute increases in vaccination rates. This means that work is needed to identify more potent methods of encouraging vaccine adoption to prevent more unnecessary hospitalizations and death from COVID-19, particularly in high-risk populations.

Our megastudy’s strengths include its large, diverse, national sample, its analysis of an objective measure of COVID-19 vaccination both 30 days and 90 days after intervention, its ability to measure spillover effects to flu vaccination decisions, and its simultaneous comparison of the impact of eight different interventions. However, a number of limitations are worth noting. First, our research focused on the benefits of providing patients in the United States with free Lyft rides to pharmacies for vaccination appointments, but our findings might have been different if we had offered free rides through another ridesharing app, by taxi or through some other service or if our test had been run in another country. Our findings also speak only to the benefit of providing free rides to vaccination sites and not to the benefits of finding other ways to increase the ease of vaccine access, such as bringing mobile vaccination clinics to remote communities67, which proved efficacious in Sierra Leone. Another limitation of our work is that Federal Communication Commission (FCC) regulations compelled us to conduct our megastudy only among individuals who had agreed to receive SMS messages from CVS Pharmacy. This sample of patients, although demographically and geographically diverse, is not necessarily representative of all populations that would benefit from the interventions tested here. It is important to note that our finding that free Lyft rides did not increase vaccinations might not hold in a sample of patients who had not previously found their way to a CVS Pharmacy for a COVID-19 vaccine. Ideally, future tests of the interventions evaluated here would be conducted with even more diverse populations and more diverse modes of transport. Another limitation of our study is that we were unable to measure the adoption of COVID-19 vaccines (or flu vaccines) at locations besides CVS Pharmacies. Although past work has found neither crowd-in nor crowd-out from reminder messages encouraging vaccination in a specific setting42, we are unable to rule out either possibility. Finally, although we were able to conduct heterogeneity analyses based on a patient’s age, sex, insurance type and previous vaccination history, one key unknown variable was the race of the patient. We obtained information about the racial composition of patients’ neighbourhoods to conduct heterogeneity analyses, but in light of racial disparities in vaccination, it would be ideal for future research studies with data on participant race to explore the relative impact of the interventions tested here on individuals from minority ethnic groups.

Methods

Ethics approval

The designs of our megastudy and our forecasting studies were reviewed and approved by the Institutional Review Board of the University of Pennsylvania. A waiver of informed consent was granted for our megastudy because of the following reasons: (1) it was deemed to pose minimal risk to patients; (2) it could not be practically carried out otherwise; and (3) only CVS Pharmacy patients who had already consented to receive SMS communications were included in the study.

Megastudy participants

Megastudy participants were CVS Pharmacy patients who (1) were 18 years or older, (2) resided in one of 65 US metropolitan areas selected for study inclusion (see Supplementary Information, section 4 for a complete list), (3) had previously received at least their primary COVID-19 vaccine series but not the bivalent booster according to CVS Pharmacy records (only patients who had completed their primary COVID-19 vaccination series were eligible for a bivalent booster according to the US Food and Drug Administration), and (4) had consented in writing to receive text messages from CVS Pharmacy (this requirement was imposed to comply with the FCC’s Telephone Consumer Protection Act, which outlaws sending communications by text without an individual’s consent).

The average age of CVS Pharmacy patients in our megastudy was 47.30 years (s.d. = 17.15), and 40.43% of patients were male. Information on the race of a patient was not available. CVS Pharmacy used SMS short codes to contact all patients in our study, and for roughly 60% of patients, the SMS short code used to contact them was familiar—meaning it had been used to send that same patient one or more pharmacy-related messages (for example, about prescription refills) in the previous 22 months.

Megastudy conditions and randomization procedures

Our megastudy included nine different conditions: eight intervention conditions and a holdout control condition. A patient’s condition determined which (if any) text messages they received from CVS Pharmacy reminding them to obtain a COVID-19 bivalent booster vaccine as part of this megastudy.

All intervention messages consisted of an initial set of reminder texts sent on 3 November (hereafter called day 1), 5 November (hereafter called day 2) or 8 November (hereafter called day 3) with a follow-up set of reminder texts sent 7 days later. All text reminders conveyed to patients that a vaccine was ‘recommended’ and ‘waiting for you’, building on past research19,20,21. Our key intervention—which was designed to test the value of free round-trip rides to vaccination sites—included this standard reminder language but also provided patients with one free round-trip ride to and from a CVS Pharmacy in the month ahead. The free ride was provided by Lyft (Extended Data Fig. 2), a popular ride-sharing company supporting over one quarter of all rideshare rides in the United States68. The Lyft codes provided were geofenced so that patients could only take the round-trip ride to and from the CVS Pharmacy locations in their metropolitan area (or subregion). CVS Pharmacy did not cover the cost of the Lyft rides or provide any incentive to patients in this study. All costs of the free Lyft rides to and from CVS Pharmacies were funded by the Social Science Research Council’s Mercury Project.

Our free-ride offering was designed to emulate the 2021 White House programme that offered people free rides by Lyft and Uber to and from vaccination sites for a limited time. Specifically, the free rides offered by the White House were available from 24 May to 4 July 2021 and required customers of Lyft (or Uber) to enter a claim code into their app to receive a free round trip ride (worth up to US$15 per ride at Lyft or up to $25 per ride at Uber29,51,52). Our programme arguably made claiming free rides slightly easier than the White House programme because it simply required one click on a link in our text message to accept our offer code (the code was also supplied directly for manual entry if preferred), and our offer was then automatically applied to the next qualifying ride taken to or from a CVS Pharmacy in the patient’s metropolitan area.

Patients encountered a price cap of $25 per ride only if they attempted to book a ride that exceeded this price limit, at which point they would be billed for spending in excess of $25. We estimated that in the zip codes where our test was conducted, the median resident lived 1.70 miles (2.7 km) from a CVS Pharmacy, such that the cost of a Lyft to or from the pharmacy would typically be under $10. Even patients living at the estimated 99th percentile distance from a CVS Pharmacy were only 9.40 miles (15.1 km) from a CVS Pharmacy, such that the cost of a Lyft to or from the pharmacy would typically be under $25 (see Supplementary Information, section 5 for calculation details and a complete distribution of distance and ride cost estimates).

The interventions tested that did not offer free round-trip Lyft rides to CVS Pharmacy in a standard reminder message instead layered a range of different strategies for encouraging immunization on top of the standard reminder, from conveying current (high) rates of infection in a patient’s county to providing resources to combat misinformation (see Table 1 for a summary of our eight interventions).

Randomization of each eligible participant to one of our nine megastudy conditions was conducted using data obtained from CVS Pharmacy on 18 October 2022 with the splitsample routine in Stata (v.17.0)69. Patients were assigned with equal probability to one of nine megastudy conditions except the intervention offering free round-trip Lyft rides to CVS Pharmacy—this intervention was capped at 50,000 people to ensure study costs would not exceed our budget. Owing to a technical error, reminder messages in two megastudy conditions (interventions 7 and 8 in Table 1) were not successfully sent on 3 November (day 1), and thus no follow-up reminder messages were sent to these intended study patients 1 week later either. These intended participants simply were not messaged or included in the megastudy. As a result, an average of 328,556 patients were included in two megastudy conditions (interventions 7 and 8), whereas an average of 492,573 patients were included in the megastudy’s remaining six conditions. See Fig. 1 for a CONSORT flow diagram depicting randomization.

Megastudy data

All megastudy data supplied by CVS Pharmacy were de-identified through the Safe Harbor method pursuant to 45 Code of Federal Regulations 164.514(b)(2). Supplied data for each patient included sex, age, dates of all previous COVID-19 and flu vaccinations at CVS Pharmacy since 2020, primary insurance type and the zip codes of the CVS Pharmacy locations that were closest to the patient’s home, the most frequently visited and the site of the patient’s last COVID-19 vaccination. We merged in several additional variables that describe the composition of residents of the zip code or county of the CVS Pharmacy closest to the patient’s home address (see Supplementary Information sections 9 and 10 for details).

Calculation of Bayes factors in support of null results

Throughout this article, we support null results by reporting approximate Bayes factors. For all nulls derived from linear probability models estimated using OLS regression, we first estimated the corresponding generalized linear model for binary data with the identity link function70 using Maximum Likelihood, then obtained the Bayesian information criterion (BIC) from the likelihood for both the null (that is, restricted) and the non-null (that is, full) model, and then tightly approximated the Bayes factor in support of the null hypothesis as Bayes factor ≈ exp([BICFull – BICRestricted]/2)71,72,73. For a null result involving a continuous dependent variable, we tightly approximated the Bayes factor directly from the sums of squared errors of the null and non-null models73.

Megastudy data analysis

We evaluated the impact of the eight interventions tested in our megastudy using a pre-registered OLS regression to predict vaccination within 30 days of the start of a patient’s intervention period (or control period). The start of a patient’s intervention period was defined as the (randomly assigned) date when they received their first reminder message (day 1, day 2 or day 3). The start of a patient’s control period was defined as the (randomly assigned) control period start date selected for purposes of comparison with the intervention conditions (day 1, day 2 or day 3). The key predictors in our regression were eight indicator variables for assignment to each intervention condition with an indicator for assignment to the holdout control condition omitted. We also included indicators for the date on which patients were assigned to receive their first reminder text (day 1 or day 2; day 3 was omitted). We estimated this regression with HC1 robust standard errors and adjusted all P values for multiple comparisons using the BH procedure74.

As noted above, a technical error prevented interventions 7 and 8 from deploying on day 1 of our study (so no patients were actually assigned to these interventions on day 1). To assess the ability of our pre-registered OLS regression to produce unbiased results despite the absence of patients in interventions 7 and 8 on launch day 1, we followed a method laid out in our second pre-registration (which was posted after this launch error became apparent but before any outcome data had been received by our research team). Specifically, we ran our standard OLS regression to predict vaccination within 30 days and added interaction terms between indicators for interventions 1–6 and launch day 1. We then conducted an undirected F-test assessing whether these interaction terms were jointly equal to zero. We failed to reject the null hypothesis (P = 0.140), which indicated a lack of heterogeneous treatment effects between launch day 1 and launch days 2 and 3 pooled (Extended Data Table 5). Furthermore, we found strong support for the null hypothesis that the effects of interventions 1–6 were identical on day 1 and days 2 and 3 pooled (Bayes factor ≈ 4.051 × 1017). Following our second pre-registration, we therefore proceeded with analysing data from all 25 available intervention-by-launch day combinations jointly and including indicators for the eight intervention conditions and launch days but no interaction terms.

In addition, after conducting our pre-registered OLS regression (outlined above) to evaluate the impact of our eight interventions on COVID-19 vaccinations, we ran a robustness check that included the following pre-registered additional controls: (1) age (as of October 2022); (2) an indicator for being 50 years or older; (3) an indicator for being male; (4) indicators for insurance type as of December 2022 (Medicare, Medicaid or unknown; commercial insurance omitted); (5) total number of previous COVID-19 vaccinations received at any CVS Pharmacy (as measured in December 2022); and (6) total number of previous COVID-19 boosters received at any CVS Pharmacy (as measured in December 2022). However, these robustness tests have the limitation that variables extracted in December 2022 were likely to be influenced patients’ condition assignment (that is, patients in megastudy conditions that produced more CVS Pharmacy visits for bivalent booster vaccinations apparently had more previous vaccinations ‘updated’, making it appear that they received more vaccinations pre-treatment than other patients; see discussion in the section ‘Megastudy to promote COVID-19 vaccination’).

In further robustness checks presented in Extended Data Table 2 and Supplementary Fig. 1, we also re-ran both regression specifications excluding all data from patients assigned to launch day 1 (because interventions 7 and 8 were not deployed on launch day 1).

Forecasting experiment with laypeople

We recruited 216 US residents who were 18 years and older from Prolific (48.15% male; average age = 35.69 years, s.d. = 13.39 years) and paid them $1.40 to complete a 7-min forecasting survey. All participants were required to take our survey on a desktop computer or tablet rather than a mobile device to ensure images would display properly. All participants were told: “We’ll ask you to review nine different sets of text messages that encouraged pharmacy patients to get their bivalent COVID-19 booster in November 2022. We’ll ask you to predict the impact each message set had on bivalent booster vaccination uptake.” They then all learned about the inclusion criteria for patients in our vaccination megastudy and were told what fraction of patients in our holdout control condition received a vaccine within 30 days of our megastudy’s launch. Because we had not yet determined that data on patients’ vaccinations before 3 November 2022 were unreliable (because it was probably differentially influenced by patients’ condition assignment) at the time of these studies, we told survey forecasters that 5.31% of patients in our control condition had been vaccinated. At this point, the forecasters were required to pass a comprehension check before proceeding—17 laypeople did not do so, leaving 199 forecasters who completed our survey and are therefore included in all analyses (52.26% male; average age = 35.69, s.d. = 13.39).

Next, the forecasters were separately shown each of the different text messaging interventions that patients in our study could have received from their pharmacy. These messages were displayed overlaid on a mobile phone screen (as they would have appeared to recipients). After viewing each set of messages and being reminded what fraction of patients in our holdout control group received a booster vaccine within 30 days of our study’s launch, the forecasters were asked: “For patients who did receive the above text messages from their pharmacy—what percentage of them do you think got the bivalent COVID-19 booster at their pharmacy within 30 days of receiving the first message above? Please enter your response to the hundredth decimal place (for example, X.XX% or XX.XX%)”. For complete study stimuli, which were closely modelled on those used in past forecasting studies21,46,75, see Supplementary Information, section 12.

Although there were only eight intervention conditions in our megastudy, one of our interventions (intervention 4: infection rates) displayed a different message to patients who lived in US counties with above median infection rates in late October 2022. To simplify the way this was communicated to survey respondents, we showed forecasters each of these two message separately and then created a weighted average of their two forecasts (weighted proportionally to the number of megastudy patients who saw each version of intervention 4) to estimate the forecasts of the impact of intervention 4 on vaccination rates.

When depicting the free-ride intervention, we did not show forecasters the Lyft app screens they would have seen had they been in the megastudy and clicked the link in their intervention message to claim a free ride to CVS Pharmacy (see Supplementary Information, section 12, screen 7). Because so few individuals in our megastudy clicked the link to claim a free ride (see Supplementary Information, section 4), giving forecasters the information shown to this small subpopulation would have provided them with a nonrepresentative experience of our stimuli.

These forecasting procedures followed standard practices in the literature21,46,75. Although incentives are sometimes provided for forecasting accuracy, they often are not21,46,75,76,77.

To analyse the accuracy of the estimates of the forecasters, we calculated the absolute change in vaccination rates they forecasted. All results we describe are robust to instead analysing the percentage change in vaccination rates that were forecasted (Supplementary Information, section 11).

Extended Data Table 6 presents the median, mean and standard deviation of the predicted effectiveness of each intervention provided by lay forecasters. Extended Data Table 7 presents the average rank order of intervention performance based on laypeople’s forecasts of intervention efficacy as well as the fraction of laypeople who forecasted each intervention would be the top performer.

Forecasting experiment with experts

We recruited 215 volunteer participants who held a PhD in psychology, economics, business or a related field in the social sciences (37.21% male; average age = 41.86 years, s.d. = 10.73 years) to complete our second forecasting survey. Participants were recruited by posting invitations on the Society for Judgement and Decision Making and the Economic Science Association listservs to anyone with the aforementioned qualifications to make predictions about “a study testing the efficacy of eight different sets of text messages encouraging people to get bivalent COVID-19 boosters”. Invitations to participate in the forecasting study were also posted on social media (Twitter and LinkedIn) in early 2023 by the study’s principal investigators with the message: “Can you predict what text messages worked best to increase bivalent COVID-19 booster vax rates this past fall? Do you have a PhD in #psych, #econ, #business, or a related field?”

The first question in our survey asked respondents to confirm that they held the requisite PhD. The remainder of the study procedures were identical to those described above for lay forecasters. Fifty-two individuals failed our attention check or dropped out of our survey before reaching it, leaving 163 participants who completed our survey and are therefore included in all analyses (49.07% male; average age = 41.86 years, s.d. = 10.73 years). For complete study stimuli, see Supplementary Information, section 12.

Extended Data Table 6 presents the median, mean and standard deviation of the predicted effectiveness of each intervention provided by expert forecasters. Extended Data Table 8 presents the average rank order of intervention performance based on the forecasts by experts of intervention efficacy as well as the fraction of experts who forecasted each intervention would be the top performer.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The experimental data analysed in this article were provided by CVS Pharmacy. The data were de-identified pursuant to 45 CFR 164.514(b)(2). Our study’s analysis plan was pre-registered on the Open Science Framework (OSF; pre-registration 1: bit.ly/3n3KUh2; pre-registration 2: bit.ly/3ZRPzBk). Pre-registration 2 updates part of pre-registration 1 to address unexpected problems stemming from the failure to send messages, as planned, in interventions 7 and 8 on 3 November. This second pre-registration was posted before any outcome data were received or analysed. Fully anonymized and de-identified data on each study participant’s intervention condition, launch date and bivalent COVID-19 booster vaccination decision during our follow-up period are available on the OSF as are aggregated summary statistics (https://bit.ly/3MhRHgm). However, to protect patient privacy, we cannot publicly post individual-level data on patients’ covariates. Source data are provided with this paper.

Code availability

All codes used to analyse study data can also be found at the OSF (https://bit.ly/3MhRHgm).

Change history

08 July 2024

In the version of the article initially published, Extended Data Table 2 originally appeared as Extended Data Table 3; Extended Data Table 3 originally appeared as Extended Data Table 4; Extended Data Table 4 originally appeared as Extended Data Table 5 and Extended Data Table 5 originally appeared as Extended Data Table 2. The order of the Extended Data Tables has now been amended in the HTML and PDF versions of the article.

References

CDC. COVID-19 Vaccinations in the United States, County. Centers for Disease Control and Prevention https://data.cdc.gov/Vaccinations/COVID-19-Vaccinations-in-the-United-States-County/8xkx-amqh (2023).

CDC. Weekly COVID-19 Vaccination Dashboard. Centers for Disease Control and Prevention https://www.cdc.gov/vaccines/imz-managers/coverage/covidvaxview/interactive/vaccination-dashboard.html (2024).

Thaler, R. H. & Sunstein, C. R. Nudge: The Final Edition (Yale Univ. Press, 2021).

Mazar, A., Jaro, D., Tomaino, G., Carmon, Z. & Wood, W. Distance to vaccine sites is tied to decreased COVID-19 vaccine uptake. PNAS Nexus 2, pgad411 (2023).

Steele, M. K. et al. Estimated number of COVID-19 infections, hospitalizations, and deaths prevented among vaccinated persons in the US, December 2020 to September 2021. JAMA Netw. Open 5, e2220385 (2022).

CDC. COVID-19 Vaccinations in the United States. Centers for Disease Control and Prevention https://covid.cdc.gov/covid-data-tracker (2023).

Moreira, E. D. et al. Safety and efficacy of a third dose of BNT162b2 Covid-19 vaccine. N. Engl. J. Med. 386, 1910–1921 (2022).

Thomas, S. J. et al. Safety and efficacy of the BNT162b2 mRNA Covid-19 vaccine through 6 Months. N. Engl. J. Med. 385, 1761–1773 (2021).

CDC. Previous COVID-19 forecasts: deaths–2023. Centers for Disease Control and Prevention https://www.cdc.gov/coronavirus/2019-ncov/science/forecasting/forecasting-us-previous-2023.html (2023).

FDA. Future vaccination regimens addressing COVID-19. US Food and Drug Administration https://www.fda.gov/media/164699/download (2023).

Lee, J. T. et al. Employer requirements and COVID-19 vaccination and attitudes among healthcare personnel in the U.S.: findings from national immunization survey adult COVID module, August–September 2021. Vaccine 40, 7476–7482 (2022).

Mello, M. M. et al. Effectiveness of vaccination mandates in improving uptake of COVID-19 vaccines in the USA. Lancet 400, 535–538 (2022).

The White House. White House Report: Vaccination requirements are helping vaccinate more people, protect Americans from COVID-19, and strengthen the economy. The White House https://www.whitehouse.gov/wp-content/uploads/2021/10/Vaccination-Requirements-Report.pdf (2021).

Kirzinger, A., Kearney, A., Hamel, L. & Brodie, M. KFF COVID-19 Vaccine Monitor: The increasing importance of partisanship in predicting COVID-19 vaccination status. KFF https://www.kff.org/coronavirus-covid-19/poll-finding/importance-of-partisanship-predicting-vaccination-status/ (2021).

Jacobson, M., Chang, T. Y., Shah, M., Pramanik, R. & Shah, S. B. Can financial incentives and other nudges increase COVID-19 vaccinations among the vaccine hesitant? A randomized trial. Vaccine 40, 6235–6242 (2022).

Thirumurthy, H., Milkman, K. L., Volpp, K. G., Buttenheim, A. M. & Pope, D. G. Association between statewide financial incentive programs and COVID-19 vaccination rates. PLoS ONE 17, e0263425 (2022).

Chang, T. Y. et al. Reminders, but not monetary incentives, increase COVID-19 booster uptake. Proc. Natl Acad. Sci. USA 120, e2302725120 (2023).

Law, A. C., Peterson, D., Walkey, A. J. & Bosch, N. A. Lottery-based incentives and COVID-19 vaccination rates in the US. JAMA Intern. Med. 182, 235–237 (2022).

Dai, H. et al. Behavioural nudges increase COVID-19 vaccinations. Nature 597, 404–409 (2021).

Milkman, K. L. et al. A megastudy of text-based nudges encouraging patients to get vaccinated at an upcoming doctor’s appointment. Proc. Natl Acad. Sci. USA 118, e2101165118 (2021).

Milkman, K. L. et al. A 680,000-person megastudy of nudges to encourage vaccination in pharmacies. Proc. Natl Acad. Sci. USA 119, e2115126119 (2022).

Johnson, E. J. & Goldstein, D. Do defaults save lives? Science 302, 1338–1339 (2003).

Madrian, B. C. & Shea, D. F. The power of suggestion: inertia in 401(k) participation and savings behavior. Q. J. Econ. 116, 1149–1187 (2001).

Bettinger, E. P., Long, B. T., Oreopoulos, P. & Sanbonmatsu, L. The role of application assistance and information in college decisions: results from the H&R Block FAFSA experiment. Q. J. Econ. 127, 1205–1242 (2012).

Beshears, J., Choi, J. J., Laibson, D. I., Madrian, B. C. & Reynolds, G. I. Vaccination rates are associated with functional proximity but not base proximity of vaccination clinics. Med. Care 54, 578–583 (2016).

Feldman, N. Why Black and Latino people still lag on COVID vaccines—and how to fix it. NPR (26 April 2021).

Associated Press. COVID-19 vaccine bypasses some older adults. Fox News (4 March 2021).

Mueller, B. People with disabilities have a tougher time getting COVID vaccines, the C.D.C. reports. The New York Times (30 September 2021).

Lyft. Vaccines for all: announcing free or discounted rides to vaccination sites. Lyft https://www.lyft.com/blog/posts/vaccines-for-all-announcing-free-rides-for-anyone-getting-a-shot (2021).

rabbittransit. FREE rides to COVID-19 vaccination sites. rabbittransit https://www.rabbittransit.org/covid-19-vaccinations/ (2021).

Uber. 10 million free or discounted rides for vaccinations. Uber https://www.uber.com/us/en/impact/rides-for-vaccines/ (2021).

US Department of Transportation. U.S. Department of Transportation thanks transit agencies for helping Americans access COVID-19 vaccination sites nationwide and encourages more to step up as part of national month of action. US Department of Transportation https://www.transportation.gov/briefing-room/us-department-transportation-thanks-transit-agencies-helping-americans-access-covid (2021).

Siddiqui, S. & Armour, S. Uber, Lyft to provide free rides to Covid-19 vaccine sites until July 4. Wall Street Journal (11 May 2021).

Ericson, K. M. M. Forgetting we forget: overconfidence and memory. J. Eur. Econ. Assoc. 9, 43–60 (2011).

Gravert, C. in Behavioral Science in the Wild (eds Mažar, N. & Soman, D.) 120–132 (Univ. Toronto Press, 2022).

Regan, A. K., Bloomfield, L., Peters, I. & Effler, P. V. Randomized controlled trial of text message reminders for increasing influenza vaccination. Ann. Fam. Med. 15, 507–514 (2017).

Vervloet, M. et al. The effectiveness of interventions using electronic reminders to improve adherence to chronic medication: a systematic review of the literature. J. Am. Med. Inform. Assoc. 19, 696–704 (2012).

Karlan, D., McConnell, M., Mullainathan, S. & Zinman, J. Getting to the top of mind: how reminders increase saving. Manag. Sci. 62, 3393–3411 (2016).

Austin, J., Sigurdsson, S. O. & Rubin, Y. S. An examination of the effects of delayed versus immediate prompts on safety belt use. Environ. Behav. 38, 140–149 (2006).

Fishbane, A., Ouss, A. & Shah, A. K. Behavioral nudges reduce failure to appear for court. Science 370, eabb6591 (2020).

Chapman, G. B., Li, M., Colby, H. & Yoon, H. Opting in vs opting out of influenza vaccination. JAMA 304, 43–44 (2010).

Milkman, K. L., Beshears, J., Choi, J. J., Laibson, D. & Madrian, B. C. Using implementation intentions prompts to enhance influenza vaccination rates. Proc. Natl Acad. Sci. USA 108, 10415–10420 (2011).

Moehring, A. et al. Providing normative information increases intentions to accept a COVID-19 vaccine. Nat. Commun. 14, 126 (2023).

Buttenheim, A. et al. Effects of ownership text message wording and reminders on receipt of an influenza vaccination: a randomized clinical trial. JAMA Netw. Open 5, e2143388 (2022).

Duckworth, A. L. & Milkman, K. L. A guide to megastudies. PNAS Nexus 1, pgac214 (2022).

Milkman, K. L. et al. Megastudies improve the impact of applied behavioural science. Nature 600, 478–483 (2021).

Patel, M. S. et al. A randomized trial of behavioral nudges delivered through text messages to increase influenza vaccination among patients with an upcoming primary care visit. Am. J. Health Promot. 37, 324–332 (2022).

CDC. Interim clinical considerations for use of COVID-19 vaccines currently approved or authorized in the United States. Centers for Disease Control and Prevention https://www.cdc.gov/vaccines/covid-19/clinical-considerations/interim-considerations-us.html (2023).

Mogstad, M., Romano, J. P., Shaikh, A. M. & Wilhelm, D. Inference for ranks with applications to mobility across neighbourhoods and academic achievement across countries. Rev. Econ. Stud. https://doi.org/10.1093/restud/rdad006 (2023).

James, W. & Stein, C. Estimation with quadratic loss. In Proc. Fourth Berkeley Symposium on Mathematical Statistics and Probability. Volume 1: Contributions to the Theory of Statistics Vol. 4.1 (ed. Neyman, J.) 361–380 (Univ. California Press, 1961).

Khosrowshahi, D. The road to 70 percent: free vaccination rides begin today. Uber https://www.uber.com/newsroom/freevaccinerides/ (2021).

The White House. Press briefing by White House COVID-19 Response Team and Public Health Official. The White House https://www.whitehouse.gov/briefing-room/press-briefings/2021/06/08/press-briefing-by-white-house-covid-19-response-team-and-public-health-official/ (2021).

DellaVigna, S. & Pope, D. Predicting experimental results: who knows what? J. Polit. Econ. 126, 2410–2456 (2018).

Recchia, G., Freeman, A. L. J. & Spiegelhalter, D. How well did experts and laypeople forecast the size of the COVID-19 pandemic? PLoS ONE 16, e0250935 (2021).

Kislaya, I. et al. Impact of booster vaccination on COVID-19 outcomes in Portuguese population aged 80 or more years old. Eur. J. Public Health 32, ckac129.666 (2022).

Mattiuzzi, C. & Lippi, G. Efficacy of COVID-19 vaccine booster doses in older people. Eur. Geriatr. Med. 13, 275–278 (2022).

Pijls, B. G. et al. Demographic risk factors for COVID-19 infection, severity, ICU admission and death: a meta-analysis of 59 studies. BMJ Open 11, e044640 (2021).

Patel, J. A. et al. Poverty, inequality and COVID-19: the forgotten vulnerable. Public Health 183, 110–111 (2020).

Link-Gelles, R. et al. Effectiveness of bivalent mRNA vaccines in preventing symptomatic SARS-CoV-2 infection—increasing community access to testing program, United States, September–November 2022. Morb. Mortal. Wkly Rep. 71, 1526–1530 (2022).

WHO. Ten health issues WHO will tackle this year. World Health Organization https://www.who.int/news-room/spotlight/ten-threats-to-global-health-in-2019 (2019).

CDC. 14 diseases you almost forgot about (thanks to vaccines). Centers for Disease Control and Prevention https://www.cdc.gov/vaccines/parents/diseases/forgot-14-diseases.html (2022).

CDC. Shingles vaccination. Centers for Disease Control and Prevention https://www.cdc.gov/vaccines/vpd/shingles/public/shingrix/index.html (2023).

Ferdinands, J. M. et al. Prevention of influenza hospitalization among adults in the United States, 2015–2016: results from the US Hospitalized Adult Influenza Vaccine Effectiveness Network (HAIVEN). J. Infect. Dis. 220, 1265–1275 (2019).

Self, W. H. et al. Comparative effectiveness of Moderna, Pfizer–BioNTech, and Janssen (Johnson & Johnson) vaccines in preventing COVID-19 hospitalizations among adults without immunocompromising conditions—United States, March–August 2021. Morb. Mortal. Wkly Rep. 70, 1337–1343 (2021).

Ayoubkhani, D. et al. Trajectory of long COVID symptoms after COVID-19 vaccination: community based cohort study. BMJ 377, e069676 (2022).

Kuodi, P. et al. Association between BNT162b2 vaccination and reported incidence of post-Covid-19 symptoms: cross-sectional study 2020–21, Israel. NPJ Vaccines 7, 101 (2022).

Meriggi, N. F. et al. Last-mile delivery increases vaccine uptake in Sierra Leone. Nature 627, 612–619 (2024).

Kaczmarski, M. Uber vs. Lyft: who’s tops in the battle of U.S. rideshare companies. Bloomberg Second Measure https://secondmeasure.com/datapoints/rideshare-industry-overview/ (2023).

StataCorp. splitsample. Stata Package v.17.0 https://www.stata.com/manuals/dsplitsample.pdf (2023).

Dobson, A. J. & Barnett, A. G. An Introduction to Generalized Linear Models (Chapman and Hall/CRC, 2018).

Kass, R. E. & Raftery, A. E. Bayes factors. J. Am. Stat. Assoc. 90, 773–795 (1995).

Raftery, A. E. Bayesian model selection in social research. Sociol. Methodol. 25, 111–163 (1995).

Wagenmakers, E.-J. A practical solution to the pervasive problems of P values. Psychon. Bull. Rev. 14, 779–804 (2007).

Benjamini, Y. & Hochberg, Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B Methodol. 57, 289–300 (1995).

Linos, E., Lasky-Fink, J., Larkin, C., Moore, L. & Kirkman, E. The formality effect. Nat. Hum. Behav. https://doi.org/10.1038/s41562-023-01761-z (2023).

Mellers, B. et al. Identifying and cultivating superforecasters as a method of improving probabilistic predictions. Perspect. Psychol. Sci. 10, 267–281 (2015).

Dana, J., Atanasov, P., Tetlock, P. & Mellers, B. Are markets more accurate than polls? The surprising informational value of “just asking”. Judgm. Decis. Mak. 14, 135–147 (2019).

Acknowledgements

Research reported in this article was made possible by Flu Lab, the Social Science Research Council’s Mercury Project (with funding from the Rockefeller Foundation, the Robert Wood Johnson Foundation, Craig Newmark Philanthropies and the Alfred P. Sloan Foundation) and the Wharton Behavioral Laboratory. Support for this research was also provided in part by the AKO Foundation, J. Alexander, M. J. Leder, W. G. Lichtenstein and A. Schiffman and J. Schiffman. None of our funders had any role in study design, data collection and analysis, decision to publish or preparation of the manuscript. The content is solely the responsibility of the authors and does not necessarily represent the official views of any of the listed entities. We thank staff at CVS Pharmacy and Lyft for their collaboration with staff at the Behavior Change for Good Initiative at the University of Pennsylvania to make this research possible; and T. Li, D. Sandler, A. Swartley and A. Tumu for their research assistance. Illustrations in Supplementary Screens 1 and 6–14 were created using Canva (https://www.canva.com).

Author information

Authors and Affiliations

Contributions

Conceptualization: K.L.M., S.F.E., D.M.G., R.K., N.A.L., J.A.L., M.S.P., K.G.V. and A.L.D. Data curation: S.F.E., D.M.G., Y.J., R.S.M., R.A.S.Z., M.K.P., M.V.B., J.K.B. and C.A.M. Formal analysis: S.F.E., R.S.M., M.K.P., R.A.S.Z., R.B., C.V.d.B. and Y.J. Funding acquisition: K.L.M. and A.L.D. Investigation: K.L.M., S.F.E., D.M.G., R.S.M., R.K., M.V.B., J.K.B. and C.A.M. Methodology: K.L.M., S.F.E., D.M.G., R.K., R.B. C.V.d.B. Project administration: K.L.M., S.F.E., D.M.G., R.K., M.V.B. and C.A.M. Resources: K.L.M., S.F.E., D.M.G., R.B., J.A.L., C.V.d.B., M.V.B., C.A.M. and A.L.D. Software: S.F.E., Y.J., R.S.M., M.K.P., R.A.S.Z., R.B., C.V.d.B., M.V.B. and J.K.B. Supervision: K.L.M., S.F.E. and D.M.G. Validation: K.L.M., S.F.E., A.S.L., R.S.M., M.K.P. and R.A.S.Z. Visualization: K.L.M., S.F.E., D.M.G., A.S.L., R.S.M., M.K.P., R.A.S.Z., R.B. and C.V.d.B. Writing original draft: K.L.M. Writing, review and editing: K.L.M., S.F.E., D.M.G., Y.J., A.S.L., R.S.M., M.K.P., R.A.S.Z., R.K., R.B., N.A.L., J.A.L., M.S.P., C.V.d.B., K.G.V., M.V.B., J.K.B., C.A.M. and A.L.D.

Corresponding author

Ethics declarations

Competing interests

K.G.V. is a part-owner of VAL Health, a behavioural economics consulting firm. M.S.P. is principal and owner of Catalyst Health, a behaviour change and technology consulting firm. M.S.P. has served on advisory boards for Humana, Roche, Astellas and GlaxoSmithKline. M.V.B., C.A.M. and J.K.B. are employed by CVS Pharmacy Health. K.L.M., S.F.E., D.M.G., Y.J., A.S.L., R.S.M., M.K.P., R.A.S.Z., R.K., R.B., N.A.L., J.A.L., C.V.d.B. and A.L.D. have no competing interests to declare.

Peer review

Peer review information

Nature thanks Dean Eckles and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Regression-estimated effect of all intervention arms (pooled) vs. the holdout control on bivalent COVID-19 booster vaccination rates by patient subpopulation.