Abstract

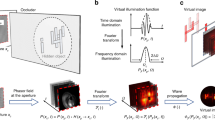

Non-line-of-sight imaging allows objects to be observed when partially or fully occluded from direct view, by analysing indirect diffuse reflections off a secondary relay surface. Despite many potential applications (refs. 1–9), existing methods lack practical usability because of limitations including the assumption of single scattering only, ideal diffuse reflectance and lack of occlusions within the hidden scene. By contrast, line-of-sight imaging systems do not impose any assumptions about the imaged scene, despite relying on the mathematically simple processes of linear diffractive wave propagation. Here we show that the problem of non-line-of-sight imaging can also be formulated as one of diffractive wave propagation, by introducing a virtual wave field that we term the phasor field. Non-line-of-sight scenes can be imaged from raw time-of-flight data by applying the mathematical operators that model wave propagation in a conventional line-of-sight imaging system. Our method yields a new class of imaging algorithms that mimic the capabilities of line-of-sight cameras. To demonstrate our technique, we derive three imaging algorithms, modelled after three different line-of-sight systems. These algorithms rely on solving a wave diffraction integral, namely the Rayleigh–Sommerfeld diffraction integral. Fast solutions to Rayleigh–Sommerfeld diffraction and its approximations are readily available, benefiting our method. We demonstrate non-line-of-sight imaging of complex scenes with strong multiple scattering and ambient light, arbitrary materials, large depth range and occlusions. Our method handles these challenging cases without explicitly inverting a light-transport model. We believe that our approach will help to unlock the potential of non-line-of-sight imaging and promote the development of relevant applications not restricted to laboratory conditions.
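As a rough illustration of the diffraction-based view described in the abstract, the following sketch propagates a monochromatic field with a simplified Rayleigh–Sommerfeld kernel, exp(ikr)/r, summed directly over samples of a relay wall. It is not the authors' code: the helper name `rsd_propagate`, the 4 cm wavelength, the grid sizes and the single hidden point emitter are all assumptions chosen for illustration, and the constant prefactor and obliquity term of the full integral are omitted.

```python
import numpy as np

def rsd_propagate(field, src_pts, dst_pts, wavelength, backward=False):
    """Direct-sum, simplified Rayleigh-Sommerfeld propagation (hypothetical helper).

    field:   complex amplitudes at the source points, shape (n_src,)
    src_pts: source positions, shape (n_src, 3)
    dst_pts: destination positions, shape (n_dst, 3)
    """
    k = 2.0 * np.pi / wavelength
    sign = -1.0 if backward else 1.0  # conjugate kernel refocuses the field
    # Pairwise distances between every destination and every source point.
    r = np.linalg.norm(dst_pts[:, None, :] - src_pts[None, :, :], axis=-1)
    kernel = np.exp(sign * 1j * k * r) / r
    return kernel @ field

# Assumed set-up: a 1 m x 1 m relay wall in the plane z = 0, sampled on 64 x 64 points.
wavelength = 0.04  # metres, illustrative value only
axis = np.linspace(-0.5, 0.5, 64)
wx, wy = np.meshgrid(axis, axis)
wall = np.stack([wx.ravel(), wy.ravel(), np.zeros(wx.size)], axis=1)

# A single hidden point emitter, 0.5 m in front of the wall.
hidden = np.array([[0.10, -0.05, 0.50]])
wall_field = rsd_propagate(np.ones(1, dtype=complex), hidden, wall, wavelength)

# Back-propagate the wall field to candidate points along x at the emitter's y and z.
xs = np.linspace(-0.3, 0.3, 61)
candidates = np.stack([xs, np.full_like(xs, -0.05), np.full_like(xs, 0.50)], axis=1)
image = np.abs(rsd_propagate(wall_field, wall, candidates, wavelength, backward=True))
estimated_x = xs[np.argmax(image)]
print(f"brightest candidate at x = {estimated_x:.2f} m")
```

The direct sum scales with the product of wall samples and reconstruction points, so it is only practical for toy sizes; the fast solvers mentioned in the abstract exist to avoid exactly this cost.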

Data availability

The measured data and the phasor-field NLOS code supporting the findings of this study are available in the figshare repository https://doi.org/10.6084/m9.figshare.8084987. Additional data and code are available from the corresponding authors upon request.

Code availability

Our data and reconstruction code can be found in the figshare repository https://doi.org/10.6084/m9.figshare.8084987.

References

Kirmani, A., Hutchison, T., Davis, J. & Raskar, R. Looking around the corner using ultrafast transient imaging. Int. J. Comput. Vis. 95, 13–28 (2011).

Gupta, O., Willwacher, T., Velten, A., Veeraraghavan, A. & Raskar, R. Reconstruction of hidden 3D shapes using diffuse reflections. Opt. Express 20, 19096–19108 (2012).

Velten, A. et al. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun. 3, 745 (2012).

Katz, O., Small, E. & Silberberg, Y. Looking around corners and through thin turbid layers in real time with scattered incoherent light. Nat. Photon. 6, 549–553 (2012).

Heide, F., Xiao, L., Heidrich, W. & Hullin, M. B. Diffuse mirrors: 3D reconstruction from diffuse indirect illumination using inexpensive time-of-flight sensors. In IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 3222–3229 (IEEE, 2014).

Laurenzis, M. & Velten, A. Nonline-of-sight laser gated viewing of scattered photons. Opt. Eng. 53, 023102 (2014).

Buttafava, M., Zeman, J., Tosi, A., Eliceiri, K. & Velten, A. Non-line-of-sight imaging using a time-gated single photon avalanche diode. Opt. Express 23, 20997–21011 (2015).

Arellano, V., Gutierrez, D. & Jarabo, A. Fast back-projection for non-line of sight reconstruction. Opt. Express 25, 11574–11583 (2017).

O’Toole, M., Lindell, D. B. & Wetzstein, G. Confocal non-line-of-sight imaging based on the light-cone transform. Nature 555, 338–341 (2018).

Jarabo, A., Masia, B., Marco, J. & Gutierrez, D. Recent advances in transient imaging: a computer graphics and vision perspective. Visual Informatics 1, 65–79 (2017).

Velten, A. et al. Femto-photography: capturing and visualizing the propagation of light. ACM Trans. Graph. 32, 44 (2013).

Gupta, M., Nayar, S. K., Hullin, M. B. & Martin, J. Phasor imaging: a generalization of correlation-based time-of-flight imaging. ACM Trans. Graph. 34, 156 (2015).

O’Toole, M. et al. Reconstructing transient images from single-photon sensors. In 2017 IEEE Int. Conf. Computational Photography (CVPR), 1539–1547 (IEEE, 2017).

Gkioulekas, I., Levin, A., Durand, F. & Zickler, T. Micron-scale light transport decomposition using interferometry. ACM Trans. Graph. 34, 37 (2015).

Xin, S. et al. A theory of Fermat paths for non-line-of-sight shape reconstruction. In IEEE Int. Conf. Computer Vision and Pattern Recognition (CVPR), 6800–6809 (IEEE, 2019).

Tsai, C., Sankaranarayanan, A. & Gkioulekas, I. Beyond volumetric albedo: a surface optimization framework for non-line-of-sight imaging. In IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 1545–1555 (IEEE, 2019).

Liu, X., Bauer, S. & Velten, A. Analysis of feature visibility in non-line-of-sight measurements. In IEEE Int. Conf. Computer Vision and Pattern Recognition (CVPR), 10140–10148 (IEEE, 2019).

Wu, R. et al. Adaptive polarization-difference transient imaging for depth estimation in scattering media. Opt. Lett. 43, 1299–1302 (2018).

Laurenzis, M. & Velten, A. Feature selection and back-projection algorithms for nonline-of-sight laser-gated viewing. J. Electron. Imaging 23, 063003 (2014).

Heide, F. et al. Non-line-of-sight imaging with partial occluders and surface normals. ACM Trans. Graph. 38, 22 (2019).

Kadambi, A., Zhao, H., Shi, B. & Raskar, R. Occluded imaging with time-of-flight sensors. ACM Trans. Graph. 35, 15 (2016).

Shen, F. & Wang, A. Fast-Fourier-transform based numerical integration method for the Rayleigh–Sommerfeld diffraction formula. Appl. Opt. 45, 1102–1110 (2006).

Sen, P. et al. Dual photography. ACM Trans. Graph. 24, 745–755 (2005).

O’Toole, M. et al. Temporal frequency probing for 5D transient analysis of global light transport. ACM Trans. Graph. 33, 87 (2014).

Goodman, J. Introduction to Fourier Optics 3rd edn (Roberts, 2005).

Jarabo, A. et al. A framework for transient rendering. ACM Trans. Graph. 33, 177 (2014).

Galindo, M. et al. A dataset for benchmarking time-resolved non-line-of-sight imaging. In IEEE Int. Conf. Computational Photography https://graphics.unizar.es/nlos (IEEE, 2019).

Ward, G. J. Measuring and modeling anisotropic reflection. Comput. Graph. 26, 265–272 (1992).

Acknowledgements

This work was funded by DARPA through the DARPA REVEAL project (HR0011-16-C-0025), the NASA Innovative Advanced Concepts (NIAC) Program (NNX15AQ29G), the Air Force Office of Scientific Research (AFOSR) Young Investigator Program (FA9550-15-1-0208), the Office of Naval Research (ONR, N00014-15-1-2652), the European Research Council (ERC) under the EU’s Horizon 2020 research and innovation programme (project CHAMELEON, grant no. 682080), the Spanish Ministerio de Economía y Competitividad (project TIN2016-78753-P) and the BBVA Foundation (Leonardo Grant for Researchers and Cultural Creators). We thank J. Teichman for insights and discussions in developing the phasor-field model. We also acknowledge M. Buttafava, A. Tosi and A. Ingle for help with the gated SPAD detector, and B. Masia, S. Malpica and M. Galindo for careful reading of the manuscript.

Author information

Contributions

X.L., S.A.R., M.L.M. and A.V. conceived the method. X.L., I.G., M.L.M. and J.H.N. implemented the reconstruction. M.L.M., X.L., J.H.N. and T.H.L. built and calibrated the system. I.G., D.G. and A.J. developed the simulation system. A.J., D.G. and A.V. coordinated the project. All authors contributed to writing the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Peer review information: Nature thanks Jeffrey H. Shapiro, Ashok Veeraraghavan and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Extended data figures and tables

Extended Data Fig. 1 Capture hardware used for the results shown in this Letter.

Extended Data Fig. 2 Data comparison.

a, Raw data for one of the laser positions xp. Shown is the number of photons per second accumulated in each time bin (that is, the collected histogram divided by the integration time in seconds). Time bins are 4 ps wide. As expected, all three curves appear to follow the same mean, but there is a larger variance for lower exposure times. The raw data thus become noisier as exposure time decreases. The effects on the reconstruction are minor, as Extended Data Fig. 4 shows. Tacq, acquisition time. b, Example dataset from ref. 9 for comparison.
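The normalization described in this caption (histogram divided by integration time) can be sketched as follows. This is a minimal simulation under assumed values, not the measured data: the flat rate of 200 photons per second per bin, the 4,096 bins and the two acquisition times are hypothetical, and photon arrivals are modelled as Poisson counts.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed ground truth: a flat rate of 200 photons per second in every time bin.
true_rate = np.full(4096, 200.0)

def photon_rate(acq_time_s):
    """Simulate one histogram and normalize it to photons per second (hypothetical helper)."""
    counts = rng.poisson(true_rate * acq_time_s)  # raw photon counts per bin
    return counts / acq_time_s                    # histogram divided by integration time

rate_short = photon_rate(0.05)  # assumed short acquisition time, in seconds
rate_long = photon_rate(1.0)    # assumed long acquisition time, in seconds

print("mean rate:", rate_short.mean(), rate_long.mean())
print("variance: ", rate_short.var(), rate_long.var())
```

For Poisson counts the variance of the normalized rate is the true rate divided by the acquisition time, which is why the curves share a mean while the shorter exposures look noisier.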

Extended Data Fig. 3 Visualization of the raw data for our long-exposure office scene.

a, Base-10 logarithm of the photon counts in all time bins. Pos index, laser position index; the 24,000 laser positions on the wall are labelled with these consecutive numbers. b–d, After removal of the first 833 time bins in each dataset, the plots show: the photon counts for the laser position that received the largest total number of photons in the dataset (b); the counts for the laser position that received the median number of photon counts (c); and the counts for the laser position that contains the time bin with the global maximum count in the entire set (d).
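The selection of the three laser positions plotted in panels b–d can be expressed compactly. The sketch below assumes the raw data are stored as a 2D array of photon counts with one row per laser position and one column per time bin; that layout and the helper name `select_positions` are assumptions, and the toy array stands in for the real dataset.

```python
import numpy as np

def select_positions(hist, n_skip=833):
    """Pick the laser positions shown in panels b, c and d (hypothetical helper).

    hist: photon counts, shape (n_positions, n_time_bins)
    """
    gated = hist[:, n_skip:]                 # drop the first n_skip time bins
    totals = gated.sum(axis=1)               # total photons per laser position
    brightest = int(np.argmax(totals))       # largest total count (panel b)
    median_pos = int(np.argsort(totals)[len(totals) // 2])  # median total (panel c)
    # Position whose histogram contains the single largest bin (panel d).
    peak_pos = int(np.unravel_index(np.argmax(gated), gated.shape)[0])
    return brightest, median_pos, peak_pos

# Toy data: 5 laser positions, 10 time bins, skipping the first 2 bins.
toy = np.ones((5, 10)) * np.array([1, 2, 3, 4, 10])[:, None]
toy[0, 5] = 50      # a single strong bin at position 0
toy[2, 0] = 1000    # a strong early bin that the gating removes
print(select_positions(toy, n_skip=2))
```

With an odd number of positions the middle element of the sorted totals is the exact median; for an even number this sketch picks the upper of the two middle positions.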

Extended Data Fig. 4 Robustness to multiple reflections.

Result for the synthetic bookshelf scene. a, Without interreflections. b, Including high-order interreflections. The quality of the results is very similar. c, Primary data (streak images) from the same scene without (top), and with interreflections (middle). The synthetic data clearly show how the presence of interreflections adds, as expected, low-frequency information resembling echoes of light. The bottom image shows primary data captured from the real office scene in Fig. 2. It follows the same behaviour as the middle image, revealing the presence of strong interreflections. Colours refer to numerical values from Matlab’s ‘fire colormap’, in arbitrary units.

Extended Data Fig. 5 Robustness to ambient light and noise.

a, Hidden bookshelf. b, Imaging results with increasingly higher exposure times; even at 50 ms, there is no significant loss in quality. Top row, image using only the pulsed laser as illumination source. Bottom row, on adding a large amount of ambient light (same conditions as the photograph in a), the quality remains constant. c, Difference between the 50-ms- and 1,000-ms-exposure captures for the lights-off case.

Extended Data Fig. 6 Short-exposure reconstructions.

Reconstruction of the office scene using very short capture times. a, Photograph of the captured scene. b, From left to right, reconstructions for data captured with 10 ms, 5 ms and 1 ms exposure time per laser. The total capture time was about 4 min, 2 min and 24 s, respectively.
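The quoted total capture times are consistent with a simple product of the number of laser positions and the per-position exposure. The worked arithmetic below assumes the same 24,000 laser positions reported for the long-exposure office scene and ignores any scanning or readout overhead; the symbols are introduced here for illustration only.

```latex
\begin{aligned}
T_{\text{total}} &= N_{\text{pos}} \times t_{\text{exp}} \\
24{,}000 \times 10\ \text{ms} &= 240\ \text{s} = 4\ \text{min} \\
24{,}000 \times 5\ \text{ms} &= 120\ \text{s} = 2\ \text{min} \\
24{,}000 \times 1\ \text{ms} &= 24\ \text{s}
\end{aligned}
```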

Extended Data Fig. 7 Short-exposure data.

Photon counts in the raw data for our office scene for 10 ms (top row), 5 ms (centre row) and 1 ms (bottom row) exposure times per laser position. After removing the first 833 time bins in each dataset, the columns show: the photon counts for the laser position that received the largest total number of photons in the dataset (left); the counts for the laser position that received the median number of photon counts (centre); and the laser position that contains the time bin with the global maximum count in the entire set (right).

Extended Data Fig. 8 Comparison to prior methods.

Reconstruction of the office scene using very short capture times of 1 ms per laser (24 s in total). a, Filtered backprojection using the Laplacian filter. b, LOG-filtered backprojection. c, Our method.

Extended Data Fig. 9 Robustness to scene reflectance.

a, Geometry of our experimental set-up. b, From left to right, imaging results for the Lambertian targets (roughness 1) and increasingly specular surfaces (roughness 0.4 and roughness 0.2). The reconstructed irradiance is essentially the same for all cases.

Extended Data Fig. 10 Reconstruction comparison on a public dataset.

From left to right: confocal NLOS deconvolution, filtered (LOG) backprojection (FBP) and our proposed method. A large improvement in reconstruction quality for the simple scenes included in the dataset (isolated objects with no interreflections) is not to be expected, as existing methods already deliver reconstructions approaching their resolution limits. Nevertheless, our method achieves improved contrast and cleaner contours, owing to better handling of multiply scattered light.

Extended Data Fig. 11 Reconstruction comparison (noisy data).

From left to right: confocal NLOS deconvolution, FBP and our proposed method. Top row represents a non-retroreflective object; bottom row represents a retroreflective object captured in sunlight. In the presence of noisy data, FBP fails. Confocal NLOS includes a Wiener filter that needs to be explicitly estimated. Our phasor-field virtual wave method yields better results automatically. This is particularly important in complex scenes with interreflections, where the background is not uniform across the scene, and the noise level cannot be reliably estimated.

Supplementary information

Supplementary Information

This file contains a detailed discussion of the methods used in the online paper, including supplementary discussion and derivations supporting the main manuscript.

Video 1

Additional results illustrating the transient virtual camera, which can reveal the multi-bounce signal, and a refocusing example for the virtual photo camera, both using the operators described in the main text.

About this article

Cite this article

Liu, X., Guillén, I., La Manna, M. et al. Non-line-of-sight imaging using phasor-field virtual wave optics. Nature 572, 620–623 (2019). https://doi.org/10.1038/s41586-019-1461-3

DOI: https://doi.org/10.1038/s41586-019-1461-3
