Abstract

The inferotemporal (IT) cortex is responsible for object recognition, but it is unclear how the representation of visual objects is organized in this part of the brain. Areas that are selective for categories such as faces, bodies, and scenes have been found (refs. 1–5), but large parts of IT cortex lack any known specialization, raising the question of what general principle governs IT organization. Here we used functional MRI, microstimulation, electrophysiology, and deep networks to investigate the organization of macaque IT cortex. We built a low-dimensional object space to describe general objects using a feedforward deep neural network trained on object classification (ref. 6). Responses of IT cells to a large set of objects revealed that single IT cells project incoming objects onto specific axes of this space. Anatomically, cells were clustered into four networks according to the first two components of their preferred axes, forming a map of object space. This map was repeated across three hierarchical stages of increasing view invariance, and cells that comprised these maps collectively harboured sufficient coding capacity to approximately reconstruct objects. These results provide a unified picture of IT organization in which category-selective regions are part of a coarse map of object space whose dimensions can be extracted from a deep network.

Data availability

The data that support the findings of this study are available from the lead corresponding author (D.Y.T.) upon reasonable request.

References

1. Kanwisher, N., McDermott, J. & Chun, M. M. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311 (1997).

2. Tsao, D. Y., Freiwald, W. A., Knutsen, T. A., Mandeville, J. B. & Tootell, R. B. Faces and objects in macaque cerebral cortex. Nat. Neurosci. 6, 989–995 (2003).

3. Downing, P. E., Jiang, Y., Shuman, M. & Kanwisher, N. A cortical area selective for visual processing of the human body. Science 293, 2470–2473 (2001).

4. Popivanov, I. D., Jastorff, J., Vanduffel, W. & Vogels, R. Heterogeneous single-unit selectivity in an fMRI-defined body-selective patch. J. Neurosci. 34, 95–111 (2014).

5. Kornblith, S., Cheng, X., Ohayon, S. & Tsao, D. Y. A network for scene processing in the macaque temporal lobe. Neuron 79, 766–781 (2013).

6. Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Information Proc. Syst. 25, 1097–1105 (2012).

7. Gross, C. G., Rocha-Miranda, C. E. & Bender, D. B. Visual properties of neurons in inferotemporal cortex of the macaque. J. Neurophysiol. 35, 96–111 (1972).

8. Lafer-Sousa, R. & Conway, B. R. Parallel, multi-stage processing of colors, faces and shapes in macaque inferior temporal cortex. Nat. Neurosci. 16, 1870–1878 (2013).

9. Verhoef, B.-E., Bohon, K. S. & Conway, B. R. Functional architecture for disparity in macaque inferior temporal cortex and its relationship to the architecture for faces, color, scenes, and visual field. J. Neurosci. 35, 6952–6968 (2015).

10. Janssen, P., Vogels, R. & Orban, G. A. Selectivity for 3D shape that reveals distinct areas within macaque inferior temporal cortex. Science 288, 2054–2056 (2000).

11. Yue, X., Pourladian, I. S., Tootell, R. B. & Ungerleider, L. G. Curvature-processing network in macaque visual cortex. Proc. Natl Acad. Sci. USA 111, E3467–E3475 (2014).

12. Fujita, I., Tanaka, K., Ito, M. & Cheng, K. Columns for visual features of objects in monkey inferotemporal cortex. Nature 360, 343–346 (1992).

13. Levy, I., Hasson, U., Avidan, G., Hendler, T. & Malach, R. Center-periphery organization of human object areas. Nat. Neurosci. 4, 533–539 (2001).

14. Konkle, T. & Oliva, A. A real-world size organization of object responses in occipitotemporal cortex. Neuron 74, 1114–1124 (2012).

15. Moeller, S., Freiwald, W. A. & Tsao, D. Y. Patches with links: a unified system for processing faces in the macaque temporal lobe. Science 320, 1355–1359 (2008).

16. Freiwald, W. A. & Tsao, D. Y. Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science 330, 845–851 (2010).

17. Yamins, D. L. et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proc. Natl Acad. Sci. USA 111, 8619–8624 (2014).

18. Chang, L. & Tsao, D. Y. The code for facial identity in the primate brain. Cell 169, 1013–1028.e1014 (2017).

19. Kumar, S., Popivanov, I. D. & Vogels, R. Transformation of visual representations across ventral stream body-selective patches. Cereb. Cortex 29, 215–229 (2019).

20. Tsao, D. Y., Moeller, S. & Freiwald, W. A. Comparing face patch systems in macaques and humans. Proc. Natl Acad. Sci. USA 105, 19514–19519 (2008).

21. Vaziri, S., Carlson, E. T., Wang, Z. & Connor, C. E. A channel for 3D environmental shape in anterior inferotemporal cortex. Neuron 84, 55–62 (2014).

22. McCandliss, B. D., Cohen, L. & Dehaene, S. The visual word form area: expertise for reading in the fusiform gyrus. Trends Cogn. Sci. 7, 293–299 (2003).

23. Baldassi, C. et al. Shape similarity, better than semantic membership, accounts for the structure of visual object representations in a population of monkey inferotemporal neurons. PLoS Comput. Biol. 9, e1003167 (2013).

24. Dosovitskiy, A. & Brox, T. Generating images with perceptual similarity metrics based on deep networks. Adv. Neural Information Proc. Syst. 29, 658–666 (2016).

25. Aparicio, P. L., Issa, E. B. & DiCarlo, J. J. Neurophysiological organization of the middle face patch in macaque inferior temporal cortex. J. Neurosci. 36, 12729–12745 (2016).

26. Arcaro, M. J., Schade, P. F., Vincent, J. L., Ponce, C. R. & Livingstone, M. S. Seeing faces is necessary for face-domain formation. Nat. Neurosci. 20, 1404–1412 (2017).

27. Zhuang, C., Zhai, A. L. & Yamins, D. in Proc. IEEE Intl Conf. Computer Vision 6002–6012 (2019).

28. Bao, P. & Tsao, D. Y. Representation of multiple objects in macaque category-selective areas. Nat. Commun. 9, 1774 (2018).

29. Rajalingham, R. & DiCarlo, J. J. Reversible inactivation of different millimeter-scale regions of primate IT results in different patterns of core object recognition deficits. Neuron 102, 493–505.e495 (2019).

30. Haile, T. M., Bohon, K. S., Romero, M. C. & Conway, B. R. Visual stimulus-driven functional organization of macaque prefrontal cortex. Neuroimage 188, 427–444 (2019).

31. Saleem, K. S. & Logothetis, N. K. A Combined MRI and Histology Atlas of the Rhesus Monkey Brain in Stereotaxic Coordinates (Academic, 2012).

32. Tsao, D. Y., Freiwald, W. A., Tootell, R. B. & Livingstone, M. S. A cortical region consisting entirely of face-selective cells. Science 311, 670–674 (2006).

33. Chang, L., Bao, P. & Tsao, D. Y. The representation of colored objects in macaque color patches. Nat. Commun. 8, 2064 (2017).

34. Ponce, C. R. et al. Evolving images for visual neurons using a deep generative network reveals coding principles and neuronal preferences. Cell 177, 999–1009.e1010 (2019).

35. Ohayon, S., Grimaldi, P., Schweers, N. & Tsao, D. Y. Saccade modulation by optical and electrical stimulation in the macaque frontal eye field. J. Neurosci. 33, 16684–16697 (2013).

36. Dale, A. M., Fischl, B. & Sereno, M. I. Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage 9, 179–194 (1999).

37. Reuter, M. & Fischl, B. Avoiding asymmetry-induced bias in longitudinal image processing. Neuroimage 57, 19–21 (2011).

38. Reveley, C. et al. Three-dimensional digital template atlas of the macaque brain. Cereb. Cortex 27, 4463–4477 (2017).

39. Ohayon, S. & Tsao, D. Y. MR-guided stereotactic navigation. J. Neurosci. Methods 204, 389–397 (2012).

40. Konkle, T. & Caramazza, A. Tripartite organization of the ventral stream by animacy and object size. J. Neurosci. 33, 10235–10242 (2013).

41. Long, B., Yu, C.-P. & Konkle, T. Mid-level visual features underlie the high-level categorical organization of the ventral stream. Proc. Natl Acad. Sci. USA 115, E9015–E9024 (2018).

Acknowledgements

This work was supported by NIH (DP1-NS083063, R01-EY030650), the Howard Hughes Medical Institute, and the Tianqiao and Chrissy Chen Institute for Neuroscience at Caltech. We thank A. Flores for technical support, and members of the Tsao laboratory, N. Kanwisher, A. Kennedy, S. Kornblith, and A. Tsao for critical comments.

Author information

Contributions

P.B. and D.Y.T. designed the experiments, P.B. and L.S. collected the data, and P.B. analysed the data. M.M. provided technical advice on neural networks. P.B. and D.Y.T. interpreted the data and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Time courses from NML1-3 during microstimulation of NML2.

a, Sagittal (top) and coronal (bottom) slices showing activation in response to microstimulation of NML2. Dark track shows electrode targeting NML2. b, Time courses of microstimulation (black) together with fMRI response (red) from each of the three patches of the NML network.

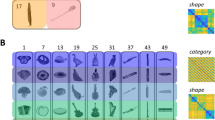

Extended Data Fig. 2 Stimuli used in electrophysiological recordings.

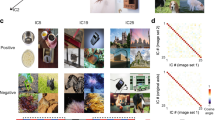

a, Fifty-one objects from six categories were shown to monkeys. b, Twenty-four views for one example object, resulting from rotations in the x–z plane (abscissa) combined with rotations in the y–z plane (ordinate). c, A line segment that was parametrically varied along three dimensions was used to test the hypothesis that cells in the NML network are selective for aspect ratio: 4 aspect ratio levels × 13 curvature levels × 12 orientation levels. d, Thirty-six example object images from an image set containing 1,593 images.

Extended Data Fig. 3 Additional neuronal response properties from different patches.

a1, Average responses to 51 objects across all cells from patch NML2 plotted against those from patch NML1. The response to each object was defined as the average response across 24 views and across all cells recorded from a given patch. b1, As in a1 for NML3 against NML2. c1, As in a1 for NML3 against NML1. a2, b2, c2, As in a1, b1, c1 for three patches of the body network. a3, As in a1 for Stubby3 against Stubby2. d, Similarity matrix showing the Pearson correlation values (r) between the average responses to 51 objects from 9 patches across 4 networks. e, Left, cumulative distributions of view-invariant identity correlations for cells in the three patches of the NML network. Right, as on left for cells in the three patches of the body network. For each cell, the view-invariant identity correlation was computed as the average across all pairs of views of the correlation between response vectors to the 51 objects at a pair of distinct views. The distribution of view-invariant identity correlations was significantly different between NML1 and NML2 (two-tailed t-test, P < 0.005, t(118) = 2.96), NML2 and NML3 (two-tailed t-test, P < 0.005, t(169) = 2.9), Body1 and Body2 (two-tailed t-test, P < 0.0001, t(131) = 6.4), and Body2 and Body3 (two-tailed t-test, P < 0.05, t(126) = 2.04). *P < 0.05, **P < 0.01. f1, Time course of view-invariant object identity selectivity for the three patches in the NML network, computed using responses to 11 objects at 24 views and a 50-ms sliding response window (solid lines). As a control, time courses of correlations between responses to different objects across different views were also computed (dashed lines) (see Methods). f2, As in f1 for body network. f3, As in f1 for stubby network. g, Top, average responses to each image across all cells recorded from each patch plotted against the logarithm of aspect ratio of the object in each image (see Methods). Pearson r values are indicated in each plot (all P < 10⁻¹⁰). The rightmost column shows results with cells from all three patches grouped together. Bottom, as on top, with responses to each object averaged across 24 views, and associated aspect ratios also averaged. The rightmost column shows results with cells from all three patches grouped together.
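
The view-invariant identity correlation defined in panel e can be sketched as follows. This is a minimal illustration of the definition given in the caption, not the authors' code: the function name, the array layout (objects × views) and the toy data are assumptions made for the example.

```python
import numpy as np

def view_invariant_identity_correlation(responses):
    """Average Pearson correlation between object-response vectors at distinct views.

    responses: array of shape (n_objects, n_views) holding one cell's mean
    response to each object at each view (layout is an assumption).
    """
    responses = np.asarray(responses, dtype=float)
    # Each column is the response vector across objects at one view;
    # rowvar=False correlates columns with each other.
    corr = np.corrcoef(responses, rowvar=False)      # (n_views, n_views)
    upper = np.triu_indices(corr.shape[0], k=1)      # all pairs of distinct views
    return float(corr[upper].mean())

# Toy data (hypothetical): 51 objects x 24 views, as in the stimulus set.
rng = np.random.default_rng(0)
identity_tuning = rng.normal(size=(51, 1))
invariant_cell = identity_tuning + 0.1 * rng.normal(size=(51, 24))  # same tuning at every view
view_dependent_cell = rng.normal(size=(51, 24))                     # unrelated tuning per view

vi_high = view_invariant_identity_correlation(invariant_cell)
vi_low = view_invariant_identity_correlation(view_dependent_cell)
```

A cell whose object preferences are preserved across views yields a value near 1, whereas a cell whose preferences change with view yields a value near 0.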

Extended Data Fig. 4 Building an object space using a deep network.

a, A diagram illustrating the structure of AlexNet (ref. 6). Five convolution layers are followed by three fully connected layers. The number of units in each layer is indicated below each layer. b, Images with extreme values (highest: red, lowest: blue) of PC1 and PC2. c, The cumulative explained variance of responses of units in fc6 by 100 PCs; 50 dimensions explain 85% of variance. d, Images in the 1,593-image set with extreme values (highest: red, lowest: blue) of PC1 and PC2 built using the 1,593-image set after affine transform (see Methods). Preferred features are generally consistent with those computed using the original image set shown in b. However, PC2 no longer clearly corresponds to an animate–inanimate axis; instead, it corresponds to curved versus rectilinear shapes. e, Distributions showing the canonical correlation value between the first two PCs obtained by the 1,224-image set and the first two PCs built by other sets of images (1,224 randomly selected non-background object images, left: PC1, right: PC2; see Methods for details). The red triangles indicate the arithmetic mean of the distributions. f, We passed 19,300 object images through AlexNet and built PC1–PC2 space using PCA. Then we projected 1,224 images onto this PC1–PC2 space. The top 100 images for each network are indicated by coloured dots (compare Fig. 4b). g, Decoding accuracy for 40 images using object spaces built by responses of different layers of AlexNet (computed as in Extended Data Fig. 11d). There are multiple points for each layer because we performed PCA before and after pooling, activation, and normalization functions. Layer fc6 showed the highest decoding accuracy, motivating our use of the object space generated by this layer throughout the paper. h, To compare IT clustering determined by AlexNet with that by other deep network architectures, we first identified the layer of each network that gave the best decoding accuracy, as in g. The bar plot shows decoding accuracy for 40 images in the 9 different networks using the best-performing layer for each network. i, Canonical correlation values between the first two PCs obtained by AlexNet and first two PCs built by eight other deep-learning networks (labelled 2–9). The layer of each network that yielded the highest decoding accuracy for 40 images was used for this analysis. The name of each network and layer can be found in j. j, As in Fig. 4b using PC1 and PC2 computed from eight other networks.
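
The construction of a low-dimensional object space by PCA on network activations (panels c and f) can be illustrated with a short sketch. It assumes that a matrix of layer activations (images × units) is already available; the random low-rank matrix below is only a stand-in for fc6 responses, and `build_object_space` is a hypothetical helper name, not a function from the paper.

```python
import numpy as np

def build_object_space(features, n_dims):
    """PCA via SVD on an (n_images, n_units) activation matrix.

    Returns image coordinates in the first n_dims PCs, the principal axes,
    the feature mean, and the cumulative explained variance of all PCs.
    """
    features = np.asarray(features, dtype=float)
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    explained = singular_values**2 / np.sum(singular_values**2)
    cumulative = np.cumsum(explained)
    components = vt[:n_dims]
    coords = centered @ components.T
    return coords, components, mean, cumulative

# Stand-in for layer activations (assumption): 300 images x 200 units
# generated from 10 latent dimensions plus a little noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 10)) * np.linspace(5.0, 1.0, 10)
mixing = rng.normal(size=(10, 200))
features = latent @ mixing + 0.1 * rng.normal(size=(300, 200))

coords, components, mean, cumulative = build_object_space(features, n_dims=2)
```

New images can be placed in the same space by subtracting `mean` from their activations and multiplying by `components.T`, which corresponds to the projection step described in panel f.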

Extended Data Fig. 5 Neurons across IT perform axis coding.

a1, The distribution of consistency of preferred axis for cells in the NML network (see Methods). a2, As in a1 for the body network. a3, As in a1 for the stubby network. b, Different trials of responses to the stimuli were randomly split into two halves, and the average response across half of the trials was used to predict that of the other half. Percentage variances explained, after Spearman–Brown correction (mean 87.8%), are plotted against that of the axis model (mean 49.1%). Mean explainable variance for 29 cells was 55.9%. c, Percentage variances explained by a Gaussian model plotted against that of the axis model. d, Percentage variances explained by a quadratic model plotted against that of the axis model. Inspection of coefficients of the quadratic model revealed a negligible quadratic term (mean ratio of 2nd-order coefficients/1st-order coefficient, 0.028). e1, Top, red line shows the average modulation along the preferred axis across the population of NML1 cells. The grey lines show, for each cell in NML1, the modulation along the single axis orthogonal to the preferred axis in the 50D object space that accounts for the most variability. The blue line and error bars represent the mean and s.d. of the grey lines. Middle, bottom, analogous plots for NML2 and NML3, respectively. e2, As in e1 for the three body patches. e3, As in e1 for the two stubby patches.
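
The split-half analysis in panel b and the axis model can be sketched as below. The Spearman–Brown formula is standard; everything else is an assumption for illustration: the in-sample least-squares fit stands in for the axis model (the fitting and cross-validation details are in Methods and are not reproduced here), and the function names and toy data are hypothetical.

```python
import numpy as np

def spearman_brown(r_half):
    """Spearman-Brown correction: reliability of the full data from a split-half correlation."""
    return 2.0 * r_half / (1.0 + r_half)

def split_half_reliability(trials, rng):
    """trials: (n_trials, n_stimuli) single-trial responses of one cell."""
    n_trials = trials.shape[0]
    order = rng.permutation(n_trials)                 # random split of trials
    half_a = trials[order[: n_trials // 2]].mean(axis=0)
    half_b = trials[order[n_trials // 2:]].mean(axis=0)
    r_half = np.corrcoef(half_a, half_b)[0, 1]
    return spearman_brown(r_half)

def fit_preferred_axis(features, mean_response):
    """Linear axis model: response ~ features @ axis + offset (least squares)."""
    design = np.column_stack([features, np.ones(len(features))])
    coef, *_ = np.linalg.lstsq(design, mean_response, rcond=None)
    return coef[:-1], coef[-1]

# Toy cell (hypothetical): 200 stimuli in a 5D object space, 10 noisy trials each.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 5))
true_axis = np.array([1.0, -0.5, 0.0, 0.25, 2.0])
trials = features @ true_axis + rng.normal(size=(10, 200))

reliability = split_half_reliability(trials, rng)
axis, offset = fit_preferred_axis(features, trials.mean(axis=0))
cosine = axis @ true_axis / (np.linalg.norm(axis) * np.linalg.norm(true_axis))
```

In this toy case the fitted axis points in nearly the same direction as the axis used to generate the data, and the corrected reliability gives an upper bound against which a model's explained variance can be compared.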

Extended Data Fig. 6 Similar functional organization is observed using a different stimulus set.

a, Projection of preferred axes onto PC1 versus PC2 for all neurons recorded using two different stimulus sets (left, 1,593 images from freepngs image set; right, the original 1,224 images consisting of 51 objects × 24 views). The PC1–PC2 space for both plots was computed using the 1,224 images. Different colours encode neurons from different networks. b, Top 21 preferred stimuli based on average responses from the neurons recorded in three networks to the two different image sets. c1, Four classes of silhouette images that project strongly onto the four quadrants of object space. c2, Coronal slices from posterior, middle, and anterior IT of monkeys M2 and M3 showing the spatial arrangement of the four networks revealed using the silhouette images in c1 in an experiment analogous to that in Fig. 4a. d1, Four classes of ‘fake object’ images that project strongly onto the four quadrants of object space. Note that fake objects that project onto the face quadrant no longer resemble real faces. d2, As in c2 with fake object images from d1. e1, Four example stimuli generated by deep dream techniques that project strongly onto the four quadrants of object space. e2, As in c2 with deep dream images from e1. The results in c–e support the idea that IT is organized according to the first two axes of object space rather than low-level features, semantic meaning, or image organization.

Extended Data Fig. 7 Response time courses from the four IT networks spanning object space.

Time courses were averaged across two monkeys. To avoid selection bias, odd runs were used to identify regions of interest, and even runs were used to compute average time courses from these regions.

Extended Data Fig. 8 Searching for substructure within patches.

a, Axial view of the Stubby2 patch, together with projections of three recording sites. b, Mean responses to 51 objects from neurons grouped by recording sites shown in a (same format as Fig. 2a (top)). c, Axial view of the Stubby3 patch, together with projections of two recording sites. d, Mean responses to 51 objects from neurons grouped by recording sites shown in c. e, Projection of preferred axis onto PC1–PC2 space for neurons recorded from different sites within the Stubby2 patch. There is no clear separation between neurons from the three sites in PC1–PC2 space. The grey dots represent all other neurons across the four networks. f, As in e for cells recorded from two sites in the Stubby3 patch. g1, Projection of preferred axes onto PC1–PC2 space for all recorded neurons. Different colours encode neurons from different networks. g2, As in g1, but the colour represents the cluster to which the neurons belong. Clusters were determined by k-means analysis, with the number of clusters set to four, and the distance between neurons defined by the correlation between preferred axes in the 50D object space (see Methods). Comparison of g1 and g2 reveals high similarity between the anatomical clustering of IT networks and the functional clustering determined by k-means analysis. g3, Calinski–Harabasz criterion values were plotted against the number of clusters for k-means analysis performed with different numbers of clusters (see Methods). The optimal cluster number is four. h1, As in g1 for projection of preferred axes onto PC3 versus PC4. h2, As in h1, but the colour represents the cluster to which the neurons belong. Clusters were determined by k-means analysis, with the number of clusters set to four, and the distance between neurons defined by the correlation between preferred axes in the 48D object space obtained by removing the first two dimensions. The difference between h1 and h2 suggests that there is no anatomical clustering for dimensions beyond the first two PCs. h3, As in g3, with k-means analysis in the 48D object space. By the Calinski–Harabasz criterion, there is no functional clustering for higher dimensions beyond the first two.
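
The cluster-number selection in panels g2 and g3 can be illustrated with a small sketch of k-means combined with the Calinski–Harabasz criterion. It is written under stated assumptions rather than as the authors' procedure: rows are centred and unit-normalized so that squared Euclidean distance equals 2 × (1 − Pearson r), ordinary Euclidean k-means is then run as an approximation to correlation-distance k-means, the initialization is a simple farthest-first rule, and the preferred axes are synthetic.

```python
import numpy as np

def normalize_rows(x):
    """Centre and unit-normalize each row: squared distance then equals 2 * (1 - Pearson r)."""
    x = x - x.mean(axis=1, keepdims=True)
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def kmeans(x, k, n_iter=100):
    """Lloyd's algorithm with farthest-first initialization (an illustrative choice)."""
    centers = [x[0]]
    for _ in range(1, k):
        d = np.min([np.sum((x - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(x[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dists = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Keep the old centre if a cluster happens to become empty.
        new_centers = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

def calinski_harabasz(x, labels):
    """Ratio of between- to within-cluster dispersion, each scaled by its degrees of freedom."""
    n, ids = len(x), np.unique(labels)
    overall = x.mean(axis=0)
    between = within = 0.0
    for j in ids:
        members = x[labels == j]
        center = members.mean(axis=0)
        between += len(members) * np.sum((center - overall) ** 2)
        within += np.sum((members - center) ** 2)
    return (between / (len(ids) - 1)) / (within / (n - len(ids)))

# Synthetic preferred axes (hypothetical): 4 groups of 40 cells in a 50D object space.
rng = np.random.default_rng(0)
prototypes = rng.normal(size=(4, 50))
axes = np.vstack([p + 0.3 * rng.normal(size=(40, 50)) for p in prototypes])
z = normalize_rows(axes)

scores = {k: calinski_harabasz(z, kmeans(z, k)[0]) for k in range(2, 7)}
best_k = max(scores, key=scores.get)
labels4, _ = kmeans(z, 4)
```

For these synthetic axes the criterion peaks at four clusters, mirroring the logic of panel g3; when the data contain no group structure, as reported for the higher dimensions in h3, no such clear peak would be expected.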

Extended Data Fig. 9 The object space model parsimoniously explains previous accounts of IT organization.

a1, The object images used in ref. 14 are projected onto PC1–PC2 space (computed as in Fig. 4b, by first passing each image through AlexNet). A clear gradient from big (red) to small (blue) objects is seen. a2, As in a1, for the inanimate objects (big and small) used in ref. 40. a3, As in a1, for the original object images used in ref. 41. a4, As in a1, for the texform images used in ref. 41. b2–4, Projection of animate and inanimate images from original object images (b2, b3) and texforms (b4). c, Left, coloured dots depict projection of stimuli from the four conditions used in ref. 21. Right, example stimuli (blue, small object-like; cyan, large object-like; red, landscape-like; magenta, cave-like). d, Left, grey dots depict 1,224 stimuli projected onto object PC1–PC2 space; coloured dots depict projection of stimuli from the four blocks of the curvature localizer used in ref. 11. Right, example stimuli from the four blocks of the curvature localizer (blue, real-world round shapes; cyan, computer-generated 3D sphere arrays; red, real-world rectilinear shapes; magenta, computer-generated 3D pyramid arrays). e, Images of English and Chinese words are projected onto object PC1–PC2 space (black diamonds), superimposed on the plot from Fig. 4b. They are grouped into a small region, consistent with their modular representation by the visual word form area.

Extended Data Fig. 10 Object space dimensions are a better descriptor of response selectivity in the body patch than category labels.

a, Four classes of stimuli: 1, body stimuli that project strongly onto the body quadrant of object space (bright red); 2, body stimuli that project weakly onto the body quadrant of object space (dark red); 3, non-body stimuli that project equally strongly as group 2 onto the body quadrant of object space (dark blue); and 4, non-body stimuli that project negatively onto the body quadrant of object space (bright blue). b, Predicted response of the body patch to each image from the four stimulus conditions in a, computed by projecting the object space representation of each image onto the preferred axis of the body patch (determined from the average response of body patch neurons to the 1,224 stimuli). c, Left, fMRI response time course from the body patches to the four stimulus conditions in a. Centre, mean normalized single-unit responses from neurons in Body1 patch to the four stimulus conditions. Right, mean local field potential from Body1 patch to the four stimulus conditions. Shading represents s.e.

Extended Data Fig. 11 Object decoding and recovery of images by searching a large auxiliary object database.

a, Schematic illustrating the decoding model. To construct and test the model, we used responses of m recorded cells to n images. Population responses to images from all but one object were used to determine the transformation from responses to feature values by linear regression, and then the feature values of the remaining object were predicted (for each of 24 views). b, Model predictions plotted against actual feature values for the first PC of object space. c, Percentage explained variances for all 50 dimensions using linear regression based on the responses of four neural populations: 215 NML cells (yellow); 190 body cells (green); 67 stubby cells (magenta); 482 combined cells (black). d, Decoding accuracy as a function of the number of object images randomly drawn from the stimulus set for the same four neural populations as in c. Dashed line indicates chance performance. e, Decoding accuracy for 40 images plotted against different numbers of cells randomly drawn from the same four populations as in c. f, Decoding accuracy for 40 images plotted as a function of the numbers of PCs used to parametrize object images. g, Example reconstructed images from the three groups defined in h. In each pair, the original image is shown on the left, and the image reconstructed using neural data is shown on the right. h, Distribution of normalized distances between predicted and reconstructed feature vectors. The normalized distance takes account of the fact that the object images used for reconstruction did not include any of the object images shown to the monkey, setting a limit on how good the reconstruction can be (see Methods). A normalized distance of one means that the reconstruction has found the best solution possible. Images were sorted into three groups on the basis of normalized distance. i, Distribution of specialization indices SI_ij across objects for the NML (left), body (centre) and stubby (right) networks (see Methods and Supplementary Information). Example objects for each network with SI_ij ≈ 1 are shown. Red bars, objects with SI_ij significantly greater than 0 (two-tailed t-test, P < 0.01).
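
The leave-one-object-out decoding scheme in panel a can be sketched as follows. This is a minimal illustration under assumptions: ordinary least squares with an intercept stands in for the linear regression, explained variance is computed as 1 − SSE/SST (the exact definition used for panel c is given in Methods), and the population responses are simulated from hypothetical preferred axes.

```python
import numpy as np

def leave_one_object_out_predict(responses, features, object_ids):
    """Predict feature values of each object from a decoder trained on all other objects.

    responses: (n_images, n_cells), features: (n_images, n_dims),
    object_ids: (n_images,) label shared by all views of the same object.
    """
    predicted = np.zeros_like(features, dtype=float)
    for obj in np.unique(object_ids):
        test = object_ids == obj
        train = ~test
        # Linear map from population response (plus intercept) to feature values.
        design = np.column_stack([responses[train], np.ones(train.sum())])
        weights, *_ = np.linalg.lstsq(design, features[train], rcond=None)
        test_design = np.column_stack([responses[test], np.ones(test.sum())])
        predicted[test] = test_design @ weights
    return predicted

def explained_variance(actual, predicted):
    """Per-dimension 1 - SSE/SST (one possible definition; an assumption here)."""
    residual = np.sum((actual - predicted) ** 2, axis=0)
    total = np.sum((actual - actual.mean(axis=0)) ** 2, axis=0)
    return 1.0 - residual / total

# Simulated population (hypothetical): 20 objects x 6 views, 5 feature dims, 30 cells.
rng = np.random.default_rng(0)
object_ids = np.repeat(np.arange(20), 6)
features = rng.normal(size=(120, 5))
preferred_axes = rng.normal(size=(30, 5))                  # one axis per cell
responses = features @ preferred_axes.T + 0.5 * rng.normal(size=(120, 30))

predicted = leave_one_object_out_predict(responses, features, object_ids)
ev = explained_variance(features, predicted)
```

Because every view of the held-out object is excluded from training, the explained variance reflects generalization to objects the decoder has never seen, which is the property the caption emphasizes.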

Supplementary information

Supplementary Information

This file contains Supplementary Notes and Supplementary Tables 1-2.

About this article

Cite this article

Bao, P., She, L., McGill, M. et al. A map of object space in primate inferotemporal cortex. Nature 583, 103–108 (2020). https://doi.org/10.1038/s41586-020-2350-5

DOI: https://doi.org/10.1038/s41586-020-2350-5
