Abstract

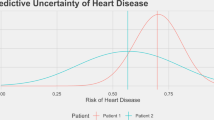

Predictive machine-learning systems often do not convey how confident they are in the correctness of their outputs. To prevent unsafe prediction failures, users of these systems should be aware of the model's general accuracy and understand the degree of confidence in each individual prediction. In this Perspective, we convey the need for prediction-uncertainty metrics in healthcare applications, with a focus on radiology. We outline the sources of prediction uncertainty, discuss how to implement prediction-uncertainty metrics in applications that require zero tolerance for errors and in applications that are error-tolerant, and provide a concise framework for understanding prediction uncertainty in healthcare contexts. For machine-learning-enabled automation to substantially impact healthcare, machine-learning models with zero tolerance for false-positive or false-negative errors must be developed intentionally.

Acknowledgements

We thank the staff at Massachusetts General Brigham’s Enterprise Medical Imaging team and Data Science Office.

Author information

Contributions

M.C., D.K., J.C., M.H.L. and S.D. conceived the project. M.C., D.K., J.C. and S.D. developed the theory and performed the implementations. N.G.L., V.D., J.S., M.H.L., R.G.G. and M.S.G. verified the clinical implementations. M.C., D.K., R.G.G., M.S.G. and S.D. contributed to writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Biomedical Engineering thanks Steve Jiang and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary figures and tables.

About this article

Cite this article

Chua, M., Kim, D., Choi, J. et al. Tackling prediction uncertainty in machine learning for healthcare. Nat. Biomed. Eng 7, 711–718 (2023). https://doi.org/10.1038/s41551-022-00988-x

DOI: https://doi.org/10.1038/s41551-022-00988-x
