Abstract

Condensed-matter physics is the study of the collective behaviour of infinitely complex assemblies of electrons, nuclei, magnetic moments, atoms or qubits1. This complexity is reflected in the size of the state space, which grows exponentially with the number of particles, reminiscent of the ‘curse of dimensionality’ commonly encountered in machine learning2. Despite this curse, the machine learning community has developed techniques with remarkable abilities to recognize, classify, and characterize complex sets of data. Here, we show that modern machine learning architectures, such as fully connected and convolutional neural networks3, can identify phases and phase transitions in a variety of condensed-matter Hamiltonians. Readily programmable through modern software libraries4,5, neural networks can be trained to detect multiple types of order parameter, as well as highly non-trivial states with no conventional order, directly from raw state configurations sampled with Monte Carlo6,7.

Main

Conventionally, the study of phases in condensed-matter systems is performed with the help of tools that have been carefully designed to elucidate the underlying physical structures of various states. Among the most powerful are Monte Carlo simulations, which consist of two steps: a stochastic importance sampling over state space, and the evaluation of estimators for physical quantities calculated from these samples7. These estimators are constructed on the basis of a variety of physical motivations, for example the availability of an experimental measure such as a specific heat, or the encoding of a theoretical device such as an order parameter1. However, some technologically important states of matter, such as topologically ordered states1,8, cannot be straightforwardly identified with standard estimators9,10.

Machine learning, already explored as a tool in condensed-matter research11,12,13,14,15,16, provides a complementary paradigm to the above approach. The ability of modern machine learning techniques to classify, identify, or interpret massive data sets such as images foreshadows their suitability to provide physicists with similar analyses on the exponentially large data sets embodied in the state space of condensed-matter systems. We first demonstrate this on the prototypical example of the square-lattice ferromagnetic Ising model, $H = -J\sum_{\langle ij\rangle}\sigma_i^z\sigma_j^z$. We set $J = 1$ and the Ising variables $\sigma_i^z = \pm 1$, so that for $N$ lattice sites the state space is of size $2^N$. Standard Monte Carlo techniques provide samples of configurations for any temperature $T$, weighted by the Boltzmann distribution. The existence of a well-understood phase transition at temperature $T_c$ (ref. 17), between a high-temperature paramagnetic phase and a low-temperature ferromagnetic phase, gives us the opportunity to classify the two different types of configurations without the use of Monte Carlo estimators. Instead, we construct a fully connected feed-forward neural network, implemented with TensorFlow4, to perform supervised learning directly on the thermalized and uncorrelated raw configurations sampled by a Monte Carlo simulation. As illustrated in Fig. 1a, the neural network is composed of an input layer with values determined by the spin configurations, a 100-unit hidden layer of sigmoid neurons, and an analogous output layer. When trained on a broad range of data at temperatures above and below $T_c$, the neural network is able to correctly classify data in a test set. Finite-size scaling is capable of systematically narrowing in on the thermodynamic value of $T_c$ in a way analogous to measurements of the magnetization: a data collapse of the output layer (Fig. 1b) leads to an estimate of the critical exponent $\nu \simeq 1.0 \pm 0.2$, while a size scaling of the crossing temperature $T^*/J$ estimates $T_c/J \simeq 2.266 \pm 0.002$ (Fig. 1c). One can understand the training of the network through a simple toy model involving a hidden layer of only three analytically ‘trained’ perceptrons, representing the possible combinations of high- and low-temperature magnetic states exclusively on the basis of their magnetization. Similarly, our 100-unit neural network relies on the magnetization of the configurations in the classification task. Details about the toy model, the 100-unit neural network, as well as a low-dimensional visualization of the training data, which may be used as a preprocessing step to generate the labels if they are not available a priori, are discussed in Supplementary Figs 1, 2, and 4. We note that in a recent development, a closely related neural-network-based approach allows for the determination of critical points using a confusion scheme18, which works even in the absence of labels. Finally, we mention that similar success rates occur if the model is modified to have antiferromagnetic couplings, $H = J\sum_{\langle ij\rangle}\sigma_i^z\sigma_j^z$, illustrating that the neural network is useful not only in identifying a global spin polarization, but also an order parameter with other ordering wavevectors (here $q = (\pi, \pi)$).
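
The data-generation step described above can be illustrated with a minimal sketch. It is not the authors' code: it assumes single-spin-flip Metropolis updates, periodic boundaries, a cold (all-up) start, and labels assigned by comparing $T$ with the exact square-lattice value $T_c/J = 2/\ln(1+\sqrt{2})$. The function names `metropolis_ising` and `labelled_sample` are hypothetical.

```python
import math
import random

# Exact square-lattice critical temperature in units of J (Onsager, ref. 17).
T_C_SQUARE = 2.0 / math.log(1.0 + math.sqrt(2.0))

def metropolis_ising(L, T, sweeps, rng, J=1.0):
    """Return one L x L configuration of +/-1 spins after `sweeps` Metropolis sweeps."""
    spins = [[1] * L for _ in range(L)]  # cold start (an assumption of this sketch)
    for _ in range(sweeps * L * L):
        x, y = rng.randrange(L), rng.randrange(L)
        # Sum of the four nearest neighbours with periodic boundaries.
        nn = (spins[(x + 1) % L][y] + spins[(x - 1) % L][y]
              + spins[x][(y + 1) % L] + spins[x][(y - 1) % L])
        # Energy change of flipping spin (x, y) for H = -J * sum_<ij> s_i s_j.
        dE = 2.0 * J * spins[x][y] * nn
        if dE <= 0 or rng.random() < math.exp(-dE / T):
            spins[x][y] *= -1
    return spins

def labelled_sample(L, T, sweeps, rng):
    """Flatten one configuration and label it 0 (T < Tc) or 1 (T > Tc)."""
    config = metropolis_ising(L, T, sweeps, rng)
    flat = [s for row in config for s in row]
    label = 1 if T > T_C_SQUARE else 0
    return flat, label

rng = random.Random(1234)
cold, cold_label = labelled_sample(8, 1.0, 200, rng)
hot, hot_label = labelled_sample(8, 5.0, 200, rng)
print("low-T  |m| =", abs(sum(cold)) / len(cold), "label", cold_label)
print("high-T |m| =", abs(sum(hot)) / len(hot), "label", hot_label)
```

Each call starts an independent chain, so the returned configurations are uncorrelated with one another; whether they are thermalized depends on the chosen number of sweeps, which would need to be checked for larger lattices and temperatures close to $T_c$.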

Figure 1: a, The output layer averaged over a test set as a function of $T/J$ for the square-lattice ferromagnetic Ising model. The inset in a displays a schematic of the fully connected neural network used in our simulations. b, Plot showing data collapse of the average output layer as a function of $tL^{1/\nu}$, where $t = (T - T_c)/J$ is the reduced temperature. Linear system sizes $L = 10, 20, 30, 40$ and $60$ are represented by crosses, up triangles, circles, diamonds and squares, respectively. c, Plot of the finite-size scaling of the crossing temperature $T^*/J$ (down triangles). d–f, Analogous data to a–c, but for the triangular Ising ferromagnet using the neural network trained for the square-lattice model. The vertical orange lines signal the critical temperatures of the models in the thermodynamic limit, $T_c/J = 2/\ln(1+\sqrt{2})$ for the square lattice17 and $T_c/J = 4/\ln 3$ for the triangular lattice19. The dashed vertical lines represent our estimates of $T_c/J$ from finite-size scaling. The error bars represent one standard deviation statistical uncertainty (see Supplementary Information).

The power of neural networks lies in their ability to generalize to tasks beyond their original design. For example, what if one were presented with a data set of configurations from an Ising Hamiltonian where the lattice structure (and therefore its $T_c$) is not known? We illustrate this scenario by taking our above feed-forward neural network, already trained on configurations for the square-lattice ferromagnetic Ising model, and providing it with a test set produced by Monte Carlo simulations of the triangular-lattice ferromagnetic Ising Hamiltonian. In Fig. 1d–f we present the averaged output layer versus $T$, the corresponding data collapse, and a size scaling of $T^*/J$, allowing us to successfully estimate the critical parameters $T_c/J = 3.65 \pm 0.01$ and $\nu \simeq 1.0 \pm 0.3$, consistent with the exact values19.
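
As a small worked check of the comparison with exact results, the sketch below evaluates the exact critical temperatures, $T_c/J = 2/\ln(1+\sqrt{2})$ for the square lattice (Onsager, ref. 17) and $T_c/J = 4/\ln 3$ for the triangular lattice (ref. 19), and expresses the quoted finite-size-scaling estimates in units of their quoted uncertainties. The helper name `deviation_in_sigma` is hypothetical, and treating the quoted error as a Gaussian standard deviation is an assumption of this example.

```python
import math

# Exact critical temperatures in units of J.
TC_SQUARE_EXACT = 2.0 / math.log(1.0 + math.sqrt(2.0))   # roughly 2.269
TC_TRIANGULAR_EXACT = 4.0 / math.log(3.0)                # roughly 3.641

def deviation_in_sigma(estimate, error, exact):
    """Distance between an estimate and the exact value, in units of the quoted error."""
    return abs(estimate - exact) / error

# Estimates quoted in the text: 2.266 +/- 0.002 (square), 3.65 +/- 0.01 (triangular).
square_dev = deviation_in_sigma(2.266, 0.002, TC_SQUARE_EXACT)
triangular_dev = deviation_in_sigma(3.65, 0.01, TC_TRIANGULAR_EXACT)

print(f"square:     exact Tc/J = {TC_SQUARE_EXACT:.4f}, deviation = {square_dev:.2f} sigma")
print(f"triangular: exact Tc/J = {TC_TRIANGULAR_EXACT:.4f}, deviation = {triangular_dev:.2f} sigma")
```

Under these assumptions the triangular-lattice estimate lies within one quoted standard deviation of the exact value, and the square-lattice estimate within two.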

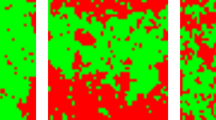

We now turn to the application of such techniques to problems of greater interest in modern condensed matter, such as disordered or topological phases, where no conventional order parameter exists. Coulomb phases, for example, are states of frustrated lattice models where local energetic constraints imposed by the Hamiltonian lead to extensively degenerate classical ground states. We consider a two-dimensional square-ice Hamiltonian given by $H = J\sum_v Q_v^2$, where the charge $Q_v = \sum_{i\in v}\sigma_i^z$ is the sum over the Ising variables located on the lattice bonds incident on vertex $v$, as shown in Fig. 2. In a conventional approach, the ground states and the high-temperature states are distinguished by their spin–spin correlation functions: power-law decay at $T = 0$, and exponential decay at $T = \infty$. Instead we feed raw Monte Carlo configurations to train a neural network (Fig. 1a) to distinguish ground states from high-temperature states (Fig. 2a, b). For a square-ice system with $N = 2 \times 16 \times 16$ spins, we find that a neural network with 100 hidden units successfully distinguishes the states with a 99% accuracy. The network does so solely based on spin configurations, with no information about the underlying lattice, a feat difficult for the human eye, even if supplemented with a layout of the underlying Hamiltonian locality.
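
To make the square-ice constraint concrete, the following sketch evaluates the vertex charges $Q_v$ and the energy $H = J\sum_v Q_v^2$ for spins living on the bonds of an $L \times L$ periodic square lattice. The storage layout is an assumption of this example, not taken from the paper: `h[x][y]` is the spin on the horizontal bond leaving vertex $(x, y)$ and `v[x][y]` the spin on the vertical bond leaving it. The chosen ground state (all horizontal spins up, all vertical spins down) is just one member of the degenerate manifold.

```python
import random

def vertex_charges(h, v):
    """Q_v = sum of the four bond spins incident on each vertex (periodic lattice)."""
    L = len(h)
    return [[h[x][y] + h[(x - 1) % L][y] + v[x][y] + v[x][(y - 1) % L]
             for y in range(L)] for x in range(L)]

def square_ice_energy(h, v, J=1.0):
    """H = J * sum_v Q_v^2; zero exactly when every vertex has Q_v = 0."""
    return J * sum(q * q for row in vertex_charges(h, v) for q in row)

L = 16
# One simple ground state: two up and two down spins at every vertex.
h_ground = [[+1] * L for _ in range(L)]
v_ground = [[-1] * L for _ in range(L)]

# An infinite-temperature state: every bond spin is independently random.
rng = random.Random(0)
h_hot = [[rng.choice((-1, 1)) for _ in range(L)] for _ in range(L)]
v_hot = [[rng.choice((-1, 1)) for _ in range(L)] for _ in range(L)]

n_spins = 2 * L * L
print("number of spins:", n_spins)
print("ground-state energy:", square_ice_energy(h_ground, v_ground))
print("high-temperature energy:", square_ice_energy(h_hot, v_hot))

# A single spin flip on top of the ground state creates two charged vertices.
h_defect = [row[:] for row in h_ground]
h_defect[0][0] *= -1
defect_energy = square_ice_energy(h_defect, v_ground)
```

The neural network in the text receives only the flattened spin values, not the charges; the charges are shown here because they are the local constraint that separates the two classes of configurations.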

Figure 2: a, A high-temperature state. b, A ground state of the square-ice Hamiltonian. c, A ground state configuration of the Ising lattice gauge theory. Dark circles represent spins up, while white circles represent spins down. The vertices and plaquettes defining the models are shown in the insets of b and c. d, Illustration of the convolutional neural network of the Ising gauge theory. The convolutional layer applies 64 filters of size 2 × 2 to the configuration on each sublattice, followed by rectified linear units (ReLU). The outcome is followed by a fully connected layer with 64 units and a softmax output layer. The green line represents the sliding of the maps across the configuration.

We now examine an Ising lattice gauge theory, the prototypical example of a topological phase of matter, without an order parameter at $T = 0$ (refs 8,20). The Hamiltonian is $H = -J\sum_p \prod_{i\in p}\sigma_i^z$, where the Ising spins live on the bonds of a two-dimensional square lattice with plaquettes $p$ (see Fig. 2c). The ground state is again a degenerate manifold8,21 with exponentially decaying spin–spin correlations. As in the square-ice model, we attempt to use the neural network in Fig. 1a to classify the high- and low-temperature states, but find that the training fails to classify the test sets to an accuracy of over 50%, which is equivalent to simply guessing. Instead, we employ a convolutional neural network (CNN)3,22 which readily takes advantage of the two-dimensional structure as well as the translational invariance of the model. We optimize the CNN in Fig. 2d using Monte Carlo configurations from the Ising gauge theory at $T = 0$ and $T = \infty$. The CNN discriminates high-temperature from ground states with an accuracy of 100% in spite of the lack of an order parameter or qualitative differences in the spin–spin correlations. We find that the discriminative power of the CNN relies on the detection of satisfied local energetic constraints of the theory, namely whether $\prod_{i\in p}\sigma_i^z$ is either $+1$ (satisfied) or $-1$ (unsatisfied) on each plaquette of the system (see Supplementary Fig. 5). We construct an analytical model to explicitly exploit the presence of local constraints in the classification task, which discriminates our test sets with an accuracy of 100% (see Supplementary Fig. 6).
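
The local-constraint criterion can be sketched directly, without any neural network. The code below is an illustration under stated assumptions rather than the analytical model of the Supplementary Information: it stores horizontal and vertical bond spins in hypothetical arrays `h` and `v` on a periodic lattice, builds ground states by flipping the four bonds around randomly chosen vertices of the all-up configuration (which leaves every plaquette product unchanged, and reaches only one sector of the degenerate manifold), and labels a configuration as a ground state when every plaquette is satisfied.

```python
import random

def plaquette_products(h, v):
    """Product of the four bond spins around each plaquette (periodic lattice)."""
    L = len(h)
    return [[h[x][y] * h[x][(y + 1) % L] * v[x][y] * v[(x + 1) % L][y]
             for y in range(L)] for x in range(L)]

def flip_star(h, v, x, y):
    """Flip the four bonds touching vertex (x, y); all plaquette products are preserved."""
    L = len(h)
    h[x][y] *= -1
    h[(x - 1) % L][y] *= -1
    v[x][y] *= -1
    v[x][(y - 1) % L] *= -1

def random_ground_state(L, rng):
    """All-up configuration followed by random vertex flips (one sector only)."""
    h = [[1] * L for _ in range(L)]
    v = [[1] * L for _ in range(L)]
    for x in range(L):
        for y in range(L):
            if rng.random() < 0.5:
                flip_star(h, v, x, y)
    return h, v

def gauge_energy(h, v, J=1.0):
    """H = -J * sum over plaquettes of the product of their four spins."""
    return -J * sum(p for row in plaquette_products(h, v) for p in row)

def is_ground_state(h, v):
    """Classify by the local constraint: every plaquette product equals +1."""
    return all(p == 1 for row in plaquette_products(h, v) for p in row)

rng = random.Random(7)
L = 8
h, v = random_ground_state(L, rng)
print("ground state?", is_ground_state(h, v), "energy:", gauge_energy(h, v))

h[0][0] *= -1  # a single bond flip violates the two plaquettes sharing that bond
violated = sum(1 for row in plaquette_products(h, v) for p in row if p == -1)
print("violated plaquettes after one flip:", violated)
```

A 2 × 2 convolutional filter sees a neighbourhood comparable to a plaquette, which is consistent with the statement above that the CNN relies on detecting satisfied local constraints.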

Notice that, because there is no finite-temperature phase transition in the Ising gauge theory, we have restricted our analysis to temperatures $T = 0$ and $T = \infty$ only. However, in finite systems, violations of the local constraints are strongly suppressed, and the system is expected to slowly cross over to the high-temperature phase. The crossover occurs at the temperature $T^*$ at which the number of thermally excited defects, $\sim N\exp(-2J\beta)$, is of the order of one, implying $\beta^* J \sim \ln\sqrt{N}$ (ref. 23). As the presence of local defects is the mechanism through which the CNN decides whether a system is in its ground state or not, we expect that it will be able to detect the crossover temperature in a test set at small but finite temperatures. In Fig. 3 we present the results of the output neurons of our analytical model for different system sizes averaged over test sets at different temperatures. We estimate the inverse crossover temperature $\beta^* J$ based on the crossing point of the low- and high-temperature output neurons. As expected theoretically, this depends on the system size, and as shown in the inset in Fig. 3, a clear logarithmic crossover is apparent. This result showcases the ability of the CNN to detect not only phase transitions, but also non-trivial crossovers between topological phases and their high-temperature counterparts.
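
The size dependence of the crossover follows from the defect-counting argument: setting $N\exp(-2J\beta^*) \approx 1$ gives $\beta^* J \approx \tfrac{1}{2}\ln N$. The sketch below evaluates this estimate for the linear sizes shown in Fig. 3. It assumes $N = 2L^2$ bond spins and ignores order-one prefactors, so only the logarithmic trend, not the absolute values, should be compared with the measured crossing points. The function names are hypothetical.

```python
import math

def expected_defects(n_spins, beta, J=1.0):
    """Estimated number of thermally excited defects, N * exp(-2 J beta)."""
    return n_spins * math.exp(-2.0 * J * beta)

def crossover_beta(n_spins, J=1.0):
    """Inverse temperature at which the expected number of defects equals one."""
    return math.log(n_spins) / (2.0 * J)

for L in (4, 8, 12, 16, 20, 24, 28):
    n_spins = 2 * L * L  # assumption: one spin per bond of an L x L periodic lattice
    beta_star = crossover_beta(n_spins)
    print(f"L = {L:2d}  N = {n_spins:4d}  beta* J ~ {beta_star:.3f}")
```

With $N = 2L^2$ the estimate reads $\beta^* J \approx \ln L + \tfrac{1}{2}\ln 2$, a straight line when plotted against $L$ on a semilog scale.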

Figure 3: Output neurons for different system sizes averaged over test sets versus $\beta J$. Linear system sizes $L = 4, 8, 12, 16, 20, 24$ and $28$ are represented by crosses, up triangles, circles, diamonds, squares, stars and hexagons, respectively. The inset displays $\beta^* J$ (octagons) versus $L$ in a semilog scale. The error bars represent one standard deviation statistical uncertainty.

A final implementation of our approach on a system of noninteracting spinless fermions subject to a quasi-periodic potential24 demonstrates that neural networks can distinguish metallic from Anderson localized phases, and can be used to study the localization transition between them (see the Supplementary Figs 3 and 4).

We have shown that neural network technology, developed for applications such as computer vision and natural language processing, can be used to encode phases of matter and discriminate phase transitions in correlated many-body systems. In particular, we have argued that neural networks encode information about conventional ordered phases by learning the order parameter of the phase, without knowledge of the energy or locality conditions of the Hamiltonian. Furthermore, we have shown that neural networks can encode basic information about unconventional phases such as the ones present in the square-ice model and the Ising lattice gauge theory, as well as Anderson localized phases. These results indicate that neural networks have the potential to represent ground state wavefunctions. For instance, ground states of the toric code1,8 can be represented by convolutional neural networks akin to the one in Fig. 2d (see Supplementary Fig. 6 and Supplementary Table 1). We thus anticipate their use in the field of quantum technology25, such as quantum error correction protocols26, and quantum state tomography27. As in all other areas of ‘big data’, we are already witnessing the rapid adoption of machine learning techniques as a basic research tool in condensed matter and statistical physics.

Data availability.

The data that support the plots within this paper and other findings of this study are available from the corresponding author upon request.

References

1. Wen, X. Quantum Field Theory of Many-Body Systems: From the Origin of Sound to an Origin of Light and Electrons (Oxford Graduate Texts, OUP Oxford, 2004).

2. Bellman, R. Dynamic Programming 1st edn (Princeton Univ. Press, 1957).

3. Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016); http://www.deeplearningbook.org

4. Abadi, M. et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems (2015); http://tensorflow.org

5. Bergstra, J. et al. Theano: a CPU and GPU math expression compiler. Proc. Python Sci. Comput. Conf. (SciPy) (2010).

6. Avella, A. & Mancini, F. Strongly Correlated Systems: Numerical Methods (Springer Series in Solid-State Sciences, 2013).

7. Sandvik, A. W. Computational studies of quantum spin systems. AIP Conf. Proc. 1297, 135–338 (2010).

8. Kitaev, A. Fault-tolerant quantum computation by anyons. Ann. Phys. 303, 2–30 (2003).

9. Levin, M. & Wen, X.-G. Detecting topological order in a ground state wave function. Phys. Rev. Lett. 96, 110405 (2006).

10. Kitaev, A. & Preskill, J. Topological entanglement entropy. Phys. Rev. Lett. 96, 110404 (2006).

11. Arsenault, L.-F., Lopez-Bezanilla, A., von Lilienfeld, O. A. & Millis, A. J. Machine learning for many-body physics: the case of the Anderson impurity model. Phys. Rev. B 90, 155136 (2014).

12. Kusne, A. G. et al. On-the-fly machine-learning for high-throughput experiments: search for rare-earth-free permanent magnets. Sci. Rep. 4, 6367 (2014).

13. Kalinin, S. V., Sumpter, B. G. & Archibald, R. K. Big-deep-smart data in imaging for guiding materials design. Nat. Mater. 14, 973–980 (2015).

14. Ghiringhelli, L. M., Vybiral, J., Levchenko, S. V., Draxl, C. & Scheffler, M. Big data of materials science: critical role of the descriptor. Phys. Rev. Lett. 114, 105503 (2015).

15. Schoenholz, S. S., Cubuk, E. D., Sussman, D. M., Kaxiras, E. & Liu, A. J. A structural approach to relaxation in glassy liquids. Nat. Phys. 12, 469–471 (2016).

16. Mehta, P. & Schwab, D. J. An exact mapping between the variational renormalization group and deep learning. Preprint at http://arXiv.org/abs/1410.3831 (2014).

17. Onsager, L. Crystal statistics. I. A two-dimensional model with an order-disorder transition. Phys. Rev. 65, 117–149 (1944).

18. van Nieuwenburg, E. P. L., Liu, Y.-H. & Huber, S. Learning phase transitions by confusion. Nat. Phys. http://dx.doi.org/10.1038/nphys4037 (2017).

19. Newell, G. F. Crystal statistics of a two-dimensional triangular Ising lattice. Phys. Rev. 79, 876–882 (1950).

20. Kogut, J. B. An introduction to lattice gauge theory and spin systems. Rev. Mod. Phys. 51, 659–713 (1979).

21. Castelnovo, C. & Chamon, C. Topological order and topological entropy in classical systems. Phys. Rev. B 76, 174416 (2007).

22. LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

23. Castelnovo, C. & Chamon, C. Entanglement and topological entropy of the toric code at finite temperature. Phys. Rev. B 76, 184442 (2007).

24. Aubry, S. & André, G. Analyticity breaking and Anderson localization in incommensurate lattices. Ann. Isr. Phys. Soc. 3, 133 (1980).

25. Amin, M. H., Andriyash, E., Rolfe, J., Kulchytskyy, B. & Melko, R. Quantum Boltzmann machine. Preprint at http://arXiv.org/abs/1601.02036 (2016).

26. Torlai, G. & Melko, R. G. A neural decoder for topological codes. Preprint at http://arXiv.org/abs/1610.04238 (2016).

27. Landon-Cardinal, O. & Poulin, D. Practical learning method for multi-scale entangled states. New J. Phys. 14, 085004 (2012).

Acknowledgements

We would like to thank G. Baskaran, C. Castelnovo, A. Chandran, L. E. Hayward Sierens, B. Kulchytskyy, D. Schwab, M. Stoudenmire, G. Torlai, G. Vidal and Y. Wan for discussions and encouragement. We thank A. Del Maestro for a careful reading of the manuscript. This research was supported by NSERC of Canada, the Perimeter Institute for Theoretical Physics, the John Templeton Foundation, and the Shared Hierarchical Academic Research Computing Network (SHARCNET). R.G.M. acknowledges support from a Canada Research Chair. Research at Perimeter Institute is supported through Industry Canada and by the Province of Ontario through the Ministry of Research & Innovation.

Author information

Contributions

All authors contributed significantly to this work.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Supplementary information

Supplementary information (PDF 5391 kb)

About this article

Cite this article

Carrasquilla, J., Melko, R. Machine learning phases of matter. Nature Phys 13, 431–434 (2017). https://doi.org/10.1038/nphys4035

DOI: https://doi.org/10.1038/nphys4035
